{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Semantic Segmentation Input"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Install Dependencies"

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {},

"outputs": [],

"source": [

"!pip install panoptica auxiliary rich numpy > /dev/null"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"If you installed the packages and requirements on your own machine, you can skip this section and start from the import section.\n",

"\n",

"### Setup Colab environment (optional) \n",

"Otherwise you can follow and execute the tutorial on your browser.\n",

"In order to start working on the notebook, click on the following button, this will open this page in the Colab environment and you will be able to execute the code on your own (*Google account required*).\n",

"\n",

"\n",

"  \n",

"\n",

"\n",

"Now that you are visualizing the notebook in Colab, run the next cell to install the packages we will use. There are few things you should follow in order to properly set the notebook up:\n",

"1. Warning: This notebook was not authored by Google. Click on 'Run anyway'.\n",

"1. When the installation commands are done, there might be \"Restart runtime\" button at the end of the output. Please, click it.\n",

"If you run the next cell in a Google Colab environment, it will **clone the 'tutorials' repository** in your google drive. This will create a **new folder** called \"tutorials\" in **your Google Drive**.\n",

"All generated file will be created/uploaded to your Google Drive respectively.\n",

"\n",

"After the first execution of the next cell, you might receive some warnings and notifications, please follow these instructions:\n",

" - 'Permit this notebook to access your Google Drive files?' Click on 'Yes', and select your account.\n",

" - Google Drive for desktop wants to access your Google Account. Click on 'Allow'.\n",

"\n",

"Afterwards the \"tutorials\" folder has been created. You can navigate it through the lefthand panel in Colab. You might also have received an email that informs you about the access on your Google Drive."

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {},

"outputs": [],

"source": [

"import sys\n",

"\n",

"# Check if we are in google colab currently\n",

"try:\n",

" import google.colab\n",

"\n",

" colabFlag = True\n",

"except ImportError as r:\n",

" colabFlag = False\n",

"\n",

"# Execute certain steps only if we are in a colab environment\n",

"if colabFlag:\n",

" # Create a folder in your Google Drive\n",

" from google.colab import drive\n",

"\n",

" drive.mount(\"/content/drive\")\n",

" # clone repository and set path\n",

" !git clone https://github.com/BrainLesion/tutorials.git /content/drive/MyDrive/tutorials\n",

" BASE_PATH = \"/content/drive/MyDrive/tutorials/panoptica\"\n",

" sys.path.insert(0, BASE_PATH)\n",

"\n",

"else: # normal jupyter notebook environment\n",

" BASE_PATH = \".\" # current working directory would be BraTs-Toolkit anyways if you are not in colab"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Setup Imports"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"No module named 'pandas'\n",

"OPTIONAL PACKAGE MISSING\n"

]

}

],

"source": [

"from auxiliary.nifti.io import read_nifti\n",

"from rich import print as pprint\n",

"from panoptica import (\n",

" InputType,\n",

" Panoptica_Evaluator,\n",

" ConnectedComponentsInstanceApproximator,\n",

" NaiveThresholdMatching,\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Load Data"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

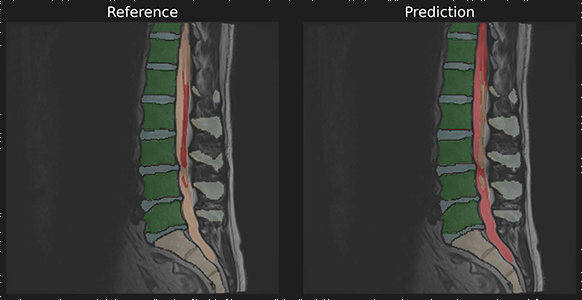

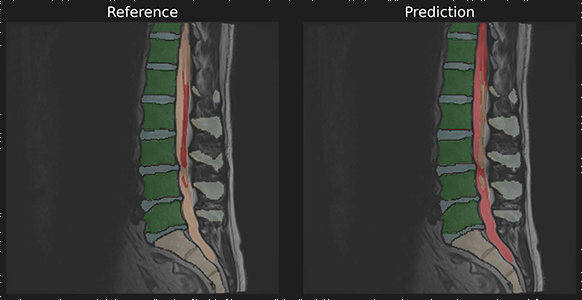

"To demonstrate we use a reference and predicition of spine a segmentation without instances.\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {},

"outputs": [],

"source": [

"ref_masks = read_nifti(f\"{BASE_PATH}/spine_seg/semantic/ref.nii.gz\")\n",

"pred_masks = read_nifti(f\"{BASE_PATH}/spine_seg/semantic/pred.nii.gz\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"To use your own data please replace the example data with your own data.\n",

"\n",

"In ordner to successfully load your data please use NIFTI files and the following file designation within the \"semantic\" folder: \n",

"\n",

"```panoptica/spine_seg/semantic/```\n",

"\n",

"- Reference data (\"ref.nii.gz\")\n",

"- Prediction data (\"pred.nii.gz\")\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Run Evaluation"

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {},

"outputs": [],

"source": [

"evaluator = Panoptica_Evaluator(\n",

" expected_input=InputType.SEMANTIC,\n",

" instance_approximator=ConnectedComponentsInstanceApproximator(),\n",

" instance_matcher=NaiveThresholdMatching(),\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Inspect Results\n",

"The results object allows access to individual metrics and provides helper methods for further processing\n"

]

},


{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"────────────────────────────────────────── Thank you for using panoptica ──────────────────────────────────────────\n",

"\n"

],

"text/plain": [

"\u001b[92m────────────────────────────────────────── \u001b[0mThank you for using \u001b[1mpanoptica\u001b[0m\u001b[92m ──────────────────────────────────────────\u001b[0m\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

" Please support our development by citing \n",

"\n"

],

"text/plain": [

" Please support our development by citing \n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

" https://github.com/BrainLesion/panoptica#citation -- Thank you! \n",

"\n"

],

"text/plain": [

" \u001b[4;94mhttps://github.com/BrainLesion/panoptica#citation\u001b[0m -- Thank you! \n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"───────────────────────────────────────────────────────────────────────────────────────────────────────────────────\n",

"\n"

],

"text/plain": [

"\u001b[92m───────────────────────────────────────────────────────────────────────────────────────────────────────────────────\u001b[0m\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/html": [

"\n",

"\n"

],

"text/plain": [

"\n"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"+++ MATCHING +++\n",

"Number of instances in reference (num_ref_instances): 87\n",

"Number of instances in prediction (num_pred_instances): 89\n",

"True Positives (tp): 73\n",

"False Positives (fp): 16\n",

"False Negatives (fn): 14\n",

"Recognition Quality / F1-Score (rq): 0.8295454545454546\n",

"\n",

"+++ GLOBAL +++\n",

"Global Binary Dice (global_bin_dsc): 0.9731641527805414\n",

"\n",

"+++ INSTANCE +++\n",

"Segmentation Quality IoU (sq): 0.7940127477906024 +- 0.11547745015679488\n",

"Panoptic Quality IoU (pq): 0.6586696657808406\n",

"Segmentation Quality Dsc (sq_dsc): 0.8802182546605446 +- 0.07728416427007166\n",

"Panoptic Quality Dsc (pq_dsc): 0.7301810521615881\n",

"Segmentation Quality ASSD (sq_assd): 0.20573710924944655 +- 0.13983482367660682\n",

"Segmentation Quality Relative Volume Difference (sq_rvd): 0.01134021986061723 +- 0.1217805112447998\n",

"\n"

]

}

],

"source": [

"# print all results\n",

"result = evaluator.evaluate(pred_masks, ref_masks, verbose=False)[\"ungrouped\"]\n",

"print(result)"

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"result.pq=0.6586696657808406\n",

"\n"

],

"text/plain": [

"result.\u001b[33mpq\u001b[0m=\u001b[1;36m0\u001b[0m\u001b[1;36m.6586696657808406\u001b[0m\n"

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"# get specific metric, e.g. pq\n",

"pprint(f\"{result.pq=}\")"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"results dict: \n",

"{\n",

" 'num_ref_instances': 87,\n",

" 'num_pred_instances': 89,\n",

" 'tp': 73,\n",

" 'fp': 16,\n",

" 'fn': 14,\n",

" 'prec': 0.8202247191011236,\n",

" 'rec': 0.8390804597701149,\n",

" 'rq': 0.8295454545454546,\n",

" 'sq': 0.7940127477906024,\n",

" 'sq_std': 0.11547745015679488,\n",

" 'pq': 0.6586696657808406,\n",

" 'sq_dsc': 0.8802182546605446,\n",

" 'sq_dsc_std': 0.07728416427007166,\n",

" 'pq_dsc': 0.7301810521615881,\n",

" 'sq_assd': 0.20573710924944655,\n",

" 'sq_assd_std': 0.13983482367660682,\n",

" 'sq_rvd': 0.01134021986061723,\n",

" 'sq_rvd_std': 0.1217805112447998,\n",

" 'global_bin_dsc': 0.9731641527805414\n",

"}\n",

"\n"

],

"text/plain": [

"results dict: \n",

"\u001b[1m{\u001b[0m\n",

" \u001b[32m'num_ref_instances'\u001b[0m: \u001b[1;36m87\u001b[0m,\n",

" \u001b[32m'num_pred_instances'\u001b[0m: \u001b[1;36m89\u001b[0m,\n",

" \u001b[32m'tp'\u001b[0m: \u001b[1;36m73\u001b[0m,\n",

" \u001b[32m'fp'\u001b[0m: \u001b[1;36m16\u001b[0m,\n",

" \u001b[32m'fn'\u001b[0m: \u001b[1;36m14\u001b[0m,\n",

" \u001b[32m'prec'\u001b[0m: \u001b[1;36m0.8202247191011236\u001b[0m,\n",

" \u001b[32m'rec'\u001b[0m: \u001b[1;36m0.8390804597701149\u001b[0m,\n",

" \u001b[32m'rq'\u001b[0m: \u001b[1;36m0.8295454545454546\u001b[0m,\n",

" \u001b[32m'sq'\u001b[0m: \u001b[1;36m0.7940127477906024\u001b[0m,\n",

" \u001b[32m'sq_std'\u001b[0m: \u001b[1;36m0.11547745015679488\u001b[0m,\n",

" \u001b[32m'pq'\u001b[0m: \u001b[1;36m0.6586696657808406\u001b[0m,\n",

" \u001b[32m'sq_dsc'\u001b[0m: \u001b[1;36m0.8802182546605446\u001b[0m,\n",

" \u001b[32m'sq_dsc_std'\u001b[0m: \u001b[1;36m0.07728416427007166\u001b[0m,\n",

" \u001b[32m'pq_dsc'\u001b[0m: \u001b[1;36m0.7301810521615881\u001b[0m,\n",

" \u001b[32m'sq_assd'\u001b[0m: \u001b[1;36m0.20573710924944655\u001b[0m,\n",

" \u001b[32m'sq_assd_std'\u001b[0m: \u001b[1;36m0.13983482367660682\u001b[0m,\n",

" \u001b[32m'sq_rvd'\u001b[0m: \u001b[1;36m0.01134021986061723\u001b[0m,\n",

" \u001b[32m'sq_rvd_std'\u001b[0m: \u001b[1;36m0.1217805112447998\u001b[0m,\n",

" \u001b[32m'global_bin_dsc'\u001b[0m: \u001b[1;36m0.9731641527805414\u001b[0m\n",

"\u001b[1m}\u001b[0m\n"

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"# get dict for further processing, e.g. for pandas\n",

"pprint(\"results dict: \", result.to_dict())"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"InputType.SEMANTIC\n",

"Prediction array shape = (170, 512, 17) unique_values= [ 0 26 41 42 43 44 45 46 47 48 49 60 61 62 100]\n",

"Reference array shape = (170, 512, 17) unique_values= [ 0 26 41 42 43 44 45 46 47 48 49 60 61 62 100]\n",

"\n",

"InputType.UNMATCHED_INSTANCE\n",

"Prediction array shape = (170, 512, 17) unique_values= [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23\n",

" 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47\n",

" 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71\n",

" 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89]\n",

"Reference array shape = (170, 512, 17) unique_values= [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23\n",

" 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47\n",

" 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71\n",

" 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87]\n",

"\n",

"InputType.MATCHED_INSTANCE\n",

"Prediction array shape = (170, 512, 17) unique_values= [ 0 1 2 3 4 6 7 8 9 10 12 13 14 15 16 17 18 19\n",

" 20 22 23 25 26 31 33 34 35 38 40 41 42 44 45 46 47 48\n",

" 49 50 51 52 53 54 55 56 57 58 59 60 61 63 64 65 66 67\n",

" 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85\n",

" 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103]\n",

"Reference array shape = (170, 512, 17) unique_values= [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23\n",

" 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47\n",

" 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71\n",

" 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87]\n",

"\n"

]

}

],

"source": [

"# To inspect different phases, just use the returned intermediate_steps_data object\n",

"\n",

"import numpy as np\n",

"\n",

"intermediate_steps_data = result.intermediate_steps_data\n",

"intermediate_steps_data.original_prediction_arr # yields input prediction array\n",

"intermediate_steps_data.original_reference_arr # yields input reference array\n",

"\n",

"intermediate_steps_data.prediction_arr(\n",

" InputType.MATCHED_INSTANCE\n",

") # yields prediction array after instances have been matched\n",

"intermediate_steps_data.reference_arr(\n",

" InputType.MATCHED_INSTANCE\n",

") # yields reference array after instances have been matched\n",

"\n",

"# This works with all InputType\n",

"for i in InputType:\n",

" print(i)\n",

" pred = intermediate_steps_data.prediction_arr(i)\n",

" ref = intermediate_steps_data.reference_arr(i)\n",

" print(\"Prediction array shape =\", pred.shape, \"unique_values=\", np.unique(pred))\n",

" print(\"Reference array shape =\", ref.shape, \"unique_values=\", np.unique(ref))\n",

" print()"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.10.14"

}

},

"nbformat": 4,

"nbformat_minor": 2

}