This document outlines resources for a course for those wanting to learn to use Python and DeepLabCut (while responsibly isolating due to COVID-19!). We expect it to take *roughly* 1-2 weeks to work through rigorously. Getting the basics should take 1-2 days.

[CLICK HERE to launch the interactive graphic to get started!](https://view.genial.ly/5fb40a49f8a0ef13943d4e5e/horizontal-infographic-review-learning-to-use-deeplabcut) (mini preview below) Or, jump in below!

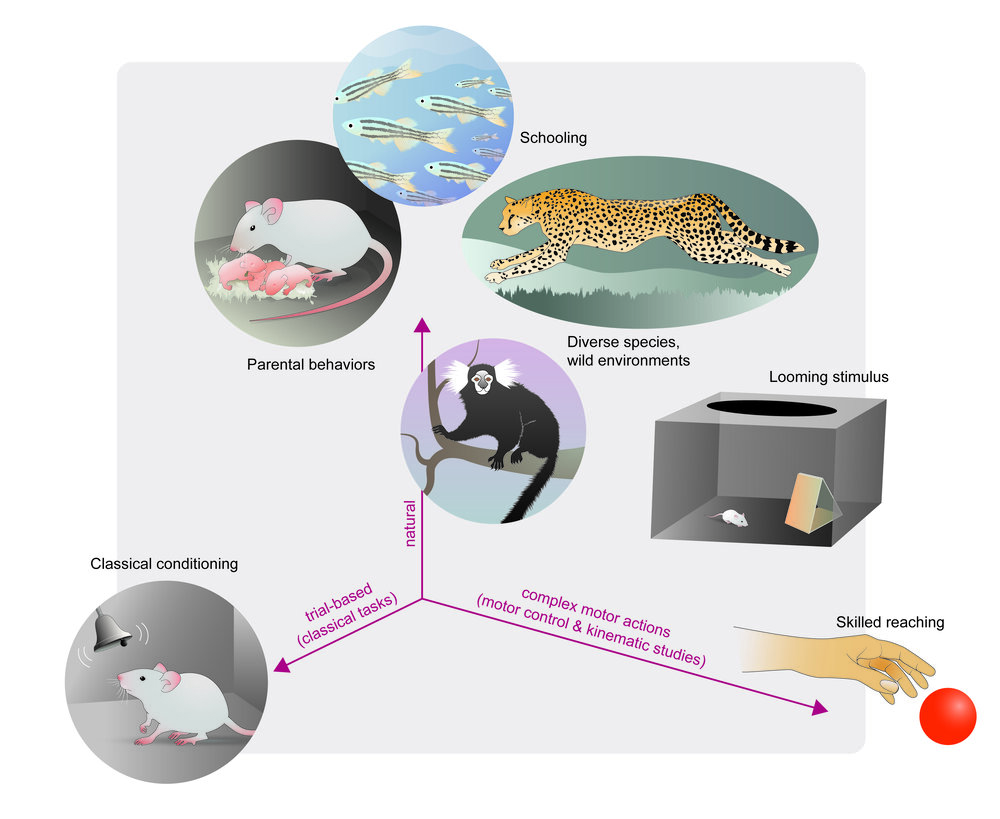

- **REVIEW PAPER:** The state of animal pose estimation w/ deep learning i.e. "Deep learning tools for the measurement of animal behavior in neuroscience" [arXiv](https://arxiv.org/abs/1909.13868) & [published version](https://www.sciencedirect.com/science/article/pii/S0959438819301151)

- **NEW! REVIEW PAPER:** [A Primer on Motion Capture with Deep Learning: Principles, Pitfalls and Perspectives](https://arxiv.org/abs/2009.00564)

- **WATCH:** There are a lot of docs... where to begin: [Video Tutorial!](https://www.youtube.com/watch?v=A9qZidI7tL8)

### **Module 1: getting started on data**

**What you need:** any videos where you can see the animals/objects, etc.

You can use our demo videos, grab some from the internet, or use whatever older data you have. Any camera, color/monochrome, etc will work. Find diverse videos, and label what you want to track well :)

- IF YOU ARE PART OF THE COURSE: you will be contributing to the DLC Model Zoo :smile:

:purple_heart: **NOTE:** if you want to contribute back to community science, please get in touch with us: we have a LOT of data we want to label so we can share it back with everyone. If you want to help, sign up here (labeling can be on data we provide or possibly yours): https://forms.gle/KRtdKKYB57ZkaBwH7 :purple_heart:

- **Slides:** [Overview of starting new projects](https://github.com/DeepLabCut/DeepLabCut-Workshop-Materials/blob/master/part1-labeling.pdf)

- **READ ME PLEASE:** [DeepLabCut, the science](https://rdcu.be/4Rep)

- **READ ME PLEASE:** [DeepLabCut, the user guide](https://rdcu.be/bHpHN)

- **WATCH:** Video tutorial 1: [using the Project Manager GUI](https://www.youtube.com/watch?v=KcXogR-p5Ak)

- Please go from project creation (use >1 video!) to labeling your data, and then check the labels!

- **WATCH:** Video tutorial 2: [using the Project Manager GUI for multi-animal pose estimation](https://www.youtube.com/watch?v=Kp-stcTm77g)

- Please go from project creation (use >1 video!) to labeling your data, and then check the labels!

- **WATCH:** Video tutorial 3: [using ipython/pythonw (more functions!)](https://www.youtube.com/watch?v=7xwOhUcIGio)

- multi-animal DLC: [labeling](https://www.youtube.com/watch?v=Kp-stcTm77g)

- Please go from project creation (use >1 video!) to labeling your data, and then check the labels!

- **June 5th RECAP: AFTER LABELING (ACTION/WATCH):**

- IF YOU LABELED FOR THE MODEL ZOO, [please upload your labeled data here!!](https://docs.google.com/forms/d/e/1FAIpQLSf0z6CWihGOxBUiALpN-ms4hr42xHNPAbvfeI3WxZRbEk9Reg/viewform)

- Once you have labeled on your laptop and want to train in the cloud, upload your project folder to Google Drive. Then, to create a training set, train, and start evaluating, use this [COLAB NOTEBOOK](https://github.com/DeepLabCut/DeepLabCut/blob/master/examples/COLAB/COLAB_YOURDATA_TrainNetwork_VideoAnalysis.ipynb) for single-animal projects, the model zoo, etc., or this [COLAB NOTEBOOK](https://github.com/DeepLabCut/DeepLabCut/blob/master/examples/COLAB/COLAB_maDLC_TrainNetwork_VideoAnalysis.ipynb) if you have a multi-animal project.

- [VIDEO on using COLAB with your data](https://www.youtube.com/watch?v=qJGs8nxx80A)
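
If you prefer ipython to the Project Manager GUI (Video tutorial 3), the "project creation to labeling to checking labels" loop asked for above can be sketched roughly as follows. This is a minimal sketch, assuming a DeepLabCut 2.x-style Python API; `start_project` is a hypothetical helper written for this outline (not part of DeepLabCut), and the project name, experimenter name, and video paths are placeholders you would replace.

```python
from typing import List


def start_project(name: str, experimenter: str, videos: List[str],
                  multianimal: bool = False) -> str:
    """Create a project, extract frames, open the labeling GUI, then check labels.

    Hypothetical helper; returns the path to the project's config.yaml.
    """
    if len(videos) < 2:
        # The course asks for more than one video so the labeled frames are diverse.
        raise ValueError("please provide more than one video")

    # Imported lazily so this file can be imported without DeepLabCut installed.
    import deeplabcut

    config_path = deeplabcut.create_new_project(
        name, experimenter, videos, copy_videos=True, multianimal=multianimal
    )
    # Pick a diverse subset of frames to label (k-means clustering of frames).
    deeplabcut.extract_frames(config_path, mode="automatic", algo="kmeans",
                              userfeedback=False)
    # Opens the labeling GUI for the extracted frames.
    deeplabcut.label_frames(config_path)
    # Writes copies of the frames with your labels overlaid so you can verify them.
    deeplabcut.check_labels(config_path)
    return config_path
```

Usage would look like `start_project("reaching", "yourname", ["/path/to/video1.avi", "/path/to/video2.avi"])`; pass `multianimal=True` for a multi-animal project. Keep the returned config path, since later steps take it as their first argument.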

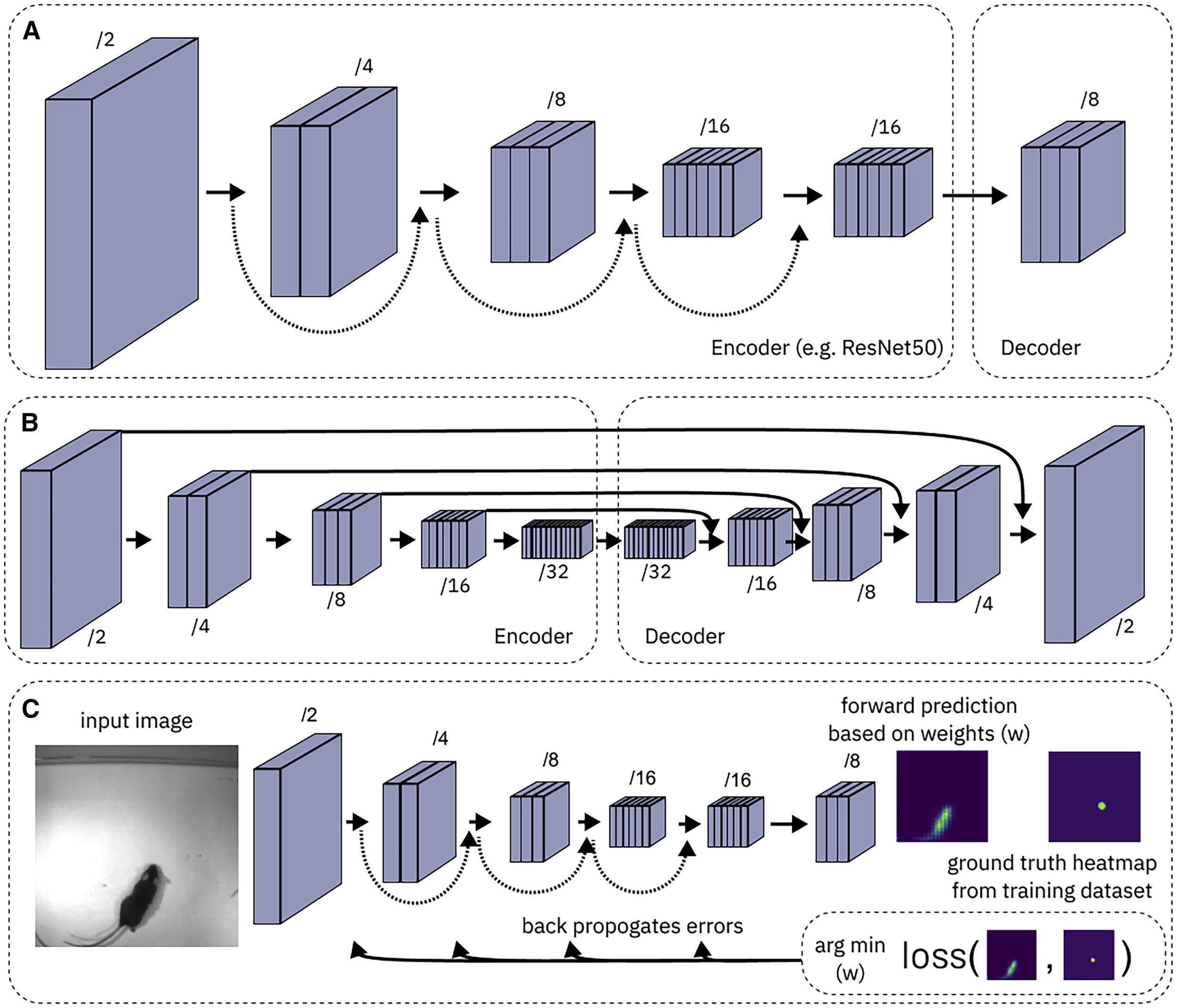

### **Module 2: Neural Networks**

- **Slides:** [Overview of creating training and test data, and training networks](https://github.com/DeepLabCut/DeepLabCut-Workshop-Materials/blob/master/part2-network.pdf)

- **READ ME PLEASE:** [What are convolutional neural networks?](https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53)

- **READ ME PLEASE:** Here is a new paper from us describing challenges in robust pose estimation, why PRE-TRAINING really matters - which was our major scientific contribution to low-data input pose-estimation - and it describes new networks that are available to you. [Pretraining boosts out-of-domain robustness for pose estimation](https://paperswithcode.com/paper/pretraining-boosts-out-of-domain-robustness)

- **MORE DETAILS:** ImageNet: check out the original paper and dataset: http://www.image-net.org/ (link to [ppt from Dr. Fei-Fei Li](http://www.image-net.org/papers/ImageNet_2010.ppt))

- **NEW! REVIEW PAPER:** [A Primer on Motion Capture with Deep Learning: Principles, Pitfalls and Perspectives](https://arxiv.org/abs/2009.00564)

Before you create a training/test set, please read/watch:

- **More information:** [Which types of neural networks are available, and what should I use?](https://github.com/AlexEMG/DeepLabCut/wiki/What-neural-network-should-I-use%3F)

- **WATCH:** Video tutorial 1: [How to test different networks in a controlled way](https://www.youtube.com/watch?v=WXCVr6xAcCA)

- Now, decide what model(s) you want to test.

- IF you want to train on your CPU, run the `create_training_dataset` step (in the GUI, etc.) on your own computer.

- IF you want to use GPUs on Google Colab, [**(1)** watch this FIRST/follow along here!](https://www.youtube.com/watch?v=qJGs8nxx80A) **(2)** move your whole project folder to Google Drive, and then [**use this notebook**](https://github.com/DeepLabCut/DeepLabCut/blob/master/examples/COLAB/COLAB_YOURDATA_TrainNetwork_VideoAnalysis.ipynb)
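
For the local (CPU) route, the `create_training_dataset` step and the training that follows can also be run from ipython. Below is a minimal sketch, assuming the TensorFlow-based DeepLabCut 2.x API; `create_and_train` is a hypothetical helper, and the default `maxiters` is only a placeholder to keep a first test run short. Network names such as `resnet_50` or `mobilenet_v2_1.0` depend on your installed version. If you want to compare several networks on the same split, DeepLabCut 2.x also provides `create_training_model_comparison`; check the docs for your version before relying on it.

```python
def create_and_train(config_path: str, net_type: str = "resnet_50",
                     shuffle: int = 1, maxiters: int = 50000) -> None:
    """Create one training/test split for `net_type`, then train on it.

    Hypothetical helper; `maxiters` here is a placeholder, not a recommendation.
    """
    # Imported lazily so this file can be imported without DeepLabCut installed.
    import deeplabcut

    # Build the training/test split and write the network configuration files.
    deeplabcut.create_training_dataset(
        config_path, Shuffles=[shuffle], net_type=net_type, augmenter_type="imgaug"
    )
    # Train the network that belongs to this shuffle.
    deeplabcut.train_network(
        config_path, shuffle=shuffle, maxiters=maxiters,
        displayiters=100, saveiters=5000,
    )
```

Using a different `shuffle` index per network keeps the resulting models side by side in the same project, so they can be evaluated and compared later.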

**MODULE 2 webinar**: https://youtu.be/ILsuC4icBU0

### **Module 3: Evaluation of network performance**

- **Slides** [Evaluate your network](https://github.com/DeepLabCut/DeepLabCut-Workshop-Materials/blob/master/part3-analysis.pdf)

- **WATCH:** [Evaluate the network in ipython](https://www.youtube.com/watch?v=bgfnz1wtlpo)

- why evaluation matters; how to benchmark; analyzing a video and using scoremaps, confidence readouts, etc.
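
To make the benchmark concrete: evaluation compares the network's predictions with your human labels and reports the average pixel distance, optionally ignoring predictions whose confidence (likelihood) falls below a cutoff. The sketch below illustrates that idea in plain Python; `mean_pixel_error` is a hypothetical helper and the numbers are made up for illustration. In DeepLabCut itself the evaluation step is run with `deeplabcut.evaluate_network(config_path, Shuffles=[1], plotting=True)`.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]               # human label: (x, y)
Prediction = Tuple[float, float, float]   # network output: (x, y, likelihood)


def mean_pixel_error(labels: List[Point], predictions: List[Prediction],
                     pcutoff: Optional[float] = None) -> float:
    """Mean Euclidean distance in pixels between labels and predictions.

    If `pcutoff` is given, predictions with likelihood below it are ignored.
    """
    errors = [
        math.hypot(px - lx, py - ly)
        for (lx, ly), (px, py, p) in zip(labels, predictions)
        if pcutoff is None or p >= pcutoff
    ]
    if not errors:
        return float("nan")  # nothing passed the cutoff
    return sum(errors) / len(errors)


# Made-up example: the third prediction is far off, but the network "knows" it
# (likelihood 0.10), so a cutoff removes it from the error.
labels = [(10.0, 10.0), (20.0, 20.0), (30.0, 30.0)]
predictions = [(13.0, 14.0, 0.99), (20.0, 20.0, 0.95), (90.0, 110.0, 0.10)]
print(mean_pixel_error(labels, predictions))               # 35.0
print(mean_pixel_error(labels, predictions, pcutoff=0.6))  # 2.5
```

Comparing the error with and without the cutoff, on both training and test frames, is a quick way to see whether the network generalizes and whether its confidence is informative.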

### **Module 4: Scaling your analysis to many new videos**

Once you have good networks, you can deploy them. You can create "cron jobs" to run a timed analysis script, for example. We run this daily on new videos collected in the lab. Check out a simple script to get started, and read more below:

- [Analyzing videos in batches, over many folders, setting up automated data processing](https://github.com/DeepLabCut/DLCutils/tree/master/SCALE_YOUR_ANALYSIS)

- How to automate your analysis in the lab: [datajoint.io](https://datajoint.io), Cron Jobs: [schedule your code runs](https://www.ostechnix.com/a-beginners-guide-to-cron-jobs/)
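
A timed analysis script of the kind described above could look roughly like this. It is a minimal sketch, assuming a DeepLabCut 2.x-style `analyze_videos` call; `find_videos` and `analyze_all` are hypothetical helpers, and the list of video suffixes is an assumption to adjust to your setup. It is not the script from the DLCutils repository linked above.

```python
from pathlib import Path
from typing import Iterable, List

VIDEO_SUFFIXES = (".avi", ".mp4", ".mov")  # assumption: adjust to your cameras


def find_videos(root: str, suffixes: Iterable[str] = VIDEO_SUFFIXES) -> List[str]:
    """Recursively collect video files under `root`, sorted for stable runs."""
    wanted = {s.lower() for s in suffixes}
    return sorted(
        str(p) for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in wanted
    )


def analyze_all(config_path: str, root: str) -> List[str]:
    """Run the trained network on every video found under `root`."""
    videos = find_videos(root)
    if videos:
        # Imported lazily so the file search can be tested without DeepLabCut.
        import deeplabcut

        # Analyze one file type at a time so `videotype` always matches the files.
        for suffix in sorted({Path(v).suffix for v in videos}):
            batch = [v for v in videos if Path(v).suffix == suffix]
            deeplabcut.analyze_videos(config_path, batch, videotype=suffix,
                                      save_as_csv=True)
    return videos
```

Saved as a script that calls `analyze_all(...)` with your own paths, this could then be scheduled with a crontab entry such as `0 2 * * * python /path/to/analyze_new_videos.py` (a placeholder path), which would run it every night at 02:00.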

### **Module 5: Got Poses? Now what ...**

Pose estimation took away the painful part of digitizing your data, but now what? There is a rich set of tools out there to help you create your own custom analysis, or use others (and edit them to your needs). Check out more below:

- [Helper code and packages for use on DLC outputs](https://github.com/DeepLabCut/DLCutils)

- Create your own machine learning classifiers: https://scikit-learn.org/stable/

- **REVIEW PAPER:** [Toward a Science of Computational Ethology](https://www.sciencedirect.com/science/article/pii/S0896627314007934)

- **REVIEW PAPER:** The state of animal pose estimation w/ deep learning i.e. "Deep learning tools for the measurement of animal behavior in neuroscience" [arXiv](https://arxiv.org/abs/1909.13868) & [published version](https://www.sciencedirect.com/science/article/pii/S0959438819301151)

- **REVIEW PAPER:** [Big behavior: challenges and opportunities in a new era of deep behavior profiling](https://www.nature.com/articles/s41386-020-0751-7)

- **READ**: [Automated measurement of mouse social behaviors using depth sensing, video tracking, and machine learning](https://www.pnas.org/content/112/38/E5351)
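
As a first step toward your own analysis, the sketch below reads pose output and derives a simple kinematic feature (speed). It is a minimal sketch, assuming the single-animal CSV layout that DeepLabCut writes when videos are analyzed with `save_as_csv=True` (three header rows: scorer, bodyparts, coords; then one row per frame with x, y, likelihood per bodypart). `read_dlc_csv` and `speeds` are hypothetical helpers, and the 0.6 cutoff is only an example threshold.

```python
import csv
import io
import math
from typing import Dict, List, TextIO, Tuple

Track = List[Tuple[float, float, float]]  # (x, y, likelihood) per frame


def read_dlc_csv(handle: TextIO) -> Dict[str, Track]:
    """Parse a single-animal DeepLabCut CSV into {bodypart: [(x, y, p), ...]}."""
    rows = [row for row in csv.reader(handle) if row]
    bodyparts = rows[1][1:]          # second header row names each column's bodypart
    tracks: Dict[str, Track] = {}
    for row in rows[3:]:             # data starts after the three header rows
        values = row[1:]             # first column is the frame index
        for start in range(0, len(values), 3):  # columns come as x, y, likelihood
            x, y, p = (float(v) for v in values[start:start + 3])
            tracks.setdefault(bodyparts[start], []).append((x, y, p))
    return tracks


def speeds(track: Track, fps: float, pcutoff: float = 0.6) -> List[float]:
    """Frame-to-frame speed in pixels/second; NaN where either point is uncertain."""
    out = []
    for (x0, y0, p0), (x1, y1, p1) in zip(track, track[1:]):
        if min(p0, p1) < pcutoff:
            out.append(float("nan"))
        else:
            out.append(math.hypot(x1 - x0, y1 - y0) * fps)
    return out


# Tiny made-up file standing in for real output.
demo = io.StringIO(
    "scorer,net,net,net\n"
    "bodyparts,nose,nose,nose\n"
    "coords,x,y,likelihood\n"
    "0,0.0,0.0,0.99\n"
    "1,3.0,4.0,0.98\n"
    "2,3.0,4.0,0.10\n"
)
nose = read_dlc_csv(demo)["nose"]
print(speeds(nose, fps=30.0))  # [150.0, nan]
```

Features like these (speeds, distances between bodyparts, angles) are the typical inputs for the scikit-learn classifiers mentioned above.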

*compiled and edited by Mackenzie Mathis*
