{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

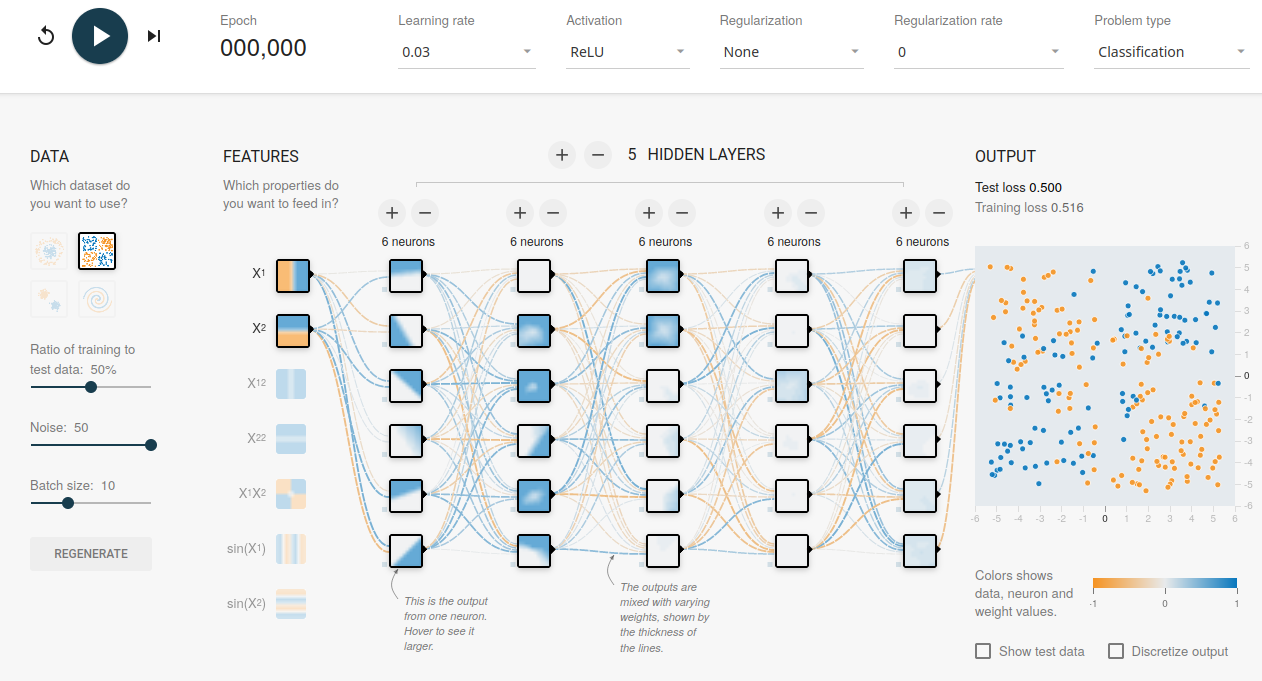

"# Exercise 5.1 - Solution\n",

"## Overtraining and Regularization\n",

"Open the TensorFlow Playground\n",

"(https://playground.tensorflow.org) and select the checkerboard\n",

"pattern on the left as the dataset (see [Exercise 3.3](Exercise_3_3.ipynb)). As input features, select the two\n",

"independent variables $x_1$ and $x_2$ and set the noise to $50\\%$.\n",

"\n",

"\n",

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=0&noise=50&networkShape=6,6,6,6,6&seed=0.82577&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"## Tasks\n",

"1. Choose a deep (many layers) and wide (many nodes) network and train it for more than 1000 epochs. Comment on your observations.\n",

"2. Apply L2 regularization to reduce overfitting. Try low and high regularization rates. What do you observe?\n",

"3. Compare the effects of L1 and L2 regularization."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Solutions"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

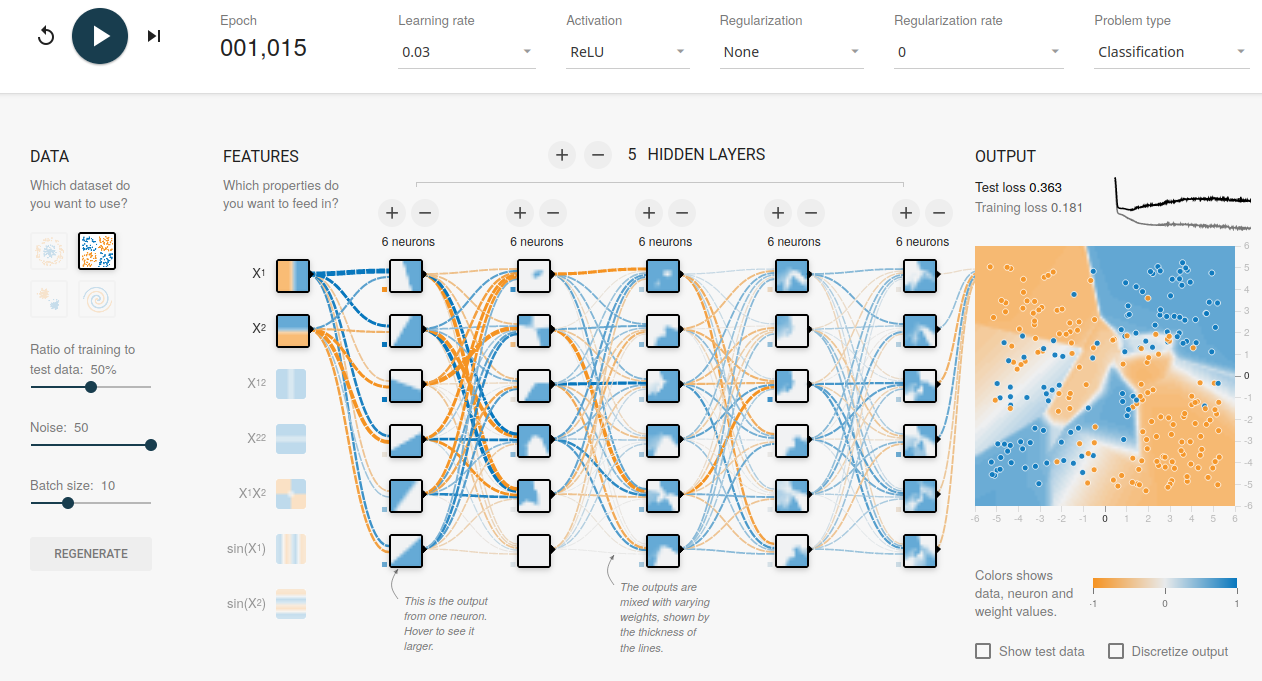

"### Task 1.\n",

"Choose a deep (many layers) and wide (many nodes) network and train it for more than 1000 epochs. Comment on your observations.\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=0&noise=50&networkShape=6,6,6,6&seed=0.38222&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"Overtraining is observed: the network fits the statistical fluctuations (noise) of the training data instead of the underlying checkerboard pattern."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

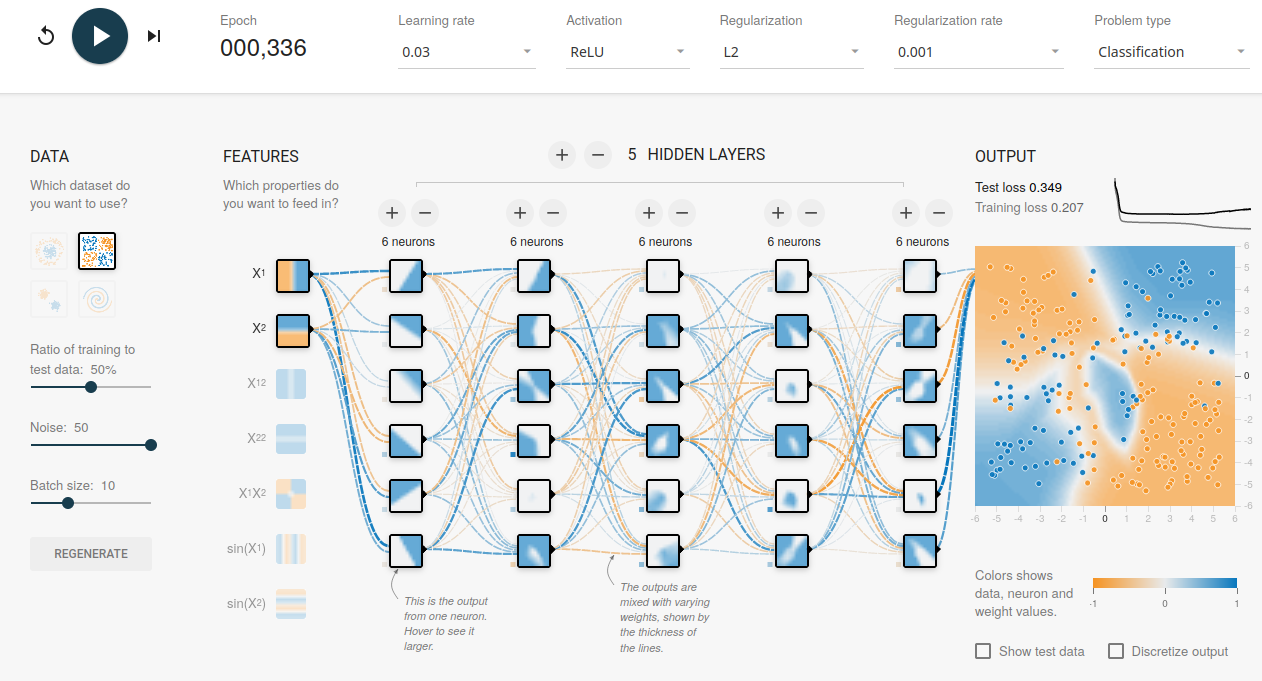

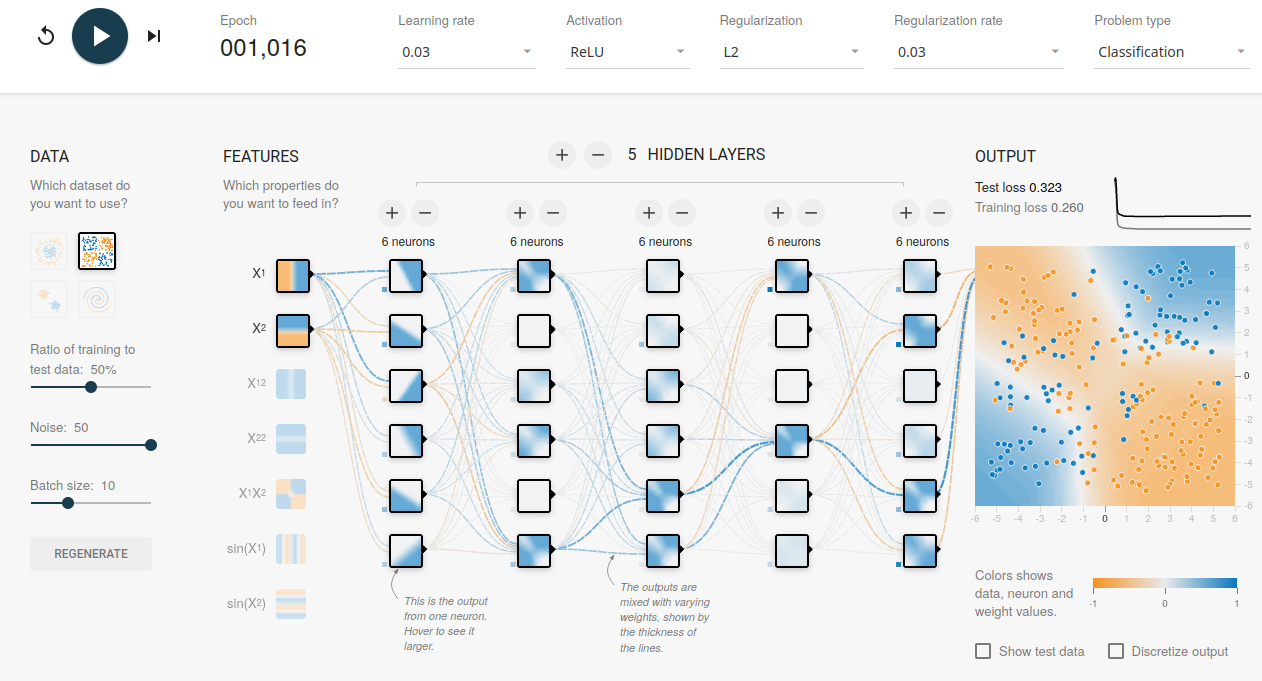

"## Task 2\n",

"Apply L2 regularization to reduce overfitting. Try low and high regularization rates. What do you observe?\n",

"\n",

"#### Low regularization rates"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=0.001&noise=50&networkShape=6,6,6,6,6&seed=0.82577&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"For very low regularization rates ($\\lambda \\rightarrow 0$), the L2 norm penalty contributes almost nothing to the training objective. Thus, the network still overfits.\n",

"\n",

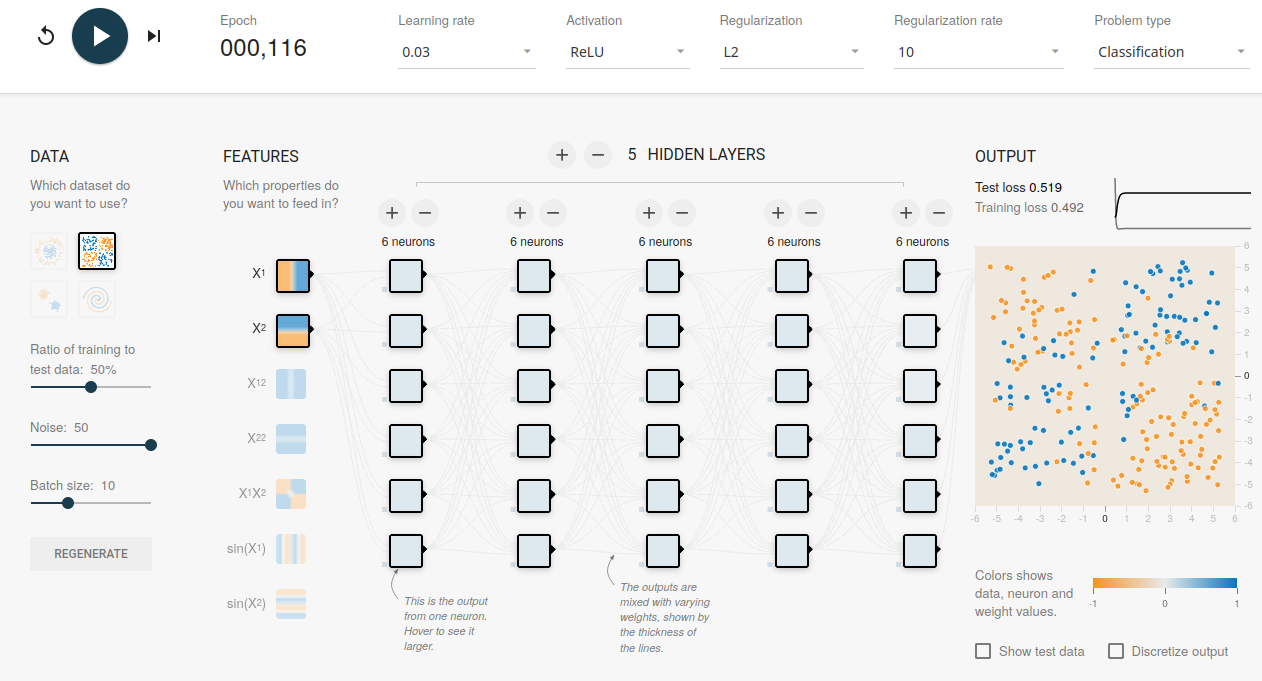

"#### High regularization rates\n",

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=10&noise=50&networkShape=6,6,6,6,6&seed=0.82577&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"\n",

"For high regularization rates ($\\lambda \\gg 0$), the L2 norm penalty dominates the training objective. Thus, all adaptive parameters are pushed toward zero and the network can no longer describe the data.\n",

"\n",

"#### Moderate regularization rates\n",

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=10&noise=50&networkShape=6,6,6,6,6&seed=0.82577&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"For moderate regularization rates, the penalty suppresses the fit to noise while the network can still learn the checkerboard pattern, so no overtraining is observed.\n"

]

},
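{
"cell_type": "markdown",
"metadata": {},
"source": [
"The three regimes of Task 2 can be read off the regularized objective. As a sketch, assuming the standard form of an L2 penalty and plain gradient descent (the symbols $\\mathcal{L}_0$ for the unregularized loss, $w_i$ for the weights and $\\eta$ for the learning rate are notation introduced here, and the factor $1/2$ is only a common convention, not something confirmed for the Playground), the loss being minimized is\n",
"\n",
"$$\n",
"\\mathcal{L}(w) = \\mathcal{L}_0(w) + \\frac{\\lambda}{2} \\sum_i w_i^2 ,\n",
"$$\n",
"\n",
"so that one update step reads\n",
"\n",
"$$\n",
"w_i \\leftarrow w_i - \\eta \\left( \\frac{\\partial \\mathcal{L}_0}{\\partial w_i} + \\lambda w_i \\right) = (1 - \\eta \\lambda)\\, w_i - \\eta \\frac{\\partial \\mathcal{L}_0}{\\partial w_i} .\n",
"$$\n",
"\n",
"Under these assumptions, and with the learning rate $\\eta = 0.03$ from the linked configurations, $\\lambda = 0.001$ gives $\\eta \\lambda = 3 \\times 10^{-5}$: the shrinkage factor $(1 - \\eta \\lambda)$ is practically $1$ and the data term alone drives the training. For $\\lambda = 10$, $\\eta \\lambda = 0.3$: every step would remove about $30\\%$ of each weight, which the data term cannot compensate, so the weights decay toward zero."
]
},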

{

"cell_type": "markdown",

"metadata": {},

"source": [

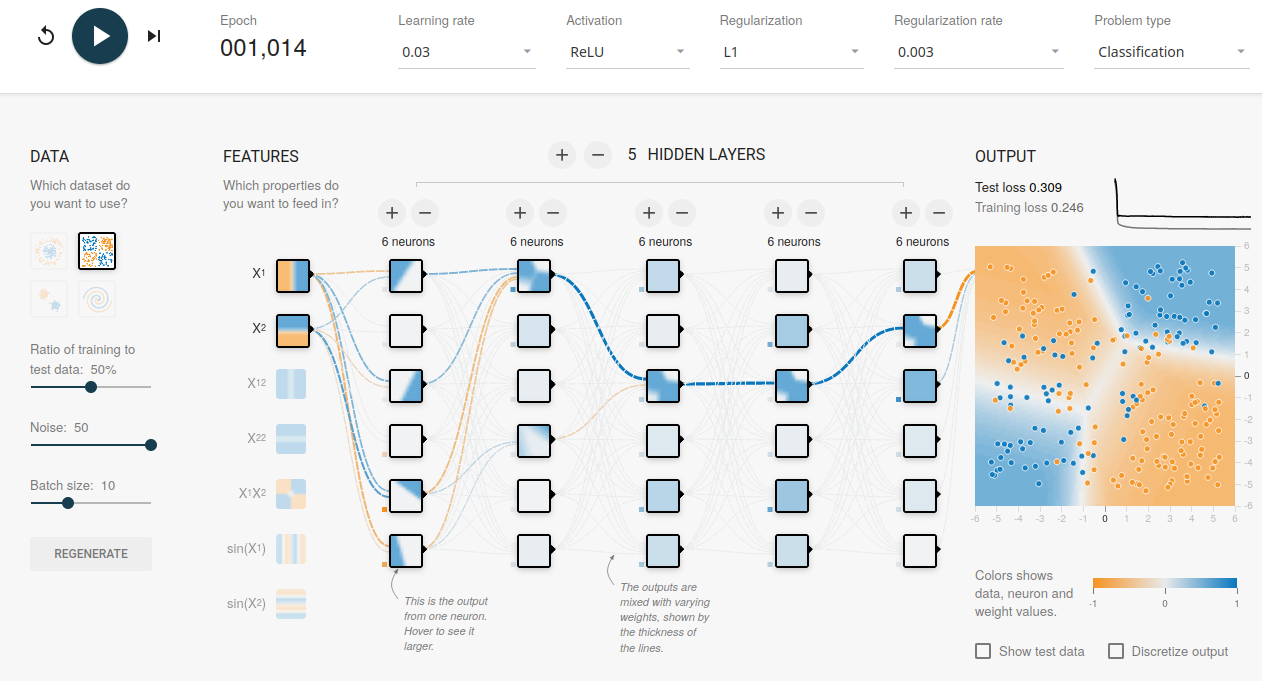

"## Task 3\n",

"Compare the effects of L1 and L2 regularization."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"[Playground configuration](https://playground.tensorflow.org/#activation=relu&batchSize=10&dataset=xor&regDataset=reg-plane&learningRate=0.03&regularizationRate=0&noise=50&networkShape=6,6,6,6,6&seed=0.82577&showTestData=false&discretize=false&percTrainData=50&x=true&y=true&xTimesY=false&xSquared=false&ySquared=false&cosX=false&sinX=false&cosY=false&sinY=false&collectStats=false&problem=classification&initZero=false&hideText=true)\n",

"\n",

"As observed in Task 2, L2 regularization with moderate regularization rates pushes the weights to smaller values, but not to zero.\n",

"\n",

"In contrast, L1 regularization pushes certain unimportant weights exactly to zero. Therefore, L1 regularization can, in principle, be used as a feature-selection technique."

]

}
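,
{
"cell_type": "markdown",
"metadata": {},
"source": [
"The different behaviour of the two penalties can be motivated by comparing their gradients. This is a sketch under the assumption of the usual penalty definitions, with $\\lambda$ the regularization rate and $w_i$ a single weight; the normalization is a convention chosen here, not taken from the Playground:\n",
"\n",
"$$\n",
"\\frac{\\partial}{\\partial w_i} \\left( \\frac{\\lambda}{2} w_i^2 \\right) = \\lambda w_i , \\qquad \\frac{\\partial}{\\partial w_i} \\left( \\lambda |w_i| \\right) = \\lambda \\, \\mathrm{sign}(w_i) \\quad (w_i \\neq 0) .\n",
"$$\n",
"\n",
"The L2 pull is proportional to the weight itself and fades as $w_i \\rightarrow 0$, so weights shrink but typically stay non-zero. The L1 pull has the constant magnitude $\\lambda$, so a weight whose loss gradient stays smaller than $\\lambda$ in magnitude is driven all the way to zero."
]
}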

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.9"

}

},

"nbformat": 4,

"nbformat_minor": 4

}