PerfView is a tool for quickly and easily collecting and viewing both time and memory performance data. PerfView uses the Event Tracing for Windows (ETW) feature of the operating system which can collect information machine wide a variety of useful events as described in the advanced collection section. ETW is the same powerful technology the windows performance group uses almost exclusively to track and understand the performance of windows, and the basis for their Xperf tool. PerfView can be thought of a simplified and user friendly version of that tool. In addition PerfView has ability to collect .NET GC Heap information for doing memory investigation (Even for very large GC heaps). PerfView's ability to decode .NET symbolic information as well as the GC heap make PerfView ideal for managed code investigations .

Deploying and Using PerfView

PerfView was designed to be easy to deploy and use. To deploy PerfView simply copy the PerfView.exe to the computer you wish to use it on. No additional files or installation step is needed. PerfView features are 'self-discoverable'. The initial display is a 'quick start' guide that leads you through collecting and viewing your first set of profile data. There is also a built in tutorial. Hovering the mouse over most GUI controls will give you short explanations, and hyperlinks send you to the most appropriate part of this user's guide. Finally PerfView is 'right click enabled' which means that you want to manipulate data in some way, right clicking allows you to discover what PerfView's can do for you.

PerfView is a V4.6.2 .NET application. Thus you need to have installed a V4.6.2 .NET Runtime on the machine which you actually run PerfView. On Windows 10 and Windows Server 2016 has .NET V4.6.2. On other supported OS you can install .NET 4.6.2 from standalone installer. PerfView is not supported on Win2K3 or WinXP. While PerfView itself needs a V4.6.2 runtime, it can collect data on processes that use V2.0 and v4.0 runtimes. On machines that don't have V4.6.2 or later of the .NET runtime installed, it is also possible to collect ETL data with another tool (e.g. XPERF or PerfMonitor) and then copy data file to a machine with V4.6.2 and view it with PerfView.

PerfView was designed to collect and analyze both time and memory scenarios.

See also PerfView Reference Guide.

Hopefully the documentation does a reasonably good job of answering your most common questions about PerfView and performance investigation in general. If you have a question, you should certainly start by searching the user's guide for information

Inevitably however, there will be questions that the docs don't answer, or features you would like to have that don't yet exist, or bugs you want to report. PerfView is an GitHub open source project and you should log questions, bugs or other feedback at

If you are just asking a question there is a Label called 'Question' that you can use to indicate that. If it is a bug, it REALLY helps if you supply enough information to reproduce the bug. Typically this includes the data file you are operating on. You can drag small files into the issue itself, however more likely you will need to put the data file in the cloud somewhere and refer to it in the issue. Finally if you are making a suggestion, the more specific you can be the better. Large features are much less likely to ever be implemented unless you yourself help with the implementation. Please keep that in mind.

You can get the latest version of PerfView by going to the PerfView GitHub Download Page

See Also Tutorial of a GC Heap Memory Investigation

Perhaps the best way to get started is to simply try out the tutorial example. On windows 7 it is recommended that you doc your help as described in help tips. PerfView comes with two tutorial examples 'built in'. Also we strongly suggest that any application you write have performance plan as described in part1 and part2 of Measure Early and Often for Performance .

To run the 'Tutorial' example:

You can also run the tutorial example by typing 'PerfView run tutorial' at the command line. See collecting data from the command line for more.

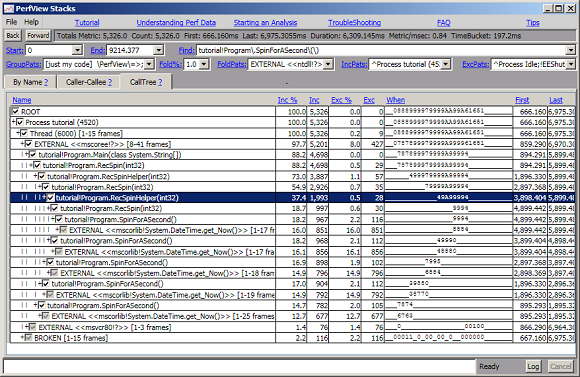

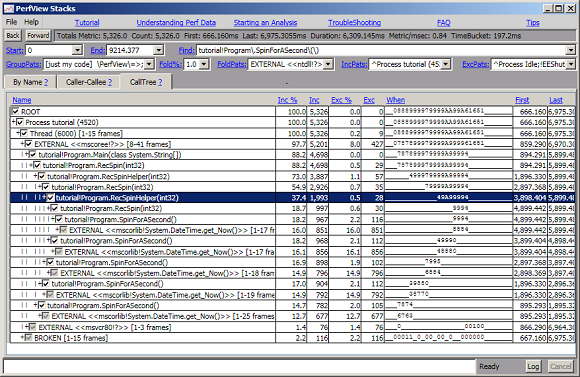

After selecting 'Tutorial.exe' as the process of interest, PerfView brings up the stack viewer looking something like this:

This view shows you where CPU time was spent. PerfView took a sample of where each processor is (including the full stack), every millisecond (see understanding perf data) and the stack viewer shows these samples. Because we told PerfView we were only interested in the Tutorial.exe process this view has been restricted (by 'IncPats') to only show you samples that were spent in that process.

It is always best to begin your investigation by looking at the summary information at the top of the view. This allows you to confirm that indeed the bulk of your performance problem is related to CPU usage before you go chasing down exactly where CPU is spent. This is what the summary statistics are for. We see that the process spent 84% of its wall clock time consuming CPU, which merits further investigation. Next we simply look at the 'When' column for the 'Main' method in the program. This column shows how CPU was used for that method (or any method it calls) over the collection time interval. Time is broken into 32 'TimeBuckets' (in this case we see from the summary statistics that each bucket was 197 msec long), and a number or letter represents what % of 1 CPU is used. 9s and As mean you are close to 100% and we can see that over the lifetime of the main method we are close to 100% utilization of 1 CPU most of the time. Areas outside the main program are probably not interesting to use (they deal with runtime startup and the times before and after process launch), so we probably want to 'zoom in' to that area.

It is pretty common that you are only interested in part of the trace. For example you may only care about startup time, or the time from when a mouse was clicked and when the menu was displayed. Thus zooming in is typically one of first operations you will want to do. zooming in is really just selecting a region of time for investigation. The region of time is displayed in the 'start' and 'end' textboxes. These can be set in three ways

Try out each of these techniques. For example to 'zoom into' just the main method, simply drag the mouse over the 'First' and 'Last' times to select both, right click, and Select Time Range. You can hit the 'Back' button to undo any changes you made so you can re-select. Also notice that each text box remembers the last several values of that box, so you can also 'go back' particular past values by selecting drop down (small down array to the right of the box), and selecting the desired value.

For GUI applications, it is not uncommon to take a trace of the whole run but then 'zoom into' points where the users triggered activity. You can do this by switching to the 'CallTree' tab. This will show you CPU starting from the process itself. The first line of is the View is 'Process32 tutorial.exe' and is a summary of the CPU time for the entire process. The 'when' column shows you CPU for the process over time (32 time buckets). In a GUI application there will be lulls where no CPU was used, followed by bursts of higher CPU use corresponding to user actions. These show up in the numbers in the 'when' column. By clicking on a cell in the 'when' column, selecting a range, right clicking and selecting SetTimeRange (or Alt-R), you can zoom into one of these 'hot spots' (you may have to zoom in more than once). Now you have focused in on what you are interested in (you can confirm by looking at the methods that are called during that time). This is a very useful technique.

For managed applications, you will always want to zoom into the main method before starting your investigation. The reason is that when profile data is collected, after Main has exited, the runtime spends some time dumping symbolic information to the ETW log. This is almost never interesting, and you want to ignore it in your investigation. Zooming into the Main method will do this.

Right clicking, and select 'Lookup Symbols'. After looking up the symbols it will become

If you are doing an unmanaged investigation there are probably a handful of DLLs you will need symbols for. A common workflow is to look at the byname view and while holding down the CTRL key select all the cells that contain dlls with large CPU time but unresolved symbols. Then right click -> Lookup Symbols, and PerfView will look them all up in bulk. See symbol resolution for more details or if lookup symbols fails.

PerfView starts you with the 'ByName view' for doing a bottom-up analysis (see also starting an analysis). In this view you see every method that was involved in a sample (either a sample occurred in the method or the method called a routine that had a sample). Samples can either be exclusive (occurred in within that method), or inclusive (occurred in that method or any method that method called). By default the by name view sorts methods based on their exclusive time (see also Column Sorting). This shows you the 'hottest' methods in your program.

Typically the problem with a 'bottom-up' approach is that the 'hot' methods in your program are

In both cases, you don't want to see these helper routines, but rather the lowest 'semantically interesting' routine. This is where PerfView's powerful grouping features comes into play. By default PerfView groups samples by

For example, the top line in the ByName view is

This is an example of an 'entry group'. 'OTHER' is the group's name and mscorlib!System.DateTime.get_Now() is the method that was called that entered the group. From that point on any methods that get_Now() calls that are within that group are not shown, but rather their time is simply accumulated into this node. Effectively this grouping says 'I don't want to see the internal workings of functions that are not my code, but I do want see public methods I used to call that code. To give you an idea of how useful this feature is, simply turn it off (by clearing the value in the 'GroupPats' box), and view the data. You will see many more methods with names of internal functions used by 'get_Now' which just make your analysis more difficult. (You can use the 'back' button to quickly restore the previous group pattern).

The other feature that helps 'clean up' the bottom-up view is the Fold % feature. This feature will cause all 'small' call tree nodes (less than the given %) to be automatically folded into their parent. Again you can see how much this feature helps by clearing the textbox (which means no folding). With that feature off, you will see many more entries that have 'small' amounts of time. These small entries again tend to just add 'clutter' and make investigation harder.

A quick way of accomplishing (2) is to add the pattern '!?' . This pattern says to fold away any nodes that don't have a method name. See foldPats textbox for more. This leaves us with very 'clean' function view that has only semantically relevant nodes in it.

Review: what all this time selection, grouping and folding is for?

The first phase of a perf investigation is forming a 'perf model' The goal is it assign times to SEMANTICALLY RELEVANT nodes (things the programmer understands and can do something about). We do that by either forming a semantically interesting group and assigning nodes to it, or by folding the node into an existing semantically relevant group or (most commonly) leveraging entry points into large groups (modules and classes), as handy 'pre made' semantically relevant nodes. The goal is to group costs into a relatively small number (< 10) of SEMANTICALLY RELEVANT entries. This allows you to reason about whether that cost is appropriate or not, (which is the second phase of the investigation).

One of the nodes that is left is a node called 'BROKEN'. This is a special node that represents samples whose stack traces were determined to be incomplete and therefore cannot be attributed properly. As long as this number is small (< a few %) then it can simply be ignored. See broken stacks for more.

PerfView displays both the inclusive and exclusive time as both a metric (msec) as well as a % because both are useful. The percentage gives you a good idea of the relative cost of the node, however the absolute value is useful because it very clearly represents 'clock time' (e.g. 300 samples represent 300 msec of CPU time). The absolute value is also useful because when the value gets significantly less than 10 it becomes unreliable (when you have only a handful of samples they might have happened 'by pure chance' and thus should not be relied upon.

The bottom up view did an excellent job of determining that the get_Now() method as well as the 'SpinForASecond' consume the largest amount of time and thus are worth looking at closely. This corresponds to our expectations given the source code in Tutorial.cs. However it can also be useful to understand where CPU time was consumed from the top down. This is what the CallTree view is for. Simply by clicking the 'CallTree' tab of the stack viewer will bring you to that view. Initially the display only shows the root node, but you can open the node by clicking on the check box (or hitting the space bar). This will expand the node. As long as a node only has one child, the child node is also auto-expanded, to save some clicking. You can also right click and select 'expand-all' to expand all nodes under the selected node. Doing this on the root node yields the following display

Notice how clean the call tree view is, without a lot of 'noise' entries. In fact this view does a really good job of describing what is going on. Notice it clearly shows the fact that Main calls 'RecSpin, which runs for 5 seconds (from 894ms to 5899msec) consuming 4698 msec of CPU while doing so (The CPU is not 5000msec because of the overheads of actually collecting the profile (and other OS overhead which is not attributed to this process as well as broken stacks), which typically run in the 5-10% range. In this case it seems to be about 6%). The 'When' column also clearly shows how one instance of RecSpin runs SpinForASecond (for exactly a second) and then calls a RecSpinHelper which consumes close to 100% of the CPU for the rest of the time. . The call Tree is a wonderful top-down synopsis.

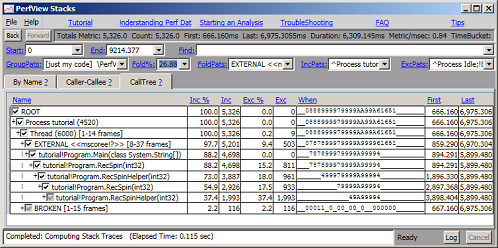

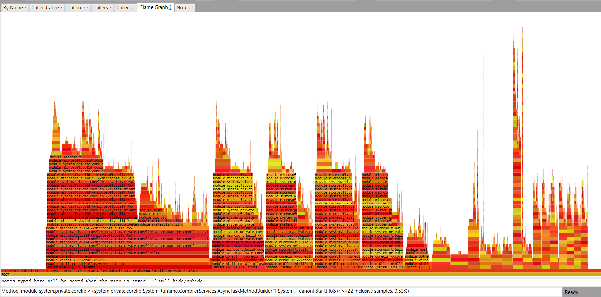

All of the filtering and grouping parameters at the top of the view affect any of the view (byname, caller-callee or CallTree), equally. We can use this fact and the 'Fold %' functionality to get an even coarser view of the 'top' of the call tree. With all nodes expanded, simply right click on the window and select 'Increase Fold %' (or easier hit the F7 key). This increases the number in the Fold % textbox by 1.6X. By hitting the F7 key repeatedly you keep trimming down the 'bottoms' of the stacks until you only see methods that use a large amount of CPU time. The following image shows the CallTreeView after hitting F7 seven times.

You can restore the previous view by either using the 'Back' button, the Shift-F7 key (which decreases the Fold%) or by simply selecting 1 in the Fold% box (e.g. from the drop down menu).

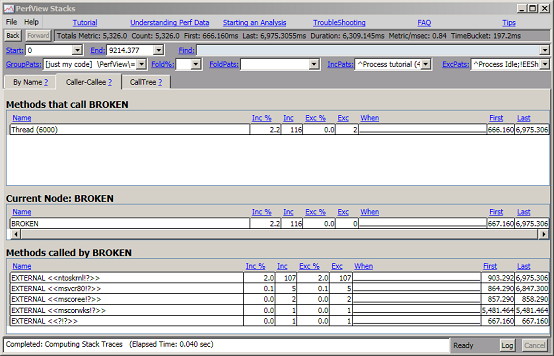

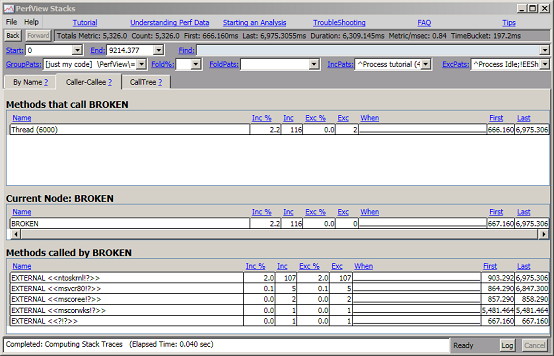

Getting a course view of the tree is useful but sometimes you just want to restrict your attention to what is happening at a single node. For example, if the inclusive time for BROKEN stacks is large, you might want to view the nodes under 'BROKEN' stacks to get an idea what samples are 'missing' from their proper position in the call tree. you can do this easily by viewing the BROKEN node in the Caller-callee view. To do this right click on the BROKEN node, and select Goto -> Caller-callee (or type Alt-C). Because so few samples are in our trace are BROKEN this node is not very interesting. By setting Fold % to 0 (blank) you get the following view

The view is broken in to three grids. The middle piece shows the 'current node', in this case 'BROKEN'. The top grid shows all nodes that call into this focus node. In the case of BROKEN nodes are only on one thread. The bottom graph shows all nodes that are called by 'BROKEN' sorted by inclusive time. We can see that most of the broken nodes came from stacks that originated in the 'ntoskrnl' dll (this is the Windows OS Kernel) To dig in more we would first need to resolve symbols for this DLL. See symbol resolution for more.

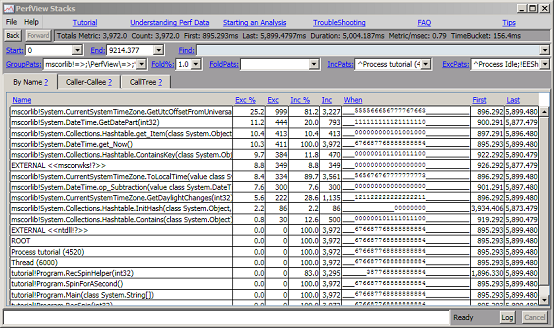

While groups are a very powerful feature for understanding the performance of your program at a 'coarse' level, inevitably, you wish to 'Drill into' those groups and understand the details of PARTICULAR nodes in detail. For example, If we were a developer responsible for the DateTime.get_Now(), we would not be interested in the fact that it was called from 'SpinForASecond' routine but what was going on inside. Moreover we DON'T want to see samples from other parts of the program 'cluttering' the analysis of get_Now(). This is what the 'Drill Into' command is for. If we go back to the 'ByName' view and select the 3792 samples 'Inc' column of the 'get_Now' right click, and select 'Drill Into', it brings a new window where ONLY THOSE 3792 samples have been extracted.

Initially Drilling in does not change any filter/grouping parameters. However, now that we have isolated the samples of interest, we are free to change the grouping and folding to understand the data at a new level of abstraction. Typically this means ungrouping something. In this case we would like to see the detail of how mscorlib!get_Now() works, so we want to see details inside mscorlib. To do this we select the 'mscorlib!DateTime.get_Now() node, right click, and select 'Ungroup Module'. This indicates that we wish to ungroup any methods that were in the 'mscorlib' module. This allows you to see the 'inner structure' of that routine (without ungrouping completely) The result is the following display

At this point we can see that most of the 'get_Now' time is spend in a function called 'GetUtcOffsetFromUniversalTime' and 'GetDatePart' We have the full power of the stack viewer at our disposal, folding, grouping, using CallTree or caller-callee views to further refine our analysis. Because the 'Drill Into' window is separate from its parent, you can treat is as 'disposable' and simply discard it when you are finished looking at this aspect of your program's performance.

In the example above we drilled into the inclusive samples of method. However you can also do the same thing to drill into exclusive samples. This is useful when user callbacks or virtual functions are involved. Take for example a 'sort' routine that has internal helper functions. In that case it can be useful to segregate those samples that were part of the nodes 'internal helpers' (which would be folded up as exclusive samples of 'sort') from those that were caused by the user 'compare' function (which would typically not be grouped as exclusive samples because it crossed a module boundary). By drilling into the exclusive samples of 'sort' and then ungrouping, you get to see just those samples in 'sort' that were NOT part of the user callback. Typically this is EXACTLY what the programmer responsible for the 'sort' routine would want to see.

Once the analysis has determined methods are potentially inefficient, the next step is to understand the code enough to make an improvement. PerfView helps with this by implementing the 'Goto Source' functionality. Simply select a cell with a method name in it, right click and choose Goto Source (or use Alt-D (D for definition)). PerfView with then attempt to look up the source code and if successful will launch a text editor window. For example, if you select the 'SpinForASecond' cell in the ByName view and select Goto Source the following window is displayed.

As you can see, the particular method is displayed and each line has been prefixed with the cost (in this case CPU MSec) spent on that line. in this view it shows 4.9 seconds of CPU time were spent on the first line of the method.

Unfortunately, prior to V4.5 of the .NET Runtime, the runtime did not emit enough information into the ETL file to resolve a sample down to a line number (only to a method). As a result while PerfView can bring up the source code, it can't accurately place samples on particular lines unless the code was running on V4.5 or later. When PerfView does not have the information it needs it simply attributes all the cost to the first line of the method. This is in fact what you see in the example above. If you run your example on a V4.5 runtime, you would get a more interesting distribution of cost. This problem does not exist for native code (you will get line level resolution). Even on old runtime versions, however, you at least have an easy way to navigate to the relevant source.

PerfView finds the source code by looking up information in the PDB file associated with the code. Thus the first step is that PerfView must be able to find the PDB file. By default most tools will place the complete path of the PDB file inside the EXE or DLL it builds, which means that if you have not moved the PDB file (and are on the machine you built on), then PerfView will find the PDB. It then looks in the PDB file which contain the full path name of each of the source files and again, if you are on the machine that built the binary then PerfView will find the source. So if you run on the same machine you build on, it 'just works'.

However it is common to not run on the machine you built on, in which case PerfView needs help. PerfView follows the standard conventions for other tools for locating source code. In particular if the _NT_SYMBOL_PATH variable is set to a semicolon separated list of paths, it will look in those places for the PDB file. In addition if _NT_SOURCE_PATH is set to a semicolon separated list of paths, it will search for the source file in subdirectories of each of the paths. Thus setting these environment variables will allow PerfView's source code feature to work on 'foreign' machines. You can also set the _NT_SYMBOL_PATH and _NT_SOURCE_PATH inside the GUI by using the menu items on the File menu on the stack viewer menu bar.

See Also Tutorial of a Time-Based Investigation. While there currently is no tutorial on doing a GC heap analysis, if you have not walked the time based investigation tutorial you should do so. Many of the same concepts are used in a memory investigation. You should also take a look at

TUTORIAL NOT COMPLETE

As mentioned in the introduction, ETW is light weight logging mechanism built into the Windows Operating system that can collect a broad variety of information about what is going on in the machine. There are two ways PerfView supports for collecting ETW profile data.

You can also automate the collection of profile data by using command line options. See collecting data from the command line for more.

If you intend to do a wall clock time investigation

By default PerfView chooses a set of events that does not generate too much data but is useful for a variety of investigations. However wall clock investigations require events that are too voluminous to collect by default. Thus if you wish to do a wall clock investigation, you need to set the 'Thread Time' checkbox in the collection dialog.

If you intend to copy the ETL file to another machine for analysis

By default to save time PerfView does NOT prepare the ETL file so that it can be analyzed on a different machine (see merging). Moreover, there is symbolic information (PDBS for NGEN images), that also needs to be included if the data is to work well on any machine). If you are intending to do this you need to merge and include the NGEN pdbs by using the 'ZIP' command. You can do this either by

Once the data has been zipped not only does the file contain all the information needed to resolve symbolic information, but it also has been compressed for faster file copies. If you intend to use the data on another machine, please specify the ZIP option.

The result of collecting data is an ETL file (and possibly a .kernel.ETL file as discussed in merging). When you double click on the file in the main viewer it opens up 'children views' of the data that was collected. One of these items will be the 'CPU Stacks' view. Double clicking on that will bring up a stack viewer to view the samples collected. The data in the ETL file contains CPU information for ALL processes in the system, however most analyses concentrate on a single process. Because of this before the stack viewer is displayed a dialog box to select a process of interest is displayed first.

By default, this dialog box contains a list of all processes that were active at the time the trace was collected sorted by the amount of CPU time each process consumed. If you are doing a CPU investigation, there is a good chance the process of interest is near the top of this list. Simply double clicking on the desired process will bring up the stack viewer filtered to the process you chose.

The process view can be sorted by any of the columns by clicking on the column header. Thus if you wish to find the process that was started most recently you can sort by start time to find it quickly. If the view is sorted by name, if you type the first character of the process name it will navigate to the first process with that name.

Process Filter Textbox The box just above the list of processes. If you type text in this box, then only processes that match this string (PID, process name or command line, case insensitive) will be displayed. The * character is a wild card. This is a quick way of finding a particular process.

If you wish to see samples for more than one process for your analysis click the 'All Procs' button.

Note that the ONLY effect of the process selection dialog box is to add an 'Inc Pats' filter that matches the process you chose. Thus the dialog box is really just a 'friendly interface' to the more powerful filtering options of the stack viewer. In particular, the stack viewer still has access to all the samples (even those outside the process you selected), it is just that it filters it out because of the include pattern that was set by the dialog box. This means that you can remove or modify this filter at a later point in the analysis.

The data shown by default in the PerfView stack viewer are stack traces taken every millisecond on each processor on the system. Every millisecond, whatever process is running is stopped and the operating system 'walks the stack' associated with the running code. What is preserved when taking a stack trace is the return address of every method on the stack. Stackwalking may not be perfect. It is possible that the OS can't find the next frame (leading to broken stacks) or that an optimizing compiler has removed a method call (see missing frames), which can make analysis more difficult. However for the most part the scheme works well, and has low overhead (typically 10% slowdown), so monitoring can be done on 'production' systems.

On lightly loaded system, many CPUs are typically in the 'Idle' process that the OS run when there is nothing else to do. These samples are discarded by PerfView because they are almost never interesting. All other samples are kept however, regardless of what process they were taken from. Most analyses focus on a single process, and further filter all samples that did not occur in the process of interest, however PerfView also allows you to also look at samples from all processes as one large tree. This is useful in scenarios where more than one process is involved end-to-end, or when you need to run an application several times to collect enough samples.

Because the samples are taken every millisecond per processor, each sample represents 1 millisecond of CPU time. However exactly where the sample is taken is effectively 'random', and so it is really 'unfair' to 'charge' the full millisecond to the routine that happened to be running at the time the sample was taken. While this is true, it is also true that as more samples are taken this 'unfairness' decreases as the square root of the number of samples. If a method has just 1 or 2 samples it could be just random chance that it happened in that particular method, but methods with 10 samples are likely to have truly used between 7 and 13 samples (30% error). Routines with 100 samples are likely to be within 90 and 110 (10% error). For 'typical' analysis this means you want at least 1000 and preferably more like 5000 samples (There are diminishing returns after 10K). By collecting a few thousand samples you ensure that even moderately 'warm' methods will have at least 10 samples, and 'hot' methods will have at least 100s, which keep the error acceptably small. Because PerfView does not allow you to vary the sampling frequency, this means that you need to run the scenario for at least several seconds (for CPU bound tasks), and 10-20 seconds for less CPU bound activities.

If the program you wish to measure cannot easily be changed to loop for the required amount of time, you can create a batch file that repeatedly launches the program and use that to collect data. In this case you will want to view the CPU samples for all processes, and then use a GroupPat that erases the process ID (e.g. process {%}=>$1) and thus groups all processes of the same name together.

Even with 1000s of samples, there is still 'noise' that is at least in the 3% range (sqrt(1000) ~= 30 = 3%). This error gets larger as the methods / groups being investigated have fewer samples. This makes it problematic to use sample based profiling to compare two traces to track down small regressions (say 3%). Noise is likely to be at least as large as the 'signal' (diff) you are trying to track down. Increasing the number of samples will help, however you should always keep in mind the sampling error when comparing small differences between two traces.

Because a stack trace is collected for each sample, every node has both an exclusive metric (the number of samples that were collected in that particular method) and an inclusive metric (the number of samples that collected in that method or any method that method called). Typically you are interested in inclusive time, however it is important to realize that folding (see FoldPats and Fold %) and grouping artificially increase exclusive time (it is the time in that method (group) and anything folded into that group). When you wish to see the internals of what was folded into a node, you Drill Into the groups to open a view where the grouping or folding can be undone.

If you have not done so, consider walking through the tutorial and best practices from Measure Early and Often for Performance .

The default stack viewer in PerfView analyzes CPU usage of your process. There are three things that you should always do immediately when starting a CPU analysis of a particular process.

If either of the above conditions fail, the rest of your analysis will very likely be inaccurate. If you don't have enough samples you need to go back and recollect so that you get more, modifying the program to run longer, or running the program many times to accumulate more samples. If you program is running for long enough (typically 5-20 seconds), and you still don't have at least 1000 samples, it is likely it is because CPU is NOT the bottleneck. It is very common in STARTUP scenarios that CPU is NOT the problem but that the time is being spent fetching data from the disk. It is also possible that the program is waiting on network I/O (server responses) or responses from other processes on the local system. In all of these cases the time being wasted is NOT governed by how much CPU time is used, and thus a CPU analysis is inappropriate.

You can quickly determine if your process is CPU bound by looking at the 'When' column for your 'top most' method. If the When column has lots of 9s or As in it over the time it is active then it is likely the process was CPU bound during that time. This is the time you can hope to optimize and if it is not a large fraction of the total time of your app, then optimizing it will have little overall effect (See Amdahl's Law). Switching to the CallTree view and looking at the 'When' column of some of the top-most methods in the program is a good way of confirming that your application is actually CPU bound..

Finally you may have enough samples, but you lack the symbolic information to make sense of them. This will manifest with names with ? in them. By default .NET code should 'just work'. For unmanaged code you need to tell PerfView which DLLs you are interested in getting symbols for. See symbol resolution for more. You should also quickly check that you don't have many broken stacks as this too will interfere with analysis.

Once you have determined that CPU is actually important to optimize you have a choice of how to do your analysis. Performance investigations can either be 'top-down' (starting with the Main program and how the time spent there is divided into methods it calls), or 'bottom-up' (starting with methods at 'leaf' methods where samples were actually taken, and look for methods that used a lot of time). Both techniques are useful, however 'bottom-up' is usually a better way to start because methods at the bottom tend to be simpler and thus easier to understand and have intuition about how much CPU they should be using.

PerfView starts you out in the 'ByName' view that is appropriate starting point for a bottom-up analysis. It is particularly important in a bottom up analysis to group methods into semantically relevant groupings. By default PerfView picks a good set starting group (called 'just my code'). In this grouping any method in any module that lives in a directory OTHER than the directory where the EXE lives, is considered 'OTHER' and the entry group feature is used group them by the method used to call out to this external code. See the tutorial more on the meaning of 'Just My Code' grouping, and the GroupPats reference for more on grouping.

For simple applications the default grouping works well. There are other predefined groupings in the dropdown of the GroupPats box, and you are free to create or extend these as you need. You know that you have a 'good' set of groupings when what you see in the 'ByName' view are method names that are semantically relevant (you recognize the names, and know what their semantic purpose is), there are not too many of them (less than 20 or so that have an interesting amount of exclusive time), but enough that break the program into 'interesting' pieces that you can focus on in turn (by Drilling Into).

One very simple way of doing this is to increase the Fold % , which folds away small nodes. There is a shortcuts that increase (F7 key) or decrease (Shift F7) this by 1.6X. Thus by repeatedly hitting F7, you can 'clump' small nodes into large nodes until only a few survive and are displayed. While this is fast and easy, it does not pay attention to how semantically relevant the resulting groups are. As a result it may group things in poor ways (folding away small nodes that were semantically relevant, and grouping them into 'helper routines' that you don't much want to see). Nevertheless, it is so fast and easy it is always worth at least trying to see what happens. Moreover it is almost always valuable to fold away truly small nodes. Even if a node is semantically relevant, if it uses < 1% of the total CPU time, you probably don't care about it.

Typically the best results occur when you use Fold % in the 1-10% range (to get rid of the smallest nodes), and then selectively fold way any semantically uninteresting nodes that are left. This can be done easily looking at the 'ByName' view, holding the 'Shift' key down, and selecting every node on the graph that has some exclusive time (they will be toward the top), and you DON'T recognize. After you have completed your scan, simply right click and select 'Fold Item' and these node will be folded into their caller disappearing from the view. Repeat this until there are no nodes in the display that use exclusive time that are semantically irrelevant. What you have left is what you are looking for.

During the first phase of an investigation you spend your time forming semantically relevant groups so you can understand the 'bigger picture' of how the time spent in hundreds of individual methods can be assigned a 'meaning'. Typically the next phase is to 'Drill into' one of these groups that seems to be using too much time. In this phase you are selectively ungrouping a semantic group to understand what is happening at the next 'lower level' of abstraction.

You accomplish this with two commands

Typically if 'Ungroup' or 'Ungroup Module command does not work well, use 'Clear all Folding' If that does not work well, clear the 'GroupPats' textbox which will show you the most 'ungrouped' view. if this view is too complex, you can then use explicit folding (or making ad-hoc groups), to build up a new semantic grouping (just like in the first phase of analysis).

In summary, a CPU performance analysis typically consist of three phases

It is pretty clear the benefit of optimizing for time: your program goes faster, which means your users are not waiting as long. For memory it is not as clear. If your program uses 10% more memory than it could who cares? There is a useful MSDN article called Memory Usage Auditing for .NET Applications which will be summarized here. Fundamentally, you really only care about memory when it affects speed, this happens when your app gets big (Memory used as indicated by TaskManager > 50 Meg). Even if your application is small, however, it is so easy to do a '10 minute memory audit' of your applications total memory usage and the .NET's GC heap, that you really should do so for any application that performance matters at all. Literally in seconds you can get a dump of the GC heap, and be seeing if the memory 'is reasonable'. If your app does use 50Meg or 100 Meg of memory, then it probably is having an important performance impact and you need to take more time to optimized its memory usage. See the article for more details.

Even if you have determined that you care about memory, it is still not clear that you care about the GC heap. If the GC heap is only 10% of your memory usage then you should be concentrating your efforts elsewhere. You can quickly determine this by opening TaskManager, selecting the 'processes' tab an finding your processes 'Memory (Private Working Set) value . (See Memory Usage Auditing for .NET Applications on an explanation of Private working set). Next, use PerfView to take a heap snapshot of the same process (Memory -> Take Heap Snapshot). At the top of the view will be the 'Total Metric' which in this case is bytes of memory. If GC Heap is a substantial part of the total memory used by the process, then you should be concentrating your memory optimization on the GC heap.

If you find that your process is using a lot of memory but it is NOT the GC heap, you should download the free SysInternals vmmap tool. This tool gives you a breakdown of ALL the memory used by your process (it is nicer than the vadump tool mentioned in Memory Usage Auditing for .NET Applications ). If this utility shows that the Managed heap is large, then you should be investigating that. If it shows you that the 'Heap' (which is the OS heap) or 'Private Data' (which is virtualAllocs) you should be investigating unmanaged memory .

If you have not already read When to care about Memory and When to care about the GC Heap please do so to ensure that GC memory is even relevant to your performance problem.

The Memory->Take Heap Snapshot menu item allows you to take a snapshot of the GC heap of any running .NET application. When you select this menu item it brings up a dialog box displaying all the processes on the system from which to select.

By typing a few letters of the process name in the filter textbox you can quickly reduce the number of processes shown. In the image above simply typing 'x' reduces the number of processes to 7 and typing 'xm' would be enough to reduce it to a single process (xmlView). Double clicking on the entry will select the entry and start the heap dump. Alternatively you can simply select the process with a single click and continue to update other fields of the dialog box.

If PerfView is not run as administrator it may not show the process of interest (if it is not owned by you). By clicking on the Elevate to Admin hyperlink to restart PerfView as admin to see all processes.

The process to dump is the only required field of the dialog, however you can set the others if desired. (See Memory Collection Dialog reference for more). To start the dump either click the 'Dump Heap' button or simply type the enter key.

Once you have some GC Heap data, it is important to understand what exactly you collected and what its limitations are. Logically what has been captured is a snapshot of objects in the heap that were found by traversing references from a set of roots (just like the GC itself). This means that you only discover objects that were live at the time the snapshot was taken. However two factors make this characterization inaccurate in the normal case.

For some applications GC heaps can get quite large (> 1GB and possibly 50GB or more) When GC heaps 1,000,000 objects it slows the viewer quite as well as making the size of the heap dump file very large.

To avoid this problem, by default PerfView only collects complete GC heap dumps for heaps less than 50K objects. Above that PerfView only takes a sample of the GC heap. PerfView goes to some trouble to pick a 'good' sample. In particular

The result is that all samples always contain at least one path to root (but maybe not all paths). All large objects are present, and each type has at least a representative number of samples (there may be more because of reason (5) and (6)).

GC heap sampling produces only dumps fraction of objects in the GC Heap, but we wish for that sample to represent the whole GC heap. PerfView does this by scaling the counts. Unfortunately because of the requirement to included any large object and the path to root of any object, a single number will not correctly scale the sampled heap so that it represents the original heap. PerfView solves this by remembering the Total sizes for each type in the original graph as well as the total counts in the scaled graph. Using this information, for each type it scales the COUNT for that type so that the SIZE of that type matches the original GC heap. Thus what you see in the viewer should be pretty close to what you would see in original heap (just much smaller and easier for PerfView to digest). In this way large objects (which are ALWAYS taken) will not have their counts scaled, but but the most common types (e.g. string), will be heavily scaled. You can see the original statistics and the ratios that PerfView uses to scale by looking at the log when a .gcdump file has been opened.

When PerfView displays a .gcdump file that has been sampled (and thus needs to be scaled), it will display the Average amount the COUNTS of the types have been scaled as well as the average amount the SIZES had to be scaled in the summary text box at the top of the display. This is your indication that sampling/scaling is happening, and to be aware that some sampling distortions may be present.

It is important to realize that while the scaling tries to counteract the effect of sampling (so what is display 'looks' like the true, unsampled, graph), it is not perfect. The PER-TYPE statistic SIZE should always be accurate (because that is the metric that was used to perform the scaling, but the COUNTs may not be. In particular for types whose instances can vary in size (strings and arrays), the counts may be off (however you can see the true numbers in the log file). In addition the counts and sizes for SUBSETS of the heap can be off.

For example if you drill down to one particular part of the heap (say the set of all Dictionary<string, MyType>), you might find that the count of the keys (type string) and the count of values (type MyType) are not the same. This is clearly unexpected, because each entry should have exactly one of each. This anomaly is a result of the sampling. The likelihood of an anomaly like this is inversely proportional to the size of the subset of the heap you are reasoning over. Thus when you reason about the heap as a whole, there should be no anomaly, but if you reason about a small number of objects deep in some sub-tree, the likelihood is very high.

Generally speaking, these anomalies do not tend to affect the analysis much. This is because you usually care about LARGE parts of your heap, and this is exactly where sampling is most accurate. Thus typically the correct response to these anomalies is to simply ignore them. If however they are interfering with your analysis, you can reduce or eliminate them by simply doing less sampling. The Sampling is controlled by the 'Max Dump K Objs' field. By default 250K objects are collected. If you set this number to be larger you will sample less. If you set it to some VERY large number (say 1 Billion), then the graph will not be sampled at all. Note that there is a reason why PerfView samples. When the number of objects being manipulated gets above 1 million, PerfView's viewer will noticeably lag. Above 10 million and it will be a VERY frustrating experience. There is also a good chance that PerfView will run out of memory when manipulating such large graphs. It will also make the GCDump files proportionally bigger, and unwieldy to copy. Thus changing the default should be considered carefully. Using the sampled dump is usually the better option.

As mentioned, GCHeap collection (for .NET) collects DEAD as well as live objects. PerfView does this because it allows you to see the 'overhead' of the GC (amount of space consumed, but not being used for live objects). It also is more robust (if roots or objects can't be traversed, you don't lose large amounts of the data). When the graph is displayed dead objects can be determined because they will pass through the '[not reachable from roots]' node. Typically you are not interested in the dead objects, so you can exclude dead objects by excluding this node (Alt-E).

PerfView has the ability to either freeze the process or allow it to run while the GC heap is being collected. If the process is frozen, the resulting heap is accurate for that point in time, however since even sampling the GC heap can take 10s of seconds, it means that the process will not be running for that amount of time. For 'always up' servers this is a problem as 10s of seconds is quite noticeable. On the other hand if you allow the process to run as the heap is collected, it means that the heap references are changing over time. In fact GCs can occur, and memory that used to point at one object might now be dead, and conversely new objects will be created that will not be rooted by the roots captured earlier in the heap dump. Thus the heap data will be inaccurate.

Thus we have a trade-off

PerfView allows both, but by default it will NOT freeze the process. The rational is that for most apps, you take a snapshot while the process is waiting for user input (and thus the process acts like it is frozen anyway). The exception is server applications. However this is precisely the case where stopping the process for 10s of seconds would likely be bad. Thus a default to allow the process to run is better in most cases.

In addition, if the heap is large, it is already the case that you will not dump all objects in the heap. As long as the objects being missed by the process running are statistically similar to the ones that did not move (likely in a server process), then your heap stats are likely to be accurate enough for most performance investigations.

Nevertheless, if for whatever reason you wish to eliminate the inaccuracy of a running process, simply use the Freeze checkbox or the /Freeze command line qualifier to indicate your desire to PerfView.

As described in Understanding GC heap data the data actually captured in a .GCDump file may only be an approximation to the GC heap. Nevertheless the .GCDump does capture the fact that the heap is an arbitrary reference graph (a node can have any number of incoming and outgoing references and the references can form cycles). Such arbitrary graphs are inconvenient from an analysis perspective because there is no obvious way to 'roll up' costs in a meaningful way. Thus the data is further massaged to turn the graph into a tree.

The basic algorithm is to do a weighted breadth-first traversal of the heap visiting every node at most once, and only keeping links that where traversed during the visit. Thus the arbitrary graph is converted to a tree (no cycles, and every node (except the root) has exactly one parent). The default weighting is designed to pick the 'best' nodes to be 'parents'. The intuition is that if you have a choice between choosing two nodes to be that parent of a particular node, you want to pick the most semantically relevant node.

The viewer of gc heap memory data has an extra 'Priority' text box, which contains patterns that control the graph-to-tree conversion by assigning each object a floating point numeric priority. This is done in a two step process, first assigning priorities to type names, and then through types assigning objects a priority.

The Priority text box is a semicolon list of expressions of the form

Where PAT is a regular expression pattern as defined in Simplified Pattern matching and NUM is a floating point number. The algorithm for assigning priorities to types is simple: find the first pattern in the list of patterns that match the type name. If the patterns match assign the corresponding priority. If no pattern matches assign a priority of 0. In this way every type is given a priority.

The algorithm for assigning a priority to an object is equally simple. It starts with the priority of its type, but it also adds in 1/10 the priority of its 'parent' in the spanning tree being formed. Thus a node gives part of its priority to its children, and thus this tends to encourage breadth first behavior (all other priorities being equal that is 2 hops away from a node with a given priority will have a higher priority than a node that is 3 hops away).

Having assigned a priority to all 'about to be traversed' nodes, the choice of the next node is simple. PerfView chooses the highest priority node to traverse next. Thus nodes with high priority are likely to be part of the spanning tree that PerfView forms. This is important because all the rest of the analysis depends on this spanning tree.

You can see the default priorities in the 'Priority' text box. The rationale behind this default is:

Thus the algorithm tends to traverse user defined types first and find the shortest path that has the most user defined types in the path. Only when it runs out of such links does it follow framework types (like collection types, GUI infrastructure, etc), and only when those are exhausted, will anonymous runtime handles be traversed. This tends to assign the cost (size) of objects in the heap to more semantically relevant objects when there is a choice.

The defaults work surprisingly well and often you don't have to augment them. However if you do assign priorities to your types, you generally want to choose a number between 1 and 10. If all types follow this convention, then generally all child nodes will be less (because it was divided by 10) than any type given an explicit type. However if you want to give a node a priority so that even its children have high priority you can give it a number between 10 and 100. Making the number even larger will force even the grandchildren to 'win' most priority comparisons. In this way you can force whole areas of the graph to be high priority. Similarly, if there are types that you don't want to see, you should give them a number between -1 and -10.

The GUI has the ability to quickly set the priorities of particular type. If you select text in the GUI right click to Priorities -> Raise Item Priority (Alt-P), then that type's priority will be increased by 1. There is a similarly 'Lower Item Priority (Shift-Alt-P). Similarly, there is a Raise Module Priority (Alt-Q) and Lower Module Priority (Shift-Alt-Q) which match any type with the same module as the selected cell.

Because the graph has been converted to a tree, it is now possible to unambiguously assign the cost of a 'child' to the parent. In this case the cost is the size of the object, and thus at the root the costs will add up to the total (reachable) size of the GC heap (that was actually sampled).

Once the heap graph has been converted to a tree, the data can be viewed in the same stackviewer as was used for ETW callstack data. However in this view the data is not the stack of the allocation but rather the connectivity graph of the GC heap. You don't have callers and callees but referrers and referees. There is no notion of time (the 'when', 'first' and 'last' columns), but the notions of inclusive and exclusive time still make sense, an the grouping and folding operations are just as useful.

It is important to note that this conversion to a tree is inaccurate in that it attributes all the cost of a child to one parent (the one in the traversal), and no cost to any other nodes that also happened to point to that node. Keep this in mind when viewing the data.

As described in Converting a Heap Graph to a Heap Tree, before the memory data can be display it is converted from a graph (where arcs can form cycles and have multiple parents) to a tree (where there is always exactly one path from the node to the root. References that are part of this tree are called primary refs and are displayed in black in the viewer. However it is useful to also see the other references that were trimmed. These other references are called secondary nodes. When secondary nodes are present, primary nodes are in bold and secondary nodes are normal font weight. Sometimes secondary nodes clutter the display so there is a 'Pri1 Only' check box, which when selected suppresses the display of secondary nodes.

Primary nodes are much more useful than secondary nodes because there is an obvious notion of 'ownership' or 'inclusive' cost. It makes sense to talk about the cost of a node and all of its children for primary nodes. Secondary nodes do not have this characteristic. It is very easy to 'get lost' opening secondary nodes because you could be following a loop and not realize it. To help avoid this, each secondary nodes is labeled with its 'minimum depth'. This number is the shortest PRIMARY path from any node in the set to the root node. Thus if you are trying to find a path to root with secondary nodes, following nodes with small depth will get you there.

Generally, however it is better to NOT spend time opening secondary nodes. The real purpose of showing these nodes is to allow you to determine if your priorities in the Priority Text Box are appropriate. If you find yourself being interested in secondary nodes, there is a good chance that the best response is to simply add a priority that will make those secondary nodes primary ones. By doing this you can get sensible inclusive metrics, which are the key to making sense of the memory data.

One good way of setting priorities is to us the right click -> Priority -> Increase Priority (Alt-P) and right click -> Priority -> Decrease Priority (Alt-Q) commands. By selecting a node that is either interesting, or explicitly not interesting and executing these commands you can raise or lower its priority and thus cause it to be in the primary tree (or not).

This section assumes you have taken determined that the GC heap is relevant , that you have collected a GC Snapshot and that you understand how the heap graph was converted to a tree and how the heap data was scaled. In addition to the 'normal' heap analysis done here, it can also be useful to review the bulk behavior of the GC with the GCStats report as well as GC Heap Alloc Ignore Free (Coarse Sampling) view.

Like a CPU time investigation, a GC heap investigation can be done bottom up or top down. Like a CPU investigation, a bottom up investigation is a good place to start. This is even more true for memory then it was for CPU. The reason is that unlike CPU, the tree that is being displayed in the view is not the 'truth' because the tree view does not represent the fact that some nodes are referenced by more than one node (that is they have multiple parents). Because of this the top down representation is a bit 'arbitrary' because you can get different trees depending on details of exactly how the breadth first traversal of the graph was done. A bottom up analysis is relatively immune to such inaccuracy and thus is a better choice.

Like a CPU investigation, a bottom up heap investigation starts with forming semantically relevant groups by 'folding away' any nodes that are NOT semantically relevant. This continues until the size of the groups are big enough to be interesting. The 'Drill Into' feature can then be used to start a sub-analysis. Please see the CPU Tutorial if you are not familiar with these techniques.

The Goto callers view (F10) is particularly useful for a heap investigation because it quickly summarizes paths to the GC roots, which indicate why the object is still alive. When you find object that have outlived their usefulness, one of these links must be broken for the GC to collect it. It is important to note that because the view shows the TREE and not the GRAPH of objects, there may be other paths to the object that are not shown. Thus to make an object die, it is NECESSARY that one of the paths in the callers view be severed, but it may not be SUFFICIENT.

Typically, GC heaps are dominated by

Unfortunately while these types dominate the size of the heap they do not really help in analysis. What you really want to know is not that you use a lot of strings but WHAT OBJECTS YOU CONTROL are using a lot of strings. The good news is that this is 'standard problem' that of a bottom up analysis that PerfView is really good a solving. By default PerfView adds folding patterns that cause the cost of all strings and arrays to be charged to the object that refers to them (it is like the field was 'inlined' into the structure that referenced it). Thus other objects (which are much more likely to be semantically relevant to you), are charged this cost. Also by default, the 'Fold%' textbox is set to 1, which says that any type that uses less than 1% of the GC heap should be removed and its cost charged to whoever referred to it.

The bottom up analysis of a GC heap proceeds in much the same way as a CPU investigation. You use the grouping and folding features of the Stack Viewer to eliminate noise and to form bigger semantically relevant groups. When these get large enough, you use the Drill Into feature to isolate on such group and understand it at a finer level of detail. This detailed understanding of your applications memory use tells you the most valuable places to optimize.

Once you have determined a type to focus on, it is often useful to understand where the types have been allocated. See the GC Alloc Stacks view for more on this.

A common type of memory problem is a 'Memory Leak'. This is a set of objects that have served their purpose and are no longer useful, but are still connected to live objects and thus cannot be collected by the GC heap. If your GC heap is growing over time, there is a good chance you have a memory leak. Caches of various types are a common source of 'memory leaks'.

A memory leak is really just an extreme case of a normal memory investigation. In any memory investigation you are grouping together semantically relevant nodes and evaluating whether the costs you see are justified by the value they bring to the program. In the case of a memory leak the value is zero, so generally it is just about finding the cost. Moreover there is a very straightforward way of finding a leak

Note that because programs often have 'one time' caches, the procedure above often needs to be amended. You need to perform the set of operations once or twice before taking the baseline. That way any 'on time' caches will have been filled by the time the baseline has been captured and thus will not show up in the diff.

When you find a likely leak use the 'Goto callers view (F10)' on the node to find a path from the root to that particular node. This shows you the objects that are keeping this object alive. To fix the problem you must break one of these links (typically by nulling out on of the object fields).

While a Bottom up Analysis is generally the best way to start, it is also useful to look at the tree 'top down' by looking at the CallTree view. At the top of a GC heap are the roots of the graph. Most of these roots are either local variables of actively running methods, or static variables of various classes. PerfView goes to some trouble to try to get as much information as possible about the roots and group them by assembly and class. Taking a quick look at which classes are consuming a lot of heap space is often a quick way of discovering a leak.

However this technique should be used with care. As mentioned in the section on Converting a Heap Graph to a Heap Tree, while PerfView tries to find the most semantically relevant 'parents' for a node, if a node has several parents, PerfView is really only guessing. Thus it is possible that there are multiple classes 'responsible' for an object, and you are only seeing one. Thus it may be 'unfair' to blame class that was arbitrarily picked as the sole 'owner' of the high cost nodes. Nevertheless, the path in the calltree view is at least partially to blame, and is at least worthy of additional investigation. Just keep in mind the limitations of the view.

PerfView uses the .NET Debugger interface to collect symbolic information about the roots of the GC heap. There are times (typically because the program is running on old .NET runtimes) that PerfView can't collect this information. If PerfView is unable to collect this information it still dumps the heap, but the GC roots are anonymous e.g. everything is 'other roots'. See the log at the time of the GC Heap dump to determine exactly why this information could not be collected.

A typical GC Memory investigation includes dump of the GC heap. While this gives very detailed information about the heap at the time the snapshot was taken, it gives no information about the GC behavior over time. This is what the GCStats report does. To get a GCStats reports you must Collect Event Data as you would for a CPU investigation (the GC events are on by default). When you open the resulting ETL file one of the children will be a 'GCStats' view. Opening this will give you a report for each process on the system detailing how bit the GC heap was, when GCs happen, and how much each GC reclaimed. This information is quite useful to get a broad idea of how the GC heap changes over time.

In addition to the information needed for a GC Stats Report, a normal ETW Event Data collection will also include coarse information on where objects where allocated. Every time 100K of GC objects were allocated, a stack trace is taken. These stack traces can be displayed in the 'GC Heap Alloc Ignore Free (Coarse Sampling) Stacks' view of the ETL file.

These stacks show where a lot of bytes were allocated, however it does not tell you which of these objects died quickly, and which lived on to add to the size of the overall GC heap. It is these later objects that are the most serious performance issue. However by looking at a heap dump you CAN see the live objects, and after you have determined that a particular have many instances that live a long time, it can be useful to see where they are being allocated. This is what the GC Heap Alloc Stacks view will show you.

Please keep in mind that the coarse sampling is pretty coarse. Only the objects that happen to 'trip' the 100KB sample counter are actually sampled. However what is true is that ALL objects over 100K in size will be logged, and any small object that is allocated a lot will likely be logged also. In practice this is good enough.

The .NET heap segregates the heap into 'LARGE objects' (over 85K) and small objects (under 85K) and treats them quite differently. In particular large objects are only collected on Gen 2 GCs (pretty infrequently). If these large objects live for a long time, everything is fine, however if large objects are allocated a lot then either you are using a lot of memory or you are create a lot of garbage that will force a lot of Gen 2 collections (which are expensive). Thus you should not be allocating many large objects. The GC Heap Alloc Ignore Free (Coarse Sampling) view has a special 'LargeObject' pseudo-frame that it injects if the object is big, making it VERY easy to find all the stacks where large objects are allocated. This is a common use of the GC Heap Alloc Ignore Free (Coarse Sampling) Stacks view.

The first choice of investigating excessive memory usage of the .NET GC heap is to take a heap snapshot of the GC heap . This is because objects are only kept alive because they are rooted, and this information shows you all the paths that are keeping the memory alive. However there are times that knowing the allocation stack is useful. The GC Heap Alloc Ignore Free (Coarse Sampling) Stacks view shows you these stacks, but it does not know when objects die. It is also possible to turn on extra events that allow PerfView to trace object freeing as well as allocation and thus compute the NET amount of memory allocated on the GC heap (along with the call stacks of those allocations). There are two verbosity levels to choose from. They are both in the advanced section of the collection dialog box

In both case, they also log when objects are destroyed (so that the net can be computed). The the option of firing an event on every allocation is VERY verbose. If your program allocates a lot, it can slow it down by a factor if 3 or more. In such cases the files will also be large (> 1GB for 10-20 seconds of trace). Thus it is best to start with the second option of firing an event every 10KB of allocation. This typically well under 1% of the overhead, and thus does not impact run time or file size much. It is sufficient for most purposes.

When you turn on these events, only .NET processes that start AFTER you start data collection. Thus if you are profiling a long running service, you would have to restart the application to collect this information.

Once you have the data you can view the data in the 'GC Heap Net Mem (Coarse Sampling)', which shows you the call stacks of all the allocations where the metric is bytes of GC Net GC heap. The most notable difference between GC Heap Alloc Ignore Free (Coarse Sampling) Stacks and 'GC Heap Net Mem (Coarse Sampling)' is that the former shows allocations stacks of all objects, whereas the latter shows allocations stacks of only those objects that were not garbage collected yet.

There is basically no difference in what is displayed between traces collected with the '.NET Alloc' checkbox or the '.NET SampAlloc' checkbox. It is just that in the case of .NET SampAlloc the information may be inaccurate since a particular call stack and type are 'charged' with 10K of size. However statistically speaking it should give you the same averages if enough samples are collected.

The analysis of .NET Net allocations work the same way us unmanaged heap analysis.

One of the goals of PerfView is for the interface to remain responsive at all times. The manifestation of this is the status bar at the bottom of most windows. This bar displays a one line output area as well as an indication of whether an operation is in flight, a 'Cancel' button and a 'Log' button. Whenever a long operation starts, the status bar will change from 'Ready' to 'Working' and will blink. The cancel button also becomes active. If the user grows impatient, he can always cancel the current operation. There is also a one line status message that is updated as progress is made.

When complex operations are performed (like taking a trace or opening a trace for the first time), detailed diagnostic information is also collected and stored in a Status log. When things go wrong, this log can be useful in debugging the issue. Simply click on the 'Log' button in the lower right corner to see this information.

You have three basic choices in the main view:

While we do recommend that you walk the tutorial, and review Collecting Event Data and Understanding Performance Data , if your goal is to see your time-based profile data as quickly as possible, follow the following steps

While we do recommend that you walk the tutorial, and review Collecting GC Heap Data and Understanding GC Heap Data, if your goal is to see your memory profile data as quickly as possible, follow the following steps

Live Process Collection

Process Dump Collection

In addition to the General Tips, here are tips specific to the Main View.

The Main view is what greets you when you first start PerfView. The main view serves three main purposes

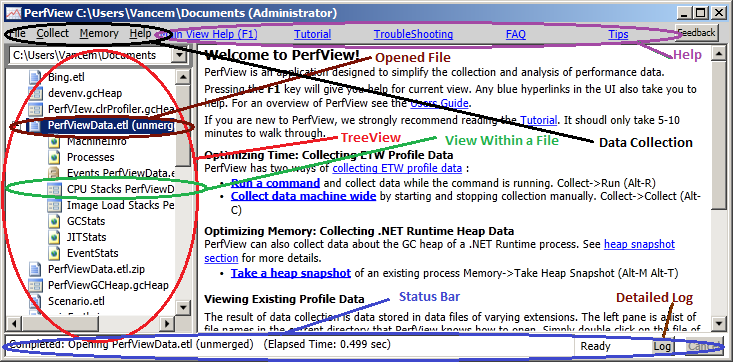

The following image highlights the important parts of the Main View.

Typically when you first use PerfView, you use it to collect data. PerfView can currently collect data for the following kinds of investigations

The types of data PerfView understands

EventData DurationNs reveals individual pauses of each thread: this can be useful

to correlate a particular long wait to another events in the trace. Note that not all contention events

are real OS-level waits: the runtime may first spin wait to try acquire the lock fast. The metric

represents the amount of time spent to acquire the lock in milliseconds.

System.Threading.Monitor and System.Threading.Lock code paths.

WaitHandleWait events are lower level and fire when the OS-provided wait handle is used to block the thread execution.

For example, WaitHandleWait will catch waiting inside SemaphoreSlim.Wait() while Contention event won't.

WaitHandleWait can be fired alongside the Contention event: for instance, during Monitor.Enter if spin waiting

was not enough to acquire the lock. Also note that WaitHandleWait is much more noisy: you'd need to clean up

the stacks from "legit" waiting like a main thread waiting for application shutdown signal, or a consumer thread

that waits for new items in a blocking channel. WaitHandleWait may not cover all waits in your application, like waits that

bypass the dotnet runtime or waits on other synchronous OS APIs like a blocking write to a socket.

For more details, refer to this diagram from the original PR.

TODO NOT DONE

In addition to the General Tips, here are tips specific to the Object Viewer.

The object viewer is a view that lets you see specific information about a individual object on the GC heap.

TODO NOT DONE

While we do recommend that you walk the tutorial, if your goal is to understand what the stack viewer is showing you follow these steps

You can set the default value used in the GroupPats and Fold textboxes using the "File -> Set As Default Grouping/Folding" menu item. These three values are persisted across PerfView sessions for that machine. The 'File -> Clear User Config' will reset these persisted values to their defaults, which is simple way to undo a mistake.

While we do recommend that you walk the tutorial, and review Understanding GC Heap Perf Data and Starting an Analysis of GC Heap Dump, if your goal is to see your memory profile data as quickly as possible, follow the following steps

In addition to the General Tips, here are tips specific to the Stack Viewer.

The stack viewer is main window for doing performance analysis. If you have not walked through the tutorial or the section on starting an analysis and understanding perf data, these would be good to read. Here is the layout of the stack viewer

The stack viewer has three main views: ByName, Caller-Callee, and CallTree. Each view has its own tab in the stack viewer and the can be selected using these tabs. However more typically you use right click or keyboard shortcuts to jump from a node in one view to the same node in another view. Double clicking on any node in any view in fact will bring you to Caller-Callee view and set your focus to that node.

Regardless of what view is selected, the samples under consideration and the grouping of those samples are the same for every view. This filtering and grouping is controlled by the text boxes at the top of the view and are described in detail in the section on grouping and filtering.

At the very top of the stack viewer is the summary statistics line. This gives

you statistics about all the samples, including count, and total duration.

It computes the 'TimeBucket' size which is defined as 1/32 of the

total time interval of the trace. This is the amount of time that is

represented by each character in the When column.

It also computes the Metric/Interval. This is a quick measurement of how

CPU bound the trace is as a whole. A value of 1 indicates a program

that on average consumes all the CPU from a single processor. Unless that is high, your problem is not CPU (it can be some blocking operation like network/disk read).

However this metric is average over the time data was collected, so can include

time when the process of interest is not even running. Thus is typically better

to use the When column for the node presenting the process

as a whole to determine how CPU bound a process is.

In addition to the grouping/filtering textboxes, the stack viewer also has a find textbox, which allows you to search (using .NET Regular expression) for nodes with particular names.

The columns displayed in the stack viewer grids independent of the view displayed. Columns can be reordered simply by dragging the column headers to the location you wish, and most columns can be sorted by clicking on an (often invisible) button in the column header directly to the right of the column header text. The columns that are display are:

Many of the columns in the PerfView display can be used to sort the display. You do this by clicking on the column header at the top of the column. Clicking again switches the direction of the sort. Be sure to avoid clicking on the hyperlink text (it is easy to accidentally click on the hyperlink). Clicking near the top typically works, but you may need to make the column header larger (by dragging one of the column header separators). There is already a request to change the hyperlinks so that it is easier to access the column sorting feature.

There is a known bug that once you sort by a column the search functionality does not respect the new sorted order. This means that searches will seem to randomly jump around when finding the next instance.

The default view for the stack viewer is the ByName View. In this view EVERY node (method or group) is displayed, shorted by the total EXCLUSIVE time for that node. This is the view you would use for a bottom up analysis. See the tutorial for an example of using this view. Double clicking on entries will send you to the Caller-Callee View for the selected node.

See stack viewer for more.

The call tree view shows how each method calls other methods and how many samples are associated with each of these called starting at the root. It is an appropriate view for doing a top down analysis. Each node has a checkbox associated with it that displays all the children of that node when checked. By checking boxes you can drill down into particular methods and thus discover how any particular call contributes to the overall CPU time used by the process.

The call tree view is also well suited for 'zooming in' to a region of interest. Often you are only interested in the performance of a particular part of the program (e.g., the time between a mouse click and the display update associated with that click) These regions of time can typically be easily discovered by either looking for regions of high CPU utilization using the When column on the Main program node, or by finding the name of a function known to be associated with the activity an using the 'SetTimeRange' command to limit the scope of the investigation.

Like all stack-viewer views, the grouping/filtering parameters are applied before the calltree is formed.

If the stack viewer window was started to display the samples from all processes, each process is just a node off the 'ROOT' node. This is useful when you are investigating 'why is my machine slow' and you don't really know what process to look at. By opening the ROOT node and looking at the When column, you can quickly see which process is using the CPU and over what time period.

See the tutorial for an example of using this view. See stack viewer for more. See flame graph for different visual representation.

The caller-callee view is designed to allow you to focus on the resource consumption of a single method. Typically you navigate to here by navigating from either the ByName or Calltree view by double-clicking on a node name. If you have a particular method you are interested in, search for it ( find textbox ) in the ByName view and then double click on the entry.

The ByName view has the concept of the 'Current Node'. This is the node of interest and is the grid line in the center of the display. The display then shows all nodes (methods or groups) that were called by that current node in the lower grid and all nodes that called the current node in the upper pane. By double clicking on nodes in either the upper or lower pane you can change the current node to a new one, and in that way navigate up and down the call tree.