🆕 **[Portkey Models](https://github.com/Portkey-AI/models)** - Open-source LLM pricing for 2,300+ models across 40+ providers. [Explore →](https://portkey.ai/models)

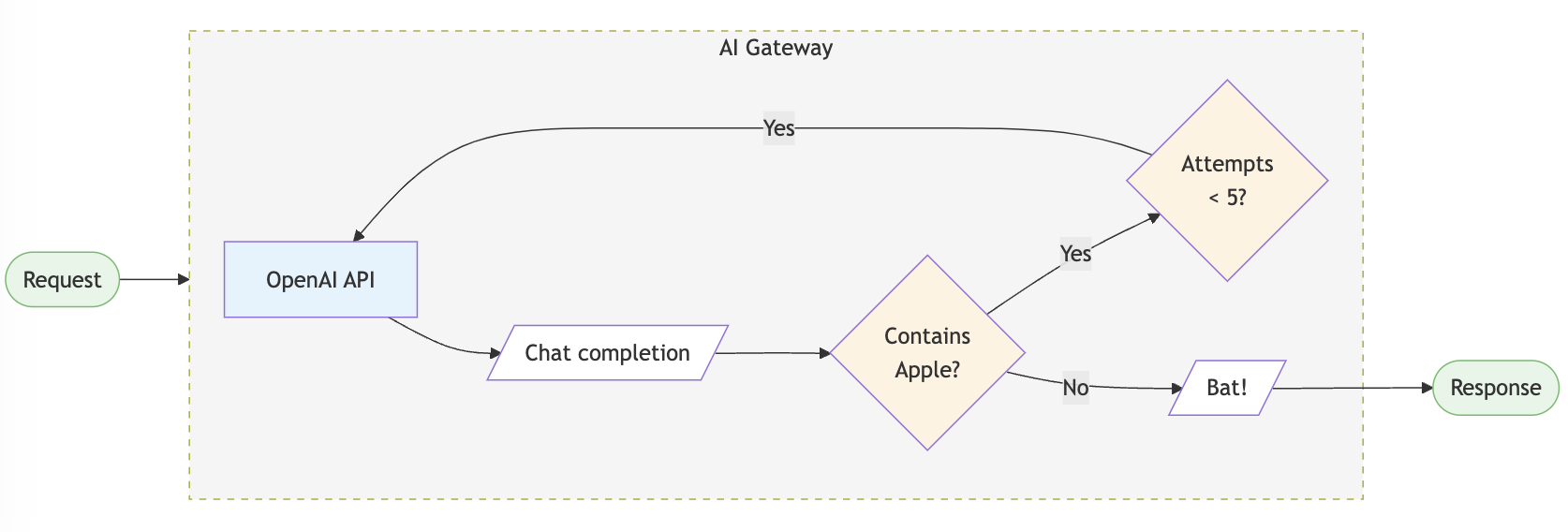

# AI Gateway

#### Route to 250+ LLMs with 1 fast & friendly API

[Docs](https://portkey.wiki/gh-1) | [Enterprise](https://portkey.wiki/gh-2) | [Hosted Gateway](https://portkey.wiki/gh-3) | [Changelog](https://portkey.wiki/gh-4) | [API Reference](https://portkey.wiki/gh-5)

[](./LICENSE)

[](https://portkey.wiki/gh-6)

[](https://portkey.wiki/gh-7)

[](https://portkey.wiki/gh-8)

[](https://portkey.wiki/gh-9)

[](https://deepwiki.com/Portkey-AI/gateway)
