{

"cells": [

{

"cell_type": "markdown",

"id": "6f3d9163",

"metadata": {},

"source": [

"$$\n",

"\\newcommand{\\argmax}{arg\\,max}\n",

"\\newcommand{\\argmin}{arg\\,min}\n",

"\\newcommand{\\col}{col}\n",

"\\newcommand{\\Span}{span}\n",

"\\newcommand{\\epsilon}{\\varepsilon}\n",

"\\newcommand{\\EE}{\\mathbb{E}}\n",

"\\newcommand{\\PP}{\\mathbb{P}}\n",

"\\newcommand{\\RR}{\\mathbb{R}}\n",

"\\newcommand{\\NN}{\\mathbb{N}}\n",

"\\newcommand{\\ZZ}{\\mathbb{Z}}\n",

"\\newcommand{\\aA}{\\mathcal{A}}\n",

"\\newcommand{\\bB}{\\mathcal{B}}\n",

"\\newcommand{\\cC}{\\mathcal{C}}\n",

"\\newcommand{\\dD}{\\mathcal{D}}\n",

"\\newcommand{\\eE}{\\mathcal{E}}\n",

"\\newcommand{\\fF}{\\mathcal{F}}\n",

"\\newcommand{\\gG}{\\mathcal{G}}\n",

"\\newcommand{\\hH}{\\mathcal{H}}\n",

"$$"

]

},

{

"cell_type": "markdown",

"id": "face90c1",

"metadata": {},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "45cbfae7",

"metadata": {},

"source": [

"# Continuous State Markov Chains\n",

"\n",

"\n",

"\n",

"In addition to what’s in Anaconda, this lecture will need the following libraries:"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "a737b735",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"!pip install --upgrade quantecon"

]

},

{

"cell_type": "markdown",

"id": "50fc1fe1",

"metadata": {},

"source": [

"## Overview\n",

"\n",

"In a [previous lecture](https://python-intro.quantecon.org/finite_markov.html), we learned about finite Markov chains, a relatively elementary class of stochastic dynamic models.\n",

"\n",

"The present lecture extends this analysis to continuous (i.e., uncountable) state Markov chains.\n",

"\n",

"Most stochastic dynamic models studied by economists either fit directly into this class or can be represented as continuous state Markov chains after minor modifications.\n",

"\n",

"In this lecture, our focus will be on continuous Markov models that\n",

"\n",

"- evolve in discrete-time \n",

"- are often nonlinear \n",

"\n",

"\n",

"The fact that we accommodate nonlinear models here is significant, because\n",

"linear stochastic models have their own highly developed toolset, as we’ll\n",

"see [later on](https://python-advanced.quantecon.org/arma.html).\n",

"\n",

"The question that interests us most is: Given a particular stochastic dynamic\n",

"model, how will the state of the system evolve over time?\n",

"\n",

"In particular,\n",

"\n",

"- What happens to the distribution of the state variables? \n",

"- Is there anything we can say about the “average behavior” of these variables? \n",

"- Is there a notion of “steady state” or “long-run equilibrium” that’s applicable to the model? \n",

" - If so, how can we compute it? \n",

"\n",

"\n",

"Answering these questions will lead us to revisit many of the topics that occupied us in the finite state case,\n",

"such as simulation, distribution dynamics, stability, ergodicity, etc.\n",

"\n",

">**Note**\n",

">\n",

">For some people, the term “Markov chain” always refers to a process with a\n",

"finite or discrete state space. We follow the mainstream\n",

"mathematical literature (e.g., [[Meyn and Tweedie, 2009](https://python-advanced.quantecon.org/zreferences.html#id189)]) in using the term to refer to any discrete **time**\n",

"Markov process.\n",

"\n",

"Let’s begin with some imports:"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "596e69ce",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"import numpy as np\n",

"import matplotlib.pyplot as plt\n",

"from scipy.stats import lognorm, beta\n",

"from quantecon import LAE\n",

"from scipy.stats import norm, gaussian_kde"

]

},

{

"cell_type": "markdown",

"id": "0e2b07c4",

"metadata": {},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "ff2983c4",

"metadata": {},

"source": [

"## The density case\n",

"\n",

"You are probably aware that some distributions can be represented by densities\n",

"and some cannot.\n",

"\n",

"(For example, distributions on the real numbers $ \\mathbb R $ that put positive probability\n",

"on individual points have no density representation)\n",

"\n",

"We are going to start our analysis by looking at Markov chains where the one-step transition probabilities have density representations.\n",

"\n",

"The benefit is that the density case offers a very direct parallel to the finite case in terms of notation and intuition.\n",

"\n",

"Once we’ve built some intuition we’ll cover the general case."

]

},

{

"cell_type": "markdown",

"id": "23398d5e",

"metadata": {},

"source": [

"### Definitions and basic properties\n",

"\n",

"In our [lecture on finite Markov chains](https://python-intro.quantecon.org/finite_markov.html), we studied discrete-time Markov chains that evolve on a finite state space $ S $.\n",

"\n",

"In this setting, the dynamics of the model are described by a stochastic matrix — a nonnegative square matrix $ P = P[i, j] $ such that each row $ P[i, \\cdot] $ sums to one.\n",

"\n",

"The interpretation of $ P $ is that $ P[i, j] $ represents the\n",

"probability of transitioning from state $ i $ to state $ j $ in one\n",

"unit of time.\n",

"\n",

"In symbols,\n",

"\n",

"$$\n",

"\\mathbb P \\{ X_{t+1} = j \\,|\\, X_t = i \\} = P[i, j]\n",

"$$\n",

"\n",

"Equivalently,\n",

"\n",

"- $ P $ can be thought of as a family of distributions $ P[i, \\cdot] $, one for each $ i \\in S $ \n",

"- $ P[i, \\cdot] $ is the distribution of $ X_{t+1} $ given $ X_t = i $ \n",

"\n",

"\n",

"(As you probably recall, when using NumPy arrays, $ P[i, \\cdot] $ is expressed as `P[i,:]`)\n",

"\n",

"In this section, we’ll allow $ S $ to be a subset of $ \\mathbb R $, such as\n",

"\n",

"- $ \\mathbb R $ itself \n",

"- the positive reals $ (0, \\infty) $ \n",

"- a bounded interval $ (a, b) $ \n",

"\n",

"\n",

"The family of discrete distributions $ P[i, \\cdot] $ will be replaced by a family of densities $ p(x, \\cdot) $, one for each $ x \\in S $.\n",

"\n",

"Analogous to the finite state case, $ p(x, \\cdot) $ is to be understood as the distribution (density) of $ X_{t+1} $ given $ X_t = x $.\n",

"\n",

"More formally, a *stochastic kernel on* $ S $ is a function $ p \\colon S \\times S \\to \\mathbb R $ with the property that\n",

"\n",

"1. $ p(x, y) \\geq 0 $ for all $ x, y \\in S $ \n",

"1. $ \\int p(x, y) dy = 1 $ for all $ x \\in S $ \n",

"\n",

"\n",

"(Integrals are over the whole space unless otherwise specified)\n",

"\n",

"For example, let $ S = \\mathbb R $ and consider the particular stochastic\n",

"kernel $ p_w $ defined by\n",

"\n",

"\n",

"\n",

"$$\n",

"p_w(x, y) := \\frac{1}{\\sqrt{2 \\pi}} \\exp \\left\\{ - \\frac{(y - x)^2}{2} \\right\\} \\tag{2.1}\n",

"$$\n",

"\n",

"What kind of model does $ p_w $ represent?\n",

"\n",

"The answer is, the (normally distributed) random walk\n",

"\n",

"\n",

"\n",

"$$\n",

"X_{t+1} = X_t + \\xi_{t+1}\n",

"\\quad \\text{where} \\quad\n",

"\\{ \\xi_t \\} \\stackrel {\\textrm{ IID }} {\\sim} N(0, 1) \\tag{2.2}\n",

"$$\n",

"\n",

"To see this, let’s find the stochastic kernel $ p $ corresponding to [(2.2)](#equation-statd-rw).\n",

"\n",

"Recall that $ p(x, \\cdot) $ represents the distribution of $ X_{t+1} $ given $ X_t = x $.\n",

"\n",

"Letting $ X_t = x $ in [(2.2)](#equation-statd-rw) and considering the distribution of $ X_{t+1} $, we see that $ p(x, \\cdot) = N(x, 1) $.\n",

"\n",

"In other words, $ p $ is exactly $ p_w $, as defined in [(2.1)](#equation-statd-rwsk)."

]

},

{

"cell_type": "markdown",

"id": "6863ef71",

"metadata": {},

"source": [

"### Connection to stochastic difference equations\n",

"\n",

"In the previous section, we made the connection between stochastic difference\n",

"equation [(2.2)](#equation-statd-rw) and stochastic kernel [(2.1)](#equation-statd-rwsk).\n",

"\n",

"In economics and time-series analysis we meet stochastic difference equations of all different shapes and sizes.\n",

"\n",

"It will be useful for us if we have some systematic methods for converting stochastic difference equations into stochastic kernels.\n",

"\n",

"To this end, consider the generic (scalar) stochastic difference equation given by\n",

"\n",

"\n",

"\n",

"$$\n",

"X_{t+1} = \\mu(X_t) + \\sigma(X_t) \\, \\xi_{t+1} \\tag{2.3}\n",

"$$\n",

"\n",

"Here we assume that\n",

"\n",

"- $ \\{ \\xi_t \\} \\stackrel {\\textrm{ IID }} {\\sim} \\phi $, where $ \\phi $ is a given density on $ \\mathbb R $ \n",

"- $ \\mu $ and $ \\sigma $ are given functions on $ S $, with $ \\sigma(x) > 0 $ for all $ x $ \n",

"\n",

"\n",

"**Example 1:** The random walk [(2.2)](#equation-statd-rw) is a special case of [(2.3)](#equation-statd-srs), with $ \\mu(x) = x $ and $ \\sigma(x) = 1 $.\n",

"\n",

"**Example 2:** Consider the [ARCH model](https://en.wikipedia.org/wiki/Autoregressive_conditional_heteroskedasticity)\n",

"\n",

"$$\n",

"X_{t+1} = \\alpha X_t + \\sigma_t \\, \\xi_{t+1},\n",

"\\qquad \\sigma^2_t = \\beta + \\gamma X_t^2,\n",

"\\qquad \\beta, \\gamma > 0\n",

"$$\n",

"\n",

"Alternatively, we can write the model as\n",

"\n",

"\n",

"\n",

"$$\n",

"X_{t+1} = \\alpha X_t + (\\beta + \\gamma X_t^2)^{1/2} \\xi_{t+1} \\tag{2.4}\n",

"$$\n",

"\n",

"This is a special case of [(2.3)](#equation-statd-srs) with $ \\mu(x) = \\alpha x $ and $ \\sigma(x) = (\\beta + \\gamma x^2)^{1/2} $.\n",

"\n",

"\n",

"\n",

"**Example 3:** With stochastic production and a constant savings rate, the one-sector neoclassical growth model leads to a law of motion for capital per worker such as\n",

"\n",

"\n",

"\n",

"$$\n",

"k_{t+1} = s A_{t+1} f(k_t) + (1 - \\delta) k_t \\tag{2.5}\n",

"$$\n",

"\n",

"Here\n",

"\n",

"- $ s $ is the rate of savings \n",

"- $ A_{t+1} $ is a production shock \n",

" - The $ t+1 $ subscript indicates that $ A_{t+1} $ is not visible at time $ t $ \n",

"- $ \\delta $ is a depreciation rate \n",

"- $ f \\colon \\mathbb R_+ \\to \\mathbb R_+ $ is a production function satisfying $ f(k) > 0 $ whenever $ k > 0 $ \n",

"\n",

"\n",

"(The fixed savings rate can be rationalized as the optimal policy for a particular set of technologies and preferences (see [[Ljungqvist and Sargent, 2018](https://python-advanced.quantecon.org/zreferences.html#id179)], section\n",

"3.1.2), although we omit the details here).\n",

"\n",

"Equation [(2.5)](#equation-statd-ss) is a special case of [(2.3)](#equation-statd-srs) with $ \\mu(x) = (1 - \\delta)x $ and $ \\sigma(x) = s f(x) $.\n",

"\n",

"Now let’s obtain the stochastic kernel corresponding to the generic model [(2.3)](#equation-statd-srs).\n",

"\n",

"To find it, note first that if $ U $ is a random variable with\n",

"density $ f_U $, and $ V = a + b U $ for some constants $ a,b $\n",

"with $ b > 0 $, then the density of $ V $ is given by\n",

"\n",

"\n",

"\n",

"$$\n",

"f_V(v)\n",

"= \\frac{1}{b}\n",

"f_U \\left( \\frac{v - a}{b} \\right) \\tag{2.6}\n",

"$$\n",

"\n",

"(The proof is [below](#statd-appendix). For a multidimensional version\n",

"see [EDTC](http://johnstachurski.net/edtc.html), theorem 8.1.3).\n",

"\n",

"Taking [(2.6)](#equation-statd-dv) as given for the moment, we can\n",

"obtain the stochastic kernel $ p $ for [(2.3)](#equation-statd-srs) by recalling that\n",

"$ p(x, \\cdot) $ is the conditional density of $ X_{t+1} $ given\n",

"$ X_t = x $.\n",

"\n",

"In the present case, this is equivalent to stating that $ p(x, \\cdot) $ is the density of $ Y := \\mu(x) + \\sigma(x) \\, \\xi_{t+1} $ when $ \\xi_{t+1} \\sim \\phi $.\n",

"\n",

"Hence, by [(2.6)](#equation-statd-dv),\n",

"\n",

"\n",

"\n",

"$$\n",

"p(x, y)\n",

"= \\frac{1}{\\sigma(x)}\n",

"\\phi \\left( \\frac{y - \\mu(x)}{\\sigma(x)} \\right) \\tag{2.7}\n",

"$$\n",

"\n",

"For example, the growth model in [(2.5)](#equation-statd-ss) has stochastic kernel\n",

"\n",

"\n",

"\n",

"$$\n",

"p(x, y)\n",

"= \\frac{1}{sf(x)}\n",

"\\phi \\left( \\frac{y - (1 - \\delta) x}{s f(x)} \\right) \\tag{2.8}\n",

"$$\n",

"\n",

"where $ \\phi $ is the density of $ A_{t+1} $.\n",

"\n",

"(Regarding the state space $ S $ for this model, a natural choice is $ (0, \\infty) $ — in which case\n",

"$ \\sigma(x) = s f(x) $ is strictly positive for all $ s $ as required)"

]

},

{

"cell_type": "markdown",

"id": "c3e0bc69",

"metadata": {},

"source": [

"### Distribution dynamics\n",

"\n",

"In [this section](https://python.quantecon.org/finite_markov.html#marginal-distributions) of our lecture on **finite** Markov chains, we\n",

"asked the following question: If\n",

"\n",

"1. $ \\{X_t\\} $ is a Markov chain with stochastic matrix $ P $ \n",

"1. the distribution of $ X_t $ is known to be $ \\psi_t $ \n",

"\n",

"\n",

"then what is the distribution of $ X_{t+1} $?\n",

"\n",

"Letting $ \\psi_{t+1} $ denote the distribution of $ X_{t+1} $, the\n",

"answer [we gave](https://python.quantecon.org/finite_markov.html#marginal-distributions) was that\n",

"\n",

"$$\n",

"\\psi_{t+1}[j] = \\sum_{i \\in S} P[i,j] \\psi_t[i]\n",

"$$\n",

"\n",

"This intuitive equality states that the probability of being at $ j $\n",

"tomorrow is the probability of visiting $ i $ today and then going on to\n",

"$ j $, summed over all possible $ i $.\n",

"\n",

"In the density case, we just replace the sum with an integral and probability\n",

"mass functions with densities, yielding\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi_{t+1}(y) = \\int p(x,y) \\psi_t(x) \\, dx,\n",

"\\qquad \\forall y \\in S \\tag{2.9}\n",

"$$\n",

"\n",

"It is convenient to think of this updating process in terms of an operator.\n",

"\n",

"(An operator is just a function, but the term is usually reserved for a function that sends functions into functions)\n",

"\n",

"Let $ \\mathscr D $ be the set of all densities on $ S $, and let\n",

"$ P $ be the operator from $ \\mathscr D $ to itself that takes density\n",

"$ \\psi $ and sends it into new density $ \\psi P $, where the latter is\n",

"defined by\n",

"\n",

"\n",

"\n",

"$$\n",

"(\\psi P)(y) = \\int p(x,y) \\psi(x) dx \\tag{2.10}\n",

"$$\n",

"\n",

"This operator is usually called the *Markov operator* corresponding to $ p $\n",

"\n",

">**Note**\n",

">\n",

">Unlike most operators, we write $ P $ to the right of its argument,\n",

"instead of to the left (i.e., $ \\psi P $ instead of $ P \\psi $).\n",

"This is a common convention, with the intention being to maintain the\n",

"parallel with the finite case — see [here](https://python.quantecon.org/finite_markov.html#marginal-distributions)\n",

"\n",

"With this notation, we can write [(2.9)](#equation-statd-fdd) more succinctly as $ \\psi_{t+1}(y) = (\\psi_t P)(y) $ for all $ y $, or, dropping the $ y $ and letting “$ = $” indicate equality of functions,\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi_{t+1} = \\psi_t P \\tag{2.11}\n",

"$$\n",

"\n",

"Equation [(2.11)](#equation-statd-p) tells us that if we specify a distribution for $ \\psi_0 $, then the entire sequence\n",

"of future distributions can be obtained by iterating with $ P $.\n",

"\n",

"It’s interesting to note that [(2.11)](#equation-statd-p) is a deterministic difference equation.\n",

"\n",

"Thus, by converting a stochastic difference equation such as\n",

"[(2.3)](#equation-statd-srs) into a stochastic kernel $ p $ and hence an operator\n",

"$ P $, we convert a stochastic difference equation into a deterministic\n",

"one (albeit in a much higher dimensional space).\n",

"\n",

">**Note**\n",

">\n",

">Some people might be aware that discrete Markov chains are in fact\n",

"a special case of the continuous Markov chains we have just described. The reason is\n",

"that probability mass functions are densities with respect to\n",

"the [counting measure](https://en.wikipedia.org/wiki/Counting_measure)."

]

},

{

"cell_type": "markdown",

"id": "d006bca0",

"metadata": {},

"source": [

"### Computation\n",

"\n",

"To learn about the dynamics of a given process, it’s useful to compute and study the sequences of densities generated by the model.\n",

"\n",

"One way to do this is to try to implement the iteration described by [(2.10)](#equation-def-dmo) and [(2.11)](#equation-statd-p) using numerical integration.\n",

"\n",

"However, to produce $ \\psi P $ from $ \\psi $ via [(2.10)](#equation-def-dmo), you\n",

"would need to integrate at every $ y $, and there is a continuum of such\n",

"$ y $.\n",

"\n",

"Another possibility is to discretize the model, but this introduces errors of unknown size.\n",

"\n",

"A nicer alternative in the present setting is to combine simulation with an elegant estimator called the *look-ahead* estimator.\n",

"\n",

"Let’s go over the ideas with reference to the growth model [discussed above](#solow-swan), the dynamics of which we repeat here for convenience:\n",

"\n",

"\n",

"\n",

"$$\n",

"k_{t+1} = s A_{t+1} f(k_t) + (1 - \\delta) k_t \\tag{2.12}\n",

"$$\n",

"\n",

"Our aim is to compute the sequence $ \\{ \\psi_t \\} $ associated with this model and fixed initial condition $ \\psi_0 $.\n",

"\n",

"To approximate $ \\psi_t $ by simulation, recall that, by definition, $ \\psi_t $ is the density of $ k_t $ given $ k_0 \\sim \\psi_0 $.\n",

"\n",

"If we wish to generate observations of this random variable, all we need to do is\n",

"\n",

"1. draw $ k_0 $ from the specified initial condition $ \\psi_0 $ \n",

"1. draw the shocks $ A_1, \\ldots, A_t $ from their specified density $ \\phi $ \n",

"1. compute $ k_t $ iteratively via [(2.12)](#equation-statd-ss2) \n",

"\n",

"\n",

"If we repeat this $ n $ times, we get $ n $ independent observations $ k_t^1, \\ldots, k_t^n $.\n",

"\n",

"With these draws in hand, the next step is to generate some kind of representation of their distribution $ \\psi_t $.\n",

"\n",

"A naive approach would be to use a histogram, or perhaps a [smoothed histogram](https://en.wikipedia.org/wiki/Kernel_density_estimation) using SciPy’s `gaussian_kde` function.\n",

"\n",

"However, in the present setting, there is a much better way to do this, based on the look-ahead estimator.\n",

"\n",

"With this estimator, to construct an estimate of $ \\psi_t $, we\n",

"actually generate $ n $ observations of $ k_{t-1} $, rather than $ k_t $.\n",

"\n",

"Now we take these $ n $ observations $ k_{t-1}^1, \\ldots,\n",

"k_{t-1}^n $ and form the estimate\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi_t^n(y) = \\frac{1}{n} \\sum_{i=1}^n p(k_{t-1}^i, y) \\tag{2.13}\n",

"$$\n",

"\n",

"where $ p $ is the growth model stochastic kernel in [(2.8)](#equation-statd-sssk).\n",

"\n",

"What is the justification for this slightly surprising estimator?\n",

"\n",

"The idea is that, by the strong [law of large numbers](https://python-intro.quantecon.org/lln_clt.html#lln-ksl),\n",

"\n",

"$$\n",

"\\frac{1}{n} \\sum_{i=1}^n p(k_{t-1}^i, y)\n",

"\\to\n",

"\\mathbb E p(k_{t-1}^i, y)\n",

"= \\int p(x, y) \\psi_{t-1}(x) \\, dx\n",

"= \\psi_t(y)\n",

"$$\n",

"\n",

"with probability one as $ n \\to \\infty $.\n",

"\n",

"Here the first equality is by the definition of $ \\psi_{t-1} $, and the\n",

"second is by [(2.9)](#equation-statd-fdd).\n",

"\n",

"We have just shown that our estimator $ \\psi_t^n(y) $ in [(2.13)](#equation-statd-lae1)\n",

"converges almost surely to $ \\psi_t(y) $, which is just what we want to compute."

]

},

{

"cell_type": "markdown",

"id": "f8294e07",

"metadata": {},

"source": [

"### Implementation\n",

"\n",

"A class called `LAE` for estimating densities by this technique can be found in [lae.py](https://github.com/QuantEcon/QuantEcon.py/blob/master/quantecon/lae.py).\n",

"\n",

"Given our use of the `__call__` method, an instance of `LAE` acts as a callable object, which is essentially a function that can store its own data (see [this discussion](https://python-programming.quantecon.org/python_oop.html#call-method)).\n",

"\n",

"This function returns the right-hand side of [(2.13)](#equation-statd-lae1) using\n",

"\n",

"- the data and stochastic kernel that it stores as its instance data \n",

"- the value $ y $ as its argument \n",

"\n",

"\n",

"The function is vectorized, in the sense that if `psi` is such an instance and `y` is an array, then the call `psi(y)` acts elementwise.\n",

"\n",

"(This is the reason that we reshaped `X` and `y` inside the class — to make vectorization work)\n",

"\n",

"Because the implementation is fully vectorized, it is about as efficient as it\n",

"would be in C or Fortran."

]

},

{

"cell_type": "markdown",

"id": "2d4a1c46",

"metadata": {},

"source": [

"### Example\n",

"\n",

"The following code is an example of usage for the stochastic growth model [described above](#solow-swan)\n",

"\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "f64d6858",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"# == Define parameters == #\n",

"s = 0.2\n",

"δ = 0.1\n",

"a_σ = 0.4 # A = exp(B) where B ~ N(0, a_σ)\n",

"α = 0.4 # We set f(k) = k**α\n",

"ψ_0 = beta(5, 5, scale=0.5) # Initial distribution\n",

"ϕ = lognorm(a_σ)\n",

"\n",

"\n",

"def p(x, y):\n",

" \"\"\"\n",

" Stochastic kernel for the growth model with Cobb-Douglas production.\n",

" Both x and y must be strictly positive.\n",

" \"\"\"\n",

" d = s * x**α\n",

" return ϕ.pdf((y - (1 - δ) * x) / d) / d\n",

"\n",

"n = 10000 # Number of observations at each date t\n",

"T = 30 # Compute density of k_t at 1,...,T+1\n",

"\n",

"# == Generate matrix s.t. t-th column is n observations of k_t == #\n",

"k = np.empty((n, T))\n",

"A = ϕ.rvs((n, T))\n",

"k[:, 0] = ψ_0.rvs(n) # Draw first column from initial distribution\n",

"for t in range(T-1):\n",

" k[:, t+1] = s * A[:, t] * k[:, t]**α + (1 - δ) * k[:, t]\n",

"\n",

"# == Generate T instances of LAE using this data, one for each date t == #\n",

"laes = [LAE(p, k[:, t]) for t in range(T)]\n",

"\n",

"# == Plot == #\n",

"fig, ax = plt.subplots()\n",

"ygrid = np.linspace(0.01, 4.0, 200)\n",

"greys = [str(g) for g in np.linspace(0.0, 0.8, T)]\n",

"greys.reverse()\n",

"for ψ, g in zip(laes, greys):\n",

" ax.plot(ygrid, ψ(ygrid), color=g, lw=2, alpha=0.6)\n",

"ax.set_xlabel('capital')\n",

"ax.set_title(f'Density of $k_1$ (lighter) to $k_T$ (darker) for $T={T}$')\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "240632dd",

"metadata": {},

"source": [

"The figure shows part of the density sequence $ \\{\\psi_t\\} $, with each\n",

"density computed via the look-ahead estimator.\n",

"\n",

"Notice that the sequence of densities shown in the figure seems to be\n",

"converging — more on this in just a moment.\n",

"\n",

"Another quick comment is that each of these distributions could be interpreted\n",

"as a cross-sectional distribution (recall [this discussion](https://python.quantecon.org/finite_markov.html#example-2-cross-sectional-distributions))."

]

},

{

"cell_type": "markdown",

"id": "2578d9d2",

"metadata": {},

"source": [

"## Beyond densities\n",

"\n",

"Up until now, we have focused exclusively on continuous state Markov chains\n",

"where all conditional distributions $ p(x, \\cdot) $ are densities.\n",

"\n",

"As discussed above, not all distributions can be represented as densities.\n",

"\n",

"If the conditional distribution of $ X_{t+1} $ given $ X_t = x $\n",

"**cannot** be represented as a density for some $ x \\in S $, then we need a slightly\n",

"different theory.\n",

"\n",

"The ultimate option is to switch from densities to [probability measures](https://en.wikipedia.org/wiki/Probability_measure), but not all readers will\n",

"be familiar with measure theory.\n",

"\n",

"We can, however, construct a fairly general theory using distribution functions."

]

},

{

"cell_type": "markdown",

"id": "cb7a6381",

"metadata": {},

"source": [

"### Example and definitions\n",

"\n",

"To illustrate the issues, recall that Hopenhayn and Rogerson [[Hopenhayn and Rogerson, 1993](https://python-advanced.quantecon.org/zreferences.html#id168)] study a model of firm dynamics where individual firm productivity follows the exogenous process\n",

"\n",

"$$\n",

"X_{t+1} = a + \\rho X_t + \\xi_{t+1},\n",

"\\quad \\text{where} \\quad\n",

"\\{ \\xi_t \\} \\stackrel {\\textrm{ IID }} {\\sim} N(0, \\sigma^2)\n",

"$$\n",

"\n",

"As is, this fits into the density case we treated above.\n",

"\n",

"However, the authors wanted this process to take values in $ [0, 1] $, so they added boundaries at the endpoints 0 and 1.\n",

"\n",

"One way to write this is\n",

"\n",

"$$\n",

"X_{t+1} = h(a + \\rho X_t + \\xi_{t+1})\n",

"\\quad \\text{where} \\quad\n",

"h(x) := x \\, \\mathbf 1\\{0 \\leq x \\leq 1\\} + \\mathbf 1 \\{ x > 1\\}\n",

"$$\n",

"\n",

"If you think about it, you will see that for any given $ x \\in [0, 1] $,\n",

"the conditional distribution of $ X_{t+1} $ given $ X_t = x $\n",

"puts positive probability mass on 0 and 1.\n",

"\n",

"Hence it cannot be represented as a density.\n",

"\n",

"What we can do instead is use cumulative distribution functions (cdfs).\n",

"\n",

"To this end, set\n",

"\n",

"$$\n",

"G(x, y) := \\mathbb P \\{ h(a + \\rho x + \\xi_{t+1}) \\leq y \\}\n",

"\\qquad (0 \\leq x, y \\leq 1)\n",

"$$\n",

"\n",

"This family of cdfs $ G(x, \\cdot) $ plays a role analogous to the stochastic kernel in the density case.\n",

"\n",

"The distribution dynamics in [(2.9)](#equation-statd-fdd) are then replaced by\n",

"\n",

"\n",

"\n",

"$$\n",

"F_{t+1}(y) = \\int G(x,y) F_t(dx) \\tag{2.14}\n",

"$$\n",

"\n",

"Here $ F_t $ and $ F_{t+1} $ are cdfs representing the distribution of the current state and next period state.\n",

"\n",

"The intuition behind [(2.14)](#equation-statd-fddc) is essentially the same as for [(2.9)](#equation-statd-fdd)."

]

},

{

"cell_type": "markdown",

"id": "84cc8ed4",

"metadata": {},

"source": [

"### Computation\n",

"\n",

"If you wish to compute these cdfs, you cannot use the look-ahead estimator as before.\n",

"\n",

"Indeed, you should not use any density estimator, since the objects you are\n",

"estimating/computing are not densities.\n",

"\n",

"One good option is simulation as before, combined with the [empirical distribution function](https://en.wikipedia.org/wiki/Empirical_distribution_function)."

]

},

{

"cell_type": "markdown",

"id": "f04e3079",

"metadata": {},

"source": [

"## Stability\n",

"\n",

"In our [lecture](https://python-intro.quantecon.org/finite_markov.html) on finite Markov chains, we also studied stationarity, stability and ergodicity.\n",

"\n",

"Here we will cover the same topics for the continuous case.\n",

"\n",

"We will, however, treat only the density case (as in [this section](#statd-density-case)), where the stochastic kernel is a family of densities.\n",

"\n",

"The general case is relatively similar — references are given below."

]

},

{

"cell_type": "markdown",

"id": "6dd38b54",

"metadata": {},

"source": [

"### Theoretical results\n",

"\n",

"Analogous to [the finite case](https://python.quantecon.org/finite_markov.html#stationary-distributions), given a stochastic kernel $ p $ and corresponding Markov operator as\n",

"defined in [(2.10)](#equation-def-dmo), a density $ \\psi^* $ on $ S $ is called\n",

"*stationary* for $ P $ if it is a fixed point of the operator $ P $.\n",

"\n",

"In other words,\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi^*(y) = \\int p(x,y) \\psi^*(x) \\, dx,\n",

"\\qquad \\forall y \\in S \\tag{2.15}\n",

"$$\n",

"\n",

"As with the finite case, if $ \\psi^* $ is stationary for $ P $, and\n",

"the distribution of $ X_0 $ is $ \\psi^* $, then, in view of\n",

"[(2.11)](#equation-statd-p), $ X_t $ will have this same distribution for all $ t $.\n",

"\n",

"Hence $ \\psi^* $ is the stochastic equivalent of a steady state.\n",

"\n",

"In the finite case, we learned that at least one stationary distribution exists, although there may be many.\n",

"\n",

"When the state space is infinite, the situation is more complicated.\n",

"\n",

"Even existence can fail very easily.\n",

"\n",

"For example, the random walk model has no stationary density (see, e.g., [EDTC](http://johnstachurski.net/edtc.html), p. 210).\n",

"\n",

"However, there are well-known conditions under which a stationary density $ \\psi^* $ exists.\n",

"\n",

"With additional conditions, we can also get a unique stationary density ($ \\psi \\in \\mathscr D \\text{ and } \\psi = \\psi P \\implies \\psi = \\psi^* $), and also global convergence in the sense that\n",

"\n",

"\n",

"\n",

"$$\n",

"\\forall \\, \\psi \\in \\mathscr D, \\quad \\psi P^t \\to \\psi^*\n",

" \\quad \\text{as} \\quad t \\to \\infty \\tag{2.16}\n",

"$$\n",

"\n",

"This combination of existence, uniqueness and global convergence in the sense\n",

"of [(2.16)](#equation-statd-dca) is often referred to as *global stability*.\n",

"\n",

"Under very similar conditions, we get *ergodicity*, which means that\n",

"\n",

"\n",

"\n",

"$$\n",

"\\frac{1}{n} \\sum_{t = 1}^n h(X_t) \\to \\int h(x) \\psi^*(x) dx\n",

" \\quad \\text{as } n \\to \\infty \\tag{2.17}\n",

"$$\n",

"\n",

"for any ([measurable](https://en.wikipedia.org/wiki/Measurable_function)) function $ h \\colon S \\to \\mathbb R $ such that the right-hand side is finite.\n",

"\n",

"Note that the convergence in [(2.17)](#equation-statd-lln) does not depend on the distribution (or value) of $ X_0 $.\n",

"\n",

"This is actually very important for simulation — it means we can learn about $ \\psi^* $ (i.e., approximate the right-hand side of [(2.17)](#equation-statd-lln) via the left-hand side) without requiring any special knowledge about what to do with $ X_0 $.\n",

"\n",

"So what are these conditions we require to get global stability and ergodicity?\n",

"\n",

"In essence, it must be the case that\n",

"\n",

"1. Probability mass does not drift off to the “edges” of the state space. \n",

"1. Sufficient “mixing” obtains. \n",

"\n",

"\n",

"For one such set of conditions see theorem 8.2.14 of [EDTC](http://johnstachurski.net/edtc.html).\n",

"\n",

"In addition\n",

"\n",

"- [[Stokey *et al.*, 1989](https://python-advanced.quantecon.org/zreferences.html#id215)] contains a classic (but slightly outdated) treatment of these topics. \n",

"- From the mathematical literature, [[Lasota and MacKey, 1994](https://python-advanced.quantecon.org/zreferences.html#id178)] and [[Meyn and Tweedie, 2009](https://python-advanced.quantecon.org/zreferences.html#id189)] give outstanding in-depth treatments. \n",

"- Section 8.1.2 of [EDTC](http://johnstachurski.net/edtc.html) provides detailed intuition, and section 8.3 gives additional references. \n",

"- [EDTC](http://johnstachurski.net/edtc.html), section 11.3.4\n",

" provides a specific treatment for the growth model we considered in this\n",

" lecture. "

]

},

{

"cell_type": "markdown",

"id": "c62aae18",

"metadata": {},

"source": [

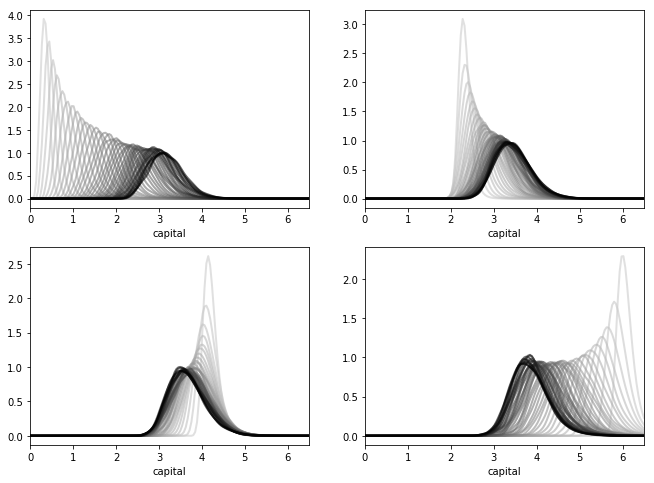

"### An example of stability\n",

"\n",

"As stated above, the [growth model treated here](#solow-swan) is stable under mild conditions\n",

"on the primitives.\n",

"\n",

"- See [EDTC](http://johnstachurski.net/edtc.html), section 11.3.4 for more details. \n",

"\n",

"\n",

"We can see this stability in action — in particular, the convergence in [(2.16)](#equation-statd-dca) — by simulating the path of densities from various initial conditions.\n",

"\n",

"Here is such a figure.\n",

"\n",

"\n",

"\n",

"\n",

"\n",

" \n",

"All sequences are converging towards the same limit, regardless of their initial condition.\n",

"\n",

"The details regarding initial conditions and so on are given in [this exercise](#statd-ex2), where you are asked to replicate the figure."

]

},

{

"cell_type": "markdown",

"id": "b1cc1bec",

"metadata": {},

"source": [

"### Computing stationary densities\n",

"\n",

"In the preceding figure, each sequence of densities is converging towards the unique stationary density $ \\psi^* $.\n",

"\n",

"Even from this figure, we can get a fair idea of what $ \\psi^* $ looks like and where its mass is located.\n",

"\n",

"However, there is a much more direct way to estimate the stationary density,\n",

"and it involves only a slight modification of the look-ahead estimator.\n",

"\n",

"Let’s say that we have a model of the form [(2.3)](#equation-statd-srs) that is stable and\n",

"ergodic.\n",

"\n",

"Let $ p $ be the corresponding stochastic kernel, as given in [(2.7)](#equation-statd-srssk).\n",

"\n",

"To approximate the stationary density $ \\psi^* $, we can simply generate a\n",

"long time-series $ X_0, X_1, \\ldots, X_n $ and estimate $ \\psi^* $ via\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi_n^*(y) = \\frac{1}{n} \\sum_{t=1}^n p(X_t, y) \\tag{2.18}\n",

"$$\n",

"\n",

"This is essentially the same as the look-ahead estimator [(2.13)](#equation-statd-lae1),\n",

"except that now the observations we generate are a single time-series, rather\n",

"than a cross-section.\n",

"\n",

"The justification for [(2.18)](#equation-statd-lae2) is that, with probability one as $ n \\to \\infty $,\n",

"\n",

"$$\n",

"\\frac{1}{n} \\sum_{t=1}^n p(X_t, y)\n",

"\\to\n",

"\\int p(x, y) \\psi^*(x) \\, dx\n",

"= \\psi^*(y)\n",

"$$\n",

"\n",

"where the convergence is by [(2.17)](#equation-statd-lln) and the equality on the right is by\n",

"[(2.15)](#equation-statd-dsd).\n",

"\n",

"The right-hand side is exactly what we want to compute.\n",

"\n",

"On top of this asymptotic result, it turns out that the rate of convergence\n",

"for the look-ahead estimator is very good.\n",

"\n",

"The first exercise helps illustrate this point."

]

},
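{
"cell_type": "markdown",
"id": "7c1e5a90",
"metadata": {},
"source": [
"As a rough illustration of [(2.18)](#equation-statd-lae2), the following sketch computes the time-series look-ahead estimate directly with NumPy, without the `LAE` class.\n",
"\n",
"The linear Gaussian AR(1) model, the parameters `ρ` and `σ`, and the helper names `p_ar1` and `lae_time_series` are assumptions introduced only for this example.\n",
"\n",
"The model is chosen because its stationary density $ N(0, \\sigma^2 / (1 - \\rho^2)) $ is known in closed form, so the estimate can be checked against it."
]
},
{
"cell_type": "code",
"execution_count": null,
"id": "7c1e5a91",
"metadata": {
"hide-output": false
},
"outputs": [],
"source": [
"import numpy as np\n",
"from scipy.stats import norm\n",
"\n",
"# Hypothetical example model (an assumption, not from the lecture):\n",
"# X_{t+1} = ρ X_t + σ ξ_{t+1}, with ξ_t IID N(0, 1) and |ρ| < 1\n",
"ρ, σ = 0.5, 1.0\n",
"\n",
"def p_ar1(x, y):\n",
"    'Stochastic kernel p(x, y): density of X_{t+1} at y given X_t = x.'\n",
"    return norm.pdf((y - ρ * x) / σ) / σ\n",
"\n",
"def lae_time_series(p, X, y):\n",
"    'Evaluate (1/n) * sum_t p(X_t, y) at each point of the array y.'\n",
"    X = np.asarray(X).reshape(-1, 1)   # Column: one row per observation\n",
"    y = np.asarray(y).reshape(1, -1)   # Row: one column per grid point\n",
"    return p(X, y).mean(axis=0)        # Average over the observations\n",
"\n",
"# Simulate a single long path X_0, ..., X_n starting from X_0 = 0\n",
"rng = np.random.default_rng(1234)\n",
"n = 5_000\n",
"X = np.empty(n + 1)\n",
"X[0] = 0.0\n",
"ξ = rng.standard_normal(n)\n",
"for t in range(n):\n",
"    X[t+1] = ρ * X[t] + σ * ξ[t]\n",
"\n",
"ys = np.linspace(-3, 3, 50)\n",
"ψ_n = lae_time_series(p_ar1, X[1:], ys)              # Uses X_1, ..., X_n as in (2.18)\n",
"ψ_true = norm.pdf(ys, scale=σ / np.sqrt(1 - ρ**2))   # Known stationary density\n",
"print(np.max(np.abs(ψ_n - ψ_true)))                  # Largest gap on the grid"
]
},
{
"cell_type": "markdown",
"id": "7c1e5a92",
"metadata": {},
"source": [
"The printed number is the largest gap between the estimate and the true stationary density on the grid.\n",
"\n",
"Rerunning with a larger `n` should make it smaller."
]
},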

{

"cell_type": "markdown",

"id": "fd92349a",

"metadata": {},

"source": [

"## Exercises\n",

"\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "3cd15165",

"metadata": {},

"source": [

"## Exercise 2.1\n",

"\n",

"Consider the simple threshold autoregressive model\n",

"\n",

"\n",

"\n",

"$$\n",

"X_{t+1} = \\theta |X_t| + (1- \\theta^2)^{1/2} \\xi_{t+1}\n",

"\\qquad \\text{where} \\quad\n",

"\\{ \\xi_t \\} \\stackrel {\\textrm{ IID }} {\\sim} N(0, 1) \\tag{2.19}\n",

"$$\n",

"\n",

"This is one of those rare nonlinear stochastic models where an analytical\n",

"expression for the stationary density is available.\n",

"\n",

"In particular, provided that $ |\\theta| < 1 $, there is a unique\n",

"stationary density $ \\psi^* $ given by\n",

"\n",

"\n",

"\n",

"$$\n",

"\\psi^*(y) = 2 \\, \\phi(y) \\, \\Phi\n",

"\\left[\n",

" \\frac{\\theta y}{(1 - \\theta^2)^{1/2}}\n",

"\\right] \\tag{2.20}\n",

"$$\n",

"\n",

"Here $ \\phi $ is the standard normal density and $ \\Phi $ is the standard normal cdf.\n",

"\n",

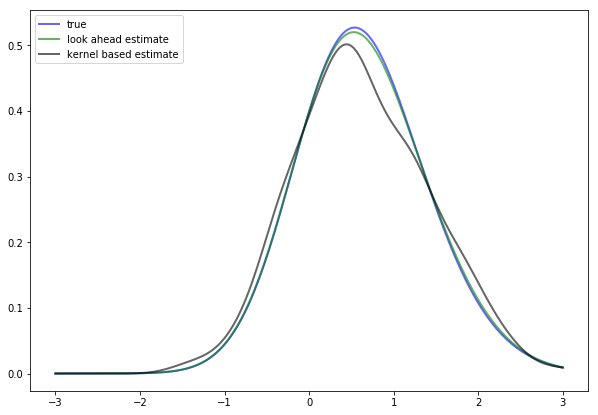

"As an exercise, compute the look-ahead estimate of $ \\psi^* $, as defined\n",

"in [(2.18)](#equation-statd-lae2), and compare it with $ \\psi^* $ in [(2.20)](#equation-statd-tar-ts) to see whether they\n",

"are indeed close for large $ n $.\n",

"\n",

"In doing so, set $ \\theta = 0.8 $ and $ n = 500 $.\n",

"\n",

"The next figure shows the result of such a computation.\n",

"\n",

"\n",

"\n",

"The additional density (black line) is a [nonparametric kernel density estimate](https://en.wikipedia.org/wiki/Kernel_density_estimation), added to the solution for illustration.\n",

"\n",

"(You can try to replicate it before looking at the solution if you want to.)\n",

"\n",

"As you can see, the look-ahead estimator is a much tighter fit than the kernel\n",

"density estimator.\n",

"\n",

"If you repeat the simulation you will see that this is consistently the case."

]

},

{

"cell_type": "markdown",

"id": "e0613265",

"metadata": {},

"source": [

"## Solution\n",

"\n",

"Look-ahead estimation of a TAR stationary density, where the TAR model\n",

"is\n",

"\n",

"$$\n",

"X_{t+1} = \\theta |X_t| + (1 - \\theta^2)^{1/2} \\xi_{t+1}\n",

"$$\n",

"\n",

"and $ \\xi_t \\sim N(0,1) $.\n",

"\n",

"Try running at `n = 10, 100, 1000, 10000` to get an idea of the speed of convergence."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "158b42f2",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"from scipy.stats import norm, gaussian_kde\n",
"\n",
"ϕ = norm()\n",
"n = 500\n",
"θ = 0.8\n",
"# == Frequently used constants == #\n",
"d = np.sqrt(1 - θ**2)\n",
"δ = θ / d\n",
"\n",
"def ψ_star(y):\n",
"    \"True stationary density of the TAR Model\"\n",
"    return 2 * norm.pdf(y) * norm.cdf(δ * y)\n",
"\n",
"def p(x, y):\n",
"    \"Stochastic kernel for the TAR model.\"\n",
"    return ϕ.pdf((y - θ * np.abs(x)) / d) / d\n",
"\n",
"# == Simulate a single time series of length n == #\n",
"Z = ϕ.rvs(n)\n",
"X = np.empty(n)\n",
"X[0] = 0.0  # Explicit initial condition; np.empty leaves X[0] arbitrary\n",
"for t in range(n-1):\n",
"    X[t+1] = θ * np.abs(X[t]) + d * Z[t]\n",
"\n",
"# == Look-ahead and kernel density estimates from the same data == #\n",
"ψ_est = LAE(p, X)\n",
"k_est = gaussian_kde(X)\n",
"\n",
"fig, ax = plt.subplots(figsize=(10, 7))\n",
"ys = np.linspace(-3, 3, 200)\n",
"ax.plot(ys, ψ_star(ys), 'b-', lw=2, alpha=0.6, label='true')\n",
"ax.plot(ys, ψ_est(ys), 'g-', lw=2, alpha=0.6, label='look-ahead estimate')\n",
"ax.plot(ys, k_est(ys), 'k-', lw=2, alpha=0.6, label='kernel based estimate')\n",
"ax.legend(loc='upper left')\n",
"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "ea9d5b1e",

"metadata": {},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "92458721",

"metadata": {},

"source": [

"## Exercise 2.2\n",

"\n",

"Replicate the figure on global convergence [shown above](#statd-egs).\n",

"\n",

"The densities come from the stochastic growth model treated [at the start of the lecture](#solow-swan).\n",

"\n",

"Begin with the code found [above](#stoch-growth).\n",

"\n",

"Use the same parameters.\n",

"\n",

"For the four initial distributions, use the shifted beta distributions"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "1b577c06",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"for i in range(4):\n",
"    ψ_0 = beta(5, 5, scale=0.5, loc=i*2)  # i-th initial distribution"

]

},

{

"cell_type": "markdown",

"id": "6ea5e9af",

"metadata": {},

"source": [

"## Solution\n",

"\n",

"Here’s one program that does the job."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "24e4d2f4",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"# == Define parameters == #\n",

"s = 0.2\n",

"δ = 0.1\n",

"a_σ = 0.4 # A = exp(B) where B ~ N(0, a_σ)\n",

"α = 0.4 # f(k) = k**α\n",

"\n",

"ϕ = lognorm(a_σ)\n",

"\n",

"def p(x, y):\n",
"    \"Stochastic kernel, vectorized in x. Both x and y must be positive.\"\n",
"    d = s * x**α\n",
"    return ϕ.pdf((y - (1 - δ) * x) / d) / d\n",
"\n",
"n = 1000  # Number of observations at each date t\n",
"T = 40    # Compute density of k_t at 1,...,T\n",
"\n",
"fig, axes = plt.subplots(2, 2, figsize=(11, 8))\n",
"axes = axes.flatten()\n",
"xmax = 6.5\n",
"\n",
"for i in range(4):\n",
"    ax = axes[i]\n",
"    ax.set_xlim(0, xmax)\n",
"    ψ_0 = beta(5, 5, scale=0.5, loc=i*2)  # Initial distribution\n",
"\n",
"    # == Generate matrix s.t. t-th column is n observations of k_t == #\n",
"    k = np.empty((n, T))\n",
"    A = ϕ.rvs((n, T))\n",
"    k[:, 0] = ψ_0.rvs(n)\n",
"    for t in range(T-1):\n",
"        k[:, t+1] = s * A[:,t] * k[:, t]**α + (1 - δ) * k[:, t]\n",
"\n",
"    # == Generate T instances of lae using this data, one for each t == #\n",
"    laes = [LAE(p, k[:, t]) for t in range(T)]\n",
"\n",
"    ygrid = np.linspace(0.01, xmax, 150)\n",
"    greys = [str(g) for g in np.linspace(0.0, 0.8, T)]\n",
"    greys.reverse()\n",
"    for ψ, g in zip(laes, greys):\n",
"        ax.plot(ygrid, ψ(ygrid), color=g, lw=2, alpha=0.6)\n",
"    ax.set_xlabel('capital')\n",
"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "5d87c4da",

"metadata": {},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "36cf00a6",

"metadata": {},

"source": [

"## Exercise 2.3\n",

"\n",

"A common way to compare distributions visually is with [boxplots](https://en.wikipedia.org/wiki/Box_plot).\n",

"\n",

"To illustrate, let’s generate three artificial data sets and compare them with a boxplot.\n",

"\n",

"The three data sets we will use are:\n",

"\n",

"$$\n",

"\\{ X_1, \\ldots, X_n \\} \\sim LN(0, 1), \\;\\;\n",

"\\{ Y_1, \\ldots, Y_n \\} \\sim N(2, 1), \\;\\;\n",

"\\text{ and } \\;\n",

"\\{ Z_1, \\ldots, Z_n \\} \\sim N(4, 1), \\;\n",

"$$\n",

"\n",

"Here is the code and figure:"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "85ab7753",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"n = 500\n",

"x = np.random.randn(n) # N(0, 1)\n",

"x = np.exp(x) # Map x to lognormal\n",

"y = np.random.randn(n) + 2.0 # N(2, 1)\n",

"z = np.random.randn(n) + 4.0 # N(4, 1)\n",

"\n",

"fig, ax = plt.subplots(figsize=(10, 6.6))\n",

"ax.boxplot([x, y, z])\n",

"ax.set_xticks((1, 2, 3))\n",

"ax.set_ylim(-2, 14)\n",

"ax.set_xticklabels(('$X$', '$Y$', '$Z$'), fontsize=16)\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "bdcbb1cf",

"metadata": {},

"source": [

"Each data set is represented by a box, where the top and bottom of the box are the third and first quartiles of the data, and the colored line inside the box is the median.\n",

"\n",

"The boxes give some indication as to\n",

"\n",

"- the location of probability mass for each sample \n",

"- whether the distribution is right-skewed (as is the lognormal distribution), etc \n",

"\n",

"\n",

"Now let’s put these ideas to use in a simulation.\n",

"\n",

"Consider the threshold autoregressive model in [(2.19)](#equation-statd-tar).\n",

"\n",

"We know that the distribution of $ X_t $ will converge to [(2.20)](#equation-statd-tar-ts) whenever $ |\\theta| < 1 $.\n",

"\n",

"Let’s observe this convergence from different initial conditions using\n",

"boxplots.\n",

"\n",

"In particular, the exercise is to generate $ J $ boxplot figures, one for each initial condition $ X_0 $ in"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "4c00bcb0",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"J = 8  # Number of initial conditions\n",
"initial_conditions = np.linspace(8, 0, J)"

]

},

{

"cell_type": "markdown",

"id": "4ff42b9b",

"metadata": {},

"source": [

"For each $ X_0 $ in this set,\n",

"\n",

"1. Generate $ k $ time-series of length $ n $, each starting at $ X_0 $ and obeying [(2.19)](#equation-statd-tar). \n",

"1. Create a boxplot representing $ n $ distributions, where the $ t $-th distribution shows the $ k $ observations of $ X_t $. \n",

"\n",

"\n",

"Use $ \\theta = 0.9, n = 20, k = 5000, J = 8 $"

]

},

{

"cell_type": "markdown",

"id": "91ea7677",

"metadata": {},

"source": [

"## Solution\n",

"\n",

"Here’s a possible solution.\n",

"\n",

"Note the way we use vectorized code to simulate the $ k $ time\n",

"series for one boxplot all at once."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "7afcc174",

"metadata": {

"hide-output": false

},

"outputs": [],

"source": [

"n = 20\n",

"k = 5000\n",

"J = 8\n",

"\n",

"θ = 0.9\n",

"d = np.sqrt(1 - θ**2)\n",

"δ = θ / d\n",

"\n",

"fig, axes = plt.subplots(J, 1, figsize=(10, 4*J))\n",

"initial_conditions = np.linspace(8, 0, J)\n",

"X = np.empty((k, n))\n",

"\n",

"for j in range(J):\n",
"\n",
"    axes[j].set_ylim(-4, 8)\n",
"    axes[j].set_title(f'time series from $X_0$ = {initial_conditions[j]:.2f}')\n",
"\n",
"    # == Simulate all k series at once; column t holds k draws of X_t == #\n",
"    Z = np.random.randn(k, n)\n",
"    X[:, 0] = initial_conditions[j]\n",
"    for t in range(1, n):\n",
"        X[:, t] = θ * np.abs(X[:, t-1]) + d * Z[:, t]\n",
"    axes[j].boxplot(X)\n",
"\n",
"plt.show()"

]

},

{

"cell_type": "markdown",

"id": "eca4328d",

"metadata": {},

"source": [

"## Appendix\n",

"\n",

"\n",

"\n",

"Here’s the proof of [(2.6)](#equation-statd-dv).\n",

"\n",

"Let $ F_U $ and $ F_V $ be the cumulative distributions of $ U $ and $ V $ respectively.\n",

"\n",

"By the definition of $ V $, we have $ F_V(v) = \\mathbb P \\{ a + b U \\leq v \\} = \\mathbb P \\{ U \\leq (v - a) / b \\} $, where the second equality uses $ b > 0 $.\n",
"\n",
"In other words, $ F_V(v) = F_U ( (v - a)/b ) $.\n",
"\n",
"Differentiating with respect to $ v $ and applying the chain rule gives $ f_V(v) = \\frac{1}{b} f_U ( (v - a)/b ) $, which is [(2.6)](#equation-statd-dv)."

]

}

],

"metadata": {

"date": 1769597375.8360865,

"filename": "stationary_densities.md",

"kernelspec": {

"display_name": "Python",

"language": "python3",

"name": "python3"

},

"title": "Continuous State Markov Chains"

},

"nbformat": 4,

"nbformat_minor": 5

}