{

"cells": [

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "5KRoA4kukwie"

},

"source": [

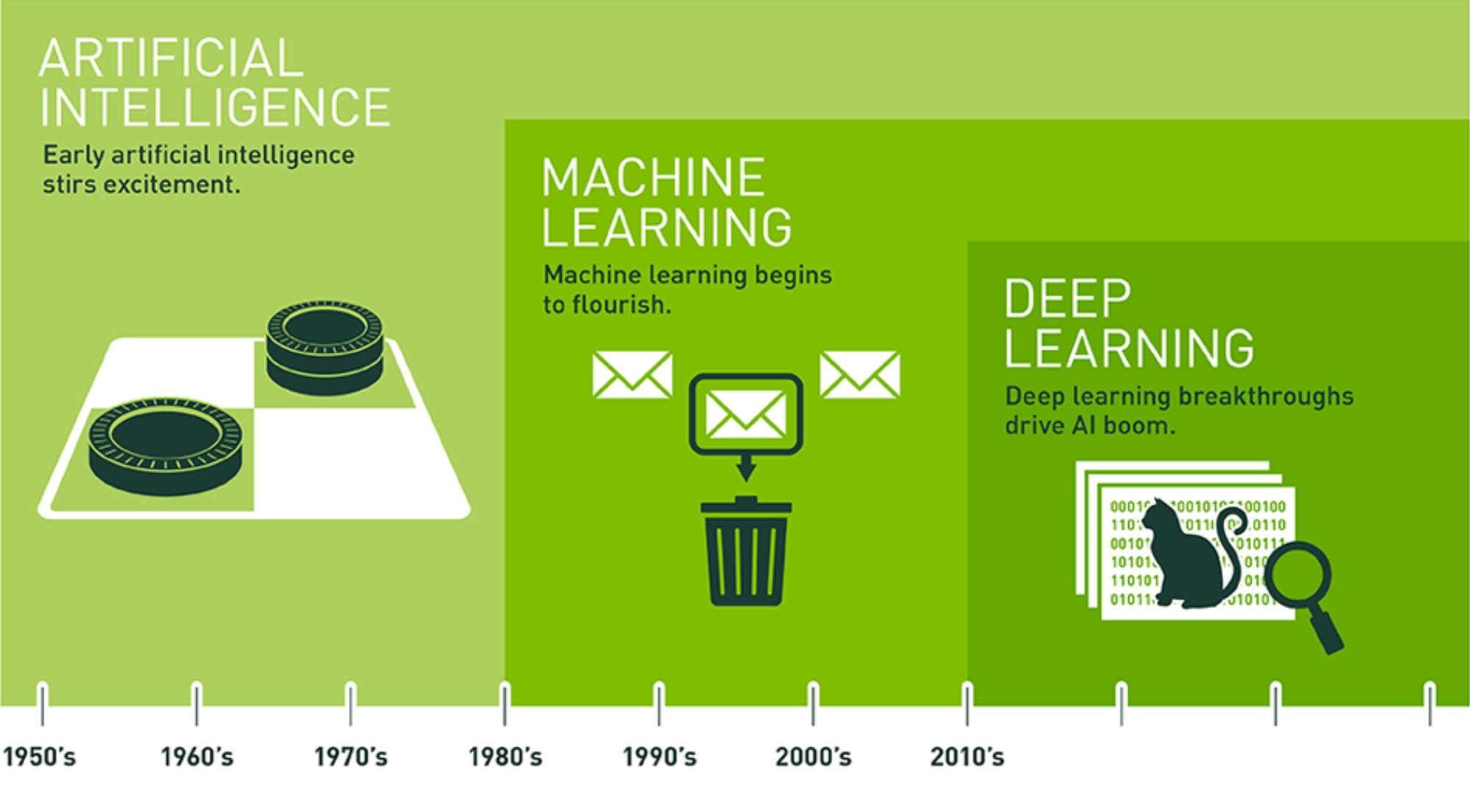

"# What is Machine Learning?\n",

"\n",

"**Artificial Intelligence** (*AI*) - the idea that machines can perform tasks in an intelligent way rather than by following a rigid, fully specified algorithm.\n",

"\n",

"**Machine Learning** (*ML*) - a branch of AI in which a model is learned from external data. ML algorithms draw conclusions from the training data and make predictions on new data.\n",

"\n",

"**Deep Learning** (*DL*) - a branch of ML inspired by the biological structure of the human brain. Unlike classical ML algorithms, DL algorithms can select relevant features from the raw data on their own. In practice this means DL usually needs more computing power (GPUs, TPUs) and much more data.\n",

"\n",

"\n",

"\n",

"# Where is Machine Learning used?\n",

"\n",

"Main applications of ML:\n",

"\n",

"1. **Regression** - predicting a numeric (continuous) value from other information.\n",

" \n",

"\n",

"2. **Classification** - predicting a class, learned from labelled training data\n",

"\n",

" \n",

"\n",

"3. **Clustering** - grouping observations into clusters based on the structure of unlabelled training data\n",

"\n",

" \n",

"\n",

"# How does it work?\n",

"\n",

""

]

},
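To make the three task types above concrete, here is a minimal sketch on synthetic data. The generators `make_regression`, `make_classification` and `make_blobs` and the chosen models are illustrative assumptions, not part of this notebook: regression outputs a number, classification outputs a class learned from labels, and clustering assigns cluster ids without ever seeing labels.

```python
import numpy as np
from sklearn.datasets import make_regression, make_classification, make_blobs
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.cluster import KMeans

# Regression: predict a continuous value
X_reg, y_reg = make_regression(n_samples=200, n_features=3, noise=5.0, random_state=0)
reg = LinearRegression().fit(X_reg, y_reg)
print("Regression, first prediction:", reg.predict(X_reg[:1]))

# Classification: predict a class learned from labelled examples
X_clf, y_clf = make_classification(n_samples=200, n_features=4, random_state=0)
clf = LogisticRegression().fit(X_clf, y_clf)
print("Classification, training accuracy:", clf.score(X_clf, y_clf))

# Clustering: group unlabelled points; no target is passed to fit
X_clu, _ = make_blobs(n_samples=200, centers=3, random_state=0)
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X_clu)
print("Clustering, cluster sizes:", np.bincount(km.labels_))
```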

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "GjHTZcThldXk"

},

"source": [


"\n",

"# Regression"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "HhCoKo7nldXw"

},

"source": [

"### Loading the data - Boston House Pricing\n",
"13 predictors describing the houses and their neighbourhood, 506 observations\n",
"(crime rate, pupil-teacher ratio, number of rooms, etc.)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 212

},

"colab_type": "code",

"id": "GZlSsZ76ldXx",

"outputId": "dc89417f-3292-45ac-8e5e-97c0dea32077"

},

"outputs": [],

"source": [

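"import sklearn\n",
"# Note: load_boston was deprecated in scikit-learn 1.0 and removed in 1.2,\n",
"# so this cell needs scikit-learn < 1.2.\n",
"from sklearn.datasets import load_boston\n",
"import pandas as pd\n",
"import numpy as np\n",
"np.random.seed(123)\n",
"\n",
"boston_dict = load_boston()\n",
"print(boston_dict.keys())\n",
"\n",
"# predictors as a DataFrame with named columns\n",
"boston = pd.DataFrame(boston_dict.data, columns=boston_dict.feature_names)\n",
"print(boston.head())\n",
"\n",
"X = boston\n",
"Y = pd.DataFrame(boston_dict.target)  # MEDV: median house value in $1000s"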

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "vGoS01J1ldX4"

},

"source": [

"### Data analysis"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 476

},

"colab_type": "code",

"id": "a-vH3hfBldX5",

"outputId": "e9cab294-cbd4-4558-b179-4dbef898932f"

},

"outputs": [],

"source": [

"import seaborn as sns\n",
"import matplotlib.pyplot as plt\n",
"# pairwise plots of CRIM, RM and PTRATIO (columns 0, 5 and 10)\n",
"sns.pairplot(boston.iloc[:, [0, 5, 10]], height=2)\n",
"plt.tight_layout()\n",
"# CRIM - per-capita crime rate\n",
"# RM - average number of rooms per dwelling\n",
"# PTRATIO - pupil-teacher ratio"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "zqVeZJpXxpnh"

},

"source": [

"Data preprocessing\n",
"- standardization\n",
"- feature selection"

]

},
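The two preprocessing steps listed above could look as follows. This is a sketch assuming a synthetic 13-predictor dataset in place of the Boston data, and `SelectKBest` with `f_regression` as a hypothetical choice of selection method; wrapping the steps in a pipeline ensures the scaler and the selector are fitted on the training split only.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the Boston data: 13 predictors, only 5 informative
X, y = make_regression(n_samples=506, n_features=13, n_informative=5,
                       noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=5)

# Standardization (mean 0, std 1), then keep the 5 best-scoring features.
# Inside the pipeline both steps are fitted on the training data only.
model = make_pipeline(StandardScaler(),
                      SelectKBest(score_func=f_regression, k=5),
                      LinearRegression())
model.fit(X_train, y_train)

scaled = model.named_steps["standardscaler"].transform(X_train)
print("Means after scaling:", scaled.mean(axis=0).round(3))
print("Selected feature indices:", model.named_steps["selectkbest"].get_support(indices=True))
print("Test R^2:", model.score(X_test, y_test))
```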

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "jHJm8zvuldYA"

},

"source": [

"Random split into training and test sets"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 52

},

"colab_type": "code",

"id": "h_BQdWOPldYB",

"outputId": "bbba09f5-de67-4566-c37b-7de95a0944b9"

},

"outputs": [],

"source": [

"from sklearn.model_selection import train_test_split\n",
"X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.33, random_state=5)\n",
"print(\"Training set size - predictors:\", X_train.shape, \"Training set size - labels:\", Y_train.shape)\n",
"print(\"Test set size - predictors:\", X_test.shape, \"Test set size - labels:\", Y_test.shape)"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "cTuAf3H0ldYD"

},

"source": [

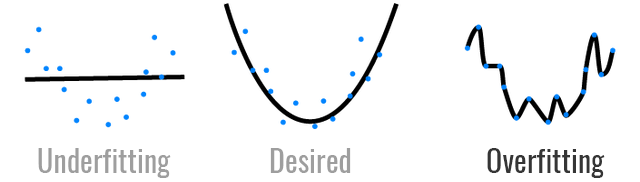

"Goal: avoid overfitting\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "VyzKkBYaldYE"

},

"source": [

"Without a held-out test set it is hard to reliably assess the model's predictive ability.\n",
"Overfitting may also occur: the model fits the training data almost perfectly but fails to generalize to new data.\n",

"\n",

"\n",

"https://hackernoon.com/memorizing-is-not-learning-6-tricks-to-prevent-overfitting-in-machine-learning-820b091dc42"

]

},
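A small illustration of overfitting, assuming synthetic noisy data drawn from a sine curve: an unrestricted decision tree (`max_depth=None`) memorizes the training set, so its training error drops to zero, while its test error stays well above that.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: a sine curve plus Gaussian noise
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.3, size=300)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=0)

rmse_by_depth = {}
for depth in [2, 4, None]:  # None: grow the tree until every leaf is pure
    model = DecisionTreeRegressor(max_depth=depth, random_state=0).fit(X_train, y_train)
    train_rmse = np.sqrt(mean_squared_error(y_train, model.predict(X_train)))
    test_rmse = np.sqrt(mean_squared_error(y_test, model.predict(X_test)))
    rmse_by_depth[depth] = (train_rmse, test_rmse)
    print(f"max_depth={depth}: train RMSE={train_rmse:.3f}, test RMSE={test_rmse:.3f}")
```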

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "oOyhVX6DldYE"

},

"source": [

"## Linear Regression"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "ix7JeoZNldYF"

},

"source": [

"The task is to find the line (with several predictors: the hyperplane) that best fits the data, given by the formula below\n",
"$y_{i}=\\beta_{0}+\\beta_{1}x_{i1}+\\cdots+\\beta_{p}x_{ip}+\\varepsilon_{i}=\\mathbf{x}_{i}^{\\top}\\boldsymbol{\\beta}+\\varepsilon_{i},\\qquad i=1,\\dots,n$, where $\\mathbf{x}_{i}=(1,x_{i1},\\dots,x_{ip})^{\\top}$\n",
"\n",
"The parameters are chosen by the method of least squares, i.e. they minimize $\\sum_{i=1}^{n}(y_{i}-\\mathbf{x}_{i}^{\\top}\\boldsymbol{\\beta})^{2}$.\n",

"\n",

"\n",

"\n",

"https://blog.etrapez.pl/ekonometria/o-regresji-i-metodzie-najmniejszych-kwadratow-czyli-skad-wziely-sie-oszacowania-parametrow-modelu/"

]

},
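Least squares has a closed-form solution, $\hat{\boldsymbol\beta} = (X^\top X)^{-1}X^\top y$. The sketch below, on made-up data, solves the same problem numerically with `np.linalg.lstsq` (numerically more stable than inverting $X^\top X$) and checks that the result matches `LinearRegression`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Made-up data: intercept 4, three predictors, small noise
rng = np.random.default_rng(0)
n, p = 100, 3
X = rng.normal(size=(n, p))
beta_true = np.array([2.0, -1.0, 0.5])
y = 4.0 + X @ beta_true + rng.normal(scale=0.1, size=n)

# Design matrix with a leading column of ones: x_i = (1, x_i1, ..., x_ip)
X1 = np.column_stack([np.ones(n), X])
# Least squares: minimize ||y - X1 @ beta||^2
beta_hat, *_ = np.linalg.lstsq(X1, y, rcond=None)

lm = LinearRegression().fit(X, y)
print("lstsq:           ", beta_hat.round(3))
print("LinearRegression:", np.r_[lm.intercept_, lm.coef_].round(3))
```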

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 1015

},

"colab_type": "code",

"id": "tG3Au86lldYG",

"outputId": "5b1064e3-b2a2-43ea-d68e-419f348f7ddf"

},

"outputs": [],

"source": [

"from sklearn.linear_model import LinearRegression\n",

"lm = LinearRegression()\n",

"\n",

"# fit the model on the training data\n",
"lm.fit(X_train, Y_train)\n",
"\n",
"print(\"Beta coefficients:\", lm.coef_)\n",

"print(boston_dict.DESCR)\n",

"\n",

"Y_pred_linear = lm.predict(X_test)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "hUfL14PHldYH"

},

"outputs": [],

"source": [

"def points_plot(Y_test, Y_pred):\n",
"    # actual vs predicted prices; points on the dashed diagonal are perfect predictions\n",
"    lo, hi = np.asarray(Y_test).min(), np.asarray(Y_test).max()\n",
"    fig, ax = plt.subplots(figsize=(7, 5))\n",
"    ax.scatter(Y_test, Y_pred, label='model predictions')\n",
"    ax.plot([lo, hi], [lo, hi], 'k--', lw=3, label='perfect fit')\n",
"    ax.set_xlabel(\"Actual house prices [1000 USD]\")\n",
"    ax.set_ylabel(\"Prices predicted by the model [1000 USD]\")\n",
"    ax.set_title(\"Actual vs. predicted prices\")\n",
"    ax.legend()\n",
"    plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 350

},

"colab_type": "code",

"id": "c5wkyY2_ldYK",

"outputId": "6809c7fb-4d29-405b-fe87-212449aad37a"

},

"outputs": [],

"source": [

"points_plot(Y_test, Y_pred_linear)"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "i6eo6-7uldYN"

},

"source": [

"**Metrics:**\n",
"- root mean squared error\n",
"${\\operatorname {RMSE} ={\\sqrt {\\frac {\\sum _{i=1}^{n}({\\hat {y}}_{i}-y_{i})^{2}}{n}}}}$\n",
"\n",
" the lower, the better\n",
"\n",
"- coefficient of determination - the fraction of the variance of the data explained by the model\n",
"$R^{2}=1-\\frac{\\sum_{i=1}^{n}(y_{i}-\\hat{y}_{i})^{2}}{\\sum_{i=1}^{n}(y_{i}-\\overline{y})^{2}}$\n",
"\n",
" at most 1; the closer to 1, the better (on a test set it can be negative if the model is worse than always predicting the mean)"

]

},
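Both metrics can be computed directly from the formulas above. This sketch uses a tiny made-up example and compares the results with scikit-learn. It also shows that `r2_score` is not symmetric, so its arguments must be passed as `(y_true, y_pred)`, and that $R^2$ becomes negative for a model worse than predicting the mean.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

y_true = np.array([3.0, -0.5, 2.0, 7.0])  # made-up actual values
y_pred = np.array([2.5, 0.0, 2.0, 8.0])   # made-up predictions

rmse = np.sqrt(np.mean((y_pred - y_true) ** 2))
ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
r2 = 1 - ss_res / ss_tot

print("RMSE:", rmse, "vs sklearn:", np.sqrt(mean_squared_error(y_true, y_pred)))
print("R^2: ", r2, "vs sklearn:", r2_score(y_true, y_pred))
print("swapped arguments:", r2_score(y_pred, y_true))
print("constant prediction 10.0:", r2_score(y_true, np.full_like(y_true, 10.0)))
```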

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "fIzZyzyxldYN"

},

"outputs": [],

"source": [

"import numpy as np\n",
"from sklearn.metrics import mean_squared_error, r2_score\n",
"\n",
"def metrics(Y_test, Y_pred):\n",
"    rmse = np.sqrt(mean_squared_error(Y_test, Y_pred))\n",
"    print(\"RMSE =\", rmse)\n",
"    # r2_score expects (y_true, y_pred); the order matters\n",
"    r2 = r2_score(Y_test, Y_pred)\n",
"    print(\"R^2 =\", r2)\n",
"    return rmse, r2"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 52

},

"colab_type": "code",

"id": "k7bZHMo8ldYP",

"outputId": "a463fbc6-43c5-4d8e-91c4-cf4b243f8d00"

},

"outputs": [],

"source": [

"import numpy as np\n",

"results = pd.DataFrame()  # collects the metrics of all models\n",

"results['linear']=metrics(Y_test, Y_pred_linear)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 301

},

"colab_type": "code",

"id": "9kemBh4IldYS",

"outputId": "258d2cee-ab27-4751-c515-17d3b7e86f17"

},

"outputs": [],

"source": [

"error = np.ravel(Y_pred_linear) - np.ravel(Y_test)  # prediction errors on the test set\n",
"plt.figure(figsize=(5, 3))\n",
"plt.hist(error, bins=20, facecolor='b')\n",
"plt.xlabel('error [1000 USD]')\n",
"plt.ylabel('frequency')\n",
"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "5LZrOmS-ldYW"

},

"source": [

"## Regression trees\n",
"\n",
"- structure: root, internal nodes (m), child nodes / leaves ($m_L$, $m_R$)\n",
"- the value in a leaf is the mean of the training observations that fall into it\n",
"- choosing the tree structure:\n",
" we look for the split of node m into $m_L$ and $m_R$ that minimizes SSE($m_L$) + SSE($m_R$)\n",
" \n",
" *SSE(.) - the sum of squared residuals when the regression for node (.) is estimated\n",
"by the sample mean of the response variable in that node\n",
"\n",
"Strategy for choosing the best tree\n",
"\n",
"• grow the full tree \n",
"\n",
"• prune it for different values of the parameter that sets the cost of tree complexity \n",
"\n",
"• from the resulting finite family of trees, choose the one with the smallest cross-validation error\n",
"\n",
"Advantage:\n",
"- interpretability\n",
"\n",
"Disadvantage:\n",
"- high variance and instability\n",
"(a small change in the data can lead to a substantial change in the tree structure)\n",

"\n"

]

},
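The split search described above can be written out for a single predictor. A sketch on a toy example (the helper names `sse` and `best_split` are hypothetical): every threshold between consecutive sorted values is tried, and the one with the smallest SSE($m_L$) + SSE($m_R$) is kept.

```python
import numpy as np

def sse(y):
    # Sum of squared residuals around the node mean (the leaf prediction is the mean)
    return float(np.sum((y - y.mean()) ** 2)) if len(y) else 0.0

def best_split(x, y):
    # Try every threshold between consecutive distinct sorted values of x
    # and keep the one minimizing SSE(m_L) + SSE(m_R).
    order = np.argsort(x)
    x, y = x[order], y[order]
    best = (None, np.inf)
    for i in range(1, len(x)):
        if x[i] == x[i - 1]:
            continue  # no threshold fits between equal values
        threshold = (x[i] + x[i - 1]) / 2
        total = sse(y[:i]) + sse(y[i:])
        if total < best[1]:
            best = (threshold, total)
    return best

x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([5.0, 6.0, 5.5, 20.0, 21.0, 19.5])
threshold, total_sse = best_split(x, y)
print(threshold, total_sse)
```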

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "vvl8X4etldYX"

},

"outputs": [],

"source": [

"from sklearn import tree\n",

"from sklearn.tree import DecisionTreeRegressor\n",

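"# criterion=\"squared_error\" (called \"mse\" before scikit-learn 1.0) chooses splits that minimize SSE\n",
"regression_model = DecisionTreeRegressor(criterion=\"squared_error\", min_samples_leaf=2)\n",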

"\n",

"regression_model.fit(X_train,Y_train)\n",

"\n",

"Y_pred_tree = regression_model.predict(X_test)"

]

},
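The model above is not pruned. The pruning strategy described earlier could be sketched with scikit-learn's minimal cost-complexity pruning (`cost_complexity_pruning_path` and the `ccp_alpha` parameter, available since version 0.22). Synthetic data is assumed here instead of the Boston split.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=200, n_features=5, noise=20.0, random_state=0)

# 1. Grow the full tree and compute the sequence of effective alphas
path = DecisionTreeRegressor(random_state=0).cost_complexity_pruning_path(X, y)
alphas = path.ccp_alphas[:-1]  # the last alpha prunes everything down to the root

# 2. Each alpha gives one pruned tree; 3. choose the one with the lowest CV error
cv_mse = []
for alpha in alphas:
    pruned = DecisionTreeRegressor(random_state=0, ccp_alpha=alpha)
    scores = cross_val_score(pruned, X, y, cv=5, scoring="neg_mean_squared_error")
    cv_mse.append(-scores.mean())

best_alpha = alphas[int(np.argmin(cv_mse))]
best_tree = DecisionTreeRegressor(random_state=0, ccp_alpha=best_alpha).fit(X, y)
print(f"best ccp_alpha={best_alpha:.3f}, leaves={best_tree.get_n_leaves()}")
```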

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 52

},

"colab_type": "code",

"id": "p5DxJhTIldYZ",

"outputId": "0b8c4df2-f58d-4722-b268-0b61be624409"

},

"outputs": [],

"source": [

"results['tree'] = metrics(Y_test, Y_pred_tree)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 350

},

"colab_type": "code",

"id": "L57_AW10ldYe",

"outputId": "6473842b-f450-4d51-acf9-b0406cb95786",

"scrolled": true

},

"outputs": [],

"source": [

"points_plot(Y_test, Y_pred_tree)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 1139

},

"colab_type": "code",

"id": "5PAiSDG31R9T",

"outputId": "52363dd8-98a4-4d11-88b5-134a59fc0708"

},

"outputs": [],

"source": [

"# visualize the structure of the tree\n",
"\n",
"plt.figure(figsize=(20, 20))\n",
"splits = tree.plot_tree(regression_model, feature_names=list(X_train.columns), filled=True)\n",
"\n",
"# # for a detailed inspection of the nodes (requires the graphviz package):\n",
"# import graphviz\n",
"# dot_data = tree.export_graphviz(regression_model, out_file=None,\n",
"#                                 feature_names=X_train.columns,\n",
"#                                 filled=True, rounded=True,\n",
"#                                 special_characters=True)\n",
"# graph = graphviz.Source(dot_data)\n",
"# graph"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "YXRA1ikbldYh"

},

"source": [

"## XGBoost (eXtreme Gradient Boosting)\n",
"\n",
"It is a complex algorithm that builds on several earlier techniques:\n",
"\n",
"- bootstrap - sampling observations from the dataset with replacement\n",
"- bagging - an ensemble (committee) of trees is trained and their results are aggregated, which reduces the variance of the model\n",
"- boosting - trees are built sequentially, and each new tree focuses on the observations that the current ensemble predicts with a large error\n",
"\n",
"The objective function is minimized by gradient boosting: each new tree is fitted to the negative gradient of the loss (for squared error: the residuals), and XGBoost additionally uses the second derivative of the loss.\n",
"\n",
"\n",
"Advantage:\n",
"- high scalability (used at CERN)\n",
"\n",
"Disadvantage:\n",
"- hard to interpret"

]

},
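A bare-bones version of the boosting idea for squared loss, where the negative gradient is simply the residual. This is plain first-order gradient boosting on synthetic data, not XGBoost's actual algorithm (which also uses second derivatives, regularization and many engineering optimizations); `learning_rate`, `n_rounds` and `max_depth=3` are arbitrary choices.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

learning_rate = 0.1
n_rounds = 100
prediction = np.full_like(y, y.mean())  # start from a constant model
trees = []
mse_history = []

for _ in range(n_rounds):
    # For squared loss the negative gradient is the residual y - prediction
    residuals = y - prediction
    stump = DecisionTreeRegressor(max_depth=3, random_state=0).fit(X, residuals)
    prediction += learning_rate * stump.predict(X)  # small step towards the residuals
    trees.append(stump)
    mse_history.append(np.mean((y - prediction) ** 2))

print("training MSE after 1 round:", round(mse_history[0], 2))
print("training MSE after", n_rounds, "rounds:", round(mse_history[-1], 2))
```

A prediction for new data would be `y.mean() + learning_rate * sum(t.predict(X_new) for t in trees)`.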

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 35

},

"colab_type": "code",

"id": "ZeFrEIa7ldYi",

"outputId": "1226e5db-9ea0-49e6-bc4f-3e4d3764cabe"

},

"outputs": [],

"source": [

"from xgboost import XGBRegressor, plot_tree\n",

"import xgboost as xgb\n",

"model = XGBRegressor()\n",

"model.fit(X_train, Y_train)\n",

"Y_pred_xgb = model.predict(X_test)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 350

},

"colab_type": "code",

"id": "X8mv1i_NldYm",

"outputId": "3c318117-0e31-4123-e02d-80cff8bdf598"

},

"outputs": [],

"source": [

"points_plot(Y_test, Y_pred_xgb)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 52

},

"colab_type": "code",

"id": "msnM9PJVldYp",

"outputId": "db4cd12d-1db0-4b68-bdf4-7b1393a91cdb"

},

"outputs": [],

"source": [

"results['xgboost']=metrics(Y_test, Y_pred_xgb)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 364

},

"colab_type": "code",

"id": "HTDuWbjFldYs",

"outputId": "3555b9ab-872d-4a77-f956-fa32b3c8f194",

"scrolled": true

},

"outputs": [],

"source": [

"fig, ax = plt.subplots(figsize=(25, 25))\n",

"xgb.plot_tree(model, num_trees=4, ax=ax)\n",

"plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 321

},

"colab_type": "code",

"id": "f6sAzgeNldYv",

"outputId": "5766d91c-1b69-4040-9b55-f0aada1d0221"

},

"outputs": [],

"source": [

"# which predictors are the most informative - feature importance\n",
"sns.barplot(y=model.feature_importances_, x=boston.columns)\n",
"plt.ylabel('Importance score')\n",
"plt.xlabel('Predictors')\n",
"plt.xticks(rotation=45)\n",
"plt.show()\n",
"# LSTAT: % lower status of the population\n",
"# RM: average number of rooms per dwelling"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "QZxcnnDJiwDN"

},

"source": [

"## Results:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 106

},

"colab_type": "code",

"id": "sL8coZcpiu98",

"outputId": "f8076beb-fcfe-45ca-9d6e-285d7517fe2b"

},

"outputs": [],

"source": [

"idx_names = {0:'RMSE', 1:'R^2'} \n",

"results1=results.rename(index=idx_names)\n",

"results1"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "8ixB4aIKghH4"

},

"source": [

"SUMMARY\n",
"1. XGBoost performed best (lowest RMSE and highest R^2)\n",
"2. When choosing the final model, think carefully about the choice of metric \n",
"(different metrics, e.g. RMSE and MAE, may rank the models differently).\n",
"On a single test set, however, RMSE and R^2 always rank the models in the same way, because $R^2 = 1 - n \\cdot \\operatorname{RMSE}^2 / \\sum_{i}(y_i-\\overline{y})^2$ and the denominator does not depend on the model.\n",
"\n",
"Note: to assess model performance more reliably, cross-validation is used"

]

},
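A sketch of 5-fold cross-validation with `cross_val_score`, assuming a synthetic dataset with the same shape as the Boston data (506 × 13). Each model is trained five times on 4/5 of the data and evaluated on the remaining fold, which gives a mean RMSE and its spread instead of a single number from one random split.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=506, n_features=13, noise=10.0, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=0)

cv_rmse = {}
for name, model in [("linear", LinearRegression()),
                    ("tree", DecisionTreeRegressor(min_samples_leaf=2, random_state=0))]:
    # scikit-learn maximizes scores, hence the negated RMSE
    scores = -cross_val_score(model, X, y, cv=cv, scoring="neg_root_mean_squared_error")
    cv_rmse[name] = scores
    print(f"{name}: RMSE = {scores.mean():.2f} +/- {scores.std():.2f}")
```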

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "pl4TqAH14X3l"

},

"source": [

"**Observation:**\n",
"Regardless of the algorithm, the largest estimation errors occur for the most expensive houses (around $50,000)\n",

"\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 274

},

"colab_type": "code",

"id": "E0HjI_Gj4w52",

"outputId": "48791938-1a04-457e-c2bf-eee8e1c789be"

},

"outputs": [],

"source": [

"sns.set()\n",
"# distribution of the target (house prices)\n",
"sns.histplot(Y.iloc[:, 0], bins=22, kde=True)\n",
"plt.show()\n",
"# the distribution appears bimodal"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "T_f59o_83H6f"

},

"source": [

"**Illustrative exercise**\n",
"- train a linear regression model on the whole available dataset: X, Y \n",
"(without splitting it into training and test sets)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "jUMiCbI8nRaa"

},

"outputs": [],

"source": []

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "3Sf8pr8Hkwif"

},

"source": [


"\n",

"# Classification"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "pjFPE0zskwig"

},

"source": [

"## Random Forest Classifier\n",

"\n",

"RFC (Random Forest Classifier):\n",
"An RFC builds a committee of decision trees and combines their outputs to obtain a more accurate and more stable prediction.\n",

"\n",

"\n",

""

]

},
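The committee idea can be sketched by hand: each tree is trained on a bootstrap sample, with a random subset of features considered at each split, and the forest predicts by majority vote. This is a simplified illustration (25 trees, hard voting); scikit-learn's `RandomForestClassifier` averages the trees' predicted class probabilities instead of counting votes.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
rng = np.random.default_rng(0)

n_trees = 25
trees = []
for _ in range(n_trees):
    idx = rng.integers(0, len(X), size=len(X))  # bootstrap sample (with replacement)
    # max_features="sqrt": each split considers a random subset of the features
    member = DecisionTreeClassifier(max_features="sqrt",
                                    random_state=int(rng.integers(1_000_000)))
    trees.append(member.fit(X[idx], y[idx]))

def forest_predict(X_new):
    votes = np.stack([t.predict(X_new) for t in trees])  # shape (n_trees, n_samples)
    # majority vote for each sample
    return np.array([np.bincount(col, minlength=3).argmax() for col in votes.T])

pred = forest_predict(X)
print("training accuracy:", (pred == y).mean())
```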

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "1Uyj4qxAkwih"

},

"outputs": [],

"source": [

"from sklearn import datasets\n",

"\n",

"iris = datasets.load_iris()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "XX70QwF4kwik",

"outputId": "2d63dd1e-9e8b-4166-ad0b-a2c55871d6df"

},

"outputs": [],

"source": [

"print(iris.target_names)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "ku80SZCFkwio",

"outputId": "4b05bcf1-94a4-4866-c4a8-e7c2c09210f7"

},

"outputs": [],

"source": [

"print(iris.feature_names)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "dyi2OW9pkwir"

},

"outputs": [],

"source": [

"from sklearn.model_selection import train_test_split\n",

"\n",

"X = iris.data\n",

"y = iris.target\n",

"X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "PkcXySVBkwit"

},

"outputs": [],

"source": [

"from sklearn.ensemble import RandomForestClassifier\n",

"\n",

"clf = RandomForestClassifier(n_estimators=100)\n",

"_ = clf.fit(X_train, y_train)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "wKN13qK3kwiv",

"outputId": "f8427b53-9a17-4cf1-a6f5-11fcb97b772e"

},

"outputs": [],

"source": [

"from sklearn.metrics import accuracy_score\n",

"\n",

"print(\n",

" f\"Training set accuracy: {int(100*accuracy_score(y_train, clf.predict(X_train)))}%\"\n",
")\n",
"print(\n",
" f\"Test set accuracy: {int(100*accuracy_score(y_test, clf.predict(X_test)))}%\"\n",

")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "lWzDQLg3kwiy",

"outputId": "27ac2be2-8aca-4881-9a74-17e6ff9fa8df"

},

"outputs": [],

"source": [

"import pandas as pd\n",

"feature_imp = pd.Series(clf.feature_importances_,\n",

" index=iris.feature_names).sort_values(ascending=False)\n",

"feature_imp"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "g_7VArn5kwi1",

"outputId": "78cb8fd9-e88d-40bb-fde0-4227b5282abd"

},

"outputs": [],

"source": [

"import warnings\n",

"warnings.filterwarnings('ignore')\n",

"import seaborn as sns\n",

"import matplotlib.pyplot as plt  # for data visualization\n",

"%matplotlib inline\n",

"\n",

"sns.barplot(y=feature_imp, x=feature_imp.index)\n",

"\n",

"plt.ylabel('Feature Importance Score')\n",

"plt.xlabel('Features')\n",

"plt.title(\"Visualizing Important Features\")\n",

"plt.xticks(rotation=45)\n",

"plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "jQsSviXOkwi4",

"outputId": "26a620ed-6df7-4bc3-85ee-756e11d3b7e8"

},

"outputs": [],

"source": [

"from sklearn.metrics import confusion_matrix\n",

"y_pred = clf.predict(X_test)\n",

"cm = confusion_matrix(y_test, y_pred)\n",

"print(cm)\n",

"\n",

"df_cm = pd.DataFrame(cm, index=iris.target_names, columns=iris.target_names)\n",

"sns.set(font_scale=1.4)\n",

"grid = sns.heatmap(df_cm,\n",

" annot=True,\n",

" annot_kws={\"size\": 16},\n",

" fmt='g',\n",

" cmap=plt.cm.Blues)\n",

"grid.set_title('Confusion matrix')\n",

"plt.ylabel('True label')\n",

"plt.xlabel('Predicted label')\n",

"plt.yticks(rotation=0)\n",

"grid.xaxis.set_ticks_position('top')\n",

"grid.xaxis.set_label_position('top')\n",

"plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "TV1nIpNakwi7"

},

"outputs": [],

"source": [

"X = iris.data[:, 2:4]  # work with two features: petal length and petal width\n",

"feature_1_name = iris.feature_names[2]\n",

"feature_2_name = iris.feature_names[3]\n",

"y = iris.target\n",

"\n",

"_ = clf.fit(X, y)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "9gbvWahgkwi9",

"outputId": "9b9854a8-9793-4fa7-feab-cadcc701967f"

},

"outputs": [],

"source": [

"import numpy as np\n",

"\n",

"x_min, x_max = X[:, 0].min() - .1, X[:, 0].max() + .1\n",

"y_min, y_max = X[:, 1].min() - .1, X[:, 1].max() + .1\n",

"xx, yy = np.meshgrid(np.linspace(x_min, x_max, 100),\n",

" np.linspace(y_min, y_max, 100))\n",

"pred = clf.predict(np.c_[xx.ravel(), yy.ravel()])\n",

"pred = pred.reshape(xx.shape)\n",

"plt.figure(figsize=(10, 6))\n",

"plt.pcolormesh(xx, yy, pred, cmap=plt.get_cmap(\"Pastel1\"))\n",

"plt.scatter(X[:, 0], X[:, 1], c=y, cmap=plt.get_cmap(\"Set1\"))\n",

"plt.xlabel(feature_1_name)\n",

"plt.ylabel(feature_2_name)\n",

"plt.axis('tight')\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "FMLVmUkekwi_"

},

"source": [

"## Support Vector Machine"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "bPGfWBalkwjA"

},

"source": [

"**SVM (Support Vector Machine)**\n",
"\n",
"The algorithm looks for a hyperplane in an N-dimensional space (N is the number of features, i.e. columns in our data) that separates the points of the dataset into classes; among the separating hyperplanes it prefers the one with the largest margin to the nearest points (the support vectors).\n",

"\n",

""

]

},
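For a linear SVM the hyperplane is $\{\mathbf{x} : \mathbf{w}^\top\mathbf{x} + b = 0\}$, and the class is given by the sign of $\mathbf{w}^\top\mathbf{x} + b$. The sketch below, on synthetic 2-D data (so the hyperplane is a line), reads `w` and `b` from a fitted `LinearSVC` and reproduces its predictions by hand. With more than two classes, as for the digits below, `LinearSVC` fits one such hyperplane per class (one-vs-rest).

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import LinearSVC

# Two groups of points in 2-D (N = 2 features)
X, y = make_blobs(n_samples=100, centers=2, random_state=0)
svm = LinearSVC(C=1.0, max_iter=10_000).fit(X, y)

w, b = svm.coef_[0], svm.intercept_[0]
# The separating hyperplane is {x : w . x + b = 0}; its sign decides the class
scores = X @ w + b
manual_pred = (scores > 0).astype(int)

print("w =", w.round(3), " b =", round(b, 3))
print("agrees with svm.predict:", np.array_equal(manual_pred, svm.predict(X)))
```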

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "raroRguUkwjB"

},

"source": [

"Let's load the data:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "41Y2f81QkwjB"

},

"outputs": [],

"source": [

"from sklearn.datasets import load_digits\n",

"\n",

"digits = load_digits()\n",

"X_train, X_test, y_train, y_test = train_test_split(digits.data,\n",

" digits.target,\n",

" random_state=0)"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "HN5S1_S0kwjE"

},

"source": [

"Example image:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "gQ-Sv3kvkwjE",

"outputId": "bc0e21e0-86f8-47b8-9d12-3a2f758bf28c",

"scrolled": true

},

"outputs": [],

"source": [

"from random import randint\n",
"\n",
"# random.randint includes the upper bound, hence the - 1\n",
"rand = randint(0, digits.target.shape[0] - 1)\n",
"label = digits.target[rand]\n",
"pixels = digits.images[rand]\n",
"plt.title(f'Label is {label}')\n",
"g = sns.heatmap(pixels, linewidth=0, xticklabels=False, yticklabels=False, cmap='gray', cbar=False, square=True)\n",
"plt.show()\n",
"print(f\"Number of images: {digits.images.shape[0]}, image size: {digits.images.shape[1:3]}\")\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "pNNNBm4EkwjI",

"outputId": "dcc04f40-26f8-4751-c409-90c02b5910ca"

},

"outputs": [],

"source": [

"plt.figure(figsize=(10,6))\n",

"plt.bar(range(10), np.bincount(y_train))\n",

"plt.title(\"Number of examples per class\")\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "4rfiNolPkwjL"

},

"source": [

"Let's train our linear model:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "j_t-8tffkwjN"

},

"outputs": [],

"source": [

"from sklearn.svm import LinearSVC\n",

"\n",

"svm = LinearSVC()\n",

"_ = svm.fit(X_train, y_train)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "xFlIxlP-kwjP",

"outputId": "2e9c0290-e4fc-4914-d461-b94dc668ae9e"

},

"outputs": [],

"source": [

"print(f\"Training set accuracy: {int(100*accuracy_score(y_train, svm.predict(X_train)))}%\")\n",
"print(f\"Test set accuracy: {int(100*accuracy_score(y_test, svm.predict(X_test)))}%\")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "l0sF8KaDkwjT",

"outputId": "1765076e-ff5c-4d20-a8df-71113f1b4011"

},

"outputs": [],

"source": [

"from sklearn.metrics import confusion_matrix\n",

"y_pred = svm.predict(X_test)\n",

"cm = confusion_matrix(y_test, y_pred)\n",

"df_cm = pd.DataFrame(cm, index = range(10), columns = range(10))\n",

"sns.set(font_scale=1.4)\n",

"grid = sns.heatmap(df_cm, annot=True,annot_kws={\"size\": 16}, fmt='g', cmap=plt.cm.Blues)\n",

"grid.set_title('Confusion matrix')\n",

"plt.ylabel('True label')\n",

"plt.xlabel('Predicted label')\n",

"plt.yticks( rotation=0)\n",

"grid.xaxis.set_ticks_position('top')\n",

"grid.xaxis.set_label_position('top') \n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "3J4N3e8UkwjW"

},

"source": [

"## Classification with a perceptron"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "9EzK47FdkwjX"

},

"source": [

"Recent achievements of artificial intelligence are largely due to deep learning, and in particular to neural networks. The most basic neural network architecture is the multilayer perceptron (MLP). Networks used in industry or research often consist of tens or hundreds of thousands of neurons. Below we show how a single neuron works.\n",

"\n",

"**Structure of a neuron:**\n",

"\n",

"\n",

"**Feedforward - forward propagation:**\n",

"\n",

" $z=w_1x_1+w_2x_2+b$\n",

"\n",

" $p=sigmoid(z)$\n",

"\n",

" $c=(p-y)^2$\n",

"\n",

"**Backpropagation - backward propagation of the error:**\n",
"\n",
"Backpropagation passes the prediction error backwards, in the direction opposite to the **feedforward** pass, and each parameter is then updated by gradient descent:\n",

"\n",

" $w_1 = w_1 - learning\\_rate \\cdot \\frac{\\partial c}{\\partial w_1}$\n",

"\n",

" $w_2 = w_2 - learning\\_rate \\cdot \\frac{\\partial c}{\\partial w_2}$\n",

"\n",

" $b = b - learning\\_rate \\cdot \\frac{\\partial c}{\\partial b}$\n",

"\n",

"**From the chain rule:**\n",

"\n",

" $\\frac{\\partial c}{\\partial w_1} = \\frac{\\partial c}{\\partial p} \\cdot \\frac{\\partial p}{\\partial z} \\cdot \\frac{\\partial z}{\\partial w_1}$\n",

"\n",

" $\\frac{\\partial c}{\\partial w_2} = \\frac{\\partial c}{\\partial p} \\cdot \\frac{\\partial p}{\\partial z} \\cdot \\frac{\\partial z}{\\partial w_2}$\n",

"\n",

" $\\frac{\\partial c}{\\partial b} = \\frac{\\partial c}{\\partial p} \\cdot \\frac{\\partial p}{\\partial z} \\cdot \\frac{\\partial z}{\\partial b}$\n",

"\n",

"**Computing the derivatives:**\n",

"\n",

" $\\frac{\\partial c}{\\partial p} = 2(p-y)$\n",

"\n",

" $\\frac{\\partial p}{\\partial z} = sigmoid'(z)$\n",

"\n",

" $\\frac{\\partial z}{\\partial w_1} = x_1$\n",

"\n",

" $\\frac{\\partial z}{\\partial w_2} = x_2$\n",

"\n",

" $\\frac{\\partial z}{\\partial b} = 1$\n",

"\n",

"**Activation function:**\n",

"\n",

" $sigmoid(z) = \\frac{1}{1+e^{-z}}$\n",

"\n",

" $sigmoid'(z) = sigmoid(z) \\cdot (1- sigmoid(z))$\n"

]

},
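The derivatives above can be checked numerically: a central finite difference $(c(\theta+\epsilon)-c(\theta-\epsilon))/2\epsilon$ should agree with the chain-rule gradient. A sketch with arbitrary example values for the weights and the input point; the helper names `cost`, `analytic_grads` and `numeric_grads` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(w1, w2, b, x1, x2, y):
    p = sigmoid(w1 * x1 + w2 * x2 + b)  # feedforward
    return (p - y) ** 2

def analytic_grads(w1, w2, b, x1, x2, y):
    z = w1 * x1 + w2 * x2 + b
    p = sigmoid(z)
    dc_dz = 2 * (p - y) * p * (1 - p)  # dc/dp * dp/dz
    return np.array([dc_dz * x1, dc_dz * x2, dc_dz * 1.0])  # times dz/dw1, dz/dw2, dz/db

def numeric_grads(w1, w2, b, x1, x2, y, eps=1e-6):
    params = np.array([w1, w2, b])
    grads = np.zeros(3)
    for k in range(3):
        plus, minus = params.copy(), params.copy()
        plus[k] += eps
        minus[k] -= eps
        grads[k] = (cost(*plus, x1, x2, y) - cost(*minus, x1, x2, y)) / (2 * eps)
    return grads

args = (0.3, -0.7, 0.1, 5.1, 3.5, 1)  # w1, w2, b, x1, x2, y (example values)
print("chain rule: ", analytic_grads(*args))
print("finite diff:", numeric_grads(*args))

# one gradient descent step with learning_rate = 0.1
new_params = np.array(args[:3]) - 0.1 * analytic_grads(*args)
print("cost before:", cost(*args), "after:", cost(*new_params, *args[3:]))
```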

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "9BUIvYwokwjX"

},

"outputs": [],

"source": [

"def load_data(f1,f2):\n",

" iris = datasets.load_iris()\n",

" X = iris.data[:, (f1,f2)]\n",

" y = iris.target\n",

" X = X[y != 2]\n",

" y = y[y != 2]\n",

" feature_1_name = iris.feature_names[f1]\n",

" feature_2_name = iris.feature_names[f2]\n",

" \n",

" return X,y, feature_1_name, feature_2_name"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "Cwot2snykwjZ",

"outputId": "cf2652f5-ad1d-42be-82f7-1b772b6d0c8a"

},

"outputs": [],

"source": [

"f1,f2 = 0,1\n",

"X, y, feature_1_name, feature_2_name = load_data(f1,f2)\n",

"print(\"Selected features: \", feature_1_name, feature_2_name)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "IcOC6aQskwjb",

"outputId": "ca148b23-653f-4e82-8572-bf393f84ff88",

"scrolled": true

},

"outputs": [],

"source": [

"my_flower = np.asarray([[6.5, 3.5]])\n",

"\n",

"plt.figure(figsize=(8, 8))\n",

"plt.scatter(X[:, 0], X[:, 1], c=y, s=100, edgecolor='k', cmap=plt.cm.Set1)\n",

"plt.scatter([my_flower[0][0]], [my_flower[0][1]],\n",

" c='g',\n",

" edgecolor='k',\n",

" marker='d',\n",

" s=200)\n",

"plt.xlabel(feature_1_name)\n",

"plt.ylabel(feature_2_name)\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "wupITAHCkwjd"

},

"source": [

"Funkcja aktywacji"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "qGoOYjN6kwje",

"outputId": "3d97c6b2-8761-4bee-8afe-bc52fd74215f"

},

"outputs": [],

"source": [

"def sigmoid(x):\n",

" return 1 / (1 + np.exp(-x))\n",

"\n",

"\n",

"def d_sigmoid(x):\n",

" return sigmoid(x) * (1 - sigmoid(x))\n",

"\n",

"\n",

"ls = np.linspace(-5, 5, 100)\n",

"plt.plot(ls, sigmoid(ls), \"red\", label=\"sigmoid\")  # sigmoid in red\n",
"plt.plot(ls, d_sigmoid(ls), \"gray\", label=\"derivative\")  # its derivative in gray\n",
"plt.legend()\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "3WtamBNlkwjh"

},

"source": [

"**Neural network implementation (a single neuron)**"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "Z44YAmtnkwji",

"outputId": "ad3548e5-0b0a-4676-989e-7198c92f83f0",

"scrolled": true

},

"outputs": [],

"source": [

"class NNClassifier:\n",
"    def predict_proba(self, x):\n",
"        # feedforward: z = w1*x1 + w2*x2 + b, p = sigmoid(z); accepts one point or many\n",
"        x = np.atleast_2d(np.asarray(x, dtype=float))\n",
"        z = self.w1 * x[:, 0] + self.w2 * x[:, 1] + self.b\n",
"        return sigmoid(z)\n",
"\n",
"    def predict(self, x):\n",
"        # class 1 if p >= 0.5, otherwise class 0\n",
"        return np.where(self.predict_proba(x) >= 0.5, 1, 0)\n",
"\n",
"    def cost(self):\n",
"        # sum of (p - y)^2 over the whole training set\n",
"        return np.sum(np.square(self.predict_proba(self.X) - self.y))\n",
"\n",
"    def random_example(self):\n",
"        ri = np.random.randint(len(self.X))\n",
"        return self.X[ri], self.y[ri]\n",
"\n",
"    def fit(self, X, y, plot=False, iterations=10_000, learning_rate=0.1):\n",
"        # random initialization of the parameters\n",
"        self.w1 = np.random.randn()\n",
"        self.w2 = np.random.randn()\n",
"        self.b = np.random.randn()\n",
"        self.X = X\n",
"        self.y = y\n",
"\n",
"        costs = []  # values of the cost function recorded during training\n",
"\n",
"        for i in range(iterations):\n",
"            point, target = self.random_example()\n",
"\n",
"            # evaluate the cost function every 100 iterations\n",
"            if i % 100 == 0:\n",
"                costs.append(self.cost())\n",
"\n",
"            # feedforward for a single random example\n",
"            z = point[0] * self.w1 + point[1] * self.w2 + self.b\n",
"            pred = sigmoid(z)\n",
"\n",
"            # backpropagation: chain rule\n",
"            dcost_dpred = 2 * (pred - target)\n",
"            dpred_dz = d_sigmoid(z)\n",
"\n",
"            dz_dw1 = point[0]\n",
"            dz_dw2 = point[1]\n",
"            dz_db = 1\n",
"\n",
"            dcost_dz = dcost_dpred * dpred_dz\n",
"\n",
"            dcost_dw1 = dcost_dz * dz_dw1\n",
"            dcost_dw2 = dcost_dz * dz_dw2\n",
"            dcost_db = dcost_dz * dz_db\n",
"\n",
"            # gradient descent step\n",
"            self.w1 = self.w1 - learning_rate * dcost_dw1\n",
"            self.w2 = self.w2 - learning_rate * dcost_dw2\n",
"            self.b = self.b - learning_rate * dcost_db\n",
"\n",
"        if plot:\n",
"            plt.figure(figsize=(8, 5))\n",
"            plt.xlabel('Iterations (x100)')\n",
"            plt.ylabel('Cost')\n",
"            plt.plot(costs)\n",
"            plt.show()\n",
"\n",
"nnc = NNClassifier()\n",
"nnc.fit(X, y, plot=True)"

]

},
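{
"cell_type": "markdown",
"metadata": {},
"source": [
"The backward pass in `fit` applies the chain rule to the squared error of one example, C = (pred - target)^2 with pred = sigmoid(z) and z = w1*x1 + w2*x2 + b: dC/dw1 = 2(pred - target) * sigmoid'(z) * x1, and analogously for w2 (with x2) and b (with 1). A minimal gradient check compares these analytic gradients with finite differences; it uses hypothetical parameter values and a hypothetical example rather than the trained network, and the helper names are also hypothetical. The two printed vectors should agree to several decimal places."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"\n",
"def sigmoid_ref(x):\n",
"    return 1 / (1 + np.exp(-x))\n",
"\n",
"def single_cost(w1, w2, b, point, target):\n",
"    # Squared error of the sigmoid output for one training example\n",
"    z = point[0] * w1 + point[1] * w2 + b\n",
"    return (sigmoid_ref(z) - target) ** 2\n",
"\n",
"def analytic_grads(w1, w2, b, point, target):\n",
"    # Same chain rule as in NNClassifier.fit\n",
"    z = point[0] * w1 + point[1] * w2 + b\n",
"    pred = sigmoid_ref(z)\n",
"    dcost_dz = 2 * (pred - target) * pred * (1 - pred)\n",
"    return np.array([dcost_dz * point[0], dcost_dz * point[1], dcost_dz])\n",
"\n",
"def numerical_grads(w1, w2, b, point, target, h=1e-6):\n",
"    # Central differences with respect to w1, w2 and b\n",
"    params = np.array([w1, w2, b], dtype=float)\n",
"    grads = np.zeros(3)\n",
"    for k in range(3):\n",
"        up, down = params.copy(), params.copy()\n",
"        up[k] += h\n",
"        down[k] -= h\n",
"        grads[k] = (single_cost(*up, point, target) - single_cost(*down, point, target)) / (2 * h)\n",
"    return grads\n",
"\n",
"# Hypothetical parameters and a single example (not taken from the dataset)\n",
"w1, w2, b = 0.3, -0.7, 0.1\n",
"point, target = (6.5, 3.5), 1\n",
"g_analytic = analytic_grads(w1, w2, b, point, target)\n",
"g_numeric = numerical_grads(w1, w2, b, point, target)\n",
"print(g_analytic)\n",
"print(g_numeric)"
]
},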

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "4q1h7apkkwjl"

},

"source": [

"**Prediction for a new example**"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "HIaqgLBykwjm",

"outputId": "45aecf73-a0ad-48a2-febc-3c29aae7b38d"

},

"outputs": [],

"source": [

"my_flower = (6.5, 3.5)\n",

"pred = nnc.predict(my_flower)\n",

"print(\"0 -> red, 1 -> gray\")\n",

"pred_col = 'gray' if pred.item() > 0.5 else 'red'\n",

"print(f\"{pred.item()} -> {pred_col}\")"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "dpqrMLNekwjo"

},

"source": [

"**Visualizing the neural network's predictions**"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "7zPJfq7nkwjp"

},

"outputs": [],

"source": [

"f1,f2 = 2,3\n",

"X, y, feature_1_name, feature_2_name = load_data(f1,f2)\n",

"nnc.fit(X, y)\n",

"x_min, x_max = X[:, 0].min() - .1, X[:, 0].max() + .1 \n",

"y_min, y_max = X[:, 1].min() - .1, X[:, 1].max() + .1 \n",

"xx, yy = np.meshgrid(np.linspace(x_min, x_max, 100),\n",

" np.linspace(y_min, y_max, 100))"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "7fi6bN9ikwjr",

"outputId": "75502cc3-be32-4414-987c-454b70b17120",

"scrolled": true

},

"outputs": [],

"source": [

"plt.figure(figsize=(10,6))\n",

"pred = nnc.predict(np.c_[xx.ravel(), yy.ravel()])\n",

"pred = pred.reshape(xx.shape)\n",

"\n",

"plt.pcolormesh(xx, yy, pred, cmap=plt.get_cmap(\"Pastel1\"))\n",

"plt.scatter(X[:, 0], X[:, 1], c=y, cmap=plt.get_cmap(\"Set1\"))\n",

"plt.xlabel(feature_1_name)\n",

"plt.ylabel(feature_2_name)\n",

"\n",

"idx = np.random.randint(np.c_[xx.ravel(), yy.ravel()].shape[0], size=1)\n",

"my_flower = np.c_[xx.ravel(), yy.ravel()][idx,:]\n",

" \n",

"pred_flower = nnc.predict(my_flower)\n",

"pred_col = 'gray' if pred_flower.item() > 0.5 else 'red'\n",

"\n",

"plt.scatter([my_flower[0][0]], [my_flower[0][1]],\n",

" c=pred_col,\n",

" edgecolor='k',\n",

" marker='d',\n",

" s=200)\n",

"plt.show()"

]

},
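{
"cell_type": "markdown",
"metadata": {},
"source": [
"Because the network is a single sigmoid neuron, the boundary between the two colored regions is a straight line: `sigmoid(z) >= 0.5` exactly when `z = w1*x1 + w2*x2 + b >= 0`, so the boundary is `x2 = -(w1*x1 + b) / w2` (for `w2 != 0`). A minimal sketch with hypothetical weights (the helper names are hypothetical too; after training, `nnc.w1`, `nnc.w2` and `nnc.b` could be plugged in) checks that points on this line give an output of 0.5:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"\n",
"def boundary_x2(x1, w1, w2, b):\n",
"    # Solve w1*x1 + w2*x2 + b = 0 for x2 (requires w2 != 0)\n",
"    return -(w1 * x1 + b) / w2\n",
"\n",
"def neuron_output(x1, x2, w1, w2, b):\n",
"    # Sigmoid of the weighted sum, i.e. NNClassifier.predict before thresholding\n",
"    return 1 / (1 + np.exp(-(w1 * x1 + w2 * x2 + b)))\n",
"\n",
"# Hypothetical weights, for illustration only\n",
"w1, w2, b = 1.5, -2.0, 0.5\n",
"x1 = np.linspace(0, 7, 8)\n",
"x2 = boundary_x2(x1, w1, w2, b)\n",
"print(neuron_output(x1, x2, w1, w2, b))  # approximately 0.5 along the whole line\n",
"\n",
"# A point just off the line falls into one of the two classes\n",
"print(neuron_output(3.0, boundary_x2(3.0, w1, w2, b) - 1, w1, w2, b) > 0.5)"
]
},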

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "SmhI_3Yikwk5"

},

"source": [


"\n",

"# Clustering"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "KgcfWP5tkwk6"

},

"outputs": [],

"source": [

"import numpy as np\n",

"import pandas as pd\n",

"import matplotlib.pyplot as plt\n",

"from sklearn import datasets, cluster, metrics\n",

"from sklearn.preprocessing import StandardScaler\n",

"from itertools import islice, cycle\n",

"import warnings"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "QINr51FSkwk7"

},

"source": [

"Generating synthetic datasets"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "k-RkhcN_kwk8"

},

"outputs": [],

"source": [

"n_samples = 2000\n",

"\n",

"warnings.filterwarnings(\"ignore\", message=\"Graph is not fully connected, spectral embedding\" + \n",

" \" may not work as expected.\", category=UserWarning)\n",

"\n",

"noisy_circles = datasets.make_circles(n_samples=n_samples, factor=.5, noise=.05)\n",

"noisy_moons = datasets.make_moons(n_samples=n_samples, noise=.05)\n",

"varied = datasets.make_blobs(n_samples=n_samples, cluster_std=[1.0, 2.5, 0.5], random_state=170)\n",

"blobs = datasets.make_blobs(n_samples=n_samples, random_state=8)\n",

"no_structure = np.random.rand(n_samples, 2), None\n",

"\n",

"datasets = [\n",

" (noisy_circles, {'n_clusters': 2, 'eps': .3}),\n",

" (noisy_moons, {'n_clusters': 2, 'eps': .3}),\n",

" (varied, {'n_clusters': 3, 'eps': .18}),\n",

" (blobs, {'n_clusters': 3, 'eps': .3}),\n",

" (no_structure, {'n_clusters': 3, 'eps': .3})]"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "kQDJPjzukwk-"

},

"source": [

"Visualizing the generated data"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "K2GTWpWokwk-",

"outputId": "7f976941-4144-424e-e6a8-b881d40b19e9",

"scrolled": false

},

"outputs": [],

"source": [

"plt.rcParams[\"figure.figsize\"] = (15, 15)\n",

"\n",

"for id_dataset, dataset in enumerate(datasets):\n",

" plt.subplot(3, 3, id_dataset+1)\n",

" plt.scatter(dataset[0][0][:, 0], dataset[0][0][:, 1], s=10)\n",

"\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "rvzi-Z69kwlA"

},

"source": [

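"Interface: a common helper that standardizes the data, fits the given clustering algorithm, plots the resulting clusters and returns the inputs, true labels and predictions"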

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "v1ls_ZB2kwlB"

},

"outputs": [],

"source": [

"results = {}\n",

"\n",

"def clustering(algorithm, dataset, id_data):\n",

" X, Y = dataset\n",

" X = StandardScaler().fit_transform(X)\n",

" algorithm.fit(X)\n",

" \n",

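" y_pred = algorithm.labels_.astype(int)\n",
"\n",
" # One color per cluster; black is appended last so that noise points\n",
" # labelled -1 (DBSCAN) get colors[-1]\n",
" colors = np.array(list(islice(cycle(['#377eb8', '#ff7f00', '#4daf4a']), int(max(y_pred) + 1))))\n",
" colors = np.append(colors, [\"#000000\"])\n",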

" \n",

" plt.subplot(3, 3, id_data+1)\n",

" plt.scatter(X[:, 0], X[:, 1], s=10, color=colors[y_pred])\n",

" \n",

" return {'input': X, 'labels': Y, 'predictions': y_pred}"

]

},
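{
"cell_type": "markdown",
"metadata": {},
"source": [
"`clustering` standardizes every dataset with `StandardScaler` before fitting, so that both coordinates have zero mean and unit variance and neither dominates the Euclidean distances the algorithms rely on. Per column this is `(x - mean) / std` with the population standard deviation; the small check below uses a hypothetical array `X_demo`:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"from sklearn.preprocessing import StandardScaler\n",
"\n",
"# Small hypothetical dataset with columns on very different scales\n",
"X_demo = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 500.0], [4.0, 700.0]])\n",
"\n",
"# Manual standardization: subtract the column mean, divide by the population std (ddof=0)\n",
"X_manual = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)\n",
"X_sklearn = StandardScaler().fit_transform(X_demo)\n",
"\n",
"print(X_manual)\n",
"print(np.allclose(X_manual, X_sklearn))"
]
},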

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "iukMPbkrkwlC"

},

"source": [

"## KMeans"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "zsV2NetrkwlD"

},

"source": [

"KMeans is an unsupervised learning algorithm (it works on unlabeled data). It places randomly generated centroids (the centers of the clusters being sought) and then repeats the two steps below until the centroids stop moving (or a maximum number of iterations is reached):\n",
"\n",
"**1. Assigning the data to clusters:**\n",
"\n",
"Each centroid corresponds to exactly one cluster. Every point in the data space is assigned to a single centroid, the nearest one. The cost of this assignment is then computed from the Euclidean distances: it is the sum of squared distances between the points and their centroids (called inertia in scikit-learn).\n",
"\n",
"**2. Updating the centroid positions:**\n",
"\n",
"In this step each centroid is moved to the center of mass (the mean) of the points assigned to it.\n",

"\n",

""

]

},
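{
"cell_type": "markdown",
"metadata": {},
"source": [
"The two steps above (Lloyd's algorithm) can be sketched in a few lines of NumPy. This is a simplified illustration, not scikit-learn's implementation, which among other things uses the k-means++ initialization and several restarts (`n_init`); the names `assign` and `kmeans_sketch` are hypothetical:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"\n",
"def assign(X, centroids):\n",
"    # Step 1: index of the nearest centroid (Euclidean distance) for every point\n",
"    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)\n",
"    return dists.argmin(axis=1)\n",
"\n",
"def kmeans_sketch(X, k, n_iter=100, seed=0):\n",
"    rng = np.random.default_rng(seed)\n",
"    # Random initialization: k distinct data points become the starting centroids\n",
"    centroids = X[rng.choice(len(X), size=k, replace=False)]\n",
"    for _ in range(n_iter):\n",
"        labels = assign(X, centroids)\n",
"        # Step 2: move every centroid to the mean of its points\n",
"        # (a centroid without points keeps its previous position)\n",
"        new_centroids = np.array([\n",
"            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]\n",
"            for j in range(k)\n",
"        ])\n",
"        if np.allclose(new_centroids, centroids):\n",
"            break  # converged: the centroids no longer move\n",
"        centroids = new_centroids\n",
"    labels = assign(X, centroids)\n",
"    # Cost of the final assignment: sum of squared distances to the centroids (inertia)\n",
"    inertia = np.sum((X - centroids[labels]) ** 2)\n",
"    return labels, centroids, inertia\n",
"\n",
"# Usage on two well-separated hypothetical groups of points\n",
"pts = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],\n",
"                [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])\n",
"labels, centroids, inertia = kmeans_sketch(pts, k=2)\n",
"print(labels, inertia)"
]
},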

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "9HX2HXoHkwlD",

"outputId": "b8c941d8-5bda-4952-e89f-46812414174f",

"scrolled": true

},

"outputs": [],

"source": [

"results['KMeans'] = []\n",

"for id_data, (dataset, params) in enumerate(datasets):\n",

" \n",

" kmeans = cluster.KMeans(n_clusters=params['n_clusters'])\n",

" results['KMeans'].append(clustering(kmeans, dataset, id_data))\n",

" \n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "SjuBTKYbkwlG"

},

"source": [

"## DBSCAN"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "_Tve9rUrkwlH"

},

"source": [

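"DBSCAN works somewhat differently: instead of looking for the centers of the densest regions, it links together neighbouring points that can form such a dense region.\n",
"\n",
"It needs two parameters for this:\n",
"\n",
"- eps: the maximum distance between two points for them to be considered neighbours; if two points are at most eps apart, they are treated as neighbours.\n",
"- minPoints (`min_samples` in scikit-learn, default 5, counting the point itself): the minimum number of points within eps needed to form a new dense region.\n",
"\n",
"Points that do not belong to any dense region are labelled as noise (label -1 in scikit-learn).\n",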

"\n",

""

]

},
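{
"cell_type": "markdown",
"metadata": {},
"source": [
"A simplified version of this procedure can be sketched directly in NumPy, assuming a brute-force neighbour search that is only practical for small data (`dbscan_sketch` and `min_pts` are hypothetical names). A point with at least `min_pts` neighbours within `eps`, itself included as in scikit-learn's `min_samples`, is a core point; clusters grow outward from core points, border points join a cluster without extending it, and points reachable from no core point keep the noise label -1:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"\n",
"def dbscan_sketch(X, eps, min_pts):\n",
"    n = len(X)\n",
"    # Pairwise Euclidean distances (brute force, O(n^2) memory)\n",
"    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)\n",
"    # Neighbourhoods include the point itself\n",
"    neighbours = [np.flatnonzero(dists[i] <= eps) for i in range(n)]\n",
"    is_core = np.array([len(nb) >= min_pts for nb in neighbours])\n",
"    labels = np.full(n, -1)  # -1 means noise / not yet assigned\n",
"    cluster_id = 0\n",
"    for i in range(n):\n",
"        if labels[i] != -1 or not is_core[i]:\n",
"            continue\n",
"        # Start a new cluster from an unassigned core point and expand it\n",
"        labels[i] = cluster_id\n",
"        stack = [i]\n",
"        while stack:\n",
"            p = stack.pop()\n",
"            if not is_core[p]:\n",
"                continue  # border points join the cluster but do not expand it\n",
"            for q in neighbours[p]:\n",
"                if labels[q] == -1:\n",
"                    labels[q] = cluster_id\n",
"                    stack.append(q)\n",
"        cluster_id += 1\n",
"    return labels\n",
"\n",
"# Usage: two dense hypothetical groups and one isolated point\n",
"pts = np.array([[0.0, 0.0], [0.0, 0.1], [0.1, 0.0],\n",
"                [3.0, 3.0], [3.0, 3.1], [3.1, 3.0],\n",
"                [10.0, 10.0]])\n",
"print(dbscan_sketch(pts, eps=0.5, min_pts=3))  # expected labels: 0, 0, 0, 1, 1, 1, -1"
]
},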

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "D6DVrMHHkwlH",

"outputId": "269f57e0-733c-4b4c-8c2f-cb7cb94cbb93"

},

"outputs": [],

"source": [

"results['DBSCAN'] = []\n",

"for id_data, (dataset, params) in enumerate(datasets):\n",

" \n",

" dbscan = cluster.DBSCAN(eps=params['eps']) \n",

" results['DBSCAN'].append(clustering(dbscan, dataset, id_data))\n",

"\n",

"plt.show() "

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "cEdzDuCbkwlJ"

},

"source": [

"## Spectral Clustering"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "8LbKkX_qkwlK"

},

"source": [

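"Spectral Clustering, like DBSCAN, looks at the connections between data points and groups points that are strongly connected to each other. The principle is different, however. We start by building a similarity graph whose edge weights express how similar two points are: the closer the points, the larger the weight (with `affinity='nearest_neighbors'`, used below, each point is connected only to its nearest neighbours).\n",
"\n",
"\n",
"Weak connections are then cut, which splits the graph into subgraphs that form the clusters we are looking for.\n",
"\n",
"\n",
"In practice this cut is found through a suitable transformation of the connection (affinity) matrix, the graph Laplacian: the points are embedded using its eigenvectors and then grouped into the requested number of clusters (scikit-learn uses k-means for this step by default).\n",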

"\n",

"\n",

""

]

},
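{
"cell_type": "markdown",
"metadata": {},
"source": [
"The idea can be sketched in NumPy under simplifying assumptions: a fully connected Gaussian similarity graph with a hypothetical width `sigma`, the symmetric normalized Laplacian, and k-means on the row-normalized eigenvectors (the Ng-Jordan-Weiss variant). scikit-learn's `SpectralClustering` used below differs in the details; for example, with `affinity='nearest_neighbors'` it builds a nearest-neighbours graph instead. The name `spectral_sketch` is hypothetical:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"from sklearn.cluster import KMeans\n",
"\n",
"def spectral_sketch(X, k, sigma=1.0, seed=0):\n",
"    # 1. Similarity graph: Gaussian weights, large for nearby points\n",
"    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)\n",
"    W = np.exp(-sq_dists / (2 * sigma ** 2))\n",
"    np.fill_diagonal(W, 0.0)\n",
"    # 2. Symmetric normalized Laplacian L = I - D^(-1/2) W D^(-1/2)\n",
"    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))\n",
"    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]\n",
"    # 3. Eigenvectors of the k smallest eigenvalues (eigh sorts them in ascending order)\n",
"    _, eigvecs = np.linalg.eigh(L)\n",
"    U = eigvecs[:, :k]\n",
"    U = U / np.linalg.norm(U, axis=1, keepdims=True)\n",
"    # 4. Group the points in the spectral embedding\n",
"    return KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(U)\n",
"\n",
"# Usage on two hypothetical, well-separated groups of points\n",
"pts = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0],\n",
"                [5.0, 5.0], [5.0, 5.5], [5.5, 5.0]])\n",
"print(spectral_sketch(pts, k=2))"
]
},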

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "MAro8OmSkwlK",

"outputId": "cec23305-545f-43c1-93ff-1d5e7d286125"

},

"outputs": [],

"source": [

"results['Spectral'] = []\n",

"for id_data, (dataset, params) in enumerate(datasets):\n",

" \n",

" spectral = cluster.SpectralClustering(n_clusters=params['n_clusters'], eigen_solver='arpack', affinity=\"nearest_neighbors\")\n",

" results['Spectral'].append(clustering(spectral, dataset, id_data))\n",

"\n",

"plt.show() "

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "QsbGzRJakwlM"

},

"source": [

"**Clustering evaluation metrics**"

]

},

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "5qM-pkTfkwlM"

},

"source": [

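"**Normalized mutual info score** - the Mutual Information (MI) between the true labels and the predicted clusters, normalized to the range from 0 (no mutual information, in the information-theoretic sense) to 1 (perfect agreement between the two labelings, up to renaming the labels). It requires ground-truth labels, so it is not computed for the dataset without structure."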

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "9L1vxi1OkwlM"

},

"outputs": [],

"source": [

"def nmi_score(labels, predictions):\n",
"    # NMI needs the true labels; the dataset without structure has none\n",
"    if labels is not None:\n",
"        return metrics.normalized_mutual_info_score(labels, predictions,\n",
"                                                    average_method='arithmetic')\n",
"    else:\n",
"        return np.nan"

]

},
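{
"cell_type": "markdown",
"metadata": {},
"source": [
"With `average_method='arithmetic'`, NMI = MI(U, V) / ((H(U) + H(V)) / 2), where H is the entropy of a labelling and MI the mutual information between the two labellings. A minimal sketch computes it from label frequencies (the helper names are hypothetical) and compares it with scikit-learn; renaming the cluster labels does not change the score:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"from sklearn import metrics\n",
"\n",
"def entropy(labels):\n",
"    # H(U) = -sum p log p over the label frequencies (natural log)\n",
"    _, counts = np.unique(labels, return_counts=True)\n",
"    p = counts / counts.sum()\n",
"    return -np.sum(p * np.log(p))\n",
"\n",
"def mutual_info(a, b):\n",
"    # MI(U, V) = sum_ij p_ij * log(p_ij / (p_i * p_j)) over non-empty cells\n",
"    a, b = np.asarray(a), np.asarray(b)\n",
"    mi = 0.0\n",
"    for u in np.unique(a):\n",
"        for v in np.unique(b):\n",
"            p_ij = np.mean((a == u) & (b == v))\n",
"            if p_ij > 0:\n",
"                mi += p_ij * np.log(p_ij / (np.mean(a == u) * np.mean(b == v)))\n",
"    return mi\n",
"\n",
"def nmi_arithmetic(a, b):\n",
"    return mutual_info(a, b) / ((entropy(a) + entropy(b)) / 2)\n",
"\n",
"labels_true = [0, 0, 0, 1, 1, 1]\n",
"labels_pred = [1, 1, 0, 0, 2, 2]  # hypothetical clustering result\n",
"print(nmi_arithmetic(labels_true, labels_pred))\n",
"print(metrics.normalized_mutual_info_score(labels_true, labels_pred, average_method='arithmetic'))\n",
"print(nmi_arithmetic(labels_true, [5, 5, 5, 7, 7, 7]))  # same partition, renamed labels"
]
},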

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "VskcIL8XkwlO"

},

"source": [

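"**Fowlkes-Mallows score** - a measure of similarity between two clusterings (here: the true labels and the predictions), ranging from 0 to 1, where higher is better. Like NMI, it needs ground-truth labels."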

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "bRn9iT5_kwlO"

},

"outputs": [],

"source": [

"def fm_score(labels, predictions):\n",
"    # Fowlkes-Mallows also needs the true labels\n",
"    if labels is not None:\n",
"        return metrics.fowlkes_mallows_score(labels, predictions)\n",
"    else:\n",
"        return np.nan"

]

},
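{
"cell_type": "markdown",
"metadata": {},
"source": [
"The Fowlkes-Mallows index looks at pairs of points: TP counts pairs that are in the same group in both labellings, FP pairs grouped together only in the prediction, and FN pairs grouped together only in the true labels. Then FMI = TP / sqrt((TP + FP) * (TP + FN)), the geometric mean of pairwise precision and recall. A brute-force sketch (the name `fmi_pairs` is hypothetical) checked against scikit-learn:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"from itertools import combinations\n",
"from sklearn import metrics\n",
"\n",
"def fmi_pairs(labels_true, labels_pred):\n",
"    tp = fp = fn = 0\n",
"    # Classify every unordered pair of points\n",
"    for i, j in combinations(range(len(labels_true)), 2):\n",
"        same_true = labels_true[i] == labels_true[j]\n",
"        same_pred = labels_pred[i] == labels_pred[j]\n",
"        if same_true and same_pred:\n",
"            tp += 1\n",
"        elif same_pred:\n",
"            fp += 1\n",
"        elif same_true:\n",
"            fn += 1\n",
"    return tp / np.sqrt((tp + fp) * (tp + fn))\n",
"\n",
"labels_true = [0, 0, 0, 1, 1, 1]\n",
"labels_pred = [0, 0, 1, 1, 2, 2]  # hypothetical clustering result\n",
"print(fmi_pairs(labels_true, labels_pred))  # TP = 2, FP = 1, FN = 4\n",
"print(metrics.fowlkes_mallows_score(labels_true, labels_pred))"
]
},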

{

"cell_type": "markdown",

"metadata": {

"colab_type": "text",

"id": "2dkkiStMkwlR"

},

"source": [

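"**Silhouette score** - measures how well separated the obtained clusters are and, unlike the two scores above, does not need ground-truth labels. A value close to 1 means that a point lies far from the neighbouring cluster, a value close to 0 means it lies near the boundary between two clusters, and a value close to -1 means it has probably been assigned to the wrong cluster. The reported score is the mean over all points."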

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "_KYiS7JwkwlR"

},

"outputs": [],

"source": [

"def silhouette_score(inputs, predictions):\n",
"    # The silhouette is only defined when there are at least two clusters\n",
"    if len(set(predictions)) > 1:\n",
"        return metrics.silhouette_score(inputs, predictions,\n",
"                                        metric='euclidean')\n",
"    else:\n",
"        return np.nan"

]

},
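{
"cell_type": "markdown",
"metadata": {},
"source": [
"For one point, a is its mean distance to the other points of its own cluster, b is its mean distance to the points of the nearest other cluster, and s = (b - a) / max(a, b). A brute-force sketch of the mean over all points follows (the name `silhouette_sketch` is hypothetical, and it assumes every cluster has at least two points, since scikit-learn sets s = 0 for single-point clusters), compared with `metrics.silhouette_score` and with a deliberately bad labelling:"
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import numpy as np\n",
"from sklearn import metrics\n",
"\n",
"def silhouette_sketch(X, labels):\n",
"    X, labels = np.asarray(X, dtype=float), np.asarray(labels)\n",
"    # Pairwise Euclidean distances (brute force)\n",
"    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)\n",
"    scores = []\n",
"    for i in range(len(X)):\n",
"        own = labels == labels[i]\n",
"        own[i] = False  # exclude the point itself\n",
"        a = dists[i, own].mean()  # mean distance within its own cluster\n",
"        # b: mean distance to the nearest other cluster\n",
"        b = min(dists[i, labels == c].mean() for c in np.unique(labels) if c != labels[i])\n",
"        scores.append((b - a) / max(a, b))\n",
"    return np.mean(scores)\n",
"\n",
"# Hypothetical data: three compact pairs of points\n",
"pts = np.array([[0.0, 0.0], [0.0, 1.0], [4.0, 0.0], [4.0, 1.0], [2.0, 5.0], [2.0, 6.0]])\n",
"good = np.array([0, 0, 1, 1, 2, 2])\n",
"bad = np.array([0, 1, 0, 1, 2, 2])\n",
"print(silhouette_sketch(pts, good), metrics.silhouette_score(pts, good, metric='euclidean'))\n",
"print(silhouette_sketch(pts, bad))  # lower: the first two pairs are mixed up"
]
},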

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "ZAqPn8HwkwlT"

},

"outputs": [],

"source": [

"scores = {}\n",
"\n",
"for key, value in results.items():\n",
"    scores[key] = {'nmi': [], 'fm': [], 'silhouette': []}\n",
"    for algorithm_results in value:\n",
"        inputs = algorithm_results['input']\n",
"        labels = algorithm_results['labels']\n",
"        predictions = algorithm_results['predictions']\n",
"\n",
"        scores[key]['nmi'].append(nmi_score(labels, predictions))\n",
"        scores[key]['fm'].append(fm_score(labels, predictions))\n",
"        scores[key]['silhouette'].append(silhouette_score(inputs, predictions))"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "4bnElQgpkwlV",

"outputId": "fce18403-582e-4d38-a595-9b58d98a922f"

},

"outputs": [],

"source": [

"kmeans_metrics = pd.DataFrame(data=scores['KMeans'])\n",

"print(kmeans_metrics)\n",

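"for id_data, (dataset, params) in enumerate(datasets):\n",
"    # Re-create the model with this dataset's parameters\n",
"    # (the variable kmeans still holds the model configured for the last dataset)\n",
"    kmeans = cluster.KMeans(n_clusters=params['n_clusters'])\n",
"    clustering(kmeans, dataset, id_data)\n",
"plt.show()"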

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "aoqeWNPJkwlW",

"outputId": "8416d57f-c505-4613-b5d7-eac36bedccf8"

},

"outputs": [],

"source": [

"dbscan_metrics = pd.DataFrame(data=scores['DBSCAN'])\n",

"print(dbscan_metrics)\n",

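"for id_data, (dataset, params) in enumerate(datasets):\n",
"    # Re-create the model with this dataset's eps\n",
"    # (the variable dbscan still holds the model configured for the last dataset)\n",
"    dbscan = cluster.DBSCAN(eps=params['eps'])\n",
"    clustering(dbscan, dataset, id_data)\n",
"plt.show()"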

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "3LVuhVeWkwlZ",

"outputId": "e72a7b11-8e9f-4db5-cdad-5e49eae6bd84"

},

"outputs": [],

"source": [

"spectral_metrics = pd.DataFrame(data=scores['Spectral'])\n",

"print(spectral_metrics)\n",

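"for id_data, (dataset, params) in enumerate(datasets):\n",
"    # Re-create the model with this dataset's number of clusters\n",
"    # (the variable spectral still holds the model configured for the last dataset)\n",
"    spectral = cluster.SpectralClustering(n_clusters=params['n_clusters'], eigen_solver='arpack', affinity=\"nearest_neighbors\")\n",
"    clustering(spectral, dataset, id_data)\n",
"plt.show()"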

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {},

"colab_type": "code",

"id": "fEueUHfNkwlb"

},

"outputs": [],

"source": []

}

],

"metadata": {

"colab": {

"collapsed_sections": [

"3J4N3e8UkwjW",

"oOyhVX6DldYE",

"iukMPbkrkwlC",

"SjuBTKYbkwlG",

"cEdzDuCbkwlJ"

],

"name": "WorkShopMerge (1).ipynb",

"provenance": [],

"version": "0.3.2"

},

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.7.2"

},

"toc": {

"base_numbering": 1,

"nav_menu": {},

"number_sections": true,

"sideBar": true,

"skip_h1_title": false,

"title_cell": "Table of Contents",

"title_sidebar": "Contents",

"toc_cell": false,

"toc_position": {},

"toc_section_display": true,

"toc_window_display": true

}

},

"nbformat": 4,

"nbformat_minor": 1

}