| Notation | Intuition | Definition |

|---|---|---|

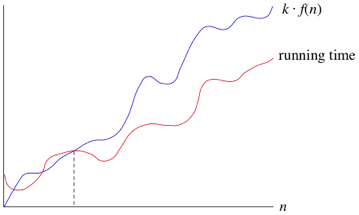

| f(n) = O(g(n)) | f is bounded above by g (up to constant factor) asymptotically | f(n) <= k*g(n) for some positive k |

| Notation | Intuition | Definition |

|---|---|---|

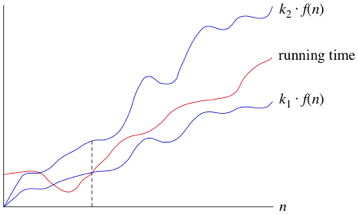

| f(n) = Θ(g(n)) | f is bounded above and below by g asymptotically | k2*g(n) <= f(n) <= k2*g(n) for some positive k1, k2 |

| Notation | Intuition | Definition |

|---|---|---|

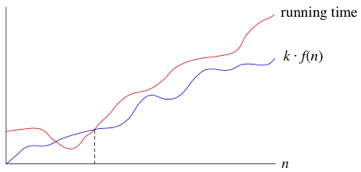

| f(n) = Ω(g(n)) | f is bounded below by g asymptotically | f(n) >= k*g(n) for some positive k |

| Notation | Intuition | Definition |

|---|---|---|

| f(n) = o(g(n)) | f is dominated by g asymptotically | f(n) < k*g(n) for every positive k |

| Notation | Intuition | Definition |

|---|---|---|

| f(n) = ω(g(n)) | f dominates g asymptotically | f(n) > k*g(n) for every positive k |

Why not just Big-Θ?

From the Khan Academy site:

We use big-Θ notation to asymptotically bound the growth of a running time to within constant factors above and below. Sometimes we want to bound from only above. For example, although the worst-case running time of binary search is Θ(lg n), it would be incorrect to say that binary search runs in Θ(lg n) time in all cases. What if we find the target value upon the first guess? Then it runs in Θ(1) time. The running time of binary search is never worse than Θ(lg n), but it's sometimes better. It would be convenient to have a form of asymptotic notation that means "the running time grows at most this much, but it could grow more slowly." We use "big-O" notation for just such occasions.

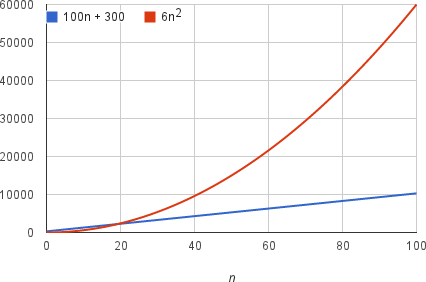

More graphics on why we only care about the highest-order

Let's say we posit a search operating in 2n + 3 time.

We want to show that this has Θ(n) complexity.

We must find k1, k2,

and n0, so that:

k1 * n < 2n + 3 < k2 * n

One solution is k1 = 1, k2 = 3,

and n0 = 4.

Let's demonstrate!

The problem of computing, given n >= 0, the nth Fibonacci number Fn.

The Fibonacci discussion is not in the textbook. One place where it is presented in a nice way similar to what I will do in class is in section 0.2 of the DasGupta, Papadimitriou, Vazirani Algorithms book.

Talked about the obvious recursive algorithm; how it is very slow, intuitively due to recomputing the same subproblems. Proved that the recursion tree grows at least as fast as the Fibonacci sequence itself.

Note that every leaf of the recursion tree returns one. The sum of the value of all of these leaves is going to be the Fibonacci number we return. Therefore, there must be at least as many operations as the Fibonacci number itself.

Showed how to speed the recursive algorithm algorithm up by "memoization": Using a table to store answers for subproblems already computed. Analyzed the running time and space of the recursive memoized version and of the iterative algorithm that fills the table going in the forward direction. Pointed out how to reduce space to constant.

Naive and faster Fibonacci code.

By the way, this is an open-source project: you may contribute!

There is a closed-form formula for computing Fibonacci numbers.

So why can't we claim we can compute nth Fibonacci number in constant time? Did not go into details, but this is related to our inability to directly represent irrational numbers such as square roots, maybe related to precision/rounding, and generally related to what operations are allowed and what are not, in an algorithm.

Using some tricks one can construct nth Fibonacci number in O(log n) arithmetic operations, where we count additions/subtractions/multiplications and do not worry about the fact that eventually the numbers get too large to manipulate in constant time.

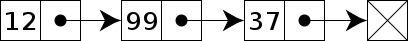

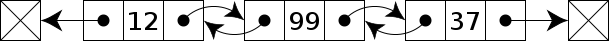

Data structures form a very important part of algorithmic research.

However, this course does not focus on data structures. We will only devote a couple of lectures, total, to this subject. (There are algorithms courses that spend their entire time on data structures. We have an advanced data structures expert in the department, Prof. John Iacono.)