{

"nbformat": 4,

"nbformat_minor": 0,

"metadata": {

"colab": {

"name": "Google Translate API for Python.ipynb",

"provenance": [],

"collapsed_sections": [],

"authorship_tag": "ABX9TyOnSc1hgO3emXa6eb5vuhYH",

"include_colab_link": true

},

"kernelspec": {

"name": "python3",

"display_name": "Python 3"

}

},

"cells": [

{

"cell_type": "markdown",

"metadata": {

"id": "4unOk6cs2NKQ"

},

"source": [

"# **Google Translate API for Python**\n",

"\n",

"## **In this tutorial. I will demonstrate how to use the Google Translate API for translating the data from Hindi to English.**\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "fp0q3l6N2cMT"

},

"source": [

"There are some things I want to clear at the beginning of the tutorial. First, I would translate the data from [Hindi](https://en.wikipedia.org/wiki/Hindi) to [English](https://en.wikipedia.org/wiki/English_language). Also, I will demonstrate the translation on a [Pandas Data Frame](https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.html), meaning I will convert the entire data frame from Hindi to English. Isn’t that great? Well, let’s get started. Also, you can use the [Google Colab notebook](https://colab.research.google.com/) to type the code. I would recommend everyone go through the documentation of the Google Translate API for Python completely, so by the time you code you would probably know what I mean. It would be a cakewalk because you would know most of the terms while coding.\n",

"\n",

"[Google Translate API official documentation](https://pypi.org/project/googletrans/)"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "Ev_UbeyR23Sj"

},

"source": [

"Open your Google Colab and create a new notebook and name it as “***Google_Translations.ipynb***”. Before actually typing the code I want all of you guys manually install the Google Translate library inside the notebook. So to do this just type `!pip install googletrans` . This command automatically downloads and installs the library as shown below:"

]

},

{

"cell_type": "code",

"metadata": {

"id": "PY5aV_ikp9U1",

"outputId": "faf7b34b-3bd9-4160-ff8b-86d062376468",

"colab": {

"base_uri": "https://localhost:8080/",

"height": 783

}

},

"source": [

"# install googletrans using pip\n",

"!pip install googletrans"

],

"execution_count": 1,

"outputs": [

{

"output_type": "stream",

"text": [

"Collecting googletrans\n",

" Downloading https://files.pythonhosted.org/packages/71/3a/3b19effdd4c03958b90f40fe01c93de6d5280e03843cc5adf6956bfc9512/googletrans-3.0.0.tar.gz\n",

"Collecting httpx==0.13.3\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/54/b4/698b284c6aed4d7c2b4fe3ba5df1fcf6093612423797e76fbb24890dd22f/httpx-0.13.3-py3-none-any.whl (55kB)\n",

"\u001b[K |████████████████████████████████| 61kB 2.8MB/s \n",

"\u001b[?25hCollecting sniffio\n",

" Downloading https://files.pythonhosted.org/packages/52/b0/7b2e028b63d092804b6794595871f936aafa5e9322dcaaad50ebf67445b3/sniffio-1.2.0-py3-none-any.whl\n",

"Requirement already satisfied: idna==2.* in /usr/local/lib/python3.6/dist-packages (from httpx==0.13.3->googletrans) (2.10)\n",

"Requirement already satisfied: chardet==3.* in /usr/local/lib/python3.6/dist-packages (from httpx==0.13.3->googletrans) (3.0.4)\n",

"Collecting httpcore==0.9.*\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/dd/d5/e4ff9318693ac6101a2095e580908b591838c6f33df8d3ee8dd953ba96a8/httpcore-0.9.1-py3-none-any.whl (42kB)\n",

"\u001b[K |████████████████████████████████| 51kB 5.1MB/s \n",

"\u001b[?25hCollecting rfc3986<2,>=1.3\n",

" Downloading https://files.pythonhosted.org/packages/78/be/7b8b99fd74ff5684225f50dd0e865393d2265656ef3b4ba9eaaaffe622b8/rfc3986-1.4.0-py2.py3-none-any.whl\n",

"Collecting hstspreload\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/f0/16/59b51f8e640f16acad2c0e101fa1e55a87bfd80cff23f77ab23cb8927541/hstspreload-2020.10.6-py3-none-any.whl (965kB)\n",

"\u001b[K |████████████████████████████████| 972kB 8.8MB/s \n",

"\u001b[?25hRequirement already satisfied: certifi in /usr/local/lib/python3.6/dist-packages (from httpx==0.13.3->googletrans) (2020.6.20)\n",

"Collecting contextvars>=2.1; python_version < \"3.7\"\n",

" Downloading https://files.pythonhosted.org/packages/83/96/55b82d9f13763be9d672622e1b8106c85acb83edd7cc2fa5bc67cd9877e9/contextvars-2.4.tar.gz\n",

"Collecting h11<0.10,>=0.8\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/5a/fd/3dad730b0f95e78aeeb742f96fa7bbecbdd56a58e405d3da440d5bfb90c6/h11-0.9.0-py2.py3-none-any.whl (53kB)\n",

"\u001b[K |████████████████████████████████| 61kB 6.4MB/s \n",

"\u001b[?25hCollecting h2==3.*\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/25/de/da019bcc539eeab02f6d45836f23858ac467f584bfec7a526ef200242afe/h2-3.2.0-py2.py3-none-any.whl (65kB)\n",

"\u001b[K |████████████████████████████████| 71kB 7.4MB/s \n",

"\u001b[?25hCollecting immutables>=0.9\n",

"\u001b[?25l Downloading https://files.pythonhosted.org/packages/99/e0/ea6fd4697120327d26773b5a84853f897a68e33d3f9376b00a8ff96e4f63/immutables-0.14-cp36-cp36m-manylinux1_x86_64.whl (98kB)\n",

"\u001b[K |████████████████████████████████| 102kB 9.0MB/s \n",

"\u001b[?25hCollecting hpack<4,>=3.0\n",

" Downloading https://files.pythonhosted.org/packages/8a/cc/e53517f4a1e13f74776ca93271caef378dadec14d71c61c949d759d3db69/hpack-3.0.0-py2.py3-none-any.whl\n",

"Collecting hyperframe<6,>=5.2.0\n",

" Downloading https://files.pythonhosted.org/packages/19/0c/bf88182bcb5dce3094e2f3e4fe20db28a9928cb7bd5b08024030e4b140db/hyperframe-5.2.0-py2.py3-none-any.whl\n",

"Building wheels for collected packages: googletrans, contextvars\n",

" Building wheel for googletrans (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for googletrans: filename=googletrans-3.0.0-cp36-none-any.whl size=15736 sha256=2f134961ea6857ba577d095c022da79d49dfac4fb1f06d0ffdb1ffaf22a0bea2\n",

" Stored in directory: /root/.cache/pip/wheels/28/1a/a7/eaf4d7a3417a0c65796c547cff4deb6d79c7d14c2abd29273e\n",

" Building wheel for contextvars (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for contextvars: filename=contextvars-2.4-cp36-none-any.whl size=7666 sha256=0711439525786db137da3dd994721af4cae710c8079e817c36f056e85b40cf54\n",

" Stored in directory: /root/.cache/pip/wheels/a5/7d/68/1ebae2668bda2228686e3c1cf16f2c2384cea6e9334ad5f6de\n",

"Successfully built googletrans contextvars\n",

"Installing collected packages: immutables, contextvars, sniffio, h11, hpack, hyperframe, h2, httpcore, rfc3986, hstspreload, httpx, googletrans\n",

"Successfully installed contextvars-2.4 googletrans-3.0.0 h11-0.9.0 h2-3.2.0 hpack-3.0.0 hstspreload-2020.10.6 httpcore-0.9.1 httpx-0.13.3 hyperframe-5.2.0 immutables-0.14 rfc3986-1.4.0 sniffio-1.2.0\n"

],

"name": "stdout"

}

]

},
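The install log above shows pip resolving `googletrans` 3.0.0. Since a library's interface can differ between releases, it may help to confirm which version is actually present before running the rest of the notebook. The following is a minimal sketch using only the standard library (Python 3.8 or later); the helper name `installed_version` is hypothetical and not part of `googletrans`.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Prints a version string, or None if googletrans has not been
# installed in the current environment.
print(installed_version("googletrans"))
```

If the printed version differs from the one in the log, the calls used later in the tutorial may need adjusting.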

{

"cell_type": "markdown",

"metadata": {

"id": "mNqgTBII3D9w"

},

"source": [

"\n",

"\n",

"---\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "9MOyJFT_3FY9"

},

"source": [

"## **Importing the necessary libraries**\n",

"\n",

"In this step, we will be importing the necessary libraries that we will be using throughout the tutorial. The library ***pandas*** are for storing the CSV data into a data frame. And ***googletrans*** is obviously used for translation and we will also use one of its methods called ***Translator*** which you will see in the later tutorial.\n",

"\n",

"\n"

]

},

{

"cell_type": "code",

"metadata": {

"id": "Dmxp7yNVqHKH"

},

"source": [

"# Importing the necessary libraries\n",

"import pandas as pd\n",

"import googletrans\n",

"from googletrans import Translator"

],

"execution_count": 2,

"outputs": []

},
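Translating a whole column usually means sending many strings to the translator, and repeated values need not be sent twice. The sketch below illustrates that pattern under stated assumptions: `translate_values` and `fake_translate` are hypothetical names, and `fake_translate` is a stand-in that looks words up in a small hard-coded dictionary (pairs taken from the vegetable names used in this notebook) instead of contacting any service.

```python
def translate_values(values, translate_fn):
    """Translate each string, calling translate_fn once per distinct value."""
    cache = {}
    result = []
    for value in values:
        if value not in cache:
            cache[value] = translate_fn(value)
        result.append(cache[value])
    return result


# Stand-in translator for illustration only; it performs no network request.
SAMPLE_PAIRS = {"गाजर": "carrot", "लहसुन": "Garlic", "अदरक": "Ginger"}


def fake_translate(text):
    return SAMPLE_PAIRS[text]


print(translate_values(["गाजर", "अदरक", "गाजर"], fake_translate))
# → ['carrot', 'Ginger', 'carrot']
```

With the library itself, `translate_fn` could presumably be something like `lambda text: Translator().translate(text, src='hi', dest='en').text`. That call contacts Google's servers and reflects an assumption about the 3.0.0 interface, so it is not run here.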

{

"cell_type": "markdown",

"metadata": {

"id": "Z3u32t703TYb"

},

"source": [

"\n",

"\n",

"---\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "5WfwlTR43UOb"

},

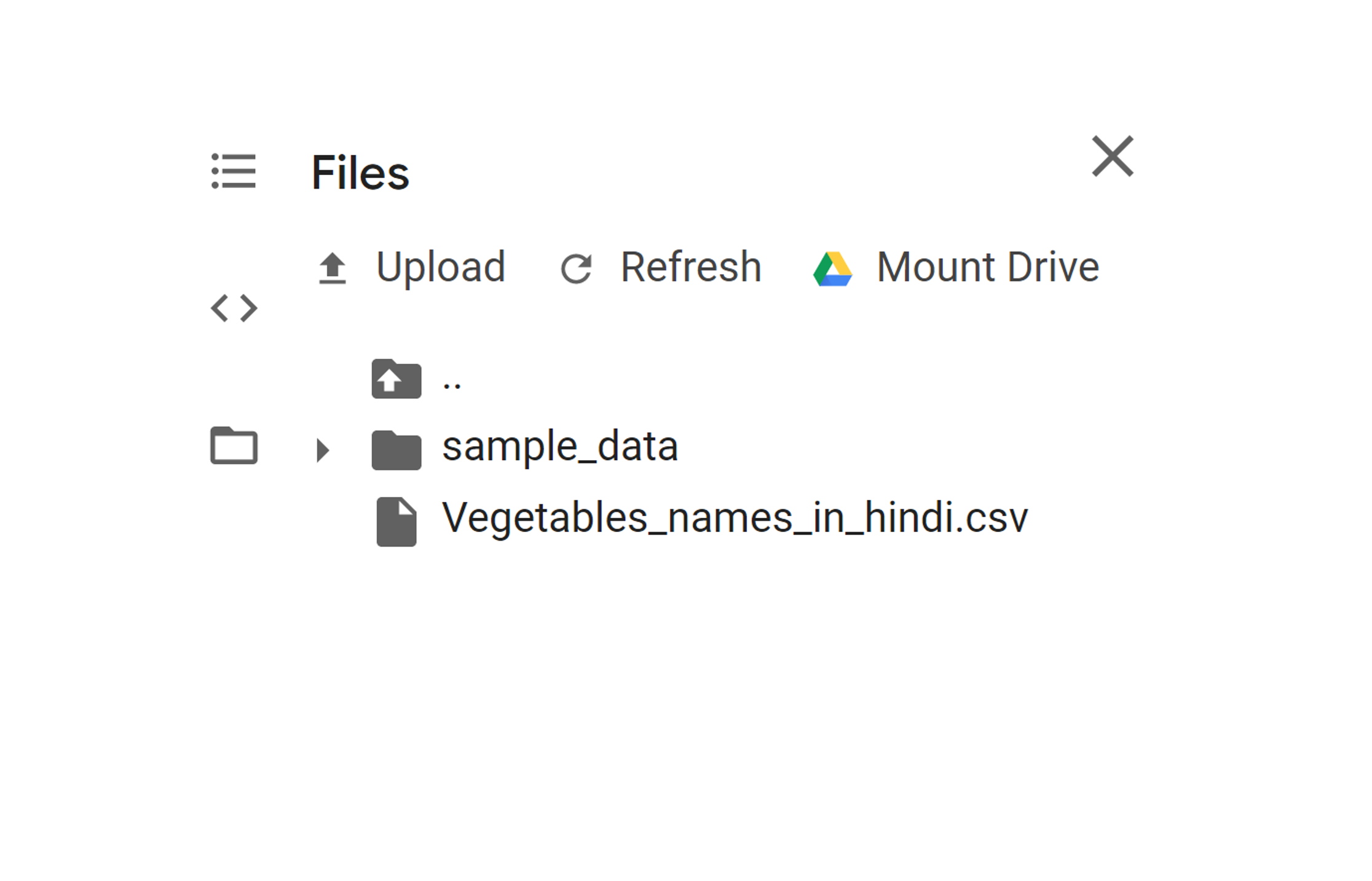

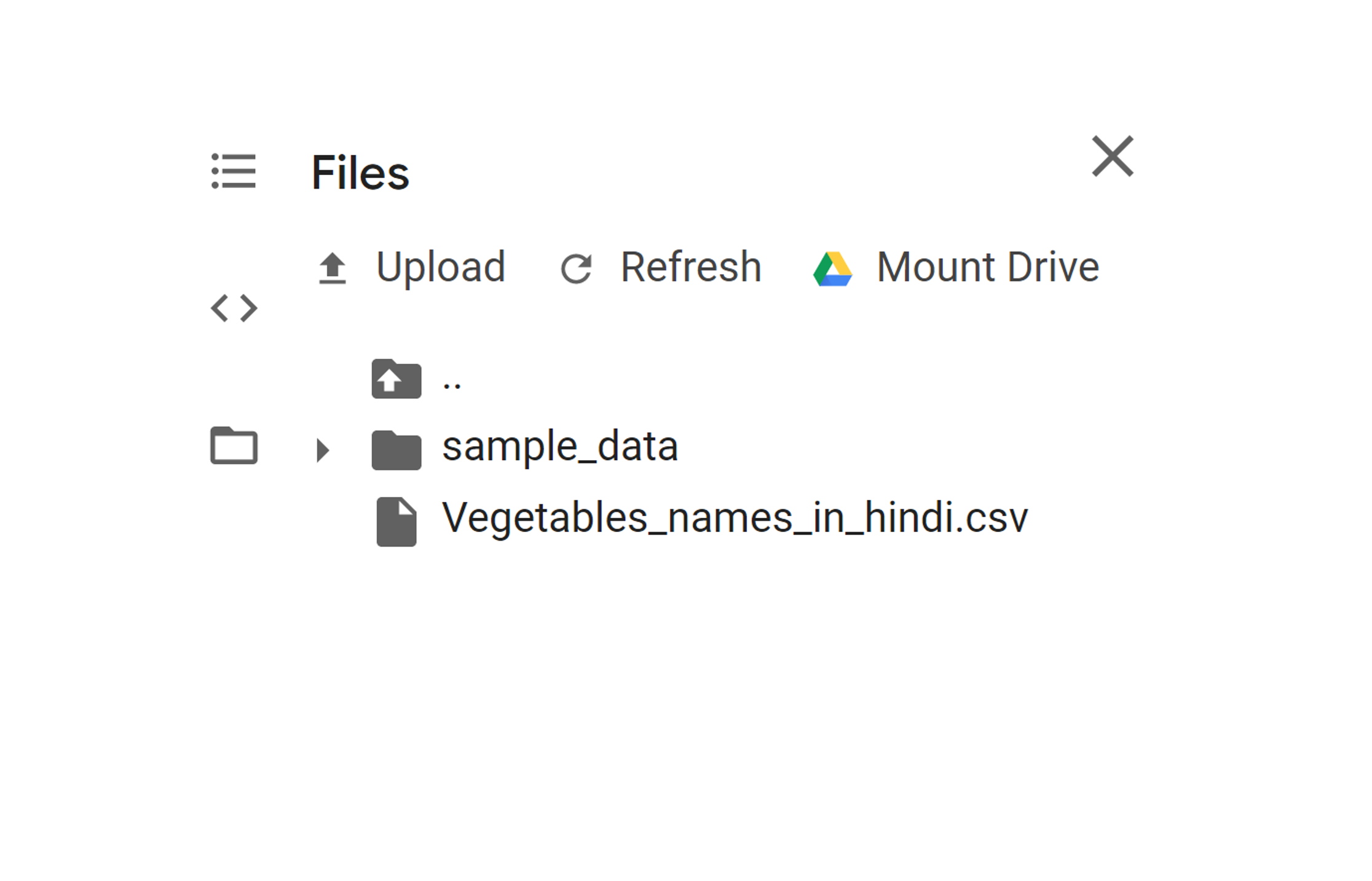

"source": [

"## **Storing the CSV file as a data frame**\n",

"\n",

"In this step, we will be storing the CSV file as a data frame with the help of pandas. For you to get the CSV file, click the link below:\n",

"\n",

"[Dataset download](https://drive.google.com/open?id=1UZl-vf7uBh80GaQFk1-kxsa_zI-_rwFm)\n",

"\n",

"\n",

"After downloading the CSV file, upload the file to Google Colab. There are 3 horizontal lines on the left-hand side, on hovering it prompts “Show table of contents”. After clicking go the “Files” tab and then press “Upload”. Then upload the CSV.\n",

"\n",

"\n",

"\n",

"\n",

"Now you have to read the CSV file and store it in a data frame. For clarity, I am displaying the first 10- rows of the data frame.\n"

]

},
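Hindi is written in Devanagari, so the file has to be decoded with the right text encoding for the names to display correctly. The sketch below uses the standard-library `csv` module rather than pandas, purely to stay dependency-free. It assumes the file is UTF-8 and has a single `Vegetable Names` column, matching the data frame displayed in this notebook; `SAMPLE_CSV` and `read_names` are hypothetical names, and the sample is an in-memory stand-in for the downloaded file.

```python
import csv
import io

# In-memory stand-in for the downloaded file (assumed layout: one header
# line followed by one vegetable name per line).
SAMPLE_CSV = "Vegetable Names\nगाजर\nशिमला मिर्च\nभिन्डी\n"


def read_names(text_stream, column="Vegetable Names"):
    """Return the values of one column from a CSV text stream."""
    reader = csv.DictReader(text_stream)
    return [row[column] for row in reader]


# For a file on disk, open it with an explicit encoding instead, e.g.
# open("Vegetables_names_in_hindi.csv", encoding="utf-8", newline="").
names = read_names(io.StringIO(SAMPLE_CSV))
print(names)
# → ['गाजर', 'शिमला मिर्च', 'भिन्डी']
```

`pd.read_csv` also accepts an `encoding` argument, which can be set explicitly if the names come out garbled.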

{

"cell_type": "code",

"metadata": {

"id": "IRTLLWcNqLvZ",

"outputId": "334edb00-b9ce-48d3-dfdc-ff08b4456d1c",

"colab": {

"base_uri": "https://localhost:8080/",

"height": 355

}

},

"source": [

"# Reading and storing the CSV file as a dataframe\n",

"df = pd.read_csv('Vegetables_names_in_hindi.csv')\n",

"df.head(10)"

],

"execution_count": 3,

"outputs": [

{

"output_type": "execute_result",

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" Vegetable Names | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" गाजर | \n",

"

\n",

" \n",

" | 1 | \n",

" शिमला मिर्च | \n",

"

\n",

" \n",

" | 2 | \n",

" भिन्डी | \n",

"

\n",

" \n",

" | 3 | \n",

" मक्का | \n",

"

\n",

" \n",

" | 4 | \n",

" लाल मिर्च | \n",

"

\n",

" \n",

" | 5 | \n",

" खीरा | \n",

"

\n",

" \n",

" | 6 | \n",

" कढ़ी पत्ता | \n",

"

\n",

" \n",

" | 7 | \n",

" बैगन | \n",

"

\n",

" \n",

" | 8 | \n",

" लहसुन | \n",

"

\n",

" \n",

" | 9 | \n",

" अदरक | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" Vegetable Names | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" carrot | \n",

"

\n",

" \n",

" | 1 | \n",

" capsicum | \n",

"

\n",

" \n",

" | 2 | \n",

" Lady finger | \n",

"

\n",

" \n",

" | 3 | \n",

" Corn | \n",

"

\n",

" \n",

" | 4 | \n",

" Red chilly | \n",

"

\n",

" \n",

" | 5 | \n",

" Cucumber | \n",

"

\n",

" \n",

" | 6 | \n",

" Curry leaves | \n",

"

\n",

" \n",

" | 7 | \n",

" Brinjal | \n",

"

\n",

" \n",

" | 8 | \n",

" Garlic | \n",

"

\n",

" \n",

" | 9 | \n",

" Ginger | \n",

"

\n",

" \n",

"

\n",

"