{

"cells": [

{

"cell_type": "markdown",

"id": "05c43ea9",

"metadata": {},

"source": [

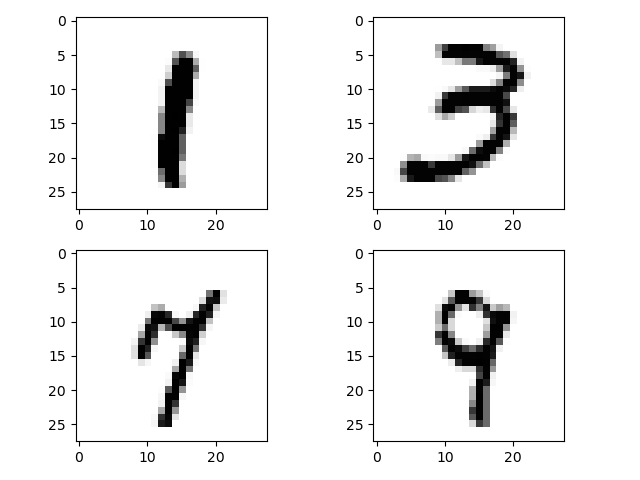

"## Tuning the hyperparameters of a neural network using EasyVVUQ\n",

"\n",

"In this tutorial we use the EasyVVUQ `Grid_Sampler` to perform a grid search over the hyperparameters of a simple Keras neural network model, trained to recognize handwritten digits. The training data is the well-known MNIST data set, of which 4 input features (each of size 28 x 28) are shown below. These are fed into a standard feed-forward neural network, which predicts the label 0-9.\n",

"\n",

"**Note**: This tutorial runs entirely on the localhost. To perform the grid search on a remote supercomputer instead, one option is to use FabSim; see the `hyperparameter_tuning_tutorial_with_fabsim.ipynb` notebook tutorial.\n",

"\n",

"The (Keras) neural network script is located in `mnist/keras_mnist.template`, which will form the input template for the EasyVVUQ encoder. We will assume you are familiar with the basic EasyVVUQ building blocks. If not, you can look at the [basic tutorial](https://github.com/UCL-CCS/EasyVVUQ/blob/dev/tutorials/basic_tutorial.ipynb)."

]

},

{

"cell_type": "markdown",

"id": "83545e38",

"metadata": {},

"source": [

""

]

},

{

"cell_type": "markdown",

"id": "bf467821",

"metadata": {},

"source": [

"We need EasyVVUQ, TensorFlow and the TensorFlow data sets to execute this tutorial. If you need to install these, uncomment the corresponding line below."

]

},

{

"cell_type": "code",

"execution_count": 1,

"id": "00f7ba08",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:14:45.139704Z",

"iopub.status.busy": "2025-07-20T07:14:45.138994Z",

"iopub.status.idle": "2025-07-20T07:14:45.147974Z",

"shell.execute_reply": "2025-07-20T07:14:45.147181Z",

"shell.execute_reply.started": "2025-07-20T07:14:45.139650Z"

}

},

"outputs": [],

"source": [

"# !pip install easyvvuq\n",

"# !pip install tensorflow\n",

"# !pip install tensorflow_datasets"

]

},

{

"cell_type": "code",

"execution_count": 2,

"id": "e7347053",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:14:47.183899Z",

"iopub.status.busy": "2025-07-20T07:14:47.183278Z",

"iopub.status.idle": "2025-07-20T07:14:48.619200Z",

"shell.execute_reply": "2025-07-20T07:14:48.618938Z",

"shell.execute_reply.started": "2025-07-20T07:14:47.183846Z"

}

},

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/chaospy/__init__.py:9: UserWarning: pkg_resources is deprecated as an API. See https://setuptools.pypa.io/en/latest/pkg_resources.html. The pkg_resources package is slated for removal as early as 2025-11-30. Refrain from using this package or pin to Setuptools<81.\n",

" import pkg_resources\n"

]

}

],

"source": [

"import easyvvuq as uq\n",

"import os\n",

"import numpy as np\n",

"from easyvvuq.actions import CreateRunDirectory, Encode, Decode, ExecuteLocal, Actions"

]

},

{

"cell_type": "markdown",

"id": "22672c4c",

"metadata": {},

"source": [

"We now set some flags:"

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "52064503",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:14:55.379391Z",

"iopub.status.busy": "2025-07-20T07:14:55.378482Z",

"iopub.status.idle": "2025-07-20T07:14:55.381942Z",

"shell.execute_reply": "2025-07-20T07:14:55.381468Z",

"shell.execute_reply.started": "2025-07-20T07:14:55.379366Z"

}

},

"outputs": [],

"source": [

"# Work directory, where the EasyVVUQ directory will be placed\n",

"WORK_DIR = '/tmp'\n",

"# target output filename generated by the code\n",

"TARGET_FILENAME = 'output.csv'\n",

"# EasyVVUQ campaign name\n",

"CAMPAIGN_NAME = 'grid_test'"

]

},

{

"cell_type": "markdown",

"id": "3cae997f",

"metadata": {},

"source": [

"As is standard in EasyVVUQ, we now define the parameter space, which here consists of 4 hyperparameters. There is one hidden layer with `n_neurons` neurons, and a Dropout layer after both the input and the hidden layer, with dropout probabilities `dropout_prob_in` and `dropout_prob_hidden` respectively. We made the `learning_rate` tuneable as well."

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "6a3a8a82",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:14:57.209052Z",

"iopub.status.busy": "2025-07-20T07:14:57.208447Z",

"iopub.status.idle": "2025-07-20T07:14:57.214996Z",

"shell.execute_reply": "2025-07-20T07:14:57.214364Z",

"shell.execute_reply.started": "2025-07-20T07:14:57.209009Z"

}

},

"outputs": [],

"source": [

"params = {}\n",

"params[\"n_neurons\"] = {\"type\":\"integer\", \"default\": 32}\n",

"params[\"dropout_prob_in\"] = {\"type\":\"float\", \"default\": 0.0}\n",

"params[\"dropout_prob_hidden\"] = {\"type\":\"float\", \"default\": 0.0}\n",

"params[\"learning_rate\"] = {\"type\":\"float\", \"default\": 0.001}"

]

},

{

"cell_type": "markdown",

"id": "7b41214c",

"metadata": {},

"source": [

"These 4 hyperparameters appear as flags in the input template `mnist/keras_mnist.template`. Typically such a template is generated from an input file used by some simulation code. In this case, however, `mnist/keras_mnist.template` is simply our Python script, with the hyperparameters replaced by flags. For instance:\n",

"\n",

"```python\n",

"model = tf.keras.models.Sequential([\n",

" tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

" tf.keras.layers.Dropout($dropout_prob_in),\n",

" tf.keras.layers.Dense($n_neurons, activation='relu'),\n",

" tf.keras.layers.Dropout($dropout_prob_hidden),\n",

" tf.keras.layers.Dense(10)\n",

"])\n",

"```\n",

"\n",

"is simply the neural network construction part, with flags for the dropout probabilities and the number of neurons in the hidden layer. The encoder reads the flags, replaces them with numeric values, and then writes the result to the file given by `target_filename`, here `hyper_param_tune.py`:"

]

},
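
{

"cell_type": "markdown",

"id": "c0de0001",

"metadata": {},

"source": [

"The substitution step can be illustrated with Python's standard-library `string.Template`, which uses the same `$name` flag syntax. This is only a sketch of the idea and not necessarily what `GenericEncoder` does internally; the one-line template and the value 64 are made up for illustration.\n",

"\n",

"```python\n",

"from string import Template\n",

"\n",

"# One line of a hypothetical template, with a $n_neurons flag\n",

"template_line = 'tf.keras.layers.Dense($n_neurons)'\n",

"\n",

"# Replace the flag with a concrete value for one run\n",

"encoded_line = Template(template_line).substitute(n_neurons=64)\n",

"print(encoded_line)\n",

"```"

]

},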

{

"cell_type": "code",

"execution_count": 5,

"id": "3ed08818",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:02.907656Z",

"iopub.status.busy": "2025-07-20T07:15:02.907223Z",

"iopub.status.idle": "2025-07-20T07:15:02.914530Z",

"shell.execute_reply": "2025-07-20T07:15:02.913674Z",

"shell.execute_reply.started": "2025-07-20T07:15:02.907615Z"

}

},

"outputs": [],

"source": [

"encoder = uq.encoders.GenericEncoder('./mnist/keras_mnist.template', target_filename='hyper_param_tune.py')"

]

},

{

"cell_type": "markdown",

"id": "02644574",

"metadata": {},

"source": [

"What follows are the standard steps in setting up an EasyVVUQ Campaign:"

]

},

{

"cell_type": "code",

"execution_count": 6,

"id": "a10d571c",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:06.966339Z",

"iopub.status.busy": "2025-07-20T07:15:06.966079Z",

"iopub.status.idle": "2025-07-20T07:15:07.004831Z",

"shell.execute_reply": "2025-07-20T07:15:07.004559Z",

"shell.execute_reply.started": "2025-07-20T07:15:06.966319Z"

}

},

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/cerberus/validator.py:618: UserWarning: These types are defined both with a method and in the'types_mapping' property of this validator: {'integer'}\n",

" warn(\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/cerberus/validator.py:618: UserWarning: These types are defined both with a method and in the'types_mapping' property of this validator: {'integer'}\n",

" warn(\n"

]

}

],

"source": [

"# execute the runs locally\n",

"execute = ExecuteLocal('python3 hyper_param_tune.py')\n",

"\n",

"# decode the output CSV files, with stored training and test accuracy values\n",

"output_columns = [\"accuracy_train\", \"accuracy_test\"]\n",

"decoder = uq.decoders.SimpleCSV(target_filename=TARGET_FILENAME, output_columns=output_columns)\n",

"\n",

"# actions are 1) create run dirs, 2) encode input template, 3) execute runs, 4) decode output files\n",

"actions = Actions(CreateRunDirectory(root=WORK_DIR, flatten=True), Encode(encoder), execute, Decode(decoder))\n",

"\n",

"# create the EasyVVUQ main campaign object\n",

"campaign = uq.Campaign(\n",

" name=CAMPAIGN_NAME,\n",

" work_dir=WORK_DIR,\n",

")\n",

"\n",

"# add the param definitions and actions to the campaign\n",

"campaign.add_app(\n",

" name=CAMPAIGN_NAME,\n",

" params=params,\n",

" actions=actions\n",

")"

]

},
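
{

"cell_type": "markdown",

"id": "c0de0002",

"metadata": {},

"source": [

"The `SimpleCSV` decoder reads the `output.csv` file from each run directory, so the generated script has to write a CSV file containing the two column names listed in `output_columns`. A minimal sketch of what such a writing step could look like is given below. The accuracy values are placeholders, the file is written to a scratch directory, and the actual `keras_mnist.template` may write its output differently.\n",

"\n",

"```python\n",

"import csv\n",

"import os\n",

"import tempfile\n",

"\n",

"# Placeholder accuracies; a real script would compute these from the trained model\n",

"results = {'accuracy_train': 0.97, 'accuracy_test': 0.96}\n",

"\n",

"# Write a one-row CSV file with a header line, in a scratch directory\n",

"run_dir = tempfile.mkdtemp()\n",

"csv_path = os.path.join(run_dir, 'output.csv')\n",

"with open(csv_path, 'w', newline='') as f:\n",

"    writer = csv.DictWriter(f, fieldnames=list(results))\n",

"    writer.writeheader()\n",

"    writer.writerow(results)\n",

"\n",

"# Read the file back to check the column names the decoder will look for\n",

"with open(csv_path, newline='') as f:\n",

"    rows = list(csv.DictReader(f))\n",

"print(rows)\n",

"```"

]

},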

{

"cell_type": "markdown",

"id": "bbbba5f8",

"metadata": {},

"source": [

"As with the uncertainty-quantification (UQ) samplers, the `vary` dict is used to select which of the `params` we actually vary. Unlike with the UQ samplers, we do not specify an input probability distribution. Since this is a grid search, we simply specify a list of values for each hyperparameter. Parameters that are not in `vary`, but that do have a flag in the template, are given the default value specified in `params`."

]

},

{

"cell_type": "code",

"execution_count": 7,

"id": "a3247048",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:16.423221Z",

"iopub.status.busy": "2025-07-20T07:15:16.422602Z",

"iopub.status.idle": "2025-07-20T07:15:16.427651Z",

"shell.execute_reply": "2025-07-20T07:15:16.426632Z",

"shell.execute_reply.started": "2025-07-20T07:15:16.423177Z"

}

},

"outputs": [],

"source": [

"vary = {\"n_neurons\": [64, 128], \"learning_rate\": [0.005, 0.01, 0.015]}"

]

},
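
{

"cell_type": "markdown",

"id": "c0de0003",

"metadata": {},

"source": [

"To make the role of the defaults concrete, here is a small plain-Python sketch (not EasyVVUQ code) of the full parameter set that one grid point corresponds to: values taken from `vary` override the defaults, and every other parameter keeps its default from `params`. The helper name `full_parameters` is made up for this illustration.\n",

"\n",

"```python\n",

"params = {\n",

"    'n_neurons': {'type': 'integer', 'default': 32},\n",

"    'dropout_prob_in': {'type': 'float', 'default': 0.0},\n",

"    'dropout_prob_hidden': {'type': 'float', 'default': 0.0},\n",

"    'learning_rate': {'type': 'float', 'default': 0.001},\n",

"}\n",

"\n",

"def full_parameters(point):\n",

"    # Start from the defaults, then override them with the varied values\n",

"    values = {name: spec['default'] for name, spec in params.items()}\n",

"    values.update(point)\n",

"    return values\n",

"\n",

"run = full_parameters({'n_neurons': 64, 'learning_rate': 0.005})\n",

"print(run)\n",

"```"

]

},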

{

"cell_type": "markdown",

"id": "612e912c",

"metadata": {},

"source": [

"**Note:** we are mixing integers and floats in the `vary` dict. Other data types (string, boolean) can also be used.\n",

"\n",

"The `vary` dict is passed to the `Grid_Sampler`. As can be seen, it creates a tensor product of all 1D points specified in `vary`. If a single tensor product is not useful (e.g. because it creates combinations of parameters that do not make sense), you can also pass a list of different `vary` dicts. For even more flexibility, you can write the required parameter combinations to a CSV file and pass it to the `CSV_Sampler` instead."

]

},

{

"cell_type": "code",

"execution_count": 8,

"id": "29e62d09",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:22.495815Z",

"iopub.status.busy": "2025-07-20T07:15:22.495173Z",

"iopub.status.idle": "2025-07-20T07:15:22.513378Z",

"shell.execute_reply": "2025-07-20T07:15:22.512898Z",

"shell.execute_reply.started": "2025-07-20T07:15:22.495762Z"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"There are 6 points:\n"

]

},

{

"data": {

"text/plain": [

"[array([[64, 0.005],\n",

" [64, 0.01],\n",

" [64, 0.015],\n",

" [128, 0.005],\n",

" [128, 0.01],\n",

" [128, 0.015]], dtype=object)]"

]

},

"execution_count": 8,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# create an instance of the Grid Sampler\n",

"sampler = uq.sampling.Grid_Sampler(vary)\n",

"\n",

"# Associate the sampler with the campaign\n",

"campaign.set_sampler(sampler)\n",

"\n",

"# print the points\n",

"print(\"There are %d points:\" % (sampler.n_samples()))\n",

"sampler.points"

]

},
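
{

"cell_type": "markdown",

"id": "c0de0004",

"metadata": {},

"source": [

"The six points above are the Cartesian (tensor) product of the two lists in `vary`. The same combinations can be reproduced with `itertools.product` from the standard library. This is a sketch for illustration only and is independent of how `Grid_Sampler` builds its points.\n",

"\n",

"```python\n",

"from itertools import product\n",

"\n",

"vary = {'n_neurons': [64, 128], 'learning_rate': [0.005, 0.01, 0.015]}\n",

"\n",

"# One dict per grid point: every n_neurons value paired with every learning_rate\n",

"points = [dict(zip(vary, combo)) for combo in product(*vary.values())]\n",

"print(len(points))\n",

"```"

]

},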

{

"cell_type": "markdown",

"id": "99c39b4b",

"metadata": {},

"source": [

"Run the `actions`. This creates the run directories, each containing its own `hyper_param_tune.py` file, and executes the runs:"

]

},

{

"cell_type": "code",

"execution_count": 9,

"id": "2095968a",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:31.290243Z",

"iopub.status.busy": "2025-07-20T07:15:31.289997Z",

"iopub.status.idle": "2025-07-20T07:15:44.630079Z",

"shell.execute_reply": "2025-07-20T07:15:44.629734Z",

"shell.execute_reply.started": "2025-07-20T07:15:31.290226Z"

}

},

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"/Volumes/UserData/dpc/GIT/EasyVVUQ/env_3.12/lib/python3.12/site-packages/keras/src/layers/reshaping/flatten.py:37: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.\n",

" super().__init__(**kwargs)\n",

"2025-07-20 09:15:35.505420: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n",

"2025-07-20 09:15:35.505447: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n",

"2025-07-20 09:15:35.505539: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n",

"2025-07-20 09:15:35.505632: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n",

"2025-07-20 09:15:35.505885: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n",

"2025-07-20 09:15:35.505924: I tensorflow/core/kernels/data/tf_record_dataset_op.cc:387] The default buffer size is 262144, which is overridden by the user specified `buffer_size` of 8388608\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m5s\u001b[0m 2ms/step - loss: 0.6675 - sparse_categorical_accuracy: 0.8001 - val_loss: 0.2092 - val_sparse_categorical_accuracy: 0.9369\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m5s\u001b[0m 2ms/step - loss: 0.8846 - sparse_categorical_accuracy: 0.7448 - val_loss: 0.2860 - val_sparse_categorical_accuracy: 0.9189\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m6s\u001b[0m 2ms/step - loss: 0.9380 - sparse_categorical_accuracy: 0.7193 - val_loss: 0.2912 - val_sparse_categorical_accuracy: 0.9184\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m6s\u001b[0m 2ms/step - loss: 0.7561 - sparse_categorical_accuracy: 0.7718 - val_loss: 0.2439 - val_sparse_categorical_accuracy: 0.9297\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m6s\u001b[0m 2ms/step - loss: 0.6991 - sparse_categorical_accuracy: 0.7860 - val_loss: 0.2195 - val_sparse_categorical_accuracy: 0.9373\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m6s\u001b[0m 3ms/step - loss: 0.7696 - sparse_categorical_accuracy: 0.7651 - val_loss: 0.2370 - val_sparse_categorical_accuracy: 0.9342\n",

"Epoch 2/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 2ms/step - loss: 0.2755 - sparse_categorical_accuracy: 0.9221 - val_loss: 0.2228 - val_sparse_categorical_accuracy: 0.9370\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 2ms/step - loss: 0.1879 - sparse_categorical_accuracy: 0.9460 - val_loss: 0.1433 - val_sparse_categorical_accuracy: 0.9592\n",

"Epoch 3/6\n",

"Epoch 3/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.2837 - sparse_categorical_accuracy: 0.9185 - val_loss: 0.2398 - val_sparse_categorical_accuracy: 0.9343\n",

"Epoch 3/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 986us/step - loss: 0.2392 - sparse_categorical_accuracy: 0.9322 - val_loss: 0.1889 - val_sparse_categorical_accuracy: 0.9437\n",

"Epoch 3/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 920us/step - loss: 0.2052 - sparse_categorical_accuracy: 0.9416 - val_loss: 0.1515 - val_sparse_categorical_accuracy: 0.9555\n",

"Epoch 3/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.2255 - sparse_categorical_accuracy: 0.9354 - val_loss: 0.1763 - val_sparse_categorical_accuracy: 0.9501\n",

"Epoch 3/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 913us/step - loss: 0.2342 - sparse_categorical_accuracy: 0.9331 - val_loss: 0.2041 - val_sparse_categorical_accuracy: 0.9422\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 916us/step - loss: 0.1874 - sparse_categorical_accuracy: 0.9467 - val_loss: 0.1601 - val_sparse_categorical_accuracy: 0.9516\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.2246 - sparse_categorical_accuracy: 0.9360 - val_loss: 0.1888 - val_sparse_categorical_accuracy: 0.9465\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1337 - sparse_categorical_accuracy: 0.9614 - val_loss: 0.1111 - val_sparse_categorical_accuracy: 0.9661\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 874us/step - loss: 0.1432 - sparse_categorical_accuracy: 0.9598 - val_loss: 0.1368 - val_sparse_categorical_accuracy: 0.9603\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1595 - sparse_categorical_accuracy: 0.9538 - val_loss: 0.1346 - val_sparse_categorical_accuracy: 0.9616\n",

"Epoch 4/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 909us/step - loss: 0.1967 - sparse_categorical_accuracy: 0.9446 - val_loss: 0.1798 - val_sparse_categorical_accuracy: 0.9478\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 893us/step - loss: 0.1515 - sparse_categorical_accuracy: 0.9558 - val_loss: 0.1423 - val_sparse_categorical_accuracy: 0.9584\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 949us/step - loss: 0.1171 - sparse_categorical_accuracy: 0.9663 - val_loss: 0.1099 - val_sparse_categorical_accuracy: 0.9675\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1825 - sparse_categorical_accuracy: 0.9486 - val_loss: 0.1656 - val_sparse_categorical_accuracy: 0.9511\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.0994 - sparse_categorical_accuracy: 0.9711 - val_loss: 0.1015 - val_sparse_categorical_accuracy: 0.9693\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 928us/step - loss: 0.1793 - sparse_categorical_accuracy: 0.9485 - val_loss: 0.1654 - val_sparse_categorical_accuracy: 0.9513\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 919us/step - loss: 0.1324 - sparse_categorical_accuracy: 0.9613 - val_loss: 0.1254 - val_sparse_categorical_accuracy: 0.9619\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1268 - sparse_categorical_accuracy: 0.9644 - val_loss: 0.1231 - val_sparse_categorical_accuracy: 0.9645\n",

"Epoch 5/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 917us/step - loss: 0.0954 - sparse_categorical_accuracy: 0.9726 - val_loss: 0.1015 - val_sparse_categorical_accuracy: 0.9695\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1617 - sparse_categorical_accuracy: 0.9549 - val_loss: 0.1515 - val_sparse_categorical_accuracy: 0.9555\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.0806 - sparse_categorical_accuracy: 0.9774 - val_loss: 0.0975 - val_sparse_categorical_accuracy: 0.9709\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 909us/step - loss: 0.1600 - sparse_categorical_accuracy: 0.9543 - val_loss: 0.1473 - val_sparse_categorical_accuracy: 0.9577\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 936us/step - loss: 0.1134 - sparse_categorical_accuracy: 0.9673 - val_loss: 0.1163 - val_sparse_categorical_accuracy: 0.9636\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1063 - sparse_categorical_accuracy: 0.9695 - val_loss: 0.1035 - val_sparse_categorical_accuracy: 0.9696\n",

"Epoch 6/6\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 897us/step - loss: 0.0829 - sparse_categorical_accuracy: 0.9761 - val_loss: 0.1022 - val_sparse_categorical_accuracy: 0.9682\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 564us/step - loss: 0.1442 - sparse_categorical_accuracy: 0.9584\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 575us/step - loss: 0.1023 - sparse_categorical_accuracy: 0.9703\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 579us/step - loss: 0.1455 - sparse_categorical_accuracy: 0.9584\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 573us/step - loss: 0.1154 - sparse_categorical_accuracy: 0.9640\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.1421 - sparse_categorical_accuracy: 0.9608 - val_loss: 0.1366 - val_sparse_categorical_accuracy: 0.9597\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m1s\u001b[0m 1ms/step - loss: 0.0748 - sparse_categorical_accuracy: 0.9787 - val_loss: 0.0862 - val_sparse_categorical_accuracy: 0.9736\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 551us/step - loss: 0.0812 - sparse_categorical_accuracy: 0.9755\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 454us/step - loss: 0.0996 - sparse_categorical_accuracy: 0.9687\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 1ms/step - loss: 0.0885 - sparse_categorical_accuracy: 0.9751 - val_loss: 0.0929 - val_sparse_categorical_accuracy: 0.9726\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 550us/step - loss: 0.1273 - sparse_categorical_accuracy: 0.9654\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 556us/step - loss: 0.0601 - sparse_categorical_accuracy: 0.9832\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 501us/step - loss: 0.1349 - sparse_categorical_accuracy: 0.9615\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 477us/step - loss: 0.0868 - sparse_categorical_accuracy: 0.9715\n",

"\u001b[1m469/469\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 466us/step - loss: 0.0764 - sparse_categorical_accuracy: 0.9796\n",

"\u001b[1m79/79\u001b[0m \u001b[32m━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[37m\u001b[0m \u001b[1m0s\u001b[0m 403us/step - loss: 0.0914 - sparse_categorical_accuracy: 0.9721\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"Epoch 1/6\n",

"469/469 [==============================] - 15s 13ms/step - loss: 0.4033 - sparse_categorical_accuracy: 0.8792 - val_loss: 0.2163 - val_sparse_categorical_accuracy: 0.9375\n",

"Epoch 2/6\n",

"469/469 [==============================] - 15s 9ms/step - loss: 0.4464 - sparse_categorical_accuracy: 0.8710 - val_loss: 0.2590 - val_sparse_categorical_accuracy: 0.9264\n",

"Epoch 2/6\n",

"469/469 [==============================] - 15s 13ms/step - loss: 0.5436 - sparse_categorical_accuracy: 0.8448 - val_loss: 0.2959 - val_sparse_categorical_accuracy: 0.9169\n",

"469/469 [==============================] - 16s 14ms/step - loss: 0.4242 - sparse_categorical_accuracy: 0.8773 - val_loss: 0.2251 - val_sparse_categorical_accuracy: 0.9334\n",

"Epoch 2/6\n",

"469/469 [==============================] - 16s 14ms/step - loss: 0.3825 - sparse_categorical_accuracy: 0.8884 - val_loss: 0.2041 - val_sparse_categorical_accuracy: 0.9407\n",

"Epoch 2/6\n",

"469/469 [==============================] - 16s 14ms/step - loss: 0.5121 - sparse_categorical_accuracy: 0.8543 - val_loss: 0.2868 - val_sparse_categorical_accuracy: 0.9208\n",

"296/469 [=================>............] - ETA: 0s - loss: 0.2019 - sparse_categorical_accuracy: 0.9404Epoch 2/6\n",

"469/469 [==============================] - 2s 5ms/step - loss: 0.2190 - sparse_categorical_accuracy: 0.9385 - val_loss: 0.1947 - val_sparse_categorical_accuracy: 0.9430\n",

"243/469 [==============>...............] - ETA: 1s - loss: 0.2207 - sparse_categorical_accuracy: 0.9359Epoch 3/6\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.1926 - sparse_categorical_accuracy: 0.9432 - val_loss: 0.1692 - val_sparse_categorical_accuracy: 0.9502\n",

"Epoch 3/6\n",

"469/469 [==============================] - 4s 8ms/step - loss: 0.2056 - sparse_categorical_accuracy: 0.9409 - val_loss: 0.1634 - val_sparse_categorical_accuracy: 0.9507\n",

"Epoch 3/6\n",

"469/469 [==============================] - 4s 8ms/step - loss: 0.1765 - sparse_categorical_accuracy: 0.9489 - val_loss: 0.1405 - val_sparse_categorical_accuracy: 0.9589\n",

"469/469 [==============================] - 4s 9ms/step - loss: 0.2690 - sparse_categorical_accuracy: 0.9233 - val_loss: 0.2342 - val_sparse_categorical_accuracy: 0.9324\n",

"Epoch 3/6\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.1725 - sparse_categorical_accuracy: 0.9514 - val_loss: 0.1574 - val_sparse_categorical_accuracy: 0.9550\n",

" 84/469 [====>.........................] - ETA: 2s - loss: 0.2292 - sparse_categorical_accuracy: 0.9323Epoch 4/6\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.1440 - sparse_categorical_accuracy: 0.9579 - val_loss: 0.1253 - val_sparse_categorical_accuracy: 0.9631\n",

"Epoch 4/6\n",

"221/469 [=============>................] - ETA: 1s - loss: 0.1635 - sparse_categorical_accuracy: 0.9525Epoch 3/6\n",

"113/469 [======>.......................] - ETA: 1s - loss: 0.1428 - sparse_categorical_accuracy: 0.9583Epoch 2/6\n",

"469/469 [==============================] - 4s 8ms/step - loss: 0.1552 - sparse_categorical_accuracy: 0.9557 - val_loss: 0.1408 - val_sparse_categorical_accuracy: 0.9578\n",

"455/469 [============================>.] - ETA: 0s - loss: 0.1411 - sparse_categorical_accuracy: 0.9602Epoch 4/6\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.2198 - sparse_categorical_accuracy: 0.9381 - val_loss: 0.1971 - val_sparse_categorical_accuracy: 0.9432\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.1407 - sparse_categorical_accuracy: 0.9603 - val_loss: 0.1350 - val_sparse_categorical_accuracy: 0.9623\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.1177 - sparse_categorical_accuracy: 0.9659 - val_loss: 0.1115 - val_sparse_categorical_accuracy: 0.9675\n",

"437/469 [==========================>...] - ETA: 0s - loss: 0.2782 - sparse_categorical_accuracy: 0.9216Epoch 5/6\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.1296 - sparse_categorical_accuracy: 0.9632 - val_loss: 0.1169 - val_sparse_categorical_accuracy: 0.9652\n",

" 58/469 [==>...........................] - ETA: 1s - loss: 0.1079 - sparse_categorical_accuracy: 0.9674Epoch 4/6\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.2760 - sparse_categorical_accuracy: 0.9222 - val_loss: 0.2362 - val_sparse_categorical_accuracy: 0.9323\n",

"361/469 [======================>.......] - ETA: 0s - loss: 0.1000 - sparse_categorical_accuracy: 0.9704Epoch 4/6\n",

"469/469 [==============================] - 2s 5ms/step - loss: 0.1241 - sparse_categorical_accuracy: 0.9643 - val_loss: 0.1146 - val_sparse_categorical_accuracy: 0.9661\n",

"Epoch 5/6\n",

"109/469 [=====>........................] - ETA: 1s - loss: 0.2055 - sparse_categorical_accuracy: 0.9414Epoch 5/6\n",

"469/469 [==============================] - 2s 4ms/step - loss: 0.1003 - sparse_categorical_accuracy: 0.9705 - val_loss: 0.1062 - val_sparse_categorical_accuracy: 0.9665\n",

"147/469 [========>.....................] - ETA: 1s - loss: 0.1988 - sparse_categorical_accuracy: 0.9439Epoch 6/6\n",

"119/469 [======>.......................] - ETA: 1s - loss: 0.1241 - sparse_categorical_accuracy: 0.9641Epoch 3/6\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.1030 - sparse_categorical_accuracy: 0.9703 - val_loss: 0.1046 - val_sparse_categorical_accuracy: 0.9687\n",

" 67/469 [===>..........................] - ETA: 2s - loss: 0.2337 - sparse_categorical_accuracy: 0.9356Epoch 5/6\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.1873 - sparse_categorical_accuracy: 0.9469 - val_loss: 0.1769 - val_sparse_categorical_accuracy: 0.9504\n",

"248/469 [==============>...............] - ETA: 1s - loss: 0.0810 - sparse_categorical_accuracy: 0.9773Epoch 5/6\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.1213 - sparse_categorical_accuracy: 0.9653 - val_loss: 0.1245 - val_sparse_categorical_accuracy: 0.9628\n",

"Epoch 6/6\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.0867 - sparse_categorical_accuracy: 0.9755 - val_loss: 0.0991 - val_sparse_categorical_accuracy: 0.9702\n",

"469/469 [==============================] - 3s 7ms/step - loss: 0.1038 - sparse_categorical_accuracy: 0.9709 - val_loss: 0.1101 - val_sparse_categorical_accuracy: 0.9670\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.2256 - sparse_categorical_accuracy: 0.9364 - val_loss: 0.2024 - val_sparse_categorical_accuracy: 0.9425\n",

"469/469 [==============================] - 3s 6ms/step - loss: 0.0848 - sparse_categorical_accuracy: 0.9758 - val_loss: 0.0951 - val_sparse_categorical_accuracy: 0.9703\n",

"469/469 [==============================] - 2s 3ms/step - loss: 0.0748 - sparse_categorical_accuracy: 0.9791\n",

"469/469 [==============================] - 2s 4ms/step - loss: 0.1070 - sparse_categorical_accuracy: 0.9691 - val_loss: 0.1089 - val_sparse_categorical_accuracy: 0.9682\n",

"Epoch 6/6\n",

"469/469 [==============================] - 2s 5ms/step - loss: 0.1628 - sparse_categorical_accuracy: 0.9542 - val_loss: 0.1559 - val_sparse_categorical_accuracy: 0.9545\n",

"Epoch 6/6\n",

"311/469 [==================>...........] - ETA: 0s - loss: 0.0950 - sparse_categorical_accuracy: 0.9729Epoch 4/6\n",

"79/79 [==============================] - 0s 3ms/step - loss: 0.0991 - sparse_categorical_accuracy: 0.9702\n",

" 84/469 [====>.........................] - ETA: 1s - loss: 0.2051 - sparse_categorical_accuracy: 0.9418Epoch 6/6\n",

"469/469 [==============================] - 1s 3ms/step - loss: 0.0933 - sparse_categorical_accuracy: 0.9735\n",

"469/469 [==============================] - 3s 5ms/step - loss: 0.0900 - sparse_categorical_accuracy: 0.9743 - val_loss: 0.0938 - val_sparse_categorical_accuracy: 0.9720\n",

"469/469 [==============================] - 3s 5ms/step - loss: 0.1441 - sparse_categorical_accuracy: 0.9596 - val_loss: 0.1390 - val_sparse_categorical_accuracy: 0.9595\n",

"79/79 [==============================] - 0s 3ms/step - loss: 0.1089 - sparse_categorical_accuracy: 0.9682\n",

"469/469 [==============================] - 2s 5ms/step - loss: 0.1951 - sparse_categorical_accuracy: 0.9449 - val_loss: 0.1784 - val_sparse_categorical_accuracy: 0.9480\n",

"Epoch 5/6\n",

"469/469 [==============================] - 3s 5ms/step - loss: 0.0724 - sparse_categorical_accuracy: 0.9797 - val_loss: 0.0847 - val_sparse_categorical_accuracy: 0.9726\n",

"469/469 [==============================] - 2s 3ms/step - loss: 0.1309 - sparse_categorical_accuracy: 0.9635\n",

"79/79 [==============================] - 0s 3ms/step - loss: 0.1390 - sparse_categorical_accuracy: 0.9595\n",

"460/469 [============================>.] - ETA: 0s - loss: 0.1721 - sparse_categorical_accuracy: 0.9512"

]

}

],

"source": [

"###############################\n",

"# execute the defined actions #\n",

"###############################\n",

"\n",

"campaign.execute().collate()"

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "0b55725a",

"metadata": {

"execution": {

"iopub.execute_input": "2025-07-20T07:15:59.299460Z",

"iopub.status.busy": "2025-07-20T07:15:59.298837Z",

"iopub.status.idle": "2025-07-20T07:15:59.329418Z",

"shell.execute_reply": "2025-07-20T07:15:59.329117Z",

"shell.execute_reply.started": "2025-07-20T07:15:59.299409Z"

}

},

"outputs": [

{

"data": {

"text/html": [

"<table>\n",

"  <thead>\n",

"    <tr><th></th><th>run_id</th><th>iteration</th><th>n_neurons</th><th>learning_rate</th><th>dropout_prob_in</th><th>dropout_prob_hidden</th><th>accuracy_train</th><th>accuracy_test</th></tr>\n",

"    <tr><th></th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th></tr>\n",

"  </thead>\n",

"  <tbody>\n",

"    <tr><th>0</th><td>1</td><td>0</td><td>64</td><td>0.005</td><td>0.0</td><td>0.0</td><td>0.959450</td><td>0.9577</td></tr>\n",

"    <tr><th>1</th><td>2</td><td>0</td><td>64</td><td>0.010</td><td>0.0</td><td>0.0</td><td>0.970083</td><td>0.9636</td></tr>\n",

"    <tr><th>2</th><td>3</td><td>0</td><td>64</td><td>0.015</td><td>0.0</td><td>0.0</td><td>0.976750</td><td>0.9682</td></tr>\n",

"    <tr><th>3</th><td>4</td><td>0</td><td>128</td><td>0.005</td><td>0.0</td><td>0.0</td><td>0.965550</td><td>0.9597</td></tr>\n",

"    <tr><th>4</th><td>5</td><td>0</td><td>128</td><td>0.010</td><td>0.0</td><td>0.0</td><td>0.978967</td><td>0.9726</td></tr>\n",

"    <tr><th>5</th><td>6</td><td>0</td><td>128</td><td>0.015</td><td>0.0</td><td>0.0</td><td>0.982950</td><td>0.9736</td></tr>\n",

"  </tbody>\n",

"</table>\n",

"\n",

"<table>\n",

"  <thead>\n",

"    <tr><th></th><th>n_neurons</th><th>learning_rate</th></tr>\n",

"    <tr><th></th><th>0</th><th>0</th></tr>\n",

"  </thead>\n",

"  <tbody>\n",

"    <tr><th>5</th><td>128</td><td>0.015</td></tr>\n",

"  </tbody>\n",

"</table>\n",

"