{

"cells": [

{

"cell_type": "markdown",

"id": "05c43ea9",

"metadata": {},

"source": [

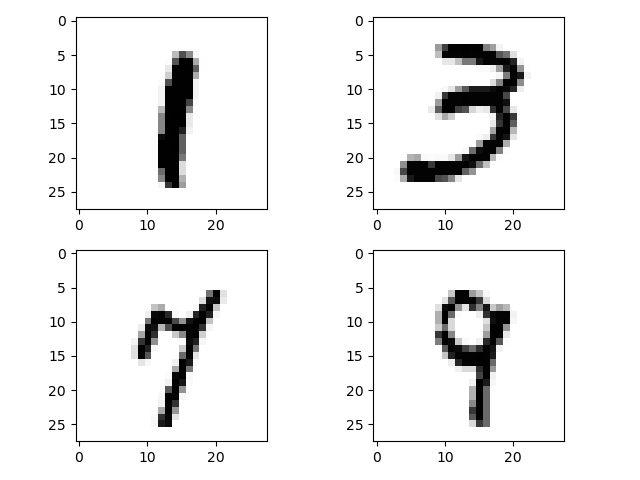

"## Tuning the hyperparameters of a neural network using EasyVVUQ and FabSim3\n",

"\n",

"In this tutorial we will use the EasyVVUQ `GridSampler` to perform a grid search on the hyperparameters of a simple Keras neural network model, trained to recognize hand-written digits. This is the famous MNIST data set, of which 4 input features (of size 28 x 28) are show below. These are fed into a standard feed-forward neural network, which will predict the label 0-9.\n",

"\n",

"The (Keras) neural network script is located in `mnist/keras_mnist.template`, which will form the input template for the EasyVVUQ encoder. We will assume you are familiar with the basic EasyVVUQ building blocks. If not, you can look at the [basic tutorial](https://github.com/UCL-CCS/EasyVVUQ/blob/dev/tutorials/basic_tutorial.ipynb)."

]

},

{

"cell_type": "markdown",

"id": "83545e38",

"metadata": {},

"source": [

""

]

},

{

"cell_type": "markdown",

"id": "bf467821",

"metadata": {},

"source": [

"We need EasyVVUQ, TensorFlow and the TensorFlow data sets to execute this tutorial. If you need to install these, uncomment the corresponding line below."

]

},

{

"cell_type": "code",

"execution_count": 1,

"id": "00f7ba08",

"metadata": {},

"outputs": [],

"source": [

"# !pip install easyvvuq\n",

"# !pip install tensorflow\n",

"# !pip install tensorflow_datasets"

]

},

{

"cell_type": "markdown",

"id": "8d5c7fde",

"metadata": {},

"source": [

"### FabSim3\n",

"\n",

"Although the ensemble runs on the localhost here, we will use the [FabSim3](https://github.com/djgroen/FabSim3) automation toolkit for the data processing workflow, i.e. to move the UQ ensemble to and from the execution host. To connect EasyVVUQ with FabSim3, the [FabUQCampaign](https://github.com/wedeling/FabUQCampaign) plugin must be installed.\n",
"\n",
"The advantage of this construction is that the same script can offload the ensemble to a remote supercomputer by simply changing the `MACHINE='localhost'` flag, provided that FabSim3 is set up on the remote resource.\n",

"\n",

"For an example **without FabSim3**, see `tutorials/hyperparameter_tuning_tutorial.ipynb`.\n",

"\n",

"For now, import the required libraries below. `fabsim3_cmd_api` is an interface to FabSim3 that allows FabSim3 command-line commands to be executed from a Python script. It is stored locally in `fabsim3_cmd_api.py`."

]

},

{

"cell_type": "code",

"execution_count": 2,

"id": "e7347053",

"metadata": {},

"outputs": [],

"source": [

"import easyvvuq as uq\n",

"import os\n",

"import numpy as np\n",

"\n",

"############################################\n",

"# Import the FabSim3 commandline interface #\n",

"############################################\n",

"import fabsim3_cmd_api as fab"

]

},
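{
"cell_type": "markdown",
"id": "1c7e2a90",
"metadata": {},
"source": [
"`fabsim3_cmd_api` prints every FabSim3 command it executes (see the `Executing fabsim localhost ...` lines in the outputs further down). As a rough illustration of what such a wrapper could do, the sketch below assembles a command string of the form `fabsim <machine> <task>:<arg>,<key>=<value>,...`. The helper `build_fabsim_cmd` is hypothetical and not part of `fabsim3_cmd_api`, which may build and run its commands differently; a real wrapper would also have to hand the string to a shell, for instance via `subprocess.run`.\n",
"\n",
"```python\n",
"def build_fabsim_cmd(machine, task, *args, **kwargs):\n",
"    # Hypothetical helper: positional arguments come first, followed by\n",
"    # key=value pairs, all joined by commas after 'task:'\n",
"    parts = [str(arg) for arg in args]\n",
"    parts += ['%s=%s' % (key, value) for key, value in kwargs.items()]\n",
"    cmd = 'fabsim %s %s' % (machine, task)\n",
"    if parts:\n",
"        cmd += ':' + ','.join(parts)\n",
"    return cmd\n",
"\n",
"\n",
"cmd = build_fabsim_cmd('localhost', 'run_uq_ensemble', 'grid_search',\n",
"                       campaign_dir='/tmp/grid_test', script='grid_search',\n",
"                       skip=0, PJ=False)\n",
"print(cmd)\n",
"# fabsim localhost run_uq_ensemble:grid_search,campaign_dir=/tmp/grid_test,script=grid_search,skip=0,PJ=False\n",
"print(build_fabsim_cmd('localhost', 'fetch_results'))\n",
"# fabsim localhost fetch_results\n",
"```"
]
},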

{

"cell_type": "markdown",

"id": "22672c4c",

"metadata": {},

"source": [

"We now set some flags:"

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "52064503",

"metadata": {},

"outputs": [],

"source": [

"# Work directory, where the EasyVVUQ directory will be placed\n",

"WORK_DIR = '/tmp'\n",

"# machine to run ensemble on\n",

"MACHINE = \"localhost\"\n",

"# target output filename generated by the code\n",

"TARGET_FILENAME = 'output.csv'\n",

"# EasyVVUQ campaign name\n",

"CAMPAIGN_NAME = 'grid_test'\n",

"\n",

"# FabSim3 config name\n",

"CONFIG = 'grid_search'\n",

"# Use QCG PilotJob or not\n",

"PILOT_JOB = False"

]

},

{

"cell_type": "markdown",

"id": "898a0d57",

"metadata": {},

"source": [

"Most of these are self-explanatory. `CONFIG` is the name of the FabSim3 config directory (`config_files/grid_search`). The script that gets executed for each sample, also named `grid_search` and passed via the `script` argument of `fab.run_uq_ensemble` below, is located in `FabUQCampaign/templates/grid_search`. It essentially just runs our Python code `hyper_param_tune.py`:"

]

},

{

"cell_type": "markdown",

"id": "a229e7b9",

"metadata": {},

"source": [

"```\n",

"cd $job_results\n",

"$run_prefix\n",

"\n",

"/usr/bin/env > env.log\n",

"\n",

"python3 hyper_param_tune.py\n",

"```"

]

},

{

"cell_type": "markdown",

"id": "5c0c006f",

"metadata": {},

"source": [

"Here, `hyper_param_tune.py` is generated by the EasyVVUQ encoder, see below. The flag `PILOT_JOB` controls the use of the QCG PilotJob mechanism. If `True`, FabSim3 submits the ensemble to the (remote) host as a QCG PilotJob, which essentially means that all individual jobs of the ensemble are packaged into a single job allocation. This circumvents the limit on the maximum number of simultaneous jobs that is present on many supercomputers. For more information, see the [QCG PilotJob repository](https://github.com/vecma-project/QCG-PilotJob). In this example we run the samples on the localhost (see `MACHINE`), and hence we set `PILOT_JOB=False`.\n",
"\n",
"As is standard in EasyVVUQ, we now define the parameter space, which here consists of four hyperparameters. The network has one hidden layer with `n_neurons` neurons, and a Dropout layer after both the input and the hidden layer, with dropout probabilities `dropout_prob_in` and `dropout_prob_hidden` respectively. The `learning_rate` is tunable as well."

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "6a3a8a82",

"metadata": {},

"outputs": [],

"source": [

"params = {}\n",

"params[\"n_neurons\"] = {\"type\":\"integer\", \"default\": 32}\n",

"params[\"dropout_prob_in\"] = {\"type\":\"float\", \"default\": 0.0}\n",

"params[\"dropout_prob_hidden\"] = {\"type\":\"float\", \"default\": 0.0}\n",

"params[\"learning_rate\"] = {\"type\":\"float\", \"default\": 0.001}"

]

},

{

"cell_type": "markdown",

"id": "7b41214c",

"metadata": {},

"source": [

"These four hyperparameters appear as flags in the input template `mnist/keras_mnist.template`. Typically, an input template is derived from the input file of some simulation code. In this case, however, `mnist/keras_mnist.template` is our Python script itself, with the hyperparameters replaced by flags. For instance:\n",

"\n",

"```python\n",

"model = tf.keras.models.Sequential([\n",

" tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

" tf.keras.layers.Dropout($dropout_prob_in),\n",

" tf.keras.layers.Dense($n_neurons, activation='relu'),\n",

" tf.keras.layers.Dropout($dropout_prob_hidden),\n",

" tf.keras.layers.Dense(10)\n",

"])\n",

"```\n",

"\n",

"is simply the neural network construction part, with flags for the dropout probabilities and the number of neurons in the hidden layer. The encoder reads the flags, replaces them with numeric values, and writes the result to `target_filename`, here `hyper_param_tune.py`:"

]

},
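{
"cell_type": "markdown",
"id": "2d8f3b01",
"metadata": {},
"source": [
"The substitution step can be mimicked with Python's standard `string.Template`, which uses the same `$name` placeholder syntax as the template. This is a minimal sketch for intuition only; we assume, without claiming, that `GenericEncoder` does something similar internally. Note that `substitute` raises a `KeyError` for any flag without a value, which illustrates why it matters that every flag in the template receives a value.\n",
"\n",
"```python\n",
"from string import Template\n",
"\n",
"# Fragment of a template with $-flags, similar to mnist/keras_mnist.template\n",
"template_text = '''model = tf.keras.models.Sequential([\n",
"    tf.keras.layers.Flatten(input_shape=(28, 28)),\n",
"    tf.keras.layers.Dropout($dropout_prob_in),\n",
"    tf.keras.layers.Dense($n_neurons, activation='relu'),\n",
"    tf.keras.layers.Dropout($dropout_prob_hidden),\n",
"    tf.keras.layers.Dense(10)\n",
"])'''\n",
"\n",
"# Values for one sample; in EasyVVUQ these come from the sampler and the defaults\n",
"values = {'n_neurons': 64, 'dropout_prob_in': 0.0, 'dropout_prob_hidden': 0.0}\n",
"\n",
"rendered = Template(template_text).substitute(values)\n",
"print(rendered)  # the flags are replaced by 0.0, 64 and 0.0\n",
"\n",
"try:\n",
"    Template(template_text).substitute({'n_neurons': 64})\n",
"except KeyError as err:\n",
"    print('missing value for flag:', err)  # missing value for flag: 'dropout_prob_in'\n",
"```"
]
},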

{

"cell_type": "code",

"execution_count": 5,

"id": "3ed08818",

"metadata": {},

"outputs": [],

"source": [

"encoder = uq.encoders.GenericEncoder('./mnist/keras_mnist.template', target_filename='hyper_param_tune.py')"

]

},

{

"cell_type": "markdown",

"id": "02644574",

"metadata": {},

"source": [

"Now we create the first set of EasyVVUQ `actions` to create separate run directories and to encode the template:"

]

},

{

"cell_type": "code",

"execution_count": 6,

"id": "a10d571c",

"metadata": {},

"outputs": [],

"source": [

"# actions: create directories and encode input template, placing 1 hyper_param_tune.py file in each directory.\n",

"actions = uq.actions.Actions(\n",

" uq.actions.CreateRunDirectory(root=WORK_DIR, flatten=True),\n",

" uq.actions.Encode(encoder),\n",

")\n",

"\n",

"# create the EasyVVUQ main campaign object\n",

"campaign = uq.Campaign(\n",

" name=CAMPAIGN_NAME,\n",

" work_dir=WORK_DIR,\n",

")\n",

"\n",

"# add the param definitions and actions to the campaign\n",

"campaign.add_app(\n",

" name=CAMPAIGN_NAME,\n",

" params=params,\n",

" actions=actions\n",

")"

]

},

{

"cell_type": "markdown",

"id": "bbbba5f8",

"metadata": {},

"source": [

"As with the uncertainty-quantification (UQ) samplers, the `vary` dict selects which of the `params` we actually vary. Unlike the UQ samplers, however, we do not specify an input probability distribution. This being a grid search, we simply specify a list of values for each hyperparameter. Parameters that are not in `vary`, but do have a flag in the template, are given the default value specified in `params`."

]

},

{

"cell_type": "code",

"execution_count": 7,

"id": "a3247048",

"metadata": {},

"outputs": [],

"source": [

"vary = {\"n_neurons\": [64, 128], \"learning_rate\": [0.005, 0.01, 0.015]}"

]

},
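{
"cell_type": "markdown",
"id": "3e9a4c12",
"metadata": {},
"source": [
"To make the role of the defaults concrete, the following minimal sketch (not EasyVVUQ's implementation) builds the full parameter set of a single run: start from the `default` of every entry in `params`, then overwrite the hyperparameters that are varied. The helper `fill_defaults` is hypothetical, and the parameter definitions are repeated under the name `demo_params` so that the snippet is self-contained.\n",
"\n",
"```python\n",
"# Parameter definitions as in `params` above, repeated for self-containment\n",
"demo_params = {\n",
"    'n_neurons': {'type': 'integer', 'default': 32},\n",
"    'dropout_prob_in': {'type': 'float', 'default': 0.0},\n",
"    'dropout_prob_hidden': {'type': 'float', 'default': 0.0},\n",
"    'learning_rate': {'type': 'float', 'default': 0.001},\n",
"}\n",
"\n",
"\n",
"def fill_defaults(param_defs, varied_values):\n",
"    # Start from the default value of every parameter ...\n",
"    run_params = {name: spec['default'] for name, spec in param_defs.items()}\n",
"    # ... and overwrite the ones that are varied in this particular run\n",
"    run_params.update(varied_values)\n",
"    return run_params\n",
"\n",
"\n",
"print(fill_defaults(demo_params, {'n_neurons': 64, 'learning_rate': 0.005}))\n",
"# {'n_neurons': 64, 'dropout_prob_in': 0.0, 'dropout_prob_hidden': 0.0, 'learning_rate': 0.005}\n",
"```"
]
},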

{

"cell_type": "markdown",

"id": "612e912c",

"metadata": {},

"source": [

"**Note:** we are mixing integers and floats in the `vary` dict. Other data types (string, boolean) can also be used.\n",
"\n",
"The `vary` dict is passed to the `Grid_Sampler`, which creates the tensor product of all 1D points specified in `vary`, as the printed points below show. If a single tensor product is not useful (e.g. because it creates parameter combinations that do not make sense), you can also pass a list of different `vary` dicts. For even more flexibility, you can write the required parameter combinations to a CSV file and pass it to the `CSV_Sampler` instead."

]

},

{

"cell_type": "code",

"execution_count": 8,

"id": "29e62d09",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"There are 6 points:\n"

]

},

{

"data": {

"text/plain": [

"[array([[64, 0.005],\n",

" [64, 0.01],\n",

" [64, 0.015],\n",

" [128, 0.005],\n",

" [128, 0.01],\n",

" [128, 0.015]], dtype=object)]"

]

},

"execution_count": 8,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# create an instance of the Grid Sampler\n",

"sampler = uq.sampling.Grid_Sampler(vary)\n",

"\n",

"# Associate the sampler with the campaign\n",

"campaign.set_sampler(sampler)\n",

"\n",

"# print the points\n",

"print(\"There are %d points:\" % (sampler.n_samples()))\n",

"sampler.points"

]

},
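{
"cell_type": "markdown",
"id": "4fab5d23",
"metadata": {},
"source": [
"For intuition, the six points above can be reproduced with `itertools.product`, which, like the printed `sampler.points`, varies the last parameter fastest. The sketch below is not the `Grid_Sampler` source; `grid_points` is a hypothetical helper that also shows what we assume passing a list of `vary` dicts amounts to conceptually, namely concatenating several smaller grids. The values in the second example are made up for illustration.\n",
"\n",
"```python\n",
"from itertools import product\n",
"\n",
"\n",
"def grid_points(vary):\n",
"    # Accept a single vary dict or a list of vary dicts. Each dict contributes\n",
"    # the tensor product of its 1D value lists; the grids are concatenated.\n",
"    if isinstance(vary, dict):\n",
"        vary = [vary]\n",
"    points = []\n",
"    for grid in vary:\n",
"        names = list(grid.keys())\n",
"        for combo in product(*grid.values()):\n",
"            points.append(dict(zip(names, combo)))\n",
"    return points\n",
"\n",
"\n",
"pts = grid_points({'n_neurons': [64, 128], 'learning_rate': [0.005, 0.01, 0.015]})\n",
"print(len(pts))  # 6\n",
"print(pts[0])    # {'n_neurons': 64, 'learning_rate': 0.005}\n",
"\n",
"# Two separate grids instead of one tensor product (illustrative values)\n",
"pts2 = grid_points([\n",
"    {'n_neurons': [64], 'learning_rate': [0.005, 0.01]},\n",
"    {'n_neurons': [128], 'learning_rate': [0.015]},\n",
"])\n",
"print(len(pts2))  # 3\n",
"```"
]
},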

{

"cell_type": "markdown",

"id": "99c39b4b",

"metadata": {},

"source": [

"Run the `actions`, i.e. create the run directories, each containing a `hyper_param_tune.py` file:"

]

},

{

"cell_type": "code",

"execution_count": 9,

"id": "2095968a",

"metadata": {},

"outputs": [],

"source": [

"###############################\n",

"# execute the defined actions #\n",

"###############################\n",

"\n",

"campaign.execute().collate()"

]

},

{

"cell_type": "markdown",

"id": "b149dd32",

"metadata": {},

"source": [

"To run the ensemble, execute:"

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "7b9cb1b8",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Executing fabsim localhost run_uq_ensemble:grid_search,campaign_dir=/tmp/grid_testrebm6ntq,script=grid_search,skip=0,PJ=False\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"2023-03-02 11:35:56.557670: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA\n",

"To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.\n",

"2023-03-02 11:35:56.725197: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory\n",

"2023-03-02 11:35:56.725224: I tensorflow/compiler/xla/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.\n",

"2023-03-02 11:35:57.644413: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory\n",

"2023-03-02 11:35:57.644488: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory\n",

"2023-03-02 11:35:57.644497: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.\n",

"2023-03-02 11:35:59.393841: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory\n",

"2023-03-02 11:35:59.393866: W tensorflow/compiler/xla/stream_executor/cuda/cuda_driver.cc:265] failed call to cuInit: UNKNOWN ERROR (303)\n",

"2023-03-02 11:35:59.393886: I tensorflow/compiler/xla/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (wouter-XPS-13-7390): /proc/driver/nvidia/version does not exist\n",

"2023-03-02 11:35:59.394178: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA\n",

"To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.\n"
]
},

{

"data": {

"text/plain": [

"True"

]

},

"execution_count": 10,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"###################################################\n",

"# run the UQ ensemble using the FabSim3 interface #\n",

"###################################################\n",

"\n",

"fab.run_uq_ensemble(CONFIG, campaign.campaign_dir, script='grid_search',\n",

" machine=MACHINE, PJ=PILOT_JOB)\n",

"\n",

"# wait for job to complete\n",

"fab.wait(machine=MACHINE)"

]

},
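{
"cell_type": "markdown",
"id": "5abc6e34",
"metadata": {},
"source": [
"`fab.wait` is described below as polling the job status on the remote host every minute and sleeping in between. A generic polling loop of that kind is sketched here; `wait_until` and `fake_job_done` are hypothetical stand-ins, and how FabSim3 actually queries the job status on a remote machine is not shown.\n",
"\n",
"```python\n",
"import time\n",
"\n",
"\n",
"def wait_until(is_done, interval=60.0, timeout=None):\n",
"    # Call is_done() every `interval` seconds until it returns True.\n",
"    # Returns True when done, or False once `timeout` seconds have passed.\n",
"    start = time.monotonic()\n",
"    while not is_done():\n",
"        if timeout is not None and time.monotonic() - start >= timeout:\n",
"            return False\n",
"        time.sleep(interval)\n",
"    return True\n",
"\n",
"\n",
"# Usage with a fake job that reports completion on the third check\n",
"checks = {'n': 0}\n",
"\n",
"\n",
"def fake_job_done():\n",
"    checks['n'] += 1\n",
"    return checks['n'] >= 3\n",
"\n",
"\n",
"print(wait_until(fake_job_done, interval=0.01))  # True\n",
"print(checks['n'])  # 3\n",
"```"
]
},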

{

"cell_type": "code",

"execution_count": 11,

"id": "9d2c0ddb",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Executing fabsim localhost fetch_results\n",

"Executing fabsim localhost verify_last_ensemble:grid_search,campaign_dir=/tmp/grid_testrebm6ntq,target_filename=output.csv,machine=localhost\n"

]

}

],

"source": [

"# check if all output files are retrieved from the remote machine, returns a Boolean flag\n",

"all_good = fab.verify(CONFIG, campaign.campaign_dir, TARGET_FILENAME, machine=MACHINE)"

]

},
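{
"cell_type": "markdown",
"id": "6bcd7f45",
"metadata": {},
"source": [
"Conceptually, the verification step boils down to checking, for every run directory, whether `TARGET_FILENAME` exists; the runs without it are the ones that would need resubmitting. The sketch below does this with `pathlib`, assuming a hypothetical layout with one subdirectory per run. `split_runs` is not part of `fabsim3_cmd_api`, and the actual FabSim3 results layout and the logic of `fab.verify` may differ.\n",
"\n",
"```python\n",
"import tempfile\n",
"from pathlib import Path\n",
"\n",
"\n",
"def split_runs(results_dir, target_filename):\n",
"    # Assumed layout: one subdirectory per run inside results_dir. A run counts\n",
"    # as successful if its directory contains target_filename.\n",
"    succeeded, failed = [], []\n",
"    run_dirs = sorted(p for p in Path(results_dir).iterdir() if p.is_dir())\n",
"    for run_dir in run_dirs:\n",
"        if (run_dir / target_filename).is_file():\n",
"            succeeded.append(run_dir.name)\n",
"        else:\n",
"            failed.append(run_dir.name)\n",
"    return succeeded, failed\n",
"\n",
"\n",
"# Usage: three fake runs, of which run_2 did not produce output.csv\n",
"with tempfile.TemporaryDirectory() as tmp:\n",
"    for name in ['run_1', 'run_2', 'run_3']:\n",
"        (Path(tmp) / name).mkdir()\n",
"    for name in ['run_1', 'run_3']:\n",
"        (Path(tmp) / name / 'output.csv').write_text('accuracy_train,accuracy_test')\n",
"    ok, bad = split_runs(tmp, 'output.csv')\n",
"\n",
"print(ok, bad)  # ['run_1', 'run_3'] ['run_2']\n",
"```"
]
},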

{

"cell_type": "code",

"execution_count": 12,

"id": "c2b9838b",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Executing fabsim localhost get_uq_samples:grid_search,campaign_dir=/tmp/grid_testrebm6ntq,number_of_samples=6,skip=0\n"

]

}

],

"source": [

"if all_good:\n",

" # copy the results from the FabSim results dir to the EasyVVUQ results dir\n",

" fab.get_uq_samples(CONFIG, campaign.campaign_dir, sampler.n_samples(), machine=MACHINE)\n",

"else:\n",

" print(\"Not all samples executed correctly\")\n",

" import sys\n",

" sys.exit()"

]

},

{

"cell_type": "markdown",

"id": "907c295d",

"metadata": {},

"source": [

"Briefly:\n",

"\n",

"* `fab.run_uq_ensemble`: this command submits the ensemble to the (remote) host for execution. Under the hood it uses the FabSim3 `campaign2ensemble` subroutine to copy the run directories from `WORK_DIR` to the FabSim3 `SWEEP` directory, located in `config_files/grid_search/SWEEP`. From there the ensemble will be sent to the (remote) host.\n",

"* `fab.wait`: checks the status of the jobs on the remote host every minute and sleeps in between, halting further execution of the script. On the localhost this command doesn't do anything.\n",
"* `fab.verify`: executes the `verify_last_ensemble` subroutine to check whether the output file `target_filename` of each run in the `SWEEP` directory is present in the corresponding FabSim3 results directory, and returns a Boolean flag. `fab.verify` also calls the FabSim3 `fetch_results` method, which actually retrieves the results from the (remote) host. So, if you just want to get the results without verifying the presence of the output files, call `fab.fetch_results(machine=MACHINE)` instead. However, if something went wrong on the (remote) host, this will cause an error later on, since not all required output files will have been transferred to the EasyVVUQ `WORK_DIR`.\n",

"* `fab.get_uq_samples`: copies the samples from the (local) FabSim results directory to the (local) EasyVVUQ campaign directory. It will not delete the results from the FabSim results directory. If you want to save space, you can delete the results on the FabSim side (see `results` directory in your FabSim home directory). You can also call `fab.clear_results(machine, name_results_dir)` to remove a specific FabSim results directory on a given machine.\n",

"\n",

"#### Error handling\n",

"\n",

"If `all_good == False`, something went wrong on the (remote) host, and in our example `sys.exit()` is called, giving you the opportunity to investigate what went wrong. It can happen that a (small) number of jobs did not get executed on the remote host for some reason, whereas (most) other jobs executed successfully. In this case, simply resubmitting the failed jobs could be an option:\n",

"\n",

"```python\n",

"fab.remove_succesful_runs(CONFIG, campaign.campaign_dir)\n",

"fab.resubmit_previous_ensemble(CONFIG, 'grid_search')\n",

"```\n",

"\n",

"The first command removes from the `SWEEP` dir all successful run directories, i.e. those for which the output file `TARGET_FILENAME` has been found. For this to work, `fab.verify` must have been called first. Then, `fab.resubmit_previous_ensemble` simply resubmits the runs that are present in the `SWEEP` directory, which by now only contains the failed runs. After the jobs have finished, call `fab.verify` again to check whether `TARGET_FILENAME` is now present in the results directory for every run in the `SWEEP` dir.\n",

"\n",

"Once we are sure we have all required output files, the role of FabSim is over, and we proceed with decoding the output files. In this case, our Python script wrote the training and test accuracy to a CSV file, hence we use the `SimpleCSV` decoder. \n",

"\n",

"**Note**: It is also possible to use a more flexible HDF5 format, by using `uq.decoders.HDF5` instead."

]

},
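{
"cell_type": "markdown",
"id": "7cde8056",
"metadata": {},
"source": [
"In essence, a CSV decoder reads the requested output columns from each run's output file. A minimal stand-in using the standard `csv` module is sketched below; `read_output_csv` is hypothetical and not the `SimpleCSV` implementation. We assume that `output.csv` has a header row with the column names `accuracy_train` and `accuracy_test` (the names that appear in the collated results below), followed by the values; the numbers used here are those of the first run in those results.\n",
"\n",
"```python\n",
"import csv\n",
"\n",
"\n",
"def read_output_csv(lines, output_columns):\n",
"    # Parse CSV lines with a header row and collect the requested columns\n",
"    # as lists of floats, one entry per data row.\n",
"    data = {col: [] for col in output_columns}\n",
"    for row in csv.DictReader(lines):\n",
"        for col in output_columns:\n",
"            data[col].append(float(row[col]))\n",
"    return data\n",
"\n",
"\n",
"# Stand-in for the contents of one output.csv; with a real file, pass the\n",
"# object returned by open(path, newline='') instead of this list.\n",
"lines = ['accuracy_train,accuracy_test', '0.959267,0.9544']\n",
"print(read_output_csv(lines, ['accuracy_train', 'accuracy_test']))\n",
"# {'accuracy_train': [0.959267], 'accuracy_test': [0.9544]}\n",
"```"
]
},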

{

"cell_type": "code",

"execution_count": 13,

"id": "0b55725a",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"  <thead>\n",
"    <tr><th></th><th>run_id</th><th>iteration</th><th>n_neurons</th><th>learning_rate</th><th>dropout_prob_in</th><th>dropout_prob_hidden</th><th>accuracy_train</th><th>accuracy_test</th></tr>\n",
"    <tr><th></th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th><th>0</th></tr>\n",
"  </thead>\n",
"  <tbody>\n",
"    <tr><th>0</th><td>1</td><td>0</td><td>64</td><td>0.005</td><td>0.0</td><td>0.0</td><td>0.959267</td><td>0.9544</td></tr>\n",
"    <tr><th>1</th><td>2</td><td>0</td><td>64</td><td>0.010</td><td>0.0</td><td>0.0</td><td>0.974133</td><td>0.9653</td></tr>\n",
"    <tr><th>2</th><td>3</td><td>0</td><td>64</td><td>0.015</td><td>0.0</td><td>0.0</td><td>0.979717</td><td>0.9712</td></tr>\n",
"    <tr><th>3</th><td>4</td><td>0</td><td>128</td><td>0.005</td><td>0.0</td><td>0.0</td><td>0.963333</td><td>0.9592</td></tr>\n",
"    <tr><th>4</th><td>5</td><td>0</td><td>128</td><td>0.010</td><td>0.0</td><td>0.0</td><td>0.978667</td><td>0.9718</td></tr>\n",
"    <tr><th>5</th><td>6</td><td>0</td><td>128</td><td>0.015</td><td>0.0</td><td>0.0</td><td>0.983650</td><td>0.9744</td></tr>\n",
"  </tbody>\n",
"</table>\n",
"</div>\n",
"\n",
"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"  <thead>\n",
"    <tr><th></th><th>n_neurons</th><th>learning_rate</th></tr>\n",
"    <tr><th></th><th>0</th><th>0</th></tr>\n",
"  </thead>\n",
"  <tbody>\n",
"    <tr><th>5</th><td>128</td><td>0.015</td></tr>\n",
"  </tbody>\n",
"</table>\n",

"