Kaka Subtitle Assistant

VideoCaptioner

An LLM-powered video subtitle processing assistant, supporting speech recognition, subtitle segmentation, optimization, and translation.

[简体中文](../README.md) / [正體中文](./README_TW.md) / English / [日本語](./README_JA.md)

## 📖 Introduction

Kaka Subtitle Assistant (VideoCaptioner) is easy to use and does not require high-end hardware. For speech recognition, it supports both online API calls and local offline processing (with GPU support). It uses Large Language Models (LLMs) for intelligent subtitle segmentation, correction, and translation, and it handles the entire video subtitle workflow in one click, so you can add polished subtitles to your videos.

- Word-level timestamps and voice activity detection (VAD) for high recognition accuracy

- LLM-based semantic understanding that automatically reorganizes word-by-word subtitles into natural, fluent sentences

- Context-aware AI translation with a reflection-based optimization mechanism for idiomatic, professional translations

- Batch video subtitle synthesis for more efficient processing

- Intuitive subtitle editing and viewing interface with real-time preview and quick editing
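
To make the idea of reorganizing word-by-word subtitles more concrete, here is a minimal Python sketch. It is not VideoCaptioner's implementation: the project uses an LLM for this step, whereas this sketch substitutes a simple heuristic (sentence-ending punctuation plus a silence gap). The names `Word`, `Segment`, `group_words`, and the `max_gap` parameter are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Segment:
    text: str
    start: float
    end: float


# Assumption: sentences end with one of these characters.
SENTENCE_END = (".", "?", "!")


def _merge(words):
    """Join consecutive words into one segment spanning their timestamps."""
    return Segment(
        text=" ".join(w.text for w in words),
        start=words[0].start,
        end=words[-1].end,
    )


def group_words(words, max_gap=0.8):
    """Group word-level timestamps into sentence-level segments.

    A segment is closed when a word ends with sentence punctuation, or
    when the silence before the next word exceeds max_gap seconds.
    """
    segments = []
    current = []
    for word in words:
        # A long pause before this word closes the running segment.
        if current and word.start - current[-1].end > max_gap:
            segments.append(_merge(current))
            current = []
        current.append(word)
        # Sentence-ending punctuation closes the segment after this word.
        if word.text.endswith(SENTENCE_END):
            segments.append(_merge(current))
            current = []
    if current:
        segments.append(_merge(current))
    return segments
```

A heuristic like this breaks down on unpunctuated or fast speech, which is the kind of case where semantic segmentation by an LLM is expected to help.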

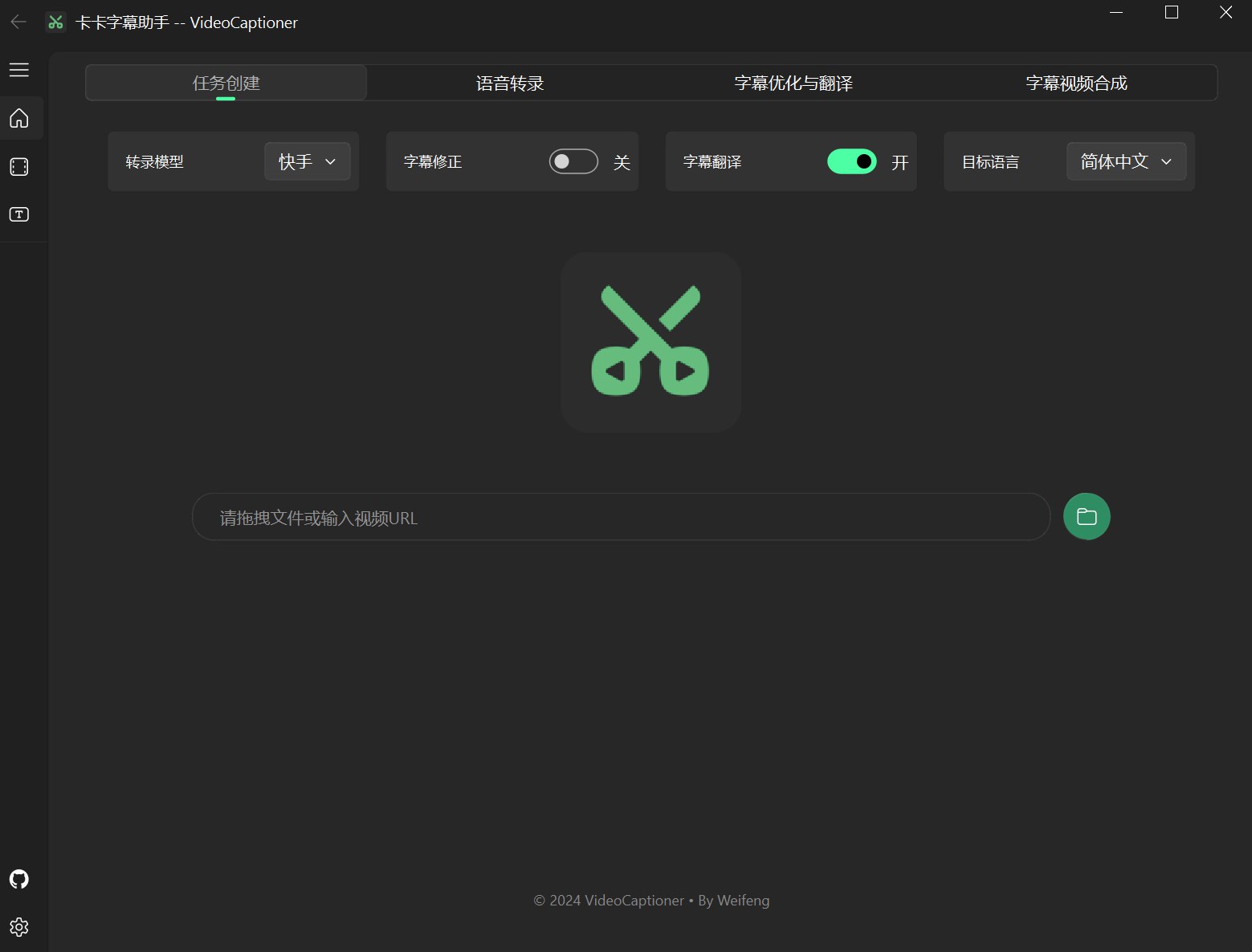

## 📸 Interface Preview