{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# 2. Statistical Learning\n",

"\n",

"Exercises from **Chapter 2** of [An Introduction to Statistical Learning](http://www-bcf.usc.edu/~gareth/ISL/) by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani.\n",

"\n",

"I've elected to use Python instead of R."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Conceptual"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q1 \n",

"For each of parts (a) through (d), indicate whether we would generally expect the performance of a flexible statistical learning method to be better or worse than an inflexible method. Justify your answer.\n",

"\n",

"a) The sample size n is extremely large, and the number of predictors p is small. \n",

">**Flexible**: we have enough observations to avoid overfitting, so, assuming there are some non-linear relationships in our data, a more flexible model should provide an improved fit. \n",

"\n",

"b) The number of predictors p is extremely large, and the number of observations n is small. \n",

">**Inflexible**: we *don't* have enough observations to avoid overfitting, so a flexible model would mostly fit noise \n",

"\n",

"c) The relationship between the predictors and response is highly non-linear. \n",

">**Flexible**: a flexible (low bias, higher variance) model can capture non-linear relationships that an inflexible model would miss \n",

"\n",

"d) The variance of the error terms, i.e. σ² = Var(ε), is extremely high. \n",

">**Inflexible**: a high bias model avoids overfitting to the noise in our dataset "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q2 \n",

"Explain whether each scenario is a classification or regression problem, and indicate whether we are most interested in inference or prediction. Finally, provide n and p.\n",

"\n",

"(a) We collect a set of data on the top 500 firms in the US. For each firm we record profit, number of employees, industry and the CEO salary. We are interested in understanding which factors affect CEO salary. \n",

"\n",

">regression, inference, n=500, p=3 (profit, number of employees and industry are the predictors; CEO salary is the response) \n",

"\n",

"(b) We are considering launching a new product and wish to know whether it will be a success or a failure. We collect data on 20 similar products that were previously launched. For each product we have recorded whether it was a success or failure, price charged for the product, marketing budget, competition price, and ten other variables. \n",

"\n",

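">classification, prediction, n=20, p=13 (price, marketing budget, competition price and ten other variables; success or failure is the response)\n",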

"\n",

"(c) We are interested in predicting the % change in the USD/Euro exchange rate in relation to the weekly changes in the world stock markets. Hence we collect weekly data for all of 2012. For each week we record the % change in the USD/Euro, the % change in the US market, the % change in the British market, and the % change in the German market. \n",

"\n",

">regression, prediction, n=52, p=3 (the % changes in the US, British and German markets; the % change in USD/Euro is the response)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

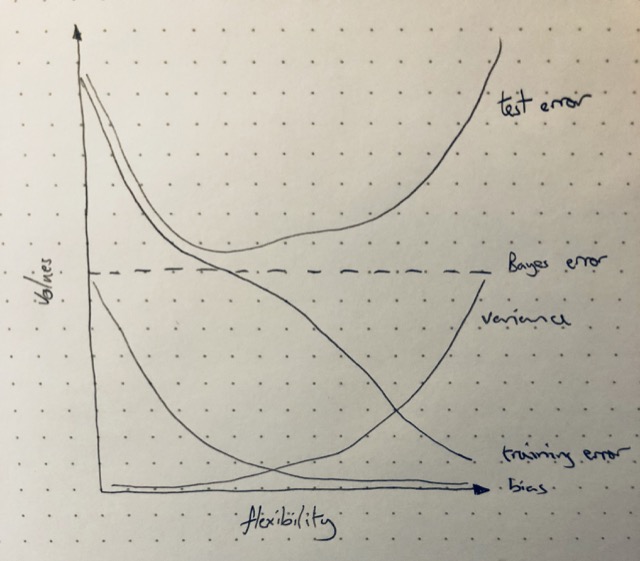

"### Q3\n",

"\n",

"We now revisit the bias-variance decomposition.\n",

"\n",

"(a) Provide a sketch of typical (squared) bias, variance, training error, test error, and Bayes (or irreducible) error curves, on a single plot, as we go from less flexible statistical learning methods towards more flexible approaches. The x-axis should represent the amount of flexibility in the method, and the y-axis should represent the values for each curve. There should be five curves. Make sure to label each one.\n",

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"(b) Explain why each of the five curves has the shape displayed in part (a).\n",

"\n",

"- **Bayes error**: the irreducible error which is a constant irrespective of model flexibility\n",

"- **Variance**: the variance of a model increases with flexibility, as the model picks up variation between training sets, resulting in more variation in the estimate of f(X)\n",

"- **Bias**: bias tends to decrease with flexibility as the model can fit more complex relationships\n",

"- **Test error**: tends to decrease at first, as reduced bias allows the model to better fit non-linear relationships, but then increases as an increasingly flexible model begins to fit the noise in the dataset (overfitting)\n",

"- **Training error**: decreases monotonically with increased flexibility as the model 'flexes' towards individual datapoints in the training set"

]

},
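{
"cell_type": "markdown",
"metadata": {},
"source": [
"The training and test error shapes described in (b) can be illustrated with a small simulation. This is only a sketch under stated assumptions: the true function `true_f`, the noise level, the sample sizes and the use of polynomial degree as a stand-in for flexibility are all illustrative choices, not part of the exercise.\n",
"\n",
"```python\n",
"import numpy as np\n",
"\n",
"rng = np.random.default_rng(0)\n",
"\n",
"def true_f(x):\n",
"    # Assumed non-linear ground truth, chosen for illustration only\n",
"    return np.sin(2 * np.pi * x)\n",
"\n",
"def simulate(degrees, n_train=30, n_test=1000, noise_sd=0.3):\n",
"    # Draw one training set and one large test set from the same process\n",
"    x_train = rng.uniform(0, 1, n_train)\n",
"    y_train = true_f(x_train) + rng.normal(0, noise_sd, n_train)\n",
"    x_test = rng.uniform(0, 1, n_test)\n",
"    y_test = true_f(x_test) + rng.normal(0, noise_sd, n_test)\n",
"    train_mse, test_mse = [], []\n",
"    for d in degrees:\n",
"        # Least-squares polynomial fit; a higher degree means more flexibility\n",
"        p = np.polynomial.Polynomial.fit(x_train, y_train, d)\n",
"        train_mse.append(np.mean((p(x_train) - y_train) ** 2))\n",
"        test_mse.append(np.mean((p(x_test) - y_test) ** 2))\n",
"    return np.array(train_mse), np.array(test_mse)\n",
"\n",
"degrees = [1, 3, 5, 9]\n",
"train_mse, test_mse = simulate(degrees)\n",
"for d, tr, te in zip(degrees, train_mse, test_mse):\n",
"    print(f'degree={d}: train MSE={tr:.3f}, test MSE={te:.3f}')\n",
"```\n",
"\n",
"Because each polynomial family contains the lower-degree ones, the training MSE cannot increase with degree, whereas the test MSE has no such guarantee and cannot be expected to fall much below the assumed noise variance (0.09 here)."
]
},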

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q4 \n",

"You will now think of some real-life applications for statistical learning.\n",

"\n",

"(a) Describe three real-life applications in which classification might be useful. Describe the response, as well as the predictors. Is the goal of each application inference or prediction? Explain your answer. \n",

"\n",

" 1. Is this tumor malignant or benign?\n",

" - response: boolean (is malignant)\n",

" - predictors (naive examples): tumor size, white blood cell count, change in adrenal mass, position in body\n",

" - goal: prediction\n",

" 2. What animals are in this image?\n",

" - response: 'Cat', 'Dog', 'Fish'\n",

" - predictors: image pixel values\n",

" - goal: prediction\n",

" 3. What test bench metrics are most indicative of a faulty Printed Circuit Board (PCB)?\n",

" - response: boolean (is faulty)\n",

" - predictors: current draw, voltage draw, output noise, operating temp.\n",

" - goal: inference\n",

"\n",

"\n",

"(b) Describe three real-life applications in which regression might be useful. Describe the response, as well as the predictors. Is the goal of each application inference or prediction? Explain your answer. \n",

"\n",

" 1. How much is this house worth?\n",

" - response: SalePrice\n",

" - predictors: LivingArea, BathroomCount, GarageCount, Neighbourhood, CrimeRate\n",

" - goal: prediction\n",

" 2. What attributes most affect the market cap. of a company?\n",

" - response: MarketCap\n",

" - predictors: Sector, Employees, FounderIsCEO, Age, TotalInvestment, Profitability, RONA\n",

" - goal: inference\n",

" 3. How long is this dairy cow likely to live?\n",

" - response: years\n",

" - predictors: past medical conditions, current weight, milk yield\n",

" - goal: prediction\n",

"\n",

"(c) Describe three real-life applications in which cluster analysis might be useful. \n",

"\n",

" 1. This dataset contains observations of 3 different species of flower. Estimate which observations belong to the same species.\n",

" - response: a, b, c (species class)\n",

" - predictors: sepal length, petal length, number of petals\n",

" - goal: prediction\n",

" 2. Which attributes of the flowers in the dataset described above are most predictive of species?\n",

" - response: a, b, c (species class)\n",

" - predictors: sepal length, petal length, number of petals\n",

" - goal: inference\n",

" 3. Group these audio recordings of birdsong by species.\n",

" - response: (species classes)\n",

" - predictors: audio sample values\n",

" - goal: prediction\n",

" "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q5 \n",

"What are the advantages and disadvantages of a very flexible (versus a less flexible) approach for regression or classification? Under what circumstances might a more flexible approach be preferred to a less flexible approach? When might a less flexible approach be preferred?\n",

"\n",

"Less flexible\n",

"\n",

"+ (+) gives better results with few observations\n",

"+ (+) simpler inference: the effect of each feature can be more easily understood\n",

"+ (+) fewer parameters, faster optimisation\n",

"- (-) performs poorly if observations contain highly non-linear relationships\n",

"\n",

"More flexible\n",

"+ (+) gives better fit if observations contain non-linear relationships\n",

"- (-) can overfit the data providing poor predictions for new observations\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q6 \n",

"Describe the differences between a parametric and a non-parametric statistical learning approach. What are the advantages of a parametric approach to regression or classification (as opposed to a non-parametric approach)? What are its disadvantages?\n",

"\n",

"\n",

">A parametric approach simplifies the problem of estimating f(x), the best fit to the training data, by making some assumptions about the functional form of f(x). This reduces the problem to estimating the parameters of the model. A non-parametric approach makes no such assumptions, so f(x) can take an arbitrary shape.\n",

"\n",

">The advantage of the parametric approach is that it simplifies the problem of estimating f(x), because it is easier to estimate parameters than an arbitrary function. The disadvantage is that the assumed form of f(x) may not match the true relationship, which limits how accurately the model can fit the data. If many parameters are added in an attempt to increase the model's flexibility, overfitting can occur, meaning that the model begins to fit noise in the training data that is not representative of unseen observations."

]

},
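{
"cell_type": "markdown",
"metadata": {},
"source": [
"The contrast in Q6 can be sketched in a few lines. The example below is an assumption-laden illustration, not the book's method: the data-generating function, the noise level, the choice of a straight line as the parametric model and a k-nearest-neighbours average (with a hypothetical `k=10`) as the non-parametric model are all picked for demonstration.\n",
"\n",
"```python\n",
"import numpy as np\n",
"\n",
"rng = np.random.default_rng(1)\n",
"x = np.sort(rng.uniform(0, 3, 200))\n",
"y = np.sin(2 * x) + rng.normal(0, 0.1, 200)  # assumed non-linear f plus noise\n",
"\n",
"# Parametric: assume f(x) = b0 + b1 * x, so only two numbers are estimated\n",
"b1, b0 = np.polyfit(x, y, 1)\n",
"\n",
"def predict_linear(x_new):\n",
"    return b0 + b1 * np.asarray(x_new)\n",
"\n",
"# Non-parametric: average the responses of the k nearest training points,\n",
"# with no assumption about the functional form of f\n",
"def predict_knn(x_new, k=10):\n",
"    x_new = np.atleast_1d(x_new)\n",
"    dist = np.abs(x_new[:, None] - x[None, :])\n",
"    nearest = np.argsort(dist, axis=1)[:, :k]\n",
"    return y[nearest].mean(axis=1)\n",
"\n",
"# Compare both estimates against the noise-free function on a grid\n",
"grid = np.linspace(0.2, 2.8, 50)\n",
"mse_linear = np.mean((predict_linear(grid) - np.sin(2 * grid)) ** 2)\n",
"mse_knn = np.mean((predict_knn(grid) - np.sin(2 * grid)) ** 2)\n",
"print(f'linear: {mse_linear:.3f}, knn: {mse_knn:.3f}')\n",
"```\n",
"\n",
"With 200 observations and a strongly non-linear f, the non-parametric estimate tracks the curve more closely than the line does. With far fewer observations, or a truly linear f, the two-parameter model would be expected to do comparatively better."
]
},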

{

"cell_type": "code",

"execution_count": 148,

"metadata": {},

"outputs": [],

"source": [

"import numpy as np\n",

"import pandas as pd\n",

"import seaborn as sns\n",

"import warnings\n",

"warnings.filterwarnings('ignore')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Q7 \n",

"The table below provides a training data set containing six observations, three predictors, and one qualitative response variable."

]

},

{

"cell_type": "code",

"execution_count": 50,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"<thead>\n",
"<tr style=\"text-align: right;\"><th></th><th>Obs</th><th>X1</th><th>X2</th><th>X3</th><th>Y</th></tr>\n",
"</thead>\n",
"<tbody>\n",
"<tr><th>0</th><td>1</td><td>0</td><td>3</td><td>0</td><td>Red</td></tr>\n",
"<tr><th>1</th><td>2</td><td>2</td><td>0</td><td>0</td><td>Red</td></tr>\n",
"<tr><th>2</th><td>3</td><td>0</td><td>1</td><td>3</td><td>Red</td></tr>\n",
"<tr><th>3</th><td>4</td><td>0</td><td>1</td><td>2</td><td>Green</td></tr>\n",
"<tr><th>4</th><td>5</td><td>-1</td><td>0</td><td>1</td><td>Green</td></tr>\n",
"<tr><th>5</th><td>6</td><td>1</td><td>-1</td><td>1</td><td>Red</td></tr>\n",
"</tbody>\n",
"</table>\n",
"</div>\n",
"\n",
"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"<thead>\n",
"<tr style=\"text-align: right;\"><th></th><th>Obs</th><th>X1</th><th>X2</th><th>X3</th><th>Y</th><th>EuclideanDist</th></tr>\n",
"</thead>\n",
"<tbody>\n",
"<tr><th>0</th><td>1</td><td>0</td><td>3</td><td>0</td><td>Red</td><td>3.000000</td></tr>\n",
"<tr><th>1</th><td>2</td><td>2</td><td>0</td><td>0</td><td>Red</td><td>2.000000</td></tr>\n",
"<tr><th>2</th><td>3</td><td>0</td><td>1</td><td>3</td><td>Red</td><td>3.162278</td></tr>\n",
"<tr><th>3</th><td>4</td><td>0</td><td>1</td><td>2</td><td>Green</td><td>2.236068</td></tr>\n",
"<tr><th>4</th><td>5</td><td>-1</td><td>0</td><td>1</td><td>Green</td><td>1.414214</td></tr>\n",
"<tr><th>5</th><td>6</td><td>1</td><td>-1</td><td>1</td><td>Red</td><td>1.732051</td></tr>\n",
"</tbody>\n",
"</table>\n",
"</div>\n",
"\n",
"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"<thead>\n",
"<tr style=\"text-align: right;\"><th></th><th>Obs</th><th>X1</th><th>X2</th><th>X3</th><th>Y</th><th>EuclideanDist</th></tr>\n",
"</thead>\n",
"<tbody>\n",
"<tr><th>4</th><td>5</td><td>-1</td><td>0</td><td>1</td><td>Green</td><td>1.414214</td></tr>\n",
"</tbody>\n",
"</table>\n",
"</div>\n",
"\n",
"<div>\n",
"<table border=\"1\" class=\"dataframe\">\n",
"<thead>\n",
"<tr style=\"text-align: right;\"><th></th><th>Obs</th><th>X1</th><th>X2</th><th>X3</th><th>Y</th><th>EuclideanDist</th></tr>\n",
"</thead>\n",
"<tbody>\n",
"<tr><th>4</th><td>5</td><td>-1</td><td>0</td><td>1</td><td>Green</td><td>1.414214</td></tr>\n",
"<tr><th>5</th><td>6</td><td>1</td><td>-1</td><td>1</td><td>Red</td><td>1.732051</td></tr>\n",
"<tr><th>1</th><td>2</td><td>2</td><td>0</td><td>0</td><td>Red</td><td>2.000000</td></tr>\n",
"</tbody>\n",
"</table>\n",
"</div>\n",

"