Ph.D. Preliminary Examination

Parsimonious AI: Software-Hardware Synergy For Robot Autonomy

Monday May 16th, 2022

2:00 p.m. EST

IRB 4105

Zoom Link

Abstract:

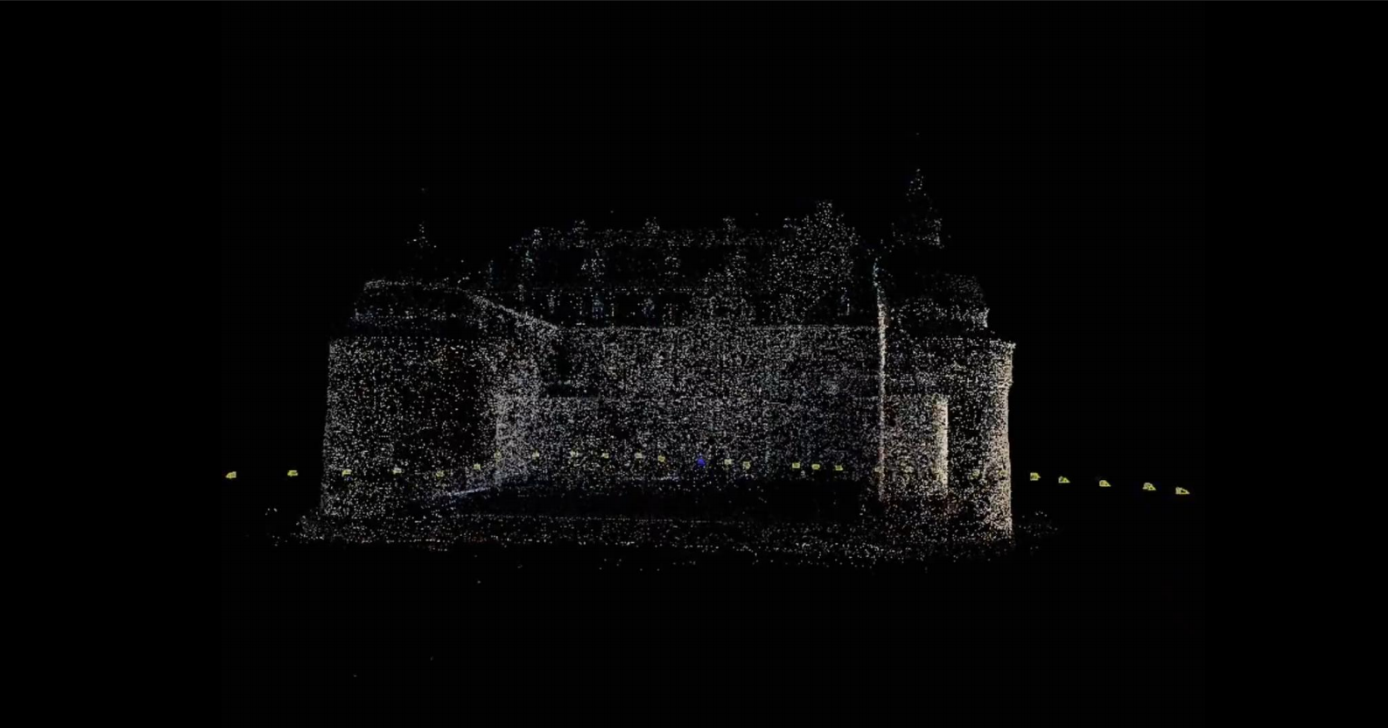

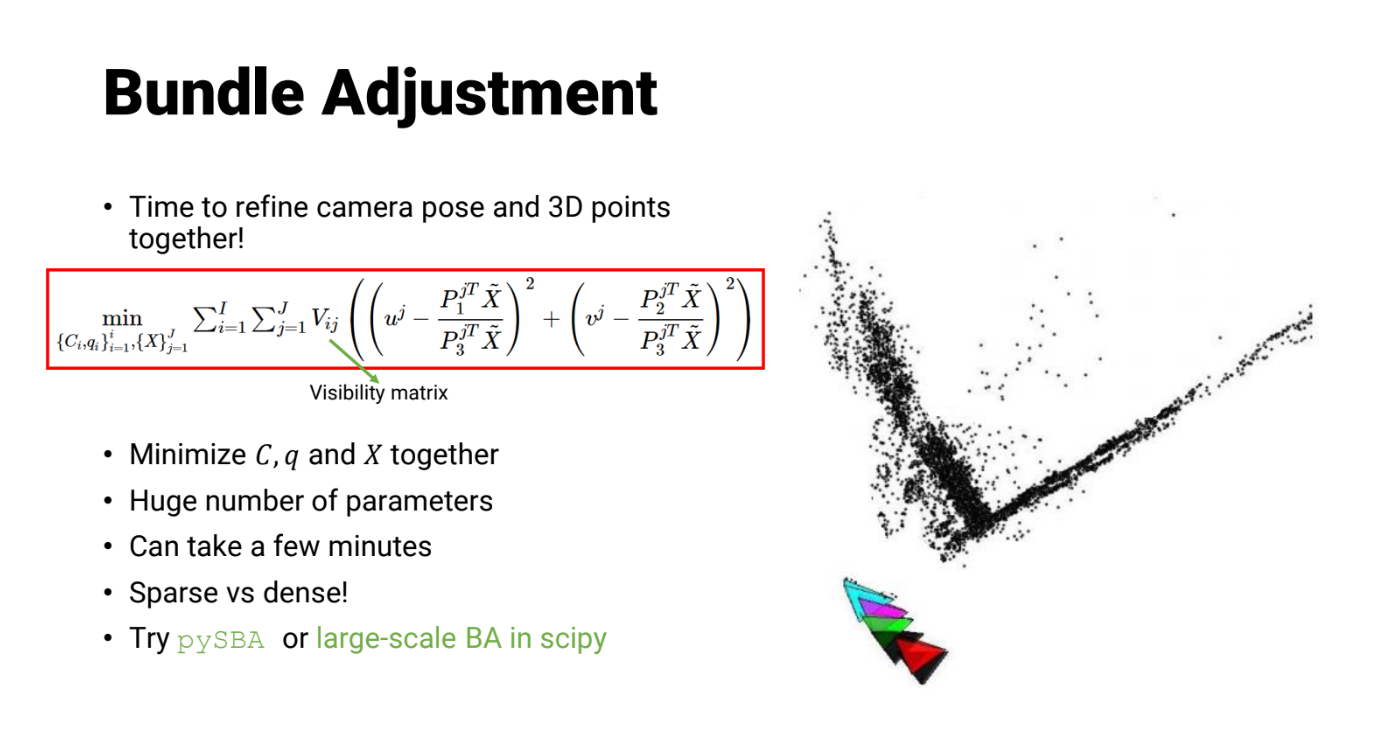

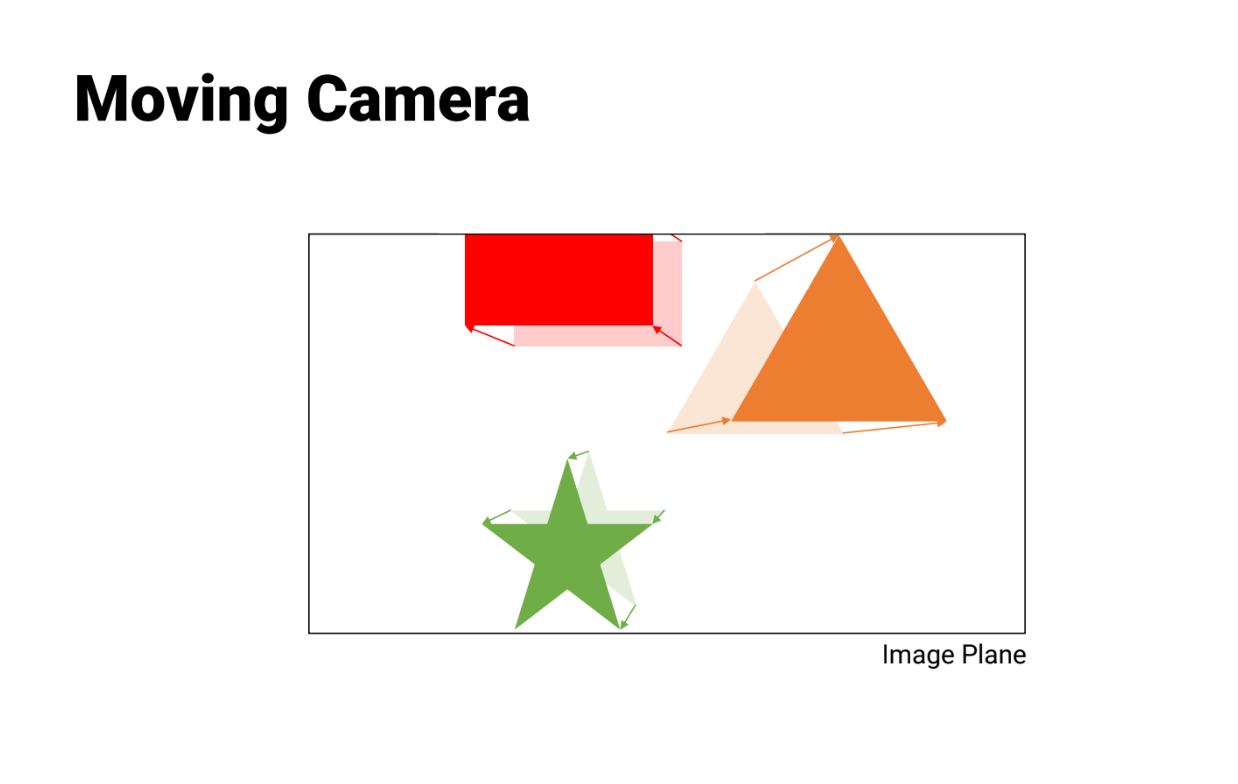

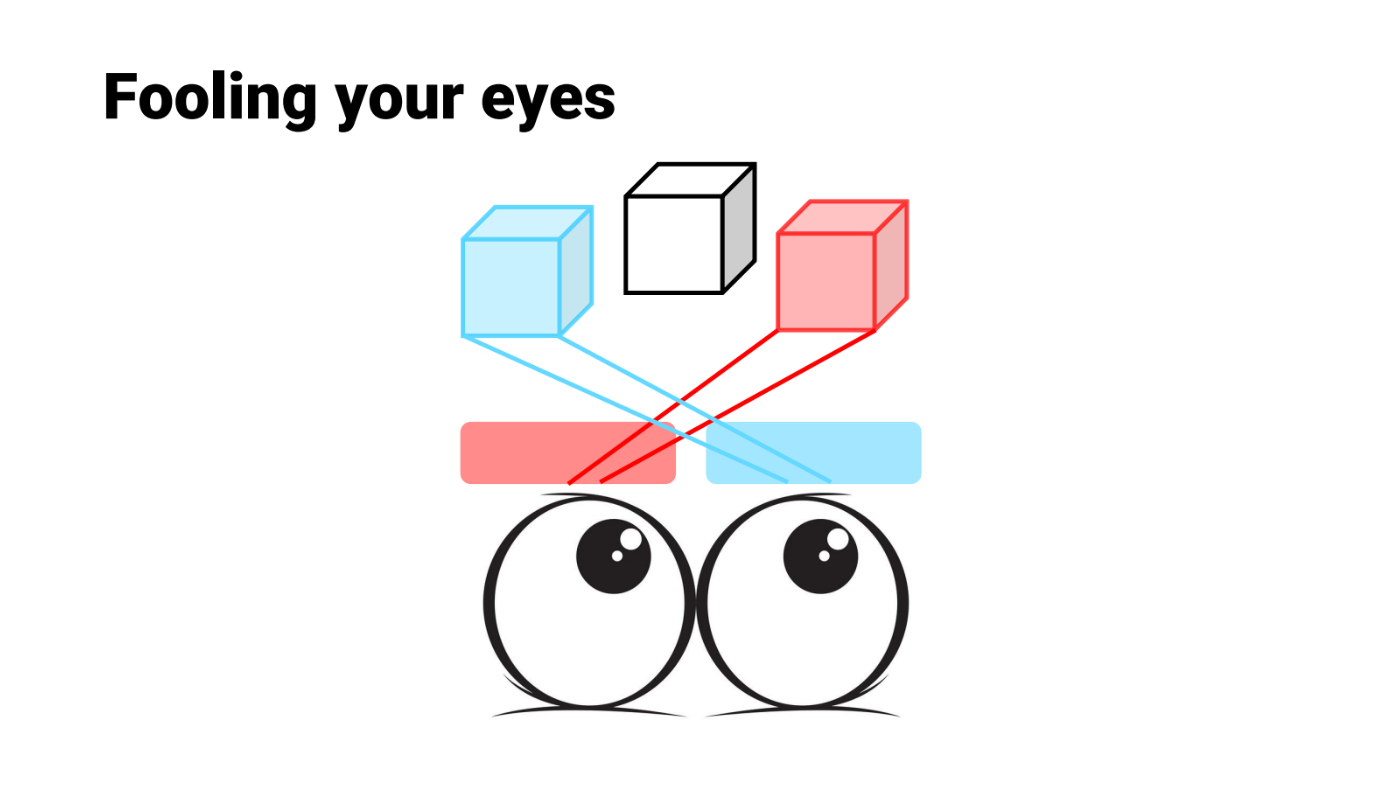

Although autonomous agents are built for a myriad of applications, their perceptual systems are designed around a single line of thought: perception delivers a 3D representation of the scene. Applying these traditional methods to most autonomous agents (such as drones) is highly inefficient because the algorithms are generic rather than parsimonious. In stark contrast, the perceptual systems of biological beings have evolved to be highly efficient for their natural habitats and day-to-day tasks.

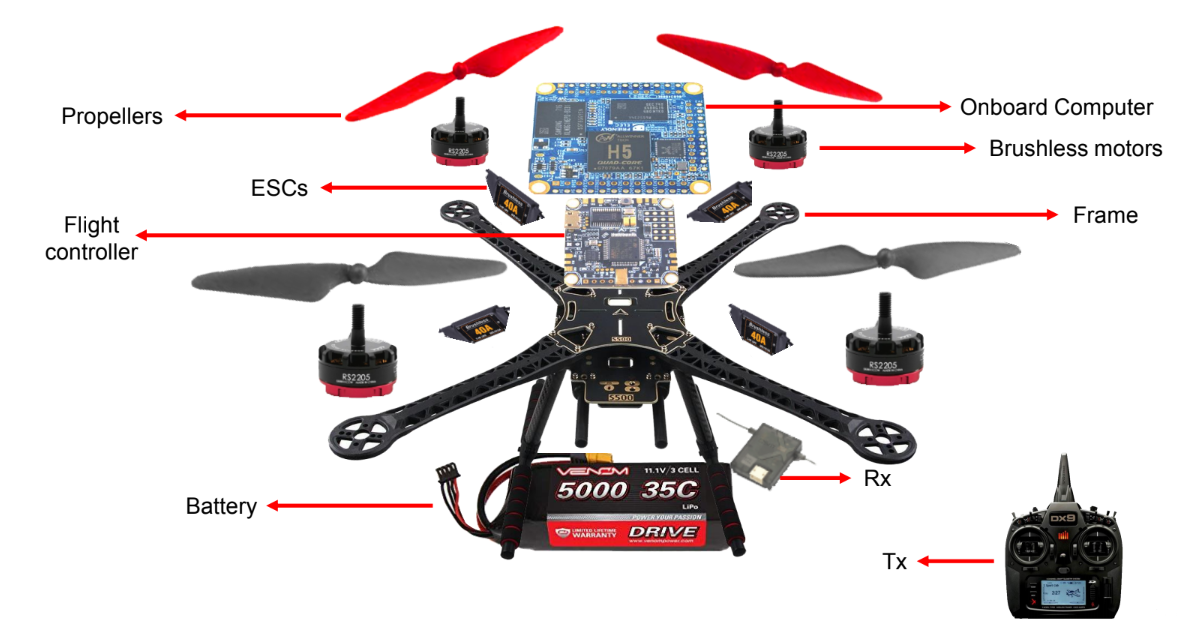

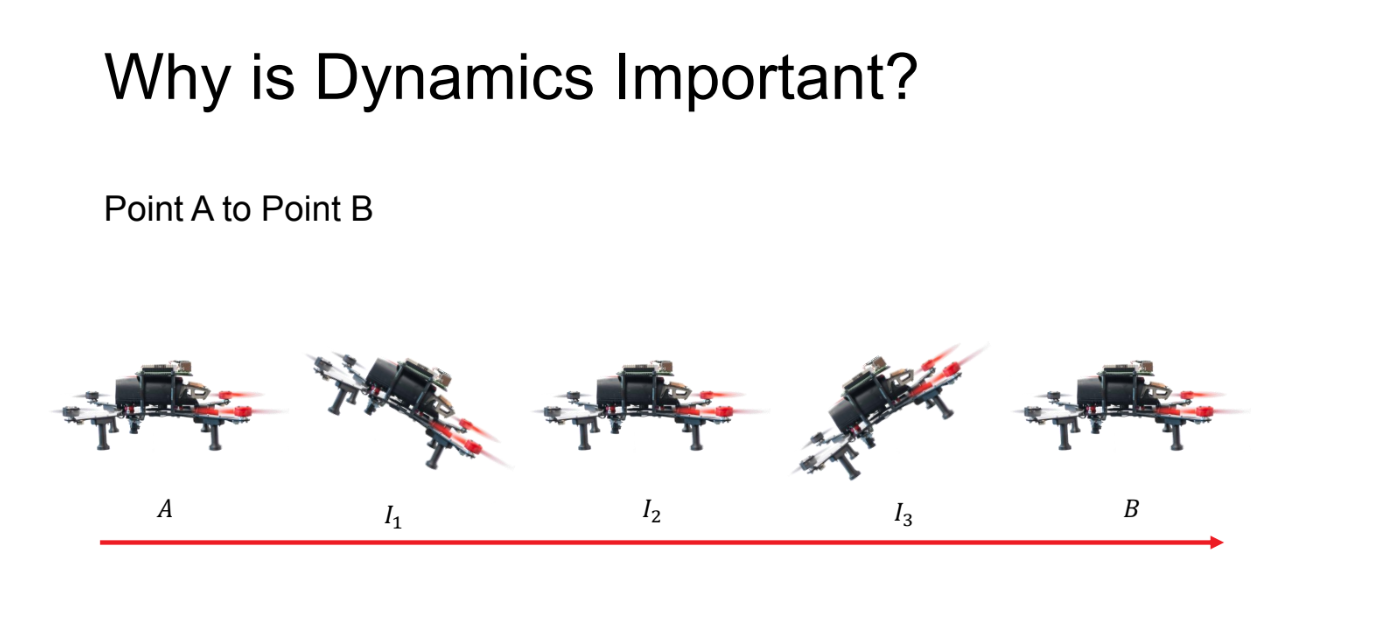

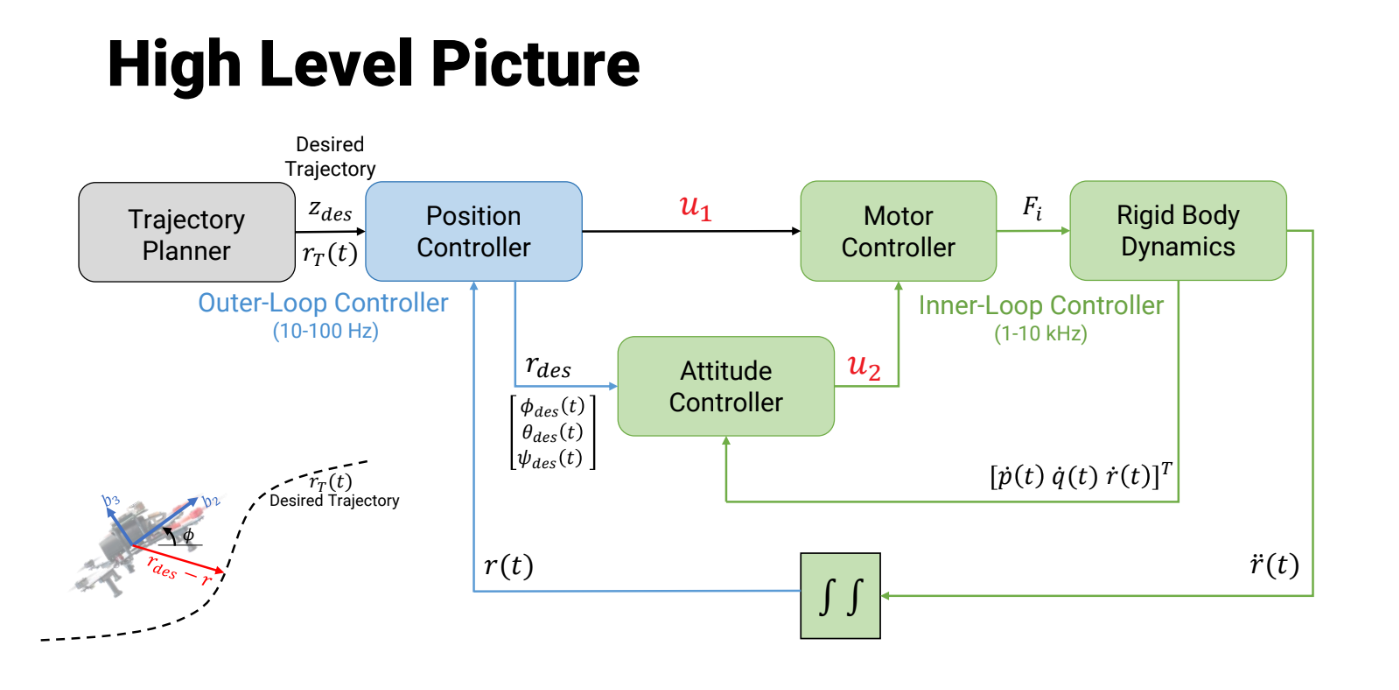

We draw inspiration from nature to build a minimalist cognitive framework for robots at scales that were never thought possible before. We propose a novel Parsimonious AI framework for mobile robots that solves a class of perception and navigation tasks, such as traversing dynamic unstructured environments and segmenting never-before-seen objects. We utilize the fact that computing is only a small aspect of a robot and re-imagine the robot from the ground up based on the class of tasks to be accomplished. This leads to a set of tight constraints that aid in efficiently solving the problem of autonomy.

Bio:

Chahat Deep Singh is a Ph.D. student in the Perception and Robotics Group (PRG) with Professor Yiannis Aloimonos and Associate Research Scientist Cornelia Fermüller. He completed his Master's in Robotics at the University of Maryland in 2018 and later joined the Department of Computer Science as a Ph.D. student. Singh’s research focuses on developing bio-inspired minimalist cognitive architectures for mobile robot autonomy. He works on computationally efficient and bio-inspired algorithms with an emphasis on computer vision. He was awarded the Ann G. Wylie Fellowship for 2022-2023, the Future Faculty Fellowship for 2022-2023, and UMD's Dean Fellowship in 2020. He has served as the Maryland Robotics Center Student Ambassador since 2021. His work has been featured in IEEE Spectrum, NVIDIA, Voice of America, Futurism, Mashable, and many more.

Examining Committee:

Dr. Yiannis Aloimonos (Chair)

Dr. Christopher Metzler (Department Representative)

Dr. Guido de Croon (TU Delft)

Dr. Cornelia Fermüller

Dr. Nitin J. Sanket