| foo.js | bar.js | bundle.js |

|---|---|---|

| ```js exports.fn = () => 123 ``` | ```js const foo = require('./foo') console.log(foo.fn()) ``` | ```js let __commonJS = (callback, module) => () => { if (!module) { module = {exports: {}}; callback(module.exports, module); } return module.exports; }; // foo.js var require_foo = __commonJS((exports) => { exports.fn = () => 123; }); // bar.js const foo = require_foo(); console.log(foo.fn()); ``` |

| foo.js | bar.js | bundle.js |

|---|---|---|

| ```js export const fn = () => 123 ``` | ```js import {fn} from './foo' console.log(fn()) ``` | ```js // foo.js const fn = () => 123; // bar.js console.log(fn()); ``` |

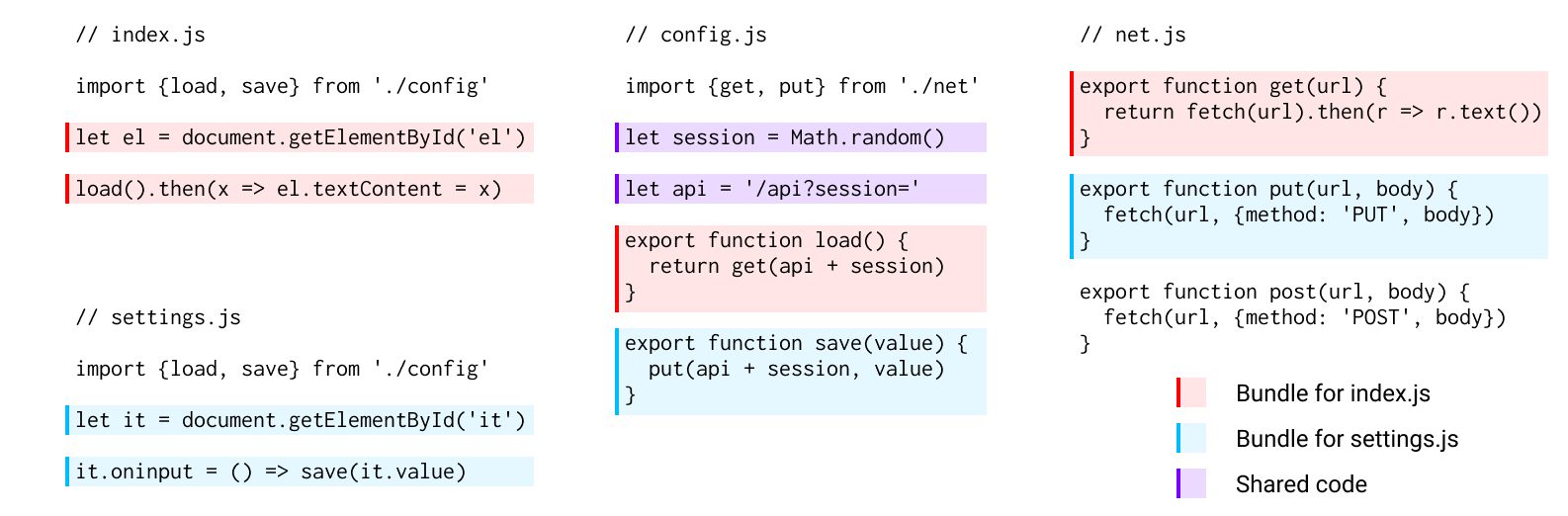

| Chunk for index.js | Chunk for settings.js | Chunk for shared code |

|---|---|---|

| ```js import { api, session } from "./chunk.js"; // net.js function get(url) { return fetch(url).then((r) => r.text()); } // config.js function load() { return get(api + session); } // index.js let el = document.getElementById("el"); load().then((x) => el.textContent = x); ``` | ```js import { api, session } from "./chunk.js"; // net.js function put(url, body) { fetch(url, {method: "PUT", body}); } // config.js function save(value) { return put(api + session, value); } // settings.js let it = document.getElementById("it"); it.oninput = () => save(it.value); ``` | ```js // config.js let session = Math.random(); let api = "/api?session="; export { api, session }; ``` |

| entry1.js | entry2.js | data.js |

|---|---|---|

| ```js import {data} from './data' console.log(data) ``` | ```js import {setData} from './data' setData(123) ``` | ```js export let data export function setData(value) { data = value } ``` |

| Chunk for entry1.js | Chunk for entry2.js | Chunk for shared code |

|---|---|---|

| ```js import { data } from "./chunk.js"; // entry1.js console.log(data); ``` | ```js import { data } from "./chunk.js"; // data.js function setData(value) { data = value; } // entry2.js setData(123); ``` | ```js // data.js let data; export { data }; ``` |

| Chunk for entry1.js | Chunk for entry2.js | Chunk for shared code |

|---|---|---|

| ```js import { data } from "./chunk.js"; // entry1.js console.log(data); ``` | ```js import { setData } from "./chunk.js"; // entry2.js setData(123); ``` | ```js // data.js let data; function setData(value) { data = value; } export { data, setData }; ``` |

| Original code | Code with symbol minification |

|---|---|

| ```js function useReducer(reducer, initialState) { let [state, setState] = useState(initialState); function dispatch(action) { let nextState = reducer(state, action); setState(nextState); } return [state, dispatch]; } ``` | ```js function useReducer(b, c) { let [a, d] = useState(c); function e(f) { let g = b(a, f); d(g); } return [a, e]; } ``` |