
not working anymore to make the tooltip visible. affected browser s chrome / firefox and most probably ie too \n",

"\n",

"Original Title:\n",

" tooltip is not shown anymore if tooltip is larger than the chart\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" tooltip not shown on * number *\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 7320 =================\n",

"\n",

"\"https://github.com/Criccle/GoogleCombo/issues/1\"\n",

"Issue Body:\n",

" unlike google chart for mendix, google combo chart for mendix cannot redraw a chart. only one chart can be drawn only once but no redraw or two charts in a page is possible. thus, this module is useless at all with this condition. \n",

"\n",

"Original Title:\n",

" cannot redraw a chart by google combo chart for mendix\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" google charts not working\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 42159 =================\n",

"\n",

"\"https://github.com/cviebrock/eloquent-sluggable/issues/337\"\n",

"Issue Body:\n",

" hello! i have a model with multiple slug fields setup like this: return 'slug_en' => 'source' => 'name_en' , 'slug_es' => 'source' => 'name_es' , 'slug_fr' => 'source' => 'name_fr' , 'slug_it' => 'source' => 'name_it' , 'slug_de' => 'source' => 'name_de' , ; i want to findbyslug on all of them, i have tried with slugkeyname but no luck. is there something im missing? thank you \n",

"\n",

"Original Title:\n",

" find on multiple slug fields\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" multiple fields with same name\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 184774 =================\n",

"\n",

"\"https://github.com/hylang/hy/issues/1271\"\n",

"Issue Body:\n",

" it was released in 2008, so it's almost 10 years old. also, we don't test it. \n",

"\n",

"Original Title:\n",

" drop support for python 2.6\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" remove old version from * number *\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 121668 =================\n",

"\n",

"\"https://github.com/MajkiIT/polish-ads-filter/issues/3646\"\n",

"Issue Body:\n",

" @majkiit w prebake jest reguła, która psuje logowanie na gg. a najwyraźniej są jeszcze osoby, które korzystają z gg i z listy prebake. więc nie wiem czy warto dać whitelist na nasz filtr czy nie, co o tym sądzisz? https://github.com/azet12/popupblocker/issues/68 issuecomment-329763381 \n",

"\n",

"Original Title:\n",

" gg.pl prebake\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" problem z login\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 34871 =================\n",

"\n",

"\"https://github.com/WorldDominationArmy/geodk-reqtest-req/issues/1\"\n",

"Issue Body:\n",

" afsnit: 3. krav til løsningens overordnede egenskaber relateret: \n",

"\n",

"Original Title:\n",

" krav 1-eksterne kilder til datasupplering\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" * number * - * number * - * number * -\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 7978 =================\n",

"\n",

"\"https://github.com/blockstack/blockstack-portal/issues/416\"\n",

"Issue Body:\n",

" i noticed that gmp is installed by the macos installer script. noticed that the library was not loaded https://github.com/blockstack/blockstack-portal/issues/415 issuecomment-294392702 for albert: library not loaded: /usr/local/opt/gmp/lib/libgmp.10.dylib referenced from: /private/tmp/blockstack-venv/lib/python2.7/site-packages/fastecdsa/curvemath.so reason: image not found he is on macos 10.12. let's see if we can reproduce this error locally. \n",

"\n",

"Original Title:\n",

" testing gmp and libffi installation via script\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" library not loaded in macos\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 28099 =================\n",

"\n",

"\"https://github.com/EcrituresNumeriques/transformation_jats_erudit/issues/2\"\n",

"Issue Body:\n",

" avons-nous une liste définitive des attributs possible de 'fig-type' pour l'extrant de jats? le balisage de mon côté, pour érudit, dépend de la valeur sémantique de l'attribut de cette balise et je voudrais pouvoir styler les différents cas de figures haha , qui sont : , , , , pour les images et le son. merci. \n",

"\n",

"Original Title:\n",

" attributs possibles pour sous jats\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" * number * : gestion des dates\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 24459 =================\n",

"\n",

"\"https://github.com/go-gitea/gitea/issues/656\"\n",

"Issue Body:\n",

" when adding a new member to an organisation owner team, addteammember does not set watches for the new team member. together with 653 that is pretty confusing behaviour and probably a bug. \n",

"\n",

"Original Title:\n",

" new owner team member does not get watches for org repo's\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" new member does not set the team member\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 64152 =================\n",

"\n",

"\"https://github.com/linuxboss182/SoftEng-2017/issues/84\"\n",

"Issue Body:\n",

" need 3-4 people to present our application to the class on wednesday. applicants must: - not have presented last week - understand how to use the application - be ready to kick ass remember, you have to present at either this wednesday or the next one, so plan accordingly! \n",

"\n",

"Original Title:\n",

" iteration 2 presentation\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" add a new class to the application\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 69032 =================\n",

"\n",

"\"https://github.com/kartoza/qgis.org.za/issues/184\"\n",

"Issue Body:\n",

" i created a form 'contact' and it seems to work but the form labels do not appear on the form so it is a bit useless. please get the labels to appear and merge and release with other improvements asap \n",

"\n",

"Original Title:\n",

" form labels not appearing\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" form labels not showing up\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 132252 =================\n",

"\n",

"\"https://github.com/NTU-ASH/tree-generator/issues/18\"\n",

"Issue Body:\n",

" sort a series of node values within the tree, e.g. -take values from 0-9 up to 15 -sort them into a tree with the middle value as the root and the lowest on the left/highest on the right -perhaps do the same for letters so a is to the left and z is to the right \n",

"\n",

"Original Title:\n",

" binary search tree generation\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" sort tree nodes\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 53765 =================\n",

"\n",

"\"https://github.com/multiformats/multihash/issues/74\"\n",

"Issue Body:\n",

" why not use the existing crypt format? $.$ \n",

"\n",

"Original Title:\n",

" why not use the existing crypt format? $.$\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" why not use the existing format ?\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 123370 =================\n",

"\n",

"\"https://github.com/PSEBergclubBern/BergclubBern/issues/181\"\n",

"Issue Body:\n",

" ich kann bilder einfügen: ! 2017-05-07 14_01_33-tourenbericht anpassen bergclub bern wordpress https://cloud.githubusercontent.com/assets/18282099/25780754/d2260da6-332d-11e7-8350-f46821b300d5.png aber auf der website werden diese nicht angezeigt: ! 2017-05-07 14_00_32-bergclub bern https://cloud.githubusercontent.com/assets/18282099/25780756/defc015c-332d-11e7-982e-e51b758c8179.png \n",

"\n",

"Original Title:\n",

" bilder eines tourenberichts werden nicht angezeigt\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" website : update to * url *\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 57636 =================\n",

"\n",

"\"https://github.com/postmanlabs/postman-app-support/issues/2996\"\n",

"Issue Body:\n",

" welcome to the postman issue tracker. any feature requests / bug reports can be posted here. any security-related bugs should be reported directly to security@getpostman.com version/app information: 1. postman version: 4.10.7 2. app chrome app or mac app : linux app not sure if its also happening on other oss 3. os details: ubuntu 14.06 4. is the interceptor on and enabled in the app: no 5. did you encounter this recently, or has this bug always been there: 6. expected behaviour: explain below steps to repoduce 7. console logs http://blog.getpostman.com/2014/01/27/enabling-chrome-developer-tools-inside-postman/ for the chrome app, view->toggle dev tools for the mac app : 8. screenshots if applicable steps to reproduce the problem: it seems postman ignores the failures if there is 1<= passed test after the failed assertion. i.e: assertion a a=true assertion b=false must fails the test assertion c c=true the final outcome of the postman test must be false because b failed. but postman shows the final results as passed because it looks at c which was true as the last line of the test which is wrong and the test easily ignores any bug and marks the test as successfull. some guidelines: 1. please file newman-related issues at https://github.com/postmanlabs/newman/issues 2. if it’s a cloud-related issue, or you want to include personal information like your username / collection names, mail us at help@getpostman.com 3. if it’s a question anything along the lines of “how do i … in postman” , the answer might lie in our documentation - http://getpostman.com/docs. \n",

"\n",

"Original Title:\n",

" postman is skiping the failed assestions if the last assersion passes\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" feature request : add support for multiple devices\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 120461 =================\n",

"\n",

"\"https://github.com/libgraviton/gdk-java/issues/23\"\n",

"Issue Body:\n",

" with 12 rql support was introduced for string and date fields. since the rql syntax varies depending on the field type, integer and float and boolean are currently not supported, since they get treated as regular string fields. lets have a look at a typical query against a string field _fieldname_ with the value _value_ ?eq fieldname,string:value in this case the string: prefix is not required. it has the same result as ?eq fieldname,value but lets look at another example again a string field ?eq fieldname,string:20 at this point the string: prefix is required, since the graviton rql parser needs to know it's dealing with a string. omitting string: would lead to an empty result on the other hand, if we look at an integer field ?eq integerfieldname,string:20 would lead to an empty result. in this case the query needs to look like ?eq integerfieldname,string:20 the part that needs changing is https://github.com/libgraviton/gdk-java/blob/develop/gdk-core/src/main/java/com/github/libgraviton/gdk/api/query/rql/rql.java l141 where currently every field is always treated as string. \n",

"\n",

"Original Title:\n",

" integer, float and boolean support for rql\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" support for numeric type\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 3333 =================\n",

"\n",

"\"https://github.com/jpvillaisaza/hangman/issues/15\"\n",

"Issue Body:\n",

" losing a game and then restarting shouldn't count as two more games. just one, thanks. \n",

"\n",

"Original Title:\n",

" fix total number of games\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" game crashes when game is running\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 133450 =================\n",

"\n",

"\"https://github.com/vector-im/riot-meta/issues/28\"\n",

"Issue Body:\n",

" placeholder overarching issue to track progress on: general ux polish should probably be decomposed further. \n",

"\n",

"Original Title:\n",

" general ux polish\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" add more info to the ui\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 111482 =================\n",

"\n",

"\"https://github.com/Viva-con-Agua/drops/issues/21\"\n",

"Issue Body:\n",

" currently, the view for defining the roles is very confusing. a search field for searching users has to be implemented and the role selection should be a little bit more user friendly. \n",

"\n",

"Original Title:\n",

" roles definition view\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" improve search for user roles\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 154925 =================\n",

"\n",

"\"https://github.com/srusskih/SublimeJEDI/issues/228\"\n",

"Issue Body:\n",

" i want edit my project config file. according to the readme , by default project config name is .sublime-project , so the project is the folder that holds the project py file? \n",

"\n",

"Original Title:\n",

" how to define a project ?\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" how to edit project name ?\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 18851 =================\n",

"\n",

"\"https://github.com/climategadgets/servomaster/issues/7\"\n",

"Issue Body:\n",

" adafruit dc & stepper motor hat for raspberry pi - mini kit https://www.adafruit.com/product/2348 provides a very reproducible and standard stepper controller solution for raspberry pi, it would be a shame not to support it. this enhancement is much more complicated than 6, though. steppers, unlike servos, do not have inherent limits, and if a stepper is used as a servo, there will have to be solutions put in place to allow limit detection limit switches and torque sensors, to name a couple . in addition, stepper positioning model discrete steps is different from servo positioning model floating point 0 to 1 with adjustable ranges and limits , so some extra work will need to be done. \n",

"\n",

"Original Title:\n",

" implement tb6612 driver for raspberry pi\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" rpi motor support\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 174664 =================\n",

"\n",

"\"https://github.com/cawilliamson/ansible-gpdpocket/issues/98\"\n",

"Issue Body:\n",

" first off, thanks for all the effort going into this, very promising. issue: trying to bootstrap an ubuntu-16.04.3 iso from within an existing ubuntu instance. running into an error, which appears to be when ansible starts getting involved. very possible i'm doing something wrong. e: can not write log is /dev/pts mounted? - posix_openpt 2: no such file or directory + grep -wq -- --nogit + echo 'skip pulling source from git' + cd /usr/src/ansible-gpdpocket + ansible_nocows=1 + ansible-playbook system.yml -e bootstrap=true -v warning : provided hosts list is empty, only localhost is available error! syntax error while loading yaml. the error appears to have been in '/usr/src/ansible-gpdpocket/roles/audio/tasks/main.yml': line 17, column 1, but may be elsewhere in the file depending on the exact syntax problem. the offending line appears to be: - name: create chtrt5645 directory ^ here play recap localhost : ok=23 changed=14 unreachable=0 failed=1 \n",

"\n",

"Original Title:\n",

" syntax error while loading yaml\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" bootstrap fails to mount in ubuntu\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 186883 =================\n",

"\n",

"\"https://github.com/prettydiff/prettydiff/issues/456\"\n",

"Issue Body:\n",

" right now a single language file handles all tasks for a given group of languages. these files need to be broken down into respective pieces: parser beautifier minifier analyzer this is a large architectural effort. fortunately the code is well segmented internally for separation of concerns, so the logic can be broken apart without impact to operational integrity. the challenge is largely administration to ensure all the pieces are included into each of the respective environments and pass data among each other appropriately. \n",

"\n",

"Original Title:\n",

" separate language files into their respective tasks\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" fix language handling for all languages\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 151593 =================\n",

"\n",

"\"https://github.com/koorellasuresh/UKRegionTest/issues/21568\"\n",

"Issue Body:\n",

" first from flow in uk south \n",

"\n",

"Original Title:\n",

" first from flow in uk south\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" first from flow in uk south\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 24718 =================\n",

"\n",

"\"https://github.com/sensorario/go-tris/issues/34\"\n",

"Issue Body:\n",

" move 1 simone : 5 move 2 computer : 2 move 3 simone : 9 move 4 computer : 1 move 5 simone : 3 move 6 computer : 6 move 7 simone : 8 move 8 computer : 7 move 9 simone : 4 \n",

"\n",

"Original Title:\n",

" in this case computer loose\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" move to * number *\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 2005 =================\n",

"\n",

"\"https://github.com/fossasia/susi_firefoxbot/issues/6\"\n",

"Issue Body:\n",

" actual behaviour only text response from the server is shown expected behaviour support different types of responses like images, links, tables etc. would you like to work on the issue ? yes \n",

"\n",

"Original Title:\n",

" support for different types of responses from server\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" support for different types of response\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 144769 =================\n",

"\n",

"\"https://github.com/reallyenglish/ansible-role-poudriere/issues/8\"\n",

"Issue Body:\n",

" the role clones a remote git repository, which takes time to clone. to make the test faster, create a small, but functional repository in the role, and use it for the test. \n",

"\n",

"Original Title:\n",

" create minimal ports tree for the test\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" add a test to the repo\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 148842 =================\n",

"\n",

"\"https://github.com/felquis/HTJSON/issues/2\"\n",

"Issue Body:\n",

" firstly - thanks for making this, i had the same idea. but i would do it slightly differently. exactly 2 differerences. 1. i'd make content an array 2. i'd more the objects inside attr down a level and get rid of it. thus content would be an attribute. for example, instead of : var template = { a : { attr : { href : http://your-domain.com/images/any-image.jpg }, content: { link name } } }; it'd be: var template = a : { href : http://your-domain.com/images/any-image.jpg , content : some text , { img : { src: http://whatever.jpg }, some more text } ; 1. makes it more compact, without losing any document structure information 2. makes it more versatile, and, in fact, makes it complete - it can then encode any html document. \n",

"\n",

"Original Title:\n",

" shouldn't content be an array? is attr really neccessary?\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" content - type : attribute\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 83915 =================\n",

"\n",

"\"https://github.com/rrdelaney/ava-rethinkdb/issues/3\"\n",

"Issue Body:\n",

" when i run the ava-rethinkdb it works but when i ran it through travis ci i get error: spawn rethinkdb enoent is there something i am doing wrong or need to add for ci build? \n",

"\n",

"Original Title:\n",

" error: spawn rethinkdb enoent\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" spawn enoent on ci\n",

"\n",

"\n",

"==============================================\n",

"============== Example # 22941 =================\n",

"\n",

"\"https://github.com/cartalyst/stripe/issues/90\"\n",

"Issue Body:\n",

" i am using your latest release 2.0.9 but that release does not include the payout file. kidnly upload the latest release that has the payout work. \n",

"\n",

"Original Title:\n",

" payout file is missing in latest release.\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" release * number*.1 missing\n"

]

}

],

"source": [

"# this method displays the predictions on random rows of the holdout set\n",

"seq2seq_inf.demo_model_predictions(n=50, issue_df=testdf)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Feature Extraction Demo"

]

},

{

"cell_type": "code",

"execution_count": 68,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# Read All 5M data points\n",

"all_data_df = pd.read_csv('github_issues.csv')\n",

"# Extract the bodies from this dataframe\n",

"all_data_bodies = all_data_df['body'].tolist()"

]

},

{

"cell_type": "code",

"execution_count": 70,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# transform all of the data using the ktext processor\n",

"all_data_vectorized = body_pp.transform_parallel(all_data_bodies)"

]

},

{

"cell_type": "code",

"execution_count": 71,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# save transformed data\n",

"with open('all_data_vectorized.dpkl', 'wb') as f:\n",

"    dpickle.dump(all_data_vectorized, f)"

]

},

{

"cell_type": "code",

"execution_count": 262,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"%reload_ext autoreload\n",

"%autoreload 2\n",

"from seq2seq_utils import Seq2Seq_Inference\n",

"seq2seq_inf_rec = Seq2Seq_Inference(encoder_preprocessor=body_pp,\n",

"                                    decoder_preprocessor=title_pp,\n",

"                                    seq2seq_model=seq2seq_Model)\n",

"recsys_annoyobj = seq2seq_inf_rec.prepare_recommender(all_data_vectorized, all_data_df)"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"### Example 1: Issues Installing Python Packages"

]

},

{

"cell_type": "code",

"execution_count": 223,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"==============================================\n",

"============== Example # 13563 =================\n",

"\n",

"\"https://github.com/bnosac/pattern.nlp/issues/5\"\n",

"Issue Body:\n",

" thanks for your package, i can't wait to use it. unfortunately i have issues with the installation. prerequisite is 'first install python version 2.5+ not version 3 '. so this package cant be used with version 3.6 64bit that i have installed? i nevertheless tried to install it using pip, conda is not supported? but got an error: 'syntaxerror: missing parentheses in call to 'print''. besides when i try to run the library in r version 3.3.3. 64 bit i got errors with can_find_python_cmd required_modules = pattern.db : 'error in find_python_cmd......' pattern seems to be written in python but must be used in r, why cant it be used in python? i found another python pattern application that apparently does the same in python: https://pypi.python.org/pypi/pattern how is this related? \n",

"\n",

"Original Title:\n",

" error installation python\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" install with python * number *\n",

"\n",

"**** Similar Issues (using encoder embedding) ****:\n",

"\n"

]

},

{

"data": {

"text/html": [

" \n",

"222304 i'd love to be able to add a link at the bottom of the page for my github account. however, the sns-share option doesn't currently seem to be able to do this. \n",

"153327 sometimes people share files via g drive. provided a link this app can show some info about the files but doesn't show the download button. i hope that it can be fixed and users would be able to download files with this app. \n",

"\n",

" dist \n",

"250423 0.748828 \n",

"222304 0.774398 \n",

"153327 0.778953 "

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"seq2seq_inf_rec.demo_model_predictions(n=1, issue_df=testdf, threshold=1)"

]

},

{

"cell_type": "code",

"execution_count": 78,

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"True"

]

},

"execution_count": 78,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# in case you need to reset the rec system\n",

"# seq2seq_inf_rec.set_recsys_annoyobj(recsys_annoyobj)\n",

"# seq2seq_inf_rec.set_recsys_data(all_data_df)\n",

"\n",

"# save object\n",

"recsys_annoyobj.save('recsys_annoyobj.pkl')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.2"

},

"toc": {

"nav_menu": {

"height": "263px",

"width": "352px"

},

"number_sections": true,

"sideBar": true,

"skip_h1_title": false,

"title_cell": "Table of Contents",

"title_sidebar": "Contents",

"toc_cell": true,

"toc_position": {},

"toc_section_display": true,

"toc_window_display": false

}

},

"nbformat": 4,

"nbformat_minor": 2

}
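The `dist` column in the "Similar Issues (using encoder embedding)" tables ranks other issues by the distance between their encoder embeddings and the query issue's embedding, smaller meaning more similar. The saved `recsys_annoyobj` is presumably an Annoy index (its `save` call returns `True`), but this notebook does not show which metric `prepare_recommender` configures. Assuming Annoy's `angular` metric, which Annoy defines as sqrt(2(1 - cos(u, v))), the minimal sketch below reproduces that kind of ranking by brute force. The helper names `angular_distance` and `nearest_issues` and the toy vectors are hypothetical and are not part of `seq2seq_utils`.

```python
import math
from typing import Dict, List, Sequence, Tuple


def angular_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Annoy-style angular distance: sqrt(2 * (1 - cos(u, v))).

    Equals the Euclidean distance between the two vectors after L2
    normalisation. Assumes neither vector is all zeros.
    """
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_sim = dot / (norm_u * norm_v)
    # Clamp so rounding error cannot push the cosine outside [-1, 1].
    cos_sim = max(-1.0, min(1.0, cos_sim))
    return math.sqrt(2.0 * (1.0 - cos_sim))


def nearest_issues(
    query: Sequence[float],
    embeddings: Dict[int, Sequence[float]],
    k: int = 3,
) -> List[Tuple[int, float]]:
    """Return the k (row_id, dist) pairs closest to `query`, nearest first."""
    scored = [(row_id, angular_distance(query, vec)) for row_id, vec in embeddings.items()]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


# Hypothetical 3-d "embeddings" keyed by dataframe row id.
toy_embeddings = {
    101: [1.0, 0.0, 0.0],
    102: [0.0, 1.0, 0.0],
    103: [1.0, 1.0, 0.0],
}

for row_id, dist in nearest_issues([1.0, 0.2, 0.0], toy_embeddings, k=2):
    print(row_id, round(dist, 4))
```

Annoy answers the same kind of query approximately, searching a forest of random-projection trees instead of scanning every vector, which is what makes lookups over millions of issue embeddings practical.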


],

"text/plain": [

" issue_url \\\n",

"286906 \"https://github.com/scikit-hep/root_numpy/issues/337\" \n",

"314005 \"https://github.com/andim/noisyopt/issues/4\" \n",

"48120 \"https://github.com/turi-code/SFrame/issues/389\" \n",

"\n",

" issue_title \\\n",

"286906 root 6.10/02 and root_numpy compatibility \n",

"314005 joss review: installing dependencies via pip \n",

"48120 python 3.6 compatible \n",

"\n",

" body \\\n",

"286906 i am trying to pip install root_pandas and one of the dependency is root_numpy however some weird reasons i am unable to install it even though i can import root in python. i am working on python3.6 as i am more comfortable with it. is root_numpy is not yet compatible with the latest root? \n",

"314005 hi, i'm trying to install noisyopt in a clean conda environment running python 3.5. running pip install noisyopt does not install the dependencies numpy, scipy . i see that you do include a requires keyword argument in your setup.py file, does this need to be install_requires ? as in https://packaging.python.org/requirements/ . also, not necessary if you don't want to, but i think it would be good to include a list of dependences somewhere in the readme. \n",

"48120 hi: i tried to install sframe using pip and conda but i can not find anything that will work with python 3.6? has sframe been updated to work with python 3.6 yet? thanks, drew \n",

"\n",

" dist \n",

"286906 0.694671 \n",

"314005 0.698265 \n",

"48120 0.718715 "

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"seq2seq_inf_rec.demo_model_predictions(n=1, issue_df=testdf, threshold=1)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Example 2: Issues asking for feature improvements"

]

},

{

"cell_type": "code",

"execution_count": 226,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"==============================================\n",

"============== Example # 157322 =================\n",

"\n",

"\"https://github.com/Chingu-cohorts/devgaido/issues/89\"\n",

"Issue Body:\n",

" right now, your profile link is https://devgaido.com/profile. this is fine, but it would be really cool if there was a way to share your profile with other people. on my portfolio, i have social media buttons to freecodecamp, github, ect. without a custom link, i cannot show-off what i have done on devgaido to future employers. \n",

"\n",

"Original Title:\n",

" feature request: sharable profile.\n",

"\n",

"****** Machine Generated Title (Prediction) ******:\n",

" add a link to your profile\n",

"\n",

"**** Similar Issues (using encoder embedding) ****:\n",

"\n"

]

},

{

"data": {

"text/html": [


],

"text/plain": [

" issue_url \\\n",

"250423 \"https://github.com/ParabolInc/action/issues/1379\" \n",

"222304 \"https://github.com/viosey/hexo-theme-material/issues/166\" \n",

"153327 \"https://github.com/tobykurien/GoogleApps/issues/31\" \n",

"\n",

" issue_title \\\n",

"250423 integrations list view discoverability \n",

"222304 allow us to use sns-share for github \n",

"153327 drive provide download ability \n",

"\n",

" body \\\n",

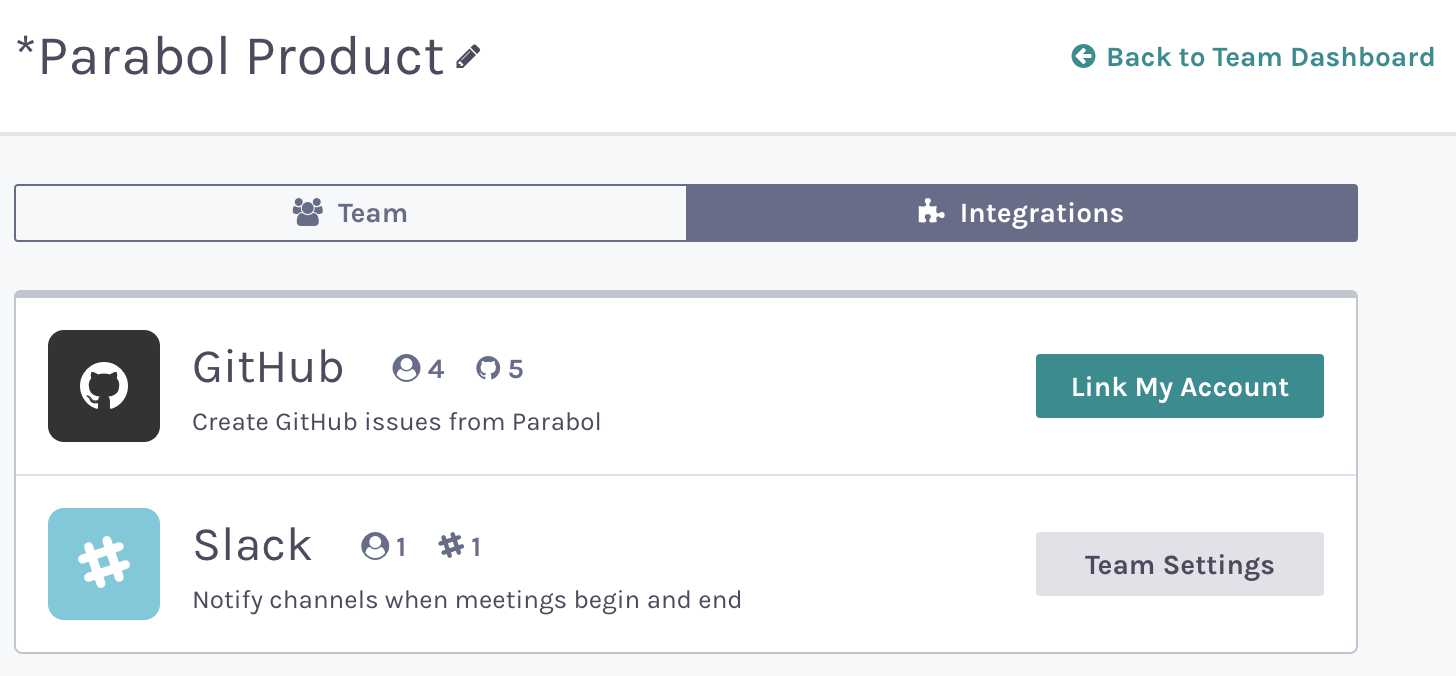

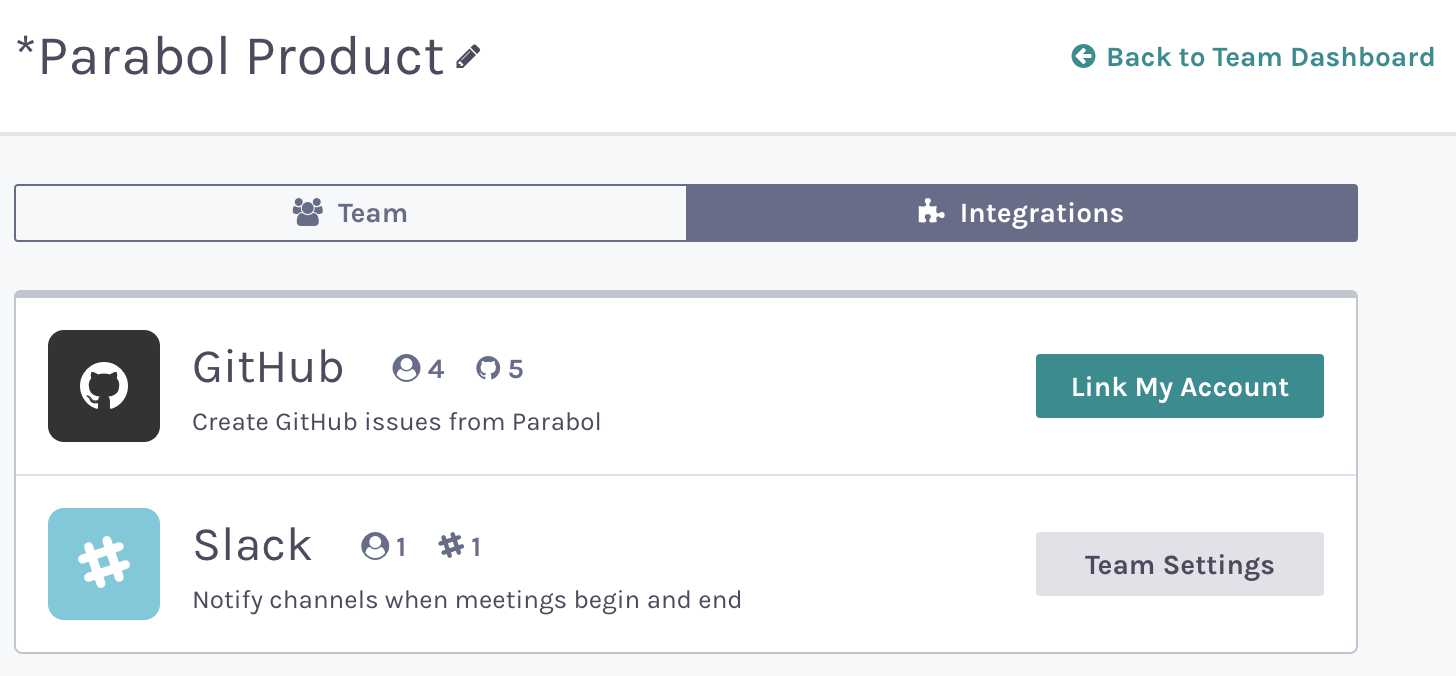

"250423 issue - enhancement i was initially confused by the link to my account copy; seeing github in the integrations list made me think it had already been set up . i realize now that i had to allow parabol to post as me. i think that link to my account could use a tooltip explaining what link means, and why you'd want to do so. | \n", " | issue_url | \n", "issue_title | \n", "body | \n", "dist | \n", "

|---|---|---|---|---|

| 250423 | \n", "\"https://github.com/ParabolInc/action/issues/1379\" | \n", "integrations list view discoverability | \n", "issue - enhancement i was initially confused by the link to my account copy; seeing github in the integrations list made me think it had already been set up . i realize now that i had to allow parabol to post as me. i think that link to my account could use a tooltip explaining what link means, and why you'd want to do so. <img width= 728 alt= screen shot 2017-09-29 at 10 52 05 am src= https://user-images.githubusercontent.com/2146312/31024786-2fd39c46-a50e-11e7-9f2a-6d4a5ed2baeb.png > | \n", "0.748828 | \n", "

| 222304 | \n", "\"https://github.com/viosey/hexo-theme-material/issues/166\" | \n", "allow us to use sns-share for github | \n", "i'd love to be able to add a link at the bottom of the page for my github account. however, the sns-share option doesn't currently seem to be able to do this. | \n", "0.774398 | \n", "

| 153327 | \n", "\"https://github.com/tobykurien/GoogleApps/issues/31\" | \n", "drive provide download ability | \n", "sometimes people share files via g drive. provided a link this app can show some info about the files but doesn't show the download button. i hope that it can be fixed and users would be able to download files with this app. | \n", "0.778953 | \n", "

\n",

"222304 i'd love to be able to add a link at the bottom of the page for my github account. however, the sns-share option doesn't currently seem to be able to do this. \n",

"153327 sometimes people share files via g drive. provided a link this app can show some info about the files but doesn't show the download button. i hope that it can be fixed and users would be able to download files with this app. \n",

"\n",

" dist \n",

"250423 0.748828 \n",

"222304 0.774398 \n",

"153327 0.778953 "

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"seq2seq_inf_rec.demo_model_predictions(n=1, issue_df=testdf, threshold=1)"

]

},

{

"cell_type": "code",

"execution_count": 78,

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"True"

]

},

"execution_count": 78,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# in case you need to reset the rec system\n",

"# seq2seq_inf_rec.set_recsys_annoyobj(recsys_annoyobj)\n",

"# seq2seq_inf_rec.set_recsys_data(all_data_df)\n",

"\n",

"# save object\n",

"recsys_annoyobj.save('recsys_annoyobj.pkl')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.2"

},

"toc": {

"nav_menu": {

"height": "263px",

"width": "352px"

},

"number_sections": true,

"sideBar": true,

"skip_h1_title": false,

"title_cell": "Table of Contents",

"title_sidebar": "Contents",

"toc_cell": true,

"toc_position": {},

"toc_section_display": true,

"toc_window_display": false

}

},

"nbformat": 4,

"nbformat_minor": 2

}
