{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"**Test 2 Review**\n",

"\n",

"- l10 -> end\n",

"- sentiment analysis\n",

" - lexicons\n",

" - wordnet\n",

" - how to combine words with different sentiment?\n",

"- classification\n",

" - workflow (collect, label, train, test, etc)\n",

" - computing feature vectors\n",

" - generalization error\n",

" - variants of SimpleMachine\n",

" - computing cross-validation accuracy (why?)\n",

" - bias vs. variance\n",

"- demographics\n",

" - pitfalls of using name lists\n",

" - computing odds ratio\n",

" - smoothing\n",

" - ngrams\n",

" - tokenization\n",

" - stop words\n",

" - regularization (why?)\n",

"- logistic regression, linear regression\n",

" - no need to do calculus, but do you understand the formula?\n",

" - apply classification function given data/parameters\n",

" - what does the gradient represent?\n",

"- feature representation\n",

" - tf-idf\n",

" - csr_matrix: how does this data structure work? (data, column index, row pointer)\n",

"- recommendation systems\n",

" - content-based\n",

" - tf-idf\n",

" - cosine similarity\n",

" - collaborative filtering\n",

" - user-user\n",

" - item-item\n",

" - measures: jaccard, cosine, pearson. Why choose one over another\n",

" - How to compute the recommended score for a specific item?\n",

"- k-means\n",

" - compute cluster assignment, means, and error function\n",

" - what effect does k have?\n",

" - representing word context vectors\n"

]

},

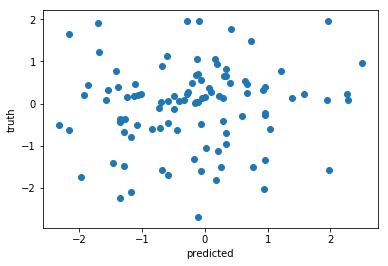

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Project tips\n",

"\n",

"So you've collected data, implemented a baseline, and have an F1 of 78%. \n",

"**Now what??**\n",

"\n",

"- Error analysis\n",

"- Check for data biases\n",

"- Over/under fitting\n",

"- Parameter tuning\n",

"\n",

"## Reminder: train/validation/test splits\n",

"\n",

"- Training data\n",

" - To fit model\n",

" - May use cross-validation loop\n",

" \n",

"- Validation data\n",

" - To evaluate model while debugging/tuning\n",

" \n",

"- Testing data\n",

" - Evaluate once at the end of the project\n",

" - Best estimate of accuracy on some new, as-yet-unseen data\n",

" - be sure you are evaluating against **true** labels \n",

" - e.g., not the output of some other noisy labeling algorithm\n",

" \n",

" \n",

"## Error analysis\n",

"\n",

"What did you get wrong and why?\n",

"\n",

"- Fit model on all training data\n",

"- Predict on validation data\n",

"- Collect and categorize errors\n",

" - false positives\n",

" - false negatives\n",

"- Sort by:\n",

" - Label probability\n",

" \n",

"A useful diagnostic:\n",

"- Find the top 10 most wrong predictions\n",

" - I.e., probability of incorrect label is near 1\n",

"- For each, print the features that are \"most responsible\" for decision\n",

"\n",

"E.g., for logistic regression\n",

"\n",

"$$\n",

"p(y \\mid x) = \\frac{1}{1 + e^{-x^T \\theta}}\n",

"$$\n",

"\n",

"If true label was $-1$, but classifier predicted $+1$, sort features in descending order of $x_j * \\theta_j$\n",

"\n",

"

\n",

"\n",

"Error analysis often helps designing new features.\n",

"- E.g., \"not good\" classified as positive because $x_{\\mathrm{good}} * \\theta_{\\mathrm{good}} >> 0$\n",

"- Instead, replace feature \"good\" with \"not_good\"\n",

" - similarly for other negation words\n",

" \n",

"May also discover incorrectly labeled data\n",

"- Common in classification tasks in which labels are not easily defined\n",

" - E.g., is the sentence \"it's not bad\" positive or negative?\n",

"\n",

"\n",

"For regression, make a scatter plot\n",

" - look at outliers\n",

" \n",

"\n",

"\n",

"## Inter-annotator agreement\n",

"\n",

"- How often do two human annotators give the same label to the same example?\n",

"\n",

"- E.g., consider two humans labeling 100 documents:\n",

"\n",

"\n",

"| | | **Person 1** |

\n",

"| | | Relevant | Not Relevant |

\n",

"| **Person 2** | Relevant | 50 | 20 |

\n",

" | Not Relevant | 10 | 20 |

\n",

"

\n",

"\n",

"\n",

"- Simple **agreement**: fraction of documents with matching labels. $\\frac{70}{100} = 70\\%$\n",

"\n",

"

\n",

"\n",

"- But, how much agreement would we expect by chance?\n",

"\n",

"- Person 1 says Relevant $60\\%$ of the time.\n",

"- Person 2 says Relevant $70\\%$ of the time.\n",

"- Chance that they both say relevant at the same time? $60\\% \\times 70\\% = 42\\%$.\n",

"\n",

"\n",

"- Person 1 says Not Relevant $40\\%$ of the time.\n",

"- Person 2 says Not Relevant $30\\%$ of the time.\n",

"- Chance that they both say not relevant at the same time? $40\\% \\times 30\\% = 12\\%$.\n",

"\n",

"\n",

"- Chance that they agree on any document (both say yes or both say no): $42\\% + 12\\% = 54\\%$\n",

"\n",

"** Cohen's Kappa ** $\\kappa$\n",

"\n",

"- Percent agreement beyond that expected by chance\n",

"\n",

"$ \\kappa = \\frac{P(A) - P(E)}{1 - P(E)}$\n",

"\n",

"- $P(A)$ = simple agreement proportion\n",

"- $P(E)$ = agreement proportion expected by chance\n",

"\n",

"\n",

"E.g., $\\kappa = \\frac{.7 - .54}{1 - .54} = .3478$\n",

"\n",

"- $k=0$ if no better than chance, $k=1$ if perfect agreement\n",

"\n",

"

\n",

"\n",

"## Data biases\n",

"\n",

"- How similar is the testing data to the training data?\n",

"\n",

"- If you were to deploy the system to run in real-time, would the data it sees be comparable to the testing data?\n",

"\n",

"Assumption that test/train data drawn from same distribution often wrong:\n",

"\n",

"Label shift:\n",

"\n",

"$p_{\\mathrm{train}}(y) \\ne p_{\\mathrm{test}}(y)$\n",

" - e.g., positive examples more likely in testing data\n",

" - Why does this matter?\n",

"\n",

"In logistic regression:\n",

"\n",

"$$\n",

"p(y \\mid x) = \\frac{1}{1 + e^{-(x^T\\theta + b)}}\n",

"$$\n",

"- bias term $b$ adjusts predictions to match overall $p(y)$\n",

" \n",

"

\n",

"\n",

"\n",

"## More bias\n",

"\n",

"Confounders\n",

"- Are there variables that predict the label that are not in the feature representation?\n",

" - e.g., some products have higher ratings than others; gender bias; location bias;\n",

" - May add additional features to model these attributes\n",

" - Or, may need to train separate classifiers for each gender/location/etc.\n",

" \n",

"

\n",

"\n",

"Temporal Bias\n",

"- Do testing instances come later, chronologically, than the training instances?\n",

" - E.g., we observe that user X likes Superman II, she probably also likes Superman I \n",

"- Why does this matter?\n",

" - inflates estimate of accuracy in production setting\n",

"\n",

"

\n",

"\n",

" Cross-validation splits \n",

"- E.g., classifying a user/organization's tweets: does the same user appear in both training/testing\n",

" - could just be learning a user-specific classifier; won't generalize to new user\n",

" - speech recognition\n",

"- Again, will inflate estimate of accuracy.\n",

"\n",

"\n",

"\n",

"## Over/under fitting\n",

"\n",

"What is training vs validation accuracy?\n",

"\n",

"- If training accuracy is low, we are probably underfitting. Consider:\n",

" - adding new features\n",

" - adding combinations of existing features (e.g., ngrams, conjunctions/disjunctions)\n",

" - adding hidden units/layers\n",

" - try non-linear classifier\n",

" - SVM, decision trees, neural nets\n",

" \n",

"- If training accuracy is very high (>99%), but validation accuracy is low, we are probably overfitting\n",

" - Do the opposite of above\n",

" - reduce number of features\n",

" - Regularization (L2, L1)\n",

" - Early stopping for gradient descent\n",

" - look at learning curves\n",

" - may need more training data\n",

" \n",

" \n",

" \n",

"## Parameter tuning\n",

"\n",

"Many \"hyperparameters\"\n",

"- regularization strength\n",

"- number of gradient descent iterations\n",

"- ...\n",

"\n",

"\n",

"- Be sure to tune these on the validation set, not the test set.\n",

"\n",

"

\n",

"\n",

"- Grid search\n",

" - Exhaustively search over all combinations\n",

" - Discretize continous variables\n",

" ```python \n",

" {'C': [.01, .1, 1, 10, 100, 1000], 'n_hidden': [5, 10, 50], 'regularizer': ['l1', 'l2']},\n",

" ```\n",

" \n",

"- Random search\n",

" - Define a probability distribution over each parameter\n",

" - Each iteration samples from that distribution\n",

" - Allows you to express prior knowledge over the likely best settings\n",

" - E.g.,\n",

" ```python\n",

" regularizer={'l1': .3, 'l2': .7}```\n",

" \n",

"See more at http://scikit-learn.org/stable/modules/grid_search.html\n",

"\n",

"\n",

"

\n",

"- While building model, may want to avoid evaluating on validation set too much\n",

"- **double cross-validation** can be used instead\n",

"\n",

"- Using only the training set:\n",

" - split into $k$ (train, test) splits\n",

" - for each split ($D_{tr}, D_{te}$)\n",

" - split $D_{tr}$ into $m$ splits\n",

" - pick hyperparameters that maximize this nested cross-validation accuracy\n",

" - train on all of $D_{tr}$ with those parameters\n",

" \n",

"Evaluates how well your hyperparameter selection algorithm does.\n",

"\n",

" \n"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.0"

}

},

"nbformat": 4,

"nbformat_minor": 2

}