**Pix2pix: [Project](https://phillipi.github.io/pix2pix/) | [Paper](https://arxiv.org/pdf/1611.07004.pdf) | [Torch](https://github.com/phillipi/pix2pix) | [Tensorflow Core Tutorial](https://www.tensorflow.org/tutorials/generative/pix2pix) | [PyTorch Colab](https://colab.research.google.com/github/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/pix2pix.ipynb)**

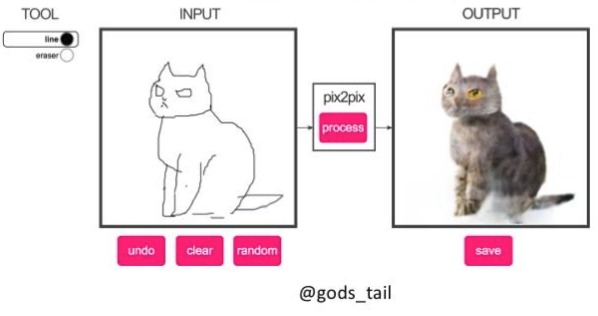

**[EdgesCats Demo](https://affinelayer.com/pixsrv/) | [pix2pix-tensorflow](https://github.com/affinelayer/pix2pix-tensorflow) | by [Christopher Hesse](https://twitter.com/christophrhesse)**

If you use this code for your research, please cite:

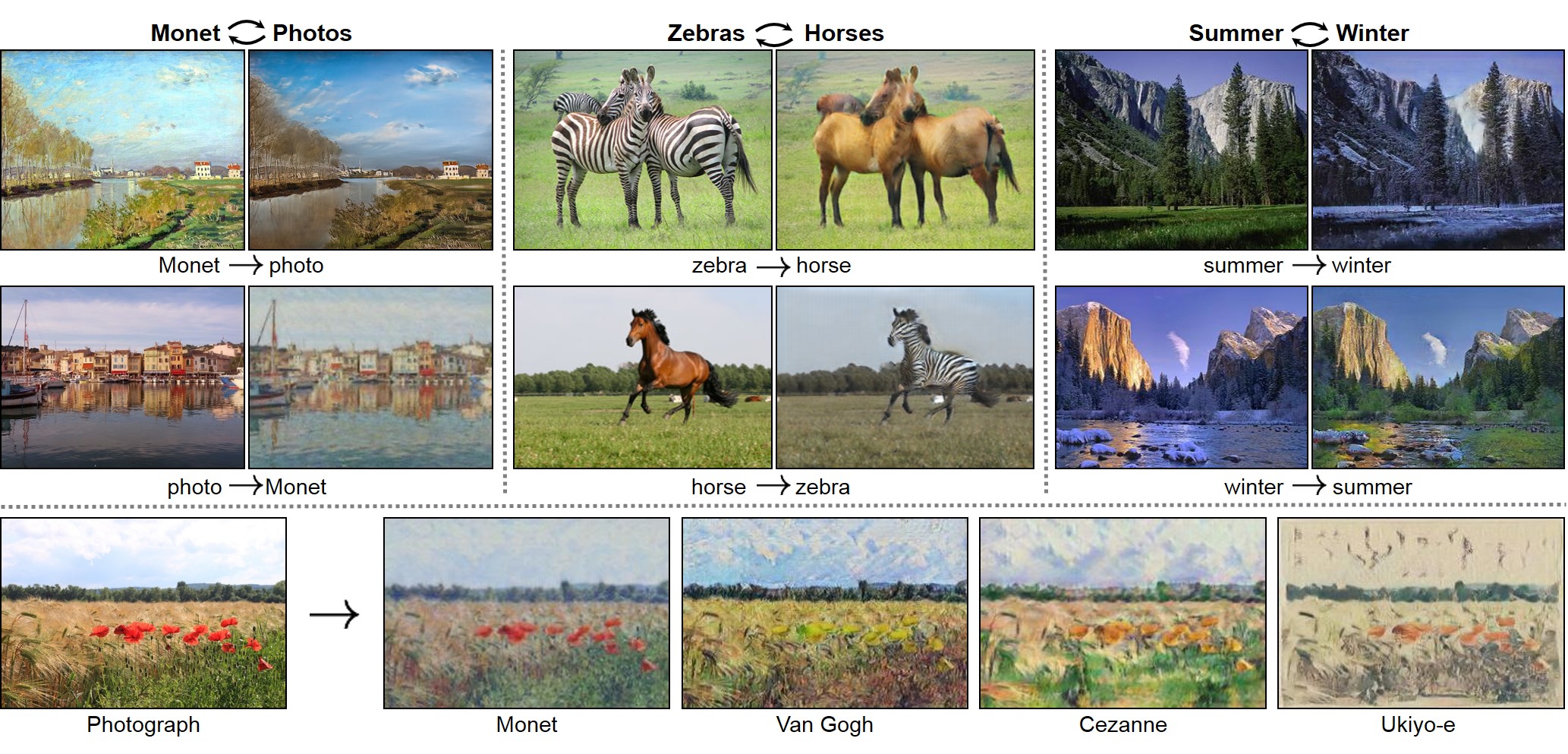

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

### CycleGAN

[Tensorflow] (by Harry Yang), [Tensorflow] (by Archit Rathore), [Tensorflow] (by Van Huy), [Tensorflow] (by Xiaowei Hu), [Tensorflow2] (by Zhenliang He), [TensorLayer1.0] (by luoxier), [TensorLayer2.0] (by zsdonghao), [Chainer] (by Yanghua Jin), [Minimal PyTorch] (by yunjey), [Mxnet] (by Ldpe2G), [lasagne/Keras] (by tjwei), [Keras] (by Simon Karlsson), [OneFlow] (by Ldpe2G)

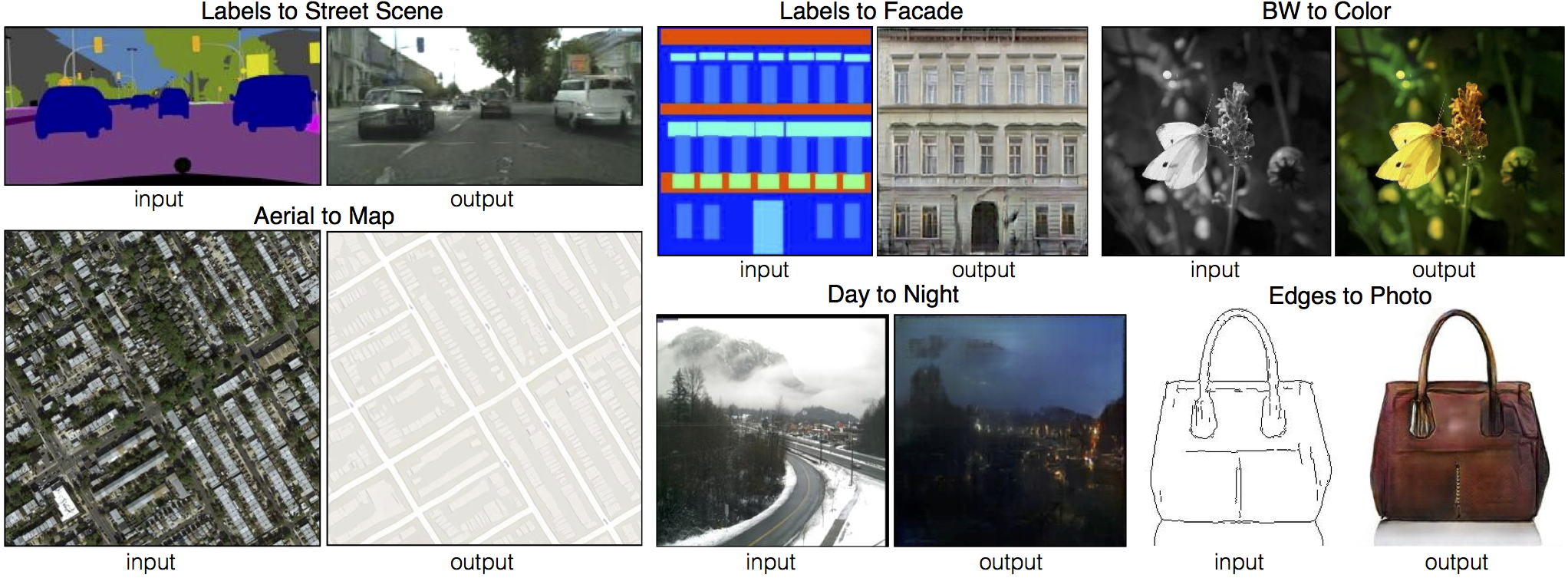

### pix2pix

[Tensorflow] (by Christopher Hesse), [Tensorflow] (by Eyyüb Sariu), [Tensorflow (face2face)] (by Dat Tran), [Tensorflow (film)] (by Arthur Juliani), [Tensorflow (zi2zi)] (by Yuchen Tian), [Chainer] (by mattya), [tf/torch/keras/lasagne] (by tjwei), [Pytorch] (by taey16)

## Prerequisites
- Linux or macOS
- Python 3
- CPU or NVIDIA GPU + CUDA CuDNN

## Getting Started
### Installation

- Clone this repo:
```bash
git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix
cd pytorch-CycleGAN-and-pix2pix
```

- Install [PyTorch](http://pytorch.org) and other dependencies. For Conda users, you can create a new Conda environment by
```bash
conda env create -f environment.yml
```
and then activate the environment by
```bash
conda activate pytorch-img2img
```

- For Docker users, we provide the pre-built Docker image and Dockerfile. Please refer to our [Docker](docs/docker.md) page.
- For Repl users, please click [this link](https://repl.it/github/junyanz/pytorch-CycleGAN-and-pix2pix).

### CycleGAN train/test
- Download a CycleGAN dataset (e.g. maps):
```bash
bash ./datasets/download_cyclegan_dataset.sh maps
```
- To log training progress and test images to a W&B dashboard, pass the `--use_wandb` flag to the training script.
- Train a model:
```bash
#!./scripts/train_cyclegan.sh
python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --use_wandb
```
To see more intermediate results, check out `./checkpoints/maps_cyclegan/web/index.html`.

- Test the model:
```bash
#!./scripts/test_cyclegan.sh
python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan
```
- The test results will be saved to an HTML file here: `./results/maps_cyclegan/test_latest/index.html`.

### pix2pix train/test
- Download a pix2pix dataset (e.g. [facades](http://cmp.felk.cvut.cz/~tylecr1/facade/)):
```bash
bash ./datasets/download_pix2pix_dataset.sh facades
```
- To log training progress and test images to a W&B dashboard, pass the `--use_wandb` flag to the training script.
- Train a model:
```bash
#!./scripts/train_pix2pix.sh
python train.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA --use_wandb
```
To see more intermediate results, check out `./checkpoints/facades_pix2pix/web/index.html`.

- Test the model (`bash ./scripts/test_pix2pix.sh`):
```bash
#!./scripts/test_pix2pix.sh
python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA
```
- The test results will be saved to an HTML file here: `./results/facades_pix2pix/test_latest/index.html`. You can find more scripts in the `scripts` directory.
- To train and test pix2pix-based colorization models, please add `--model colorization` and `--dataset_mode colorization`. See our training [tips](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/tips.md#notes-on-colorization) for more details.

### Apply a pre-trained model (CycleGAN)
- You can download a pretrained model (e.g. horse2zebra) with the following script:
```bash
bash ./scripts/download_cyclegan_model.sh horse2zebra
```
- The pretrained model is saved at `./checkpoints/{name}_pretrained/latest_net_G.pth`. Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_cyclegan_model.sh#L3) for all the available CycleGAN models.
- To test the model, you also need to download the horse2zebra dataset:
```bash
bash ./datasets/download_cyclegan_dataset.sh horse2zebra
```
- Then generate the results using
```bash
python test.py --dataroot datasets/horse2zebra/testA --name horse2zebra_pretrained --model test --no_dropout
```
- The option `--model test` generates CycleGAN results for one side only. It automatically sets `--dataset_mode single`, which loads the images from a single set. By contrast, `--model cycle_gan` loads images and generates results in both directions, which is sometimes unnecessary. The results will be saved at `./results/`. Use `--results_dir {directory_path_to_save_result}` to specify the results directory.
- For pix2pix and your own models, you need to explicitly specify `--netG`, `--norm`, `--no_dropout` to match the generator architecture of the trained model.
See this [FAQ](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/qa.md#runtimeerror-errors-in-loading-state_dict-812-671461-296) for more details.

### Apply a pre-trained model (pix2pix)
Download a pre-trained model with `./scripts/download_pix2pix_model.sh`.

- Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_pix2pix_model.sh#L3) for all the available pix2pix models. For example, to download the label2photo model trained on the Facades dataset:
```bash
bash ./scripts/download_pix2pix_model.sh facades_label2photo
```
- Download the pix2pix facades dataset:
```bash
bash ./datasets/download_pix2pix_dataset.sh facades
```
- Then generate the results using
```bash
python test.py --dataroot ./datasets/facades/ --direction BtoA --model pix2pix --name facades_label2photo_pretrained
```
- Note that we specified `--direction BtoA` because the Facades dataset's A to B direction is photos to labels.
- If you would like to apply a pre-trained model to a collection of input images (rather than image pairs), please use the `--model test` option. See `./scripts/test_single.sh` for how to apply a model to Facade label maps (stored in the directory `facades/testB`).
- See a list of currently available models at `./scripts/download_pix2pix_model.sh`

### Multi-GPU training
To train a model on multiple GPUs, please use `torchrun --nproc_per_node=4 train.py ...` instead of `python train.py ...`. We also need to use synchronized batchnorm by setting `--norm sync_batch` (or `--norm sync_instance` for instance normalization). The `--norm batch` option is not compatible with DDP.

## [Docker](docs/docker.md)
We provide the pre-built Docker image and Dockerfile that can run this code repo. See [docker](docs/docker.md).

## [Datasets](docs/datasets.md)
Download pix2pix/CycleGAN datasets and create your own datasets.

## [Training/Test Tips](docs/tips.md)
Best practice for training and testing your models.
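
As one illustration of the launch rule in the Multi-GPU training section, the sketch below picks between `python` and `torchrun` and switches to the synchronized norm variant when more than one GPU is requested. The helper name `build_train_cmd` and its argument order are assumptions made for this example and are not part of the repository. It only prints the command rather than running it.

```shell
#!/usr/bin/env bash
# Sketch only: build_train_cmd is a hypothetical helper, not part of this repo.
# It prints the launch command instead of executing it.
build_train_cmd() {
  local ngpu="$1"   # number of GPUs to train on
  local norm="$2"   # base normalization type: "batch" or "instance"
  shift 2           # remaining arguments are passed through to train.py

  if [ "$ngpu" -gt 1 ]; then
    # Multi-GPU: launch with torchrun and use the synchronized norm variant.
    echo "torchrun --nproc_per_node=${ngpu} train.py $* --norm sync_${norm}"
  else
    # Single GPU or CPU: plain python with the regular norm layer.
    echo "python train.py $* --norm ${norm}"
  fi
}

# Example: print the command for a 4-GPU CycleGAN run on the maps dataset.
build_train_cmd 4 instance --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan
```

Printing the command first makes it easy to check the flags before starting a long training run. Copy the printed line into the shell once it looks right.
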

## [Frequently Asked Questions](docs/qa.md)
Before you post a new question, please first look at the above Q & A and existing GitHub issues.

## Custom Model and Dataset
If you plan to implement custom models and datasets for your new applications, we provide a dataset [template](data/template_dataset.py) and a model [template](models/template_model.py) as a starting point.

## [Code structure](docs/overview.md)
To help users better understand and use our code, we briefly overview the functionality and implementation of each package and each module.

## Pull Request
You are always welcome to contribute to this repository by sending a [pull request](https://help.github.com/articles/about-pull-requests/).
Please run `flake8 --ignore E501 .` and `pytest scripts/test_before_push.py -v` before you commit the code. Please also update the code structure [overview](docs/overview.md) accordingly if you add or remove files.

## Citation
If you use this code for your research, please cite our papers.
```
@inproceedings{CycleGAN2017,
  title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},
  author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},
  booktitle={Computer Vision (ICCV), 2017 IEEE International Conference on},
  year={2017}
}

@inproceedings{isola2017image,
  title={Image-to-Image Translation with Conditional Adversarial Networks},
  author={Isola, Phillip and Zhu, Jun-Yan and Zhou, Tinghui and Efros, Alexei A},
  booktitle={Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on},
  year={2017}
}
```

## Other Languages
[Spanish](docs/README_es.md)

## Related Projects
[img2img-turbo](https://github.com/GaParmar/img2img-turbo)