{

"cells": [

{

"cell_type": "markdown",

"id": "771f658f",

"metadata": {},

"source": [

"# \"MLFlow on GCP for Experiment Tracking\"\n",

"> \"We will setup MLFlow on GCP for distributed experiment tracking.\"\n",

"\n",

"- toc: True\n",

"- branch: master\n",

"- badges: true\n",

"- comments: true\n",

"- categories: [mlops, data engineering]\n",

"- image: images/some_folder/your_image.png\n",

"- hide: false\n",

"- search_exclude: true"

]

},

{

"cell_type": "markdown",

"id": "0470eca1",

"metadata": {},

"source": [

"I searched over the internet and couldn't find a good step by step tutorial on setting up MLFlow on GCP. That's why I decided to write a tutorial on that. \n",

"\n",

"In this blog post, I will show the steps to setup MLFlow on Google Cloud for distributed experiment tracking. MLFlow can be used for machine learning experiment tracking. There are several ways to use MLFLow which you can check them [here](https://www.mlflow.org/docs/latest/tracking.html). \n",

"\n",

"\n",

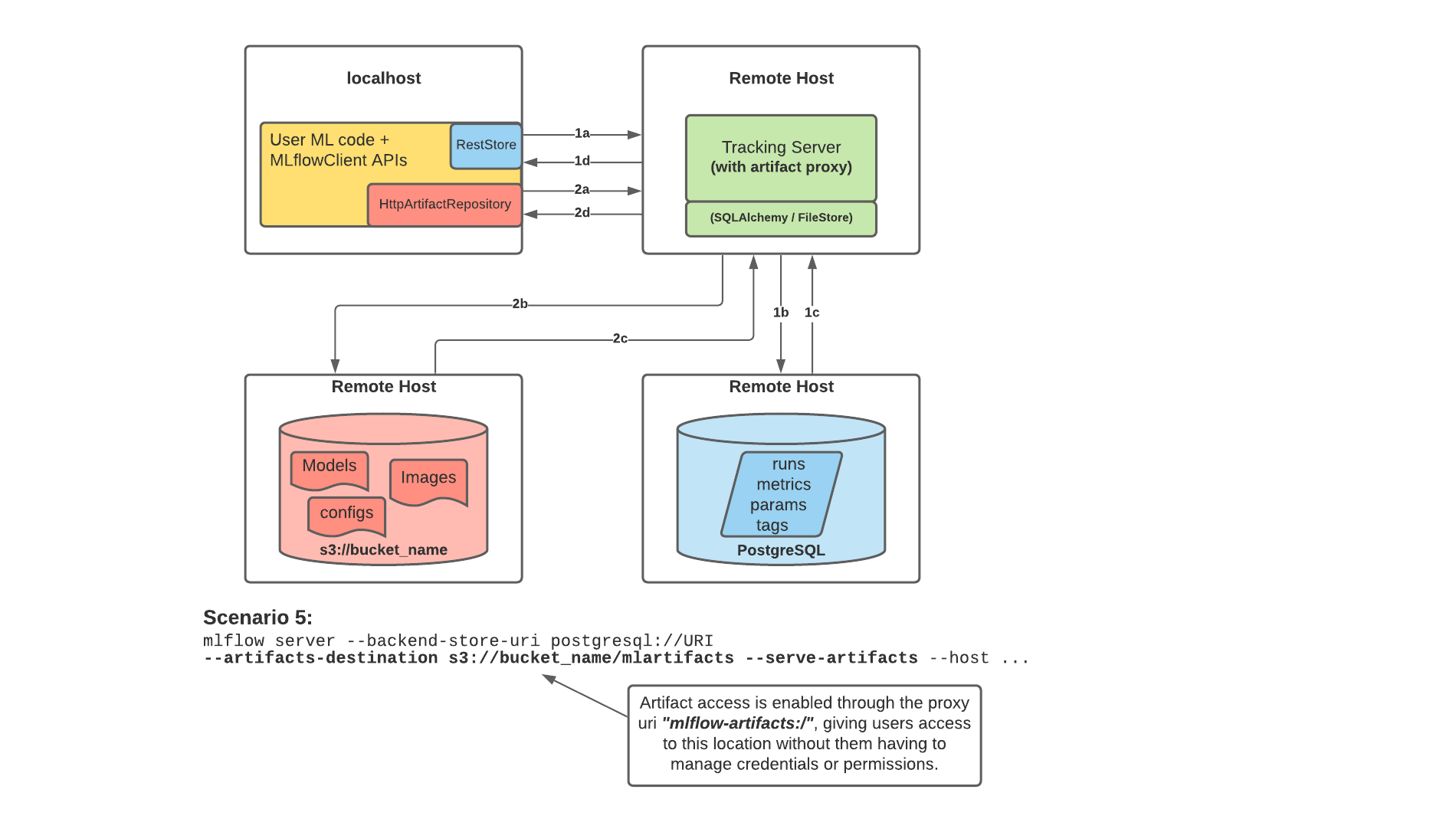

"The architecture that we want to implement here is like scenario number 5 and would be as the following image:\n",

"\n",

"\n",

"*[source](https://www.mlflow.org/docs/latest/tracking.html#scenario-5-mlflow-tracking-server-enabled-with-proxied-artifact-storage-access)*\n",

"\n",

"This is useful for teams with multiple data scientists to have one tracking server to be shared between all of them. So they all can do their experimentation and have everything in one place. The tracking server is not also dependent on the backend store and artifact store and can be scaled. In addition, the scientists will not lose their local data if they want to scale their machine or change it. Everything is decentralised.\n",

"\n",

"Here is the explanation from MLFLow documentation:\n",

"\n",

"\n",

"> MLflow’s Tracking Server supports utilizing the host as a proxy server for operations involving artifacts. Once configured with the appropriate access requirements, an administrator can start the tracking server to enable assumed-role operations involving the saving, loading, or listing of model artifacts, images, documents, and files. This eliminates the need to allow end users to have direct path access to a remote object store (e.g., s3, adls, gcs, hdfs) for artifact handling and eliminates the need for an end-user to provide access credentials to interact with an underlying object store.

\n",

"Enabling the Tracking Server to perform proxied artifact access in order to route client artifact requests to an object store location:

\n",

"**Part 1a and b:**

\n",

"The MLflow client creates an instance of a RestStore and sends REST API requests to log MLflow entities.

\n",

"The Tracking Server creates an instance of an SQLAlchemyStore and connects to the remote host for inserting tracking information in the database (i.e., metrics, parameters, tags, etc.)

\n",

"**Part 1c and d:**

\n",

"Retrieval requests by the client return information from the configured SQLAlchemyStore table

\n",

"**Part 2a and b:**

\n",

"Logging events for artifacts are made by the client using the HttpArtifactRepository to write files to MLflow Tracking Server

\n",

"The Tracking Server then writes these files to the configured object store location with assumed role authentication

\n",

"**Part 2c and d:**

\n",

"Retrieving artifacts from the configured backend store for a user request is done with the same authorized authentication that was configured at server start

\n",

"Artifacts are passed to the end user through the Tracking Server through the interface of the HttpArtifactRepository"

]

},

{

"cell_type": "markdown",

"id": "9e0a057d",

"metadata": {},

"source": [

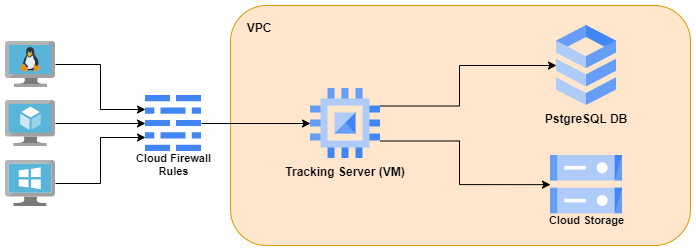

"In this distributed architecture, we will have :\n",

"- one virtual machine as tracking server\n",

"- one google storage bucket as artifact store - persists artifacts (files, models, images, in-memory objects, or model summary, etc).\n",

"- one PostgreSQL as backend store - persists MLflow entities (runs, parameters, metrics, tags, notes, metadata, etc).\n",

"\n",

"The architecture on GCP would be like the following image:\n",

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"id": "bd8db347",

"metadata": {},

"source": [

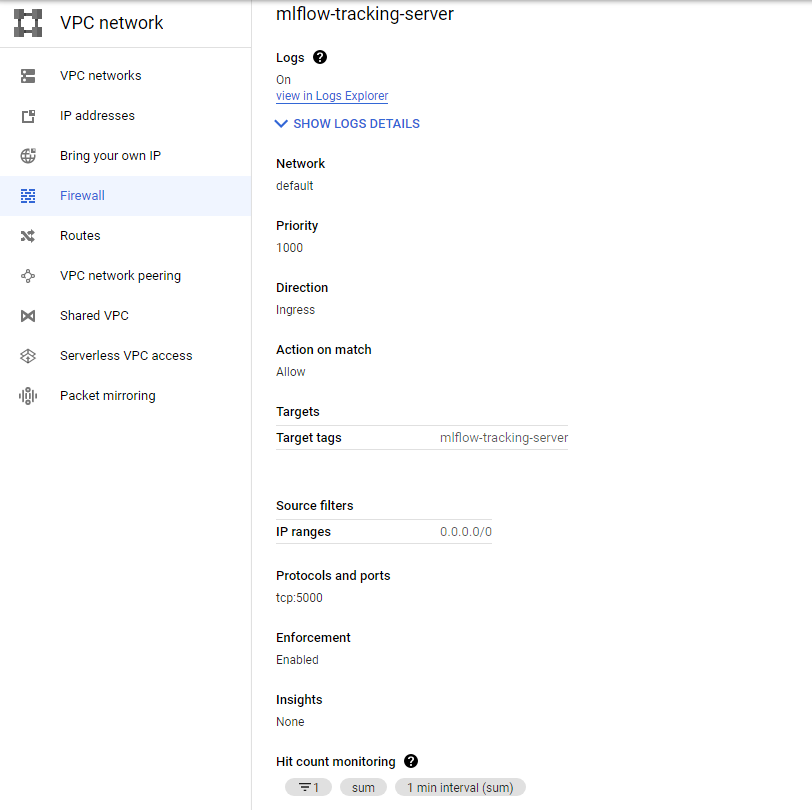

"# Virtual Machine as The Tracking Server\n",

"\n",

"We need a firewall rule which can be created like the following:\n",

"\n",

"```bash\n",

"gcloud compute firewall-rules create mlflow-tracking-server \\\n",

" --network default \\\n",

" --priority 1000 \\\n",

" --direction ingress \\\n",

" --action allow \\\n",

" --target-tags mlflow-tracking-server \\\n",

" --source-ranges 0.0.0.0/0 \\\n",

" --rules tcp:5000 \\\n",

" --enable-logging\n",

"```\n",

"\n",

"Here is the firewall rule after creation:\n",

"\n",

"\n",

"\n",

"We then can create a virtual instance as the tracking server. \n",

"\n",

"```bash\n",

"gcloud compute instances create mlflow-tracking-server \\\n",

" --project=PROJECT_ID \\\n",

" --zone=europe-west1-b \\\n",

" --machine-type=e2-standard-2 \\\n",

" --network-interface=network-tier=PREMIUM,subnet=default \\\n",

" --maintenance-policy=MIGRATE \\\n",

" --provisioning-model=STANDARD \\\n",

" --service-account=PROJECT_NUMBER-compute@developer.gserviceaccount.com \\\n",

" --scopes=https://www.googleapis.com/auth/cloud-platform \\\n",

" --tags=mlflow-tracking-server \\\n",

" --create-disk=auto-delete=yes,boot=yes,device-name=mlflow-tracking-server,image=projects/ubuntu-os-cloud/global/images/ubuntu-2004-focal-v20220610,mode=rw,size=10,type=projects/PROJECT_ID/zones/europe-west1-b/diskTypes/pd-balanced \\\n",

" --no-shielded-secure-boot \\\n",

" --shielded-vtpm \\\n",

" --shielded-integrity-monitoring \\\n",

" --reservation-affinity=any\n",

"```\n",

"\n",

"change `PROJECT_ID` based on your project. You can also change other configs like zone, machine, etc. if you want. Note that you have to change them in multiple places. The service account is the default service account for compute engine and is as follows:\n",

"\n",

"```bash\n",

"PROJECT_NUMBER-compute@developer.gserviceaccount.com\n",

"```\n",

"\n",

"Where `PROJECT_NUMBER` is the project number of the project that owns the service account. You can find it [here](https://cloud.google.com/resource-manager/docs/creating-managing-projects#identifying_projects).\n",

"\n",

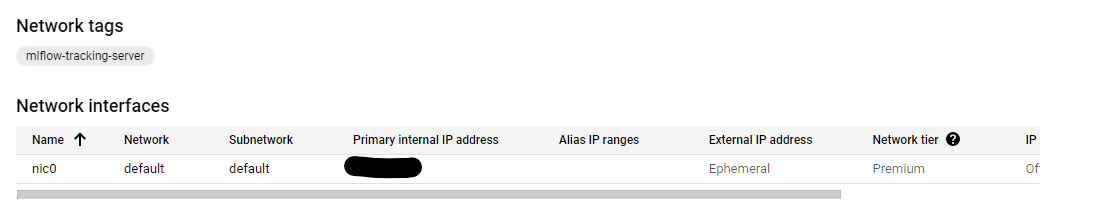

"You can also use the UI to simply create the virtual machine. Just make sure you use the `default` network for VPC and the created firewall rule for the `networks tags` in the `Network Interfaces` section. Also give the VM `Allow full access to all Cloud APIs`i in the Management -> availability policies section.\n",

"\n",

"Here is the networking section of the VM after creation (other configs can be based on your choice):\n",

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"id": "d25dfbb5",

"metadata": {},

"source": [

"# Database as the Backend Store\n",

"\n",

"We also need a PostgreSQL database as the backend store.\n",

"\n",

"- Go to GCP dashboard and search for SQL and then select `create instance` and the select `PostgreSQL`.\n",

"- Put a name and password for the instance. Select the Database version and region. You can choose one option for Zonal availability too. \n",

"- Expand the `Customize your instance` part, and in connections, select `Private IP` and deselect `Public IP` and from the drop down options for `Network` in `Private IP` part, select `default`. This is the VPC which our virtual machine should be also on it too. So the VM and DB can see eachother.\n",

"- You can change other configs for the DB too. I leave them as their default values.\n",

"- Select `Create Instance` option.\n",

"\n",

"It will take you to the overview page and will take some time to create the database instance. Then we can create a database. GCP will create a default one named `postgres`, but I will create a new one."

]

},

{

"cell_type": "markdown",

"id": "5ddd385d",

"metadata": {},

"source": [

"Then go to the `Databases` section and select `Create Database` and name it `mlflow_db`, for example.\n",

"\n",

"Then we need to create a user too. Go to the `User` section and click on the `Add User Account`. Select a username and password for that.\n",

"\n",

"Now, you should be able to connect to the tracking server via ssh and run the following command to install and then see the list of databases. You can see the created database with its private IP.\n",

"\n",

"```bash\n",

"sudo apt-get update\n",

"sudo apt-get install postgresql-client\n",

"gcloud sql instances list\n",

"``` \n",

"\n",

"Then run the following command to see if you can connect to the database.\n",

"\n",

"```bash\n",

"psql -h CLOUD_SQL_PRIVATE_IP_ADDRESS -U USERNAME DATABASENAME\n",

"```\n",

"\n",

"It will ask you for the password for the user you created before.\n",

"\n",

"Now that you can connect to the database from the tracking server using private IP, let's go to the next part."

]

},

{

"cell_type": "markdown",

"id": "a91709d5",

"metadata": {},

"source": [

"# Google Cloud Storage Bucket as Artifact Store\n",

"\n",

"In the google cloud dashboard, search for `cloud storage` and then select `Create Bucket`. Do the required configs and done. You can also create a folder like `mlruns` in the bucket."

]

},

{

"cell_type": "markdown",

"id": "d336eddb",

"metadata": {},

"source": [

"# Run the MLFlow Server on Tracking Server\n",

"\n",

"Now we have all the resources. Go back to the ssh terminal for the tracking server or connect to it again. I had some problems with installing the required python packages. So I created a virtual env and installed the packages there.\n",

"\n",

"```bash\n",

"sudo apt install python3.8-venv\n",

"python3 -m venv mlflow\n",

"source mlflow/bin/activate\n",

"pip install mlflow boto3 google-cloud-storage psycopg2-binary\n",

"```\n",

"\n",

"Then run the mlflow server:\n",

"```bash\n",

"mlflow server \\\n",

" -h 0.0.0.0 \\\n",

" -p 5000 \\\n",

" --backend-store-uri postgresql://:@:5432/ \\\n",

" --default-artifact-root gs:///\n",

"```\n",

"\n",

"Then you can go to `http::5000` address and you should see the mlflow UI!"

]

},

{

"cell_type": "markdown",

"id": "85c3e9f5",

"metadata": {},

"source": [

"Now, you can train a model on your machine or another VM and log mlflow data.\n",

"\n",

"```python\n",

"import mlflow\n",

"import os\n",

"\n",

"TRACKING_SERVER_HOST = \"\"\n",

"mlflow.set_tracking_uri(f\"http://{TRACKING_SERVER_HOST}:5000\")\n",

"\n",

"print(f\"tracking URI: '{mlflow.get_tracking_uri()}'\")\n",

"\n",

"from sklearn.linear_model import LogisticRegression\n",

"from sklearn.datasets import load_iris\n",

"from sklearn.metrics import accuracy_score\n",

"\n",

"mlflow.set_experiment(\"my-experiment-1\")\n",

"\n",

"with mlflow.start_run():\n",

"\n",

" X, y = load_iris(return_X_y=True)\n",

"\n",

" params = {\"C\": 0.1, \"random_state\": 42}\n",

" mlflow.log_params(params)\n",

"\n",

" lr = LogisticRegression(**params).fit(X, y)\n",

" y_pred = lr.predict(X)\n",

" mlflow.log_metric(\"accuracy\", accuracy_score(y, y_pred))\n",

"\n",

" mlflow.sklearn.log_model(lr, artifact_path=\"models\")\n",

" print(f\"default artifacts URI: '{mlflow.get_artifact_uri()}'\")\n",

" \n",

"mlflow.list_experiments()\n",

"```\n",

"\n",

"\n",

"Note that you need to install `google-cloud-storage` via pip on your machine.\n",

"\n",

"You should now see `my-experiment-1` in the output of the above code and also in UI (refresh the page if you don't see it).\n",

"\n",

"You can also assign a fixed external IP address for your tracking server. So you don't need to change it in the code everytime you start the VM. You can do this by going to the `IP addresses` section in `VPC network` as shown in the below image:\n",

"\n",

"\n",

"\n",

"Now if you check the `mlflow-tracking-server` VM, you should see the External IP even if the VM is stopped.\n",

"\n",

"That's it for this post. I will try to replace VM with cloud run in future posts. I also think about having a login UI that each user should sign in to access the mlflow UI."

]

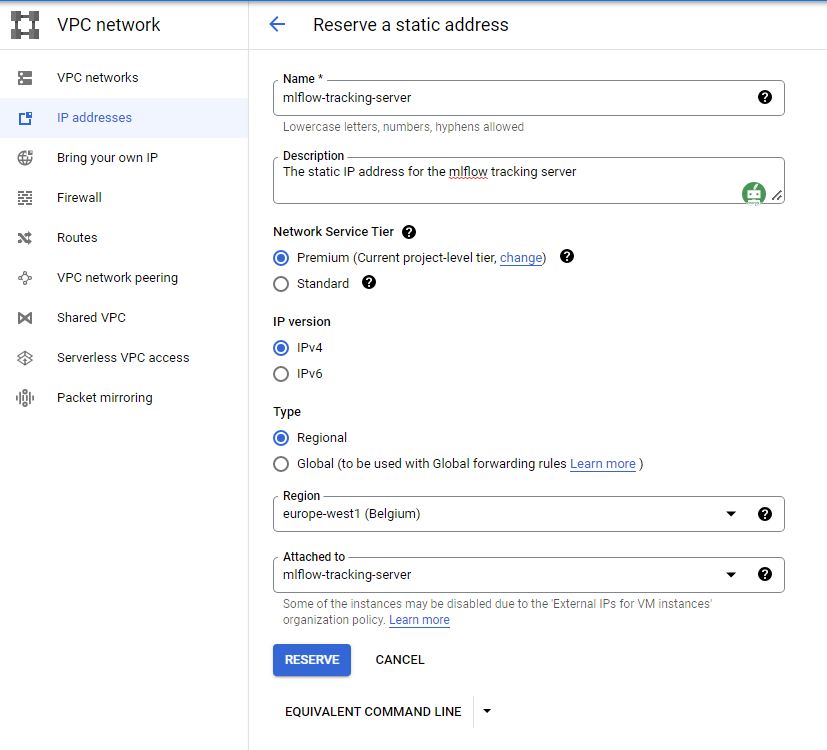

},

{

"cell_type": "code",

"execution_count": null,

"id": "d23b5a90",

"metadata": {},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.13"

}

},

"nbformat": 4,

"nbformat_minor": 5

}