Floating point arithmetics from the Commodore 64 in C++

This is an experiment in 1. using C++ operator overloading to implement software floating point arithmetics and 2. measuring the accuracy and performance of the Commodore 64's floating point library.

It takes the 6502 core I developed for my NES emulator, using it to emulate a Commodore 64 making calls to the kernel's floating point arithmetic routines.

The interface, a C++ class, can be used more or less like a regular float.

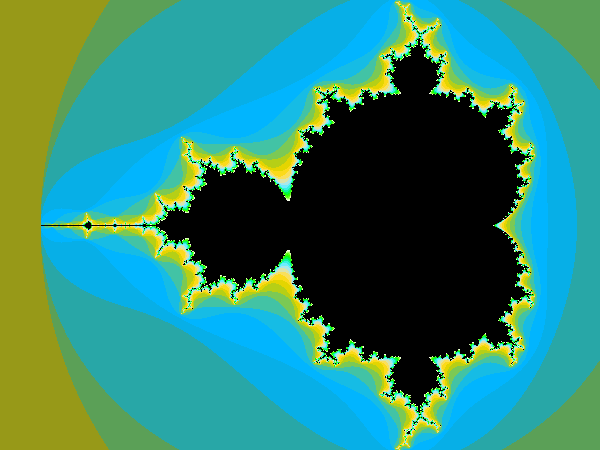

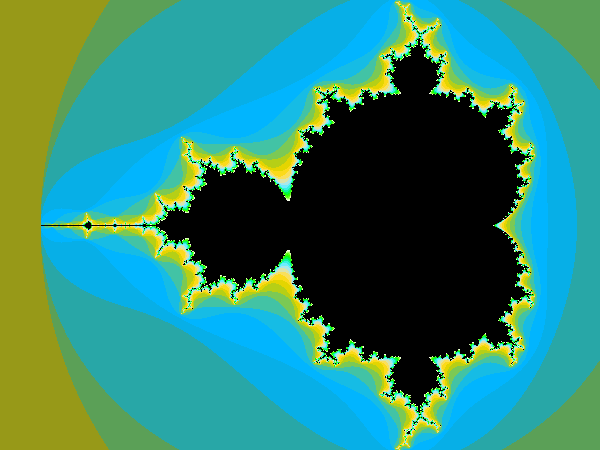

It is available at this github repository, which includes a mandelbrot generator demo, which was used to generate the image above.

The mandelbrot code is very similar to that for the gifcap library.

The interface

The main interface is just a class with a bunch of operators overloaded:

class C64Float

{

public:

C64Float operator *(const C64Float other) const;

C64Float operator +(const C64Float other) const;

C64Float operator -(const C64Float other) const;

C64Float operator /(const C64Float other) const;

bool operator >(const C64Float other) const;

bool operator ==(const C64Float other) const;

operator int() const;

C64Float operator -() const;

C64Float operator *=(const C64Float other){ *this = *this * other; return *this; }

C64Float operator +=(const C64Float other){ *this = *this + other; return *this; }

C64Float operator /=(const C64Float other){ *this = *this / other; return *this; }

C64Float operator -=(const C64Float other){ *this = *this - other; return *this; }

C64Float operator ++(){ *this += unit; return *this; }

C64Float operator --(){ *this -= unit; return *this; }

bool operator <(C64Float other) const{ return !((*this) == other) && !((*this) > other); }

C64Float(int i);

C64Float(double d);

};

class C64Float

{

//(slightly abridged)

public:

C64Float operator *(const C64Float other) const;

C64Float operator +(const C64Float other) const;

C64Float operator -(const C64Float other) const;

C64Float operator /(const C64Float other) const;

bool operator >(const C64Float other) const;

bool operator ==(const C64Float other) const;

operator int() const;

C64Float operator -() const;

C64Float operator *=(const C64Float other){ *this = *this * other; return *this; }

C64Float operator +=(const C64Float other){ *this = *this + other; return *this; }

C64Float operator /=(const C64Float other){ *this = *this / other; return *this; }

C64Float operator -=(const C64Float other){ *this = *this - other; return *this; }

C64Float operator ++(){ *this += unit; return *this; }

C64Float operator --(){ *this -= unit; return *this; }

bool operator <(C64Float other) const{ return !((*this) == other) && !((*this) > other); }

C64Float(int i);

C64Float(double d);

};

The lib also includes overloads for the common math.h/cmath functions (those which are available in the C64) and a user-defined literal thingamabob for convenience:

static C64Float round(C64Float f);

static C64Float abs(C64Float f);

static C64Float sqrt(C64Float f);

static C64Float atan(C64Float f);

static C64Float tan(C64Float f);

static C64Float exp(C64Float f);

static C64Float pow(C64Float f1, C64Float f2);

static C64Float log(C64Float f);

static C64Float sin(C64Float f);

static C64Float cos(C64Float f);

static C64Float log2(C64Float f);

static C64Float log10(C64Float f);

C64Float operator "" _C64F(const char *);

static C64Float round(C64Float f);

static C64Float abs(C64Float f);

static C64Float sqrt(C64Float f);

static C64Float atan(C64Float f);

static C64Float tan(C64Float f);

static C64Float exp(C64Float f);

static C64Float pow(C64Float f1, C64Float f2);

static C64Float log(C64Float f);

static C64Float sin(C64Float f);

static C64Float cos(C64Float f);

static C64Float log2(C64Float f);

static C64Float log10(C64Float f);

C64Float operator "" _C64F(const char *);

Internals

Internally it works by emulating a partial Commodore 64 as mentioned.

Modifying the code from the NES emulator to behave sufficiently like a Commodore 64 wasn't very difficult, since the core was sufficiently machine-agnostic.

Since actual IO is not necessary It is enough to map some addresses to ROM, and to simulate a copy of a routine to zero-page RAM which the Commodore 64 does on its boot code (which we don't use).

class C64Memory : public Memory

{

public:

uint8_t ram[64 * 1024];

static const uint8_t rom_kernal[8 * 1024];

static const uint8_t rom_basic[8 * 1024];

virtual MemoryByte operator[](std::size_t address)

{

MemoryByte mb(this);

mb.addr = address;

mb.wptr = ram + address;

mb.ptr = ram + address;

switch(address){

case 0xA000 ... 0xBFFF:

mb.ptr = rom_basic + address - 0xA000;

break;

case 0xE000 ... 0xFFFF:

mb.ptr = rom_kernal + address - 0xE000;

break;

default:

break;

}

return mb;

}

C64Memory() : ram{}

{

for(size_t src = 0xE3A2, dst = 0x73; src <= 0xE3BE; src++, dst++){

ram[dst] = (*this)[src];

}

}

};

class C64Memory : public Memory

{

public:

uint8_t ram[64 * 1024];

static const uint8_t rom_kernal[8 * 1024];

static const uint8_t rom_basic[8 * 1024];

virtual MemoryByte operator[](std::size_t address)

{

MemoryByte mb(this);

mb.addr = address;

mb.wptr = ram + address;

mb.ptr = ram + address;

switch(address){

case 0xA000 ... 0xBFFF:

mb.ptr = rom_basic + address - 0xA000;

break;

case 0xE000 ... 0xFFFF:

mb.ptr = rom_kernal + address - 0xE000;

break;

default:

break;

}

return mb;

}

//[...]

C64Memory() : ram{}

{

//Copy CHRGET

for(size_t src = 0xE3A2, dst = 0x73; src <= 0xE3BE; src++, dst++){

ram[dst] = (*this)[src];

}

}

};

Some small bugs occurred when I called the wrong routine addresses and before I realized I had to copy CHRGET as above.

To debug this, I used the VICE emulator's monitor and modified the 6502 logger to create output in the same style, allowing for easy comparison and quickly finding the divergence point.

Then, each operation is done by assembling and running a small 6502 program which calls the Kernal routines:

C64Float C64Float::operator *(const C64Float other) const

{

size_t addrFAC, addrARG;

return NewProg()

.getAddr(addrFAC)

.pushFloat(*this)

.getAddr(addrARG)

.pushFloat(other)

.begin()

.pushMOVFM(addrFAC)

.pushFMUL(addrARG)

.pushMOVMF(addrFAC)

.execute()

.retFloat(addrFAC);

}

C64Float C64Float::operator *(const C64Float other) const

{

size_t addrFAC, addrARG;

return NewProg()

.getAddr(addrFAC)

.pushFloat(*this)

.getAddr(addrARG)

.pushFloat(other)

.begin()

.pushMOVFM(addrFAC)

.pushFMUL(addrARG)

.pushMOVMF(addrFAC)

.execute()

.retFloat(addrFAC);

}

"NewProg" is just a convenience function to create new C64Prog class instances. C64Prog has several small methods which are strung together to assemble and execute a 6502 program.

C64Prog C64Prog::pushLDA(uint8_t val){ return pushBytes(0xA9, val); }

C64Prog C64Prog::pushAddrAY(size_t addr){ return pushLDA(addr & 0xFFu).pushLDY(addr >> 8u); }

C64Prog C64Prog::pushFMUL(size_t addr){ return pushAddrAY(addr).pushJSR(0xBA28); }

C64Prog C64Prog::pushLDA(uint8_t val){ return pushBytes(0xA9, val); }

C64Prog C64Prog::pushAddrAY(size_t addr){ return pushLDA(addr & 0xFFu).pushLDY(addr >> 8u); }

C64Prog C64Prog::pushFMUL(size_t addr){ return pushAddrAY(addr).pushJSR(0xBA28); }

Here, the magic constant 0xBA28 is the address of the kernal routine we want, which can be looked up in a Commodore 64 reference.

Each C64Float goes in and out as 5 bytes:

C64Prog C64Prog::pushFloat(const C64Float f)

{

for(size_t i = 0; i < sizeof(f.val); i++){

pushBytes(f.val[i]);

}

return *this;

}

C64Prog C64Prog::popFloat(size_t addr, C64Float &f)

{

for(size_t i = 0; i < sizeof(f.val); i++){

f.val[i] = ram[addr + i];

}

return *this;

}

C64Float C64Prog::retFloat(size_t addr)

{

C64Float f;

popFloat(addr, f);

return f;

}

C64Prog C64Prog::pushFloat(const C64Float f)

{

for(size_t i = 0; i < sizeof(f.val); i++){

pushBytes(f.val[i]);

}

return *this;

}

C64Prog C64Prog::popFloat(size_t addr, C64Float &f)

{

for(size_t i = 0; i < sizeof(f.val); i++){

f.val[i] = ram[addr + i];

}

return *this;

}

C64Float C64Prog::retFloat(size_t addr)

{

C64Float f;

popFloat(addr, f);

return f;

}

Those 5 bytes consist of an 8bit exponent, a 31bit mantissa and a sign bit, allowing the following conversion to regular floating point:

double C64FloatToDouble(C64Float f)

{

if(!f.val[0]) return 0.0;

unsigned int mantissa =

(((f.val[1] & 0x7F) | 0x80u) << 24) +

(f.val[2] << 16) +

(f.val[3] << 8) +

(f.val[4] << 0);

int exp = f.val[0];

exp -= 0x81;

return std::pow(2.0, exp) * double(mantissa) / double(0x80u << 24);

}

double C64FloatToDouble(C64Float f)

{

if(!f.val[0]) return 0.0;

unsigned int mantissa =

(((f.val[1] & 0x7F) | 0x80u) << 24) +

(f.val[2] << 16) +

(f.val[3] << 8) +

(f.val[4] << 0);

int exp = f.val[0];

exp -= 0x81;

return std::pow(2.0, exp) * double(mantissa) / double(0x80u << 24);

}

Performance and accuracy

The performance obviously is piss-poor. The mandelbrot code, the same unoptimized one from the gifcap demo, pushes it to its limits:

for(iY=0;iY<iYmax;iY++){

Cy=CyMin + floatType(iY) *PixelHeight;

if(abs(Cy)< PixelHeight/floatType(2)) Cy=f0;

for(iX=0,Cx=CxMin;iX<iXmax;iX++,Cx+=PixelWidth){

Zx=f0;

Zy=f0;

Zx2=Zx*Zx;

Zy2=Zy*Zy;

for(Iteration=0;Iteration<IterationMax && ((Zx2+Zy2)<ER2);Iteration++){

Zy=f2*Zx*Zy + Cy;

Zx=Zx2-Zy2 +Cx;

Zx2=Zx*Zx;

Zy2=Zy*Zy;

};

PutPixel(bmp, iX, iY, CalcColor(Iteration));

}

}

for(iY=0;iY<iYmax;iY++){

Cy=CyMin + floatType(iY) *PixelHeight;

if(abs(Cy)< PixelHeight/floatType(2)) Cy=f0; /* Main antenna */

for(iX=0,Cx=CxMin;iX<iXmax;iX++,Cx+=PixelWidth){

/* initial value of orbit = critical point Z= 0 */

Zx=f0;

Zy=f0;

Zx2=Zx*Zx;

Zy2=Zy*Zy;

/* */

for(Iteration=0;Iteration<IterationMax && ((Zx2+Zy2)<ER2);Iteration++){

Zy=f2*Zx*Zy + Cy;

Zx=Zx2-Zy2 +Cx;

Zx2=Zx*Zx;

Zy2=Zy*Zy;

};

PutPixel(bmp, iX, iY, CalcColor(Iteration));

}

}

It took 34 minutes to render the 600x450 image above this article, by emulating 27460104375 machine cycles of the 6502.

That would take 7 hours and a half in a real life Commodore 64.

Even though I limited the max iterations to a mere 25.

The floating-point accuracy, in this and other tests, is shown to be quite good.

Comparing to a regular "double" version of the code, the only change is in images with odd dimensions where the centre line will evaluate slightly different:

In other tests, I find the most inaccurate operation seems to be the multiplication.

Overall the accuracy is much like a regular double and way better than a float, as expected from the 31bit mantissa of the format.

Acknowledgments

The following resources were extremely useful when making this: