Overview

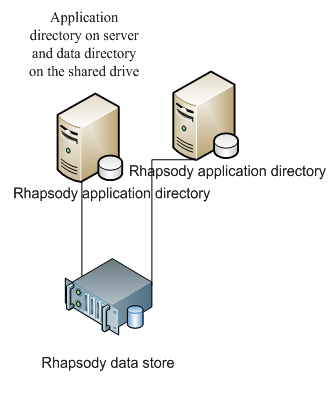

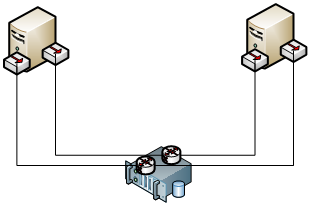

Rhapsody supports high availability (HA) using an active-passive setup. In an active-passive configuration, there are two servers connected to a shared data store (normally placed on a Storage Area Network).

- Rhapsody is running on the active node.

- Rhapsody is stopped (shut down) on the passive node.

In the event of a fault, the cluster software activates the passive node and starts Rhapsody. The new active node uses the same data store, so the configuration and any in-process messages are picked up as if Rhapsody had just performed a normal restart. All connections into Rhapsody are made through a virtual IP which always points to the active node.


Installation Options

There are two ways to install Rhapsody in an active-passive setup:

- Installing both the application directory and the data directory on a shared drive – the advantage of installing the application on a shared drive is that there is only one copy of Rhapsody to manage. When the application is installed locally on both servers, you need to be careful when editing configuration files in the application directory and ensure that changes are also made on the passive node. Proper change management processes should be adhered to when making any changes to Rhapsody configuration in both HA and non-HA environments. Refer to Installing With a Shared Application Drive for details.

- Installing only the data directory on a shared drive, and the application directory locally on both servers – the advantage of installing the application on a local drive is that you can perform a rolling upgrade. You upgrade the passive node while the active node is still running, which minimizes downtime. Once the passive node has been successfully upgraded, a fail-over can be induced so that the previously active node can be upgraded. The installer generally takes less than five minutes, so the time saved is minimal when the upgrade goes smoothly. The main benefit is that any complications during installation are mitigated by having the active node still running. Refer to Installing on a Local Application Drive and Shared Data Directory for details.

Rhapsody recommends installing the application on a local drive (the second option above). This provides greater stability and reduced downtime at only a minimal configuration cost. It also avoids issues that can arise from running executables from a shared location.

Installing with a Shared Application Drive

The Rhapsody data store must be mounted on a drive using a suitable driver in order to avoid performance issues. Refer to Datastore Setup for details.

Do not use Windows network shares or NFS technology for any Rhapsody data store deployment, in order to avoid critical performance issues.

To install both the application directory and the data directory on a shared drive:

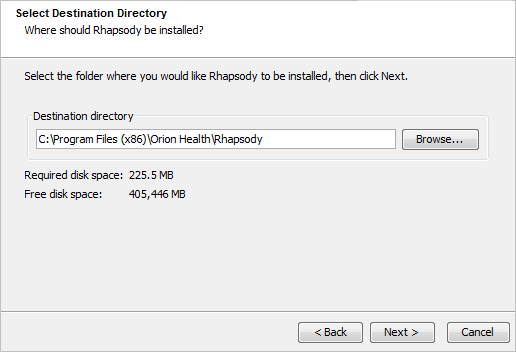

- Run the Rhapsody installer on one of the servers.

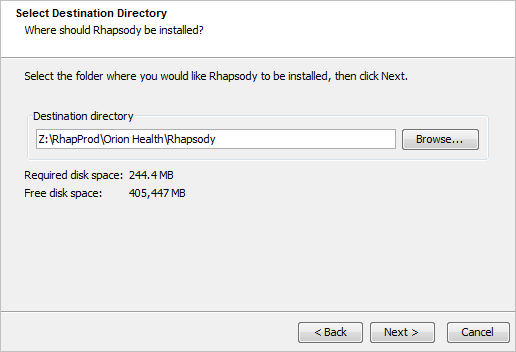

Select a location on the shared drive to install Rhapsody:

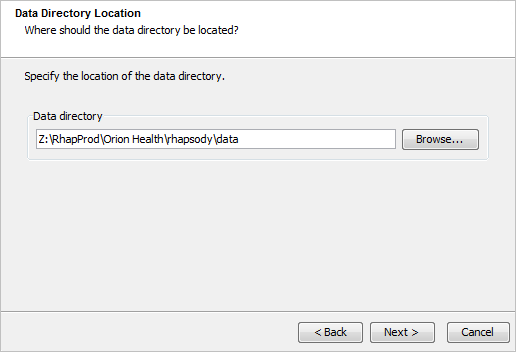

Then select a location on the shared drive for the data directory.

- Proceed with the Rhapsody installation as normal.

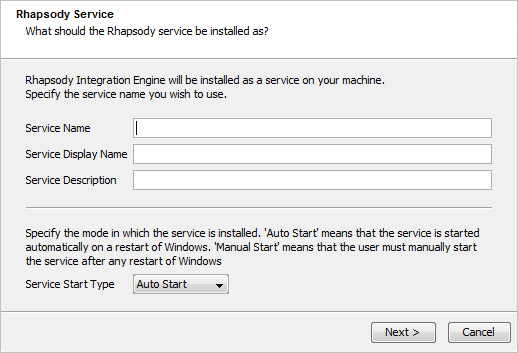

If you are installing Rhapsody in a Windows HA environment with a shared application or installation drive, the Windows service must be configured to use a domain ID with appropriate permissions. Otherwise, authentication failures occur when the Windows service starts.

Upgrading a Shared Application Drive

Upgrading a shared application drive is the same as a normal upgrade. Rhapsody should be shut down using the cluster software (so that the cluster software does not attempt to restart Rhapsody). Thereafter the installer should be run as normal.

Licensing

The Rhapsody license is coded against the hostnames of the machines. There are several options for setting up the license in an active-passive setup.

If the application is installed on the shared drive, there is a single copy of the license for both machines. In this case, there are three options for licensing:

- Make the cluster software assign a virtual hostname as well as the virtual IP. For example, the cluster software could assign RhapProd as the hostname of the active node. When the license is installed, this virtual hostname is included in the license. When a fail-over occurs, the cluster software must assign the virtual hostname (“RhapProd”) to the new active node before Rhapsody is started.

- The license service ignores a single number at the end of the hostname. Therefore, name the two servers so that they differ only in that final number, in the format nameN1 and nameN2, for example Rhapsody3 and Rhapsody5. If the hostnames follow this format, the same license is valid on both servers.

- If the servers cannot be named in the above manner, the license must be swapped during fail-over, either by the cluster software or by a modified Rhapsody start script. In this case, after installing Rhapsody on both servers, connect Rhapsody IDE to the active node and install a license. This creates the license file in the application directory (\Rhapsody\rhapsody\licenses). Rename this file to licenses.hostname, for example licenses.RhapsodyserverA. Then fail Rhapsody over so that it starts up on the passive node, install a license again using Rhapsody IDE, and rename \Rhapsody\rhapsody\licenses to licenses.hostname, for example licenses.RhapsodyserverB. The start script should then use the server's hostname to pick one of the two licenses.hostname files and copy it to licenses.
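The start-script step above can be sketched in Python as follows. This is a minimal illustration and not part of Rhapsody. The function name `select_license` is hypothetical. It assumes the per-host copies sit next to the active file and are named licenses.<hostname>, using exactly the string returned by `socket.gethostname()`. On some systems that string may be a fully qualified or differently cased name, so check it before relying on it.

```python
import shutil
import socket
from pathlib import Path
from typing import Optional


def select_license(license_dir: Path, hostname: Optional[str] = None) -> Path:
    """Copy licenses.<hostname> over the active 'licenses' file.

    Assumption: the per-host copies live in the same directory as the
    active license file and are named licenses.<hostname>.
    """
    host = hostname or socket.gethostname()
    source = license_dir / f"licenses.{host}"
    if not source.is_file():
        # Fail loudly rather than starting Rhapsody with the wrong license.
        raise FileNotFoundError(f"no license copy for host {host!r}: {source}")
    target = license_dir / "licenses"
    shutil.copyfile(source, target)
    return target
```

In the start script, you would call something like `select_license(Path(r"\Rhapsody\rhapsody"))` before starting Rhapsody, adjusting the path for your installation. Raising an error on a missing copy keeps a misnamed host from silently starting with the other node's license.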

Installing on a Local Application Drive and Shared Data Directory

The Rhapsody data store must be mounted on a drive using a suitable driver in order to avoid performance issues. Refer to Datastore Setup for details.

Do not use Windows network shares or NFS technology for any Rhapsody data store deployment, in order to avoid critical performance issues.

To install only the data directory on a shared drive:

- Run the Rhapsody installer on server one.

Select a location on the local drive to install Rhapsody:

Then select a location on the shared drive for the data directory:

- Finish the Rhapsody installation as normal.

- Shut down Rhapsody on server one.

- Run the Rhapsody installer on server two.

Select a location on the local drive to install Rhapsody:

Then select the same location on the shared drive as server one:

- Proceed with the Rhapsody installation as normal.

If you are installing Rhapsody in a Windows HA environment with a shared application or installation drive, the Windows service must be configured to use a domain ID with appropriate permissions. Otherwise, authentication failures occur when the Windows service starts.

Upgrading a Local Application Drive and Shared Data Directory

There are two types of upgrades:

- The first (and most common scenario) is when no update to the data store is required for the upgrade.

- The second case is when an update to the data store is required for the upgrade.

An update to the data store is required, for example, when the structure of a pre-lockers configuration needs to be updated to a post-lockers configuration. Any updates to the data store are performed during startup.

Upgrade with Failover

Run the installer on the passive node as normal. Once the upgrade has finished, fail the active node over to the upgraded passive node. When the new Rhapsody engine starts up, it performs any configuration migration required. While the new active node is starting, run the installer on the other (previously active) node. Note that a fail-over cannot be performed until this second upgrade has completed.

Upgrade without Failover

To upgrade, shut down the active-passive cluster. Run the installer as normal on the active node; after the upgrade, the active node can be restarted. Then run the installer on the passive node, specifying the path of the shared data folder as the data directory location. This runs the installer on the passive node without running the data upgrade. Do not start the passive engine after upgrading it. Note that a fail-over cannot be performed until the second upgrade has completed.

Licensing

The Rhapsody license is coded against the hostnames of the machines. There are several options for setting up the license in an active-passive setup.

If Rhapsody is installed with both nodes having their own copy of the application, there will need to be a copy of the license in both application directories. There are two options for setting this up:

- If the servers either have a virtual hostname or a name using the name1/name2 convention, install the license on the active node, then copy the Rhapsody\rhapsody\RhapsodyLicense file to the passive node's install directory. The license service ignores a single number at the end of the hostname, so if the two servers are named in the format nameN1 and nameN2 (for example, Rhapsody3 and Rhapsody5), the same license is valid on both servers.

- If the servers are not set up with a hostname that can be shared, the license should be installed on the active node. Then a fail-over should be performed and the license installed again to generate the second node’s license.
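The hostname rule can be illustrated with a small Python check that is useful when choosing server names. This sketch rests on an assumption. The documentation says only that a single number at the end of the hostname is ignored. The hypothetical `license_host_key` therefore strips exactly one trailing digit and compares names case-sensitively. The license service's actual matching may differ.

```python
import re


def license_host_key(hostname: str) -> str:
    """Return the hostname with one trailing digit removed.

    Assumption: "a single number on the end" means exactly one digit, and
    matching is case-sensitive; the real license service may differ.
    """
    return re.sub(r"\d$", "", hostname)


def same_license_valid(host_a: str, host_b: str) -> bool:
    """True if both hosts reduce to the same licensed name (under the assumption above)."""
    return license_host_key(host_a) == license_host_key(host_b)
```

For example, `same_license_valid("Rhapsody3", "Rhapsody5")` returns `True`. `RhapsodyserverA` and `RhapsodyserverB` do not match, so those servers would need a separate license per node.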

Duplicated Files

The following configuration and log files are duplicated when the Rhapsody application is installed on a local drive:

| File | Description |
|---|---|
| | Basic Rhapsody application settings, memory usage, command line parameters, etc. |
| wrapper-local.conf | Local settings. When you make changes to this file, ensure you uncomment the appropriate Configuration Include line at the bottom of the wrapper.conf file. |
| wrapper-platform.conf | Platform-specific settings. When you make changes to this file, ensure you uncomment the appropriate Configuration Include line at the bottom of the wrapper.conf file. |
| | The configuration file for logging. |
| | The Rhapsody configuration file. |

If any of these files is modified on one node, make the same change on the other node. If Rhapsody Support requests one of these files, send both versions, labeled with the server each one came from.

Cluster Setup

This document does not describe how to configure the cluster software as there are many cluster software alternatives available. It does, however, cover the common aspects that should be set up.

Virtual IP

All connections to Rhapsody in an active-passive setup should be made through a virtual IP or hostname. This virtual address should be configured in the cluster software so that it always points to the active Rhapsody server.

Start/Stop Scripts

The cluster software must be able to stop Rhapsody during a fail-over (that is, if it is running but unresponsive).

This should use the Windows service Stop command or the UNIX rhapsody.sh stop command. This gives Rhapsody the chance to stop cleanly and avoids validation on the next startup.

- The stop operation should be given five minutes to complete normally.

- If the stop operation does not complete, the cluster software should kill the application.

To start Rhapsody, the Windows service start command (net start Rhapsody_<majorVersion>.<minorVersion>.<servicePack>), or the UNIX start command (rhapsody.sh start) should be used.
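A cluster stop/start action that follows the rules above might look like the Python sketch below. It is illustrative only, and the function names are hypothetical. The caller supplies the command lists, for example `["net", "stop", "<service name>"]` on Windows or `["/path/to/rhapsody.sh", "stop"]` on UNIX, with the actual path left to your installation. `force_kill` stands in for whatever mechanism your cluster software uses to kill the application. The sketch also assumes that a non-zero exit from the stop command should be treated as a stop that did not complete.

```python
import subprocess
from typing import Callable, Sequence

# Grace period for a clean stop, per the five-minute guidance above.
STOP_TIMEOUT_SECONDS = 5 * 60


def stop_rhapsody(stop_cmd: Sequence[str],
                  force_kill: Callable[[], None],
                  timeout: float = STOP_TIMEOUT_SECONDS) -> str:
    """Attempt a clean stop; call force_kill if it times out or fails.

    Returns "clean" if the stop command succeeded, otherwise "killed".
    """
    try:
        result = subprocess.run(list(stop_cmd), timeout=timeout)
    except subprocess.TimeoutExpired:
        # subprocess.run has killed the stop command itself, but Rhapsody
        # may still be running, so hand over to the cluster's kill action.
        force_kill()
        return "killed"
    if result.returncode != 0:
        # Assumption: a non-zero exit means the stop did not complete.
        force_kill()
        return "killed"
    return "clean"


def start_rhapsody(start_cmd: Sequence[str]) -> None:
    """Run the start command (net start ... or rhapsody.sh start)."""
    subprocess.run(list(start_cmd), check=True)
```

Keeping the kill step as a callback leaves the choice of how to terminate the process (PID file, service manager, cluster agent) with the cluster software rather than hard-coding it.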

Failure Detection

The cluster software needs to be configured to detect when there is an issue with Rhapsody. The Rhapsody state can be checked using the Windows service status or, on a UNIX system, by running the rhapsody.sh status command.
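Most cluster products let you plug in a custom monitor. The Python sketch below wraps the status check and only recommends a fail-over after several consecutive failures, so a single slow check does not trigger one. The helper names, the exit-code convention (0 means running) and the three-failure threshold are assumptions, not documented Rhapsody behavior. Confirm what `rhapsody.sh status` returns on your platform before relying on it.

```python
import subprocess
from typing import Sequence


def rhapsody_is_healthy(status_cmd: Sequence[str], timeout: float = 30.0) -> bool:
    """Return True if the status command reports success.

    Assumption: the status command exits with 0 when Rhapsody is running
    and non-zero otherwise.
    """
    try:
        result = subprocess.run(list(status_cmd), capture_output=True, timeout=timeout)
    except (subprocess.TimeoutExpired, OSError):
        # A hung or missing status command is treated as unhealthy.
        return False
    return result.returncode == 0


def should_fail_over(history: Sequence[bool], threshold: int = 3) -> bool:
    """Recommend fail-over only after `threshold` consecutive failed checks."""
    if threshold <= 0 or len(history) < threshold:
        return False
    return not any(history[-threshold:])
```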

Stop Rhapsody from Automatically Starting

Rhapsody automatically restarts if the application stops without the stop command having been called. However, in a fail-over configuration, you may want to treat any stoppage of Rhapsody as a fail-over, even though most issues are not caused by failed hardware.

If this is the case, the automatic restart should be disabled. This is controlled in the wrapper.conf file in the main Rhapsody install directory. To disable restarts, add the following line to the wrapper.conf file (the location in the file does not matter):

wrapper.disable_restarts=true

Alternatively, change how many times Rhapsody will attempt to restart before failing. By default, Rhapsody will attempt to start five times before outright failure (this number is reset after Rhapsody has successfully started). To change the number, add the following to the wrapper.conf file:

wrapper.max_failed_invocations=5

Other options for controlling how Rhapsody starts can be found at http://wrapper.tanukisoftware.org/doc/english/properties.html.
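When the application is installed locally, wrapper.conf is duplicated on both nodes, so it can help to script these edits and keep both copies identical. The following Python sketch uses a hypothetical helper, `set_wrapper_property`. It sets a property by replacing any existing uncommented entry for the same key, or appends the property at the end if no entry exists. Appending is acceptable because the location in the file does not matter.

```python
from pathlib import Path


def set_wrapper_property(conf_path: Path, key: str, value: str) -> None:
    """Set key=value in a wrapper.conf file, replacing uncommented entries."""
    lines = conf_path.read_text().splitlines()
    new_line = f"{key}={value}"
    replaced = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        # Match "key=..." or "key = ...", but leave commented-out lines alone.
        if not stripped.startswith("#") and stripped.split("=", 1)[0].strip() == key:
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    conf_path.write_text("\n".join(lines) + "\n")
```

For example, `set_wrapper_property(Path("wrapper.conf"), "wrapper.disable_restarts", "true")` would be run against each node's copy of the file.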

Shared Drive Protection

If possible, the cluster software should prevent the passive node from accessing the data directory. This prevents two Rhapsody engines from trying to access the same data directory simultaneously. From Rhapsody 3.3 onwards, Rhapsody itself detects whether another Rhapsody engine is already accessing the data store, but this protection should also be set up in the cluster software.

Notification of Fail-over

If a fail-over occurs, the administrator should be notified to determine the cause and resolve the issue.

Shared Data Store Redundancy

The shared data store must be redundant to provide fault tolerance for the overall solution. This should include:

- Fault-tolerant RAID for disks; RAID 1 is recommended for low throughput, RAID 10 for high throughput.

- Dual controllers on the shared drive.

- Dual adapters to connect to the shared data drive on each server.

- Connections from each adapter to each controller.

- Dual power supply.

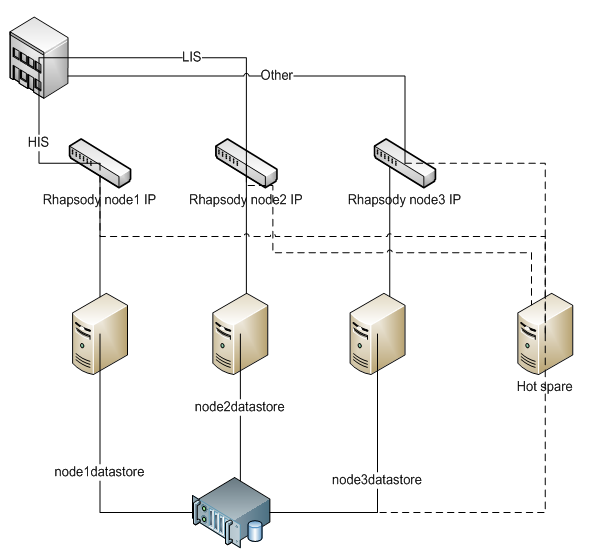

Clustering Rhapsody for Higher Throughput

Rhapsody can be clustered for higher-volume processing by providing a number of independent nodes. To also provide failure redundancy, the active nodes should be able to fail over to a hot spare (passive node).

In a cluster of independent nodes, the messages can be shared between the nodes by configuration splitting.

Configuration Splitting

With configuration splitting, each node in the cluster is configured with a different portion of the configuration. For example, all messages from the HIS are received on Node 1, and all messages from the LIS are received on Node 2.
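When planning a configuration split, it helps to check that every inbound source is assigned to exactly one node. The Python sketch below is a planning aid only, not a Rhapsody feature. The node and source names are illustrative, and `check_split` is a hypothetical helper.

```python
from typing import Dict, Set


def check_split(assignment: Dict[str, Set[str]]) -> Dict[str, str]:
    """Map each inbound source to its node, rejecting sources on two nodes."""
    owner: Dict[str, str] = {}
    for node, sources in assignment.items():
        for source in sources:
            if source in owner:
                raise ValueError(
                    f"{source!r} is configured on both {owner[source]!r} and {node!r}"
                )
            owner[source] = node
    return owner
```

For the example above, `check_split({"Node 1": {"HIS"}, "Node 2": {"LIS"}})` returns `{"HIS": "Node 1", "LIS": "Node 2"}`.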