Tested with Python 3.6 and Python 2.7.

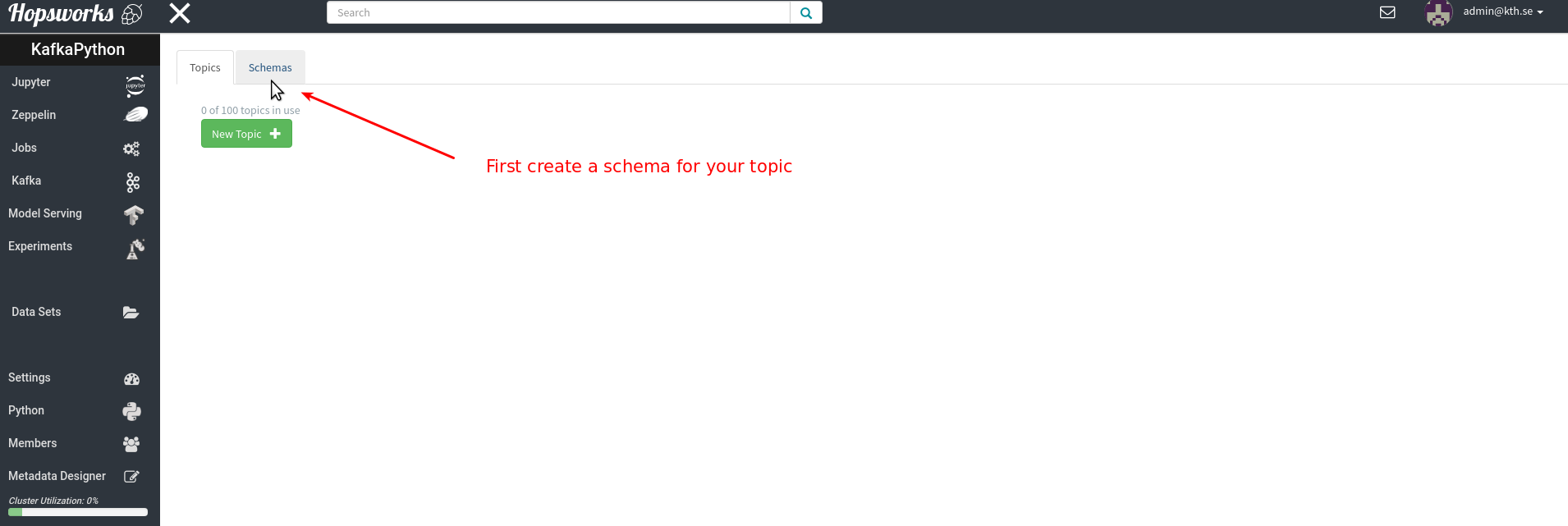

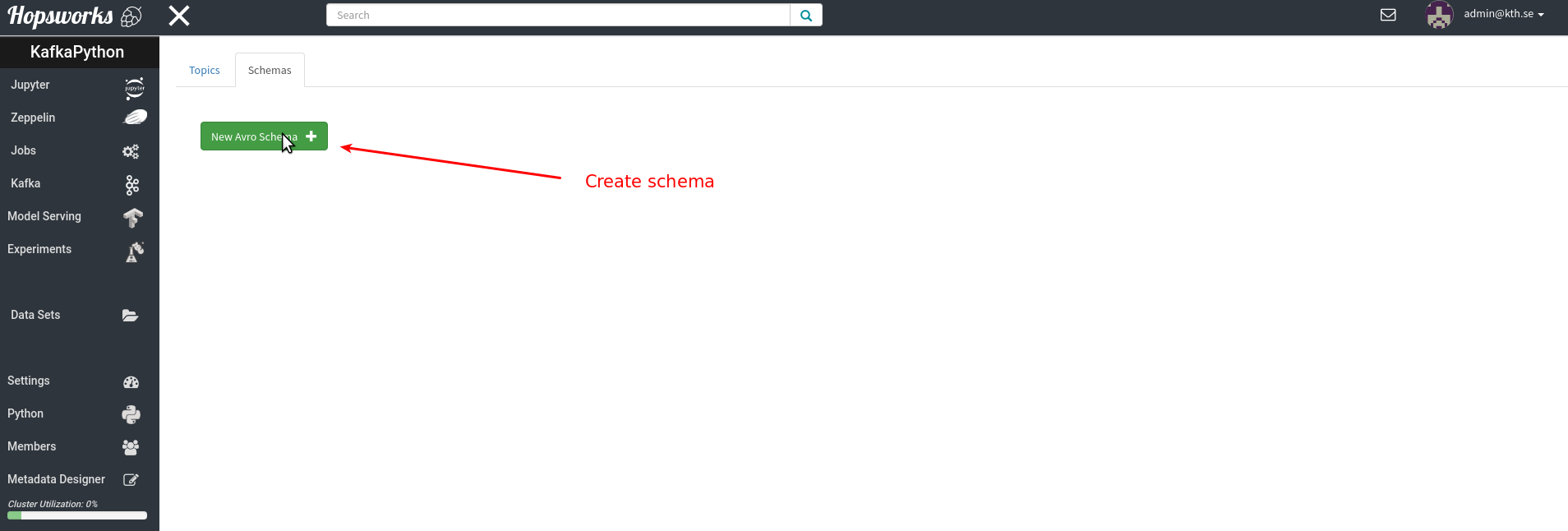

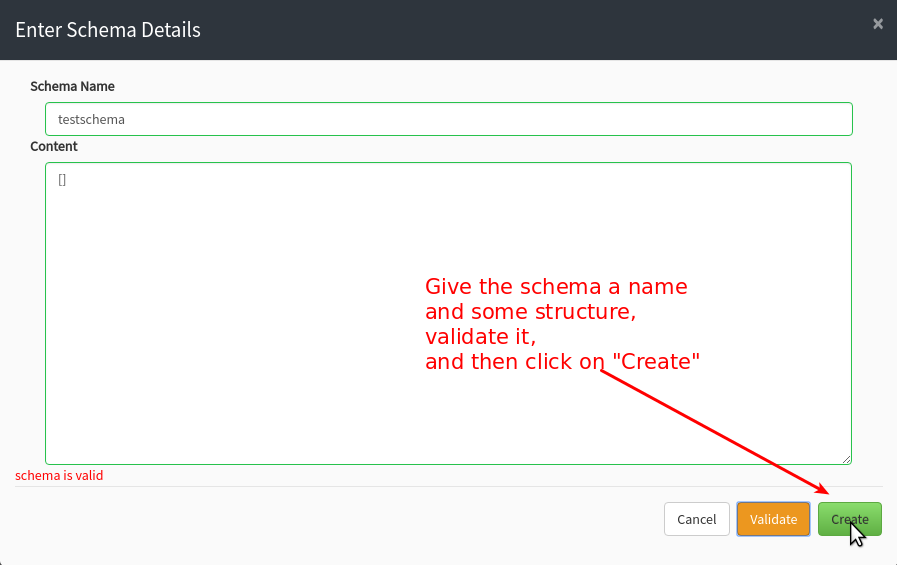

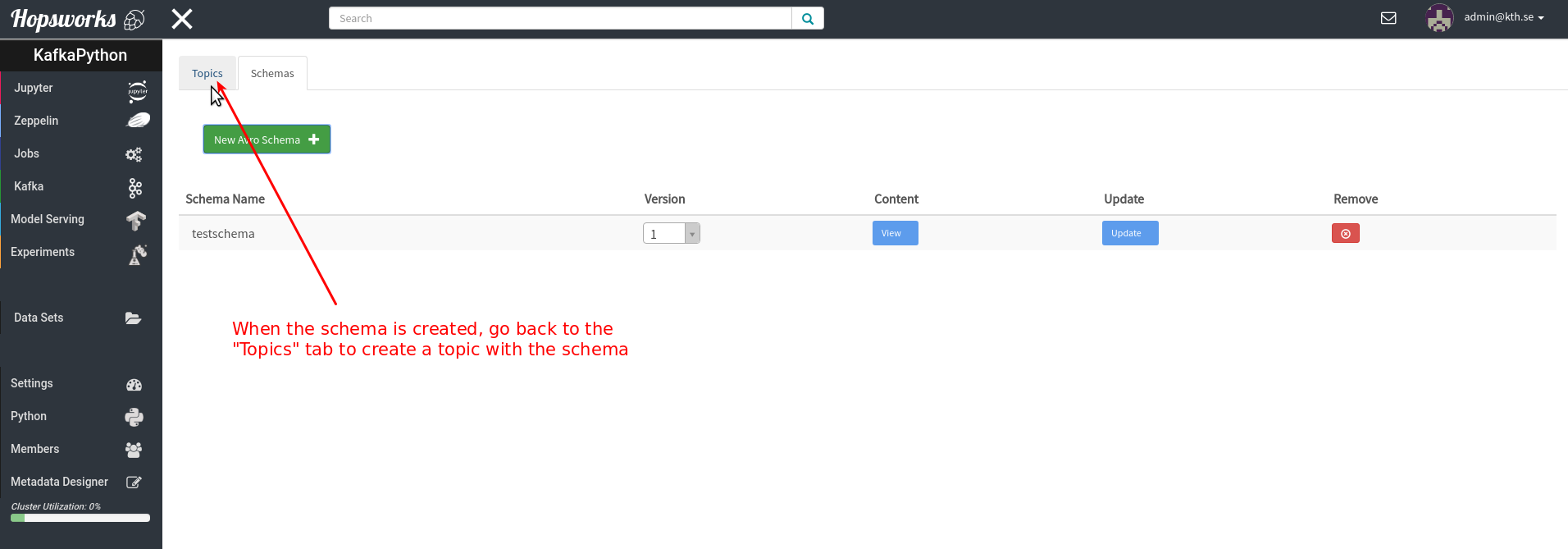

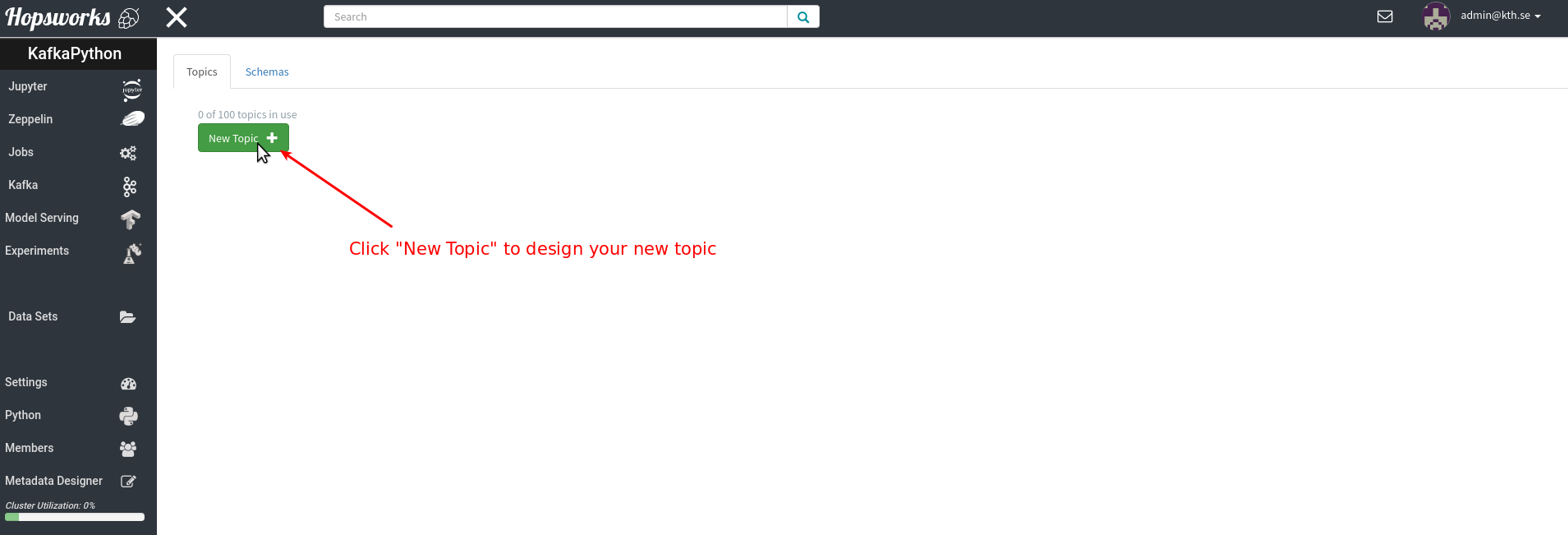

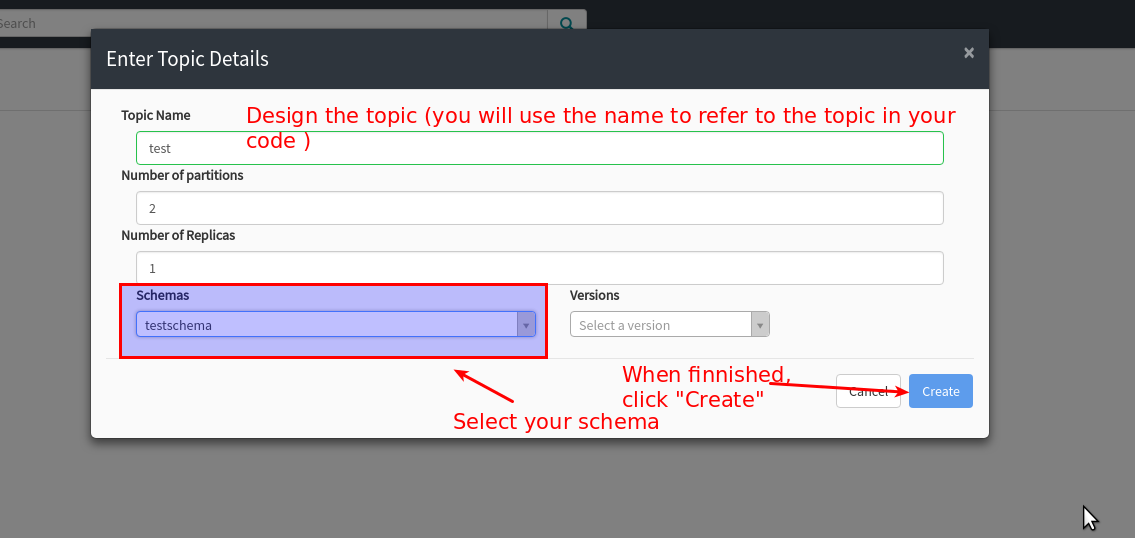

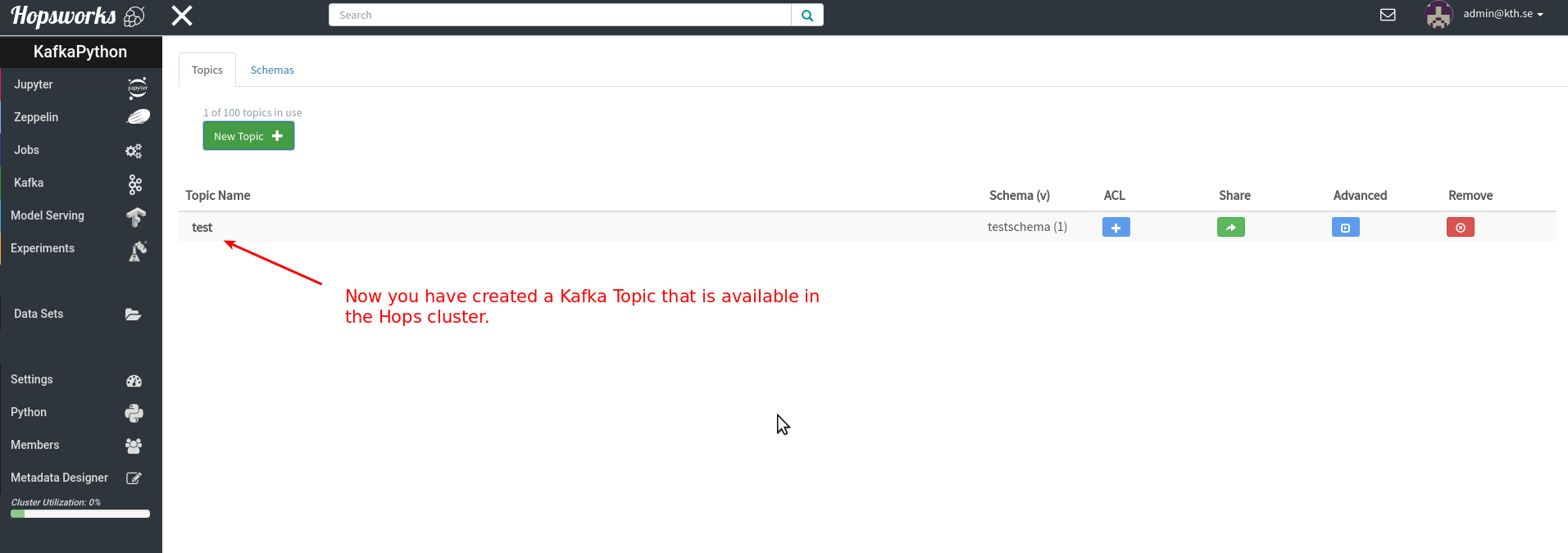

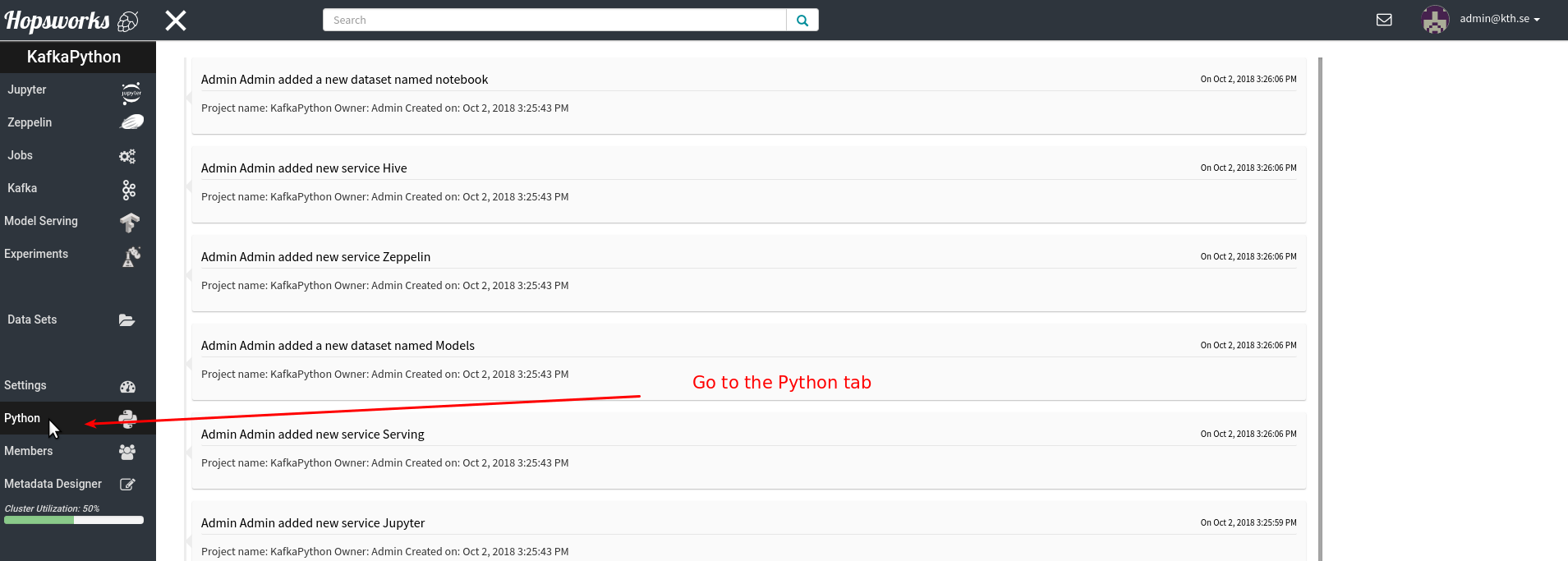

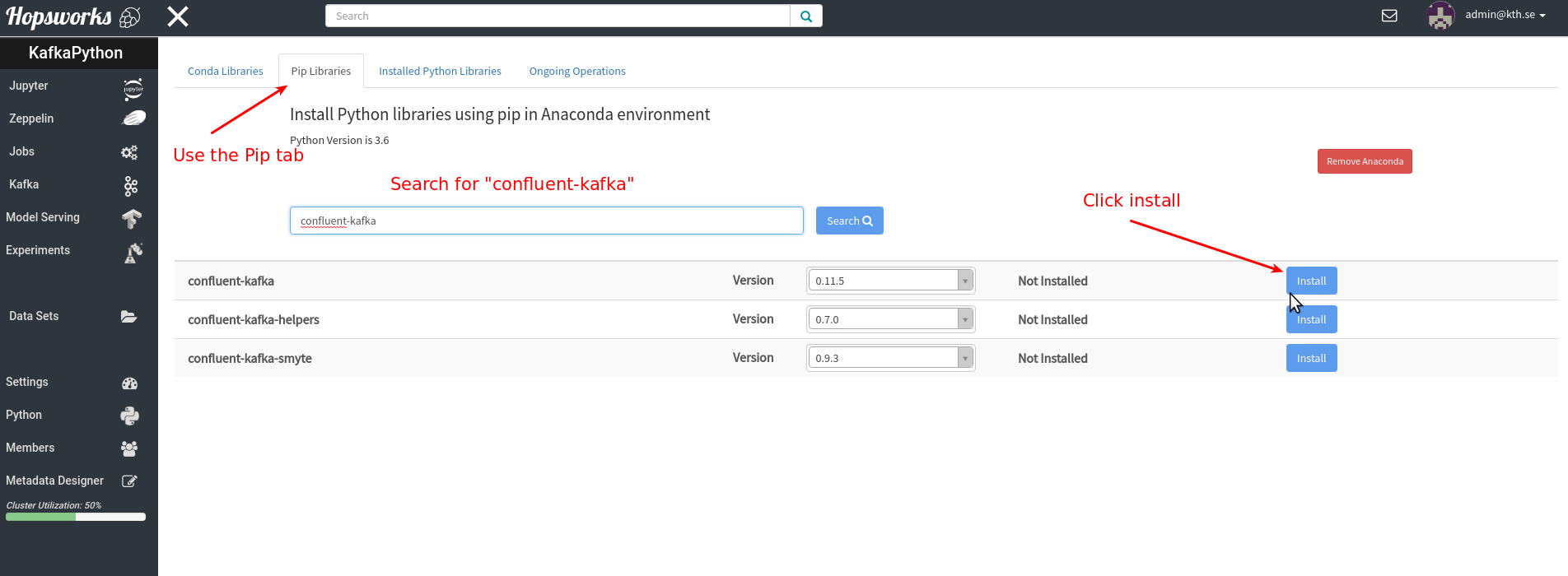

Before running this notebook, you should have created a Kafka topic whose name you can set in the `TOPIC_NAME` variable in the code below.

The screenshots above illustrate the steps necessary to create a Kafka topic on Hops.

In this notebook we use two Python dependencies:

- [hops-util-py](https://github.com/logicalclocks/hops-util-py)
- [confluent-kafka-python](https://github.com/confluentinc/confluent-kafka-python)

To install the `confluent-kafka-python` library, use the Hopsworks UI.

The hops-util library is installed by default when projects are created on Hops. However, if you need to re-install it for some reason, you can use the Hopsworks UI to first uninstall it and then install it from pip using the same method as described above.

## Imports

Starting Spark application

| ID | YARN Application ID | Kind | State | Spark UI | Driver log | Current session? |
|---|---|---|---|---|---|---|
| 31 | application_1538483294796_0034 | pyspark | idle | Link | Link | ✔ |
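
Before importing the two dependencies listed earlier, it can help to check that both are actually installed in the project environment. The following is a minimal sketch, not part of the original notebook. It assumes that hops-util-py is imported as `hops` and confluent-kafka-python as `confluent_kafka`; the helper `missing_modules` is a hypothetical name introduced only for this illustration.

```python
import importlib

# Assumed import names: hops-util-py -> `hops`,
# confluent-kafka-python -> `confluent_kafka`.
REQUIRED_MODULES = ["hops", "confluent_kafka"]


def missing_modules(names):
    """Return the subset of `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            # The module is not installed in this environment.
            missing.append(name)
    return missing


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Install via the Hopsworks UI before continuing: " + ", ".join(missing))
else:
    print("All dependencies are importable.")
```

The check uses `importlib.import_module` with a plain `ImportError` handler so that it behaves the same way on both Python versions the notebook was tested with.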