## Conformer

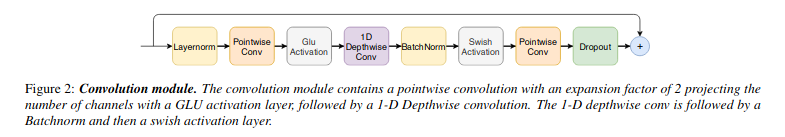

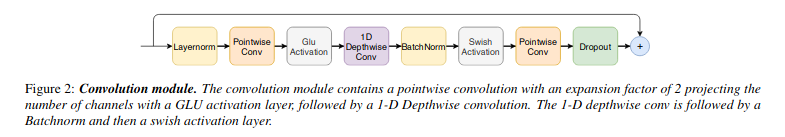

Implementation of the convolutional module from the Conformer paper, for improving the local inductive bias in Transformers.

## Install

```bash
$ pip install conformer
```

## Usage

The Conformer convolutional module, the main novelty of the paper

```python
import torch
from conformer import ConformerConvModule

layer = ConformerConvModule(
    dim = 512,
    causal = False,             # auto-regressive or not - 1d conv will be made causal with padding if so
    expansion_factor = 2,       # what multiple of the dimension to expand for the depthwise convolution
    kernel_size = 31,           # kernel size, 17 - 31 was said to be optimal
    dropout = 0.                # dropout at the very end
)

x = torch.randn(1, 1024, 512)
x = layer(x) + x
```
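The `causal` comment above says the 1d convolution is made causal through padding. As a rough illustration of that idea, here is a minimal pure-Python sketch. The helpers `same_padding`, `causal_padding` and `conv1d` are hypothetical names written for this example and are not part of the `conformer` package. It assumes zero padding and a stride of 1.

```python
def same_padding(kernel_size):
    """(left, right) zero padding that keeps the sequence length unchanged."""
    pad = kernel_size // 2
    # for even kernel sizes the right side gets one element less
    return (pad, pad - (kernel_size + 1) % 2)

def causal_padding(kernel_size):
    """All padding goes on the left, so position t never sees positions > t."""
    return (kernel_size - 1, 0)

def conv1d(seq, kernel, padding):
    """Plain-Python 1-D cross-correlation over a zero-padded list (stride 1)."""
    left, right = padding
    padded = [0.0] * left + list(seq) + [0.0] * right
    k = len(kernel)
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel))
        for i in range(len(padded) - k + 1)
    ]

kernel = [1.0, 1.0, 1.0]
seq = [1.0, 2.0, 3.0, 4.0, 5.0]
changed = seq[:-1] + [100.0]  # only the last time step differs

# causal: outputs before the last step are unaffected by the change
causal_a = conv1d(seq, kernel, causal_padding(len(kernel)))
causal_b = conv1d(changed, kernel, causal_padding(len(kernel)))

# non-causal ("same"): the output one step earlier already changes
same_a = conv1d(seq, kernel, same_padding(len(kernel)))
same_b = conv1d(changed, kernel, same_padding(len(kernel)))
```

Both padding schemes keep the output the same length as the input. With causal padding, changing the final input only changes the final output, which is the property an auto-regressive model needs.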

A single Conformer block

```python
import torch
from conformer import ConformerBlock

block = ConformerBlock(
    dim = 512,
    dim_head = 64,
    heads = 8,
    ff_mult = 4,
    conv_expansion_factor = 2,
    conv_kernel_size = 31,
    attn_dropout = 0.,
    ff_dropout = 0.,
    conv_dropout = 0.
)

x = torch.randn(1, 1024, 512)

block(x) # (1, 1024, 512)
```

Conformer - just multiple `ConformerBlock` from above

```python
import torch
from conformer import Conformer

conformer = Conformer(
    dim = 512,
    depth = 12,          # 12 blocks
    dim_head = 64,
    heads = 8,
    ff_mult = 4,
    conv_expansion_factor = 2,
    conv_kernel_size = 31,
    attn_dropout = 0.,
    ff_dropout = 0.,
    conv_dropout = 0.
)

x = torch.randn(1, 1024, 512)

conformer(x) # (1, 1024, 512)
```

## Todo

- [ ] switch to a better relative positional encoding. shaw's is dated

- [ ] flash attention with a better RPE

## Citations

```bibtex
@misc{gulati2020conformer,
    title   = {Conformer: Convolution-augmented Transformer for Speech Recognition},
    author  = {Anmol Gulati and James Qin and Chung-Cheng Chiu and Niki Parmar and Yu Zhang and Jiahui Yu and Wei Han and Shibo Wang and Zhengdong Zhang and Yonghui Wu and Ruoming Pang},
    year    = {2020},
    eprint  = {2005.08100},
    archivePrefix = {arXiv},
    primaryClass = {eess.AS}
}
```
