## Open source security data lake for AWS

Matano is an open source, **cloud-native security data lake** built for security teams on AWS.

> [!NOTE]
> Matano offers a commercial managed Cloud SIEM for a complete enterprise Security Operations platform. [Learn more](https://matanosecurity.com).

## Features

- **Security Data Lake:** Normalize unstructured security logs into a structured realtime data lake in your AWS account.

- **Collect All Your Logs:** Integrates out of the box with [50+ sources](https://www.matano.dev/docs/log-sources/managed-log-sources) for security logs and can easily be extended with custom sources.

- **Detection-as-Code:** Use Python to build realtime detections as code. Supports automatic import of [Sigma](https://www.matano.dev/docs/detections/importing-from-sigma-rules) detections.

- **Log Transformation Pipeline:** Supports custom VRL ([Vector Remap Language](https://vector.dev/docs/reference/vrl/)) scripting to parse, enrich, normalize and transform your logs as they are ingested without managing any servers.

- **No Vendor Lock-In:** Uses an open table format ([Apache Iceberg](https://iceberg.apache.org/)) and open schema standards ([ECS](https://github.com/elastic/ecs)), to give you full ownership of your security data in a vendor-neutral format.

- **Bring Your Own Analytics:** Query your security lake directly from any Iceberg-compatible engine (AWS Athena, Snowflake, Spark, Trino etc.) without having to copy data around.

- **Serverless:** Fully serverless and designed specifically for AWS, with a focus on high scale, low cost, and zero ops.

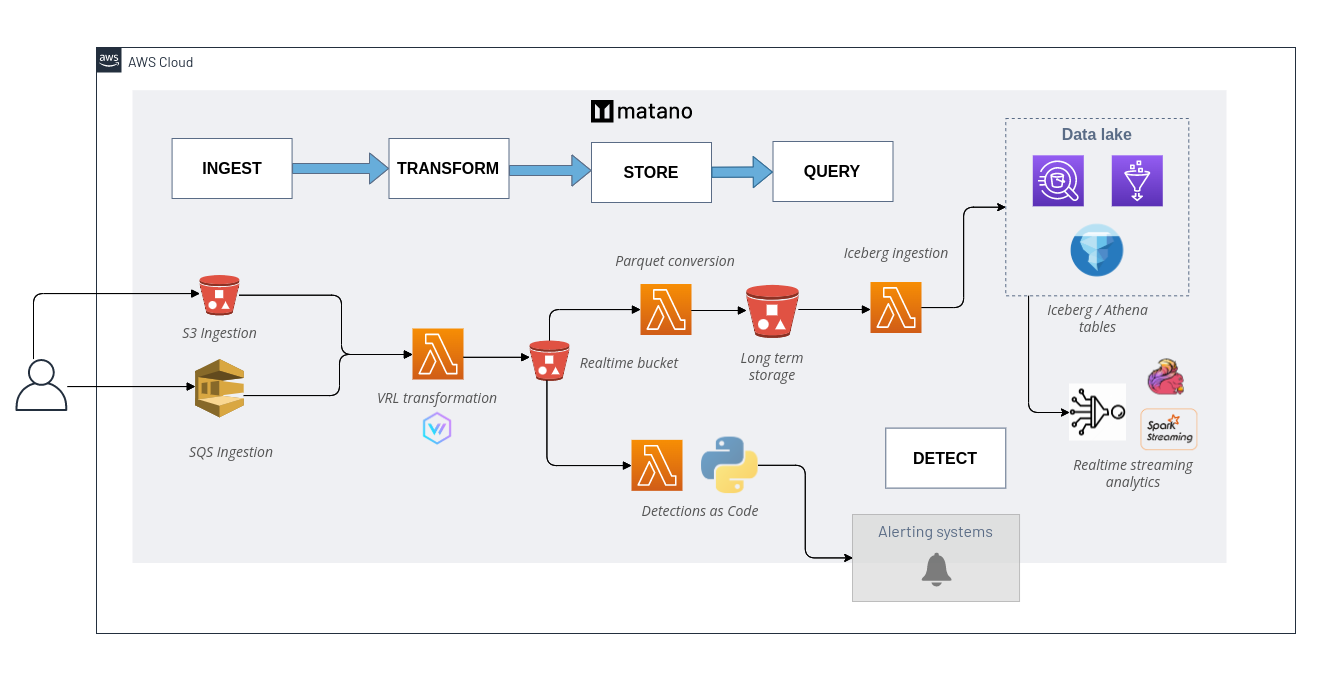

## Architecture

## 👀 Use cases

- Reduce SIEM costs.

- Augment your SIEM with a security data lake for additional context during investigations.

- Write detections-as-code using Python to detect suspicious behavior & create contextualized alerts.

- ECS-compatible serverless alternative to ELK / Elastic Security stack.

## ✨ Integrations

#### Managed log sources

- [**AWS CloudTrail**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/cloudtrail)

- [**AWS Route53**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/route53-resolver-logs)

- [**AWS VPC Flow**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/vpcflow)

- [**AWS Config**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/aws-config)

- [**AWS ELB**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/aws-elb)

- [**Amazon S3 Server Access**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/amazon-s3-server-access-logs)

- [**Amazon S3 Inventory Reports**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/s3-inventory-report)

- [**Amazon Inspector**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/amazon-inspector)

- [**Amazon WAF**](https://www.matano.dev/docs/log-sources/managed-log-sources/aws/amazon-waf)

- [**Cloudflare**](https://www.matano.dev/docs/log-sources/managed-log-sources/cloudflare)

- [**Crowdstrike**](https://www.matano.dev/docs/log-sources/managed-log-sources/crowdstrike)

- [**Duo**](https://www.matano.dev/docs/log-sources/managed-log-sources/duo)

- [**Okta**](https://www.matano.dev/docs/log-sources/managed-log-sources/okta)

- [**GitHub**](https://www.matano.dev/docs/log-sources/managed-log-sources/github)

- [**Google Workspace**](https://www.matano.dev/docs/log-sources/managed/google-workspace)

- [**Office 365**](https://www.matano.dev/docs/log-sources/managed-log-sources/office365)

- [**Snyk**](https://www.matano.dev/docs/log-sources/managed-log-sources/snyk)

- [**Suricata**](https://www.matano.dev/docs/log-sources/managed-log-sources/suricata)

- [**Zeek**](https://www.matano.dev/docs/log-sources/managed-log-sources/zeek)

- [**Custom 🔧**](#-log-transformation--data-normalization)

#### Alert destinations

- [**Amazon SNS**](https://www.matano.dev/docs/detections/alerting)

- [**Slack**](https://www.matano.dev/docs/detections/alerting/slack)

#### Query engines

- [**Amazon Athena**](https://docs.aws.amazon.com/athena/latest/ug/querying-iceberg.html) (default)

- [**Snowflake**](https://www.snowflake.com/blog/iceberg-tables-powering-open-standards-with-snowflake-innovations/) (preview)

- [**Spark**](https://iceberg.apache.org/spark-quickstart/)

- [**Trino**](https://trino.io/docs/current/connector/iceberg.html)

- [**BigQuery Omni (BigLake)**](https://cloud.google.com/biglake)

- [**Dremio**](https://docs.dremio.com/software/data-formats/apache-iceberg/)

## Quick start

[**View the complete installation instructions**](https://www.matano.dev/docs/getting-started#installation)

### Installation

Install the matano CLI to deploy Matano into your AWS account, and manage your deployment.

**Linux**

```bash
curl -OL https://github.com/matanolabs/matano/releases/download/nightly/matano-linux-x64.sh
chmod +x matano-linux-x64.sh
sudo ./matano-linux-x64.sh
```

**macOS**

```bash
curl -OL https://github.com/matanolabs/matano/releases/download/nightly/matano-macos-x64.sh
chmod +x matano-macos-x64.sh
sudo ./matano-macos-x64.sh
```

### Deployment

[**Read the complete docs on getting started**](https://www.matano.dev/docs/getting-started)

To get started, run the `matano init` command.

- Make sure you have AWS credentials in your environment (or in an AWS CLI profile).

- The interactive CLI wizard will walk you through getting started by generating an initial [Matano directory](https://www.matano.dev/docs/matano-directory) for you, initializing your AWS account, and deploying into your AWS account.

- Initial deployment takes a few minutes.

### Directory structure

Once initialized, your [Matano directory](https://www.matano.dev/docs/matano-directory) is used to control and manage all resources in your project, such as log sources, detections, and other configuration. It is structured as follows:

```bash
➜  example-matano-dir git:(main) tree
├── detections
│   └── aws_root_credentials
│       ├── detect.py
│       └── detection.yml
├── log_sources
│   ├── cloudtrail
│   │   ├── log_source.yml
│   │   └── tables
│   │       └── default.yml
│   └── zeek
│       ├── log_source.yml
│       └── tables
│           └── dns.yml
├── matano.config.yml
└── matano.context.json
```

When onboarding a new log source or authoring a detection, run `matano deploy` from anywhere in your project to deploy the changes to your account.

## 🔧 Log Transformation & Data Normalization

[**Read the complete docs on configuring custom log sources**](https://www.matano.dev/docs/log-sources/configuration)

[Vector Remap Language (VRL)](https://vector.dev/docs/reference/vrl/) allows you to easily onboard custom log sources. Matano encourages you to normalize fields according to the [Elastic Common Schema (ECS)](https://www.elastic.co/guide/en/ecs/current/ecs-reference.html) to enable enhanced pivoting and bulk search for IOCs across your security data lake.

Users can define custom VRL programs to parse and transform unstructured logs as they are being ingested through one of the supported mechanisms for a log source (e.g. S3, SQS).

VRL is an expression-oriented language designed for transforming observability data (e.g. logs) in a safe and performant manner. It features a simple syntax and a rich set of built-in functions tailored specifically to observability use cases.

### Example: parsing JSON

Let's have a look at a simple example. Imagine that you're working with HTTP log events that look like this:

```json
{
  "line": "{\"status\":200,\"srcIpAddress\":\"1.1.1.1\",\"message\":\"SUCCESS\",\"username\":\"ub40fan4life\"}"
}
```

You want to apply these changes to each event:

- Parse the raw `line` string into JSON, and explode the fields to the top level

- Rename `srcIpAddress` to the `source.ip` ECS field

- Remove the `username` field

- Convert the `message` to lowercase

Adding this VRL program to your log source as a `transform` step would accomplish all of that:

###### log_source.yml

```yml
transform: |
  . = object!(parse_json!(string!(.json.line)))
  .source.ip = del(.srcIpAddress)
  del(.username)
  .message = downcase(string!(.message))

schema:
  ecs_field_names:
    - source.ip
    - http.status
```

The resulting event 🎉:

```json
{
  "message": "success",
  "status": 200,
  "source": {
    "ip": "1.1.1.1"
  }
}
```
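For readers who want to check the expected output without deploying anything, the four steps can be mirrored in plain Python. This is only an illustrative sketch: Matano runs the VRL program, not this code, and the `transform` function name here is hypothetical.

```python
import json


def transform(event: dict) -> dict:
    """Mirror the four VRL steps in plain Python (illustration only)."""
    # 1. Parse the raw `line` string and promote its fields to the top level.
    out = json.loads(event["line"])
    if not isinstance(out, dict):
        raise ValueError("expected the line to contain a JSON object")

    # 2. Rename srcIpAddress to the ECS field source.ip.
    out.setdefault("source", {})["ip"] = out.pop("srcIpAddress", None)

    # 3. Remove the username field.
    out.pop("username", None)

    # 4. Lowercase the message, failing if it is not a string.
    message = out["message"]
    if not isinstance(message, str):
        raise TypeError("expected message to be a string")
    out["message"] = message.lower()
    return out


raw_event = {
    "line": "{\"status\":200,\"srcIpAddress\":\"1.1.1.1\",\"message\":\"SUCCESS\",\"username\":\"ub40fan4life\"}"
}
result = transform(raw_event)
print(json.dumps(result, indent=2, sort_keys=True))
```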

## 📝 Writing Detections

[**Read the complete docs on detections**](https://www.matano.dev/docs/detections)

Use detections to define rules that can alert on threats in your security logs. A _detection_ is a Python program that is invoked with data from a log source in realtime and can create an _alert_.

### Examples

#### Detect failed attempts to export an AWS EC2 instance in AWS CloudTrail logs

```python
def detect(record):
    return (
        record.deepget("event.action") == "CreateInstanceExportTask"
        and record.deepget("event.provider") == "ec2.amazonaws.com"
        and record.deepget("event.outcome") == "failure"
    )
```
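A detection like this can be exercised locally before deploying. The sketch below uses a hypothetical `FakeRecord` class as a stand-in for the record object Matano passes to `detect`. It assumes `deepget` walks a dotted path through nested fields and returns a default when the path is missing, which is what the examples in this README suggest; the real record type may behave differently.

```python
class FakeRecord(dict):
    """Hypothetical stand-in for the record object passed to detect()."""

    def deepget(self, path, default=None):
        # Walk a dotted path such as "event.action" through nested dicts.
        node = self
        for key in path.split("."):
            if not isinstance(node, dict) or key not in node:
                return default
            node = node[key]
        return node


def detect(record):
    return (
        record.deepget("event.action") == "CreateInstanceExportTask"
        and record.deepget("event.provider") == "ec2.amazonaws.com"
        and record.deepget("event.outcome") == "failure"
    )


failed_export = FakeRecord(
    {"event": {"action": "CreateInstanceExportTask",
               "provider": "ec2.amazonaws.com",
               "outcome": "failure"}}
)
successful_export = FakeRecord(
    {"event": {"action": "CreateInstanceExportTask",
               "provider": "ec2.amazonaws.com",
               "outcome": "success"}}
)

print(detect(failed_export))      # matches all three conditions
print(detect(successful_export))  # outcome differs, so no match
```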

#### Detect Brute Force Logins by IP across all configured log sources (e.g. Okta, AWS, GWorkspace)

###### detect.py

```python
def detect(r):
    return (
        "authentication" in r.deepget("event.category", [])
        and r.deepget("event.outcome") == "failure"
    )


def title(r):
    return f"Multiple failed logins from {r.deepget('user.full_name')} - {r.deepget('source.ip')}"


def dedupe(r):
    return r.deepget("source.ip")
```

###### detection.yml

```yaml
---
tables:
  - aws_cloudtrail
  - okta_system
  - o365_audit
alert:
  severity: medium
  threshold: 5
  deduplication_window_minutes: 15
  destinations:
    - slack_my_team
```
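One way to read the `threshold` and `deduplication_window_minutes` settings is sketched below. It is not Matano's implementation. It assumes that matches sharing a `dedupe` key within the window are grouped into one alert, and that the alert activates once the match count reaches the threshold; the exact comparison and window semantics are assumptions, and `activated_keys` is a hypothetical helper.

```python
from datetime import datetime, timedelta

THRESHOLD = 5                   # alert.threshold
WINDOW = timedelta(minutes=15)  # alert.deduplication_window_minutes


def activated_keys(matches):
    """Return the dedupe keys whose grouped matches reach THRESHOLD.

    `matches` is an iterable of (timestamp, dedupe_key), sorted by time.
    """
    open_alerts = {}  # dedupe key -> (window start, match count)
    activated = set()
    for ts, key in matches:
        start, count = open_alerts.get(key, (ts, 0))
        if ts - start > WINDOW:
            # The window expired, so this match starts a new alert.
            start, count = ts, 0
        count += 1
        open_alerts[key] = (start, count)
        if count >= THRESHOLD:
            activated.add(key)
    return activated


t0 = datetime(2024, 1, 1, 12, 0)
# Five failures in five minutes from one IP.
noisy = [(t0 + timedelta(minutes=i), "203.0.113.7") for i in range(5)]
# Five failures spread over forty minutes from another IP.
quiet = [(t0 + timedelta(minutes=10 * i), "198.51.100.9") for i in range(5)]

matches = sorted(noisy + quiet)
print(activated_keys(matches))
```

Under these assumptions only the first IP activates an alert, because the second never accumulates five matches inside a single 15-minute window.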

#### Detect Successful Login from never before seen IP for User

```python
from detection import remotecache

# a cache of user -> ip[]
user_to_ips = remotecache("user_ip")


def detect(record):
    if (
        record.deepget("event.action") == "ConsoleLogin"
        and record.deepget("event.outcome") == "success"
    ):
        # A unique key on the user name
        user = record.deepget("user.name")

        existing_ips = user_to_ips[user] or []
        updated_ips = user_to_ips.add_to_string_set(
            user,
            record.deepget("source.ip")
        )

        # Alert on new IPs
        new_ips = set(updated_ips) - set(existing_ips)
        if existing_ips and new_ips:
            return True
```
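The `existing_ips and new_ips` check means a user's very first login only seeds the baseline and does not alert. The sketch below shows that behavior with a hypothetical in-memory stand-in for the remote cache. `InMemoryCache` and `is_new_ip` are illustrative names, and the assumptions that a missing key reads as `None` and that `add_to_string_set` returns the updated values are inferred from how the detection uses them, not taken from the Matano API reference.

```python
class InMemoryCache:
    """Hypothetical local stand-in for a remotecache instance."""

    def __init__(self):
        self._sets = {}

    def __getitem__(self, key):
        # Assumption: a missing key reads as None rather than raising.
        values = self._sets.get(key)
        return sorted(values) if values else None

    def add_to_string_set(self, key, value):
        # Assumption: returns the full set of values after the insert.
        self._sets.setdefault(key, set()).add(value)
        return sorted(self._sets[key])


def is_new_ip(cache, user, ip):
    """Same comparison as the detection, without the event filtering."""
    existing_ips = cache[user] or []
    updated_ips = cache.add_to_string_set(user, ip)
    new_ips = set(updated_ips) - set(existing_ips)
    return bool(existing_ips and new_ips)


cache = InMemoryCache()
first_login = is_new_ip(cache, "alice", "1.1.1.1")   # seeds the baseline
repeat_login = is_new_ip(cache, "alice", "1.1.1.1")  # known IP
novel_login = is_new_ip(cache, "alice", "2.2.2.2")   # unseen IP
print(first_login, repeat_login, novel_login)
```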

## 🚨 Alerting

[**Read the complete docs on alerting**](https://www.matano.dev/docs/detections/alerting)

#### Alerts table

All alerts are automatically stored in a Matano table named `matano_alerts`. The alerts and rule matches are normalized to ECS and contain context about the original event that triggered the rule match, along with the alert and rule data.

**Example Queries**

Summarize activated alerts (those that exceeded their threshold) from the last week:

```sql
select
  matano.alert.id as alert_id,
  matano.alert.rule.name as rule_name,
  max(matano.alert.title) as title,
  count(*) as match_count,
  min(matano.alert.first_matched_at) as first_matched_at,
  max(ts) as last_matched_at,
  array_distinct(flatten(array_agg(related.ip))) as related_ip,
  array_distinct(flatten(array_agg(related.user))) as related_user,
  array_distinct(flatten(array_agg(related.hosts))) as related_hosts,
  array_distinct(flatten(array_agg(related.hash))) as related_hash
from
  matano_alerts
where
  matano.alert.first_matched_at > (current_timestamp - interval '7' day)
  and matano.alert.activated = true
group by
  matano.alert.rule.name,
  matano.alert.id
order by
  last_matched_at desc
```

#### Delivering alerts

You can deliver alerts to external systems by subscribing to the alerting SNS topic, which can forward alerts to email, Slack, and other services.

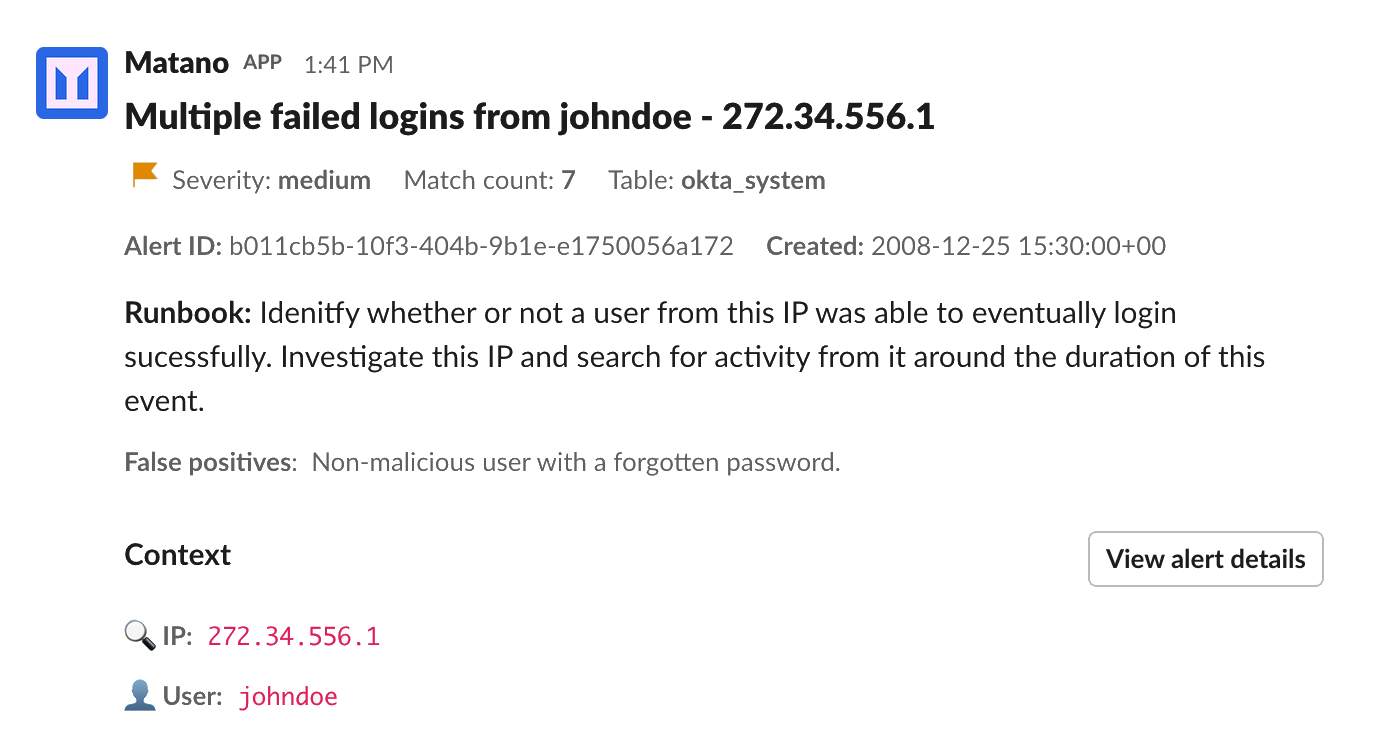

A medium severity alert delivered to Slack
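If you subscribe your own AWS Lambda function to the alerting SNS topic, a handler along these lines could unpack the messages. This is a minimal sketch: the `Records[].Sns.Message` envelope is the standard shape of an SNS-triggered Lambda event, but the alert payload format is not described here, so the code only decodes it as JSON when possible and makes no assumption about its fields. The `handler` name and the sample payload are placeholders.

```python
import json


def handler(event, context=None):
    """Collect the alert payloads carried by an SNS-triggered Lambda event."""
    alerts = []
    for record in event.get("Records", []):
        body = record["Sns"]["Message"]
        try:
            # Decode the payload if it is JSON.
            alerts.append(json.loads(body))
        except json.JSONDecodeError:
            # Otherwise keep the raw text so nothing is dropped.
            alerts.append({"raw": body})
    return alerts


# Placeholder event for local testing; the payload content is made up.
sample_event = {
    "Records": [
        {"Sns": {"Message": json.dumps({"example": "payload"})}},
        {"Sns": {"Message": "plain text"}},
    ]
}
decoded = handler(sample_event)
print(decoded)
```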


## ❤️ Community support

For general help on usage, please refer to the official [documentation](https://matano.dev/docs). For additional help, feel free to use one of these channels to ask a question:

- [Discord](https://discord.gg/YSYfHMbfZQ) \(Come join the family, and hang out with the team and community\)

- [Forum](https://github.com/matanolabs/matano/discussions) \(For deeper conversations about features, the project, or problems\)

- [GitHub](https://github.com/matanolabs/matano) \(Bug reports, Contributions\)

- [Twitter](https://twitter.com/matanolabs) \(Get news hot off the press\)

## 👷 Contributors

Thanks go to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

This project follows the [all-contributors](https://allcontributors.org) specification.

Contributions of any kind are welcome!

## License

- [Apache-2.0 License](LICENSE)