{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Gradient-based optimization"

]

},

{

"cell_type": "markdown",

"metadata": {

"tags": []

},

"source": [

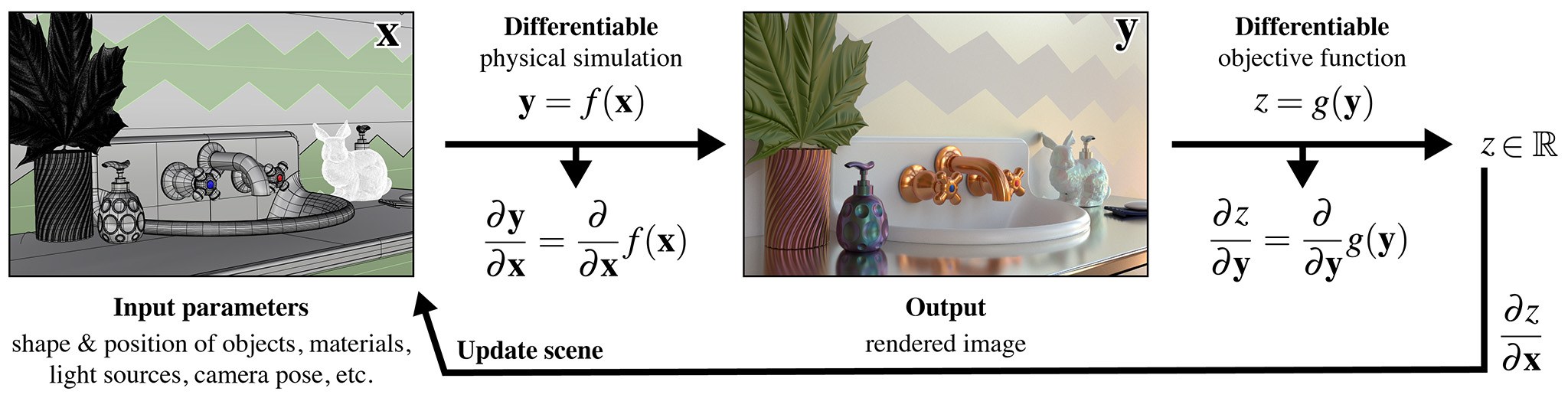

"## Overview\n",

"\n",

"Mitsuba 3 can be used to solve inverse problems involving light using a technique known as *differentiable rendering*. It interprets the rendering algorithm as a function $f(\\mathbf{x})$ that converts an input $\\mathbf{x}$ (the scene description) into an output $\\mathbf{y}$ (the rendering). This function $f$ is then mathematically differentiated to obtain $\\frac{d\\mathbf{y}}{d\\mathbf{x}}$, providing a first-order approximation of how a desired change in the output $\\mathbf{y}$ (the rendering) can be achieved by changing the inputs $\\mathbf{x}$ (the scene description). Together with a differentiable *objective function* $g(\\mathbf{y})$ that quantifies the suitability of tentative scene parameters, a gradient-based optimization algorithm such as stochastic gradient descent or Adam can then be used to find a sequence of scene parameters $\\mathbf{x_0}$, $\\mathbf{x_1}$, $\\mathbf{x_2}$, etc., that successively improve the objective function. In pictures:\n",

"\n",

"\n",

"\n",

"\n",

"In this tutorial, we will build a simple example application that showcases differentiation and optimization through a light transport simulation:\n",

"\n",

"1. We will first render a reference image of the Cornell Box scene.\n",

"2. Then, we will perturb the color of one of the walls, e.g. changing it to blue.\n",

"3. Finally, we will try to recover the original color of the wall using differentiation along with the reference image generated in step 1.\n",

"\n",

"Mitsuba’s ability to automatically differentiate entire rendering algorithms builds on differentiable JIT array types provided by the Dr.Jit library. Those are explained in the [Dr.Jit documentation][1]. The linked document also discusses key differences compared to related frameworks like PyTorch and TensorFlow. For *automatic differentiation* (AD), Dr.Jit records and simplifies computation graphs and uses them to propagate derivatives in forward or reverse mode. Before getting further into this tutorial, we recommend that you familiarize yourself Dr.Jit.\n",

"\n",

"

\n",

"\n",

"🚀 **You will learn how to:**\n",

" \n",

"

\n",

"

Pass scene arguments when loading an XML file

\n",

"

Build an optimization loop using the Optimizer classes

\n",

"

Perform a gradient-based optimization using automatic differentiation

\n",

"

\n",

" \n",

"

\n",

"\n",

" \n",

"[1]: https://drjit.readthedocs.io/en/master/"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Setup\n",

"\n",

"In order to use the automatic differentiation, we need to enable a variant that supports it. Those are the ones containing `_ad` after the backend description. E.g. `cuda_ad_rgb`, `llvm_ad_rgb`, …\n",

"\n",

"\n",

"

\n",

" \n",

"If you receive an error mentionning that the requested variant is not supported, you can switch to another available `_ad` variant. If you compiled Mitsuba 3 yourself, you can also add the desired variant to your `build/mitsuba.conf` file and recompile the project ([documentation][1]).\n",

"\n",

"[1]: https://mitsuba.readthedocs.io/en/latest/src/developer_guide/compiling.html#configuring-mitsuba-conf\n",

"\n",

"

"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"ExecuteTime": {

"end_time": "2021-06-15T14:13:40.427650Z",

"start_time": "2021-06-15T14:13:40.259649Z"

}

},

"outputs": [],

"source": [

"import drjit as dr\n",

"import mitsuba as mi\n",

"\n",

"mi.set_variant('llvm_ad_rgb')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Scene loading\n",

"\n",

"Before loading the scene, let's note that in `cbox.xml`, we expose some variables at the top of the file:\n",

"\n",

"```xml\n",

" \n",

" \n",

" \n",

" \n",

"```\n",

"\n",

"Those variables are later referenced in the XML file, as explained in the [XML scene format documentation][1].\n",

"They can be given new values directly from Python when loading the scene by passing keyword arguments to the `load_file()` function. This helpful feature let us change the film resolution and integrator type for this tutorial without editing the XML file.\n",

"\n",

"For this simple differentiable rendering example, which does not involve moving objects or cameras, we recommand using the Path Replay Backpropagation integrator ([prb][2]) introduced by Vicini et al. (2021). It is essentially a path tracer, augmented with a specialized algorithm to efficiently compute the gradients in a separate adjoint pass.\n",

"\n",

"[1]: https://mitsuba.readthedocs.io/en/latest/src/key_topics/scene_format.html\n",

"[2]: https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.integrators.prb"

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {

"ExecuteTime": {

"end_time": "2021-06-15T14:13:40.914750Z",

"start_time": "2021-06-15T14:13:40.807334Z"

}

},

"outputs": [],

"source": [

"scene = mi.load_file('../scenes/cbox.xml', res=128, integrator='prb')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Reference image\n",

"\n",

"We render a reference image of the original scene that will later be used in the objective function for the optimization. Ideally, this reference image should expose very little noise as it will pertube optimization process otherwise. For best results, we should render it with an even larger sample count."

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

"Bitmap[\n",

" pixel_format = rgb,\n",

" component_format = uint8,\n",

" size = [128, 128],\n",

" srgb_gamma = 1,\n",

" struct = Struct<3>[\n",

" uint8 R; // @0, normalized, gamma, premultiplied alpha\n",

" uint8 G; // @1, normalized, gamma, premultiplied alpha\n",

" uint8 B; // @2, normalized, gamma, premultiplied alpha\n",

" ],\n",

" data = [ 48 KiB of image data ]\n",

"]"

]

},

"execution_count": 3,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"image_ref = mi.render(scene, spp=512)\n",

"\n",

"# Preview the reference image\n",

"mi.util.convert_to_bitmap(image_ref)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Initial state\n",

"\n",

"Using the `traverse` mechanism, we can pick the parameter that we will be optimizing and change its value away from the correct value. The goal of the optimization process will be to recover the original value of this parameter using gradient descent. \n",

"\n",

"We chose the `'red.reflectance.value'` parameter, which controls the albedo color of the red wall in the scene.\n",

"For later comparison, we also save the original value of the scene parameter."

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {},

"outputs": [],

"source": [

"params = mi.traverse(scene)\n",

"\n",

"key = 'red.reflectance.value'\n",

"\n",

"# Save the original value\n",

"param_ref = mi.Color3f(params[key])\n",

"\n",

"# Set another color value and update the scene\n",

"params[key] = mi.Color3f(0.01, 0.2, 0.9)\n",

"params.update();"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"As expected, when rendering the scene again, the wall has changed color."

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

"Bitmap[\n",

" pixel_format = rgb,\n",

" component_format = uint8,\n",

" size = [128, 128],\n",

" srgb_gamma = 1,\n",

" struct = Struct<3>[\n",

" uint8 R; // @0, normalized, gamma, premultiplied alpha\n",

" uint8 G; // @1, normalized, gamma, premultiplied alpha\n",

" uint8 B; // @2, normalized, gamma, premultiplied alpha\n",

" ],\n",

" data = [ 48 KiB of image data ]\n",

"]"

]

},

"execution_count": 5,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"image_init = mi.render(scene, spp=128)\n",

"mi.util.convert_to_bitmap(image_init)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Optimization\n",

"\n",

"For gradient-based optimization, Mitsuba ships with standard optimizers including *Stochastic Gradient Descent* ([SGD][1]) with and without momentum, as well as [Adam][2] [KB14]. We will instantiate the latter and optimize our scene parameter with a learning rate of `0.05`. \n",

"\n",

"We then set the color to optimize on the optimizer, which will now hold a copy of this parameter and enable gradient tracking on it. During the optimization process, the optimizer will always perfom gradient steps on those variables. To propagate those changes to the scene, we need to call the `update()` method which will copy the values back into the `params` data structure. As always this method also notifies all objects in the scene whose parameters have changed, in case they need to update their internal state.\n",

"\n",

"This first call to `params.update()` ensures that gradient tracking with respect to our wall color parameter is propagated to the scene internal state. For more detailed explanation on how-to-use the optimizer classes, please refer to the dedicated [how-to-guide][3].\n",

"\n",

"[1]: https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.SGD\n",

"[2]: https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.Adam\n",

"[3]: https://mitsuba.readthedocs.io/en/latest/src/how_to_guides/use_optimizers.html"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {

"ExecuteTime": {

"end_time": "2021-06-15T14:13:51.368393Z",

"start_time": "2021-06-15T14:13:51.362695Z"

}

},

"outputs": [],

"source": [

"opt = mi.ad.Adam(lr=0.05)\n",

"opt[key] = params[key]\n",

"params.update(opt);"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"At every iteration of the gradient descent, we will compute the derivatives of the scene parameters with respect to the objective function. In this simple experiment, we use the [*mean square error*][1], or $L_2$ error, between the current image and the reference created above.\n",

"\n",

"[1]: https://en.wikipedia.org/wiki/Mean_squared_error"

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {},

"outputs": [],

"source": [

"def mse(image):\n",

" return dr.mean(dr.square(image - image_ref))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"In the following cell we define the hyper parameters controlling our optimization loop, such as the number of iterations:"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {},

"outputs": [],

"source": [

"iteration_count = 50"

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {

"nbsphinx": "hidden",

"tags": []

},

"outputs": [],

"source": [

"# IGNORE THIS: When running under pytest, adjust parameters to reduce computation time\n",

"import os\n",

"if 'PYTEST_CURRENT_TEST' in os.environ:\n",

" iteration_count = 2"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"It is now time to actually perform the gradient-descent loop that executes 50 differentiable rendering iterations."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"ExecuteTime": {

"end_time": "2021-06-15T14:14:04.664564Z",

"start_time": "2021-06-15T14:13:54.209445Z"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iteration 49: parameter error = [0.00147954]\n",

"Optimization complete.\n"

]

}

],

"source": [

"errors = []\n",

"for it in range(iteration_count):\n",

" # Perform a (noisy) differentiable rendering of the scene\n",

" image = mi.render(scene, params, spp=4)\n",

" \n",

" # Evaluate the objective function from the current rendered image\n",

" loss = mse(image)\n",

"\n",

" # Backpropagate through the rendering process\n",

" dr.backward(loss)\n",

"\n",

" # Optimizer: take a gradient descent step\n",

" opt.step()\n",

"\n",

" # Post-process the optimized parameters to ensure legal color values.\n",

" opt[key] = dr.clip(opt[key], 0.0, 1.0)\n",

"\n",

" # Update the scene state to the new optimized values\n",

" params.update(opt)\n",

" \n",

" # Track the difference between the current color and the true value\n",

" err_ref = dr.sum(dr.square(param_ref - params[key]))\n",

" print(f\"Iteration {it:02d}: parameter error = {err_ref}\", end='\\r')\n",

" errors.append(dr.slice(err_ref))\n",

"print('\\nOptimization complete.')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Results\n",

"\n",

"We can now render the scene again to check whether the optimization process successfully recovered the color of the red wall."

]

},

{

"cell_type": "code",

"execution_count": 11,

"metadata": {

"ExecuteTime": {

"end_time": "2021-06-15T14:14:07.765382Z",

"start_time": "2021-06-15T14:14:06.895491Z"

}

},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

"Bitmap[\n",

" pixel_format = rgb,\n",

" component_format = uint8,\n",

" size = [128, 128],\n",

" srgb_gamma = 1,\n",

" struct = Struct<3>[\n",

" uint8 R; // @0, normalized, gamma, premultiplied alpha\n",

" uint8 G; // @1, normalized, gamma, premultiplied alpha\n",

" uint8 B; // @2, normalized, gamma, premultiplied alpha\n",

" ],\n",

" data = [ 48 KiB of image data ]\n",

"]"

]

},

"execution_count": 11,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"image_final = mi.render(scene, spp=128)\n",

"mi.util.convert_to_bitmap(image_final)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"It worked!\n",

"\n",

"Note visualizing the objective value directly sometimes gives limited information, since differences between `image` and `image_ref` can be dominated by Monte Carlo noise that is not related to the parameter being optimized. \n",

"\n",

"Since we know the “true” target parameter in this scene, we can validate the convergence of the optimization by checking the difference to the true color at each iteration:"

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"nbsphinx-thumbnail": {},

"tags": []

},

"outputs": [

{

"data": {

"image/png": "iVBORw0KGgoAAAANSUhEUgAAAjcAAAHHCAYAAABDUnkqAAAAOXRFWHRTb2Z0d2FyZQBNYXRwbG90bGliIHZlcnNpb24zLjkuMCwgaHR0cHM6Ly9tYXRwbG90bGliLm9yZy80BEi2AAAACXBIWXMAAA9hAAAPYQGoP6dpAABMsklEQVR4nO3dd3hUZf428PtMT++dQCihBgIGiKEIK5EICpbdFRFFsfCT4oJZ312iAmIDlWVRAUGkrasSkcWCK4gBI2CWktB7SUiA9JBJzyQz5/0jycCQACkzc2Ym9+e6ZjNz5pTvnATn3uc853kEURRFEBERETkImdQFEBEREZkTww0RERE5FIYbIiIicigMN0RERORQGG6IiIjIoTDcEBERkUNhuCEiIiKHwnBDREREDoXhhoiIiBwKww0RkYNZv349BEFARkaG1KUQSYLhhsgGNHwZNTw0Gg26d++OmTNnIjc3V+ryLOr333/HG2+8geLiYqlLIQBffvklli5dKnUZRG3CcENkQ9588018/vnnWLZsGYYMGYJPPvkEMTExqKiokLo0i/n999+xYMEChhsbwXBDjkAhdQFEdN2YMWMwcOBAAMDzzz8PHx8fLFmyBN999x0mTpzY6v0aDAbodDpoNBpzlWrzKioq4OzsbPb9lpeXw8XFxSLHrK2thcFggEqlavU+iIgtN0Q27d577wUApKenAwAWL16MIUOGwMfHB05OToiKisI333zTaDtBEDBz5kx88cUX6NOnD9RqNbZt29aqfWzatAm9e/eGk5MTYmJicOzYMQDAqlWr0K1bN2g0GowcObLJ/h379u3D/fffDw8PDzg7O2PEiBHYu3ev8f033ngD/+///T8AQOfOnY2X5W7c17///W9ERUXByckJ3t7eePzxx5GVlWVynJEjRyIiIgKpqam455574OzsjFdfffW25/b06dP405/+BG9vb2g0GgwcOBDff/+9yToNlwuTk5Mxffp0+Pv7o0OHDnc8Zl5eHp577jkEBARAo9EgMjISGzZsMNl3RkYGBEHA4sWLsXTpUnTt2hVqtRonT568Zc03/l579OgBjUaDqKgo/Pbbb7f9rA1WrFhh/HsIDg7GjBkzTFrMRo4ciR9//BGXLl0y/i7CwsKatW8iW8KWGyIbduHCBQCAj48PAODDDz/E+PHjMWnSJOh0OmzcuBF//vOfsXXrVjzwwAMm2+7cuRNff/01Zs6cCV9fX+OXVEv2sXv3bnz//feYMWMGAGDhwoV48MEH8be//Q0rVqzA9OnTce3aNbz//vt49tlnsXPnTpPjjxkzBlFRUZg/fz5kMhnWrVuHe++9F7t378bgwYPx6KOP4uzZs/jqq6/wz3/+E76+vgAAPz8/AMA777yDuXPn4rHHHsPzzz+P/Px8fPzxx7jnnntw6NAheHp6Go9XWFiIMWPG4PHHH8eTTz6JgICAW57XEydOYOjQoQgJCcGcOXPg4uKCr7/+Gg8//DA2b96MRx55xGT96dOnw8/PD/PmzUN5efltj1lZWYmRI0fi/PnzmDlzJjp37oxNmzbhmWeeQXFxMWbNmmWy73Xr1qGqqgpTp06FWq2Gt7f3LesGgOTkZCQmJuIvf/kL1Go1VqxYgfvvvx/79+9HRETELbd74403sGDBAsTGxmLatGk4c+YMPvnkExw4cAB79+6FUqnEa6+9Bq1Wi8uXL+Of//wnAMDV1fW29RDZJJGIJLdu3ToRgPjLL7+I+fn5YlZWlrhx40bRx8dHdHJyEi9fviyKoihWVFSYbKfT6cSIiAjx3nvvNVkOQJTJZOKJEycaHasl+1Cr1WJ6erpx2apVq0QAYmBgoFhSUmJcnpCQIAIwrmswGMTw8HAxLi5ONBgMJsfu3LmzeN999xmXffDBBybbNsjIyBDlcrn4zjvvmCw/duyYqFAoTJaPGDFCBCCuXLmy0edtyqhRo8S+ffuKVVVVxmUGg0EcMmSIGB4ebl
zW8HsZNmyYWFtba7KPWx1z6dKlIgDx3//+t3GZTqcTY2JiRFdXV+N5S09PFwGI7u7uYl5eXrPqBiACEA8ePGhcdunSJVGj0YiPPPJIo7obzmleXp6oUqnE0aNHi3q93rjesmXLRADi2rVrjcseeOABsVOnTs2qh8hW8bIUkQ2JjY2Fn58fQkND8fjjj8PV1RVbtmxBSEgIAMDJycm47rVr16DVajF8+HCkpaU12teIESPQu3fvRstbso9Ro0aZXJaIjo4GAPzxj3+Em5tbo+UXL14EABw+fBjnzp3DE088gcLCQhQUFKCgoADl5eUYNWoUfvvtNxgMhtuei//85z8wGAx47LHHjNsXFBQgMDAQ4eHh2LVrl8n6arUaU6ZMue0+AaCoqAg7d+7EY489htLSUuN+CwsLERcXh3PnzuHKlSsm27zwwguQy+WN9tXUMf/73/8iMDDQpI+UUqnEX/7yF5SVlSE5Odlk/T/+8Y/GlqrmiImJQVRUlPF1x44d8dBDD2H79u3Q6/VNbvPLL79Ap9Nh9uzZkMmu/2f/hRdegLu7O3788cdmH5/IHvCyFJENWb58Obp37w6FQoGAgAD06NHD5Mto69atePvtt3H48GFUV1cblwuC0GhfnTt3bvIYLdlHx44dTV57eHgAAEJDQ5tcfu3aNQDAuXPnAABPP/30LT+rVquFl5fXLd8/d+4cRFFEeHh4k+8rlUqT1yEhIc3qiHv+/HmIooi5c+di7ty5Ta6Tl5dnDJTArc9lU8e8dOkSwsPDTX5vANCrVy/j+ze61b5vpanz0b17d1RUVCA/Px+BgYGN3m84Zo8ePUyWq1QqdOnSpVFNRPaO4YbIhgwePNh4t9TNdu/ejfHjx+Oee+7BihUrEBQUBKVSiXXr1uHLL79stP6NLTSt3UdTrRW3Wy6KIgAYW2U++OAD9O/fv8l179SXw2AwQBAE/PTTT00e7+btm/q8t9ovALzyyiuIi4trcp1u3bo1a9/NPebtmGMfRGSK4YbITmzevBkajQbbt2+HWq02Ll+3bp1V99EcXbt2BQC4u7sjNjb2tus21WLUsA9RFNG5c2d0797dbLV16dIFQF3Lz51qa41OnTrh6NGjMBgMJq03p0+fNr7fFg2tYjc6e/YsnJ2db3l5q+GYZ86cMX5+ANDpdEhPTzc5D7f6fRDZE/a5IbITcrkcgiCY9KvIyMjAt99+a9V9NEdUVBS6du2KxYsXo6ysrNH7+fn5xucNY8bcPIjfo48+CrlcjgULFhhbhBqIoojCwsJW1ebv74+RI0di1apVyM7Ovm1trTF27Fjk5OQgMTHRuKy2thYff/wxXF1dMWLEiDbtPyUlxaR/VFZWFr777juMHj36li1qsbGxUKlU+Oijj0zO5Zo1a6DVak3uknNxcYFWq21TjURSY8sNkZ144IEHsGTJEtx///144oknkJeXh+XLl6Nbt244evSo1fbRHDKZDJ999hnGjBmDPn36YMqUKQgJCcGVK1ewa9cuuLu744cffgAAY+fY1157DY8//jiUSiXGjRuHrl274u2330ZCQgIyMjLw8MMPw83NDenp6diyZQumTp2KV155pVX1LV++HMOGDUPfvn3xwgsvoEuXLsjNzUVKSgouX76MI0eOtPqzT506FatWrcIzzzyD1NRUhIWF4ZtvvsHevXuxdOlSk47YrREREYG4uDiTW8EBYMGCBbfcxs/PDwkJCViwYAHuv/9+jB8/HmfOnMGKFSswaNAgPPnkk8Z1o6KikJiYiPj4eAwaNAiurq4YN25cm2omsjoJ79QionoNt+4eOHDgtuutWbNGDA8PF9VqtdizZ09x3bp14vz588Wb/ykDEGfMmGH2fTTcvvzBBx+YLN+1a5cIQNy0aZPJ8kOHDomPPvqo6OPjI6rVarFTp07iY489JiYlJZms99Zbb4khISGiTCZrdFv45s2bxWHDhokuLi6ii4uL2LNnT3HGjBnimTNnjOuMGDFC7N
Onz23P3c0uXLggTp48WQwMDBSVSqUYEhIiPvjgg+I333xjXOd2v5fbHTM3N1ecMmWK6OvrK6pUKrFv377iunXrTNa51bm8nYbfyb///W/j73DAgAHirl27TNa7+VbwBsuWLRN79uwpKpVKMSAgQJw2bZp47do1k3XKysrEJ554QvT09BQB8LZwskuCKN7U3ktERDZJEATMmDEDy5Ytk7oUIpvGPjdERETkUBhuiIiIyKEw3BAREZFD4d1SRER2gl0kiZqHLTdERETkUBhuiIiIyKG0u8tSBoMBV69ehZubG4cZJyIishOiKKK0tBTBwcGNJqa9WbsLN1evXm00ozERERHZh6ysLHTo0OG267S7cNMw9HlWVhbc3d0lroaIiIiao6SkBKGhoc2awqTdhZuGS1Hu7u4MN0RERHamOV1K2KGYiIiIHArDDRERETkUhhsiIiJyKAw3RERE5FAYboiIiMihMNwQERGRQ2G4ISIiIofCcENEREQOheGGiIiIHArDDRERETkUhhsiIiJyKAw3RERE5FAYbsyosKwa53JLpS6DiIioXWO4MZOkU7mIevsXzE48LHUpRERE7RrDjZl0D3ADAJzLLYOu1iBxNURERO0Xw42ZdPBygptaAZ3egAv5ZVKXQ0RE1G4x3JiJIAjoFewOADh5tUTiaoiIiNovhhsz6h1UH26yGW6IiIikwnBjRr3rW25OMdwQERFJhuHGjG5suRFFUeJqiIiI2ieGGzMKD3CFQiaguKIG2doqqcshIiJqlxhuzEitkKObvysAdiomIiKSCsONmTX0u2GnYiIiImkw3JiZsd8NW26IiIgkwXBjZmy5ISIikhbDjZk1tNxkFlWgpKpG4mqIiIjaH4YbM/N0ViHE0wkAcDqbM4QTERFZG8ONBfQy9rvRSlwJERFR+8NwYwHsd0NERCQdhhsL4BxTRERE0mG4sYA+9S03Z3PKUKM3SFwNERFR+8JwYwEdvJzgplZApzfgQn6Z1OUQERG1Kww3FiAIAnoFczA/IiIiKTDcWAhHKiYiIpIGw42F8I4pIiIiaTDcWMiNd0yJoihxNURERO0Hw42FhAe4QiETUFxRg2xtldTlEBERtRsMNxaiVsjRzd8VAPvdEBERWRPDjQWx3w0REZH1MdxYEO+YIiIisj6GGwtiyw0REZH1MdxYUEPLTWZRBUqqaiSuhoiIqH1guLEgT2cVQjydAACns0slroaIiKh9YLixsF7GfjdaiSshIiJqHxhuLIz9boiIiKyL4cbCbhypmIiIiCyP4cbC+tS33JzNKUON3iBxNURERI6P4cbCOng5wU2tgE5vwIX8MqnLISIicngMNxYmCAJ6BXMwPyIiImthuLECjlRMRERkPQw3VsA7poiIiKyH4cYKbrxjShRFiashIiJybAw3VhAe4AqFTEBxRQ2ytVVSl0NEROTQGG6sQK2Qo5u/KwD2uyEiIrI0ycPN8uXLERYWBo1Gg+joaOzfv/+26y9duhQ9evSAk5MTQkND8fLLL6OqyvZbQ9jvhoiIyDokDTeJiYmIj4/H/PnzkZaWhsjISMTFxSEvL6/J9b/88kvMmTMH8+fPx6lTp7BmzRokJibi1VdftXLlLcc7poiIiKxD0nCzZMkSvPDCC5gyZQp69+6NlStXwtnZGWvXrm1y/d9//x1Dhw7FE088gbCwMIwePRoTJ068Y2uPLeA0DERERNYhWbjR6XRITU1FbGzs9WJkMsTGxiIlJaXJbYYMGYLU1FRjmLl48SL++9//YuzYsbc8TnV1NUpKSkweUmiYHTyzqAIlVTWS1EBERNQeSBZuCgoKoNfrERAQYLI8ICAAOTk5TW7zxBNP4M0338SwYcOgVCrRtWtXjBw58raXpRYuXAgPDw/jIzQ01Kyfo7m8XFQI9tAAAE5nl0pSAxERUXsgeYfilvj111/x7rvvYsWKFUhLS8N//vMf/Pjjj3jrrbduuU1CQgK0Wq3xkZWVZcWKTRk7FV
/VSlYDERGRo1NIdWBfX1/I5XLk5uaaLM/NzUVgYGCT28ydOxdPPfUUnn/+eQBA3759UV5ejqlTp+K1116DTNY4q6nVaqjVavN/gFboHeSOX07lsd8NERGRBUnWcqNSqRAVFYWkpCTjMoPBgKSkJMTExDS5TUVFRaMAI5fLAcAuRv7l7eBERESWJ1nLDQDEx8fj6aefxsCBAzF48GAsXboU5eXlmDJlCgBg8uTJCAkJwcKFCwEA48aNw5IlSzBgwABER0fj/PnzmDt3LsaNG2cMObasd5AHAOBsThlq9AYo5XZ1VZCIiMguSBpuJkyYgPz8fMybNw85OTno378/tm3bZuxknJmZadJS8/rrr0MQBLz++uu4cuUK/Pz8MG7cOLzzzjtSfYQWCfV2grtGgZKqWpzJKUVEiIfUJRERETkcQbSH6zlmVFJSAg8PD2i1Wri7u1v9+E+t2Yfd5wrwziMRmBTdyerHJyIiskct+f7mdREri+zgCQA4nFksaR1ERESOiuHGyvqHegIAjlwulrQOIiIiR8VwY2X9Quv62ZzLK0NZda3E1RARETkehhsr83fTIMTTCaIIHLvMwfyIiIjMjeFGApH1rTe8NEVERGR+DDcSaOhUfCSrWNI6iIiIHBHDjQQiGzoVM9wQERGZHcONBPqGeEAmAFe1VcgrqZK6HCIiIofCcCMBF7UC3QPcAACH2XpDRERkVgw3EjH2u2GnYiIiIrNiuJHI9X43vB2ciIjInBhuJHLj7eAGQ7ua3ouIiMiiGG4k0j3ADRqlDKVVtUgvLJe6HCIiIofBcCMRpVyGiOD61ht2KiYiIjIbhhsJNfS74R1TRERE5sNwI6H+HMyPiIjI7BhuJNQQbk5ml6C6Vi9tMURERA6C4UZCHbyc4O2iQo1exKnsUqnLISIicggMNxISBAGRHdipmIiIyJwYbiTGSTSJiIjMi+FGYsY7pjgNAxERkVkw3Eisf/0cUxfzy6GtqJG2GCIiIgfAcCMxLxcVOvk4AwCOXimWthgiIiIHwHBjA4wzhLPfDRERUZsx3NiA6yMVc4ZwIiKitmK4sQH962cIP5xVDFHkDOFERERtwXBjA/oEe0AuE1BQVo1sbZXU5RAREdk1hhsboFHK0TPQDQD73RAREbUVw42N6M8ZwomIiMyC4cZGRDLcEBERmQXDjY1oaLk5dkULvYGdiomIiFqL4cZGdPVzhYtKjgqdHufzyqQuh4iIyG4x3NgIuUxAX84QTkRE1GYMNzaEk2gSERG1HcONDenPaRiIiIjajOHGhvTv6AkAOJ1TikqdXtpiiIiI7BTDjQ0JdNfA300NvUHEiaucZ4qIiKg1GG5siCAIHO+GiIiojRhubEzDeDdHLrPlhoiIqDUYbmxMJDsVExERtQnDjY1pGOsms6gCReU6iashIiKyPww3NsbDSYmufi4AgEOZ1ySuhoiIyP4w3NiggZ28AQD7M4okroSIiMj+MNzYoMGd68LNgXSGGyIiopZiuLFBDeHm6GUtB/MjIiJqIYYbG9TBywlBHhrUGkT2uyEiImohhhsbJAiCsfWG/W6IiIhahuHGRg0Kqw837HdDRETUIgw3Niq6vuUmLfMadLUGiashIiKyHww3Nqqbvyu8XVSoqjHgOCfRJCIiajaGGxslCAIGdvICwEtTRERELcFwY8M43g0REVHLMdzYsOjOPgDq7pjSG0SJqyEiIrIPDDc2rFeQG1xUcpRW1eJMTqnU5RAREdkFhhsbppDLEFV/S/gBjndDRETULAw3Nm5wGDsVExERtQTDjY0bfEO/G1FkvxsiIqI7Ybixcf06eEClkCG/tBoZhRVSl0NERGTzGG5snEYpR/8OngCA/emF0hZDRERkBxhu7IBxEs10zhBORER0Jww3duD6DOFsuSEiIroThhs7cFcnL8gEIKuoEtnaSqnLISIismmSh5vly5cjLCwMGo0G0dHR2L9//23XLy4uxowZMxAUFAS1Wo3u3bvjv/
/9r5WqlYarWoGIEA8AvCWciIjoTiQNN4mJiYiPj8f8+fORlpaGyMhIxMXFIS8vr8n1dTod7rvvPmRkZOCbb77BmTNnsHr1aoSEhFi5cusbFNbQ74bhhoiI6HYkDTdLlizBCy+8gClTpqB3795YuXIlnJ2dsXbt2ibXX7t2LYqKivDtt99i6NChCAsLw4gRIxAZGWnlyq3POIkmRyomIiK6LcnCjU6nQ2pqKmJjY68XI5MhNjYWKSkpTW7z/fffIyYmBjNmzEBAQAAiIiLw7rvvQq/X3/I41dXVKCkpMXnYo4aWm7O5ZSgq10lcDRERke2SLNwUFBRAr9cjICDAZHlAQABycnKa3ObixYv45ptvoNfr8d///hdz587FP/7xD7z99tu3PM7ChQvh4eFhfISGhpr1c1iLt4sK4f6uANh6Q0REdDuSdyhuCYPBAH9/f3z66aeIiorChAkT8Nprr2HlypW33CYhIQFardb4yMrKsmLF5mW8NMV+N0RERLekkOrAvr6+kMvlyM3NNVmem5uLwMDAJrcJCgqCUqmEXC43LuvVqxdycnKg0+mgUqkabaNWq6FWq81bvEQGd/bGF/sysZ8tN0RERLckWcuNSqVCVFQUkpKSjMsMBgOSkpIQExPT5DZDhw7F+fPnYTAYjMvOnj2LoKCgJoONo2louTlxtQRl1bUSV0NERGSbJL0sFR8fj9WrV2PDhg04deoUpk2bhvLyckyZMgUAMHnyZCQkJBjXnzZtGoqKijBr1iycPXsWP/74I959913MmDFDqo9gVUEeTgj1doLeICLtEqdiICIiaopkl6UAYMKECcjPz8e8efOQk5OD/v37Y9u2bcZOxpmZmZDJruev0NBQbN++HS+//DL69euHkJAQzJo1C3//+9+l+ghWNyjMG1lFV7A/vQj3dPeTuhwiIiKbI4iiKEpdhDWVlJTAw8MDWq0W7u7uUpfTYokHMvH3zccwuLM3vv6/pi/fEREROZqWfH/b1d1SBAzu7AMAOJxVjKqaW4/vQ0RE1F4x3NiZMB9n+Lqqoas14OhlrdTlEBER2RyGGzsjCAKiORUDERHRLTHc2KGGW8L3cTA/IiKiRhhu7FDDPFNpl66hVm+4w9pERETtS6tuBU9PT8fu3btx6dIlVFRUwM/PDwMGDEBMTAw0Go25a6Sb9Ah0g7tGgZKqWpzKLkXfDh5Sl0RERGQzWhRuvvjiC3z44Yc4ePAgAgICEBwcDCcnJxQVFeHChQvQaDSYNGkS/v73v6NTp06Wqrndk8sEDArzRtLpPOxLL2S4ISIiukGzL0sNGDAAH330EZ555hlcunQJ2dnZSE1NxZ49e3Dy5EmUlJTgu+++g8FgwMCBA7Fp0yZL1t3uxXStuyV8z/kCiSshIiKyLc0exG/79u2Ii4tr1k4LCwuRkZGBqKioNhVnCfY+iF+DU9klGPPhbjgp5Tg8/z6oFfI7b0RERGSnLDKIX3ODDQD4+PjYZLBxJD0D3eDrqkZljR5pl4qlLoeIiMhmtGluqby8POTl5ZnM0g0A/fr1a1NRdGeCIGBYNx98e/gq9pzPN16mIiIiau9aFW5SU1Px9NNP49SpU2i4qiUIAkRRhCAI0Os5LYA1DAv3qws35wrw/5rfsEZEROTQWhVunn32WXTv3h1r1qxBQEAABEEwd13UDMO6+QIAjl7RorhCB09nlcQVERERSa9V4ebixYvYvHkzunXrZu56qAUCPTQI93fFubwy/H6hEGP7BkldEhERkeRaNULxqFGjcOTIEXPXQq0wLLyu9Wb3Od4STkREBLSy5eazzz7D008/jePHjyMiIgJKpdLk/fHjx5ulOLqz4eG+WLc3A3vO50tdChERkU1oVbhJSUnB3r178dNPPzV6jx2KrSu6sw+UcgFZRZXILKxARx9nqUsiIiKSVKsuS7300kt48sknkZ2dDYPBYPJgsLEuF7UCAzp6AQB2s/WGiIiodeGmsLAQL7/8MgICAsxdD7
XC8Pq7pvaw3w0REVHrws2jjz6KXbt2mbsWaqWGTsW/XyiE3tCs2TSIiIgcVqv63HTv3h0JCQnYs2cP+vbt26hD8V/+8hezFEfN0zfEA24aBbSVNTh2RYv+oZ5Sl0RERCSZZk+ceaPOnTvfeoeCgIsXL7apKEtylIkzb/Z/nx/E9hO5eGV0d8y8N1zqcoiIiMyqJd/frWq5SU9Pb1VhZDnDwv2w/UQudp8rYLghIqJ2rVV9bsj2NHQqTsu8hvLqWomrISIikk6rZwW/fPkyvv/+e2RmZkKn05m8t2TJkjYXRi3TyccZHbyccPlaJfanF+EPPf2lLomIiEgSrQo3SUlJGD9+PLp06YLTp08jIiICGRkZEEURd911l7lrpGYQBAHDw33x1f4s7D5XwHBDRETtVqsuSyUkJOCVV17BsWPHoNFosHnzZmRlZWHEiBH485//bO4aqZmGdfMDAE7FQERE7Vqrws2pU6cwefJkAIBCoUBlZSVcXV3x5ptv4r333jNrgdR8Q7r6QBCAs7llyC2pkrocIiIiSbQq3Li4uBj72QQFBeHChQvG9woKOEquVLxcVOgb4gGAoxUTEVH71apwc/fdd2PPnj0AgLFjx+Kvf/0r3nnnHTz77LO4++67zVogtcywhqkYzjPcEBFR+9SqDsVLlixBWVkZAGDBggUoKytDYmIiwsPDeaeUxIaF+2LFrxew53wBRFGEIAhSl0RERGRVLQ43er0ely9fRr9+/QDUXaJauXKl2Quj1onq5AWNUob80mqcyS1Fz0DHGYWZiIioOVp8WUoul2P06NG4du2aJeqhNlIr5Bjc2QcA+90QEVH71Ko+NxERETY9f1R7N5z9boiIqB1rVbh5++238corr2Dr1q3Izs5GSUmJyYOkNSy8Ltzsu1iE6lq9xNUQERFZV6s6FI8dOxYAMH78eJMOqw0dWPV6fqFKqWegG3xd1Sgoq0bapWLEdPWRuiQiIiKraVW42bVrl7nrIDMSBAHDuvng28NXsed8PsMNERG1K60KNyNGjDB3HWRmw8L96sLNuQL8vzipqyEiIrKeVs8KDgAVFRVNzgrecJs4SadhML+jV7QortDB01klcUVERETW0apwk5+fjylTpuCnn35q8n32uZFeoIcG4f6uOJdXht8vFGJs3yCpSyIiIrKKVt0tNXv2bBQXF2Pfvn1wcnLCtm3bsGHDBoSHh+P77783d43USg13Te0+x1nCiYio/WhVy83OnTvx3XffYeDAgZDJZOjUqRPuu+8+uLu7Y+HChXjggQfMXSe1wojufli3NwO7TudzKgYiImo3WtVyU15eDn9/fwCAl5cX8vPrWgb69u2LtLQ081VHbXJ3Fx84q+TIKanCiascf4iIiNqHVoWbHj164MyZMwCAyMhIrFq1CleuXMHKlSsRFMS+HbZCo5QbOxYnncqTuBoiIiLraFW4mTVrFrKzswEA8+fPx08//YSOHTvio48+wrvvvmvWAqltRvWqa2FLOp0rcSVERETW0ao+N08++aTxeVRUFC5duoTTp0+jY8eO8PX1NVtx1HZ/6FkXbo5e1iK3pAoB7hqJKyIiIrKsVrXc3EgURTg5OeGuu+5isLFB/m4aRIZ6AgB2nealKSIicnytDjdr1qxBREQENBoNNBoNIiIi8Nlnn5mzNjKT2PrWm1/Y74aIiNqBVoWbefPmYdasWRg3bhw2bdqETZs2Ydy4cXj55Zcxb948c9dIbXRvfb+bPefzUVXDARaJiMixCaIoii3dyM/PDx999BEmTpxosvyrr77CSy+9hIKCArMVaG4lJSXw8PCAVquFu7u71OVYhSiKGLpoJ65qq7DumUHGfjhERET2oiXf361quampqcHAgQMbLY+KikJtbW1rdkkWJAiCsfXml1O8a4qIiBxbq8LNU089hU8++aTR8k8//RSTJk1qc1FkfqN6BgAAdp7OQysa64iIiOxGq2cFX7NmDX7++WfcfffdAIB9+/YhMz
MTkydPRnx8vHG9JUuWtL1KarOYrj5wUsqRra0brTgixEPqkoiIiCyiVeHm+PHjuOuuuwAAFy5cAAD4+vrC19cXx48fN67HuYxsh0Ypx7BwX+w4mYudp/MYboiIyGG1Ktzs2rXL3HWQFcT28seOk7lIOpWLv4wKl7ocIiIii2jzIH5kP/7Qo65T8ZHLWuSVVElcDRERkWU0O9y8+OKLuHz5crPWTUxMxBdffNHqosgy/N01iOxQdzlq1xkO6EdERI6p2Zel/Pz80KdPHwwdOhTjxo3DwIEDERwcDI1Gg2vXruHkyZPYs2cPNm7ciODgYHz66aeWrJtaaVSvABy5rMUvp/IwYVBHqcshIiIyuxYN4pebm4vPPvsMGzduxMmTJ03ec3NzQ2xsLJ5//nncf//9Zi/UXNrjIH43On5Fiwc/3gMnpRyH5t0HjVIudUlERER31JLv71aNUAwA165dQ2ZmJiorK+Hr64uuXbvaxd1R7T3ciKKIIYt2IpujFRMRkR1pyfd3q8e58fLygpeXV2s3J4kIgoB7e/rji32ZSDqdy3BDREQOp0V3S73//vuorKw0vt67dy+qq6uNr0tLSzF9+nTzVUcWEdurfrTiUxytmIiIHE+Lwk1CQgJKS0uNr8eMGYMrV64YX1dUVGDVqlUtLmL58uUICwuDRqNBdHQ09u/f36ztNm7cCEEQ8PDDD7f4mO1ZTFcfaJQyXNVW4WR2idTlEBERmVWLws3N/y/fHP+vPzExEfHx8Zg/fz7S0tIQGRmJuLg45OXd/lbljIwMvPLKKxg+fHiba2hvNEo5hnXzA1DXekNERORIJB/Eb8mSJXjhhRcwZcoU9O7dGytXroSzszPWrl17y230ej0mTZqEBQsWoEuXLlas1nHENswSfprhhoiIHIuk4Uan0yE1NRWxsbHGZTKZDLGxsUhJSbnldm+++Sb8/f3x3HPP3fEY1dXVKCkpMXkQcG99R+IjWcXIK+VoxURE5DhafLfUZ599BldXVwBAbW0t1q9fD19fXwAw6Y/THAUFBdDr9QgICDBZHhAQgNOnTze5zZ49e7BmzRocPny4WcdYuHAhFixY0KK62gN/dw36dfDA0cta7DrNAf2IiMhxtCjcdOzYEatXrza+DgwMxOeff95oHUspLS3FU089hdWrVxsD1Z0kJCQgPj7e+LqkpAShoaGWKtGujOoZgKOXtUjiaMVERORAWhRuMjIyzHpwX19fyOVy5ObmmizPzc1FYGBgo/UvXLiAjIwMjBs3zrjMYDAAABQKBc6cOYOuXbuabKNWq6FWq81at6MY1csf//zlLHafK0BVjZ6jFRMRkUOQtM+NSqVCVFQUkpKSjMsMBgOSkpIQExPTaP2ePXvi2LFjOHz4sPExfvx4/OEPf8Dhw4fZItNCfYLdEeiuQWWNHikXC6Uuh4iIyCxaFG5SUlKwdetWk2X/+te/0LlzZ/j7+2Pq1Kkmg/o1R3x8PFavXo0NGzbg1KlTmDZtGsrLyzFlyhQAwOTJk5GQkAAA0Gg0iIiIMHl4enrCzc0NERERUKlULTp2eycIAu6tv2sq6VTuHdYmIiKyDy0KN2+++SZOnDhhfH3s2DE899xziI2NxZw5c/DDDz9g4cKFLSpgwoQJWLx4MebNm4f+/fvj8OHD2LZtm7GTcWZmJrKzs1u0T2o+4y3hJ/NgMHC0YiIisn8tmjgzKCgIP/zwAwYOHAgAeO2115CcnIw9e/YAADZt2oT58+c3mjHclrT3iTNvVlWjx6C3f0FpdS02vRiDQWHeUpdERETUSEu+v1vUcnPt2jWT27aTk5MxZswY4+tBgwYhKyurheWSlDRKOe7rU/c73XrkqsTVEBERtV2Lwk1AQADS09MB1A3Al5aWhrvvvtv4fmlpKZRKpXkrJIsbFxkMAPjxWA70vDRFRER2rkXhZuzYsZgzZw52796NhIQEODs7m8ztdPTo0Ua3YpPtG9bNF57OShSUVWMf75oiIiI716
Jw89Zbb0GhUGDEiBFYvXo1Pv30U5M7lNauXYvRo0ebvUiyLKVchjERdeMK/XCUl6aIiMi+tahDcQOtVgtXV1fI5aaDvhUVFcHNzc2mL02xQ3HT9p4vwKTP9sHTWYkDr8VCKZd8TlUiIiKjlnx/t2iE4meffbZZ691uRm+yTXd38YGvqxoFZdXYc74Af+jhL3VJRERErdKicLN+/Xp06tQJAwYMQCsafMiGyWUCxvYNxL9SLmHrkWyGGyIislstCjfTpk3DV199hfT0dEyZMgVPPvkkvL05LoqjGBcZjH+lXMLPJ3JQVRPBuaaIiMgutahjxfLly5GdnY2//e1v+OGHHxAaGorHHnsM27dvZ0uOA4jq6IVAdw1Kq2uRfDZf6nKIiIhapcW9RtVqNSZOnIgdO3bg5MmT6NOnD6ZPn46wsDCUlZVZokayEplMwIP9ggAAW49yygsiIrJPbbolRiaTQRAEiKIIvV5vrppIQg/WD+j3y8lcVOhqJa6GiIio5Vocbqqrq/HVV1/hvvvuQ/fu3XHs2DEsW7YMmZmZcHV1tUSNZEWRHTwQ6u2Eyho9dp7Ok7ocIiKiFmtRuJk+fTqCgoKwaNEiPPjgg8jKysKmTZswduxYyGQcF8URCIKAB/vVtd78wLmmiIjIDrVoED+ZTIaOHTtiwIABEAThluv95z//MUtxlsBB/O7s5NUSjP1oN1QKGVJfj4WbxnYHZSQiovbBYoP4TZ48+bahhhxDryA3dPFzwcX8cuw4mYtH7+ogdUlERETN1uJB/MjxCYKAcf2C8WHSOWw9ms1wQ0REdoUdZahJ4yLrbgn/7Ww+iit0EldDRETUfAw31KRu/m7oGeiGWoOI7SdypC6HiIio2Rhu6JbGRTbcNcUB/YiIyH4w3NAtNYxW/PuFAuSXVktcDRERUfMw3NAtdfJxQb8OHjCIwLbjbL0hIiL7wHBDtzWuHy9NERGRfWG4odt6oP7S1IFLRcjWVkpcDRER0Z0x3NBtBXs6YWAnL4gi8CNnCiciIjvAcEN31HDX1FaGGyIisgMMN3RHY/oGQiYAh7OKcTG/TOpyiIiIbovhhu7I302DEd39AACJB7IkroaIiOj2GG6oWR4f3BEA8E3qZehqDRJXQ0REdGsMN9Qs9/b0h7+bGoXlOuw4mSt1OURERLfEcEPNopTL8OeBdbODbzyQKXE1REREt8ZwQ832+KC6S1O7zxUgs7BC4mqIiIiaxnBDzRbq7Yzh4b4AgMSDbL0hIiLbxHBDLTKxvmPx1wcvo0bPjsVERGR7GG6oRWJ7BcDXVYX80mrsPJ0ndTlERESNMNxQi6gUMvwxqq5j8Vf7eWmKiIhsD8MNtVhDx+Lks/m4UszJNImIyLYw3FCLdfZ1QUwXH4giRywmIiLbw3BDrTIxuq71ZtPBLNSyYzEREdkQhhtqlbg+AfByViJbW4Xks/lSl0NERGTEcEOtolbI8ce7GjoW89IUERHZDoYbarXHB4cCAHaezkWOtkriaoiIiOow3FCrdfN3w+AwbxjEur43REREtoDhhtqkofVm44EsGAyixNUQEREx3FAbje0bBHeNAleKK7H7fIHU5RARETHcUNtolHI82tCxeB9HLCYiIukx3FCbNVya+uVULvJK2bGYiIikxXBDbdYz0B0DOnqi1iDim9TLUpdDRETtHMMNmcXEwXUjFieyYzEREUmM4YbM4sF+QXBTK3CpsAK/neOIxUREJB2GGzILZ5UCfxpY17F4VfJFiashIqL2jOGGzOb54V2gkAlIuViIQ5nXpC6HiIjaKYYbMpsQTyc81D8EALAy+YLE1RARUXvFcENm9eKILgCAn0/m4nxemcTVEBFRe8RwQ2YVHuCG+3oHQBSBT39j6w0REVkfww2Z3bSRXQEAWw5dQba2UuJqiIiovWG4IbO7q6MXojt7o0YvYs3udKnLISKidobhhiyiofXmy/2ZKK7QSVwNERG1Jww3ZBEjuvuhV5A7KnR6/C
vlktTlEBFRO8JwQxYhCIKx9Wbd3nRU6GolroiIiNoLhhuymLERgejo7YxrFTX4+kCW1OUQEVE7wXBDFqOQyzD1nrpxb1bvTkeN3iBxRURE1B4w3JBF/SmqA3xd1bhSXIkfjlyVuhwiImoHbCLcLF++HGFhYdBoNIiOjsb+/ftvue7q1asxfPhweHl5wcvLC7Gxsbddn6SlUcrx7LAwAHVTMhgMorQFERGRw5M83CQmJiI+Ph7z589HWloaIiMjERcXh7y8vCbX//XXXzFx4kTs2rULKSkpCA0NxejRo3HlyhUrV07N9eTdneCmVuBsbhl2nm7690pERGQugiiKkv5f6ejoaAwaNAjLli0DABgMBoSGhuKll17CnDlz7ri9Xq+Hl5cXli1bhsmTJ99x/ZKSEnh4eECr1cLd3b3N9VPzLPrpNFYmX8BdHT2xedoQCIIgdUlERGRHWvL9LWnLjU6nQ2pqKmJjY43LZDIZYmNjkZKS0qx9VFRUoKamBt7e3k2+X11djZKSEpMHWd+zQ8OgUsiQllmMAxnXpC6HiIgcmKThpqCgAHq9HgEBASbLAwICkJOT06x9/P3vf0dwcLBJQLrRwoUL4eHhYXyEhoa2uW5qOX93Df4U1QEA8Mmv5yWuhoiIHJnkfW7aYtGiRdi4cSO2bNkCjUbT5DoJCQnQarXGR1YWx1uRytThXSATgF1n8nHyKlvQiIjIMiQNN76+vpDL5cjNzTVZnpubi8DAwNtuu3jxYixatAg///wz+vXrd8v11Go13N3dTR4kjTBfF4ztGwQAWPzzGYmrISIiRyVpuFGpVIiKikJSUpJxmcFgQFJSEmJiYm653fvvv4+33noL27Ztw8CBA61RKplJ/H3doZAJ2Hk6D7vP5UtdDhEROSDJL0vFx8dj9erV2LBhA06dOoVp06ahvLwcU6ZMAQBMnjwZCQkJxvXfe+89zJ07F2vXrkVYWBhycnKQk5ODsrIyqT4CtUAXP1c8FdMJAPD21lPQc9wbIiIyM8nDzYQJE7B48WLMmzcP/fv3x+HDh7Ft2zZjJ+PMzExkZ2cb1//kk0+g0+nwpz/9CUFBQcbH4sWLpfoI1EKzRoXDw0mJM7mlSOScU0REZGaSj3NjbRznxjas25uOBT+chK+rCrteGQk3jVLqkoiIyIbZzTg31H49eXcndPF1QUGZDst3XZC6HCIiciAMNyQJpVyGV8f2AgCs3ZOOrKIKiSsiIiJHwXBDkhnVyx9Du/lApzdg0bbTUpdDREQOguGGJCMIAl5/oDcEAfjxaDYOZhRJXRIRETkAhhuSVK8gd0wYWDclxltbT8LAW8OJiKiNGG5IcvGju8NFJceRy1p8d+SK1OUQEZGdY7ghyfm7aTD9D90AAO9vO4NKnV7iioiIyJ4x3JBNeG5YZ4R4OiFbW4XVuy9KXQ4REdkxhhuyCRqlHHPG9AQAfPLrBeSWVElcERER2SuGG7IZD/YLwl0dPVFZo8cH2zlrOBERtQ7DDdkMQRAw98HeAIDNaZdx/IpW4oqIiMgeMdyQTRnQ0QsP9Q+GKAKvbjmGGr1B6pKIiMjOMNyQzUkY0wvuGgWOXtbi453npS6HiIjsDMMN2ZxADw3efqQvAGD5rvNIy7wmcUVERGRPGG7IJo2PDMZD/YOhN4h4OfEwyqtrpS6JiIjsBMMN2aw3H4pAsIcGlwor8PaPJ6Uuh4iI7ATDDdksDyclFj8WCUEAvtqfhR0nc6UuiYiI7ADDDdm0IV198cLwLgCAOZuPIr+0WuKKiIjI1jHckM376+ju6BnohsJyHeZsPgpR5MzhRER0aww3ZPPUCjmWPt4fKrkMSafz8NX+LKlLIiIiG8ZwQ3ahZ6A7/nZ/DwDAW1tPIr2gXOKKiIjIVjHckN14dmhnDOnqg8oaPV5OPIxajl5MRERNYLghuyGTCVj850i4axQ4nFWMZbs4ejERETXGcEN2JdjTCW89HAEA+HjneRzi6MVERHQThhuyOw
/1D8H4yLrRi1/66hDySqukLomIiGwIww3ZpbceikAnH2dcvlaJ59Yf5PQMRERkxHBDdsnDWYkNUwbDx0WFY1e0mP5FGmrYwZiIiMBwQ3YszNcFa54ZBCelHMln8/Hqf45xgD8iImK4IfvWP9QTy54YAJkAbEq9jH/+ck7qkoiISGIMN2T3RvUKwDuP9AUAfJR0Dl/tz5S4IiIikhLDDTmEiYM74i/3dgMAvP7tcew8zRnEiYjaK4Ybchgv39cdf47qAL1BxIwvDuFIVrHUJRERkQQYbshhCIKAdx/ti3u6+6GyRo9n1x9ABuegIiJqdxhuyKEo5TKsmHQXIkLcUViuwzPr9qOwrFrqsoiIyIoYbsjhuKoVWPvMIHTwckJGYQWeXX8A18p1UpdFRERWwnBDDsnfTYMNzw6Gp7MSRy5r8egnv+NSIS9RERG1Bww35LC6+rni6/+LQYinE9ILyvHIit+Rxok2iYgcHsMNObTuAW7YMn0IIkLcUVSuw8RP/4dtx7OlLouIiCyI4YYcnr+7BolTYzCqpz+qaw2Y9kUaPtt9kVM1EBE5KIYbahdc1AqseioKT93dCaIIvP3jKbzx/QnoDQw4RESOhuGG2g2FXIY3H+qDV8f2BABsSLmE//s8FRW6WokrIyIic2K4oXZFEARMvacrlj9xF1QKGX45lYvHP/0f8kqrpC6NiIjMhOGG2qUH+gXhqxei4eWsxNHLWjyy/HfsTy+SuiwiIjIDhhtqt6I6eWPL9KEI83HGleJKPLYqBXO/PY7SqhqpSyMiojZguKF2LczXBd/NHIaJg0MBAJ//7xLi/vkbdp3Jk7gyIiJqLYYbavc8nJRY+Gg/fPl8NDp6O+OqtgpT1h3Ay4mHUcRpG4iI7A7DDVG9Id18sW32cDw/rDNkArDl0BXctyQZW49e5Zg4RER2hOGG6AbOKgVef7A3Nk8bgu4Brigs12Hml4cw9fNU5JbwjioiInvAcEPUhAEdvbD1peGYHRsOpVzAjpO5iF2SjA9/OccZxomIbJwgtrP29pKSEnh4eECr1cLd3V3qcsgOnMkpxd82H8WRrGIAgJNSjscHh+L54V0Q4ukkbXFERO1ES76/GW6ImkFvEPHjsWys/PUCTmaXAAAUMgHjI4PxfyO6okegm8QVEhE5Noab22C4obYQRRG/nSvAyl8vIOVioXH5vT39MW1kVwwK85awOiIix8VwcxsMN2QuR7KKsTL5AradyEHDv6KoTl54fFAoYnsFwMtFJW2BREQOhOHmNhhuyNwu5pdh9e6L2Jx6BTq9AQAglwm4u4s34voEYnTvQAR6aCSukojIvjHc3AbDDVlKXkkVvtqfhW0ncnCqvl9OgwEdPXF/n0DE9QlEmK+LRBUSEdkvhpvbYLgha7hUWI7tJ3Kw/UQuUi9dM3mvZ6Abhof7IjLUE5EdPNHBywmCIEhUKRGRfWC4uQ2GG7K23JIq/HwyFz+fyEHKhULUGkz/yXm7qNA3xKM+7HigXwdP+LmpJaqWiMg2MdzcBsMNSam4QoddZ/KQdqkYRy4X41R2CWr0jf8JBnto0DvYHR28nBHq7YyO3s4I9XZCqJczXNQKCSonIpIWw81tMNyQLamu1eN0dimOXC7GkSwtjl4uxvn8MtzuX6W3iwqhXk7o4O2MDp5O8HZRwctFBW/n+p/1z900CshkvNxFRI6B4eY2GG7I1pVV1+L4FS3O55Uhq6gCWdcqkFVUiaxrFSiuqGn2fuQyAV7OSng6q+CiVsBFJYezSgEXtbzRa2eVAmqFDGqlHCq5DGqlDOr6nyq5vP6nDEqFDEqZAIVcBoVcgFImg1wmQCkX2G+IiCyqJd/fbN8msjGuagXu7uKDu7v4NHqvpKqmLvAUVeLytQpcLa5CcYUORRU6FJXXPa6V61Cu00NvEFFQpkNBmXXmwpLLBChkAtQKGZxVCjir5HBWy+GsVNT9VMnhpKwLU65qBXxd1fB1U8PPVQ2/+p/uTgqGJC
JqM4YbIjvirlGiT7AH+gR73Ha9qho9iitqUFSuQ3FFXdip0NWivPqmn7paVFTrUa6rRXWtAdU1Buj0BlTX6qGrNaC61mD8WV2jR41BRK3eAEMT7b16gwi9QUR1rQElVbWt+nwquQy+rqq6sFP/8L0h/Ny4jH2PiOhWbOK/DsuXL8cHH3yAnJwcREZG4uOPP8bgwYNvuf6mTZswd+5cZGRkIDw8HO+99x7Gjh1rxYqJbJtGKUegh9xigwcaDCJqDAbU6kXU6uue6w0iavR1QahSp0d5dS0qavTG55U1epRX61Gpq0VJVS0KyqqRX1qN/LJqFJRWo6SqFjq9AVe1VbiqrbpjDc4qOfzc1PBxUcHHteGnCt4uavi61vU98nFRw8dVBU9nJdQKuUXOBRHZHsnDTWJiIuLj47Fy5UpER0dj6dKliIuLw5kzZ+Dv799o/d9//x0TJ07EwoUL8eCDD+LLL7/Eww8/jLS0NEREREjwCYjaH5lMgFomhzkbT6pq9Cgoq0ZBma4u9JRWXw9ADSGorBp5JdWorNGjQqfHpcIKXCqsaNb+nVVyeDop4e6khKezEp5OKnjUP/dwVsJdo4SrWgEXtQKu9Q8XtRyumrrnTkq5w14yMxhE6PR1rXa62uuPhpY7nV5vfF6jF+t/NrxnMHldozdAp69r4autD7w1ekN9CK5bXqMXoTcYoBdR99MgwmAAauuXGepbAQ2iCFEERDT8rJvfTQSA+tcNBACCAAiCcP05BOMymVB36VQm1F0+lckEyAWhbplMgFwA5DIZFDIBCnndOnKZDEq5YLzkqpDf+L4MKoXMuFwpF6Csf18pl9U/rj9X1D9X3fRcqbi+TsN7Chn7sLWV5B2Ko6OjMWjQICxbtgwAYDAYEBoaipdeeglz5sxptP6ECRNQXl6OrVu3Gpfdfffd6N+/P1auXHnH47FDMZH9K6+uNQaewjIdCsvrfhaV61BQVo2icp1xeVG5rsnLaC0lEwBnlQIapQxqRV0na7VCXv/6+nOVQg6lrP4Lsf7LruHLUd7wpSk078tLFEXoRRF6A2AQr3/hGwzXl+vrW9B09QGi1lAXHmoNBtTUXm9huzGI3BxIbh57iaSnaghHClldkDI+rw9GCtMgpTAGqbrgZXx+Q8CSywSTGwIUsoZ164Lc9VBXt7zR361xOepDogwyWV1obAiKDQFSo5Sbfbwuu+lQrNPpkJqaioSEBOMymUyG2NhYpKSkNLlNSkoK4uPjTZbFxcXh22+/bXL96upqVFdXG1+XlJQ0uR4R2Q+X+haW5kxlYTCIKK2qRXGlDsUVNdBW1qC4sgbairrXxZV1y0oqa1Cuq0VZVS3Kquv6JZVX16JMVwtRBAxi3Z1sZdV3PKTdU9V/eaoUsjs+V8qFukAnr+tMbvJlK2vqS7fuy9X4xVn/ZdjwxWjamlLf8gIAN7bEoL6Fpv55XYtOXRg01Lf0QLy+3CCKxkdDINQbAH1DUKwPi3WXW0Xo61udag3XW6DqgqNpi1TDJdka/fUWqdr6MNnQYlVz0/Pa+patuu3rW8Lq56S7UV1LGlD3P/ZnQEdPbJk+VLLjSxpuCgoKoNfrERAQYLI8ICAAp0+fbnKbnJycJtfPyclpcv2FCxdiwYIF5imYiOyOTCbAo/7SU6fGN6DdkcEg1vcXqkW5To/qWj2qauo6WFfVNv1TX//FaKj/qTf+NBhf39xmLsJ0gSjC+KVf9xOml1JuCATGSyLy67fq33yZpCGUNFz+aBxQri/jJRHrEkXx+iW82rqw03Q4Mn2tq60PScaAdcNlQMP1IGUa0K5fFmy4VKi/IaA1XCqs1d/4d3vD36++odXw+sNw02u9KEKtkEl6TiXvc2NpCQkJJi09JSUlCA0NlbAiIrInMplgbCkisgRBuB5QoZK6Gscg6b9WX19fyOVy5ObmmizPzc1FYGBgk9sEBga2aH21Wg21mvP0EBERtReSthupVCpERUUhKSnJuMxgMCApKQ
kxMTFNbhMTE2OyPgDs2LHjlusTERFR+yJ5O2t8fDyefvppDBw4EIMHD8bSpUtRXl6OKVOmAAAmT56MkJAQLFy4EAAwa9YsjBgxAv/4xz/wwAMPYOPGjTh48CA+/fRTKT8GERER2QjJw82ECROQn5+PefPmIScnB/3798e2bduMnYYzMzMhk11vYBoyZAi+/PJLvP7663j11VcRHh6Ob7/9lmPcEBEREQAbGOfG2jjODRERkf1pyfe3tPdqEREREZkZww0RERE5FIYbIiIicigMN0RERORQGG6IiIjIoTDcEBERkUNhuCEiIiKHwnBDREREDoXhhoiIiByK5NMvWFvDgMwlJSUSV0JERETN1fC93ZyJFdpduCktLQUAhIaGSlwJERERtVRpaSk8PDxuu067m1vKYDDg6tWrcHNzgyAIZt13SUkJQkNDkZWVxXmrrIDn27p4vq2L59u6eL6tqzXnWxRFlJaWIjg42GRC7aa0u5YbmUyGDh06WPQY7u7u/MdhRTzf1sXzbV0839bF821dLT3fd2qxacAOxURERORQGG6IiIjIoTDcmJFarcb8+fOhVqulLqVd4Pm2Lp5v6+L5ti6eb+uy9Pludx2KiYiIyLGx5YaIiIgcCsMNERERORSGGyIiInIoDDdERETkUBhuzGT58uUICwuDRqNBdHQ09u/fL3VJDuO3337DuHHjEBwcDEEQ8O2335q8L4oi5s2bh6CgIDg5OSE2Nhbnzp2Tplg7t3DhQgwaNAhubm7w9/fHww8/jDNnzpisU1VVhRkzZsDHxweurq744x//iNzcXIkqtm+ffPIJ+vXrZxzILCYmBj/99JPxfZ5ry1q0aBEEQcDs2bONy3jOzeeNN96AIAgmj549exrft+S5Zrgxg8TERMTHx2P+/PlIS0tDZGQk4uLikJeXJ3VpDqG8vByRkZFYvnx5k++///77+Oijj7By5Urs27cPLi4uiIuLQ1VVlZUrtX/JycmYMWMG/ve//2HHjh2oqanB6NGjUV5eblzn5Zdfxg8//IBNmzYhOTkZV69exaOPPiph1farQ4cOWLRoEVJTU3Hw4EHce++9eOihh3DixAkAPNeWdODAAaxatQr9+vUzWc5zbl59+vRBdna28bFnzx7jexY91yK12eDBg8UZM2YYX+v1ejE4OFhcuHChhFU5JgDili1bjK8NBoMYGBgofvDBB8ZlxcXFolqtFr/66isJKnQseXl5IgAxOTlZFMW6c6tUKsVNmzYZ1zl16pQIQExJSZGqTIfi5eUlfvbZZzzXFlRaWiqGh4eLO3bsEEeMGCHOmjVLFEX+fZvb/PnzxcjIyCbfs/S5ZstNG+l0OqSmpiI2Nta4TCaTITY2FikpKRJW1j6kp6cjJyfH5Px7eHggOjqa598MtFotAMDb2xsAkJqaipqaGpPz3bNnT3Ts2JHnu430ej02btyI8vJyxMTE8Fxb0IwZM/DAAw+YnFuAf9+WcO7cOQQHB6NLly6YNGkSMjMzAVj+XLe7iTPNraCgAHq9HgEBASbLAwICcPr0aYmqaj9ycnIAoMnz3/AetY7BYMDs2bMxdOhQREREAKg73yqVCp6enibr8ny33rFjxxATE4Oqqiq4urpiy5Yt6N27Nw4fPsxzbQEbN25EWloaDhw40Og9/n2bV3R0NNavX48ePXogOzsbCxYswPDhw3H8+HGLn2uGGyJq0owZM3D8+HGTa+Rkfj169MDhw4eh1WrxzTff4Omnn0ZycrLUZTmkrKwszJo1Czt27IBGo5G6HIc3ZswY4/N+/fohOjoanTp1wtdffw0nJyeLHpuXpdrI19cXcrm8UQ/v3NxcBAYGSlRV+9Fwjnn+zWvmzJnYunUrdu3ahQ4dOhiXBwYGQqfTobi42GR9nu/WU6lU6NatG6KiorBw4UJERkbiww8/5Lm2gNTUVOTl5eGuu+6CQqGAQqFAcnIyPvroIygUCgQEBPCcW5Cnpye6d++O8+fPW/zvm+
GmjVQqFaKiopCUlGRcZjAYkJSUhJiYGAkrax86d+6MwMBAk/NfUlKCffv28fy3giiKmDlzJrZs2YKdO3eic+fOJu9HRUVBqVSanO8zZ84gMzOT59tMDAYDqqurea4tYNSoUTh27BgOHz5sfAwcOBCTJk0yPuc5t5yysjJcuHABQUFBlv/7bnOXZBI3btwoqtVqcf369eLJkyfFqVOnip6enmJOTo7UpTmE0tJS8dChQ+KhQ4dEAOKSJUvEQ4cOiZcuXRJFURQXLVokenp6it9995149OhR8aGHHhI7d+4sVlZWSly5/Zk2bZro4eEh/vrrr2J2drbxUVFRYVznxRdfFDt27Cju3LlTPHjwoBgTEyPGxMRIWLX9mjNnjpicnCymp6eLR48eFefMmSMKgiD+/PPPoijyXFvDjXdLiSLPuTn99a9/FX/99VcxPT1d3Lt3rxgbGyv6+vqKeXl5oiha9lwz3JjJxx9/LHbs2FFUqVTi4MGDxf/9739Sl+Qwdu3aJQJo9Hj66adFUay7HXzu3LliQECAqFarxVGjRolnzpyRtmg71dR5BiCuW7fOuE5lZaU4ffp00cvLS3R2dhYfeeQRMTs7W7qi7dizzz4rdurUSVSpVKKfn584atQoY7ARRZ5ra7g53PCcm8+ECRPEoKAgUaVSiSEhIeKECRPE8+fPG9+35LkWRFEU297+Q0RERGQb2OeGiIiIHArDDRERETkUhhsiIiJyKAw3RERE5FAYboiIiMihMNwQERGRQ2G4ISIiIofCcENE7U5YWBiWLl0qdRlEZCEMN0RkUc888wwefvhhAMDIkSMxe/Zsqx17/fr18PT0bLT8wIEDmDp1qtXqICLrUkhdABFRS+l0OqhUqlZv7+fnZ8ZqiMjWsOWGiKzimWeeQXJyMj788EMIggBBEJCRkQEAOH78OMaMGQNXV1cEBATgqaeeQkFBgXHbkSNHYubMmZg9ezZ8fX0RFxcHAFiyZAn69u0LFxcXhIaGYvr06SgrKwMA/Prrr5gyZQq0Wq3xeG+88QaAxpelMjMz8dBDD8HV1RXu7u547LHHkJuba3z/jTfeQP/+/fH5558jLCwMHh4eePzxx1FaWmrZk0ZErcJwQ0RW8eGHHyImJgYvvPACsrOzkZ2djdDQUBQXF+Pee+/FgAEDcPDgQWzbtg25ubl47LHHTLbfsGEDVCoV9u7di5UrVwIAZDIZPvroI5w4cQIbNmzAzp078be//Q0AMGTIECxduhTu7u7G473yyiuN6jIYDHjooYdQVFSE5ORk7NixAxcvXsSECRNM1rtw4QK+/fZbbN26FVu3bkVycjIWLVpkobNFRG3By1JEZBUeHh5QqVRwdnZGYGCgcfmyZcswYMAAvPvuu8Zla9euRWhoKM6ePYvu3bsDAMLDw/H++++b7PPG/jthYWF4++238eKLL2LFihVQqVTw8PCAIAgmx7tZUlISjh07hvT0dISGhgIA/vWvf6FPnz44cOAABg0aBKAuBK1fvx5ubm4AgKeeegpJSUl455132nZiiMjs2HJDRJI6cuQIdu3aBVdXV+OjZ8+eAOpaSxpERUU12vaXX37BqFGjEBISAjc3Nzz11FMoLCxERUVFs49/6tQphIaGGoMNAPTu3Ruenp44deqUcVlYWJgx2ABAUFAQ8vLyWvRZicg62HJDRJIqKyvDuHHj8N577zV6LygoyPjcxcXF5L2MjAw8+OCDmDZtGt555x14e3tjz549eO6556DT6eDs7GzWOpVKpclrQRBgMBjMegwiMg+GGyKyGpVKBb1eb7LsrrvuwubNmxEWFgaFovn/SUpNTYXBYMA//vEPyGR1jdBff/31HY93s169eiErKwtZWVnG1puTJ0+iuLgYvXv3bnY9RGQ7eFmKiKwmLCwM+/btQ0ZGBgoKCmAwGDBjxgwUFRVh4sSJOHDgAC5cuIDt27djypQptw0m3bp1Q01NDT7++GNcvHgRn3/+ubGj8Y3HKy
srQ1JSEgoKCpq8XBUbG4u+ffti0qRJSEtLw/79+zF58mSMGDECAwcONPs5ICLLY7ghIqt55ZVXIJfL0bt3b/j5+SEzMxPBwcHYu3cv9Ho9Ro8ejb59+2L27Nnw9PQ0tsg0JTIyEkuWLMF7772HiIgIfPHFF1i4cKHJOkOGDMGLL76ICRMmwM/Pr1GHZKDu8tJ3330HLy8v3HPPPYiNjUWXLl2QmJho9s9PRNYhiKIoSl0EERERkbmw5YaIiIgcCsMNERERORSGGyIiInIoDDdERETkUBhuiIiIyKEw3BAREZFDYbghIiIih8JwQ0RERA6F4YaIiIgcCsMNERERORSGGyIiInIoDDdERETkUP4/p5KqBiYdxn4AAAAASUVORK5CYII=\n",

"text/plain": [

""

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"import matplotlib.pyplot as plt\n",

"\n",

"# Plot the parameter error against the iteration index\n",

"plt.plot(errors)\n",

"plt.xlabel('Iteration')\n",

"plt.ylabel('MSE(param)')\n",

"plt.title('Parameter error plot')\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## See also\n",

"\n",

"- [Detailed look at Optimizer](https://mitsuba.readthedocs.io/en/latest/src/how_to_guides/use_optimizers.html)\n",

"- API reference:\n",

"  - [mitsuba.ad.Optimizer](https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.Optimizer)\n",

"  - [prb plugin](https://mitsuba.readthedocs.io/en/latest/src/generated/plugins_integrators.html#path-replay-backpropagation-prb)\n",

"  - [mitsuba.ad.SGD](https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.SGD)\n",

"  - [mitsuba.ad.Adam](https://mitsuba.readthedocs.io/en/latest/src/api_reference.html#mitsuba.ad.Adam)\n",

"  - [drjit.backward](https://drjit.readthedocs.io/en/latest/reference.html#drjit.backward)"

]

}

],

"metadata": {

"file_extension": ".py",

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.10.4"

},

"metadata": {

"interpreter": {

"hash": "31f2aee4e71d21fbe5cf8b01ff0e069b9275f58929596ceb00d14d90e3e16cd6"

}

},

"mimetype": "text/x-python",

"name": "python",

"npconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": 3

},

"nbformat": 4,

"nbformat_minor": 4

}