{

"cells": [

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"slideshow": {

"slide_type": "skip"

}

},

"outputs": [],

"source": [

"from IPython.display import HTML\n",

"HTML(\"\"\"\n",

"\n",

"\"\"\")\n",

"\n",

"from matplotlib import pyplot as plt\n",

"%matplotlib inline\n",

"import numpy as np"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

""

]

},

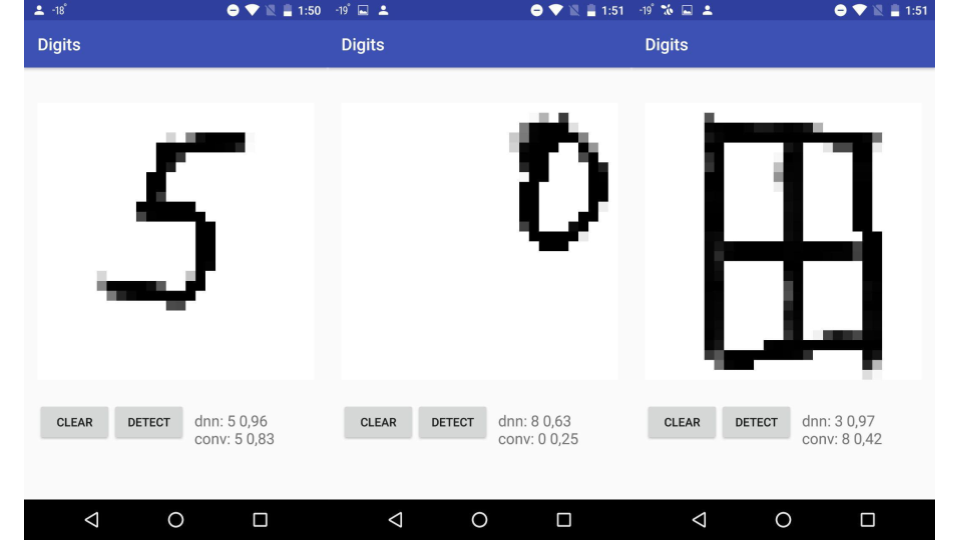

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"\n",

"\n",

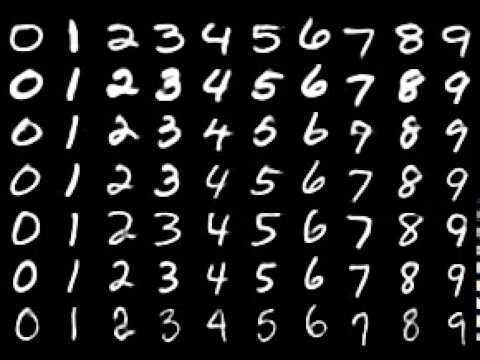

"Классическая задача распознавания рукописных цифр на основе набора данных [MNIST](http://yann.lecun.com/exdb/mnist/)"

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 12,

"metadata": {},

"output_type": "execute_result"

},

{

"data": {

"image/png": "iVBORw0KGgoAAAANSUhEUgAAAP8AAAD8CAYAAAC4nHJkAAAABHNCSVQICAgIfAhkiAAAAAlwSFlz\nAAALEgAACxIB0t1+/AAAADl0RVh0U29mdHdhcmUAbWF0cGxvdGxpYiB2ZXJzaW9uIDIuMS4wLCBo\ndHRwOi8vbWF0cGxvdGxpYi5vcmcvpW3flQAADgdJREFUeJzt3X9sXfV5x/HPs9D8QRoIXjUTpWFp\nIhQUIuZOJkwoGkXM5YeCggGhWkLKRBT3j1ii0hQNZX8MNAVFg2RqBKrsqqHJ1KWZBCghqpp0CZBO\nTBEmhF9mKQylqi2TFAWTH/zIHD/74x53Lvh+r3Pvufdc+3m/JMv3nuecex4d5ZPz8/pr7i4A8fxJ\n0Q0AKAbhB4Ii/EBQhB8IivADQRF+ICjCDwRF+IGgCD8Q1GWNXJmZ8TghUGfublOZr6Y9v5ndYWbH\nzex9M3ukls8C0FhW7bP9ZjZL0m8kdUgalPSqpC53H0gsw54fqLNG7PlXSHrf3T9w9wuSfi5pdQ2f\nB6CBagn/Akm/m/B+MJv2R8ys28z6zay/hnUByFndL/i5e5+kPonDfqCZ1LLnH5K0cML7b2bTAEwD\ntYT/VUnXmtm3zGy2pO9J2ptPWwDqrerDfncfNbMeSfslzZK03d3fya0zAHVV9a2+qlbGOT9Qdw15\nyAfA9EX4gaAIPxAU4QeCIvxAUIQfCIrwA0ERfiAowg8ERfiBoAg/EBThB4Ii/EBQhB8IivADQRF+\nICjCDwRF+IGgCD8QFOEHgiL8QFCEHwiK8ANBEX4gKMIPBEX4gaAIPxAU4QeCIvxAUFUP0S1JZnZC\n0llJFyWNunt7Hk0hP7NmzUrWr7zyyrquv6enp2zt8ssvTy67dOnSZH39+vXJ+pNPPlm21tXVlVz2\n888/T9Y3b96crD/22GPJejOoKfyZW939oxw+B0ADcdgPBFVr+F3SATN7zcy682gIQGPUeti/0t2H\nzOzPJP3KzP7b3Q9PnCH7T4H/GIAmU9Oe392Hst+nJD0vacUk8/S5ezsXA4HmUnX4zWyOmc0dfy3p\nu5LezqsxAPVVy2F/q6TnzWz8c/7N3X+ZS1cA6q7q8Lv7B5L+IsdeZqxrrrkmWZ89e3ayfvPNNyfr\nK1euLFubN29ectn77rsvWS/S4OBgsr5t27ZkvbOzs2zt7NmzyWXfeOONZP3ll19O1qcDbvUBQRF+\nICjCDwRF+IGgCD8QFOEHgjJ3b9zKzBq3sgZqa2tL1g8dOpSs1/trtc1qbGwsWX/ooYeS9XPnzlW9\n7uHh4WT9448/TtaPHz9e9brrzd1tKvOx5weCIvxAUIQfCIrwA0ERfiAowg8ERfiBoLjPn4OWlpZk\n/ciRI8n64sWL82wnV5V6HxkZSdZvvfXWsrULFy4kl436/EOtuM8PIInwA0ERfiAowg8ERfiBoAg/\nEBThB4LKY5Te8E6fPp2sb9iwIVlftWpVsv76668n65X+hHXKsWPHkvWOjo5k/fz588n69ddfX7b2\n8MMPJ5dFfbHnB4Ii/EBQhB8IivADQRF+ICjCDwRF+IGgKn6f38y2S1ol6ZS7L8+mtUjaLWmRpBOS\nHnD39B8618z9Pn+trrjiimS90nDSvb29ZWtr165NLvvggw8m67t27UrW0Xzy/D7/TyXd8aVpj0g6\n6O7XSjqYvQcwjVQMv7sflvTlR9hWS9qRvd4h6Z6c+wJQZ9We87e6+/h4Rx9Kas2pHwANUvOz/e7u\nqXN5M+uW1F3regDkq9o9/0kzmy9J2e9T5WZ09z53b3f39irXBaAOqg3/XklrstdrJO3Jpx0AjVIx\n/Ga2S9J/SVpqZoNmtlbSZkkdZvaepL/J3gOYRiqe87t7V5nSbTn3EtaZM2dqWv6TTz6petl169Yl\n67t3707Wx8bGql43isUTfkBQhB8IivADQRF+
ICjCDwRF+IGgGKJ7BpgzZ07Z2gsvvJBc9pZbbknW\n77zzzmT9wIEDyToajyG6ASQRfiAowg8ERfiBoAg/EBThB4Ii/EBQ3Oef4ZYsWZKsHz16NFkfGRlJ\n1l988cVkvb+/v2zt6aefTi7byH+bMwn3+QEkEX4gKMIPBEX4gaAIPxAU4QeCIvxAUNznD66zszNZ\nf+aZZ5L1uXPnVr3ujRs3Jus7d+5M1oeHh5P1qLjPDyCJ8ANBEX4gKMIPBEX4gaAIPxAU4QeCqnif\n38y2S1ol6ZS7L8+mPSppnaTfZ7NtdPdfVFwZ9/mnneXLlyfrW7duTdZvu636kdx7e3uT9U2bNiXr\nQ0NDVa97OsvzPv9PJd0xyfR/cfe27Kdi8AE0l4rhd/fDkk43oBcADVTLOX+Pmb1pZtvN7KrcOgLQ\nENWG/0eSlkhqkzQsaUu5Gc2s28z6zaz8H3MD0HBVhd/dT7r7RXcfk/RjSSsS8/a5e7u7t1fbJID8\nVRV+M5s/4W2npLfzaQdAo1xWaQYz2yXpO5K+YWaDkv5R0nfMrE2SSzoh6ft17BFAHfB9ftRk3rx5\nyfrdd99dtlbpbwWYpW9XHzp0KFnv6OhI1mcqvs8PIInwA0ERfiAowg8ERfiBoAg/EBS3+lCYL774\nIlm/7LL0Yyijo6PJ+u2331629tJLLyWXnc641QcgifADQRF+ICjCDwRF+IGgCD8QFOEHgqr4fX7E\ndsMNNyTr999/f7J+4403lq1Vuo9fycDAQLJ++PDhmj5/pmPPDwRF+IGgCD8QFOEHgiL8QFCEHwiK\n8ANBcZ9/hlu6dGmy3tPTk6zfe++9yfrVV199yT1N1cWLF5P14eHhZH1sbCzPdmYc9vxAUIQfCIrw\nA0ERfiAowg8ERfiBoAg/EFTF+/xmtlDSTkmtklxSn7v/0MxaJO2WtEjSCUkPuPvH9Ws1rkr30ru6\nusrWKt3HX7RoUTUt5aK/vz9Z37RpU7K+d+/ePNsJZyp7/lFJf+fuyyT9laT1ZrZM0iOSDrr7tZIO\nZu8BTBMVw+/uw+5+NHt9VtK7khZIWi1pRzbbDkn31KtJAPm7pHN+M1sk6duSjkhqdffx5ys/VOm0\nAMA0MeVn+83s65KelfQDdz9j9v/Dgbm7lxuHz8y6JXXX2iiAfE1pz29mX1Mp+D9z9+eyySfNbH5W\nny/p1GTLunufu7e7e3seDQPIR8XwW2kX/xNJ77r71gmlvZLWZK/XSNqTf3sA6qXiEN1mtlLSryW9\nJWn8O5IbVTrv/3dJ10j6rUq3+k5X+KyQQ3S3tqYvhyxbtixZf+qpp5L166677pJ7ysuRI0eS9See\neKJsbc+e9P6Cr+RWZ6pDdFc853f3/5RU7sNuu5SmADQPnvADgiL8QFCEHwiK8ANBEX4gKMIPBMWf\n7p6ilpaWsrXe3t7ksm1tbcn64sWLq+opD6+88kqyvmXLlmR9//79yfpnn312yT2hMdjzA0ERfiAo\nwg8ERfiBoAg/EBThB4Ii/EBQYe7z33TTTcn6hg0bkvUVK1aUrS1YsKCqnvLy6aeflq1t27Ytuezj\njz+erJ8/f76qntD82PMDQRF+ICjCDwRF+IGgCD8QFOEHgiL8QFBh7vN3dnbWVK/FwMBAsr5v375k\nfXR0NFlPfed+ZGQkuSziYs8PBEX4gaAIPxAU4QeCIvxAUIQfCIrwA0GZu6dnMFsoaaekVkkuqc/d\nf2hmj0paJ+n32awb3f0XFT4rvTIANXN3m8p8Uwn/fEnz3f2omc2V9JqkeyQ9IOmcuz851aYIP1B/\nUw1/xSf83H1Y0nD2+qyZvSup2D9dA6Bml3TOb2aLJH1b0pFsUo+ZvWlm283sqjLLdJtZv5n119Qp\ngFxVPOz/w4xmX5f0sqRN7v6cmbVK+kil6wD/pNKpwUMVPoPDfqDOcjvnlyQz+5qkfZL2u/vWSeqL\nJO1z9+UV
PofwA3U21fBXPOw3M5P0E0nvTgx+diFwXKekty+1SQDFmcrV/pWSfi3pLUlj2eSNkrok\ntal02H9C0vezi4Opz2LPD9RZrof9eSH8QP3ldtgPYGYi/EBQhB8IivADQRF+ICjCDwRF+IGgCD8Q\nFOEHgiL8QFCEHwiK8ANBEX4gKMIPBNXoIbo/kvTbCe+/kU1rRs3aW7P2JdFbtfLs7c+nOmNDv8//\nlZWb9bt7e2ENJDRrb83al0Rv1SqqNw77gaAIPxBU0eHvK3j9Kc3aW7P2JdFbtQrprdBzfgDFKXrP\nD6AghYTfzO4ws+Nm9r6ZPVJED+WY2Qkze8vMjhU9xFg2DNopM3t7wrQWM/uVmb2X/Z50mLSCenvU\nzIaybXfMzO4qqLeFZvaimQ2Y2Ttm9nA2vdBtl+irkO3W8MN+M5sl6TeSOiQNSnpVUpe7DzS0kTLM\n7ISkdncv/J6wmf21pHOSdo6PhmRm/yzptLtvzv7jvMrd/75JentUlzhyc516Kzey9N+qwG2X54jX\neShiz79C0vvu/oG7X5D0c0mrC+ij6bn7YUmnvzR5taQd2esdKv3jabgyvTUFdx9296PZ67OSxkeW\nLnTbJfoqRBHhXyDpdxPeD6q5hvx2SQfM7DUz6y66mUm0ThgZ6UNJrUU2M4mKIzc30pdGlm6abVfN\niNd544LfV61097+UdKek9dnhbVPy0jlbM92u+ZGkJSoN4zYsaUuRzWQjSz8r6QfufmZirchtN0lf\nhWy3IsI/JGnhhPffzKY1BXcfyn6fkvS8SqcpzeTk+CCp2e9TBffzB+5+0t0vuvuYpB+rwG2XjSz9\nrKSfuftz2eTCt91kfRW13YoI/6uSrjWzb5nZbEnfk7S3gD6+wszmZBdiZGZzJH1XzTf68F5Ja7LX\nayTtKbCXP9IsIzeXG1laBW+7phvx2t0b/iPpLpWu+P+PpH8ooocyfS2W9Eb2807RvUnapdJh4P+q\ndG1kraQ/lXRQ0nuS/kNSSxP19q8qjeb8pkpBm19QbytVOqR/U9Kx7Oeuorddoq9CthtP+AFBccEP\nCIrwA0ERfiAowg8ERfiBoAg/EBThB4Ii/EBQ/weCC5r/92q6mAAAAABJRU5ErkJggg==\n",

"text/plain": [

""

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"from model import data\n",

"x_train, y_train, x_test, y_test = data.load_data()\n",

"plt.imshow(x_train[0].reshape((28,28)), cmap='gray')"

]

},

{

"cell_type": "code",

"execution_count": 53,

"metadata": {

"slideshow": {

"slide_type": "subslide"

}

},

"outputs": [],

"source": [

"import numpy as np\n",

"from keras.datasets import mnist\n",

"from keras.utils import to_categorical\n",

"\n",

"\n",

"def load_data(flatten=True):\n",

"    \"\"\"\n",

"    Load the MNIST dataset (it already comes split into train and test sets),\n",

"    reshape the images, scale pixel values to [0, 1] and one-hot encode the labels.\n",

"    :param flatten: if True, return images as (N, 784) vectors for the dense models;\n",

"        otherwise as (N, 28, 28, 1) tensors for the convolutional model\n",

"    :return: x_train, y_train, x_test, y_test\n",

"    \"\"\"\n",

"    if flatten:\n",

"        reshape = _flatten\n",

"    else:\n",

"        reshape = _square\n",

"\n",

"    (x_train, y_train), (x_test, y_test) = mnist.load_data()\n",

"    x_train = reshape(x_train)\n",

"    x_test = reshape(x_test)\n",

"    x_train = x_train.astype('float32')\n",

"    x_test = x_test.astype('float32')\n",

"    x_train /= 255\n",

"    x_test /= 255\n",

"    y_train = to_categorical(y_train, 10)\n",

"    y_test = to_categorical(y_test, 10)\n",

"    return x_train, y_train, x_test, y_test\n",

"\n",

"\n",

"def _flatten(x: np.ndarray) -> np.ndarray:\n",

"    return x.reshape(x.shape[0], 28 * 28)\n",

"\n",

"\n",

"def _square(x: np.ndarray) -> np.ndarray:\n",

"    return x.reshape(x.shape[0], 28, 28, 1)"

]

},
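The preprocessing in `load_data` can be reproduced with plain NumPy, which makes the two input layouts easy to inspect without downloading MNIST. This is a minimal sketch, not the author's code: `preprocess` is a hypothetical helper, the fake all-white images stand in for real samples, and `np.eye(...)[labels]` is used as a stand-in for `keras.utils.to_categorical`.

```python
import numpy as np


def preprocess(images: np.ndarray, labels: np.ndarray, flatten: bool = True, num_classes: int = 10):
    """Hypothetical NumPy-only version of the steps performed by load_data."""
    if flatten:
        x = images.reshape(images.shape[0], 28 * 28)      # (N, 784) for the dense models
    else:
        x = images.reshape(images.shape[0], 28, 28, 1)    # (N, 28, 28, 1) for the conv model
    x = x.astype('float32') / 255                         # scale 0..255 to 0.0..1.0
    y = np.eye(num_classes, dtype='float32')[labels]      # one-hot rows, like to_categorical
    return x, y


# Two fake 28x28 "images" standing in for MNIST samples
fake_images = np.full((2, 28, 28), 255, dtype=np.uint8)
fake_labels = np.array([3, 7])

x_flat, y = preprocess(fake_images, fake_labels)
x_square, _ = preprocess(fake_images, fake_labels, flatten=False)
print(x_flat.shape, x_square.shape, y[0])
```

Only the tensor layout differs between the two modes; the pixel values and labels are identical.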

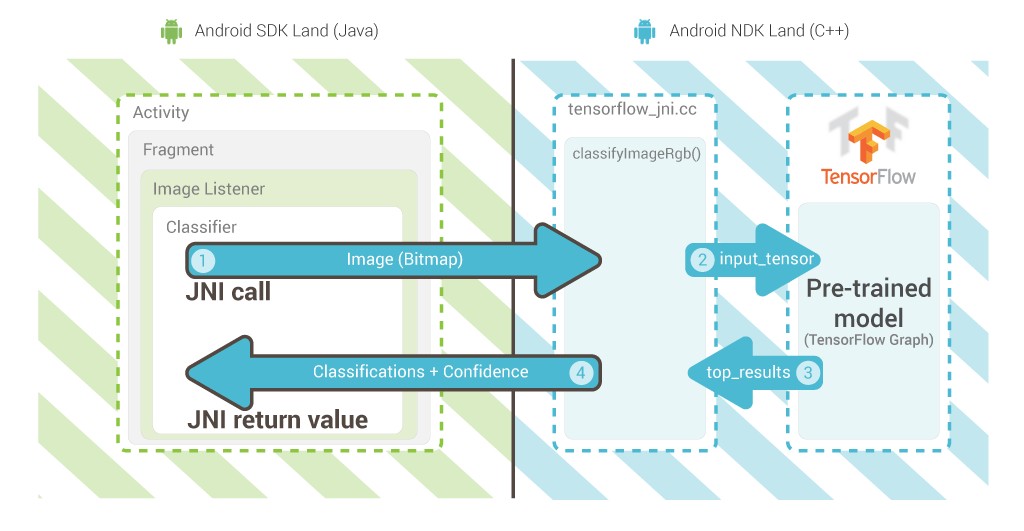

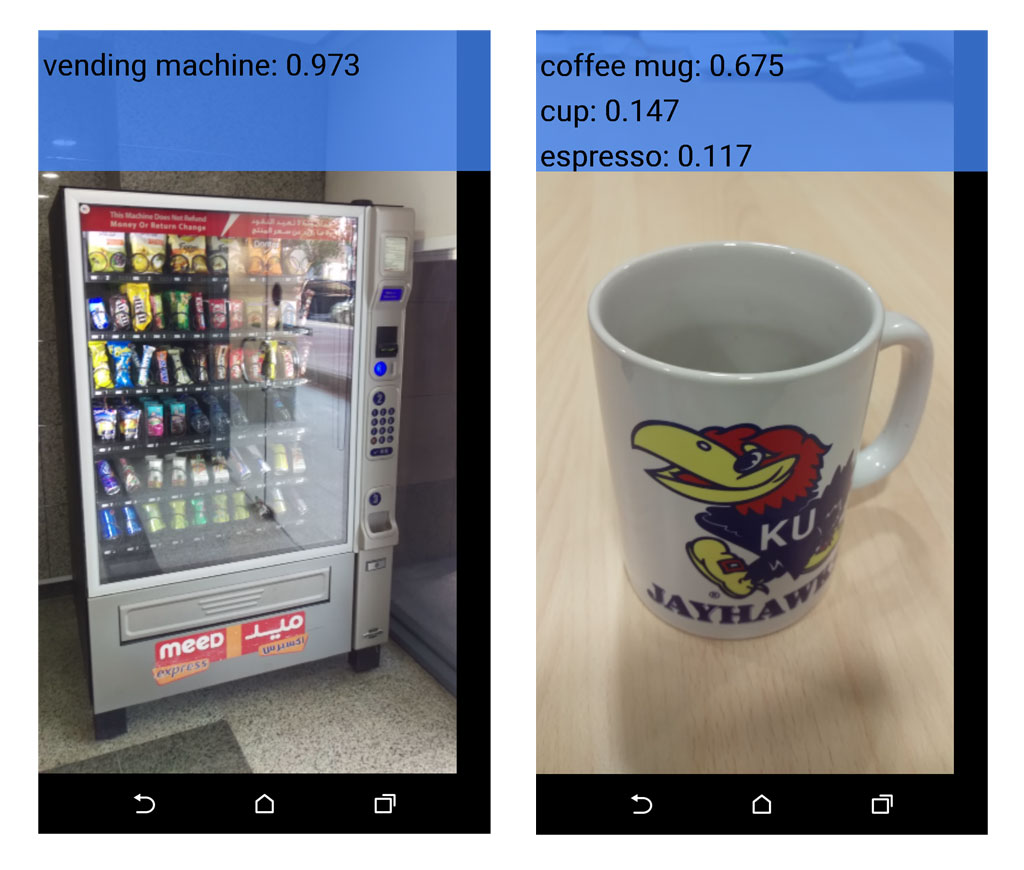

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# What we use\n",

"\n",

"- [TensorFlow backend](https://www.tensorflow.org/)\n",

"- [Keras](https://keras.io/)\n",

"- Android SDK > 23\n",

"- [org.tensorflow:tensorflow-android:1.3.0](https://www.tensorflow.org/mobile/android_build)"

]

},

{

"cell_type": "code",

"execution_count": 57,

"metadata": {

"slideshow": {

"slide_type": "subslide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"numpy\r\n",

"tensorflow\r\n",

"keras\r\n",

"h5py"

]

}

],

"source": [

"!cat model/requirements.txt"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# 1. Neural Network"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

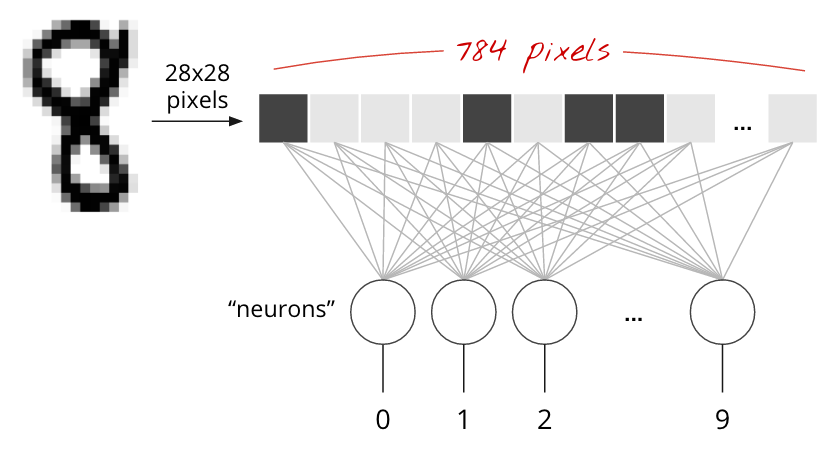

"# Простейшая однослойная нейросеть"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "fragment"

}

},

"source": [

""

]

},

{

"cell_type": "code",

"execution_count": 44,

"metadata": {

"slideshow": {

"slide_type": "-"

}

},

"outputs": [],

"source": [

"import keras\n",

"import tensorflow as tf\n",

"keras.backend.clear_session()\n",

"tf.reset_default_graph()"

]

},

{

"cell_type": "code",

"execution_count": 45,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"_________________________________________________________________\n",

"Layer (type) Output Shape Param # \n",

"=================================================================\n",

"dense_1 (Dense) (None, 10) 7850 \n",

"=================================================================\n",

"Total params: 7,850\n",

"Trainable params: 7,850\n",

"Non-trainable params: 0\n",

"_________________________________________________________________\n"

]

}

],

"source": [

"from keras import metrics, Sequential\n",

"from keras.layers import Dense\n",

"\n",

"model = Sequential(layers=[\n",

" Dense(10, activation='softmax', input_shape=(784,))\n",

"])\n",

"model.summary()"

]

},
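The 7,850 parameters in the summary come from one weight per (pixel, class) pair plus one bias per class. A small helper (hypothetical, for illustration only) makes the arithmetic explicit:

```python
def dense_params(inputs: int, units: int) -> int:
    """Parameters of a fully connected layer: inputs * units weights plus one bias per unit."""
    return inputs * units + units


# Single-layer model: 784 input pixels -> 10 output classes
single = dense_params(784, 10)
print(single)  # 7850
```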

{

"cell_type": "code",

"execution_count": 46,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [],

"source": [

"from keras import backend as K\n",

"from keras.optimizers import SGD\n",

"\n",

"model.compile(loss=K.categorical_crossentropy,\n",

" optimizer=SGD(),\n",

" metrics=[metrics.categorical_accuracy])"

]

},
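The loss and metric chosen above can be sketched in NumPy: categorical cross-entropy is `-sum(y_true * log(y_pred))` per sample, and categorical accuracy compares the argmax of the prediction with the argmax of the one-hot label. This is an approximation of what Keras computes; the real backend also renormalizes predictions and clips them with its own epsilon, and the `1e-7` here is an assumption.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    # subtracting the row maximum improves numerical stability without changing the result
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray, eps: float = 1e-7) -> np.ndarray:
    # per-sample loss -sum(y * log(p)); clipping avoids log(0)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return -np.sum(y_true * np.log(y_pred), axis=1)


def categorical_accuracy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    # share of samples whose most probable class matches the true class
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))


y_true = np.array([[0., 1., 0.], [1., 0., 0.]])
probs = softmax(np.array([[0.5, 2.0, -1.0], [0.1, 0.2, 3.0]]))
print(categorical_crossentropy(y_true, probs), categorical_accuracy(y_true, probs))
```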

{

"cell_type": "code",

"execution_count": 47,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Train on 60000 samples, validate on 10000 samples\n",

"Epoch 1/10\n",

"60000/60000 [==============================] - 2s 30us/step - loss: 1.2668 - categorical_accuracy: 0.7063 - val_loss: 0.8042 - val_categorical_accuracy: 0.8336\n",

"Epoch 2/10\n",

"60000/60000 [==============================] - 1s 22us/step - loss: 0.7117 - categorical_accuracy: 0.8414 - val_loss: 0.6047 - val_categorical_accuracy: 0.8602\n",

"Epoch 3/10\n",

"60000/60000 [==============================] - 2s 31us/step - loss: 0.5852 - categorical_accuracy: 0.8595 - val_loss: 0.5249 - val_categorical_accuracy: 0.8755\n",

"Epoch 4/10\n",

"60000/60000 [==============================] - 2s 28us/step - loss: 0.5244 - categorical_accuracy: 0.8687 - val_loss: 0.4794 - val_categorical_accuracy: 0.8805\n",

"Epoch 5/10\n",

"60000/60000 [==============================] - 2s 26us/step - loss: 0.4871 - categorical_accuracy: 0.8751 - val_loss: 0.4500 - val_categorical_accuracy: 0.8858\n",

"Epoch 6/10\n",

"60000/60000 [==============================] - 2s 26us/step - loss: 0.4615 - categorical_accuracy: 0.8802 - val_loss: 0.4286 - val_categorical_accuracy: 0.8881\n",

"Epoch 7/10\n",

"60000/60000 [==============================] - 1s 25us/step - loss: 0.4424 - categorical_accuracy: 0.8835 - val_loss: 0.4129 - val_categorical_accuracy: 0.8917\n",

"Epoch 8/10\n",

"60000/60000 [==============================] - 2s 25us/step - loss: 0.4276 - categorical_accuracy: 0.8862 - val_loss: 0.3999 - val_categorical_accuracy: 0.8948\n",

"Epoch 9/10\n",

"60000/60000 [==============================] - 1s 24us/step - loss: 0.4157 - categorical_accuracy: 0.8884 - val_loss: 0.3897 - val_categorical_accuracy: 0.8976\n",

"Epoch 10/10\n",

"60000/60000 [==============================] - 2s 26us/step - loss: 0.4057 - categorical_accuracy: 0.8909 - val_loss: 0.3811 - val_categorical_accuracy: 0.8991\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 47,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"model.fit(x_train, y_train,\n",

" batch_size=128,\n",

" epochs=10,\n",

" validation_data=(x_test, y_test))"

]

},
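A single `Dense(10, activation='softmax')` layer is softmax regression, and each `fit` step is a mini-batch gradient update. The sketch below shows one such update in NumPy on a fake batch of 128 samples (matching `batch_size=128`); the gradient of the mean cross-entropy with respect to the logits is `(p - y) / batch_size`. The learning rate 0.01 is assumed to match the default of Keras' `SGD()`, and the random data are placeholders, not MNIST.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def loss(W, b, x, y):
    # mean categorical cross-entropy over the batch
    p = softmax(x @ W + b)
    return -np.mean(np.sum(y * np.log(p + 1e-12), axis=1))


def sgd_step(W, b, x, y, lr=0.01):
    """One mini-batch SGD step for softmax regression."""
    p = softmax(x @ W + b)
    grad_logits = (p - y) / x.shape[0]   # d(mean loss) / d(logits)
    W = W - lr * (x.T @ grad_logits)
    b = b - lr * grad_logits.sum(axis=0)
    return W, b


rng = np.random.RandomState(0)
x = rng.rand(128, 784).astype('float32')          # fake flattened images in [0, 1]
y = np.eye(10)[rng.randint(0, 10, size=128)]      # random one-hot labels
W = np.zeros((784, 10))
b = np.zeros(10)

before = loss(W, b, x, y)                         # log(10) for an all-zero model
for _ in range(20):
    W, b = sgd_step(W, b, x, y)
after = loss(W, b, x, y)
print(before, after)
```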

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

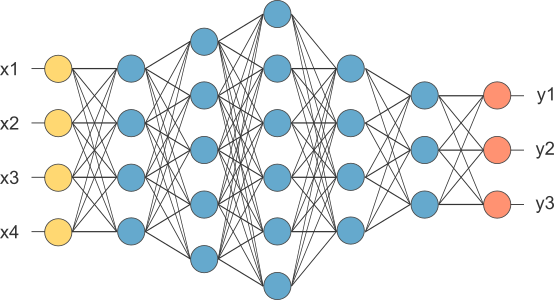

"# Многослойная нейросеть"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "fragment"

}

},

"source": [

""

]

},

{

"cell_type": "code",

"execution_count": 48,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"_________________________________________________________________\n",

"Layer (type) Output Shape Param # \n",

"=================================================================\n",

"dense_1 (Dense) (None, 200) 157000 \n",

"_________________________________________________________________\n",

"dense_2 (Dense) (None, 10) 2010 \n",

"=================================================================\n",

"Total params: 159,010\n",

"Trainable params: 159,010\n",

"Non-trainable params: 0\n",

"_________________________________________________________________\n"

]

}

],

"source": [

"keras.backend.clear_session()\n",

"tf.reset_default_graph()\n",

"\n",

"model = Sequential(layers=[\n",

" Dense(200, activation='relu', input_shape=(784,)),\n",

" Dense(10, activation='softmax')\n",

"])\n",

"model.summary()"

]

},
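The two-layer model adds a hidden `Dense(200, activation='relu')` layer. Its forward pass and parameter count (157,000 + 2,010 = 159,010, as in the summary) can be sketched in NumPy. The random weights here are placeholders rather than trained values.

```python
import numpy as np


def dense_params(inputs: int, units: int) -> int:
    return inputs * units + units


def mlp_forward(x, W1, b1, W2, b2):
    h = np.maximum(0, x @ W1 + b1)            # Dense(200, activation='relu')
    z = h @ W2 + b2                           # Dense(10) logits
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)   # softmax over the 10 classes


rng = np.random.RandomState(0)
W1, b1 = rng.normal(0, 0.05, (784, 200)), np.zeros(200)
W2, b2 = rng.normal(0, 0.05, (200, 10)), np.zeros(10)
x = rng.rand(5, 784)                          # five fake flattened images

probs = mlp_forward(x, W1, b1, W2, b2)
total = dense_params(784, 200) + dense_params(200, 10)
print(probs.shape, total)
```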

{

"cell_type": "code",

"execution_count": 50,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Train on 60000 samples, validate on 10000 samples\n",

"Epoch 1/10\n",

"60000/60000 [==============================] - 2s 40us/step - loss: 1.1825 - categorical_accuracy: 0.7202 - val_loss: 0.6388 - val_categorical_accuracy: 0.8595\n",

"Epoch 2/10\n",

"60000/60000 [==============================] - 2s 41us/step - loss: 0.5502 - categorical_accuracy: 0.8672 - val_loss: 0.4509 - val_categorical_accuracy: 0.8903\n",

"Epoch 3/10\n",

"60000/60000 [==============================] - 3s 47us/step - loss: 0.4361 - categorical_accuracy: 0.8865 - val_loss: 0.3853 - val_categorical_accuracy: 0.9010\n",

"Epoch 4/10\n",

"60000/60000 [==============================] - 2s 42us/step - loss: 0.3857 - categorical_accuracy: 0.8961 - val_loss: 0.3507 - val_categorical_accuracy: 0.9072\n",

"Epoch 5/10\n",

"60000/60000 [==============================] - 2s 41us/step - loss: 0.3557 - categorical_accuracy: 0.9029 - val_loss: 0.3284 - val_categorical_accuracy: 0.9117\n",

"Epoch 6/10\n",

"60000/60000 [==============================] - 2s 39us/step - loss: 0.3348 - categorical_accuracy: 0.9078 - val_loss: 0.3120 - val_categorical_accuracy: 0.9138\n",

"Epoch 7/10\n",

"60000/60000 [==============================] - 2s 41us/step - loss: 0.3187 - categorical_accuracy: 0.9116 - val_loss: 0.2987 - val_categorical_accuracy: 0.9188\n",

"Epoch 8/10\n",

"60000/60000 [==============================] - 3s 46us/step - loss: 0.3055 - categorical_accuracy: 0.9149 - val_loss: 0.2883 - val_categorical_accuracy: 0.9211\n",

"Epoch 9/10\n",

"60000/60000 [==============================] - 3s 49us/step - loss: 0.2944 - categorical_accuracy: 0.9176 - val_loss: 0.2793 - val_categorical_accuracy: 0.9237\n",

"Epoch 10/10\n",

"60000/60000 [==============================] - 3s 52us/step - loss: 0.2846 - categorical_accuracy: 0.9200 - val_loss: 0.2707 - val_categorical_accuracy: 0.9257\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 50,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"from keras.callbacks import TensorBoard\n",

"\n",

"model.compile(loss=K.categorical_crossentropy,\n",

" optimizer=SGD(),\n",

" metrics=[metrics.categorical_accuracy])\n",

"\n",

"model.fit(x_train, y_train,\n",

" batch_size=128,\n",

" epochs=10,\n",

" validation_data=(x_test, y_test),\n",

" callbacks=[TensorBoard(log_dir='/tmp/tensorflow/dnn_jup')])"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

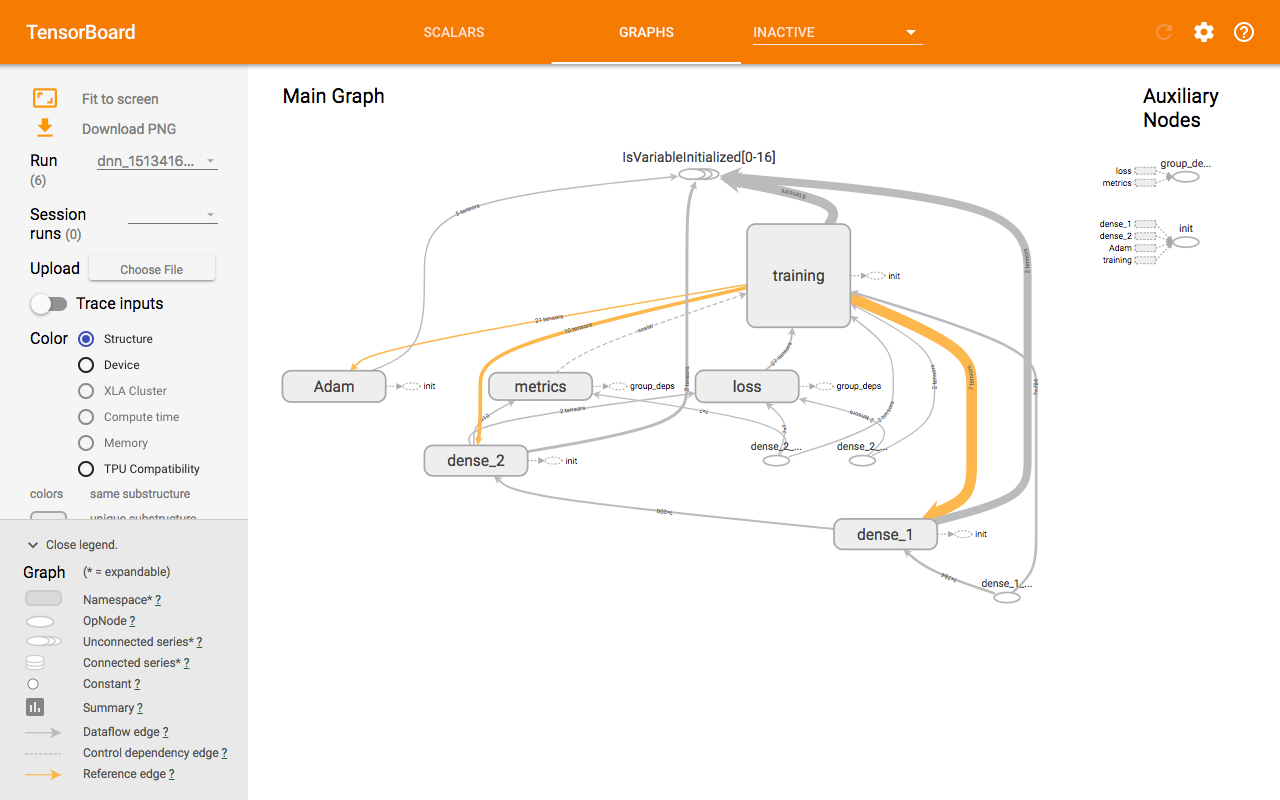

"# Tensorboard"

]

},

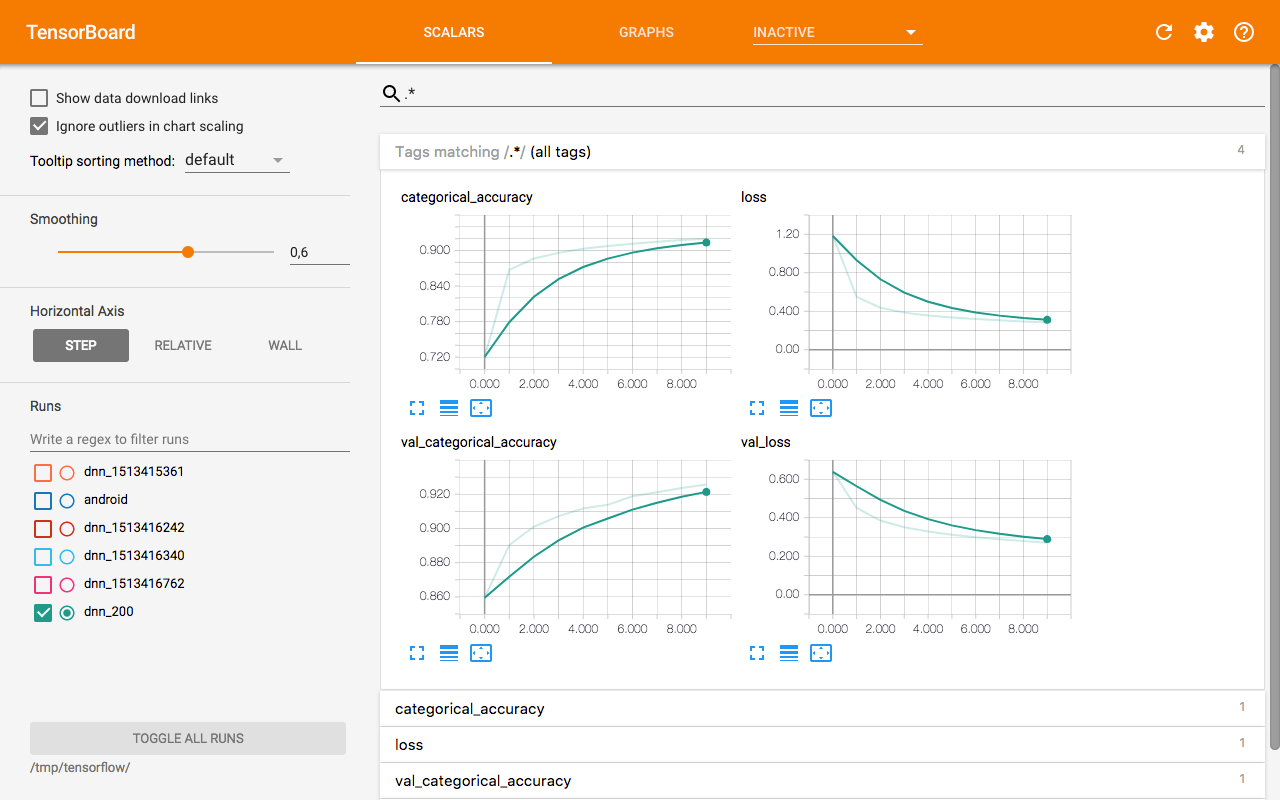

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "subslide"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "subslide"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# Exporting the model"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "fragment"

}

},

"source": [

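"- pbtxt: human-readable text representation of the graph\n",

"- chkp: checkpoint with the trained variable values\n",

"- pb: frozen graph (variables converted to constants)\n",

"- **optimal pb**: frozen graph optimized for inference, used in the Android app\n",

"- ..."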

]

},

{

"cell_type": "code",

"execution_count": 51,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"INFO:tensorflow:Restoring parameters from out/dnn.chkp\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:tensorflow:Restoring parameters from out/dnn.chkp\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"INFO:tensorflow:Froze 4 variables.\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:tensorflow:Froze 4 variables.\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Converted 4 variables to const ops.\n",

"15 ops in the final graph.\n",

"graph saved!\n"

]

}

],

"source": [

"from model import exporter\n",

"\n",

"exporter.export_model(tf.train.Saver(), \n",

" input_names=['dense_1_input'], \n",

" output_name='dense_2/Softmax', \n",

" model_name=\"dnn_jup\")"

]

},

{

"cell_type": "code",

"execution_count": 52,

"metadata": {

"slideshow": {

"slide_type": "subslide"

}

},

"outputs": [],

"source": [

"import os\n",

"\n",

"import tensorflow as tf\n",

"from keras import backend as K\n",

"from tensorflow.python.tools import freeze_graph, optimize_for_inference_lib\n",

"\n",

"\n",

"def export_model(saver: tf.train.Saver, input_names: list, output_name: str, model_name: str):\n",

"    \"\"\"\n",

"    Export the graph of the current Keras session to ./out in several formats.\n",

"    You can find node names with a debugger: attach it right after the model is created and look for the nodes in the inspector.\n",

"    :param saver: tf.train.Saver used to write the checkpoint\n",

"    :param input_names: names of the input nodes, e.g. ['dense_1_input']\n",

"    :param output_name: name of the output node, e.g. 'dense_2/Softmax'\n",

"    :param model_name: base name of the generated files\n",

"    :return: None; the files are written to ./out\n",

"    \"\"\"\n",

"    os.makedirs(\"./out\", exist_ok=True)\n",

"    # pbtxt is a human-readable representation of the graph\n",

"    tf.train.write_graph(K.get_session().graph_def, 'out',\n",

"                         model_name + '_graph.pbtxt')\n",

"\n",

"    # the checkpoint stores the trained variable values\n",

"    saver.save(K.get_session(), 'out/' + model_name + '.chkp')\n",

"\n",

"    # freeze the graph: combine it with the checkpoint and turn variables into constants\n",

"    freeze_graph.freeze_graph('out/' + model_name + '_graph.pbtxt', None,\n",

"                              False, 'out/' + model_name + '.chkp', output_name,\n",

"                              \"save/restore_all\", \"save/Const:0\",\n",

"                              'out/frozen_' + model_name + '.pb', True, \"\")\n",

"\n",

"    input_graph_def = tf.GraphDef()\n",

"    with tf.gfile.Open('out/frozen_' + model_name + '.pb', \"rb\") as f:\n",

"        input_graph_def.ParseFromString(f.read())\n",

"\n",

"    # optimize the graph for inference so it can be used in the Android app\n",

"    output_graph_def = optimize_for_inference_lib.optimize_for_inference(\n",

"        input_graph_def, input_names, [output_name],\n",

"        tf.float32.as_datatype_enum)\n",

"\n",

"    # the optimized graph in protobuf format; this is the file the Android app uses\n",

"    with tf.gfile.FastGFile('out/opt_' + model_name + '.pb', \"wb\") as f:\n",

"        f.write(output_graph_def.SerializeToString())\n",

"\n",

"    print(\"graph saved!\")"

]

},
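`export_model` derives every output path from `model_name`. The hypothetical helper below only lists those paths (it does not call TensorFlow), which can help when deciding which file to copy into the Android app's assets. The mapping mirrors the string concatenations in `export_model`; `saver.save` treats the `.chkp` path as a prefix and writes several files (`.index`, `.meta`, `.data-...`) next to it.

```python
import os


def export_paths(model_name: str, out_dir: str = 'out') -> dict:
    """Hypothetical helper: the files export_model writes for a given model_name."""
    return {
        'graph_text': os.path.join(out_dir, model_name + '_graph.pbtxt'),  # tf.train.write_graph
        'checkpoint': os.path.join(out_dir, model_name + '.chkp'),         # prefix used by saver.save
        'frozen': os.path.join(out_dir, 'frozen_' + model_name + '.pb'),   # freeze_graph output
        'optimized': os.path.join(out_dir, 'opt_' + model_name + '.pb'),   # optimized graph for Android
    }


paths = export_paths('dnn_jup')
for kind, path in paths.items():
    print(kind, path)
```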

{

"cell_type": "code",

"execution_count": 58,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"checkpoint dnn_graph.pbtxt\r\n",

"dnn.chkp.data-00000-of-00001 frozen_dnn.pb\r\n",

"dnn.chkp.index opt_dnn.pb\r\n",

"dnn.chkp.meta\r\n"

]

}

],

"source": [

"!ls out/"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# Convolutional neural network"

]

},

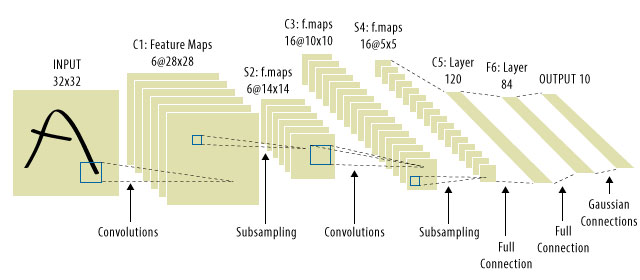

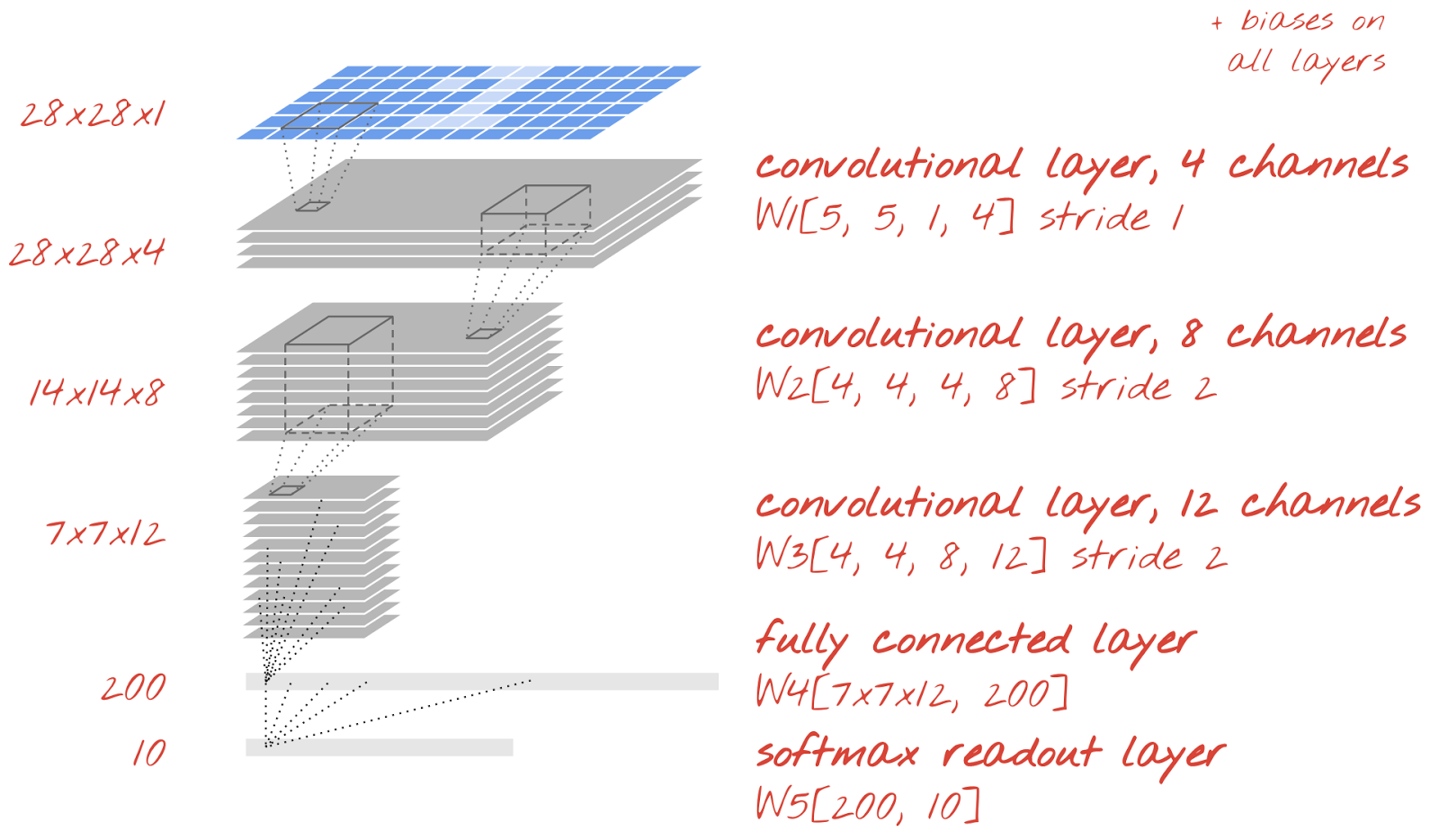

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "fragment"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

""

]

},

{

"cell_type": "code",

"execution_count": 60,

"metadata": {},

"outputs": [],

"source": [

"from keras.layers import Conv2D, MaxPooling2D\n",

"from keras.layers import Flatten"

]

},

{

"cell_type": "code",

"execution_count": 65,

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"outputs": [],

"source": [

"keras.backend.clear_session()\n",

"tf.reset_default_graph()\n",

"\n",

"model = Sequential()\n",

"model.add(Conv2D(filters=16, kernel_size=5, strides=1,\n",

" padding='same', activation='relu',\n",

" input_shape=[28, 28, 1]))\n",

"# 28*28*16\n",

"model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))\n",

"# 14*14*16\n",

"\n",

"model.add(Conv2D(filters=32, kernel_size=4, strides=1,\n",

" padding='same', activation='relu'))\n",

"# 14*14*32\n",

"model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))\n",

"# 7*7*32\n",

"\n",

"model.add(Conv2D(filters=64, kernel_size=3, strides=1,\n",

" padding='same', activation='relu'))\n",

"# 7*7*64\n",

"model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))\n",

"# 4*4*64\n",

"\n",

"model.add(Flatten())\n",

"model.add(Dense(64 * 4, activation='relu'))\n",

"model.add(Dense(10, activation='softmax'))"

]

},
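The shape comments in the model above follow from two rules: with `padding='same'` the spatial size becomes `ceil(size / stride)`, and a `Conv2D` layer has `kernel * kernel * in_channels * filters + filters` parameters. The sketch below walks through the three conv/pool blocks with these rules; the helper names are illustrative and not part of Keras.

```python
import math


def same_out(size: int, stride: int) -> int:
    # with padding='same' the output size is ceil(input / stride)
    return math.ceil(size / stride)


def conv_params(kernel: int, in_channels: int, filters: int) -> int:
    # kernel x kernel x in_channels weights per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters


size, channels, total = 28, 1, 0
for kernel, filters in [(5, 16), (4, 32), (3, 64)]:
    total += conv_params(kernel, channels, filters)
    size, channels = same_out(size, 1), filters    # Conv2D with strides=1 keeps the size
    size = same_out(size, 2)                       # MaxPooling2D with strides=2 halves it (rounding up)

flat = size * size * channels                      # Flatten
total += flat * 256 + 256                          # Dense(64 * 4, activation='relu')
total += 256 * 10 + 10                             # Dense(10, activation='softmax')
print(size, channels, flat, total)
```

The 7 to 4 step is where `padding='same'` matters for pooling: with `'valid'` padding the last pooled map would be 3x3 instead.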

{

"cell_type": "code",

"execution_count": 67,

"metadata": {},

"outputs": [],

"source": [

"x_train, y_train, x_test, y_test = data.load_data(flatten=False)"

]

},

{

"cell_type": "code",

"execution_count": 69,

"metadata": {},

"outputs": [],

"source": [

"!rm -rf /tmp/tensorflow/conv_jup\n",

"from keras.optimizers import Adam\n",

"\n",

"model.compile(loss=K.categorical_crossentropy,\n",

" optimizer=Adam(),\n",

" metrics=[metrics.categorical_accuracy])\n",

"\n",

"model.fit(x_train, y_train,\n",

" batch_size=128,\n",

" epochs=1,\n",

" validation_data=(x_test, y_test),\n",

" callbacks=[TensorBoard(log_dir='/tmp/tensorflow/conv_jup')])"

]

},
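The convolutional model is trained with `Adam()` instead of plain SGD. The update rule keeps running averages of the gradient and of its square, corrects their bias toward zero, and scales each step by their ratio. The sketch below applies the standard rule to a single scalar parameter on a toy quadratic; the defaults `beta_1=0.9`, `beta_2=0.999`, `eps=1e-8` are the commonly cited ones and are an assumption about the Keras version used, and the larger `lr=0.05` is chosen only so the toy problem converges quickly.

```python
import math


def adam_minimize(grad, x0, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-8, steps=1000):
    """Plain-Python Adam on a single scalar parameter."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta_1 * m + (1 - beta_1) * g          # running mean of gradients
        v = beta_2 * v + (1 - beta_2) * g * g      # running mean of squared gradients
        m_hat = m / (1 - beta_1 ** t)              # bias correction for the zero start
        v_hat = v / (1 - beta_2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3)
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0, lr=0.05, steps=2000)
print(x_min)
```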

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"exporter.export_model(tf.train.Saver(), \n",

" input_names=['conv2d_1_input'], \n",

" output_name='dense_2/Softmax', \n",

" model_name=\"conv_jup\")"

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# 2. Android"

]

},

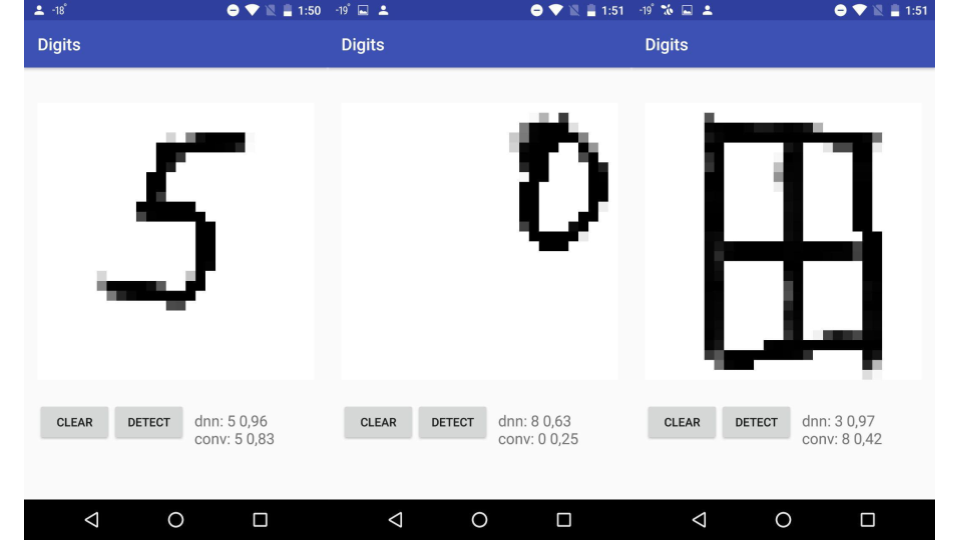

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "fragment"

}

},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# 3. Integration"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"```groovy\n",

"implementation 'org.tensorflow:tensorflow-android:1.3.0'\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"```kotlin\n",

"import org.senior_sigan.digits.views.DrawView\n",

"import org.senior_sigan.digits.ml.models.DigitsClassifierFlatten // for DNN\n",

"import org.senior_sigan.digits.ml.models.DigitsClassifierSquare // for Conv\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"```kotlin\n",

"// load the model on a background thread\n",

"val clf = DigitsClassifierFlatten(\n",

" assetManager=assets, \n",

" modelPath=\"dnn.pb\", \n",

" inputSize=28, \n",

" inputName=\"dense_1_input\", \n",

" outputName=\"dense_2/Softmax\", \n",

" name=\"dnn\")\n",

"\n",

"// on the UI thread: look up the drawing view\n",

"val drawView = find<DrawView>(R.id.draw)\n",

"\n",

"// on a background thread: classify the drawn digit\n",

"val pred = clf.predict(drawView.pixelData)\n",

"\n",

"Log.i(\"Dnn\", \"${pred.label} ${pred.proba}\")\n",

"\n",

"```"

]

}
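The classifier on the device only gives sensible answers if the drawn pixels are prepared the same way as the training data: grayscale values scaled to [0, 1], white digit on a black background as in MNIST, and shaped `(1, 784)` for the DNN or `(1, 28, 28, 1)` for the conv model. The internals of `DigitsClassifierFlatten` and `DrawView.pixelData` are not shown here, so the Python sketch below is only an assumption about that contract: `to_model_input` and `to_prediction` are hypothetical helpers, and `label` / `proba` are taken to be the argmax of the softmax output and its probability.

```python
import numpy as np


def to_model_input(pixels, flatten=True):
    """Hypothetical preprocessing: 28x28 grayscale 0..255 -> float32 in [0, 1], as in training."""
    x = np.asarray(pixels, dtype='float32').reshape(28, 28) / 255
    return x.reshape(1, 28 * 28) if flatten else x.reshape(1, 28, 28, 1)


def to_prediction(probs):
    """Most probable class and its probability (assumed meaning of label / proba)."""
    probs = np.asarray(probs).ravel()
    label = int(np.argmax(probs))
    return label, float(probs[label])


canvas = np.zeros((28, 28), dtype=np.uint8)
canvas[4:24, 13:15] = 255            # a crude vertical stroke, roughly a "1"
x = to_model_input(canvas)
label, proba = to_prediction([0.01, 0.9, 0.01, 0.01, 0.01, 0.02, 0.01, 0.01, 0.01, 0.01])
print(x.shape, x.max(), label, proba)
```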

],

"metadata": {

"celltoolbar": "Slideshow",

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.3"

},

"livereveal": {

"scroll": true

}

},

"nbformat": 4,

"nbformat_minor": 2

}