{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Accessing cloud satellite data\n",

"\n",

"- Funding: Interagency Implementation and Advanced Concepts Team [IMPACT](https://earthdata.nasa.gov/esds/impact) for the Earth Science Data Systems (ESDS) program and AWS Public Dataset Program\n",

" \n",

"### Credits: Tutorial development\n",

"* [Dr. Chelle Gentemann](mailto:gentemann@faralloninstitute.org) - [Twitter](https://twitter.com/ChelleGentemann) - Farallon Institute\n",

"* [Lucas Sterzinger](mailto:lsterzinger@ucdavis.edu) - [Twitter](https://twitter.com/lucassterzinger) - University of California, Davis\n",

"\n",

"### Satellite data\n",

"\n",

"Satellite data come from either polar or low inclination orbits that circle the Earth or geostationary orbits that hold a location above a specific location on the Earth, near the Equator. The type of data from satellites depends on both the orbit and the type of instrument. There are definitions for the different satellite data types.\n",

"- L1 - satellite observations, usually each observation has a lat/lon/time associated with it\n",

"- L2 - derived geophysical retrievals (eg. SST), still with lat/lon/time\n",

"- L3 - variables mapped to a uniform space/time grid\n",

"- L4 - model output or analysis of data, may include multiple sensors\n",

"\n",

"There are several different formats that satellite data: [netCDF](https://www.unidata.ucar.edu/software/netcdf/), [HDF5](https://www.hdfgroup.org/solutions/hdf5/), and [geoTIFF](https://earthdata.nasa.gov/esdis/eso/standards-and-references/geotiff).\n",

"There are also som newer cloud optimized formats that data: [Zarr](https://zarr.readthedocs.io/en/stable/), Cloud Optimized GeoTIFF ([COG](https://www.cogeo.org/)).\n",

"\n",

"### Understand the data\n",

"Data access can be easy and fast on the cloud, but to avoid potential issues down the road, spend time to understand the strengths and weaknesses of any data. Any science results will need to address uncertainties in the data, so it is easier to spend time at the beginning to understand them and avoid data mis-application.\n",

"\n",

"#### This tutorial will focus on netCDF/HDF5/Zarr\n",

"\n",

"### Data proximate computing\n",

"These are BIG datasets that you can analyze on the cloud without downloading the data. \n",

"You can run this on your phone, a Raspberry Pi, laptop, or desktop. \n",

"By using public cloud data, your science is reproducible and easily shared!\n",

"\n",

"The OHW Jupyter Hub is a m5.2xlarge instance shared by up to 4 users. What does that mean? [m5.2xlarge](https://aws.amazon.com/ec2/instance-types/m5/) has 8 vCPU and 32 GB memory.\n",

"If there are 3 other users on your node, then the memory could be used up fast by large satellite datasets, so just be a little aware of that when you want to run something....\n",

"\n",

"### Here we will demonstrate some ways to access the different SST datasets on AWS:\n",

"- AWS MUR sea surface temperatures (L4)\n",

"- AWS GOES sea surface temperatures (L2)\n",

"- AWS Himawari sea surface temperatures (L2 and L3)\n",

"- ERA5 data (L4)\n",

"\n",

"### To run this notebook\n",

"\n",

"Code is in the cells that have In [ ]: to the left of the cell and have a colored background\n",

"\n",

"To run the code:\n",

"- option 1) click anywhere in the cell, then hold `shift` down and press `Enter`\n",

"- option 2) click on the Run button at the top of the page in the dashboard\n",

"\n",

"Remember:\n",

"- to insert a new cell below press `Esc` then `b`\n",

"- to delete a cell press `Esc` then `dd`\n",

"\n",

"### First start by importing libraries\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# How to find an AWS Public Dataset\n",

"\n",

"- Click here: [AWS Public Dataset](https://aws.amazon.com/opendata/)\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"\n",

"\n",

"-------------------------------------------------------\n",

"\n",

"\n",

"\n",

"\n",

"\n",

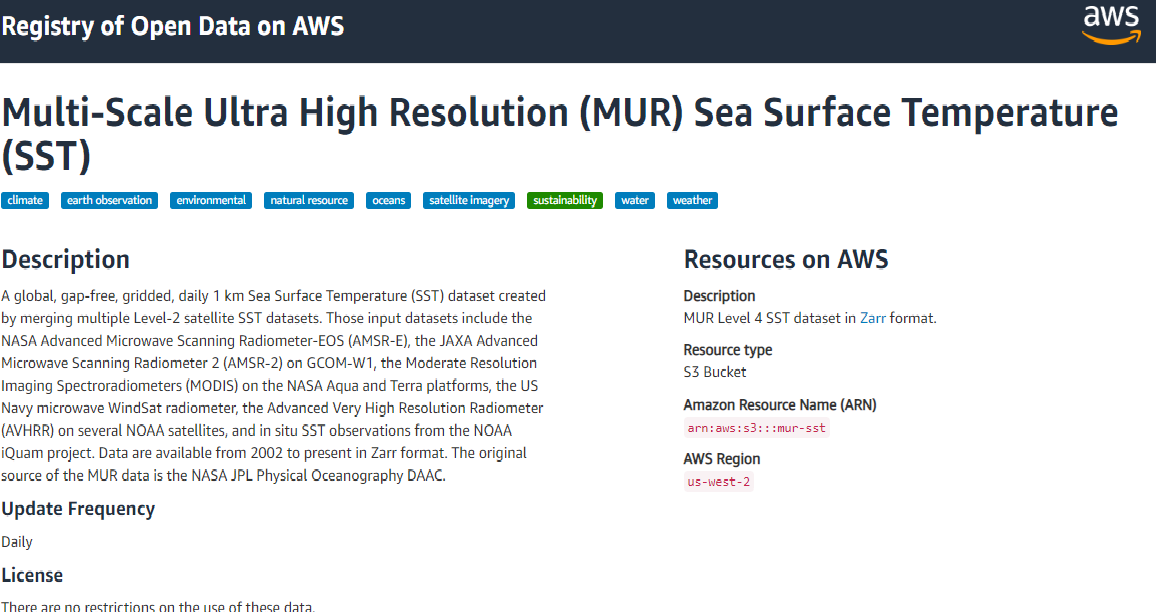

"- Click on `Find public available data on AWS` button\n",

"- Search for MUR\n",

"- Select [MUR SST](https://registry.opendata.aws/mur/)\n",

"\n",

" \n",

" \n",

" \n",

" \n",

"\n",

"-------------------------------------------------------\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## [MUR SST](https://podaac.jpl.nasa.gov/Multi-scale_Ultra-high_Resolution_MUR-SST) [AWS Public dataset program](https://registry.opendata.aws/mur/) \n",

"\n",

"### Access the MUR SST Zarr store which is in an s3 bucket. \n",

"\n",

"\n",

"\n",

"We will start with my favorite Analysis Ready Data (ARD) format: [Zarr](https://zarr.readthedocs.io/en/stable/). Using data stored in Zarr is fast, simple, and contains all the metadata normally in a netcdf file, so you can figure out easily what is in the datastore. \n",

"\n",

"- Fast - Zarr is fast because all the metadata is consolidated into a .json file. Reading in massive datasets is lightning fast because it only reads the metadata and does read in data until it needs it for compute.\n",

"\n",

"- Simple - Filenames? Who needs them? Who cares? Not I. Simply point your read routine to the data directory.\n",

"\n",

"- Metadata - all you want!"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# filter some warning messages\n",

"import warnings \n",

"warnings.filterwarnings(\"ignore\") \n",

"\n",

"#libraries\n",

"import datetime as dt\n",

"import xarray as xr\n",

"import fsspec\n",

"import s3fs\n",

"from matplotlib import pyplot as plt\n",

"import numpy as np\n",

"import pandas as pd\n",

"# make datasets display nicely\n",

"xr.set_options(display_style=\"html\") \n",

"\n",

"import dask\n",

"from dask.distributed import performance_report, Client, progress\n",

"\n",

"#magic fncts #put static images of your plot embedded in the notebook\n",

"%matplotlib inline \n",

"plt.rcParams['figure.figsize'] = 12, 6\n",

"%config InlineBackend.figure_format = 'retina' "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"\n",

"[fsspec.get_mapper](https://filesystem-spec.readthedocs.io/en/latest/api.html?highlight=get_mapper#fsspec.get_mapper) Creates a mapping between your computer and the s3 bucket. This isn't necessary if the Zarr file is stored locally.\n",

"\n",

"[xr.open_zarr](http://xarray.pydata.org/en/stable/generated/xarray.open_zarr.html) Reads a Zarr store into an Xarray dataset\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"file_location = 's3://mur-sst/zarr'\n",

"\n",

"ikey = fsspec.get_mapper(file_location, anon=True)\n",

"\n",

"ds_sst = xr.open_zarr(ikey,consolidated=True)\n",

"\n",

"ds_sst"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Read entire 10 years of data at 1 point.\n",

"\n",

"Select the ``analysed_sst`` variable over a specific time period, `lat`, and `lon` and load the data into memory. This is small enough to load into memory which will make calculating climatologies easier in the next step."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"sst_timeseries = ds_sst['analysed_sst'].sel(time = slice('2010-01-01','2020-01-01'),\n",

" lat = 47,\n",

" lon = -145\n",

" ).load()\n",

"\n",

"sst_timeseries.plot()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### The anomaly is more interesting... \n",

"\n",

"Use [.groupby](http://xarray.pydata.org/en/stable/generated/xarray.DataArray.groupby.html#xarray-dataarray-groupby) method to calculate the climatology and [.resample](http://xarray.pydata.org/en/stable/generated/xarray.Dataset.resample.html#xarray-dataset-resample) method to then average it into 1-month bins.\n",

"- [DataArray.mean](http://xarray.pydata.org/en/stable/generated/xarray.DataArray.mean.html#xarray-dataarray-mean) arguments are important! Xarray uses metadata to plot, so keep_attrs is a nice feature. Also, for SST there are regions with changing sea ice. Setting skipna = False removes these regions. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"sst_climatology = sst_timeseries.groupby('time.dayofyear').mean('time',keep_attrs=True,skipna=False)\n",

"\n",

"sst_anomaly = sst_timeseries.groupby('time.dayofyear')-sst_climatology\n",

"\n",

"sst_anomaly_monthly = sst_anomaly.resample(time='1MS').mean(keep_attrs=True,skipna=False)\n",

"\n",

"#plot the data\n",

"sst_anomaly.plot()\n",

"sst_anomaly_monthly.plot()\n",

"plt.axhline(linewidth=2,color='k')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Chukchi Sea SST timeseries\n",

"\n",

"# Note SST is set to -1.8 C (271.35 K) when ice is present"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"sst_timeseries = ds_sst['analysed_sst'].sel(time = slice('2010-01-01','2020-01-01'),\n",

" lat = 72,\n",

" lon = -171\n",

" ).load()\n",

"\n",

"sst_timeseries.plot()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Grid resolution does NOT equal spatial resolution\n",

"\n",

"- many L4 SST analyses blend infrared (~ 1 - 4 km data) with passive microwave (~ 50 km) data. Data availability will determine regional / temporal changes in spatial resolution\n",

"\n",

"- many L4 SST analyses apply smoothing filters that may further reduce resolution"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"subset = ds_sst['analysed_sst'].sel(time='2019-06-01',lat=slice(35,40),lon=slice(-126,-120))\n",

"\n",

"subset.plot(vmin=282,vmax=289,cmap='inferno')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"subset = ds_sst['analysed_sst'].sel(time='2019-05-15',lat=slice(35,40),lon=slice(-126,-120))\n",

"\n",

"subset.plot(vmin=282,vmax=289,cmap='inferno')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

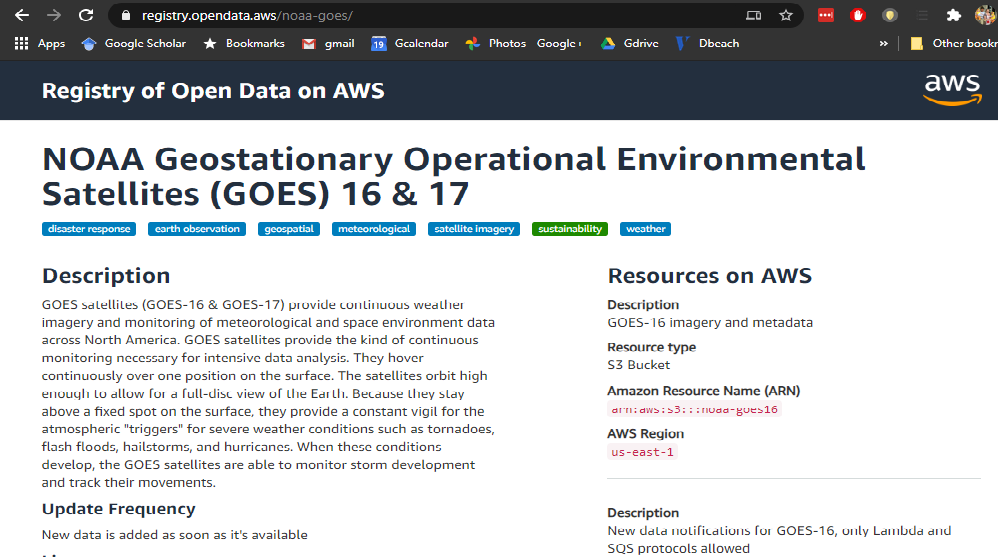

"# How to find an AWS Public Dataset\n",

"\n",

"- click here: [AWS Public Dataset](https://aws.amazon.com/opendata/)\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"- Click on `Find public available data on AWS` button\n",

"- Search for GOES\n",

"- Select [GOES-16 and GOES-17](https://registry.opendata.aws/noaa-goes/)\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## NETCDF GEO DATA\n",

"\n",

"## Zarr made it seem so easy to read and access cloud SST data! \n",

"## It is always that easy, right?\n",

"\n",

"- When data is just dumped on the cloud, it is still useful, but can be challenging to use. \n",

"Which brings us to GOES SSTs.....\n",

"\n",

"- Info on the data is here -- GOES has a lot of different parameters and they are all stored in different files with names that are difficult to understand. There are *80* different data products. This link lists them all and explains the different directory names. [AWS info on GOES SST data](https://docs.opendata.aws/noaa-goes16/cics-readme.html). \n",

"\n",

"- The GOES data is netCDF format. There is a different for each of the 80 projects for year/day/hour. A lot of files. I find it really useful to 'browse' s3: buckets so that I can understand the directory and data structure. [Explore S3 structure](https://noaa-goes16.s3.amazonaws.com/index.html). The directory structure is `////`\n",

"\n",

"- In the code below we are going to create a function that searches for all files from a certain day, then creates the keys to opening them. The files are so messy that opening a day takes a little while. There are other ways you could write this function depending on what your analysis goals are, this is just one way to get some data in a reasonable amount of time. \n",

"- This function uses \n",

"- [`s3fs.S3FileSystem`](https://s3fs.readthedocs.io/en/latest/) which holds a connection with a s3 bucket and allows you to list files, etc. \n",

"- [`xr.open_mfdataset`](http://xarray.pydata.org/en/stable/generated/xarray.open_mfdataset.html#xarray.open_mfdataset) opens a list of filenames and concatenates the data along the specified dimensions \n",

"\n",

"Website [https://registry.opendata.aws/noaa-goes/](https://registry.opendata.aws/noaa-goes/)\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"@dask.delayed\n",

"def get_geo_data(sat,lyr,idyjl):\n",

" # arguments\n",

" # sat goes-east,goes-west,himawari\n",

" # lyr year\n",

" # idyjl day of year\n",

" \n",

" d = dt.datetime(lyr,1,1) + dt.timedelta(days=idyjl)\n",

" fs = s3fs.S3FileSystem(anon=True) #connect to s3 bucket!\n",

"\n",

" #create strings for the year and julian day\n",

" imon,idym=d.month,d.day\n",

" syr,sjdy,smon,sdym = str(lyr).zfill(4),str(idyjl).zfill(3),str(imon).zfill(2),str(idym).zfill(2)\n",

" \n",

" #use glob to list all the files in the directory\n",

" if sat=='goes-east':\n",

" file_location,var = fs.glob('s3://noaa-goes16/ABI-L2-SSTF/'+syr+'/'+sjdy+'/*/*.nc'),'SST'\n",

" if sat=='goes-west':\n",

" file_location,var = fs.glob('s3://noaa-goes17/ABI-L2-SSTF/'+syr+'/'+sjdy+'/*/*.nc'),'SST'\n",

" if sat=='himawari':\n",

" file_location,var = fs.glob('s3://noaa-himawari8/AHI-L2-FLDK-SST/'+syr+'/'+smon+'/'+sdym+'/*/*L2P*.nc'),'sea_surface_temperature'\n",

" \n",

" #make a list of links to the file keys\n",

" if len(file_location)<1:\n",

" return file_ob\n",

" file_ob = [fs.open(file) for file in file_location] #open connection to files\n",

" \n",

" #open all the day's data\n",

" ds = xr.open_mfdataset(file_ob,combine='nested',concat_dim='time') #note file is super messed up formatting\n",

" \n",

" #clean up coordinates which are a MESS in GOES\n",

" #rename one of the coordinates that doesn't match a dim & should\n",

" if not sat=='himawari':\n",

" ds = ds.rename({'t':'time'})\n",

" ds = ds.reset_coords()\n",

" else:\n",

" ds = ds.rename({'ni':'x','nj':'y'})\n",

" \n",

" #put in to Celsius\n",

" #ds[var] -= 273.15 #nice python shortcut to +- from itself a-=273.15 is the same as a=a-273.15\n",

" #ds[var].attrs['units'] = '$^\\circ$C'\n",

" \n",

" return ds\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Open a day of GOES-16 (East Coast) Data"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"lyr, idyjl = 2020, 210 #may 30, 2020\n",

"\n",

"ds = dask.delayed(get_geo_data)('goes-east',lyr,idyjl)\n",

"\n",

"ds"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"subset = ds.sel(x=slice(-0.01,0.07215601),y=slice(0.12,0.09)) #reduce to GS region\n",

"\n",

"masked = subset.SST.where(subset.DQF==0).compute()\n",

"\n",

"masked.isel(time=14).plot(vmin=14+273.15,vmax=30+273.15,cmap='inferno')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"mean_dy = masked.mean('time',skipna=True).compute() #here I want all possible values so skipna=True\n",

"\n",

"mean_dy.plot(vmin=14+273.15,vmax=30+273.15,cmap='inferno')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Lat/Lon\n",

"The data above is on the GOES grid, which isn't very helpful if you want to make a subset by a known lat/lon or to plot borders with Cartopy.\n",

"\n",

"The function below takes in a GOES-16/17 dataset and returns the same dataset with latitude (`lat`) and longitude (`lon`) added as 2D coordinate arrays. The math to do this was taken from https://makersportal.com/blog/2018/11/25/goes-r-satellite-latitude-and-longitude-grid-projection-algorithm "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def calc_latlon(ds):\n",

" # The math for this function was taken from \n",

" # https://makersportal.com/blog/2018/11/25/goes-r-satellite-latitude-and-longitude-grid-projection-algorithm \n",

"\n",

" x = ds.x\n",

" y = ds.y\n",

" goes_imager_projection = ds.goes_imager_projection\n",

" \n",

" x,y = np.meshgrid(x,y)\n",

" \n",

" r_eq = goes_imager_projection.attrs[\"semi_major_axis\"]\n",

" r_pol = goes_imager_projection.attrs[\"semi_minor_axis\"]\n",

" l_0 = goes_imager_projection.attrs[\"longitude_of_projection_origin\"] * (np.pi/180)\n",

" h_sat = goes_imager_projection.attrs[\"perspective_point_height\"]\n",

" H = r_eq + h_sat\n",

" \n",

" a = np.sin(x)**2 + (np.cos(x)**2 * (np.cos(y)**2 + (r_eq**2 / r_pol**2) * np.sin(y)**2))\n",

" b = -2 * H * np.cos(x) * np.cos(y)\n",

" c = H**2 - r_eq**2\n",

" \n",

" r_s = (-b - np.sqrt(b**2 - 4*a*c))/(2*a)\n",

" \n",

" s_x = r_s * np.cos(x) * np.cos(y)\n",

" s_y = -r_s * np.sin(x)\n",

" s_z = r_s * np.cos(x) * np.sin(y)\n",

" \n",

" lat = np.arctan((r_eq**2 / r_pol**2) * (s_z / np.sqrt((H-s_x)**2 +s_y**2))) * (180/np.pi)\n",

" lon = (l_0 - np.arctan(s_y / (H-s_x))) * (180/np.pi)\n",

" \n",

" ds = ds.assign_coords({\n",

" \"lat\":([\"y\",\"x\"],lat),\n",

" \"lon\":([\"y\",\"x\"],lon)\n",

" })\n",

" ds.lat.attrs[\"units\"] = \"degrees_north\"\n",

" ds.lon.attrs[\"units\"] = \"degrees_east\"\n",

"\n",

" return ds"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"ds_latlon = calc_latlon(ds.compute())\n",

"\n",

"ds_latlon"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### It's really slow to use `.where()` to select data \n",

"e.g. \n",

"```python\n",

"subset = ds.where((ds.lon >= lon1) & (ds.lon <= lon2) & (ds.lat >= lat1) & (ds.lat <= lat2), drop=True)\n",

"```\n",

"\n",

"Instead, we can create a function that will return the x/y min and max so we can continue to use `.isel()` to quickly select data.\n",

"\n",

"_note: the `y` slice must be done as `slice(y2,y1)` since the y coordinate is decreasing, so the larger value must come first._"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def get_xy_from_latlon(ds, lats, lons):\n",

" lat1, lat2 = lats\n",

" lon1, lon2 = lons\n",

"\n",

" lat = ds.lat.data\n",

" lon = ds.lon.data\n",

" \n",

" x = ds.x.data\n",

" y = ds.y.data\n",

" \n",

" x,y = np.meshgrid(x,y)\n",

" \n",

" x = x[(lat >= lat1) & (lat <= lat2) & (lon >= lon1) & (lon <= lon2)]\n",

" y = y[(lat >= lat1) & (lat <= lat2) & (lon >= lon1) & (lon <= lon2)] \n",

" \n",

" return ((min(x), max(x)), (min(y), max(y)))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Now that we have a min/max `x` and `y`, we can subset in the same was as above"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"lats = (31, 53)\n",

"lons = (-85, -56)\n",

"\n",

"(x1,x2), (y1,y2) = get_xy_from_latlon(ds_latlon, lats, lons)\n",

"subset = ds_latlon.sel(x=slice(x1,x2), y=slice(y2,y1))\n",

"\n",

"subset"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### To plot with Cartopy, an axis need to be set up with a projection. More info can be found at https://scitools.org.uk/cartopy/docs/latest/crs/projections.html "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"import cartopy.crs as ccrs\n",

"import cartopy.feature as cfeature\n",

"\n",

"masked = subset.mean(\"time\", skipna=True).where(subset.DQF==0)\n",

"\n",

"# For a faster-running example, plot a single time instead of taking a mean\n",

"# masked = subset.isel(time=14).where(subset.DQF==0)\n",

"\n",

"\n",

"fig = plt.figure()\n",

"ax = fig.add_subplot(111, projection=ccrs.PlateCarree())\n",

"masked.SST.plot(x=\"lon\", y=\"lat\", vmin=14+273.15,vmax=30+273.15,cmap='inferno', ax=ax)\n",

"\n",

"ax.add_feature(cfeature.COASTLINE)\n",

"ax.add_feature(cfeature.STATES)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Read AWS JMA Himawari SSTs\n",

"- define a function to get all the filenames for a day\n",

"- AWS info on Himawari SST data, [here](https://www.goes.noaa.gov/f_himawari-8.html)\n",

"- Explore S3 structure [here](https://noaa-himawari8.s3.amazonaws.com/index.html)\n",

"- SSTs are given in L2P and L3C GHRSST data formats. \n",

"L2P has dims that not mapped to a regular grid. \n",

"L3C is mapped to a grid, with dims lat,lon.\n",

"\n",

"Website [https://registry.opendata.aws/noaa-himawari/](https://registry.opendata.aws/noaa-himawari/)\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"lyr, idyjl = 2020, 212\n",

"\n",

"ds = dask.delayed(get_geo_data)('himawari',lyr,idyjl)\n",

"\n",

"subset = ds.sel(x=slice(-0.05,0.08),y=slice(0.12,0.08))\n",

"\n",

"masked = subset.sea_surface_temperature.where(subset.quality_level>=4)\n",

"\n",

"mean_dy = masked.mean('time',skipna=True).compute() #here I want all possible values so skipna=True\n",

"\n",

"mean_dy.plot(vmin=14+273.15,vmax=30+273.15,cmap='inferno')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"masked.visualize()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## ERA5 Data Structure on S3\n",

"\n",

"The ERA5 data is organized into NetCDF files per variable, each containing a month of hourly data. \n",

"The directory structure is `/{year}/{month}/main.nc` for all the variables or `/{year}/{month}/data/{var}.nc` for just a single variable.\n",

"\n",

"Variables:\n",

"- snow_density\n",

"- sea_surface_temperature\n",

"- lwe_thickness_of_surface_snow_amount\n",

"- eastward_wind_at_10_metres\n",

"- northward_wind_at_10_metres\n",

"- time1_bounds\n",

"- air_temperature_at_2_metres_1hour_Maximum\n",

"- air_temperature_at_2_metres_1hour_Minimum\n",

"- precipitation_amount_1hour_Accumulation\n",

"- eastward_wind_at_100_metres\n",

"- air_temperature_at_2_metres\n",

"- dew_point_temperature_at_2_metres\n",

"- integral_wrt_time_of_surface_direct_downwelling_shortwave_flux_in_air_1hour_Accumulation\n",

"- northward_wind_at_100_metres\n",

"- air_pressure_at_mean_sea_level\n",

"- surface_air_pressure\n",

"\n",

"For example, the full file path for sea surface temperature for January 2008 is:\n",

"\n",

"/2008/01/data/sea_surface_temperature.nc\n",

"\n",

"- Note that due to the nature of the ERA5 forecast timing, which is run twice daily at 06:00 and 18:00 UTC, the monthly data file begins with data from 07:00 on the first of the month and continues through 06:00 of the following month. We'll see this in the coordinate values of a data file we download later in the notebook.\n",

"\n",

"- Granule variable structure and metadata attributes are stored in main.nc. This file contains coordinate and auxiliary variable data. This file is also annotated using NetCDF CF metadata conventions.\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"#https://nbviewer.jupyter.org/github/awslabs/amazon-asdi/blob/main/examples/dask/notebooks/era5_zarr.ipynb\n",

"# zac flamig\n",

"\n",

"\n",

"def fix_accum_var_dims(ds, var):\n",

" # Some varibles like precip have extra time bounds varibles, we drop them here to allow merging with other variables\n",

" \n",

" # Select variable of interest (drops dims that are not linked to current variable)\n",

" ds = ds[[var]] \n",

"\n",

" if var in ['air_temperature_at_2_metres',\n",

" 'dew_point_temperature_at_2_metres',\n",

" 'air_pressure_at_mean_sea_level',\n",

" 'northward_wind_at_10_metres',\n",

" 'eastward_wind_at_10_metres']:\n",

" \n",

" ds = ds.rename({'time0':'valid_time_end_utc'})\n",

" \n",

" elif var in ['precipitation_amount_1hour_Accumulation',\n",

" 'integral_wrt_time_of_surface_direct_downwelling_shortwave_flux_in_air_1hour_Accumulation']:\n",

" \n",

" ds = ds.rename({'time1':'valid_time_end_utc'})\n",

" \n",

" else:\n",

" print(\"Warning, Haven't seen {var} varible yet! Time renaming might not work.\".format(var=var))\n",

" \n",

" return ds\n",

"\n",

"@dask.delayed\n",

"def s3open(path):\n",

" fs = s3fs.S3FileSystem(anon=True, default_fill_cache=False, \n",

" config_kwargs = {'max_pool_connections': 20})\n",

" return s3fs.S3Map(path, s3=fs)\n",

"\n",

"\n",

"def open_era5_range(start_year, end_year, variables):\n",

" ''' Opens ERA5 monthly Zarr files in S3, given a start and end year (all months loaded) and a list of variables'''\n",

" \n",

" \n",

" file_pattern = 'era5-pds/zarr/{year}/{month}/data/{var}.zarr/'\n",

" \n",

" years = list(np.arange(start_year, end_year+1, 1))\n",

" months = [\"01\", \"02\", \"03\", \"04\", \"05\", \"06\", \"07\", \"08\", \"09\", \"10\", \"11\", \"12\"]\n",

" \n",

" l = []\n",

" for var in variables:\n",

" print(var)\n",

" \n",

" # Get files\n",

" files_mapper = [s3open(file_pattern.format(year=year, month=month, var=var)) for year in years for month in months]\n",

" \n",

" # Look up correct time dimension by variable name\n",

" if var in ['precipitation_amount_1hour_Accumulation']:\n",

" concat_dim='time1'\n",

" else:\n",

" concat_dim='time0'\n",

" \n",

" # Lazy load\n",

" ds = xr.open_mfdataset(files_mapper, engine='zarr', \n",

" concat_dim=concat_dim, combine='nested', \n",

" coords='minimal', compat='override', parallel=True)\n",

" \n",

" # Fix dimension names\n",

" ds = fix_accum_var_dims(ds, var)\n",

" l.append(ds)\n",

" \n",

" ds_out = xr.merge(l)\n",

" \n",

" return ds_out\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"ds = open_era5_range(2018, 2020, [\"sea_surface_temperature\"])\n",

"\n",

"ds"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%%time\n",

"\n",

"ds.sea_surface_temperature[0,:,:].plot()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"ds.sea_surface_temperature.sel(lon=130,lat=20).plot()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.6"

}

},

"nbformat": 4,

"nbformat_minor": 4

}