{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### New to Plotly?\n",

"Plotly's Python library is free and open source! [Get started](https://plotly.com/python/getting-started/) by downloading the client and [reading the primer](https://plotly.com/python/getting-started/).\n",

"\n",

"You can set up Plotly to work in [online](https://plotly.com/python/getting-started/#initialization-for-online-plotting) or [offline](https://plotly.com/python/getting-started/#initialization-for-offline-plotting) mode, or in [Jupyter notebooks](https://plotly.com/python/getting-started/#start-plotting-online).\n",

"\n",

"We also have a quick-reference [cheatsheet](https://images.plot.ly/plotly-documentation/images/python_cheat_sheet.pdf) (new!) to help you get started!\n",

"#### Version Check\n",

"Plotly's Python package is updated frequently. Run `pip install plotly --upgrade` to use the latest version."

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"'2.0.1'"

]

},

"execution_count": 1,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"import plotly\n",

"plotly.__version__"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### What is BigQuery?\n",

"BigQuery is a Google service for analyzing massive datasets. You query the data with a SQL-like language, and you can host your own data on BigQuery to take advantage of its fast performance at scale."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Google BigQuery Public Datasets\n",

"\n",

"There are [a few datasets](https://cloud.google.com/bigquery/public-data/) stored in BigQuery that are available for the general public to use. Some of them are:\n",

"- Hacker News (stories and comments)\n",

"- USA Baby Names\n",

"- GitHub activity data\n",

"- USA disease surveillance"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will use the [Hacker News](https://cloud.google.com/bigquery/public-data/hacker-news) dataset for our analysis."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Imports"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"import plotly.plotly as py\n",

"import plotly.graph_objs as go\n",

"import plotly.figure_factory as ff\n",

"\n",

"import pandas as pd\n",

"from pandas.io import gbq # to communicate with Google BigQuery"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Prerequisites\n",

"\n",

"You need to have the following libraries:\n",

"* [python-gflags](http://code.google.com/p/python-gflags/)\n",

"* httplib2\n",

"* google-api-python-client\n",

"\n",

"#### Create Project\n",

"\n",

"A project can be created on the [Google Developer Console](https://console.developers.google.com/iam-admin/projects).\n",

"\n",

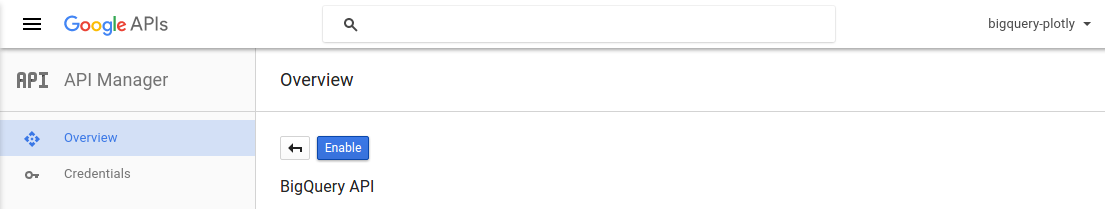

"#### Enable BigQuery API\n",

"\n",

"You need to activate the BigQuery API for the project."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

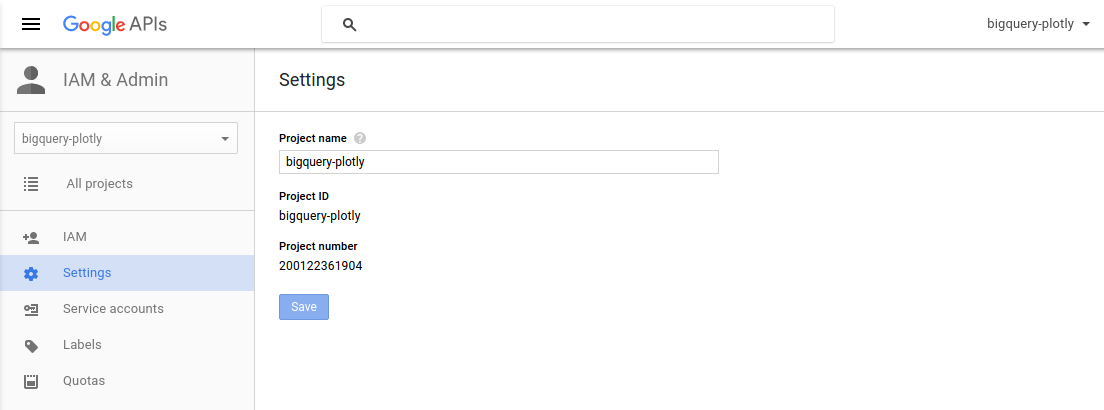

"You will have find the `Project ID` for your project to get the queries working.\n",

"\n",

""

]

},

{
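
"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# Replace with the Project ID of your own Google Cloud project\n",

"project_id = 'bigquery-plotly'"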

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Top 10 Most Active Users on Hacker News (by total stories submitted)\n",

"\n",

"We will select the 10 `author`s who submitted the most stories, along with the number of stories each one submitted."

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"top10_active_users_query = \"\"\"\n",

"SELECT\n",

" author AS User,\n",

" count(author) as Stories\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"GROUP BY\n",

" User\n",

"ORDER BY\n",

" Stories DESC\n",

"LIMIT\n",

" 10\n",

"\"\"\""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The `pandas.io.gbq` module provides the `read_gbq` function, which runs a query against a dataset stored in BigQuery and returns the result as a `DataFrame`."

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"scrolled": true

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

" Elapsed 8.74 s. Waiting...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

" Got page: 1; 100.0% done. Elapsed 9.36 s.\n",

"Got 10 rows.\n",

"\n",

"Total time taken 9.37 s.\n",

"Finished at 2016-07-19 17:28:38.\n"

]

}

],

"source": [

"try:\n",

"    top10_active_users_df = gbq.read_gbq(top10_active_users_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Using the `create_table` function from the `figure_factory` module, we can generate a table from the resulting `DataFrame`."

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 7,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"top_10_users_table = ff.create_table(top10_active_users_df)\n",

"py.iplot(top_10_users_table, filename='top-10-active-users')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Top 10 Hacker News Submissions (by score)\n",

"\n",

"We will select the `title`, `score`, and `time_ts` columns, sort the stories by `score` in descending order, and keep only the top 10."

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"top10_story_query = \"\"\"\n",

"SELECT\n",

" title,\n",

" score,\n",

" time_ts AS timestamp\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"ORDER BY\n",

" score DESC\n",

"LIMIT\n",

" 10\n",

"\"\"\""

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {

"scrolled": true

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

" Elapsed 13.54 s. Waiting...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

" Got page: 1; 100.0% done. Elapsed 14.34 s.\n",

"Got 10 rows.\n",

"\n",

"Total time taken 14.34 s.\n",

"Finished at 2016-07-19 17:28:57.\n"

]

}

],

"source": [

"try:\n",

"    top10_story_df = gbq.read_gbq(top10_story_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# Create a table figure from the DataFrame\n",

"top10_story_figure = ff.create_table(top10_story_df)\n",

"\n",

"# Scatter trace for the bubble chart timeseries\n",

"story_timeseries_trace = go.Scatter(\n",

" x=top10_story_df['timestamp'],\n",

" y=top10_story_df['score'],\n",

" xaxis='x2',\n",

" yaxis='y2',\n",

" mode='markers',\n",

" text=top10_story_df['title'],\n",

" marker=dict(\n",

" color=[80 + i*5 for i in range(10)],\n",

" size=top10_story_df['score']/50,\n",

" showscale=False\n",

" )\n",

")\n",

"\n",

"# Add the trace data to the figure\n",

"top10_story_figure['data'].extend(go.Data([story_timeseries_trace]))\n",

"\n",

"# Subplot layout\n",

"top10_story_figure.layout.yaxis.update({'domain': [0, .45]})\n",

"top10_story_figure.layout.yaxis2.update({'domain': [.6, 1]})\n",

"\n",

"# Y-axis of the graph should be anchored with X-axis\n",

"top10_story_figure.layout.yaxis2.update({'anchor': 'x2'})\n",

"top10_story_figure.layout.xaxis2.update({'anchor': 'y2'})\n",

"\n",

"# Add the height and title attribute\n",

"top10_story_figure.layout.update({'height':900})\n",

"top10_story_figure.layout.update({'title': 'Highest Scoring Submissions on Hacker News'})\n",

"\n",

"# Update the background color for plot and paper\n",

"top10_story_figure.layout.update({'paper_bgcolor': 'rgb(243, 243, 243)'})\n",

"top10_story_figure.layout.update({'plot_bgcolor': 'rgb(243, 243, 243)'})\n",

"\n",

"# Add the margin to make subplot titles visible\n",

"top10_story_figure.layout.margin.update({'t':75, 'l':50})\n",

"top10_story_figure.layout.yaxis2.update({'title': 'Upvote Score'})\n",

"top10_story_figure.layout.xaxis2.update({'title': 'Post Time'})"

]

},

{

"cell_type": "code",

"execution_count": 39,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 39,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"py.image.save_as(top10_story_figure, filename='top10-posts.png')\n",

"py.iplot(top10_story_figure, filename='highest-scoring-submissions')"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"You can see that the list consists of stories involving some big names:\n",

"* The deaths of Steve Jobs and Aaron Swartz\n",

"* The announcements of the Hyperloop and the game 2048\n",

"* Microsoft open-sourcing .NET\n",

"\n",

"The story title is visible when you hover over the bubbles."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Which top-level domain (TLD) do most of the stories come from?\n",

"Here we use the URL function [TLD](https://cloud.google.com/bigquery/query-reference#tld) from BigQuery's query syntax. We extract the top-level domain of each story URL, group the stories by it, and count the stories in each group."

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"tld_share_query = \"\"\"\n",

"SELECT\n",

" TLD(url) AS domain,\n",

" count(score) AS stories\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"GROUP BY\n",

" domain\n",

"ORDER BY\n",

" stories DESC\n",

"LIMIT 10\n",

"\"\"\""

]

},

{

"cell_type": "code",

"execution_count": 13,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

" Got page: 1; 100.0% done. Elapsed 7.09 s.\n",

"Got 10 rows.\n",

"\n",

"Total time taken 7.09 s.\n",

"Finished at 2016-07-19 17:29:10.\n"

]

}

],

"source": [

"try:\n",

"    tld_share_df = gbq.read_gbq(tld_share_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 38,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 38,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"labels = tld_share_df['domain']\n",

"values = tld_share_df['stories']\n",

"\n",

"tld_share_trace = go.Pie(labels=labels, values=values)\n",

"data = [tld_share_trace]\n",

"\n",

"layout = go.Layout(\n",

"    title='Share of Submissions by Top-Level Domain'\n",

")\n",

"\n",

"fig = go.Figure(data=data, layout=layout)\n",

"py.iplot(fig)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can see that the **.com** top-level domain accounts for most of the stories on Hacker News.\n",

"\n",

"#### Public response to the \"Who Is Hiring?\" posts\n",

"There is a Hacker News account named [whoishiring](https://news.ycombinator.com/user?id=whoishiring). It automatically submits a 'Who is Hiring?' post at 11 AM Eastern time on the first weekday of every month. We select these posts and plot their scores over time."

]

},

{

"cell_type": "code",

"execution_count": 16,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"wih_query = \"\"\"\n",

"SELECT\n",

" id,\n",

" title,\n",

" score,\n",

" time_ts\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"WHERE\n",

" author == 'whoishiring' AND\n",

" LOWER(title) contains 'who is hiring?'\n",

"ORDER BY\n",

" time\n",

"\"\"\""

]

},

{

"cell_type": "code",

"execution_count": 17,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

"Got 52 rows.\n",

"\n",

"Total time taken 4.73 s.\n",

"Finished at 2016-07-19 17:29:19.\n"

]

}

],

"source": [

"try:\n",

"    wih_df = gbq.read_gbq(wih_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 37,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 37,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"trace = go.Scatter(\n",

" x=wih_df['time_ts'],\n",

" y=wih_df['score'],\n",

" mode='markers+lines',\n",

" text=wih_df['title'],\n",

" marker=dict(\n",

" size=wih_df['score']/50\n",

" )\n",

")\n",

"\n",

"layout = go.Layout(\n",

" title='Public response to the \"Who Is Hiring?\" posts',\n",

" xaxis=dict(\n",

" title=\"Post Time\"\n",

" ),\n",

" yaxis=dict(\n",

" title=\"Upvote Score\"\n",

" )\n",

")\n",

"\n",

"data = [trace]\n",

"\n",

"fig = go.Figure(data=data, layout=layout)\n",

"py.iplot(fig, filename='whoishiring-public-response')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Submission Traffic Volume in a Week"

]

},

{

"cell_type": "code",

"execution_count": 19,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"week_traffic_query = \"\"\"\n",

"SELECT\n",

" DAYOFWEEK(time_ts) as Weekday,\n",

" count(DAYOFWEEK(time_ts)) as story_counts\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"GROUP BY\n",

" Weekday\n",

"ORDER BY\n",

" Weekday\n",

"\"\"\""

]

},

{

"cell_type": "code",

"execution_count": 20,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

"Got 8 rows.\n",

"\n",

"Total time taken 5.26 s.\n",

"Finished at 2016-07-19 17:29:29.\n"

]

}

],

"source": [

"try:\n",

"    week_traffic_df = gbq.read_gbq(week_traffic_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 36,

"metadata": {

"scrolled": false

},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 36,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [
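
"# DAYOFWEEK returns 1 (Sunday) through 7 (Saturday); the first row holds stories with a NULL timestamp\n",

"week_traffic_df['Day'] = ['NULL', 'Sunday', 'Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday']\n",

"# Drop the NULL row before plotting\n",

"week_traffic_df = week_traffic_df.drop(week_traffic_df.index[0])\n",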

"\n",

"trace = go.Scatter(\n",

" x=week_traffic_df['Day'],\n",

" y=week_traffic_df['story_counts'],\n",

" mode='lines',\n",

" text=week_traffic_df['Day']\n",

")\n",

"\n",

"layout = go.Layout(\n",

" title='Submission Traffic Volume (Week Days)',\n",

" xaxis=dict(\n",

" title=\"Day of the Week\"\n",

" ),\n",

" yaxis=dict(\n",

" title=\"Total Submissions\"\n",

" )\n",

")\n",

"\n",

"data = [trace]\n",

"\n",

"fig = go.Figure(data=data, layout=layout)\n",

"py.iplot(fig, filename='submission-traffic-volume')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can observe that Hacker News receives fewer submissions on weekends.\n",

"\n",

"#### Programming Language Trend on Hacker News\n",

"We will compare the trends for the Python and PHP programming languages by counting, for each year, the stories whose titles mention each language."

]

},

{

"cell_type": "code",

"execution_count": 24,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"python_query = \"\"\"\n",

"SELECT\n",

" YEAR(time_ts) as years,\n",

" COUNT(YEAR(time_ts )) as trends\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"WHERE\n",

" LOWER(title) contains 'python'\n",

"GROUP BY\n",

" years\n",

"ORDER BY\n",

" years\n",

"\"\"\"\n",

"\n",

"php_query = \"\"\"\n",

"SELECT\n",

" YEAR(time_ts) as years,\n",

" COUNT(YEAR(time_ts )) as trends\n",

"FROM\n",

" [fh-bigquery:hackernews.stories]\n",

"WHERE\n",

" LOWER(title) contains 'php'\n",

"GROUP BY\n",

" years\n",

"ORDER BY\n",

" years\n",

"\"\"\""

]

},

{

"cell_type": "code",

"execution_count": 25,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

" Elapsed 10.07 s. Waiting...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

" Got page: 1; 100.0% done. Elapsed 10.92 s.\n",

"Got 9 rows.\n",

"\n",

"Total time taken 10.93 s.\n",

"Finished at 2016-07-19 17:29:44.\n"

]

}

],

"source": [

"try:\n",

"    python_df = gbq.read_gbq(python_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 26,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Requesting query... ok.\n",

"Query running...\n",

" Elapsed 9.28 s. Waiting...\n",

"Query done.\n",

"Cache hit.\n",

"\n",

"Retrieving results...\n",

" Got page: 1; 100.0% done. Elapsed 9.91 s.\n",

"Got 9 rows.\n",

"\n",

"Total time taken 9.92 s.\n",

"Finished at 2016-07-19 17:29:54.\n"

]

}

],

"source": [

"try:\n",

"    php_df = gbq.read_gbq(php_query, project_id=project_id)\n",

"except Exception:\n",

"    print('Error reading the dataset')"

]

},

{

"cell_type": "code",

"execution_count": 35,

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

""

],

"text/plain": [

""

]

},

"execution_count": 35,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"trace1 = go.Scatter(\n",

" x=python_df['years'],\n",

" y=python_df['trends'],\n",

" mode='lines',\n",

" line=dict(color='rgba(115,115,115,1)', width=4),\n",

" connectgaps=True,\n",

")\n",

"\n",

"trace2 = go.Scatter(\n",

" x=[python_df['years'][0], python_df['years'][8]],\n",

" y=[python_df['trends'][0], python_df['trends'][8]],\n",

" mode='markers',\n",

" marker=dict(color='rgba(115,115,115,1)', size=8)\n",

")\n",

"\n",

"trace3 = go.Scatter(\n",

" x=php_df['years'],\n",

" y=php_df['trends'],\n",

" mode='lines',\n",

" line=dict(color='rgba(189,189,189,1)', width=4),\n",

" connectgaps=True,\n",

")\n",

"\n",

"trace4 = go.Scatter(\n",

" x=[php_df['years'][0], php_df['years'][8]],\n",

" y=[php_df['trends'][0], php_df['trends'][8]],\n",

" mode='markers',\n",

" marker=dict(color='rgba(189,189,189,1)', size=8)\n",

")\n",

"\n",

"traces = [trace1, trace2, trace3, trace4]\n",

"\n",

"layout = go.Layout(\n",

" xaxis=dict(\n",

" showline=True,\n",

" showgrid=False,\n",

" showticklabels=True,\n",

" linecolor='rgb(204, 204, 204)',\n",

" linewidth=2,\n",

" autotick=False,\n",

" ticks='outside',\n",

" tickcolor='rgb(204, 204, 204)',\n",

" tickwidth=2,\n",

" ticklen=5,\n",

" tickfont=dict(\n",

" family='Arial',\n",

" size=12,\n",

" color='rgb(82, 82, 82)',\n",

" ),\n",

" ),\n",

" yaxis=dict(\n",

" showgrid=False,\n",

" zeroline=False,\n",

" showline=False,\n",

" showticklabels=False,\n",

" ),\n",

" autosize=False,\n",

" margin=dict(\n",

" autoexpand=False,\n",

" l=100,\n",

" r=20,\n",

" t=110,\n",

" ),\n",

" showlegend=False,\n",

")\n",

"\n",

"annotations = []\n",

"\n",

"annotations.append(\n",

" dict(xref='paper', x=0.95, y=python_df['trends'][8],\n",

" xanchor='left', yanchor='middle',\n",

" text='Python',\n",

" font=dict(\n",

" family='Arial',\n",

" size=14,\n",

" color='rgba(49,130,189, 1)'\n",

" ),\n",

" showarrow=False)\n",

")\n",

"\n",

"annotations.append(\n",

" dict(xref='paper', x=0.95, y=php_df['trends'][8],\n",

" xanchor='left', yanchor='middle',\n",

" text='PHP',\n",

" font=dict(\n",

" family='Arial',\n",

" size=14,\n",

" color='rgba(49,130,189, 1)'\n",

" ),\n",

" showarrow=False)\n",

")\n",

"\n",

"annotations.append(\n",

" dict(xref='paper', yref='paper', x=0.5, y=-0.1,\n",

" xanchor='center', yanchor='top',\n",

" text='Source: Hacker News submissions with the title containing Python/PHP',\n",

" font=dict(\n",

" family='Arial',\n",

" size=12,\n",

" color='rgb(150,150,150)'\n",

" ),\n",

" showarrow=False)\n",

")\n",

"\n",

"layout['annotations'] = annotations\n",

"\n",

"fig = go.Figure(data=traces, layout=layout)\n",

"py.iplot(fig, filename='programming-language-trends')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"As expected, Python dominates PHP throughout the timespan."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Reference \n",

"See https://plotly.com/python/getting-started/ for more information about Plotly's Python Open Source Graphing Library!"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 2",

"language": "python",

"name": "python2"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 2

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython2",

"version": "2.7.10"

}

},

"nbformat": 4,

"nbformat_minor": 1

}