{

"cells": [

{

"cell_type": "markdown",

"id": "medical-girlfriend",

"metadata": {},

"source": [

"# [Distributed statistical inference with `pyhf`](https://indico.cern.ch/event/1019958/contributions/4418598/)"

]

},

{

"cell_type": "markdown",

"id": "c43090d4-93d0-4f18-bead-a407b9c5aa9f",

"metadata": {},

"source": [

"## Cursorary introduction of `pyhf`"

]

},

{

"cell_type": "markdown",

"id": "704fae01-d281-4136-9376-561b2cb57b78",

"metadata": {},

"source": [

"For the sake of brevity and time, we won't go into a full discussion of what `pyhf` is and what you can do with it. For now we'll point you to the [latest `pyhf` tutorial for `pyhf` `v0.6.2`](https://github.com/pyhf/pyhf-tutorial/tree/786702385e003511bbce27773c48df8769dfcfcb) as well as our vCHEP 2021 talk: [Distributed statistical inference with `pyhf` enabled through `funcX`](https://indico.cern.ch/event/948465/contributions/4324013/).\n",

"\n",

"Very shortly though, `pyhf` is a pure-Python implimentation of the `HistFactory` family of statistical models that through optional computational backends like JAX provides autodifferentiation and hardware acceleration on GPUs. `pyhf` is part of Scikit-HEP and is designed to have a clear Pythonic API with the goal of making it easier and clearer to produce and interpret binned models.\n",

"\n",

"Taking an example from the `pyhf` project `README`, this is all the code that is needed to build a simple 1-bin model and then to perform a hypothesis test scan across multiple parameters of interest (POIs), plot those results, and inverting that determine the 95% CL upper limit on the POI value."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "798befe8-afab-410b-9a5d-58e5d03ec321",

"metadata": {},

"outputs": [],

"source": [

"import matplotlib.pyplot as plt\n",

"import numpy as np\n",

"import pyhf\n",

"from pyhf.contrib.viz import brazil"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "1adba4b9-37a5-47d1-b2e8-22f68d3ba4fa",

"metadata": {},

"outputs": [],

"source": [

"pyhf.set_backend(\"numpy\")\n",

"model = pyhf.simplemodels.uncorrelated_background(\n",

" signal=[10.0], bkg=[50.0], bkg_uncertainty=[7.0]\n",

")\n",

"data = [55.0] + model.config.auxdata\n",

"\n",

"poi_vals = np.linspace(0, 5, 41)\n",

"results = [\n",

" pyhf.infer.hypotest(\n",

" test_poi, data, model, test_stat=\"qtilde\", return_expected_set=True\n",

" )\n",

" for test_poi in poi_vals\n",

"]\n",

"\n",

"fig, ax = plt.subplots()\n",

"fig.set_size_inches(7, 5)\n",

"plot = brazil.plot_results(poi_vals, results, ax=ax)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "24edc867-ea13-4cfe-9c31-80947bea9121",

"metadata": {},

"outputs": [],

"source": [

"obs_limit, exp_limits, (scan, results) = pyhf.infer.intervals.upperlimit(\n",

" data, model, poi_vals, return_results=True\n",

")\n",

"print(f\"observed limit: {obs_limit}\")"

]

},

{

"cell_type": "markdown",

"id": "f2e17a9d-8c97-4b4b-bbd0-1f022710d09e",

"metadata": {},

"source": [

"The important part to emphasize for the purposes of this notebook talk though is just that `pyhf` allows for **statistical modelling of binned models** and allows for **fast fitting using Pythonic APIs**."

]

},

{

"cell_type": "markdown",

"id": "40cf0b58-45f5-4083-95b0-e6e026df588d",

"metadata": {},

"source": [

"## Introduction to [`funcX`](https://funcx.readthedocs.io/en/latest/) - High Performance Function Serving"

]

},

{

"cell_type": "markdown",

"id": "797ca63b-c85b-4da5-9c74-c5146fe09f70",

"metadata": {},

"source": [

"* `funcX` is a high-performance Function as a Service (FaaS) platform\n",

"* Designed to orchestrate scientific workloads across **heterogeneous computing resources** (clusters, clouds, and supercomputers) and **task execution providers** (HTCondor, Slurm, Torque, and Kubernetes)\n",

"* Leverages [Parsl](https://parsl.readthedocs.io/en/stable/) (flexible and scalable parallel programming library for Python) for efficient parallelism and managing concurrent task execution"

]

},

{

"cell_type": "markdown",

"id": "3df87df3-cfc0-4111-b770-4a99a260034a",

"metadata": {},

"source": [

"**`funcX` endpoints** are logical entities that represent a specified computer resource.\n",

"\n",

"* Managed by an agent process allowing the `funcX` service to dispatch **user defined functions** to resources for execution\n",

"\n",

"The agent handles:\n",

"- Authentication through (Globus) and authorization\n",

"- Provisioning of nodes on the compute resource\n",

"- Monitoring and management\n",

"\n",

"We'll see a bit more in a little bit"

]

},

{

"cell_type": "markdown",

"id": "jewish-statistics",

"metadata": {},

"source": [

"## Demo of `funcX`"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "negative-cherry",

"metadata": {},

"outputs": [],

"source": [

"from time import sleep\n",

"\n",

"import funcx\n",

"from funcx.sdk.client import FuncXClient"

]

},

{

"cell_type": "markdown",

"id": "noticed-variable",

"metadata": {},

"source": [

"### Endpoint Creation (On execution machine)"

]

},

{

"cell_type": "markdown",

"id": "european-comedy",

"metadata": {},

"source": [

"With the `funcx-endpoint` CLI API"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "mighty-canberra",

"metadata": {},

"outputs": [],

"source": [

"! funcx-endpoint --help"

]

},

{

"cell_type": "markdown",

"id": "clean-belize",

"metadata": {},

"source": [

"you need to create a template environment for your endpoint."

]

},

{

"cell_type": "markdown",

"id": "sunrise-brazilian",

"metadata": {},

"source": [

"```\n",

"$ funcx-endpoint configure pyhf\n",

"```"

]

},

{

"cell_type": "markdown",

"id": "alien-motivation",

"metadata": {},

"source": [

"Which will create a default `funcX` configuration file at `~/.funcx/pyhf/config.py`."

]

},

{

"cell_type": "markdown",

"id": "numerical-stuart",

"metadata": {},

"source": [

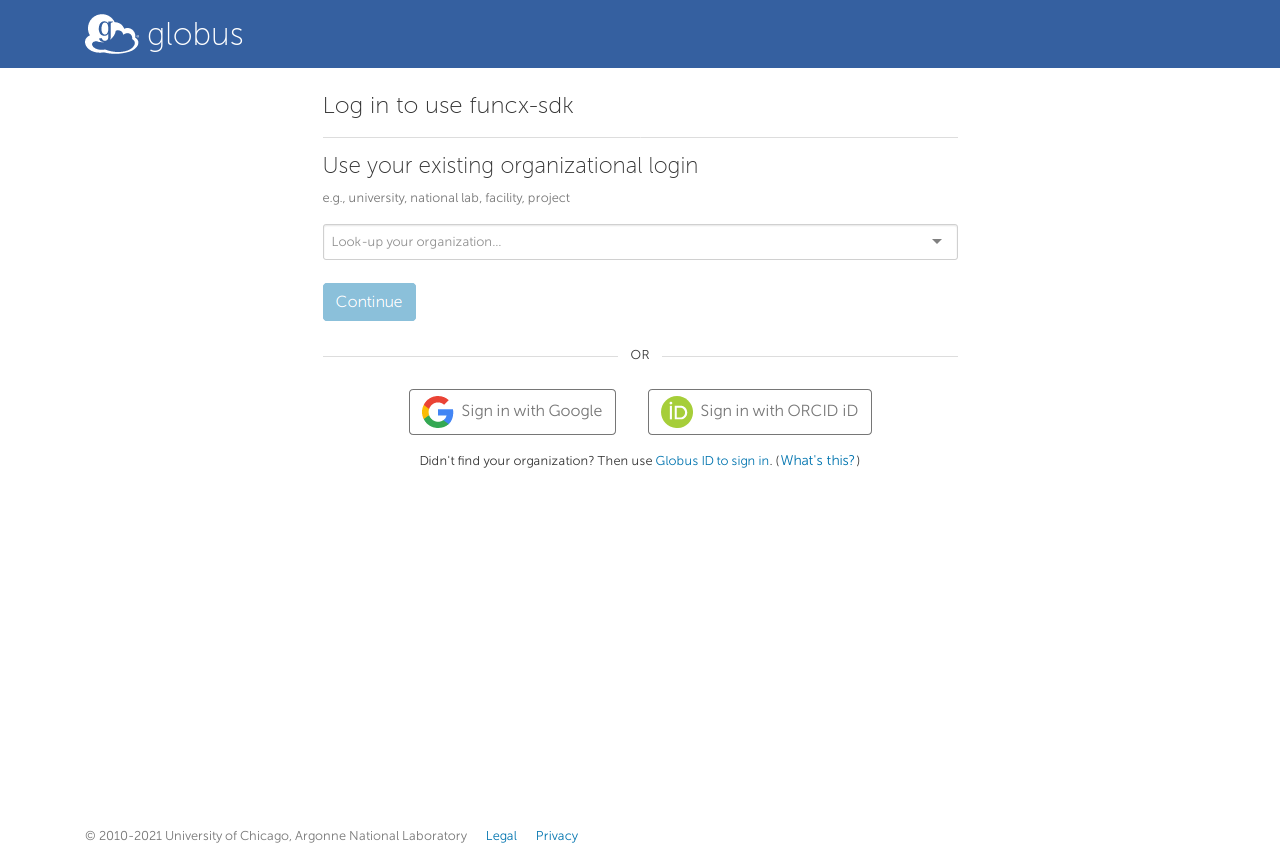

"1. Note that `funcX` requires the use of [Gloubs](https://www.globus.org/) and so will require you to first login to a Globus account to use the `funcx-sdk`. Globus allows authentication through existing organizational logins or through Google accounts or [ORCID iD](https://orcid.org/) so this shouldn't be a barrier to use.\n",

"

\n",

"\n",

"

\n",

"2. Once you authenticate with Globus you'll then need to approve the `funcx-sdk`'s required permissions and you'll be given a time limited authorization code.\n",

"3. Copy this code and paste it back into your terminal you ran `funcx-endpoint configure pyhf` in where you're asked to \"Please Paste your Auth Code Below\""

]

},

{

"cell_type": "markdown",

"id": "spatial-toddler",

"metadata": {},

"source": [

"Upon success you'll see\n",

"\n",

"```\n",

"A default profile has been create for at /home/jovyan/.funcx/pyhf/config.py\n",

"Configure this file and try restarting with:\n",

" $ funcx-endpoint start pyhf\n",

"```"

]

},

{

"cell_type": "markdown",

"id": "possible-parallel",

"metadata": {},

"source": [

"> If you're following along you'll want to switch over to a terminal to make this part easier"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "breathing-excerpt",

"metadata": {},

"outputs": [],

"source": [

"! echo \"funcx-endpoint configure pyhf\"\n",

"! ls -l ~/.funcx/pyhf/config.py"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "narrative-stationery",

"metadata": {},

"outputs": [],

"source": [

"! cat ~/.funcx/pyhf/config.py"

]

},

{

"cell_type": "markdown",

"id": "institutional-matrix",

"metadata": {},

"source": [

"We'll go a step further though and use a prepared `funcX` configuration found under `funcX/binder-config.py`."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "detected-dialogue",

"metadata": {},

"outputs": [],

"source": [

"! cp funcX/binder-config.py ~/.funcx/pyhf/config.py"

]

},

{

"cell_type": "markdown",

"id": "e3a54f1b-437d-4f4c-a5da-197b5a07be18",

"metadata": {},

"source": [

"and look at it again"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "28f49534-5d0a-45e5-ab75-c1300d43a6b2",

"metadata": {},

"outputs": [],

"source": [

"! cat ~/.funcx/pyhf/config.py"

]

},

{

"cell_type": "markdown",

"id": "b9bcf603-0052-44c8-ad56-194ed81adc8d",

"metadata": {},

"source": [

"Let's break down some relevant information from Parsl\n",

"\n",

"* [`block`](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#blocks): Basic unit of resources acquired from a provider\n",

"* [`max_blocks`](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#elasticity): Maximum number of blocks that can be active per executor\n",

"* [`nodes_per_block`](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#blocks): Number of nodes requested per block\n",

"* [`parallelism`](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#parallelism): Ratio of task execution capacity to the sum of running tasks and available tasks"

]

},

{

"cell_type": "markdown",

"id": "f399f382-84b2-4a14-9fc5-6cd0629dda64",

"metadata": {},

"source": [

"And let's quickly consider this example from the [Parsl docs](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#configuration) that `funcX` extends\n",

"\n",

"```python\n",

"from parsl.config import Config\n",

"from libsubmit.providers.local.local import Local\n",

"from parsl.executors import HighThroughputExecutor\n",

"\n",

"config = Config(\n",

" executors=[\n",

" HighThroughputExecutor(\n",

" label='local_htex',\n",

" workers_per_node=2,\n",

" provider=Local(\n",

" min_blocks=1,\n",

" init_blocks=1,\n",

" max_blocks=2,\n",

" nodes_per_block=1,\n",

" parallelism=0.5\n",

" )\n",

" )\n",

" ]\n",

")\n",

"```\n",

"\n",

"[](https://parsl.readthedocs.io/en/1.1.0/userguide/execution.html#configuration)\n",

"\n",

"

\n",

"**What's happening in the GIF above**:\n",

"\n",

"* `9` taks to compute\n",

"* Tasks are allocated to the first block until its task_capacity (here `4` tasks) reached\n",

"* Task `5`: First block full and `5/9` > `parallelism` so Parsl provisions a new block for executing the remaining tasks"

]

},
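
{

"cell_type": "markdown",

"id": "parsl-scaling-sketch",

"metadata": {},

"source": [

"To make the arithmetic in this example concrete, here is a minimal sketch of the scaling rule. It assumes a simplified model, not Parsl's actual implementation: the number of task slots wanted is the task count scaled by `parallelism`, and the number of blocks is whatever provides that many slots, clamped to the range from `min_blocks` to `max_blocks`. The helper name `blocks_needed` and its arguments are hypothetical.\n",

"\n",

"```python\n",

"import math\n",

"\n",

"\n",

"def blocks_needed(n_tasks, parallelism, task_capacity, min_blocks=1, max_blocks=2):\n",

"    # Slots wanted: a fraction `parallelism` of the outstanding tasks\n",

"    required_slots = math.ceil(n_tasks * parallelism)\n",

"    # Blocks needed to provide that many slots\n",

"    required_blocks = math.ceil(required_slots / task_capacity)\n",

"    # Never go below min_blocks or above max_blocks\n",

"    return max(min_blocks, min(required_blocks, max_blocks))\n",

"\n",

"\n",

"# 9 tasks, parallelism of 0.5, and 4 task slots per block, as in the example\n",

"print(blocks_needed(n_tasks=9, parallelism=0.5, task_capacity=4))  # prints 2\n",

"```\n",

"\n",

"Lowering `parallelism` in this model makes the executor request fewer slots per outstanding task, so fewer blocks are provisioned for the same workload."

]

},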

{

"cell_type": "markdown",

"id": "unusual-lottery",

"metadata": {},

"source": [

"Okay, now we'll start the endpoint"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "gross-senior",

"metadata": {},

"outputs": [],

"source": [

"! funcx-endpoint start pyhf"

]

},

{

"cell_type": "markdown",

"id": "residential-rehabilitation",

"metadata": {},

"source": [

"and you can verify that it is registered and up"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "modern-graphics",

"metadata": {},

"outputs": [],

"source": [

"! funcx-endpoint list"

]

},

{

"cell_type": "markdown",

"id": "leading-tuition",

"metadata": {},

"source": [

"**N.B.**: You'll want to take careful note of this `uuid` as this is the endpoint ID that you'll have your `funcX` code use.\n",

"\n",

"A good way to deal with this is to save it in a `endpoint_id.txt` file that is ignored from version control."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "alternative-freeware",

"metadata": {},

"outputs": [],

"source": [

"! funcx-endpoint list | grep pyhf | awk '{print $(NF-1)}' > endpoint_id.txt\n",

"! cat endpoint_id.txt"

]

},

{

"cell_type": "markdown",

"id": "crude-pavilion",

"metadata": {},

"source": [

"## Using funcX for (Fitting) Functions as a Service (FaaS)"

]

},

{

"cell_type": "markdown",

"id": "df5ef8d6-7658-4139-8faf-781c4123b403",

"metadata": {},

"source": [

"To keep this as easy as possible to follow along with, we've done something that isn't very practical: We setup our `funcx` endpoint locally (this is probably not where your dedicate compute will be, but for demonstration purposes we'll pretend that our `funcx-endpoint` lives on another machine/cluster someplace)."

]

},

{

"cell_type": "markdown",

"id": "religious-editor",

"metadata": {},

"source": [

"### Prepare Functions (On your local submission machine)"

]

},

{

"cell_type": "markdown",

"id": "df6ceb29-753c-45c2-be7f-c366381d5868",

"metadata": {},

"source": [

"Locally we can now write our code that we'd like `funcX` to run for us **as functions** (remember FaaS)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "collectible-movie",

"metadata": {},

"outputs": [],

"source": [

"def simple_example(backend=\"numpy\", test_poi=1.0):\n",

" import time\n",

"\n",

" import pyhf\n",

"\n",

" pyhf.set_backend(backend)\n",

"\n",

" tick = time.time()\n",

" model = pyhf.simplemodels.uncorrelated_background(\n",

" signal=[12.0, 11.0], bkg=[50.0, 52.0], bkg_uncertainty=[3.0, 7.0]\n",

" )\n",

"\n",

" data = model.expected_data(model.config.suggested_init())\n",

" return {\n",

" \"cls_obs\": float(\n",

" pyhf.infer.hypotest(test_poi, data, model, test_stat=\"qtilde\")\n",

" ),\n",

" \"fit-time\": time.time() - tick,\n",

" }"

]

},

{

"cell_type": "markdown",

"id": "770a8bac-43eb-475b-ae12-dda39b9099da",

"metadata": {},

"source": [

"The return is just a `dict` of the observed $\\mathrm{CL}_{s}$ value and the time to fit"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "czech-tooth",

"metadata": {},

"outputs": [],

"source": [

"simple_example()"

]

},

{

"cell_type": "markdown",

"id": "047441c1-6e25-446b-854f-99749c6b116a",

"metadata": {},

"source": [

"we can then initalize our local `funcX` client and **register** our function with it for execution"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "theoretical-words",

"metadata": {},

"outputs": [],

"source": [

"# Initialize funcX client\n",

"fxc = FuncXClient()\n",

"fxc.max_requests = 200"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "opposite-spending",

"metadata": {},

"outputs": [],

"source": [

"# register functions\n",

"infer_func = fxc.register_function(simple_example)"

]

},

{

"cell_type": "markdown",

"id": "16d35d97-d254-44ef-820d-0ec71d73e755",

"metadata": {},

"source": [

"With our functions registered we can now have the `funcx` client serialize and send them to the `funcx` endpoint (which can be on any machine anywhere!) to be sent out to the `funcx` worker nodes on the execution machine"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "noble-young",

"metadata": {},

"outputs": [],

"source": [

"with open(\"endpoint_id.txt\") as endpoint_file:\n",

" pyhf_endpoint = str(endpoint_file.read().rstrip())"

]

},
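
{

"cell_type": "markdown",

"id": "endpoint-id-check-sketch",

"metadata": {},

"source": [

"Because a mistyped endpoint ID only shows up as an error once a task is submitted, it can be worth checking the value read from the file first. This is a minimal sketch using only the Python standard library, assuming the ID is a standard UUID string as the earlier note about the `uuid` suggests; the helper name `is_valid_uuid` and the example ID are hypothetical.\n",

"\n",

"```python\n",

"import uuid\n",

"\n",

"\n",

"def is_valid_uuid(candidate):\n",

"    # uuid.UUID raises ValueError for strings that are not valid UUIDs\n",

"    try:\n",

"        uuid.UUID(candidate)\n",

"    except ValueError:\n",

"        return False\n",

"    return True\n",

"\n",

"\n",

"# Placeholder ID for illustration only, not a real endpoint\n",

"print(is_valid_uuid('01234567-89ab-cdef-0123-456789abcdef'))\n",

"print(is_valid_uuid('not-an-endpoint-id'))\n",

"```\n",

"\n",

"In this notebook the check would be applied as `is_valid_uuid(pyhf_endpoint)` before submitting any tasks."

]

},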

{

"cell_type": "code",

"execution_count": null,

"id": "naughty-discussion",

"metadata": {},

"outputs": [],

"source": [

"# Serialize and send to funcX ednpoint to run\n",

"task_id = fxc.run(\n",

" backend=\"numpy\", test_poi=1.0, endpoint_id=pyhf_endpoint, function_id=infer_func\n",

")"

]

},

{

"cell_type": "markdown",

"id": "ec565efe-5c13-4825-91fc-3c3fc812d022",

"metadata": {},

"source": [

"While that runs, we can now start to send queries from our local submission machine to the (remote) execution machine and check to see if the tasks we've submitted have finished execution"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "absent-culture",

"metadata": {},

"outputs": [],

"source": [

"# wait for it to run. Here this is super fast, but you'd want to setup a loop to check periodically\n",

"sleep(1)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "ecological-hostel",

"metadata": {},

"outputs": [],

"source": [

"# retrieve output\n",

"result = fxc.get_result(task_id)\n",

"result"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "identified-priest",

"metadata": {},

"outputs": [],

"source": [

"# Run a different test POI\n",

"task_id = fxc.run(\n",

" backend=\"numpy\", test_poi=2.0, endpoint_id=pyhf_endpoint, function_id=infer_func\n",

")\n",

"sleep(0.01)\n",

"try:\n",

" result = fxc.get_result(task_id)\n",

"except Exception as excep:\n",

" print(f\"inference: {excep}\")\n",

" sleep(2)\n",

"\n",

"result = fxc.get_result(task_id)\n",

"result"

]

},
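
{

"cell_type": "markdown",

"id": "polling-helper-sketch",

"metadata": {},

"source": [

"The `try`/`except` pattern above can be generalized into a small polling helper. This is a minimal sketch, assuming (as that pattern suggests) that fetching a result raises an exception while the task is still pending; `wait_for_result` and the stand-in `fake_get_result` are hypothetical and not part of the `funcX` SDK, and the returned value is made up for illustration.\n",

"\n",

"```python\n",

"import time\n",

"\n",

"\n",

"def wait_for_result(get_result, task_id, timeout=30.0, interval=0.5):\n",

"    # Poll until get_result stops raising or the timeout elapses\n",

"    deadline = time.monotonic() + timeout\n",

"    while True:\n",

"        try:\n",

"            return get_result(task_id)\n",

"        except Exception as excep:\n",

"            if time.monotonic() >= deadline:\n",

"                raise TimeoutError(f'task {task_id} not finished') from excep\n",

"            time.sleep(interval)\n",

"\n",

"\n",

"# Stand-in for fxc.get_result that is pending for the first two calls\n",

"calls = {'count': 0}\n",

"\n",

"\n",

"def fake_get_result(task_id):\n",

"    calls['count'] += 1\n",

"    if calls['count'] < 3:\n",

"        raise Exception('Task pending')\n",

"    return {'cls_obs': 0.05}\n",

"\n",

"\n",

"print(wait_for_result(fake_get_result, 'demo-task', timeout=5.0, interval=0.01))\n",

"```\n",

"\n",

"With a real client this would be called as `wait_for_result(fxc.get_result, task_id)`. Catching every exception also hides genuine task failures until the timeout, so a narrower exception type is preferable where the SDK provides one."

]

},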

{

"cell_type": "markdown",

"id": "serial-memorabilia",

"metadata": {},

"source": [

"## funcX endpoint shutdown (On execution machine)"

]

},

{

"cell_type": "markdown",

"id": "pleased-france",

"metadata": {},

"source": [

"To stop a funcX endpoint from running simple use the `funcx-endpoint` CLI API again"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "circular-reservation",

"metadata": {},

"outputs": [],

"source": [

"! funcx-endpoint stop pyhf\n",

"! funcx-endpoint list"

]

}

],

"metadata": {

"interpreter": {

"hash": "bc61916ede75e2919ff33a1a319d3383b922e98fc54d7ec17a2c0b8a4b2de96d"

},

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.7"

}

},

"nbformat": 4,

"nbformat_minor": 5

}