{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Agent, RL and MultiEnvironment\n",

"Try this notebook out interactively with: [](https://mybinder.org/v2/gh/rte-france/Grid2Op/master)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"It is recommended to have a look at the [00_Introduction](00_Introduction.ipynb), [01_Grid2opFramework](01_Grid2opFramework.ipynb) and [03_Action](03_Action.ipynb) and especially [04_TrainingAnAgent](04_TrainingAnAgent.ipynb) notebooks before getting into this one."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"**Objectives**\n",

"\n",

"In this notebook we will introduce :\n",

"* what is a \"SingleEnvMultiProcess\" (previously [MultiEnvironment](https://grid2op.readthedocs.io/en/v0.9.4/environment.html#grid2op.Environment.MultiEnvironment))\n",

"* how can it be used with an agent\n",

"* how can it be used to train a agent that uses different environments (using the [l2rpn-baselines](https://l2rpn-baselines.readthedocs.io/en/master/) python package)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

" \n",

"Execute the cell below by removing the # character if you use google colab !\n",

"\n",

"Cell will look like:\n",

"```python\n",

"!pip install grid2op[optional] # for use with google colab (grid2Op is not installed by default)\n",

"```\n",

"

\n",

"Execute the cell below by removing the # character if you use google colab !\n",

"\n",

"Cell will look like:\n",

"```python\n",

"!pip install grid2op[optional] # for use with google colab (grid2Op is not installed by default)\n",

"```\n",

" "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# !pip install grid2op[optional] # for use with google colab (grid2Op is not installed by default)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"res = None\n",

"try:\n",

" from jyquickhelper import add_notebook_menu\n",

" res = add_notebook_menu()\n",

"except ModuleNotFoundError:\n",

" print(\"Impossible to automatically add a menu / table of content to this notebook.\\nYou can download \\\"jyquickhelper\\\" package with: \\n\\\"pip install jyquickhelper\\\"\")\n",

"res"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import grid2op\n",

"from grid2op.Reward import ConstantReward, FlatReward\n",

"from tqdm.notebook import tqdm\n",

"from grid2op.Runner import Runner\n",

"import sys\n",

"import os\n",

"import numpy as np\n",

"TRAINING_STEP = 100"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## I) Make a regular environment and agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"By default we use the test environment. But by passing `test=False` in the following function will automatically download approximately 300MB from the internet and give you 1000 chronics instead of 2 used for this example."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"env = grid2op.make(\"rte_case14_realistic\", test=True)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"A lot of data have been made available for the default \"rte_case14_realistic\" environment. Including this data in the package is not convenient. \n",

"\n",

"We chose instead to release them and make them easily available with a utility. To download them in the default directory (\"~/data_grid2op/case14_redisp\") just pass the argument \"test=False\" (or don't pass anything else) as local=False is the default value. It will download approximately 300Mo of data."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## II) Train a standard RL Agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Make sure you are using a computer with at least 4 cores if you want to notice some speed-ups."

]

},
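{

"cell_type": "markdown",

"metadata": {},

"source": [

"Before choosing the number of worker environments, you can check how many cores your machine offers. Below is a minimal sketch that uses only the standard library `os.cpu_count()`. The helper `pick_nb_env` and its default of 4 workers are hypothetical choices made for illustration; they are not part of grid2op. This notebook keeps `NUM_CORE = 2` so that it also runs on small machines.\n",

"\n",

"```python\n",

"import os\n",

"\n",

"\n",

"def pick_nb_env(max_workers=4):\n",

"    # hypothetical helper: os.cpu_count() may return None if the count is unknown\n",

"    available = os.cpu_count() or 1\n",

"    # never ask for more workers than cores, and keep at least one worker\n",

"    return max(1, min(max_workers, available))\n",

"\n",

"\n",

"suggested_nb_env = pick_nb_env()\n",

"print('suggested number of worker environments:', suggested_nb_env)\n",

"```\n",

"\n",

"Using more worker environments than cores is unlikely to bring further speed-ups, since the workers would then compete for the same cores."

]

},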

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from grid2op.Environment import SingleEnvMultiProcess\n",

"from grid2op.Agent import DoNothingAgent\n",

"NUM_CORE = 2"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### IIIa) Using the standard open AI gym loop\n",

"\n",

"Here we demonstrate how to use the multi environment class. First let's create a multi environment."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# create a simple agent\n",

"agent = DoNothingAgent(env.action_space)\n",

"\n",

"# create the multi environment class\n",

"multi_envs = SingleEnvMultiProcess(env=env, nb_env=NUM_CORE)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"A multi environment is just like using a regular environment but instead of dealing with one action, and one observation, it requires to be sent multiple actions, and returns a list of observations as well. \n",

"\n",

"It requires a grid2op environment to be initialized and creates some specific \"workers\", each is a replication of the initial environment. None of the \"worker\"s can be accessed directly. Supported methods are:\n",

"- multi_env.reset\n",

"- multi_env.step\n",

"- multi_env.close\n",

"\n",

"These methods have similar behaviour as \"env.step\", \"env.close\" or \"env.reset\".\n",

"\n",

"\n",

"It can be used the following way:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# initiliaze some variable with the proper dimension\n",

"obss = multi_envs.reset()\n",

"rews = [env.reward_range[0] for i in range(NUM_CORE)]\n",

"dones = [False for i in range(NUM_CORE)]\n",

"obss"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dones"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"As you can see, obs is not a single obervation, but a list (numpy nd array to be precise) of 4 observations (if 4 cores were used, otherwise the length of the array is the number of cores), each one being an observation of a given \"worker\" environment.\n",

"\n",

"Worker environments are always called in the same order. It means the first observation of this vector will always correspond to the first worker environment. \n",

"\n",

"\n",

"Similarly to Observation, the \"step\" function of a multi_environment takes as input a list of multiple actions, each action will be implemented in its own environment. It returns a list of observations, a list of rewards, and boolean list of whether or not the worker environment suffers from a game over (in that case this worker environment is automatically restarted using the \"reset\" method.)\n",

"\n",

"Because orker environments are always called in the same order, the first action sent to the \"multi_env.step\" function will also be applied on this first environment.\n",

"\n",

"It is possible to use it as follow:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# initialize the vector of actions that will be processed by each worker environment.\n",

"acts = [None for _ in range(NUM_CORE)]\n",

"for env_act_id in range(NUM_CORE):\n",

" acts[env_act_id] = agent.act(obss[env_act_id], rews[env_act_id], dones[env_act_id])\n",

" \n",

"# feed them to the multi_env\n",

"obss, rews, dones, infos = multi_envs.step(acts)\n",

"\n",

"# as explained, this is a vector of Observation (as many as NUM_CORE in this example)\n",

"obss"

]

},
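{

"cell_type": "markdown",

"metadata": {},

"source": [

"To make this contract concrete without grid2op, here is a minimal, purely sequential sketch. `ToyEnv` and `ToyMultiEnv` are hypothetical classes written for illustration only. The real `SingleEnvMultiProcess` runs each worker in its own process, whereas this sketch steps the workers one after the other.\n",

"\n",

"The sketch reproduces the behaviour described above:\n",

"- one action per worker\n",

"- results returned in worker order\n",

"- automatic reset of a worker whose episode is over\n",

"\n",

"The sketch makes one assumption: the observation returned for a finished worker is the first observation of its new episode, as in OpenAI Baselines' `SubprocVecEnv`.\n",

"\n",

"```python\n",

"class ToyEnv:\n",

"    # hypothetical environment whose episodes last max_steps steps\n",

"    def __init__(self, max_steps):\n",

"        self.max_steps = max_steps\n",

"        self.t = 0\n",

"\n",

"    def reset(self):\n",

"        self.t = 0\n",

"        return self.t  # the observation is simply the step counter\n",

"\n",

"    def step(self, action):\n",

"        self.t += 1\n",

"        done = self.t >= self.max_steps\n",

"        reward = float(action)\n",

"        return self.t, reward, done, {}\n",

"\n",

"\n",

"class ToyMultiEnv:\n",

"    # hypothetical, sequential stand-in for a multi environment\n",

"    def __init__(self, envs):\n",

"        self.envs = list(envs)\n",

"\n",

"    def reset(self):\n",

"        return [env.reset() for env in self.envs]\n",

"\n",

"    def step(self, actions):\n",

"        if len(actions) != len(self.envs):\n",

"            raise ValueError('one action per worker environment is required')\n",

"        obss, rews, dones, infos = [], [], [], []\n",

"        # the i-th action is always sent to the i-th worker\n",

"        for env, act in zip(self.envs, actions):\n",

"            obs, rew, done, info = env.step(act)\n",

"            if done:\n",

"                # game over: restart this worker automatically\n",

"                obs = env.reset()\n",

"            obss.append(obs)\n",

"            rews.append(rew)\n",

"            dones.append(done)\n",

"            infos.append(info)\n",

"        return obss, rews, dones, infos\n",

"\n",

"    def close(self):\n",

"        self.envs = []\n",

"\n",

"\n",

"multi = ToyMultiEnv([ToyEnv(max_steps=2), ToyEnv(max_steps=3)])\n",

"print(multi.reset())       # [0, 0]\n",

"print(multi.step([1, 2]))  # ([1, 1], [1.0, 2.0], [False, False], [{}, {}])\n",

"print(multi.step([1, 2]))  # ([0, 2], [1.0, 2.0], [True, False], [{}, {}])\n",

"multi.close()\n",

"```\n",

"\n",

"On the second step, the first worker reaches the end of its episode, so its observation is that of a freshly reset environment, while its `done` flag is still reported as `True`. In the real class, each worker lives in its own process, which is what allows the power flows of the different workers to be computed in parallel."

]

},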

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The multi environment loop is really close to the \"gym\" loop:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# performs the appropriated steps\n",

"for i in range(10):\n",

" acts = [None for _ in range(NUM_CORE)]\n",

" for env_act_id in range(NUM_CORE):\n",

" acts[env_act_id] = agent.act(obss[env_act_id], rews[env_act_id], dones[env_act_id])\n",

" obss, rews, dones, infos = multi_envs.step(acts)\n",

"\n",

" # DO SOMETHING WITH THE AGENT IF YOU WANT\n",

" ## agent.train(obss, rews, dones)\n",

" \n",

"\n",

"# close the environments created by the multi_env\n",

"multi_envs.close()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"On the above example, `TRAINING_STEP` steps are performed on `NUM_CORE` environments in parrallel. The agent has then acted `TRAINING_STEP * NUM_CORE` (=`10 * 4 = 40` by default) times on `NUM_CORE` different environments."

]

},
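{

"cell_type": "markdown",

"metadata": {},

"source": [

"This count can be checked with a tiny stand-alone sketch. `CountingAgent` is a hypothetical stand-in for `DoNothingAgent` that only records how many times its `act` method is called. The loop mirrors the one above, with one call per worker environment at each step.\n",

"\n",

"```python\n",

"class CountingAgent:\n",

"    # hypothetical stand-in for DoNothingAgent that counts its calls to act\n",

"    def __init__(self):\n",

"        self.nb_calls = 0\n",

"\n",

"    def act(self, obs, reward, done):\n",

"        self.nb_calls += 1\n",

"        return None  # the action itself does not matter here\n",

"\n",

"\n",

"nb_env = 2     # same value as NUM_CORE in this notebook\n",

"nb_steps = 10  # same as range(10) in the loop above\n",

"counting_agent = CountingAgent()\n",

"for _ in range(nb_steps):\n",

"    # one call to act per worker environment at each step\n",

"    acts = [counting_agent.act(None, 0.0, False) for _ in range(nb_env)]\n",

"\n",

"print(counting_agent.nb_calls)  # 20, i.e. nb_steps * nb_env\n",

"```"

]

},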

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### III.b) Practical example\n",

"\n",

"We reuse the code of the Notebook [04_TrainingAnAgent](04_TrainingAnAgent.ipynb) to train a new agent we strongly recommend you to have a look at it if it is not done already.\n",

"\n",

"In this notebook, we focus on how to make your agent interact with multiple environments at the same time (**eg** it means that the batch of data the agent receives comes from different instance of the same environment, this is a technique used in [Asynchronous Actor Critic - A3C](https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-8-asynchronous-actor-critic-agents-a3c-c88f72a5e9f2) type of models for example).\n",

"\n",

"You will see with this method you can learn the same baseline with minimal (actually without any) changes in the above way.\n",

"\n",

"#### Note on the implementation\n",

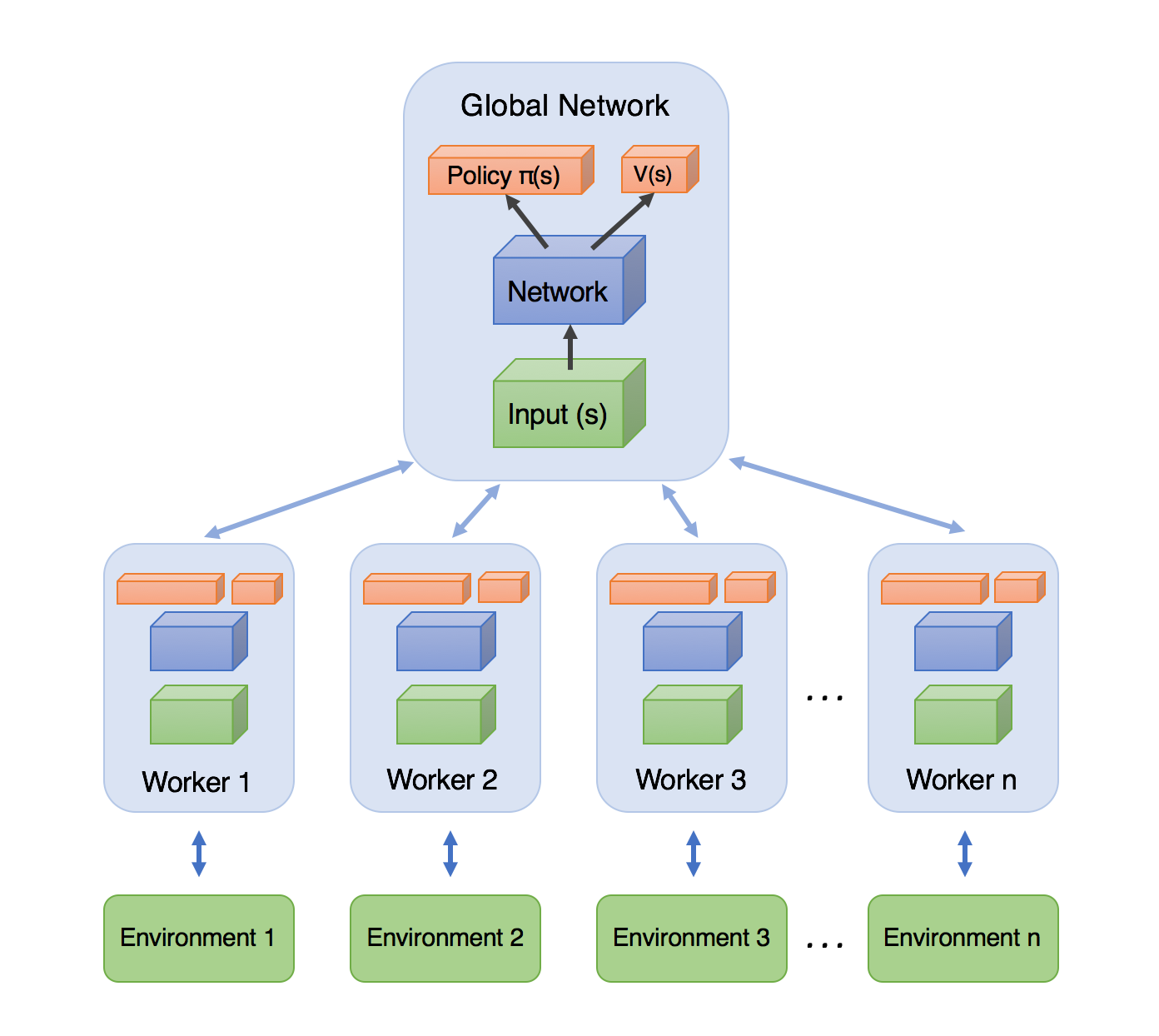

"The most common code for A3C agent can be summarized in the image below (image credit [this blog post](https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-8-asynchronous-actor-critic-agents-a3c-c88f72a5e9f2)):\n",

"\n",

"\n",

"In this image you see different version of the agent that interacts with different versions of the (same) environment (represented here in the different *workers*). And, from time to time, each \"worker\" will send its weights to the \"global network\" and an update procedure will be run there. Though this framework can perfectly be implemented in grid2op, the *SingleEnvMultiProcess* class works a bit differently.\n",

"\n",

"Actually, in this *SingleEnvMultiProcess* class, there exists only one copy of the \"global network\" that is kept into the main \"thread\" (which to be precise is a process) and that interacts (send actions to and receives observations from) with different independant environments. It is really similar to the [SubprocVecEnv](https://github.com/openai/baselines/blob/ea25b9e8b234e6ee1bca43083f8f3cf974143998/baselines/common/vec_env/subproc_vec_env.py#L39) of open ai gym.\n",

"\n",

"This is especially suited in the case of powersystem operations, as it can be quite computationnally expensive to solve for the powerflow equations at each nodes of the grid (also called [Kirchhoff's laws](https://en.wikipedia.org/wiki/Kirchhoff%27s_circuit_laws))\n",

"\n",

"#### What you have to do\n",

"\n",

"We recall here the code that we used in the relevant notebook to train the agent:\n",

"```python\n",

"# create an environment\n",

"env = make(env_name, test=True) \n",

"# don't forget to set \"test=False\" (or remove it, as False is the default value) for \"real\" training\n",

"\n",

"# import the train function and train your agent\n",

"from l2rpn_baselines.DuelQSimple import train\n",

"from l2rpn_baselines.utils import NNParam, TrainingParam\n",

"agent_name = \"test_agent\"\n",

"save_path = \"saved_agent_DDDQN_{}\".format(train_iter)\n",

"logs_dir=\"tf_logs_DDDQN\"\n",

"\n",

"\n",

"# we then define the neural network we want to make (you may change this at will)\n",

"## 1. first we choose what \"part\" of the observation we want as input, \n",

"## here for example only the generator and load information\n",

"## see https://grid2op.readthedocs.io/en/latest/observation.html#main-observation-attributes\n",

"## for the detailed about all the observation attributes you want to have\n",

"li_attr_obs_X = [\"gen_p\", \"gen_v\", \"load_p\", \"load_q\"]\n",

"# this automatically computes the size of the resulting vector\n",

"observation_size = NNParam.get_obs_size(env, li_attr_obs_X) \n",

"\n",

"## 2. then we define its architecture\n",

"sizes = [300, 300, 300] # 3 hidden layers, of 300 units each, why not...\n",

"activs = [\"relu\" for _ in sizes] # all followed by relu activation, because... why not\n",

"## 4. you put it all on a dictionnary like that (specific to this baseline)\n",

"kwargs_archi = {'observation_size': observation_size,\n",

" 'sizes': sizes,\n",

" 'activs': activs,\n",

" \"list_attr_obs\": li_attr_obs_X}\n",

"\n",

"# you can also change the training parameters you are using\n",

"# more information at https://l2rpn-baselines.readthedocs.io/en/latest/utils.html#l2rpn_baselines.utils.TrainingParam\n",

"tp = TrainingParam()\n",

"tp.batch_size = 32 # for example...\n",

"tp.update_tensorboard_freq = int(train_iter / 10)\n",

"tp.save_model_each = int(train_iter / 3)\n",

"tp.min_observation = int(train_iter / 5)\n",

"train(env,\n",

" name=agent_name,\n",

" iterations=train_iter,\n",

" save_path=save_path,\n",

" load_path=None, # put something else if you want to reload an agent instead of creating a new one\n",

" logs_dir=logs_dir,\n",

" kwargs_archi=kwargs_archi,\n",

" training_param=tp)\n",

"```\n",

"\n",

"Here, you will see in the next cell how to (*not really*) change it to train a agent on different environments:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"train_iter = TRAINING_STEP\n",

"# import the train function and train your agent\n",

"from l2rpn_baselines.DuelQSimple import train\n",

"from l2rpn_baselines.utils import NNParam, TrainingParam, make_multi_env\n",

"agent_name = \"test_agent_multi\"\n",

"save_path = \"saved_agent_DDDQN_{}_multi\".format(train_iter)\n",

"logs_dir=\"tf_logs_DDDQN\"\n",

"\n",

"# just add the relevant import (see above) and this line\n",

"my_envs = make_multi_env(env_init=env, nb_env=NUM_CORE)\n",

"# and that's it !\n",

"\n",

"\n",

"# we then define the neural network we want to make (you may change this at will)\n",

"## 1. first we choose what \"part\" of the observation we want as input, \n",

"## here for example only the generator and load information\n",

"## see https://grid2op.readthedocs.io/en/latest/observation.html#main-observation-attributes\n",

"## for the detailed about all the observation attributes you want to have\n",

"li_attr_obs_X = [\"gen_p\", \"gen_v\", \"load_p\", \"load_q\"]\n",

"# this automatically computes the size of the resulting vector\n",

"observation_size = NNParam.get_obs_size(env, li_attr_obs_X) \n",

"\n",

"## 2. then we define its architecture\n",

"sizes = [300, 300, 300] # 3 hidden layers, of 300 units each, why not...\n",

"activs = [\"relu\" for _ in sizes] # all followed by relu activation, because... why not\n",

"## 4. you put it all on a dictionnary like that (specific to this baseline)\n",

"kwargs_archi = {'observation_size': observation_size,\n",

" 'sizes': sizes,\n",

" 'activs': activs,\n",

" \"list_attr_obs\": li_attr_obs_X}\n",

"\n",

"# you can also change the training parameters you are using\n",

"# more information at https://l2rpn-baselines.readthedocs.io/en/latest/utils.html#l2rpn_baselines.utils.TrainingParam\n",

"tp = TrainingParam()\n",

"tp.batch_size = 32 # for example...\n",

"tp.update_tensorboard_freq = int(train_iter / 10)\n",

"tp.save_model_each = int(train_iter / 3)\n",

"tp.min_observation = int(train_iter / 5)\n",

"train(my_envs,\n",

" name=agent_name,\n",

" iterations=train_iter,\n",

" save_path=save_path,\n",

" load_path=None, # put something else if you want to reload an agent instead of creating a new one\n",

" logs_dir=logs_dir,\n",

" kwargs_archi=kwargs_archi,\n",

" training_param=tp)"

]

},
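{

"cell_type": "markdown",

"metadata": {},

"source": [

"As explained in the practical example above, when an agent is trained on several environments, the batches it learns from mix data coming from different instances of the same environment. The generic sketch below illustrates this with a simple replay buffer of the kind used by DQN-style agents, fed with one transition per worker at each step. `ReplayBuffer` and the fake data are hypothetical and use only the Python standard library. This is not the implementation used by l2rpn-baselines.\n",

"\n",

"```python\n",

"import random\n",

"from collections import deque\n",

"\n",

"\n",

"class ReplayBuffer:\n",

"    # hypothetical, minimal buffer storing (worker_id, transition) pairs\n",

"    def __init__(self, capacity):\n",

"        self.memory = deque(maxlen=capacity)\n",

"\n",

"    def add_batch(self, obss, acts, rews, dones, next_obss):\n",

"        # one transition per worker environment, in worker order\n",

"        for worker_id, transition in enumerate(zip(obss, acts, rews, dones, next_obss)):\n",

"            self.memory.append((worker_id, transition))\n",

"\n",

"    def sample(self, batch_size):\n",

"        # uniform sampling without replacement over all stored transitions\n",

"        return random.sample(list(self.memory), batch_size)\n",

"\n",

"\n",

"nb_env = 2\n",

"buffer = ReplayBuffer(capacity=1000)\n",

"for step in range(50):\n",

"    # fake data standing in for what multi_envs.step would return\n",

"    obss = [step] * nb_env\n",

"    acts = [0] * nb_env\n",

"    rews = [1.0] * nb_env\n",

"    dones = [False] * nb_env\n",

"    next_obss = [step + 1] * nb_env\n",

"    buffer.add_batch(obss, acts, rews, dones, next_obss)\n",

"\n",

"batch = buffer.sample(32)\n",

"print(len(buffer.memory))  # 100, i.e. 50 steps * 2 workers\n",

"print(sorted(set(worker_id for worker_id, _ in batch)))  # workers present in this batch\n",

"```\n",

"\n",

"Because transitions from all workers share the same buffer, a sampled batch will typically contain data from several worker environments."

]

},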

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### II c) Assess the performance of the trained agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Nothing is changing... Like really, it's the same code as in notebook 4 (we told you to have a look ;-) )"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from l2rpn_baselines.DuelQSimple import evaluate\n",

"path_save_results = \"{}_results_multi\".format(save_path)\n",

"\n",

"evaluated_agent, res_runner = evaluate(env,\n",

" name=agent_name,\n",

" load_path=save_path,\n",

" logs_path=path_save_results,\n",

" nb_episode=2,\n",

" nb_process=1,\n",

" max_steps=100,\n",

" verbose=True,\n",

" save_gif=False)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### II d) That is it ?\n",

"\n",

"Yes there is nothing more to say about it. As long as you use one of the compatible baselines (at the date of writing):\n",

"- [DeepQSimple](https://l2rpn-baselines.readthedocs.io/en/master/DeepQSimple.html)\n",

"- [DuelQSimple](https://l2rpn-baselines.readthedocs.io/en/master/DuelQSimple.html)\n",

"- [DuelQLeapNet](https://l2rpn-baselines.readthedocs.io/en/master/DuelQLeapNet.html)\n",

"\n",

"You do not have anything more to do :-)\n",

"\n",

"If you want to use another baseline that does not support this feature, feel free to add an issue in the l2rpn-baselines official github at this adress [https://github.com/rte-france/l2rpn-baselines/issues](https://github.com/rte-france/l2rpn-baselines/issues)"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.5"

}

},

"nbformat": 4,

"nbformat_minor": 2

}

"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# !pip install grid2op[optional] # for use with google colab (grid2Op is not installed by default)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"res = None\n",

"try:\n",

" from jyquickhelper import add_notebook_menu\n",

" res = add_notebook_menu()\n",

"except ModuleNotFoundError:\n",

" print(\"Impossible to automatically add a menu / table of content to this notebook.\\nYou can download \\\"jyquickhelper\\\" package with: \\n\\\"pip install jyquickhelper\\\"\")\n",

"res"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import grid2op\n",

"from grid2op.Reward import ConstantReward, FlatReward\n",

"from tqdm.notebook import tqdm\n",

"from grid2op.Runner import Runner\n",

"import sys\n",

"import os\n",

"import numpy as np\n",

"TRAINING_STEP = 100"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## I) Make a regular environment and agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"By default we use the test environment. But by passing `test=False` in the following function will automatically download approximately 300MB from the internet and give you 1000 chronics instead of 2 used for this example."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"env = grid2op.make(\"rte_case14_realistic\", test=True)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"A lot of data have been made available for the default \"rte_case14_realistic\" environment. Including this data in the package is not convenient. \n",

"\n",

"We chose instead to release them and make them easily available with a utility. To download them in the default directory (\"~/data_grid2op/case14_redisp\") just pass the argument \"test=False\" (or don't pass anything else) as local=False is the default value. It will download approximately 300Mo of data."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## II) Train a standard RL Agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Make sure you are using a computer with at least 4 cores if you want to notice some speed-ups."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from grid2op.Environment import SingleEnvMultiProcess\n",

"from grid2op.Agent import DoNothingAgent\n",

"NUM_CORE = 2"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### IIIa) Using the standard open AI gym loop\n",

"\n",

"Here we demonstrate how to use the multi environment class. First let's create a multi environment."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# create a simple agent\n",

"agent = DoNothingAgent(env.action_space)\n",

"\n",

"# create the multi environment class\n",

"multi_envs = SingleEnvMultiProcess(env=env, nb_env=NUM_CORE)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"A multi environment is just like using a regular environment but instead of dealing with one action, and one observation, it requires to be sent multiple actions, and returns a list of observations as well. \n",

"\n",

"It requires a grid2op environment to be initialized and creates some specific \"workers\", each is a replication of the initial environment. None of the \"worker\"s can be accessed directly. Supported methods are:\n",

"- multi_env.reset\n",

"- multi_env.step\n",

"- multi_env.close\n",

"\n",

"These methods have similar behaviour as \"env.step\", \"env.close\" or \"env.reset\".\n",

"\n",

"\n",

"It can be used the following way:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# initiliaze some variable with the proper dimension\n",

"obss = multi_envs.reset()\n",

"rews = [env.reward_range[0] for i in range(NUM_CORE)]\n",

"dones = [False for i in range(NUM_CORE)]\n",

"obss"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"dones"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"As you can see, obs is not a single obervation, but a list (numpy nd array to be precise) of 4 observations (if 4 cores were used, otherwise the length of the array is the number of cores), each one being an observation of a given \"worker\" environment.\n",

"\n",

"Worker environments are always called in the same order. It means the first observation of this vector will always correspond to the first worker environment. \n",

"\n",

"\n",

"Similarly to Observation, the \"step\" function of a multi_environment takes as input a list of multiple actions, each action will be implemented in its own environment. It returns a list of observations, a list of rewards, and boolean list of whether or not the worker environment suffers from a game over (in that case this worker environment is automatically restarted using the \"reset\" method.)\n",

"\n",

"Because orker environments are always called in the same order, the first action sent to the \"multi_env.step\" function will also be applied on this first environment.\n",

"\n",

"It is possible to use it as follow:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# initialize the vector of actions that will be processed by each worker environment.\n",

"acts = [None for _ in range(NUM_CORE)]\n",

"for env_act_id in range(NUM_CORE):\n",

" acts[env_act_id] = agent.act(obss[env_act_id], rews[env_act_id], dones[env_act_id])\n",

" \n",

"# feed them to the multi_env\n",

"obss, rews, dones, infos = multi_envs.step(acts)\n",

"\n",

"# as explained, this is a vector of Observation (as many as NUM_CORE in this example)\n",

"obss"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The multi environment loop is really close to the \"gym\" loop:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# performs the appropriated steps\n",

"for i in range(10):\n",

" acts = [None for _ in range(NUM_CORE)]\n",

" for env_act_id in range(NUM_CORE):\n",

" acts[env_act_id] = agent.act(obss[env_act_id], rews[env_act_id], dones[env_act_id])\n",

" obss, rews, dones, infos = multi_envs.step(acts)\n",

"\n",

" # DO SOMETHING WITH THE AGENT IF YOU WANT\n",

" ## agent.train(obss, rews, dones)\n",

" \n",

"\n",

"# close the environments created by the multi_env\n",

"multi_envs.close()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"On the above example, `TRAINING_STEP` steps are performed on `NUM_CORE` environments in parrallel. The agent has then acted `TRAINING_STEP * NUM_CORE` (=`10 * 4 = 40` by default) times on `NUM_CORE` different environments."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### III.b) Practical example\n",

"\n",

"We reuse the code of the Notebook [04_TrainingAnAgent](04_TrainingAnAgent.ipynb) to train a new agent we strongly recommend you to have a look at it if it is not done already.\n",

"\n",

"In this notebook, we focus on how to make your agent interact with multiple environments at the same time (**eg** it means that the batch of data the agent receives comes from different instance of the same environment, this is a technique used in [Asynchronous Actor Critic - A3C](https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-8-asynchronous-actor-critic-agents-a3c-c88f72a5e9f2) type of models for example).\n",

"\n",

"You will see with this method you can learn the same baseline with minimal (actually without any) changes in the above way.\n",

"\n",

"#### Note on the implementation\n",

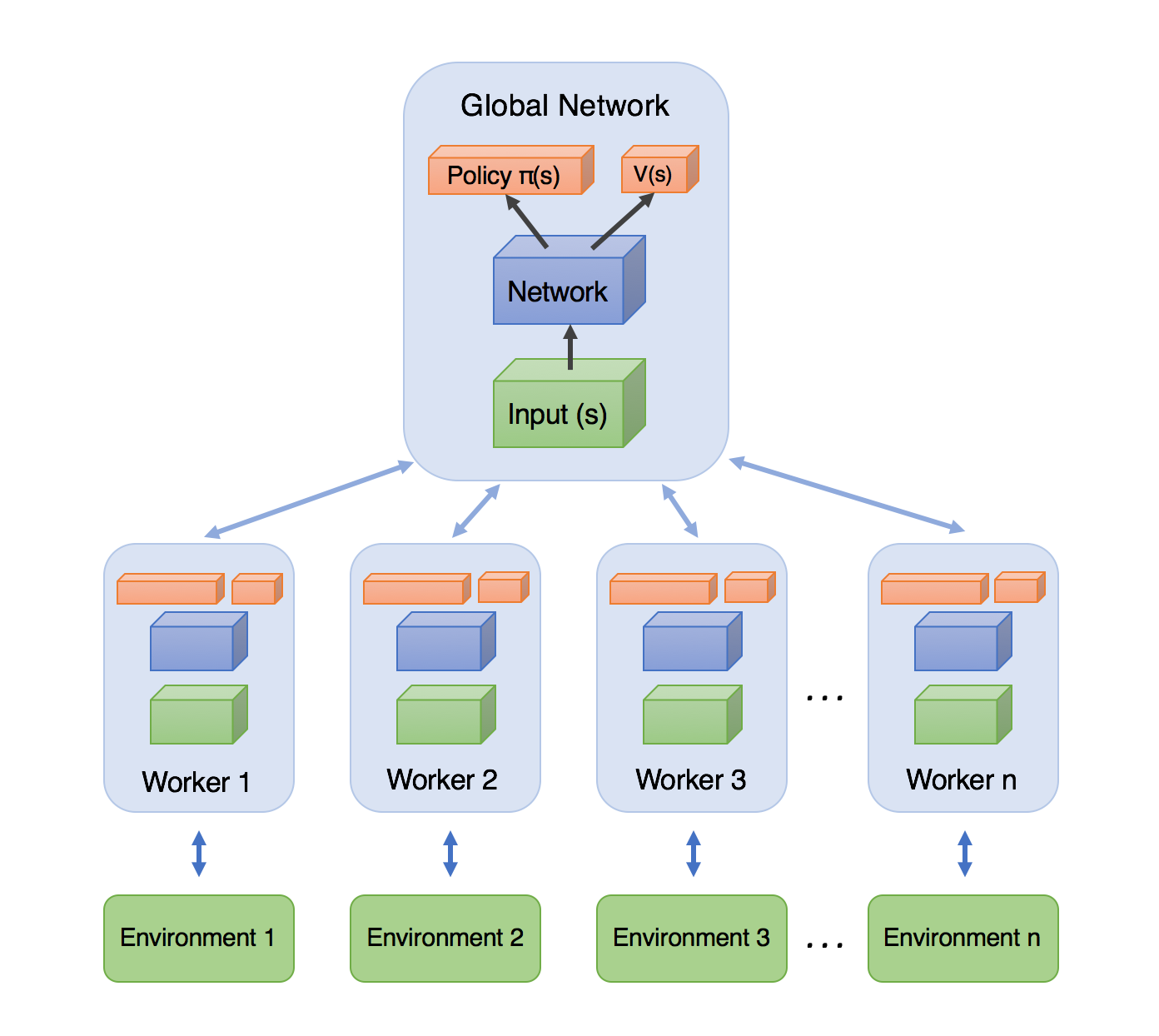

"The most common code for A3C agent can be summarized in the image below (image credit [this blog post](https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-8-asynchronous-actor-critic-agents-a3c-c88f72a5e9f2)):\n",

"\n",

"\n",

"In this image you see different version of the agent that interacts with different versions of the (same) environment (represented here in the different *workers*). And, from time to time, each \"worker\" will send its weights to the \"global network\" and an update procedure will be run there. Though this framework can perfectly be implemented in grid2op, the *SingleEnvMultiProcess* class works a bit differently.\n",

"\n",

"Actually, in this *SingleEnvMultiProcess* class, there exists only one copy of the \"global network\" that is kept into the main \"thread\" (which to be precise is a process) and that interacts (send actions to and receives observations from) with different independant environments. It is really similar to the [SubprocVecEnv](https://github.com/openai/baselines/blob/ea25b9e8b234e6ee1bca43083f8f3cf974143998/baselines/common/vec_env/subproc_vec_env.py#L39) of open ai gym.\n",

"\n",

"This is especially suited in the case of powersystem operations, as it can be quite computationnally expensive to solve for the powerflow equations at each nodes of the grid (also called [Kirchhoff's laws](https://en.wikipedia.org/wiki/Kirchhoff%27s_circuit_laws))\n",

"\n",

"#### What you have to do\n",

"\n",

"We recall here the code that we used in the relevant notebook to train the agent:\n",

"```python\n",

"# create an environment\n",

"env = make(env_name, test=True) \n",

"# don't forget to set \"test=False\" (or remove it, as False is the default value) for \"real\" training\n",

"\n",

"# import the train function and train your agent\n",

"from l2rpn_baselines.DuelQSimple import train\n",

"from l2rpn_baselines.utils import NNParam, TrainingParam\n",

"agent_name = \"test_agent\"\n",

"save_path = \"saved_agent_DDDQN_{}\".format(train_iter)\n",

"logs_dir=\"tf_logs_DDDQN\"\n",

"\n",

"\n",

"# we then define the neural network we want to make (you may change this at will)\n",

"## 1. first we choose what \"part\" of the observation we want as input, \n",

"## here for example only the generator and load information\n",

"## see https://grid2op.readthedocs.io/en/latest/observation.html#main-observation-attributes\n",

"## for the detailed about all the observation attributes you want to have\n",

"li_attr_obs_X = [\"gen_p\", \"gen_v\", \"load_p\", \"load_q\"]\n",

"# this automatically computes the size of the resulting vector\n",

"observation_size = NNParam.get_obs_size(env, li_attr_obs_X) \n",

"\n",

"## 2. then we define its architecture\n",

"sizes = [300, 300, 300] # 3 hidden layers, of 300 units each, why not...\n",

"activs = [\"relu\" for _ in sizes] # all followed by relu activation, because... why not\n",

"## 4. you put it all on a dictionnary like that (specific to this baseline)\n",

"kwargs_archi = {'observation_size': observation_size,\n",

" 'sizes': sizes,\n",

" 'activs': activs,\n",

" \"list_attr_obs\": li_attr_obs_X}\n",

"\n",

"# you can also change the training parameters you are using\n",

"# more information at https://l2rpn-baselines.readthedocs.io/en/latest/utils.html#l2rpn_baselines.utils.TrainingParam\n",

"tp = TrainingParam()\n",

"tp.batch_size = 32 # for example...\n",

"tp.update_tensorboard_freq = int(train_iter / 10)\n",

"tp.save_model_each = int(train_iter / 3)\n",

"tp.min_observation = int(train_iter / 5)\n",

"train(env,\n",

" name=agent_name,\n",

" iterations=train_iter,\n",

" save_path=save_path,\n",

" load_path=None, # put something else if you want to reload an agent instead of creating a new one\n",

" logs_dir=logs_dir,\n",

" kwargs_archi=kwargs_archi,\n",

" training_param=tp)\n",

"```\n",

"\n",

"Here, you will see in the next cell how to (*not really*) change it to train a agent on different environments:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"train_iter = TRAINING_STEP\n",

"# import the train function and train your agent\n",

"from l2rpn_baselines.DuelQSimple import train\n",

"from l2rpn_baselines.utils import NNParam, TrainingParam, make_multi_env\n",

"agent_name = \"test_agent_multi\"\n",

"save_path = \"saved_agent_DDDQN_{}_multi\".format(train_iter)\n",

"logs_dir=\"tf_logs_DDDQN\"\n",

"\n",

"# just add the relevant import (see above) and this line\n",

"my_envs = make_multi_env(env_init=env, nb_env=NUM_CORE)\n",

"# and that's it !\n",

"\n",

"\n",

"# we then define the neural network we want to make (you may change this at will)\n",

"## 1. first we choose what \"part\" of the observation we want as input, \n",

"## here for example only the generator and load information\n",

"## see https://grid2op.readthedocs.io/en/latest/observation.html#main-observation-attributes\n",

"## for the detailed about all the observation attributes you want to have\n",

"li_attr_obs_X = [\"gen_p\", \"gen_v\", \"load_p\", \"load_q\"]\n",

"# this automatically computes the size of the resulting vector\n",

"observation_size = NNParam.get_obs_size(env, li_attr_obs_X) \n",

"\n",

"## 2. then we define its architecture\n",

"sizes = [300, 300, 300] # 3 hidden layers, of 300 units each, why not...\n",

"activs = [\"relu\" for _ in sizes] # all followed by relu activation, because... why not\n",

"## 4. you put it all on a dictionnary like that (specific to this baseline)\n",

"kwargs_archi = {'observation_size': observation_size,\n",

" 'sizes': sizes,\n",

" 'activs': activs,\n",

" \"list_attr_obs\": li_attr_obs_X}\n",

"\n",

"# you can also change the training parameters you are using\n",

"# more information at https://l2rpn-baselines.readthedocs.io/en/latest/utils.html#l2rpn_baselines.utils.TrainingParam\n",

"tp = TrainingParam()\n",

"tp.batch_size = 32 # for example...\n",

"tp.update_tensorboard_freq = int(train_iter / 10)\n",

"tp.save_model_each = int(train_iter / 3)\n",

"tp.min_observation = int(train_iter / 5)\n",

"train(my_envs,\n",

" name=agent_name,\n",

" iterations=train_iter,\n",

" save_path=save_path,\n",

" load_path=None, # put something else if you want to reload an agent instead of creating a new one\n",

" logs_dir=logs_dir,\n",

" kwargs_archi=kwargs_archi,\n",

" training_param=tp)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### II c) Assess the performance of the trained agent"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Nothing is changing... Like really, it's the same code as in notebook 4 (we told you to have a look ;-) )"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from l2rpn_baselines.DuelQSimple import evaluate\n",

"path_save_results = \"{}_results_multi\".format(save_path)\n",

"\n",

"evaluated_agent, res_runner = evaluate(env,\n",

" name=agent_name,\n",

" load_path=save_path,\n",

" logs_path=path_save_results,\n",

" nb_episode=2,\n",

" nb_process=1,\n",

" max_steps=100,\n",

" verbose=True,\n",

" save_gif=False)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### II d) That is it ?\n",

"\n",

"Yes there is nothing more to say about it. As long as you use one of the compatible baselines (at the date of writing):\n",

"- [DeepQSimple](https://l2rpn-baselines.readthedocs.io/en/master/DeepQSimple.html)\n",

"- [DuelQSimple](https://l2rpn-baselines.readthedocs.io/en/master/DuelQSimple.html)\n",

"- [DuelQLeapNet](https://l2rpn-baselines.readthedocs.io/en/master/DuelQLeapNet.html)\n",

"\n",

"You do not have anything more to do :-)\n",

"\n",

"If you want to use another baseline that does not support this feature, feel free to add an issue in the l2rpn-baselines official github at this adress [https://github.com/rte-france/l2rpn-baselines/issues](https://github.com/rte-france/l2rpn-baselines/issues)"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.5"

}

},

"nbformat": 4,

"nbformat_minor": 2

}