\n",

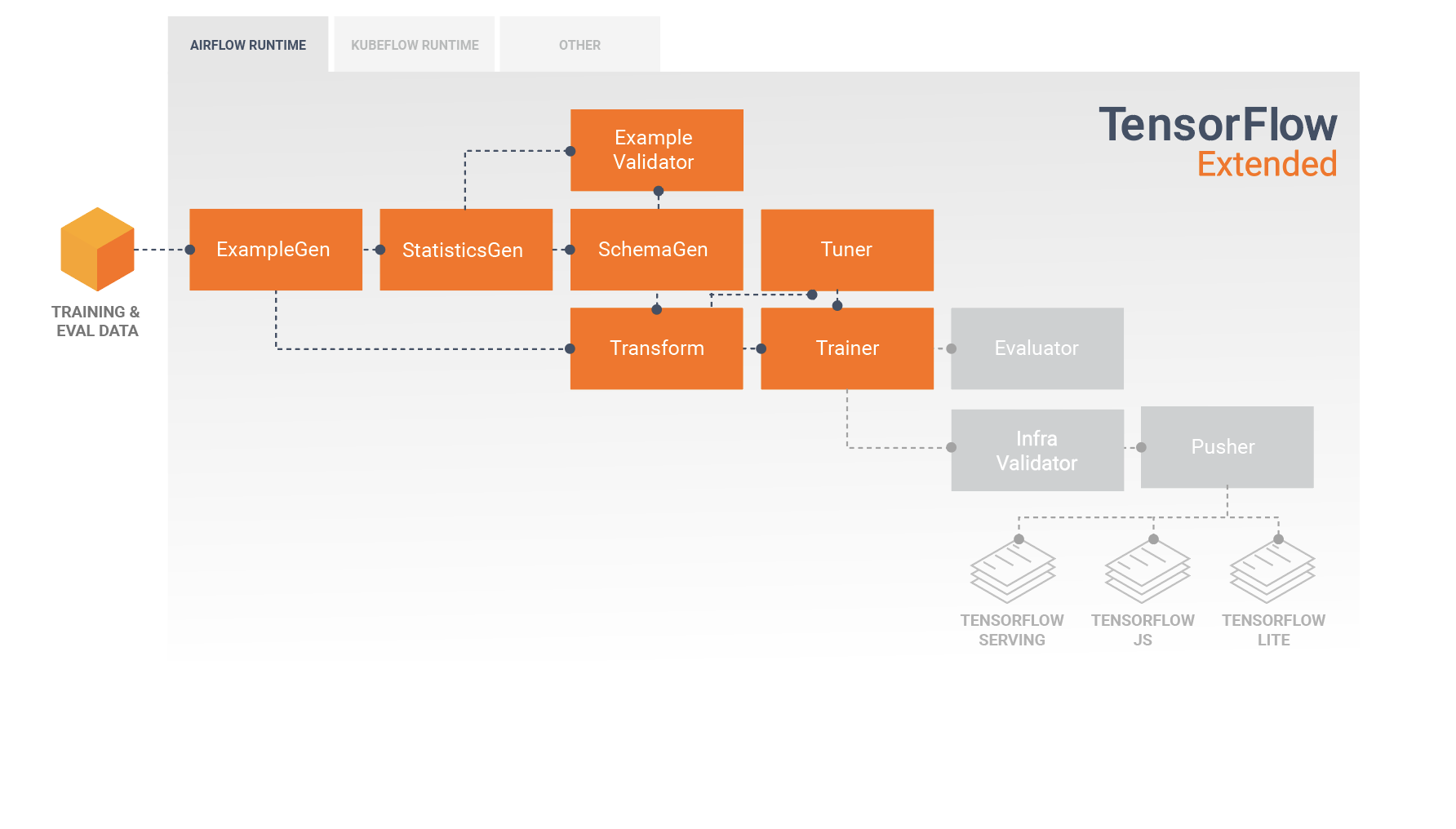

"image source: https://www.tensorflow.org/tfx/guide\n",

"\n",

"* The *Tuner* utilizes the [Keras Tuner](https://keras-team.github.io/keras-tuner/) API under the hood to tune your model's hyperparameters.\n",

"* You can get the best set of hyperparameters from the Tuner component and feed it into the *Trainer* component to optimize your model for training.\n",

"\n",

"You will again be working with the [FashionMNIST](https://github.com/zalandoresearch/fashion-mnist) dataset and will feed it though the TFX pipeline up to the Trainer component.You will quickly review the earlier components from Course 2, then focus on the two new components introduced.\n",

"\n",

"Let's begin!\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "MUXex9ctTuDB"

},

"source": [

"## Setup"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "YEFWSi_-umNz"

},

"source": [

"### Install TFX\n",

"\n",

"You will first install [TFX](https://www.tensorflow.org/tfx), a framework for developing end-to-end machine learning pipelines."

]

},

{

"cell_type": "code",

"metadata": {

"id": "IqR2PQG4ZaZ0",

"colab": {

"base_uri": "https://localhost:8080/"

},

"outputId": "60ee146a-1707-485d-b95e-b685781ce85b"

},

"source": [

"!pip install -U pip\n",

"!pip install -U tfx==1.3\n",

"\n",

"# These are downgraded to work with the packages used by TFX 1.3\n",

"# Please do not delete because it will cause import errors in the next cell\n",

"!pip install --upgrade tensorflow-estimator==2.6.0\n",

"!pip install --upgrade keras==2.6.0"

],

"execution_count": 1,

"outputs": [

{

"output_type": "stream",

"name": "stdout",

"text": [

"Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Requirement already satisfied: pip in /usr/local/lib/python3.7/dist-packages (21.1.3)\n",

"Collecting pip\n",

" Downloading pip-22.1.2-py3-none-any.whl (2.1 MB)\n",

"\u001b[K |████████████████████████████████| 2.1 MB 36.2 MB/s \n",

"\u001b[?25hInstalling collected packages: pip\n",

" Attempting uninstall: pip\n",

" Found existing installation: pip 21.1.3\n",

" Uninstalling pip-21.1.3:\n",

" Successfully uninstalled pip-21.1.3\n",

"Successfully installed pip-22.1.2\n",

"Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Collecting tfx==1.3\n",

" Downloading tfx-1.3.0-py3-none-any.whl (2.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.4/2.4 MB\u001b[0m \u001b[31m31.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: click<8,>=7 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (7.1.2)\n",

"Collecting google-cloud-bigquery<3,>=2.26.0\n",

" Downloading google_cloud_bigquery-2.34.4-py2.py3-none-any.whl (206 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m206.6/206.6 kB\u001b[0m \u001b[31m21.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: protobuf<4,>=3.13 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (3.17.3)\n",

"Requirement already satisfied: tensorflow-hub<0.13,>=0.9.0 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (0.12.0)\n",

"Collecting tensorflow-data-validation<1.4.0,>=1.3.0\n",

" Downloading tensorflow_data_validation-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (1.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.4/1.4 MB\u001b[0m \u001b[31m36.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tfx-bsl<1.4.0,>=1.3.0\n",

" Downloading tfx_bsl-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (19.0 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m19.0/19.0 MB\u001b[0m \u001b[31m43.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: jinja2<4,>=2.7.3 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (2.11.3)\n",

"Collecting pyarrow<3,>=1\n",

" Downloading pyarrow-2.0.0-cp37-cp37m-manylinux2014_x86_64.whl (17.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m17.7/17.7 MB\u001b[0m \u001b[31m46.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-apitools<1,>=0.5\n",

" Downloading google_apitools-0.5.32-py3-none-any.whl (135 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m135.7/135.7 kB\u001b[0m \u001b[31m7.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.9.0-py2.py3-none-any.whl (37 kB)\n",

"Collecting ml-pipelines-sdk==1.3.0\n",

" Downloading ml_pipelines_sdk-1.3.0-py3-none-any.whl (1.2 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.2/1.2 MB\u001b[0m \u001b[31m32.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting absl-py<0.13,>=0.9\n",

" Downloading absl_py-0.12.0-py3-none-any.whl (129 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m129.4/129.4 kB\u001b[0m \u001b[31m13.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: grpcio<2,>=1.28.1 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.46.3)\n",

"Requirement already satisfied: pyyaml<6,>=3.12 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (3.13)\n",

"Requirement already satisfied: google-api-python-client<2,>=1.8 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.12.11)\n",

"Requirement already satisfied: portpicker<2,>=1.3.1 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.3.9)\n",

"Collecting tensorflow-model-analysis<0.35,>=0.34.1\n",

" Downloading tensorflow_model_analysis-0.34.1-py3-none-any.whl (1.8 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.8/1.8 MB\u001b[0m \u001b[31m50.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting ml-metadata<1.4.0,>=1.3.0\n",

" Downloading ml_metadata-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (6.5 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m6.5/6.5 MB\u001b[0m \u001b[31m54.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting apache-beam[gcp]<3,>=2.32\n",

" Downloading apache_beam-2.40.0-cp37-cp37m-manylinux2010_x86_64.whl (10.9 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m10.9/10.9 MB\u001b[0m \u001b[31m54.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting kubernetes<13,>=10.0.1\n",

" Downloading kubernetes-12.0.1-py2.py3-none-any.whl (1.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.7/1.7 MB\u001b[0m \u001b[31m53.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting attrs<21,>=19.3.0\n",

" Downloading attrs-20.3.0-py2.py3-none-any.whl (49 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m49.3/49.3 kB\u001b[0m \u001b[31m5.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting keras-tuner<2,>=1.0.4\n",

" Downloading keras_tuner-1.1.2-py3-none-any.whl (133 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m133.7/133.7 kB\u001b[0m \u001b[31m14.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-aiplatform<2,>=0.5.0\n",

" Downloading google_cloud_aiplatform-1.15.0-py2.py3-none-any.whl (2.1 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.1/2.1 MB\u001b[0m \u001b[31m76.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting docker<5,>=4.1\n",

" Downloading docker-4.4.4-py2.py3-none-any.whl (147 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m147.0/147.0 kB\u001b[0m \u001b[31m17.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting numpy<1.20,>=1.16\n",

" Downloading numpy-1.19.5-cp37-cp37m-manylinux2010_x86_64.whl (14.8 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m14.8/14.8 MB\u001b[0m \u001b[31m28.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting packaging<21,>=20\n",

" Downloading packaging-20.9-py2.py3-none-any.whl (40 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m40.9/40.9 kB\u001b[0m \u001b[31m5.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (2.8.2+zzzcolab20220527125636)\n",

"Collecting tensorflow-transform<1.4.0,>=1.3.0\n",

" Downloading tensorflow_transform-1.3.0-py3-none-any.whl (407 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m407.7/407.7 kB\u001b[0m \u001b[31m39.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: six in /usr/local/lib/python3.7/dist-packages (from absl-py<0.13,>=0.9->tfx==1.3) (1.15.0)\n",

"Collecting proto-plus<2,>=1.7.1\n",

" Downloading proto_plus-1.20.6-py3-none-any.whl (46 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m46.4/46.4 kB\u001b[0m \u001b[31m6.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: httplib2<0.21.0,>=0.8 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (0.17.4)\n",

"Requirement already satisfied: python-dateutil<3,>=2.8.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (2.8.2)\n",

"Requirement already satisfied: pydot<2,>=1.2.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (1.3.0)\n",

"Requirement already satisfied: pytz>=2018.3 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (2022.1)\n",

"Collecting cloudpickle<3,>=2.1.0\n",

" Downloading cloudpickle-2.1.0-py3-none-any.whl (25 kB)\n",

"Collecting pymongo<4.0.0,>=3.8.0\n",

" Downloading pymongo-3.12.3-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (508 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m508.1/508.1 kB\u001b[0m \u001b[31m45.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting requests<3.0.0,>=2.24.0\n",

" Downloading requests-2.28.1-py3-none-any.whl (62 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m62.8/62.8 kB\u001b[0m \u001b[31m7.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: crcmod<2.0,>=1.7 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (1.7)\n",

"Collecting dill<0.3.2,>=0.3.1.1\n",

" Downloading dill-0.3.1.1.tar.gz (151 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m152.0/152.0 kB\u001b[0m \u001b[31m2.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Preparing metadata (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

"Requirement already satisfied: typing-extensions>=3.7.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (4.1.1)\n",

"Collecting orjson<4.0\n",

" Downloading orjson-3.7.7-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (272 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m272.8/272.8 kB\u001b[0m \u001b[31m26.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting fastavro<2,>=0.23.6\n",

" Downloading fastavro-1.5.2-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.3/2.3 MB\u001b[0m \u001b[31m78.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting hdfs<3.0.0,>=2.1.0\n",

" Downloading hdfs-2.7.0-py3-none-any.whl (34 kB)\n",

"Requirement already satisfied: cachetools<5,>=3.1.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (4.2.4)\n",

"Collecting google-cloud-dlp<4,>=3.0.0\n",

" Downloading google_cloud_dlp-3.7.1-py2.py3-none-any.whl (118 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m118.2/118.2 kB\u001b[0m \u001b[31m15.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-pubsublite<2,>=1.2.0\n",

" Downloading google_cloud_pubsublite-1.4.2-py2.py3-none-any.whl (265 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m265.8/265.8 kB\u001b[0m \u001b[31m28.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-bigquery-storage>=2.6.3\n",

" Downloading google_cloud_bigquery_storage-2.13.2-py2.py3-none-any.whl (180 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m180.2/180.2 kB\u001b[0m \u001b[31m20.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-spanner<2,>=1.13.0\n",

" Downloading google_cloud_spanner-1.19.3-py2.py3-none-any.whl (255 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m255.6/255.6 kB\u001b[0m \u001b[31m28.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting grpcio-gcp<1,>=0.2.2\n",

" Downloading grpcio_gcp-0.2.2-py2.py3-none-any.whl (9.4 kB)\n",

"Requirement already satisfied: google-cloud-datastore<2,>=1.8.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (1.8.0)\n",

"Requirement already satisfied: google-auth<3,>=1.18.0 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (1.35.0)\n",

"Requirement already satisfied: google-cloud-core<2,>=0.28.1 in /usr/local/lib/python3.7/dist-packages (from apache-beam[gcp]<3,>=2.32->tfx==1.3) (1.0.3)\n",

"Collecting google-cloud-videointelligence<2,>=1.8.0\n",

" Downloading google_cloud_videointelligence-1.16.3-py2.py3-none-any.whl (183 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m183.9/183.9 kB\u001b[0m \u001b[31m24.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-auth-httplib2<0.2.0,>=0.1.0\n",

" Downloading google_auth_httplib2-0.1.0-py2.py3-none-any.whl (9.3 kB)\n",

"Collecting google-apitools<1,>=0.5\n",

" Downloading google-apitools-0.5.31.tar.gz (173 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m173.5/173.5 kB\u001b[0m \u001b[31m22.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Preparing metadata (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

"Collecting google-cloud-language<2,>=1.3.0\n",

" Downloading google_cloud_language-1.3.2-py2.py3-none-any.whl (83 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m83.6/83.6 kB\u001b[0m \u001b[31m11.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-vision<2,>=0.38.0\n",

" Downloading google_cloud_vision-1.0.2-py2.py3-none-any.whl (435 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m435.1/435.1 kB\u001b[0m \u001b[31m42.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-pubsub<3,>=2.1.0\n",

" Downloading google_cloud_pubsub-2.13.0-py2.py3-none-any.whl (234 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m234.5/234.5 kB\u001b[0m \u001b[31m28.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-bigtable<2,>=0.31.1\n",

" Downloading google_cloud_bigtable-1.7.2-py2.py3-none-any.whl (267 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m267.7/267.7 kB\u001b[0m \u001b[31m29.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-recommendations-ai<=0.2.0,>=0.1.0\n",

" Downloading google_cloud_recommendations_ai-0.2.0-py2.py3-none-any.whl (180 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m180.2/180.2 kB\u001b[0m \u001b[31m14.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting websocket-client>=0.32.0\n",

" Downloading websocket_client-1.3.3-py3-none-any.whl (54 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m54.3/54.3 kB\u001b[0m \u001b[31m7.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: google-api-core<3dev,>=1.21.0 in /usr/local/lib/python3.7/dist-packages (from google-api-python-client<2,>=1.8->tfx==1.3) (1.31.6)\n",

"Requirement already satisfied: uritemplate<4dev,>=3.0.0 in /usr/local/lib/python3.7/dist-packages (from google-api-python-client<2,>=1.8->tfx==1.3) (3.0.1)\n",

"Collecting fasteners>=0.14\n",

" Downloading fasteners-0.17.3-py3-none-any.whl (18 kB)\n",

"Requirement already satisfied: oauth2client>=1.4.12 in /usr/local/lib/python3.7/dist-packages (from google-apitools<1,>=0.5->tfx==1.3) (4.1.3)\n",

"Collecting google-cloud-storage<3.0.0dev,>=1.32.0\n",

" Downloading google_cloud_storage-2.4.0-py2.py3-none-any.whl (106 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m107.0/107.0 kB\u001b[0m \u001b[31m14.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-resource-manager<3.0.0dev,>=1.3.3\n",

" Downloading google_cloud_resource_manager-1.5.1-py2.py3-none-any.whl (230 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m230.2/230.2 kB\u001b[0m \u001b[31m28.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting protobuf<4,>=3.13\n",

" Downloading protobuf-3.20.1-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (1.0 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.0/1.0 MB\u001b[0m \u001b[31m59.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-cloud-core<2,>=0.28.1\n",

" Downloading google_cloud_core-1.7.2-py2.py3-none-any.whl (28 kB)\n",

"Collecting google-resumable-media<3.0dev,>=0.6.0\n",

" Downloading google_resumable_media-2.3.3-py2.py3-none-any.whl (76 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m76.9/76.9 kB\u001b[0m \u001b[31m10.9 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: MarkupSafe>=0.23 in /usr/local/lib/python3.7/dist-packages (from jinja2<4,>=2.7.3->tfx==1.3) (2.0.1)\n",

"Requirement already satisfied: tensorboard in /usr/local/lib/python3.7/dist-packages (from keras-tuner<2,>=1.0.4->tfx==1.3) (2.8.0)\n",

"Collecting kt-legacy\n",

" Downloading kt_legacy-1.0.4-py3-none-any.whl (9.6 kB)\n",

"Requirement already satisfied: ipython in /usr/local/lib/python3.7/dist-packages (from keras-tuner<2,>=1.0.4->tfx==1.3) (5.5.0)\n",

"Requirement already satisfied: setuptools>=21.0.0 in /usr/local/lib/python3.7/dist-packages (from kubernetes<13,>=10.0.1->tfx==1.3) (57.4.0)\n",

"Requirement already satisfied: certifi>=14.05.14 in /usr/local/lib/python3.7/dist-packages (from kubernetes<13,>=10.0.1->tfx==1.3) (2022.6.15)\n",

"Requirement already satisfied: requests-oauthlib in /usr/local/lib/python3.7/dist-packages (from kubernetes<13,>=10.0.1->tfx==1.3) (1.3.1)\n",

"Requirement already satisfied: urllib3>=1.24.2 in /usr/local/lib/python3.7/dist-packages (from kubernetes<13,>=10.0.1->tfx==1.3) (1.24.3)\n",

"Requirement already satisfied: pyparsing>=2.0.2 in /usr/local/lib/python3.7/dist-packages (from packaging<21,>=20->tfx==1.3) (3.0.9)\n",

"Requirement already satisfied: google-pasta>=0.1.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (0.2.0)\n",

"Requirement already satisfied: keras<2.9,>=2.8.0rc0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (2.8.0)\n",

"Requirement already satisfied: keras-preprocessing>=1.1.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (1.1.2)\n",

"Requirement already satisfied: gast>=0.2.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (0.5.3)\n",

"Requirement already satisfied: h5py>=2.9.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (3.1.0)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading tensorflow-2.9.1-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (511.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m511.7/511.7 MB\u001b[0m \u001b[31m3.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting keras<2.10.0,>=2.9.0rc0\n",

" Downloading keras-2.9.0-py2.py3-none-any.whl (1.6 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.6/1.6 MB\u001b[0m \u001b[31m19.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorboard\n",

" Downloading tensorboard-2.9.1-py3-none-any.whl (5.8 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m5.8/5.8 MB\u001b[0m \u001b[31m90.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading tensorflow-2.9.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (511.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m511.7/511.7 MB\u001b[0m \u001b[31m3.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.8.2%2Bzzzcolab20220629235552-cp37-cp37m-linux_x86_64.whl (668.6 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m668.6/668.6 MB\u001b[0m \u001b[31m2.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.8.2%2Bzzzcolab20220523105045-cp37-cp37m-linux_x86_64.whl (668.6 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m668.6/668.6 MB\u001b[0m \u001b[31m2.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading tensorflow-2.8.2-cp37-cp37m-manylinux2010_x86_64.whl (497.9 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m497.9/497.9 MB\u001b[0m \u001b[31m3.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.8.1%2Bzzzcolab20220518083849-cp37-cp37m-linux_x86_64.whl (668.6 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m668.6/668.6 MB\u001b[0m \u001b[31m2.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.8.1%2Bzzzcolab20220516111314-cp37-cp37m-linux_x86_64.whl (668.6 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m668.6/668.6 MB\u001b[0m \u001b[31m2.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading tensorflow-2.8.1-cp37-cp37m-manylinux2010_x86_64.whl (497.9 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m497.9/497.9 MB\u001b[0m \u001b[31m3.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.8.0%2Bzzzcolab20220506162203-cp37-cp37m-linux_x86_64.whl (668.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m668.3/668.3 MB\u001b[0m \u001b[31m2.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tf-estimator-nightly==2.8.0.dev2021122109\n",

" Downloading tf_estimator_nightly-2.8.0.dev2021122109-py2.py3-none-any.whl (462 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m462.5/462.5 kB\u001b[0m \u001b[31m37.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading tensorflow-2.8.0-cp37-cp37m-manylinux2010_x86_64.whl (497.5 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m497.5/497.5 MB\u001b[0m \u001b[31m3.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.7.3%2Bzzzcolab20220523111007-cp37-cp37m-linux_x86_64.whl (671.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m671.4/671.4 MB\u001b[0m \u001b[31m2.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-estimator<2.8,~=2.7.0rc0\n",

" Downloading tensorflow_estimator-2.7.0-py2.py3-none-any.whl (463 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m463.1/463.1 kB\u001b[0m \u001b[31m40.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting keras<2.8,>=2.7.0rc0\n",

" Downloading keras-2.7.0-py2.py3-none-any.whl (1.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.3/1.3 MB\u001b[0m \u001b[31m35.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: opt-einsum>=2.3.2 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (3.3.0)\n",

"Requirement already satisfied: termcolor>=1.1.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (1.1.0)\n",

"Requirement already satisfied: wrapt>=1.11.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (1.14.1)\n",

"Requirement already satisfied: astunparse>=1.6.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (1.6.3)\n",

"Requirement already satisfied: wheel<1.0,>=0.32.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (0.37.1)\n",

"Requirement already satisfied: flatbuffers<3.0,>=1.12 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (2.0)\n",

"Requirement already satisfied: libclang>=9.0.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (14.0.1)\n",

"Collecting protobuf<4,>=3.13\n",

" Downloading protobuf-3.19.4-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.1 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.1/1.1 MB\u001b[0m \u001b[31m32.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: tensorflow-io-gcs-filesystem>=0.21.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (0.26.0)\n",

"Collecting gast<0.5.0,>=0.2.1\n",

" Downloading gast-0.4.0-py3-none-any.whl (9.8 kB)\n",

"Requirement already satisfied: pandas<2,>=1.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow-data-validation<1.4.0,>=1.3.0->tfx==1.3) (1.3.5)\n",

"Collecting joblib<0.15,>=0.12\n",

" Downloading joblib-0.14.1-py2.py3-none-any.whl (294 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m294.9/294.9 kB\u001b[0m \u001b[31m30.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-metadata<1.3,>=1.2\n",

" Downloading tensorflow_metadata-1.2.0-py3-none-any.whl (48 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m48.5/48.5 kB\u001b[0m \u001b[31m6.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting ipython\n",

" Downloading ipython-7.34.0-py3-none-any.whl (793 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m793.8/793.8 kB\u001b[0m \u001b[31m34.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: ipywidgets<8,>=7 in /usr/local/lib/python3.7/dist-packages (from tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (7.7.0)\n",

"Requirement already satisfied: scipy<2,>=1.4.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (1.4.1)\n",

"INFO: pip is looking at multiple versions of tensorflow-serving-api to determine which version is compatible with other requirements. This could take a while.\n",

"Collecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.8.2-py2.py3-none-any.whl (37 kB)\n",

" Downloading tensorflow_serving_api-2.8.0-py2.py3-none-any.whl (37 kB)\n",

" Downloading tensorflow_serving_api-2.7.0-py2.py3-none-any.whl (37 kB)\n",

" Downloading tensorflow_serving_api-2.6.5-py2.py3-none-any.whl (37 kB)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.6.5%2Bzzzcolab20220523104206-cp37-cp37m-linux_x86_64.whl (570.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m570.3/570.3 MB\u001b[0m \u001b[31m2.5 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading tensorflow-2.6.5-cp37-cp37m-manylinux2010_x86_64.whl (464.2 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m464.2/464.2 MB\u001b[0m \u001b[31m3.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hINFO: pip is looking at multiple versions of tensorflow-transform to determine which version is compatible with other requirements. This could take a while.\n",

"Collecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.6.3-py2.py3-none-any.whl (37 kB)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.6.4%2Bzzzcolab20220516125453-cp37-cp37m-linux_x86_64.whl (570.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m570.3/570.3 MB\u001b[0m \u001b[31m2.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading tensorflow-2.6.4-cp37-cp37m-manylinux2010_x86_64.whl (464.2 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m464.2/464.2 MB\u001b[0m \u001b[31m3.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading tensorflow-2.6.3-cp37-cp37m-manylinux2010_x86_64.whl (463.8 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m463.8/463.8 MB\u001b[0m \u001b[31m3.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.6.2-py2.py3-none-any.whl (37 kB)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading tensorflow-2.6.2-cp37-cp37m-manylinux2010_x86_64.whl (458.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m458.3/458.3 MB\u001b[0m \u001b[31m3.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.6.1-py2.py3-none-any.whl (37 kB)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading tensorflow-2.6.1-cp37-cp37m-manylinux2010_x86_64.whl (458.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m458.3/458.3 MB\u001b[0m \u001b[31m3.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hINFO: pip is looking at multiple versions of tensorflow-serving-api to determine which version is compatible with other requirements. This could take a while.\n",

"Collecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.6.0-py2.py3-none-any.whl (37 kB)\n",

"Collecting tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2\n",

" Downloading https://us-python.pkg.dev/colab-wheels/public/tensorflow/tensorflow-2.6.0%2Bzzzcolab20220506153740-cp37-cp37m-linux_x86_64.whl (564.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m564.4/564.4 MB\u001b[0m \u001b[31m2.6 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting clang~=5.0\n",

" Downloading clang-5.0.tar.gz (30 kB)\n",

" Preparing metadata (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

"Collecting wrapt>=1.11.0\n",

" Downloading wrapt-1.12.1.tar.gz (27 kB)\n",

" Preparing metadata (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

"Collecting typing-extensions>=3.7.0\n",

" Downloading typing_extensions-3.7.4.3-py3-none-any.whl (22 kB)\n",

"Collecting flatbuffers<3.0,>=1.12\n",

" Downloading flatbuffers-1.12-py2.py3-none-any.whl (15 kB)\n",

"Requirement already satisfied: googleapis-common-protos<2.0dev,>=1.6.0 in /usr/local/lib/python3.7/dist-packages (from google-api-core<3dev,>=1.21.0->google-api-python-client<2,>=1.8->tfx==1.3) (1.56.2)\n",

"Requirement already satisfied: rsa<5,>=3.1.4 in /usr/local/lib/python3.7/dist-packages (from google-auth<3,>=1.18.0->apache-beam[gcp]<3,>=2.32->tfx==1.3) (4.8)\n",

"Requirement already satisfied: pyasn1-modules>=0.2.1 in /usr/local/lib/python3.7/dist-packages (from google-auth<3,>=1.18.0->apache-beam[gcp]<3,>=2.32->tfx==1.3) (0.2.8)\n",

"Collecting grpc-google-iam-v1<0.13dev,>=0.12.3\n",

" Downloading grpc_google_iam_v1-0.12.4-py2.py3-none-any.whl (26 kB)\n",

"Collecting grpcio-status>=1.16.0\n",

" Downloading grpcio_status-1.47.0-py3-none-any.whl (10.0 kB)\n",

"Collecting overrides<7.0.0,>=6.0.1\n",

" Downloading overrides-6.1.0-py3-none-any.whl (14 kB)\n",

"Collecting google-cloud-storage<3.0.0dev,>=1.32.0\n",

" Downloading google_cloud_storage-2.3.0-py2.py3-none-any.whl (107 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m107.1/107.1 kB\u001b[0m \u001b[31m14.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25h Downloading google_cloud_storage-2.2.1-py2.py3-none-any.whl (107 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m107.1/107.1 kB\u001b[0m \u001b[31m14.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-crc32c<2.0dev,>=1.0\n",

" Downloading google_crc32c-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (38 kB)\n",

"Requirement already satisfied: cached-property in /usr/local/lib/python3.7/dist-packages (from h5py>=2.9.0->tensorflow!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15.2->tfx==1.3) (1.5.2)\n",

"Requirement already satisfied: docopt in /usr/local/lib/python3.7/dist-packages (from hdfs<3.0.0,>=2.1.0->apache-beam[gcp]<3,>=2.32->tfx==1.3) (0.6.2)\n",

"Requirement already satisfied: decorator in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (4.4.2)\n",

"Requirement already satisfied: traitlets>=4.2 in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (5.1.1)\n",

"Collecting prompt-toolkit!=3.0.0,!=3.0.1,<3.1.0,>=2.0.0\n",

" Downloading prompt_toolkit-3.0.30-py3-none-any.whl (381 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m381.7/381.7 kB\u001b[0m \u001b[31m32.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: backcall in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.2.0)\n",

"Requirement already satisfied: jedi>=0.16 in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.18.1)\n",

"Requirement already satisfied: matplotlib-inline in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.1.3)\n",

"Requirement already satisfied: pickleshare in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.7.5)\n",

"Requirement already satisfied: pygments in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (2.6.1)\n",

"Requirement already satisfied: pexpect>4.3 in /usr/local/lib/python3.7/dist-packages (from ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (4.8.0)\n",

"Requirement already satisfied: ipykernel>=4.5.1 in /usr/local/lib/python3.7/dist-packages (from ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (4.10.1)\n",

"Requirement already satisfied: widgetsnbextension~=3.6.0 in /usr/local/lib/python3.7/dist-packages (from ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (3.6.0)\n",

"Requirement already satisfied: nbformat>=4.2.0 in /usr/local/lib/python3.7/dist-packages (from ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.4.0)\n",

"Requirement already satisfied: ipython-genutils~=0.2.0 in /usr/local/lib/python3.7/dist-packages (from ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.2.0)\n",

"Requirement already satisfied: jupyterlab-widgets>=1.0.0 in /usr/local/lib/python3.7/dist-packages (from ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (1.1.0)\n",

"Requirement already satisfied: pyasn1>=0.1.7 in /usr/local/lib/python3.7/dist-packages (from oauth2client>=1.4.12->google-apitools<1,>=0.5->tfx==1.3) (0.4.8)\n",

"Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.7/dist-packages (from requests<3.0.0,>=2.24.0->apache-beam[gcp]<3,>=2.32->tfx==1.3) (2.10)\n",

"Requirement already satisfied: charset-normalizer<3,>=2 in /usr/local/lib/python3.7/dist-packages (from requests<3.0.0,>=2.24.0->apache-beam[gcp]<3,>=2.32->tfx==1.3) (2.0.12)\n",

"Requirement already satisfied: werkzeug>=0.11.15 in /usr/local/lib/python3.7/dist-packages (from tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (1.0.1)\n",

"Requirement already satisfied: tensorboard-plugin-wit>=1.6.0 in /usr/local/lib/python3.7/dist-packages (from tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (1.8.1)\n",

"Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.7/dist-packages (from tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (3.3.7)\n",

"Requirement already satisfied: tensorboard-data-server<0.7.0,>=0.6.0 in /usr/local/lib/python3.7/dist-packages (from tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (0.6.1)\n",

"Requirement already satisfied: google-auth-oauthlib<0.5,>=0.4.1 in /usr/local/lib/python3.7/dist-packages (from tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (0.4.6)\n",

"Requirement already satisfied: oauthlib>=3.0.0 in /usr/local/lib/python3.7/dist-packages (from requests-oauthlib->kubernetes<13,>=10.0.1->tfx==1.3) (3.2.0)\n",

"Collecting grpcio<2,>=1.28.1\n",

" Downloading grpcio-1.47.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (4.5 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m4.5/4.5 MB\u001b[0m \u001b[31m61.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: tornado>=4.0 in /usr/local/lib/python3.7/dist-packages (from ipykernel>=4.5.1->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.1.1)\n",

"Requirement already satisfied: jupyter-client in /usr/local/lib/python3.7/dist-packages (from ipykernel>=4.5.1->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.3.5)\n",

"Requirement already satisfied: parso<0.9.0,>=0.8.0 in /usr/local/lib/python3.7/dist-packages (from jedi>=0.16->ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.8.3)\n",

"Requirement already satisfied: importlib-metadata>=4.4 in /usr/local/lib/python3.7/dist-packages (from markdown>=2.6.8->tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (4.11.4)\n",

"Requirement already satisfied: jsonschema>=2.6 in /usr/local/lib/python3.7/dist-packages (from nbformat>=4.2.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (4.3.3)\n",

"Requirement already satisfied: jupyter-core in /usr/local/lib/python3.7/dist-packages (from nbformat>=4.2.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (4.10.0)\n",

"Requirement already satisfied: fastjsonschema in /usr/local/lib/python3.7/dist-packages (from nbformat>=4.2.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (2.15.3)\n",

"Collecting typing-utils>=0.0.3\n",

" Downloading typing_utils-0.1.0-py3-none-any.whl (10 kB)\n",

"Requirement already satisfied: ptyprocess>=0.5 in /usr/local/lib/python3.7/dist-packages (from pexpect>4.3->ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.7.0)\n",

"Requirement already satisfied: wcwidth in /usr/local/lib/python3.7/dist-packages (from prompt-toolkit!=3.0.0,!=3.0.1,<3.1.0,>=2.0.0->ipython->keras-tuner<2,>=1.0.4->tfx==1.3) (0.2.5)\n",

"Requirement already satisfied: notebook>=4.4.1 in /usr/local/lib/python3.7/dist-packages (from widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.3.1)\n",

"Requirement already satisfied: zipp>=0.5 in /usr/local/lib/python3.7/dist-packages (from importlib-metadata>=4.4->markdown>=2.6.8->tensorboard->keras-tuner<2,>=1.0.4->tfx==1.3) (3.8.0)\n",

"Requirement already satisfied: pyrsistent!=0.17.0,!=0.17.1,!=0.17.2,>=0.14.0 in /usr/local/lib/python3.7/dist-packages (from jsonschema>=2.6->nbformat>=4.2.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.18.1)\n",

"Requirement already satisfied: importlib-resources>=1.4.0 in /usr/local/lib/python3.7/dist-packages (from jsonschema>=2.6->nbformat>=4.2.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.7.1)\n",

"Requirement already satisfied: terminado>=0.8.1 in /usr/local/lib/python3.7/dist-packages (from notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.13.3)\n",

"Requirement already satisfied: nbconvert in /usr/local/lib/python3.7/dist-packages (from notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.6.1)\n",

"Requirement already satisfied: Send2Trash in /usr/local/lib/python3.7/dist-packages (from notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (1.8.0)\n",

"Requirement already satisfied: pyzmq>=13 in /usr/local/lib/python3.7/dist-packages (from jupyter-client->ipykernel>=4.5.1->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (23.1.0)\n",

"Requirement already satisfied: pandocfilters>=1.4.1 in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (1.5.0)\n",

"Requirement already satisfied: defusedxml in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.7.1)\n",

"Requirement already satisfied: entrypoints>=0.2.2 in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.4)\n",

"Requirement already satisfied: mistune<2,>=0.8.1 in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.8.4)\n",

"Requirement already satisfied: testpath in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.6.0)\n",

"Requirement already satisfied: bleach in /usr/local/lib/python3.7/dist-packages (from nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (5.0.0)\n",

"Requirement already satisfied: webencodings in /usr/local/lib/python3.7/dist-packages (from bleach->nbconvert->notebook>=4.4.1->widgetsnbextension~=3.6.0->ipywidgets<8,>=7->tensorflow-model-analysis<0.35,>=0.34.1->tfx==1.3) (0.5.1)\n",

"Building wheels for collected packages: google-apitools, clang, dill, wrapt\n",

" Building wheel for google-apitools (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for google-apitools: filename=google_apitools-0.5.31-py3-none-any.whl size=131039 sha256=12792bbb192212a7b2a8e4fca526a7a40124b9a99df855107da1d4693aaa0bcf\n",

" Stored in directory: /root/.cache/pip/wheels/19/b5/2f/1cc3cf2b31e7a9cd1508731212526d9550271274d351c96f16\n",

" Building wheel for clang (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for clang: filename=clang-5.0-py3-none-any.whl size=30694 sha256=8b0601c614fa84bf7dde017c9e25c4d0359e691557b1202a6ac0875d8bfbdcf0\n",

" Stored in directory: /root/.cache/pip/wheels/98/91/04/971b4c587cf47ae952b108949b46926f426c02832d120a082a\n",

" Building wheel for dill (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for dill: filename=dill-0.3.1.1-py3-none-any.whl size=78544 sha256=850855ee2ae8f7ab6429d70ec8411bbe7f62cc026f2bb211ae4e2314b8e44719\n",

" Stored in directory: /root/.cache/pip/wheels/a4/61/fd/c57e374e580aa78a45ed78d5859b3a44436af17e22ca53284f\n",

" Building wheel for wrapt (setup.py) ... \u001b[?25l\u001b[?25hdone\n",

" Created wheel for wrapt: filename=wrapt-1.12.1-cp37-cp37m-linux_x86_64.whl size=68719 sha256=e63cd05a6e18767e996732096a0a68b59b1c34c73b1f3a2e382534a0e89f05a6\n",

" Stored in directory: /root/.cache/pip/wheels/62/76/4c/aa25851149f3f6d9785f6c869387ad82b3fd37582fa8147ac6\n",

"Successfully built google-apitools clang dill wrapt\n",

"Installing collected packages: wrapt, typing-extensions, tensorflow-estimator, kt-legacy, keras, joblib, flatbuffers, clang, websocket-client, typing-utils, requests, pymongo, protobuf, prompt-toolkit, packaging, orjson, numpy, grpcio, google-crc32c, gast, fasteners, fastavro, dill, cloudpickle, attrs, absl-py, pyarrow, proto-plus, overrides, ml-metadata, ipython, hdfs, grpcio-gcp, google-resumable-media, docker, tensorflow-metadata, kubernetes, grpcio-status, google-auth-httplib2, google-apitools, apache-beam, grpc-google-iam-v1, google-cloud-core, tensorflow, ml-pipelines-sdk, keras-tuner, google-cloud-vision, google-cloud-videointelligence, google-cloud-storage, google-cloud-spanner, google-cloud-resource-manager, google-cloud-recommendations-ai, google-cloud-pubsub, google-cloud-language, google-cloud-dlp, google-cloud-bigtable, google-cloud-bigquery-storage, google-cloud-bigquery, tensorflow-serving-api, google-cloud-pubsublite, google-cloud-aiplatform, tfx-bsl, tensorflow-transform, tensorflow-model-analysis, tensorflow-data-validation, tfx\n",

" Attempting uninstall: wrapt\n",

" Found existing installation: wrapt 1.14.1\n",

" Uninstalling wrapt-1.14.1:\n",

" Successfully uninstalled wrapt-1.14.1\n",

" Attempting uninstall: typing-extensions\n",

" Found existing installation: typing_extensions 4.1.1\n",

" Uninstalling typing_extensions-4.1.1:\n",

" Successfully uninstalled typing_extensions-4.1.1\n",

" Attempting uninstall: tensorflow-estimator\n",

" Found existing installation: tensorflow-estimator 2.8.0\n",

" Uninstalling tensorflow-estimator-2.8.0:\n",

" Successfully uninstalled tensorflow-estimator-2.8.0\n",

" Attempting uninstall: keras\n",

" Found existing installation: keras 2.8.0\n",

" Uninstalling keras-2.8.0:\n",

" Successfully uninstalled keras-2.8.0\n",

" Attempting uninstall: joblib\n",

" Found existing installation: joblib 1.1.0\n",

" Uninstalling joblib-1.1.0:\n",

" Successfully uninstalled joblib-1.1.0\n",

" Attempting uninstall: flatbuffers\n",

" Found existing installation: flatbuffers 2.0\n",

" Uninstalling flatbuffers-2.0:\n",

" Successfully uninstalled flatbuffers-2.0\n",

" Attempting uninstall: requests\n",

" Found existing installation: requests 2.23.0\n",

" Uninstalling requests-2.23.0:\n",

" Successfully uninstalled requests-2.23.0\n",

" Attempting uninstall: pymongo\n",

" Found existing installation: pymongo 4.1.1\n",

" Uninstalling pymongo-4.1.1:\n",

" Successfully uninstalled pymongo-4.1.1\n",

" Attempting uninstall: protobuf\n",

" Found existing installation: protobuf 3.17.3\n",

" Uninstalling protobuf-3.17.3:\n",

" Successfully uninstalled protobuf-3.17.3\n",

" Attempting uninstall: prompt-toolkit\n",

" Found existing installation: prompt-toolkit 1.0.18\n",

" Uninstalling prompt-toolkit-1.0.18:\n",

" Successfully uninstalled prompt-toolkit-1.0.18\n",

" Attempting uninstall: packaging\n",

" Found existing installation: packaging 21.3\n",

" Uninstalling packaging-21.3:\n",

" Successfully uninstalled packaging-21.3\n",

" Attempting uninstall: numpy\n",

" Found existing installation: numpy 1.21.6\n",

" Uninstalling numpy-1.21.6:\n",

" Successfully uninstalled numpy-1.21.6\n",

" Attempting uninstall: grpcio\n",

" Found existing installation: grpcio 1.46.3\n",

" Uninstalling grpcio-1.46.3:\n",

" Successfully uninstalled grpcio-1.46.3\n",

" Attempting uninstall: gast\n",

" Found existing installation: gast 0.5.3\n",

" Uninstalling gast-0.5.3:\n",

" Successfully uninstalled gast-0.5.3\n",

" Attempting uninstall: dill\n",

" Found existing installation: dill 0.3.5.1\n",

" Uninstalling dill-0.3.5.1:\n",

" Successfully uninstalled dill-0.3.5.1\n",

" Attempting uninstall: cloudpickle\n",

" Found existing installation: cloudpickle 1.3.0\n",

" Uninstalling cloudpickle-1.3.0:\n",

" Successfully uninstalled cloudpickle-1.3.0\n",

" Attempting uninstall: attrs\n",

" Found existing installation: attrs 21.4.0\n",

" Uninstalling attrs-21.4.0:\n",

" Successfully uninstalled attrs-21.4.0\n",

" Attempting uninstall: absl-py\n",

" Found existing installation: absl-py 1.1.0\n",

" Uninstalling absl-py-1.1.0:\n",

" Successfully uninstalled absl-py-1.1.0\n",

" Attempting uninstall: pyarrow\n",

" Found existing installation: pyarrow 6.0.1\n",

" Uninstalling pyarrow-6.0.1:\n",

" Successfully uninstalled pyarrow-6.0.1\n",

" Attempting uninstall: ipython\n",

" Found existing installation: ipython 5.5.0\n",

" Uninstalling ipython-5.5.0:\n",

" Successfully uninstalled ipython-5.5.0\n",

" Attempting uninstall: google-resumable-media\n",

" Found existing installation: google-resumable-media 0.4.1\n",

" Uninstalling google-resumable-media-0.4.1:\n",

" Successfully uninstalled google-resumable-media-0.4.1\n",

" Attempting uninstall: tensorflow-metadata\n",

" Found existing installation: tensorflow-metadata 1.8.0\n",

" Uninstalling tensorflow-metadata-1.8.0:\n",

" Successfully uninstalled tensorflow-metadata-1.8.0\n",

" Attempting uninstall: google-auth-httplib2\n",

" Found existing installation: google-auth-httplib2 0.0.4\n",

" Uninstalling google-auth-httplib2-0.0.4:\n",

" Successfully uninstalled google-auth-httplib2-0.0.4\n",

" Attempting uninstall: google-cloud-core\n",

" Found existing installation: google-cloud-core 1.0.3\n",

" Uninstalling google-cloud-core-1.0.3:\n",

" Successfully uninstalled google-cloud-core-1.0.3\n",

" Attempting uninstall: tensorflow\n",

" Found existing installation: tensorflow 2.8.2+zzzcolab20220527125636\n",

" Uninstalling tensorflow-2.8.2+zzzcolab20220527125636:\n",

" Successfully uninstalled tensorflow-2.8.2+zzzcolab20220527125636\n",

" Attempting uninstall: google-cloud-storage\n",

" Found existing installation: google-cloud-storage 1.18.1\n",

" Uninstalling google-cloud-storage-1.18.1:\n",

" Successfully uninstalled google-cloud-storage-1.18.1\n",

" Attempting uninstall: google-cloud-language\n",

" Found existing installation: google-cloud-language 1.2.0\n",

" Uninstalling google-cloud-language-1.2.0:\n",

" Successfully uninstalled google-cloud-language-1.2.0\n",

" Attempting uninstall: google-cloud-bigquery-storage\n",

" Found existing installation: google-cloud-bigquery-storage 1.1.2\n",

" Uninstalling google-cloud-bigquery-storage-1.1.2:\n",

" Successfully uninstalled google-cloud-bigquery-storage-1.1.2\n",

" Attempting uninstall: google-cloud-bigquery\n",

" Found existing installation: google-cloud-bigquery 1.21.0\n",

" Uninstalling google-cloud-bigquery-1.21.0:\n",

" Successfully uninstalled google-cloud-bigquery-1.21.0\n",

"\u001b[31mERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.\n",

"xarray-einstats 0.2.2 requires numpy>=1.21, but you have numpy 1.19.5 which is incompatible.\n",

"pandas-gbq 0.13.3 requires google-cloud-bigquery[bqstorage,pandas]<2.0.0dev,>=1.11.1, but you have google-cloud-bigquery 2.34.4 which is incompatible.\n",

"multiprocess 0.70.13 requires dill>=0.3.5.1, but you have dill 0.3.1.1 which is incompatible.\n",

"jupyter-console 5.2.0 requires prompt-toolkit<2.0.0,>=1.0.0, but you have prompt-toolkit 3.0.30 which is incompatible.\n",

"gym 0.17.3 requires cloudpickle<1.7.0,>=1.2.0, but you have cloudpickle 2.1.0 which is incompatible.\n",

"google-colab 1.0.0 requires ipython~=5.5.0, but you have ipython 7.34.0 which is incompatible.\n",

"google-colab 1.0.0 requires requests~=2.23.0, but you have requests 2.28.1 which is incompatible.\n",

"datascience 0.10.6 requires folium==0.2.1, but you have folium 0.8.3 which is incompatible.\n",

"albumentations 0.1.12 requires imgaug<0.2.7,>=0.2.5, but you have imgaug 0.2.9 which is incompatible.\u001b[0m\u001b[31m\n",

"\u001b[0mSuccessfully installed absl-py-0.12.0 apache-beam-2.40.0 attrs-20.3.0 clang-5.0 cloudpickle-2.1.0 dill-0.3.1.1 docker-4.4.4 fastavro-1.5.2 fasteners-0.17.3 flatbuffers-1.12 gast-0.4.0 google-apitools-0.5.31 google-auth-httplib2-0.1.0 google-cloud-aiplatform-1.15.0 google-cloud-bigquery-2.34.4 google-cloud-bigquery-storage-2.13.2 google-cloud-bigtable-1.7.2 google-cloud-core-1.7.2 google-cloud-dlp-3.7.1 google-cloud-language-1.3.2 google-cloud-pubsub-2.13.0 google-cloud-pubsublite-1.4.2 google-cloud-recommendations-ai-0.2.0 google-cloud-resource-manager-1.5.1 google-cloud-spanner-1.19.3 google-cloud-storage-2.2.1 google-cloud-videointelligence-1.16.3 google-cloud-vision-1.0.2 google-crc32c-1.3.0 google-resumable-media-2.3.3 grpc-google-iam-v1-0.12.4 grpcio-1.47.0 grpcio-gcp-0.2.2 grpcio-status-1.47.0 hdfs-2.7.0 ipython-7.34.0 joblib-0.14.1 keras-2.7.0 keras-tuner-1.1.2 kt-legacy-1.0.4 kubernetes-12.0.1 ml-metadata-1.3.0 ml-pipelines-sdk-1.3.0 numpy-1.19.5 orjson-3.7.7 overrides-6.1.0 packaging-20.9 prompt-toolkit-3.0.30 proto-plus-1.20.6 protobuf-3.19.4 pyarrow-2.0.0 pymongo-3.12.3 requests-2.28.1 tensorflow-2.6.0+zzzcolab20220506153740 tensorflow-data-validation-1.3.0 tensorflow-estimator-2.7.0 tensorflow-metadata-1.2.0 tensorflow-model-analysis-0.34.1 tensorflow-serving-api-2.6.0 tensorflow-transform-1.3.0 tfx-1.3.0 tfx-bsl-1.3.0 typing-extensions-3.7.4.3 typing-utils-0.1.0 websocket-client-1.3.3 wrapt-1.12.1\n",

"\u001b[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv\u001b[0m\u001b[33m\n",

"\u001b[0mLooking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Collecting tensorflow-estimator==2.6.0\n",

" Downloading tensorflow_estimator-2.6.0-py2.py3-none-any.whl (462 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m462.9/462.9 kB\u001b[0m \u001b[31m2.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hInstalling collected packages: tensorflow-estimator\n",

" Attempting uninstall: tensorflow-estimator\n",

" Found existing installation: tensorflow-estimator 2.7.0\n",

" Uninstalling tensorflow-estimator-2.7.0:\n",

" Successfully uninstalled tensorflow-estimator-2.7.0\n",

"Successfully installed tensorflow-estimator-2.6.0\n",

"\u001b[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv\u001b[0m\u001b[33m\n",

"\u001b[0mLooking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Collecting keras==2.6.0\n",

" Downloading keras-2.6.0-py2.py3-none-any.whl (1.3 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.3/1.3 MB\u001b[0m \u001b[31m53.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hInstalling collected packages: keras\n",

" Attempting uninstall: keras\n",

" Found existing installation: keras 2.7.0\n",

" Uninstalling keras-2.7.0:\n",

" Successfully uninstalled keras-2.7.0\n",

"Successfully installed keras-2.6.0\n",

"\u001b[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv\u001b[0m\u001b[33m\n",

"\u001b[0m"

]

}

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "Yr2ulfeNvvom"

},

"source": [

"*Note: In Google Colab, you need to restart the runtime at this point to finalize updating the packages you just installed. You can do so by clicking the `Restart Runtime` at the end of the output cell above (after installation), or by selecting `Runtime > Restart Runtime` in the Menu bar. **Please do not proceed to the next section without restarting.** You can also ignore the errors about version incompatibility of some of the bundled packages because we won't be using those in this notebook.*"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "T_MPhjWTvNSr"

},

"source": [

"### Imports\n",

"\n",

"You will then import the packages you will need for this exercise."

]

},

{

"cell_type": "code",

"metadata": {

"id": "_leAIdFKAxAD"

},

"source": [

"import tensorflow as tf\n",

"from tensorflow import keras\n",

"import tensorflow_datasets as tfds\n",

"\n",

"import os\n",

"import pprint\n",

"\n",

"from tfx.components import ImportExampleGen\n",

"from tfx.components import ExampleValidator\n",

"from tfx.components import SchemaGen\n",

"from tfx.components import StatisticsGen\n",

"from tfx.components import Transform\n",

"from tfx.components import Tuner\n",

"from tfx.components import Trainer\n",

"\n",

"from tfx.proto import example_gen_pb2\n",

"from tfx.orchestration.experimental.interactive.interactive_context import InteractiveContext"

],

"execution_count": 1,

"outputs": []

},

{

"cell_type": "markdown",

"metadata": {

"id": "ReV_UXOgCZvx"

},

"source": [

"## Download and prepare the dataset\n",

"\n",

"As mentioned earlier, you will be using the Fashion MNIST dataset just like in the previous lab. This will allow you to compare the similarities and differences when using Keras Tuner as a standalone library and within an ML pipeline.\n",

"\n",

"You will first need to setup the directories that you will use to store the dataset, as well as the pipeline artifacts and metadata store."

]

},

{

"cell_type": "code",

"metadata": {

"id": "cNQlwf5_t8Fc"

},

"source": [

"# Location of the pipeline metadata store\n",

"_pipeline_root = './pipeline/'\n",

"\n",

"# Directory of the raw data files\n",

"_data_root = './data/fmnist'\n",

"\n",

"# Temporary directory\n",

"tempdir = './tempdir'"

],

"execution_count": 2,

"outputs": []

},

{

"cell_type": "code",

"metadata": {

"id": "BqwtVwAsslgN"

},

"source": [

"# Create the dataset directory\n",

"!mkdir -p {_data_root}\n",

"\n",

"# Create the TFX pipeline files directory\n",

"!mkdir {_pipeline_root}"

],

"execution_count": 3,

"outputs": []

},

{

"cell_type": "markdown",

"metadata": {

"id": "JyjfgG0ax9uv"

},

"source": [

"You will now download FashionMNIST from [Tensorflow Datasets](https://www.tensorflow.org/datasets). The `with_info` flag will be set to `True` so you can display information about the dataset in the next cell (i.e. using `ds_info`)."

]

},

{

"cell_type": "code",

"metadata": {

"id": "aUzvq3WFvKyl",

"colab": {

"base_uri": "https://localhost:8080/",

"height": 299,

"referenced_widgets": [

"14c48dad3a62457e95d14c5969617a95",

"fabc8d79861e415588fa87b61592cc92",

"fead14ca47824dc5bad3a89c53a598ff",

"4400762ea5bd402ab5063c46732de816",

"a8c5f80562474004a8ece3892c9a1660",

"0183fa20298845198493992279557500",

"cbeaba2795a3416cb03c63eadd5c6784",

"5c21be423d2545a496bafbb124c7a54b",

"f38a51e9325f43628f0962c056896603",

"610f895d406c403cb4fe5552e39860e1",

"795e2d771c90482b87dd503e819b15c8",

"2a019d148bd042ab93869b5ab4691d98",

"34fb080edea84f04a1244c27b8c77476",

"62cd37ee65484395a56af6fd09dcffae",

"22dd37f87e4f4835b5dd46f5906a9c50",

"6c724cb6f42e4ddfbce30bf91394661c",

"4977b600d6ba4bbc958fbc50becd078d",

"c432812346a64951b6a6f86dc0c57f74",

"182781ed74b0428f9aa4eabdc4ec5106",

"79f67094663e4b5f90232aa629df848f",

"b36f3f17f367414d9574f86b40485c35",

"2ae7180e489b415b8f6ede1040b0d81a",

"587cb35f14ef4a489ba168f2372489df",

"38b163a029e6451b8ca10b7c1e5a5a74",

"695d49869df947179a239d44cc304d93",

"565f0096a30d4b69af8fa138427af892",

"fbb01ef2dbb3442eaf6fe44f598c30f4",

"5b26d50f3ae04705a5810826ecbf9ae1",

"9c7d271caadc4a6883469034a58b7ba4",

"ca7f1f33823b4357b7a9c569eead8a80",

"5a9cbc4f46624f1785045779d7488f19",

"3c355066e45f4f53ad1514b7381d71ff",

"c834f2b9ab444e46a8ea6d8f7fc95147",

"23e42f4aa10346f98b852898b0fd63eb",

"6e6bccbca81346618968ddeb05b937fe",

"f5287636d07e4cd193d085611e5823ab",

"c64160ec90d94174b286218662fdcdab",

"f01255ad84d541938532dc5edd72590a",

"fd9763902ed241dea6f217a735f7adde",

"38fa113736f048c48bb4d4250fbc06a8",

"0eeaab0ae4d3467593ea36a894e95157",

"986dd41579d5466facff4534973fc155",

"3d2a0087e78b474ca0ee33ee4d6b1fc6",

"377b3e912b124131b7cf151ba7c24e47",

"300bf99edd8146b8a9426ee9abc01974",

"8f184c41d0d04ce5a002631515bb7a6a",

"0dcaccd02b99425294116cb8b7cc803c",

"e4f3faec638f47febcfafeb7bd9ff017",

"62f1279d8bcb41bcbbf4d47363eb84ff",

"ca1046cfa8e84e36a708982aa0a85bef",

"189333397a164614b31f1ae701908fca",

"cf17a2d991364fb5abf688f879199aff",

"c91e30cfa8f742bc9e3c1e6828fc8609",

"3be165e1eb044c6db50eee5f3717e1a1",

"4c657a6146e4490f97f8ce39aee3beb6",

"caae62fb8b074f618fb9b00d602725c0",

"c68af1ca52f94778afec1151c7e9f508",

"d07656452ce74b019129b5968f3339a0",

"9e816381e9c74b9897b8737f824a9a7f",

"209a917093a7472e9f93ac222df237d7",

"bc23e131dc444d23918b0c9d8499dd04",

"4ecc4f8469b849b7a7582c27ec726d21",

"2bdce4f6dce34bb3805fbf839286090c",

"0f4978b1aeb94df9870a3e70149df983",

"0841ef877edd4441831d18008056d08e",

"9fdefc8fc2df4c7c8f4011c08deec1ef",

"a8ef6e43873f4d8aa9d6767ca36f3f11",

"ae10e2d4b104449eb2d6ec971a08537d",

"1ecc77266de5499cb00ced59b939b90c",

"d670f78db66a4a73b501f7eadc63982c",

"462d40c2408a4cb6bbbd4ca4bea5b6e0",

"93891a42d1104fb6bd513e34cd48895e",

"1dc6f18a88564f3fa709f9334dcd501e",

"102e5805439144209cca2d5eac7fdd59",

"963dc9f3b27044a5aa77942a616e1197",

"625ab8d0ea4744cea1979bf8d3671b0b",

"c555b2d46dc44f3a8e9efc49651e2222"

]

},

"outputId": "a8283e3c-cdd9-4afa-9289-cb04c7bea2b6"

},

"source": [

"# Download the dataset\n",

"ds, ds_info = tfds.load('fashion_mnist', data_dir=tempdir, with_info=True)"

],

"execution_count": 4,

"outputs": [

{

"output_type": "stream",

"name": "stdout",

"text": [

"\u001b[1mDownloading and preparing dataset fashion_mnist/3.0.1 (download: 29.45 MiB, generated: 36.42 MiB, total: 65.87 MiB) to ./tempdir/fashion_mnist/3.0.1...\u001b[0m\n"

]

},

{

"output_type": "display_data",

"data": {

"text/plain": [

"Dl Completed...: 0 url [00:00, ? url/s]"

],

"application/vnd.jupyter.widget-view+json": {

"version_major": 2,

"version_minor": 0,

"model_id": "14c48dad3a62457e95d14c5969617a95"

}

},

"metadata": {}

},

{

"output_type": "display_data",

"data": {

"text/plain": [

"Dl Size...: 0 MiB [00:00, ? MiB/s]"

],

"application/vnd.jupyter.widget-view+json": {

"version_major": 2,

"version_minor": 0,

"model_id": "2a019d148bd042ab93869b5ab4691d98"

}

},

"metadata": {}

},

{

"output_type": "display_data",

"data": {

"text/plain": [

"Extraction completed...: 0 file [00:00, ? file/s]"

],

"application/vnd.jupyter.widget-view+json": {

"version_major": 2,

"version_minor": 0,

"model_id": "587cb35f14ef4a489ba168f2372489df"

}

},

"metadata": {}

},

{

"output_type": "stream",

"name": "stdout",

"text": [

"\n",

"\n",

"\n"

]

},

{

"output_type": "display_data",

"data": {

"text/plain": [

"0 examples [00:00, ? examples/s]"

],

"application/vnd.jupyter.widget-view+json": {

"version_major": 2,

"version_minor": 0,

"model_id": "23e42f4aa10346f98b852898b0fd63eb"

}

},

"metadata": {}

},

{

"output_type": "stream",

"name": "stdout",

"text": [

"Shuffling and writing examples to ./tempdir/fashion_mnist/3.0.1.incomplete573I3J/fashion_mnist-train.tfrecord\n"

]

},

{

"output_type": "display_data",

"data": {

"text/plain": [

" 0%| | 0/60000 [00:00\n",

".tfx-object.expanded {\n",

" padding: 4px 8px 4px 8px;\n",

" background: white;\n",

" border: 1px solid #bbbbbb;\n",

" box-shadow: 4px 4px 2px rgba(0,0,0,0.05);\n",

"}\n",

".tfx-object, .tfx-object * {\n",

" font-size: 11pt;\n",

"}\n",

".tfx-object > .title {\n",

" cursor: pointer;\n",

"}\n",

".tfx-object .expansion-marker {\n",

" color: #999999;\n",

"}\n",

".tfx-object.expanded > .title > .expansion-marker:before {\n",

" content: '▼';\n",

"}\n",

".tfx-object.collapsed > .title > .expansion-marker:before {\n",

" content: '▶';\n",

"}\n",

".tfx-object .class-name {\n",

" font-weight: bold;\n",

"}\n",

".tfx-object .deemphasize {\n",

" opacity: 0.5;\n",

"}\n",

".tfx-object.collapsed > table.attr-table {\n",

" display: none;\n",

"}\n",

".tfx-object.expanded > table.attr-table {\n",

" display: block;\n",

"}\n",

".tfx-object table.attr-table {\n",

" border: 2px solid white;\n",

" margin-top: 5px;\n",

"}\n",

".tfx-object table.attr-table td.attr-name {\n",

" vertical-align: top;\n",

" font-weight: bold;\n",

"}\n",

".tfx-object table.attr-table td.attrvalue {\n",

" text-align: left;\n",

"}\n",

"\n",

"\n",

"

\n",

"image source: https://www.tensorflow.org/tfx/guide\n",

"\n",

"* The *Tuner* utilizes the [Keras Tuner](https://keras-team.github.io/keras-tuner/) API under the hood to tune your model's hyperparameters.\n",

"* You can get the best set of hyperparameters from the Tuner component and feed it into the *Trainer* component to optimize your model for training.\n",

"\n",

"You will again be working with the [FashionMNIST](https://github.com/zalandoresearch/fashion-mnist) dataset and will feed it though the TFX pipeline up to the Trainer component.You will quickly review the earlier components from Course 2, then focus on the two new components introduced.\n",

"\n",

"Let's begin!\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "MUXex9ctTuDB"

},

"source": [

"## Setup"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "YEFWSi_-umNz"

},

"source": [

"### Install TFX\n",

"\n",

"You will first install [TFX](https://www.tensorflow.org/tfx), a framework for developing end-to-end machine learning pipelines."

]

},

{

"cell_type": "code",

"metadata": {

"id": "IqR2PQG4ZaZ0",

"colab": {

"base_uri": "https://localhost:8080/"

},

"outputId": "60ee146a-1707-485d-b95e-b685781ce85b"

},

"source": [

"!pip install -U pip\n",

"!pip install -U tfx==1.3\n",

"\n",

"# These are downgraded to work with the packages used by TFX 1.3\n",

"# Please do not delete because it will cause import errors in the next cell\n",

"!pip install --upgrade tensorflow-estimator==2.6.0\n",

"!pip install --upgrade keras==2.6.0"

],

"execution_count": 1,

"outputs": [

{

"output_type": "stream",

"name": "stdout",

"text": [

"Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Requirement already satisfied: pip in /usr/local/lib/python3.7/dist-packages (21.1.3)\n",

"Collecting pip\n",

" Downloading pip-22.1.2-py3-none-any.whl (2.1 MB)\n",

"\u001b[K |████████████████████████████████| 2.1 MB 36.2 MB/s \n",

"\u001b[?25hInstalling collected packages: pip\n",

" Attempting uninstall: pip\n",

" Found existing installation: pip 21.1.3\n",

" Uninstalling pip-21.1.3:\n",

" Successfully uninstalled pip-21.1.3\n",

"Successfully installed pip-22.1.2\n",

"Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/\n",

"Collecting tfx==1.3\n",

" Downloading tfx-1.3.0-py3-none-any.whl (2.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m2.4/2.4 MB\u001b[0m \u001b[31m31.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: click<8,>=7 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (7.1.2)\n",

"Collecting google-cloud-bigquery<3,>=2.26.0\n",

" Downloading google_cloud_bigquery-2.34.4-py2.py3-none-any.whl (206 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m206.6/206.6 kB\u001b[0m \u001b[31m21.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: protobuf<4,>=3.13 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (3.17.3)\n",

"Requirement already satisfied: tensorflow-hub<0.13,>=0.9.0 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (0.12.0)\n",

"Collecting tensorflow-data-validation<1.4.0,>=1.3.0\n",

" Downloading tensorflow_data_validation-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (1.4 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.4/1.4 MB\u001b[0m \u001b[31m36.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tfx-bsl<1.4.0,>=1.3.0\n",

" Downloading tfx_bsl-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (19.0 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m19.0/19.0 MB\u001b[0m \u001b[31m43.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: jinja2<4,>=2.7.3 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (2.11.3)\n",

"Collecting pyarrow<3,>=1\n",

" Downloading pyarrow-2.0.0-cp37-cp37m-manylinux2014_x86_64.whl (17.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m17.7/17.7 MB\u001b[0m \u001b[31m46.1 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting google-apitools<1,>=0.5\n",

" Downloading google_apitools-0.5.32-py3-none-any.whl (135 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m135.7/135.7 kB\u001b[0m \u001b[31m7.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting tensorflow-serving-api!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.*,!=2.4.*,!=2.5.*,<3,>=1.15\n",

" Downloading tensorflow_serving_api-2.9.0-py2.py3-none-any.whl (37 kB)\n",

"Collecting ml-pipelines-sdk==1.3.0\n",

" Downloading ml_pipelines_sdk-1.3.0-py3-none-any.whl (1.2 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.2/1.2 MB\u001b[0m \u001b[31m32.8 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting absl-py<0.13,>=0.9\n",

" Downloading absl_py-0.12.0-py3-none-any.whl (129 kB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m129.4/129.4 kB\u001b[0m \u001b[31m13.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hRequirement already satisfied: grpcio<2,>=1.28.1 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.46.3)\n",

"Requirement already satisfied: pyyaml<6,>=3.12 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (3.13)\n",

"Requirement already satisfied: google-api-python-client<2,>=1.8 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.12.11)\n",

"Requirement already satisfied: portpicker<2,>=1.3.1 in /usr/local/lib/python3.7/dist-packages (from tfx==1.3) (1.3.9)\n",

"Collecting tensorflow-model-analysis<0.35,>=0.34.1\n",

" Downloading tensorflow_model_analysis-0.34.1-py3-none-any.whl (1.8 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.8/1.8 MB\u001b[0m \u001b[31m50.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting ml-metadata<1.4.0,>=1.3.0\n",

" Downloading ml_metadata-1.3.0-cp37-cp37m-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (6.5 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m6.5/6.5 MB\u001b[0m \u001b[31m54.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting apache-beam[gcp]<3,>=2.32\n",

" Downloading apache_beam-2.40.0-cp37-cp37m-manylinux2010_x86_64.whl (10.9 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m10.9/10.9 MB\u001b[0m \u001b[31m54.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting kubernetes<13,>=10.0.1\n",

" Downloading kubernetes-12.0.1-py2.py3-none-any.whl (1.7 MB)\n",

"\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m1.7/1.7 MB\u001b[0m \u001b[31m53.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

"\u001b[?25hCollecting attrs<21,>=19.3.0\n",