{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Getting NLTK for Text Processing"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"This notebook introduces the [Natural Language Toolkit](http://www.nltk.org/) (NLTK) which facilitates a broad range of tasks for text processing and representing results. It's part of the [The Art of Literary Text Analysis](ArtOfLiteraryTextAnalysis.ipynb) and assumes that you've already worked through previous notebooks ([Getting Setup](GettingSetup.ipynb), [Getting Started](GettingStarted.ipynb) and [Getting Texts](GettingTexts.ipynb)). In this notebook we'll look in particular at:\n",

"\n",

"* [Installing the NLTK library (for text processing)](#Installing-the-NLTK-Library)\n",

"* [Simple tokenization of words](#Tokenization)\n",

"* [Producing a simple table of frequencies of words](#Word-Frequencies)\n",

"* [Applying a list of stopwords (words to ignore)](#Stop_Words)\n",

"* [Producing a simple concordance for a keyword](#Building-a-Simple-Concordance)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Installing the NLTK Library"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The Anaconda bundle that we're using already includes [NLTK](http://www.nltk.org/), but the bundle doesn't include the NLTK data collections that are available. Fortunately, it's easy to download the data, and we can even do it within a notebook. Following the same steps as before, create a new notebook named \"GettingNltk\" and run this first code cell:"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"showing info https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml\n"

]

},

{

"data": {

"text/plain": [

"True"

]

},

"execution_count": 1,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"import nltk\n",

"\n",

"nltk.download() # download NLTK data (we should only need to run this cell once)"

]

},

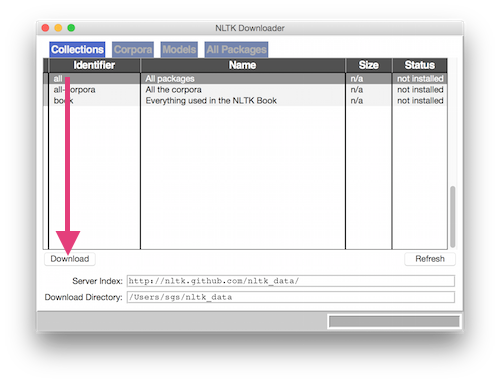

{

"cell_type": "markdown",

"metadata": {},

"source": [

"This should cause a new window to appear (eventually) with a dialog box to download data collections. For the sake of simplicity, if possible select the \"all\" row and press \"Download\". Once the download is complete, you can close that window.\n",

"\n",

"\n",

"\n",

"Now you're set! You can close and delete the temporary notebook used for installation."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Text Processing"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now that we have NLTK installed, let's use it for text processing.\n",

"\n",

"We'll start by retrieving _The Gold Bug_ plain text that we had saved locally in the [Getting Texts](GettingTexts.ipynb) notebook. If you need to recapitulate the essentials of the previous notebook, try running this to retrieve the text:\n",

"\n",

"```python\n",

"import urllib.request\n",

"# retrieve Poe plain text value\n",

"poeUrl = \"http://www.gutenberg.org/files/2147/2147-0.txt\"\n",

"poeString = urllib.request.urlopen(poeUrl).read().decode()```\n",

"\n",

"And then this, in a separate cell so that we don't read repeatedly from Gutenberg:\n",

"\n",

"```python\n",

"import os\n",

"# isolate The Gold Bug\n",

"start = poeString.find(\"THE GOLD-BUG\")\n",

"end = poeString.find(\"FOUR BEASTS IN ONE\")\n",

"goldBugString = poeString[start:end]\n",

"# save the file locally\n",

"directory = \"data\"\n",

"if not os.path.exists(directory):\n",

" os.makedirs(directory)\n",

"with open(\"data/goldBug.txt\", \"w\") as f:\n",

" f.write(goldBugString)```\n",

"\n",

"Now we should be ready to retrieve the text:"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"with open(\"data/goldBug.txt\", \"r\") as f:\n",

" goldBugString = f.read()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now we will work toward showing the top frequency words in our plain text. This involves three major steps:\n",

"\n",

"1. processing our plain text to find the words (also known as tokenization)\n",

"1. counting the frequencies of each word\n",

"1. displaying the frequencies information"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Tokenization"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Tokenization is the basic process of parsing a string to divide it into smaller units of the same kind. You can tokenize text into paragraphs, sentences, words or other structures, but here we're focused on recognizing words in our text. For that, let's import the ```nltk``` library and use its convenient ```word_tokenize()``` function. NLTK actually has several ways of tokenizing texts, and for that matter we could write our own code to do it. We'll have a peek at the first ten tokens."

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"['THE', 'GOLD-BUG', 'What', 'ho', '!', 'what', 'ho', '!', 'this', 'fellow']"

]

},

"execution_count": 2,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"import nltk\n",

"\n",

"goldBugTokens = nltk.word_tokenize(goldBugString)\n",

"goldBugTokens[:10]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can see from the above that ```word_tokenize``` does a useful job of identifying words (including hyphenated words like \"GOLD-BUG\"), but also includes tokens like the exclamation mark. In some cases punctuation like this might be useful, but in our case we want to focus on word frequencies, so we should filter out punctuation tokens. (To be fair, nltk.word_tokenize() is expecting to work with sentences that have already been parsed so we're slightly misusing it here, but that's ok.)\n",

"\n",

"To accomplish the filtering we will use a construct called [list comprehension](https://docs.python.org/3.4/tutorial/datastructures.html#list-comprehensions) with a conditional test built in. Let's take it one step at a time, first using a loop structure like we've already seen in [Getting Texts](GettingTexts.ipynb), and then doing the same thing with a list comprehension."

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"['THE', 'GOLD-BUG', 'What', 'ho', '!', 'what', 'ho', '!', 'this', 'fellow'] (for loop technique)\n",

"['THE', 'GOLD-BUG', 'What', 'ho', '!', 'what', 'ho', '!', 'this', 'fellow'] (list comprehension technique)\n"

]

}

],

"source": [

"# technique 1 where we create a new list\n",

"loopList = []\n",

"for word in goldBugTokens[:10]:\n",

" loopList.append(word)\n",

"print(loopList, \"(for loop technique)\")\n",

" \n",

" \n",

"# technique 2 with list comprehension\n",

"print([word for word in goldBugTokens[:10]], \"(list comprehension technique)\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Identical! So the general form of a list comprehension (which is very compact) is: \n",

"\n",

"> [_expression(item)_ for _item_ in _list_)]\n",

"\n",

"We can now go a step further and add a condition to the list comprehension : we'll only include the word in the final list if the first character in the word is alphabetic as defined by the [isalpha()](https://docs.python.org/3.4/library/stdtypes.html?highlight=isalpha#str.isalpha) function (`word[0]` – remember the [string sequence technique](GettingTexts.ipynb#Working-with-Parts-of-String))."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"['THE', 'GOLD-BUG', 'What', 'ho', 'what', 'ho', 'this', 'fellow']\n"

]

}

],

"source": [

"print([word for word in goldBugTokens[:10] if word[0].isalpha()])"

]

},
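{
"cell_type": "markdown",
"metadata": {},
"source": [
"As noted earlier, we could also write our own code to tokenize. The cell below is a minimal sketch of that idea, assuming a deliberately simple rule: a word is a run of unaccented letters, optionally joined by hyphens or apostrophes. The function name `simple_tokenize` is our own invention (it isn't part of NLTK), and on real texts its results will differ from those of `word_tokenize()`."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"import re\n",
"\n",
"def simple_tokenize(text):\n",
"    # hypothetical helper (not part of NLTK): find runs of ASCII letters,\n",
"    # allowing internal hyphens or apostrophes\n",
"    return re.findall(r\"[A-Za-z]+(?:[-'][A-Za-z]+)*\", text)\n",
"\n",
"sample = 'THE GOLD-BUG What ho! what ho! this fellow'\n",
"print(simple_tokenize(sample))"
]
},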

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Word Frequencies"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now that we've had a first pass at word tokenization (keeping only word tokens), let's look at counting word frequencies. Essentially we want to go through the tokens and tally the number of times each one appears. Not surprisingly, the NLTK has a very convenient method for doing just this, which we can see in this small sample (the first 10 word tokens):"

]

},

{

"cell_type": "code",

"execution_count": 13,

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"Counter({'GOLD-BUG': 1,\n",

" 'THE': 1,\n",

" 'What': 1,\n",

" 'fellow': 1,\n",

" 'ho': 2,\n",

" 'this': 1,\n",

" 'what': 1})"

]

},

"execution_count": 13,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"goldBugRealWordTokensSample = [word for word in goldBugTokens[:10] if word[0].isalpha()]\n",

"goldBugRealWordFrequenciesSample = nltk.FreqDist(goldBugRealWordTokensSample)\n",

"goldBugRealWordFrequenciesSample"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"This ```FreqDist``` object is a kind of dictionary, where each word is paired with its frequency (separated by a colon), and each pair is separated by a comma. This kind of dictionary also has a very convenient way of displaying results as a table:"

]

},

{

"cell_type": "code",

"execution_count": 14,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

" ho what GOLD-BUG fellow What THE this \n",

" 2 1 1 1 1 1 1 \n"

]

}

],

"source": [

"goldBugRealWordFrequenciesSample.tabulate()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The results are displayed in descending order of frequency (two occurrences of \"ho\"). One of the things we can notice is that \"What\" and \"what\" are calculated separately, which in some cases may be good, but for our purposes probably isn't. This might lead us to rethink our steps until now and consider the possibility of converting our string to lowercase during tokenization."

]

},

{

"cell_type": "code",

"execution_count": 15,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"what ho gold-bug the fellow this \n",

" 2 2 1 1 1 1 \n"

]

}

],

"source": [

"goldBugTokensLowercase = nltk.word_tokenize(goldBugString.lower()) # use lower() to convert entire string to lowercase\n",

"goldBugRealWordTokensLowercaseSample = [word for word in goldBugTokensLowercase[:10] if word[0].isalpha()]\n",

"goldBugRealWordFrequenciesSample = nltk.FreqDist(goldBugRealWordTokensLowercaseSample)\n",

"goldBugRealWordFrequenciesSample.tabulate(20)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Good, now we have \"what\" and \"What\" as the same word form counted twice. (There are disadvantages to this as well, such as more difficulty in identifying proper names and the start of sentences, but text mining is often a set of compromises.)\n",

"\n",

"Let's redo our entire workflow with the full set of tokens (not just a sample)."

]

},

{

"cell_type": "code",

"execution_count": 16,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"goldBugTokensLowercase = nltk.word_tokenize(goldBugString.lower())\n",

"goldBugRealWordTokensLowercase = [word for word in goldBugTokensLowercase if word[0].isalpha()]\n",

"goldBugRealWordFrequencies = nltk.FreqDist(goldBugRealWordTokensLowercase)"

]

},
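{
"cell_type": "markdown",
"metadata": {},
"source": [
"To get a feel for the kind of tally that `FreqDist` produces, here is a rough sketch using only Python's standard library. It assumes a small hand-typed list of tokens (the lowercase sample from above) rather than the full text, and it uses `collections.Counter` as a stand-in for `FreqDist`; the variable names are ours and aren't used elsewhere in this notebook."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"from collections import Counter\n",
"\n",
"# hand-typed sample (an assumption for illustration, not read from the file)\n",
"sampleTokens = ['the', 'gold-bug', 'what', 'ho', 'what', 'ho', 'this', 'fellow']\n",
"\n",
"# tally by hand with a plain dictionary\n",
"tally = {}\n",
"for word in sampleTokens:\n",
"    tally[word] = tally.get(word, 0) + 1\n",
"\n",
"# Counter produces the same tally and can rank the results\n",
"counts = Counter(sampleTokens)\n",
"print(tally == dict(counts))\n",
"print(counts.most_common(2))"
]
},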

{

"cell_type": "markdown",

"metadata": {},

"source": [

"One simple way of measuring the vocabulary richness of an author is to calculate the ratio of the total number of words and the number of unique words. If an author repeats words more often, it may be because he or she is drawing on a smaller vocabulary (either deliberately or not), which is a measure of style. There are several factors to consider, such as the length of the text, but in the simplest terms we can calculate the lexical diversity of an author by dividng the number of word forms (types) by the total number of tokens. We already have the necessary ingredients:\n",

"\n",

"* types: number of different words (number of word: count pairs in ```goldBugRealWordFrequencies```)\n",

"* tokens: total number of word tokens (length of ```goldBugRealWordTokensLowercase```"

]

},

{

"cell_type": "code",

"execution_count": 17,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"number of types: 2681\n",

"number of tokens: 13508\n",

"type/token ratio: 0.1984749777909387\n"

]

}

],

"source": [

"print(\"number of types: \", len(goldBugRealWordFrequencies))\n",

"print(\"number of tokens: \", len(goldBugRealWordTokensLowercase))\n",

"print(\"type/token ratio: \", len(goldBugRealWordFrequencies)/len(goldBugRealWordTokensLowercase))"

]

},
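{
"cell_type": "markdown",
"metadata": {},
"source": [
"The same calculation can be wrapped in a small helper so that it's easy to reuse on other lists of tokens. This is only a sketch: `type_token_ratio` is a name we've made up, and the tokens are the hand-typed lowercase sample from earlier. Keep in mind that longer texts tend to have lower ratios, so comparing texts of very different lengths can be misleading."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"def type_token_ratio(tokens):\n",
"    # hypothetical helper: distinct word forms divided by total tokens\n",
"    if not tokens:\n",
"        return 0.0  # avoid dividing by zero for an empty list\n",
"    return len(set(tokens)) / len(tokens)\n",
"\n",
"sampleTokens = ['the', 'gold-bug', 'what', 'ho', 'what', 'ho', 'this', 'fellow']\n",
"print(type_token_ratio(sampleTokens))"
]
},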

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We haven't yet looked at our output for the top frequency lowercase words."

]

},

{

"cell_type": "code",

"execution_count": 18,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

" the of and i to a in it you was that with for as had at he but this we \n",

" 877 465 359 336 329 327 238 213 162 137 130 114 113 113 110 108 103 99 99 98 \n"

]

}

],

"source": [

"goldBugRealWordFrequencies.tabulate(20) # show a sample of the top frequency terms"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We tokenized, filtered and counted in three lines of code, and then a fourth to show the top frequency terms, but the results aren't necessarily very exciting. There's not much in these top frequency words that could be construed as especially characteristic of _The Gold Bug_, in large part because the most frequent words are similar for most texts of a given language: they're so-called function words that have more of a syntactic (grammatical) function rather than a semantic (meaning-bearing) value."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"\n",

"Fortunately, our NLTK library contains a list of stop-words for English (and other languages). We can load the list and look at its contents."

]

},

{

"cell_type": "code",

"execution_count": 20,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"['a', 'about', 'above', 'after', 'again', 'against', 'all', 'am', 'an', 'and', 'any', 'are', 'as', 'at', 'be', 'because', 'been', 'before', 'being', 'below', 'between', 'both', 'but', 'by', 'can', 'did', 'do', 'does', 'doing', 'don', 'down', 'during', 'each', 'few', 'for', 'from', 'further', 'had', 'has', 'have', 'having', 'he', 'her', 'here', 'hers', 'herself', 'him', 'himself', 'his', 'how', 'i', 'if', 'in', 'into', 'is', 'it', 'its', 'itself', 'just', 'me', 'more', 'most', 'my', 'myself', 'no', 'nor', 'not', 'now', 'of', 'off', 'on', 'once', 'only', 'or', 'other', 'our', 'ours', 'ourselves', 'out', 'over', 'own', 's', 'same', 'she', 'should', 'so', 'some', 'such', 't', 'than', 'that', 'the', 'their', 'theirs', 'them', 'themselves', 'then', 'there', 'these', 'they', 'this', 'those', 'through', 'to', 'too', 'under', 'until', 'up', 'very', 'was', 'we', 'were', 'what', 'when', 'where', 'which', 'while', 'who', 'whom', 'why', 'will', 'with', 'you', 'your', 'yours', 'yourself', 'yourselves']\n"

]

}

],

"source": [

"import nltk\n",

"stopwords = nltk.corpus.stopwords.words(\"english\")\n",

"print(sorted(stopwords)) # sort them alphabetically before printing"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can test whether one word is an item in another list with the following syntax, here using our small sample."

]

},

{

"cell_type": "code",

"execution_count": 22,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"sample words: ['the', 'gold-bug', 'what', 'ho', 'what', 'ho', 'this', 'fellow']\n",

"sample words not in stopwords list: ['gold-bug', 'ho', 'ho', 'fellow']\n"

]

}

],

"source": [

"print(\"sample words: \", goldBugRealWordTokensLowercaseSample)\n",

"print(\"sample words not in stopwords list: \", [word for word in goldBugRealWordTokensLowercaseSample if not word in stopwords])"

]

},
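{
"cell_type": "markdown",
"metadata": {},
"source": [
"When filtering a long list of tokens, it can help to convert the stopword list to a set first, since testing membership in a set doesn't require scanning through every item. The sketch below assumes a tiny hand-typed stopword list (a few entries copied from the list printed above) so that it runs without the NLTK data; the variable names are ours."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"# a few stopwords copied from the list above (for illustration only)\n",
"someStopwords = ['the', 'what', 'this', 'of', 'and']\n",
"stopwordSet = set(someStopwords)\n",
"\n",
"sampleTokens = ['the', 'gold-bug', 'what', 'ho', 'what', 'ho', 'this', 'fellow']\n",
"# keep only the tokens that are not in the stopword set\n",
"contentWords = [word for word in sampleTokens if word not in stopwordSet]\n",
"print(contentWords)"
]
},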

{

"cell_type": "markdown",

"metadata": {},

"source": [

"So we can now tweak our word filter with an additional condition, adding the ```and``` operator between the test for the alphabetic first character and the test for presence in the stopword list. We add a slash (\\) character to treat the code as if it were on one line. Alternatively, we could have done this in two steps (perhaps less efficient but arguably easier to read):\n",

"\n",

"```python\n",

"# first filter tokens with alphabetic characters\n",

"gbWords = [word for word in goldBugTokensLowercase if word[0].isalpha()]\n",

"# then filter stopwords\n",

"gbContentWords = [word for word in gbWords if word not in stopwords]```"

]

},

{

"cell_type": "code",

"execution_count": 25,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"upon de jupiter legrand one said well massa could bug skull parchment tree made first time two much us beetle \n",

" 81 73 53 47 38 35 35 34 33 32 29 27 25 25 24 24 23 23 23 22 \n"

]

}

],

"source": [

"goldBugRealContentWordTokensLowercase = [word for word in goldBugTokensLowercase \\\n",

" if word[0].isalpha() and word not in stopwords]\n",

"goldBugRealContentWordFrequencies = nltk.FreqDist(goldBugRealContentWordTokensLowercase)\n",

"goldBugRealContentWordFrequencies.tabulate(20) # show a sample of the top "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now we have words that seem a bit more meaningful (even if the table format is a bit off). The first word (\"upon\") could be considered a function word (a preposition) that should be in the stop-word list, though it's less common in modern English. The second word (\"de\") would be in a French stop-word list, but seems striking here in English. The third word \"'s\" is actually an artifact of possessive forms – sometimes tokenization keeps possessives together with the word, sometimes not. The next words (\"jupiter\" and \"legrand\") merit closer inspection, they may be proper names that have been transformed to lowercase. We can continue on like this with various observations and hypotheses, but really we probably want to have a closer look at individual occurences to see what's happening. For that, we'll build a concordance."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Building a Simple Concordance"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"A concordance allows us to see each occurrence of a term in its context. It has a rich history in textual scholarship, dating back to well before the advent of computers. It's a tool for studying word usage in context.\n",

"\n",

"The easiest way to build a concordance is to create an NLTK Text object from a list of word tokens (in this case we'll use the unfiltered list so that we can better read the text). So, for instance, we can ask for a concordance of \"de\" to try to better understand why it occurs so often in this English text."

]

},

{

"cell_type": "code",

"execution_count": 31,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Displaying 10 of 73 matches:\n",

"ou , '' here interrupted Jupiter ; `` de bug is a goole bug , solid , ebery bi\n",

"is your master ? '' `` Why , to speak de troof , massa , him not so berry well\n",

" aint find nowhar -- dat 's just whar de shoe pinch -- my mind is got to be be\n",

"taint worf while for to git mad about de matter -- Massa Will say noffin at al\n",

" -- Massa Will say noffin at all aint de matter wid him -- but den what make h\n",

"a gose ? And den he keep a syphon all de time -- '' '' Keeps a what , Jupiter \n",

" , Jupiter ? '' `` Keeps a syphon wid de figgurs on de slate -- de queerest fi\n",

"' `` Keeps a syphon wid de figgurs on de slate -- de queerest figgurs I ebber \n",

" syphon wid de figgurs on de slate -- de queerest figgurs I ebber did see . Is\n",

"vers . Todder day he gib me slip fore de sun up and was gone de whole ob de bl\n"

]

}

],

"source": [

"goldBugText = nltk.Text(goldBugTokens)\n",

"goldBugText.concordance(\"de\", lines=10)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"In the concordance view above all the occurrences of \"de\" are aligned to make scanning each occurrence easier."

]

},
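{
"cell_type": "markdown",
"metadata": {},
"source": [
"To demystify what a concordance does, here is a minimal keyword-in-context sketch in plain Python. It assumes a short list of tokens typed by hand from the first concordance line above. The names `simple_concordance` and `width` are hypothetical ones of ours, and the sketch counts context in tokens and matches the keyword exactly, whereas NLTK's own implementation measures the line width in characters and makes its own choices about matching."
]
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {},
"outputs": [],
"source": [
"def simple_concordance(tokens, keyword, width=3):\n",
"    # hypothetical helper: collect each exact match of keyword\n",
"    # with up to `width` tokens of context on each side\n",
"    lines = []\n",
"    for i, token in enumerate(tokens):\n",
"        if token == keyword:\n",
"            left = ' '.join(tokens[max(0, i - width):i])\n",
"            right = ' '.join(tokens[i + 1:i + 1 + width])\n",
"            lines.append(left + ' [' + token + '] ' + right)\n",
"    return lines\n",
"\n",
"# hand-typed tokens from the first concordance line (an assumption for illustration)\n",
"tokens = ['de', 'bug', 'is', 'a', 'goole', 'bug', ',', 'solid']\n",
"for line in simple_concordance(tokens, 'bug'):\n",
"    print(line)"
]
},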

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Next Steps"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Here are some tasks to try:\n",

"\n",

"* Show a table of the top 20 words\n",

" * Choose 3 words to add to the stop-words list using list concatenation\n",

" * Regenerate the list of the top 20 words using your new stop-words list\n",

"* Instead of testing for presence in the stopword list, how would you test for words that contain 10 characters or more?\n",

"* Determine whether or not the word provided to the concordance function is case sensitive\n",

"\n",

"In the next notebook we're going to get [Graphical](GettingGraphical.ipynb)."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"---\n",

"[CC BY-SA](https://creativecommons.org/licenses/by-sa/4.0/) From [The Art of Literary Text Analysis](ArtOfLiteraryTextAnalysis.ipynb) by [Stéfan Sinclair](http://stefansinclair.name) & [Geoffrey Rockwell](http://geoffreyrockwell.com). Edited and revised by [Melissa Mony](http://melissamony.com).

Created February 7, 2015 and last modified January 14, 2018 (Jupyter 4)"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.7.1"

}

},

"nbformat": 4,

"nbformat_minor": 1

}