{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Introduction to Artificial Neural Networks \n",

"by Shawn Rhoads, Georgetown University (NSCI 526)\n",

" (adapted from [Shamdasani](https://dev.to/shamdasani/build-a-flexible-neural-network-with-backpropagation-in-python))\n",

"***\n",

"Recall from class, artificial neural networks are typically organized into three main layers: the input layer, the hidden layer, and the output layer. There are several inputs (also called features) that produce output(s) (also called a label(s))\n",

"\n",

"In a feed forward network information always moves one direction without cycles/loops in the network; it never goes backwards ([Wikipedia](https://en.wikipedia.org/wiki/Feedforward_neural_network)):\n",

"\n",

"\n",

"Above, the circles represent \"neurons\" while the lines represent \"synapses\". The role of a synapse is to take the multiply the inputs and weights. You can think of weights as the \"strength\" of the connection between neurons. Weights primarily define the output of a neural network. However, they are highly flexible. After, an activation function is applied to return an output.\n",

"\n",

"## Here's a brief overview of how a simple feedforward neural network works:\n",

"1. Takes inputs as a matrix (2D array of numbers)\n",

"2. Multiplies the input by a set weights (performs a dot product aka matrix multiplication)\n",

"3. Applies an activation function\n",

"4. Returns an output\n",

"5. Error is calculated by taking the difference from the desired output from the data and the predicted output. This creates our gradient descent, which we can use to alter the weights\n",

"6. The weights are then altered slightly according to the error.\n",

"7. To train, this process is repeated 1,000+ times. The more the data is trained upon, the more accurate our outputs will be.\n",

"\n",

"> \"They just perform a dot product with the input and weights and apply an activation function. When weights are adjusted via the gradient of loss function, the network adapts to the changes to produce more accurate outputs.\"\n",

">

(via [Shamdasani](https://dev.to/shamdasani/build-a-flexible-neural-network-with-backpropagation-in-python))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## What does this mean?\n",

"\n",

"Let's model a single hidden later with three inputs and one output. In the network, we will be predicting the score of our exam based on the inputs of how many hours we studied and how many hours we slept the day before. Our test score is the output. Here's our sample data of what we'll be training our Neural Network on: "

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {},

"outputs": [],

"source": [

"import numpy as np"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will want to predict the test score of someone who studied for four hours and slept for eight hours based on their prior performance.\n",

"\n",

"Our inputs (`X`) are in hours. Our output (`y`) is a test score from 0-100. Therefore, we need to scale our data by dividing by the maximum value for each variable."

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"[Hours studied, Hours slept]\n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"\n",

"[Scores on test]\n",

"[0.92 0.86 0.89]\n"

]

}

],

"source": [

"# X = (hours studying, hours sleeping), y = score on test\n",

"X = np.array(([2, 9], [1, 5], [3, 6]), dtype=float)\n",

"y = np.array((92, 86, 89), dtype=float)\n",

"\n",

"# scale units\n",

"X_max = np.amax(X, axis=0)\n",

"X = X/X_max # maximum of X array\n",

"y = y/100 # max test score is 100\n",

"\n",

"# print\n",

"print('[Hours studied, Hours slept]')\n",

"print(X.view())\n",

"\n",

"print('\\n[Scores on test]')\n",

"print(y.view())"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Synapses perform a dot product of the input and weight. For our first calculation, we will generate random weights between 0 and 1.\n",

"\n",

"Our input data, `X`, is a 3x2 matrix. Our output data, `y`, is a 3x1 matrix. Each element in matrix `X` needs to be multiplied by a corresponding weight and then added together with all the other results for each neuron in the hidden layer. \n",

"\n",

"First, the products of the random generated weights (.2, .6, .1, .8, .3, .7) on each synapse and the corresponding inputs are summed to arrive as the first values of the hidden layer. "

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {},

"outputs": [],

"source": [

"W1 = np.array(([.2, .6, .1], [.8, .3, .7]), dtype=float)\n",

"W2 = np.array([.4, .5, .9], dtype=float)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Here's how the first input data element (2 hours studying and 9 hours sleeping) would calculate an output in the network:\n",

"\n",

"\n",

"\n",

"Here is our first calculation for the hidden layer above:\n",

"\n",

"$\\begin{align*} \\mathbf{X_{1}} \\cdot \\mathbf{W1} &= \\begin{bmatrix} x_{11} & x_{12} \\end{bmatrix} \\cdot \\begin{bmatrix} w_{11} & w_{12} & w_{13} \\\\ w_{21} & w_{22} & w_{23} \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} x_{12}w_{11} + x_{12}w_{21} & x_{12}w_{12} + x_{12}w_{22} & x_{12}w_{13} + x_{12}w_{23} \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} .67 & 1 \\end{bmatrix} \\cdot \\begin{bmatrix} .2 & .6 & .1 \\\\ .8 & .3 & .7 \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} (.67*.2 + 1*.8) & (.67*.6 + 1*.3) & (.67*.1 + 1*.7) \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} 0.93 & 0.70 & 0.77 \\end{bmatrix} \\end{align*}$"

]

},
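{
"cell_type": "markdown",
"metadata": {},
"source": [
"The same multiplication can be carried out for all three training examples at once. As a sketch (the symbol $\\mathbf{Z}$ is our own shorthand for the matrix of hidden-layer sums, which the code further down stores in `z`), stacking the scaled inputs as the rows of $\\mathbf{X}$ gives one row of sums per example:\n",
"\n",
"$\\begin{align*} \\mathbf{Z} = \\mathbf{X} \\cdot \\mathbf{W1} &= \\begin{bmatrix} .67 & 1 \\\\ .33 & .56 \\\\ 1 & .67 \\end{bmatrix} \\cdot \\begin{bmatrix} .2 & .6 & .1 \\\\ .8 & .3 & .7 \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} 0.93 & 0.70 & 0.77 \\\\ 0.51 & 0.37 & 0.42 \\\\ 0.73 & 0.80 & 0.57 \\end{bmatrix} \\end{align*}$"
]
},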

{

"cell_type": "markdown",

"metadata": {},

"source": [

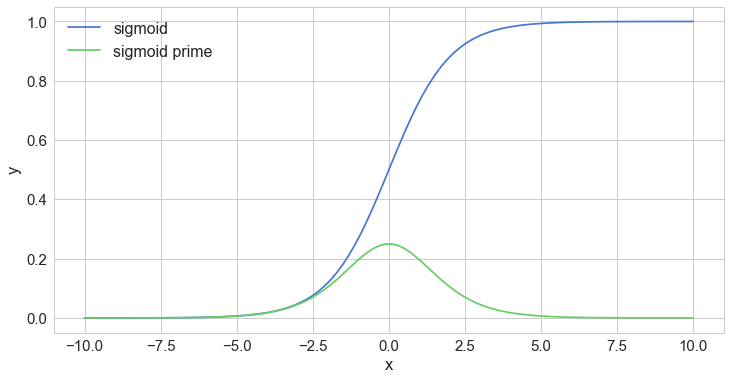

"To obtain the final value for the hidden layer, we need to apply an **activation function**, which will introduce nonlinearity. One advantage of this is that the output is mapped from a range of 0 and 1, making it easier to alter weights in the future.\n",

"\n",

"**Enter the sigmoid function:**\n",

"\n",

"$\\begin{align*} f(x) = \\frac{1}{1+e^{-\\beta x}} \\end{align*}$\n",

"\n",

"\n",

"\n",

"Thus our calculation for the output above:\n",

"```\n",

"1 / (1 + np.exp(-0.93)) = 0.72\n",

"1 / (1 + np.exp(-0.70)) = 0.67\n",

"1 / (1 + np.exp(-0.77)) = 0.68\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Rinse, repeat for output layer:\n",

"\n",

"$\\begin{align*} \\beta \\cdot \\mathbf{F} &= \\begin{bmatrix} \\beta_{11} & \\beta_{21} & \\beta_{31} \\end{bmatrix} \\cdot \\begin{bmatrix} f_{11} \\\\ f_{12} \\\\ f_{13} \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} (\\beta_{11}*f_{11}) + (\\beta_{21}*f_{12}) + (\\beta_{31}*f_{13}) \\end{bmatrix} \\\\ \\\\ &= \\begin{bmatrix} .4 & .5 & .9 \\end{bmatrix} \\cdot \\begin{bmatrix} .72 \\\\ .67 \\\\ .68 \\end{bmatrix} = \\begin{bmatrix} (.4*.72) + (.5*.67) + (.9*.68) \\end{bmatrix} \\\\ \\\\ &= 1.24 \\end{align*}$"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Pass through activation (sigmoid) function:\n",

"\n",

"```\n",

"1 / (1 + np.exp(-1.24)) = 0.77\n",

"```\n",

"\n",

"Theoretically, our neural network would calculate `.77` as our test score. However, our target was `.92`. Does not perform quite as good as one could hope!\n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Feedforward Implementation\n",

"\n",

"Now, we are ready to write a forward propagation function. Let's pass in our input, `X`. We can use the variable `z` to simulate the activity between the input and output layers.\n",

"\n",

"Remember, we will need to take a dot product of the inputs and weights, apply the activation function, take another dot product of the hidden layer and second set of weights, and lastly apply a final activation function to recieve our output:"

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {},

"outputs": [],

"source": [

"# FORWARD PROPAGATION\n",

"def forward(X,W1,W2):\n",

" z = np.dot(X, W1) # dot product of X (input) and first set of 3x2 weights\n",

" print(\"z=\")\n",

" print(z.view())\n",

" print()\n",

"\n",

" z2 = sigmoid(z) # activation function\n",

" print(\"z2=\")\n",

" print(z2.view())\n",

" print()\n",

"\n",

" z3 = np.dot(z2, W2) # dot product of hidden layer (z2) and second set of 3x1 weights\n",

" print(\"z3=\")\n",

" print(z3.view())\n",

" print()\n",

"\n",

" o = sigmoid(z3) # final activation function\n",

" print(\"o=\")\n",

" print(o.view())\n",

" print()\n",

"\n",

" return z,z2,z3,o"

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {},

"outputs": [],

"source": [

"def sigmoid(s):\n",

" # activation function\n",

" return 1/(1+np.exp(-s))"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"z=\n",

"[[0.93333333 0.7 0.76666667]\n",

" [0.51111111 0.36666667 0.42222222]\n",

" [0.73333333 0.8 0.56666667]]\n",

"\n",

"z2=\n",

"[[0.71775106 0.66818777 0.68279939]\n",

" [0.62506691 0.59065328 0.60401489]\n",

" [0.67553632 0.68997448 0.63799367]]\n",

"\n",

"z3=\n",

"[1.23571376 1.0889668 1.18939607]\n",

"\n",

"o=\n",

"[0.77481705 0.74818711 0.76663304]\n",

"\n",

"Predicted Output: \n",

"[0.77481705 0.74818711 0.76663304]\n",

"Actual Output: \n",

"[0.92 0.86 0.89]\n"

]

}

],

"source": [

"z,z2,z3,o = forward(X,W1,W2)\n",

"\n",

"print(\"Predicted Output: \\n\" + str(o))\n",

"print(\"Actual Output: \\n\" + str(y))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Notice how terrible this performs! With our simple feedforward network, we aren't able to predict our test scores very well. Why? Our network needs to learn!\n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Enter Backpropagation\n",

"\n",

"Since we initialize with a random set of weights, we need to alter them to make our inputs equal to the corresponding outputs from our data set. This is done through **backpropagation**, which works by using a **loss function** to calculate how far the network was from the target output. \n",

"\n",

"Like the activation function, there is no one-size-fits-all loss function. Two common loss functions include: \n",

"\n",

"__mean absolute error (L1 Loss):__ measured as the average of sum of absolute differences between predictions and actual observations; more robust to outliers since it does not make use of square\n",

"\n",

"$$\n",

"MAE = \\frac {\\sum_{i=1}^n |y_i - \\hat{y_i}|}{n}\n",

"$$\n",

"\n",

"__mean square error (L2 Loss):__ the average of squared difference between predictions and actual observations; due to squaring, predictions that are far away from actual values are penalized heavily in comparison to less deviated predictions\n",

"\n",

"$$\n",

"MSE = \\frac {\\sum_{i=1}^n (y_i - \\hat{y_i})^2}{n}\n",

"$$\n",

"\n",

"Using our example: `o` is our predicted output, and `y` is our actual output. Our goal is to get our loss function as close as we can to `0`, meaning we will need to have close to no loss at all. \n",

"\n",

"Training = minimizing the loss. \n",

"\n",

"$$\n",

"Loss = \\frac {\\sum (o - y)^2}{2}\n",

"$$"

]

},
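{
"cell_type": "markdown",
"metadata": {},
"source": [
"One reason this particular loss is convenient: differentiating it with respect to a single prediction leaves just the difference between prediction and target. A short derivation (the indices $i$ and $j$ over training examples are our own notation, not part of the original formula):\n",
"\n",
"$$\n",
"\\frac{\\partial Loss}{\\partial o_i} = \\frac{\\partial}{\\partial o_i} \\frac{\\sum_j (o_j - y_j)^2}{2} = o_i - y_i\n",
"$$\n",
"\n",
"Moving against this gradient therefore means nudging each prediction in the direction of $y_i - o_i$. For the first training example, $y_1 - o_1 = 0.92 - 0.77 = 0.15$, so that prediction should increase."
]
},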

{

"cell_type": "markdown",

"metadata": {},

"source": [

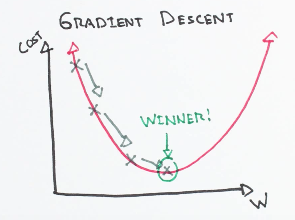

"## Enter Gradient Descent\n",

"To figure out which direction to alter our weights, we need to find the rate of change of our loss with respect to our weights (i.e., we need to use the derivative of the loss function to understand how the weights affect the input):\n",

"\n",

"\n",

"\n",

"*via https://github.com/bfortuner/ml-cheatsheet/blob/master/docs/gradient_descent.rst*\n",

"\n",

"### Here's how we will calculate the incremental change to our weights:\n",

"1. Find the margin of error of the output layer `o` by taking the difference of the predicted output and the actual output `y`.\n",

"2. Apply the derivative of our sigmoid activation function to the output layer error. We call this result the **delta output sum**.\n",

"3. Use the delta output sum of the output layer error to figure out how much our `z2` (hidden) layer contributed to the output error by performing a dot product with our second weight matrix. We can call this the z^2 error.\n",

"4. Calculate the delta output sum for the `z2` layer by applying the derivative of our sigmoid activation function (just like step 2).\n",

"5. Adjust the weights for the first layer by performing a dot product of the input layer with the **hidden delta output sum**. For the second weight, perform a dot product of the hidden(`z2`) layer and the **output (`o`) delta output sum**.\n",

"\n",

"Calculating the delta output sum and then applying the derivative of the sigmoid function are very important to backpropagation. The derivative of the sigmoid, also known as **sigmoid prime**, will give us the rate of change, or slope, of the activation function at output sum.\n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Backprop Implementation\n",

"Let's continue to code our `Neural_Network` class by adding a `sigmoidPrime` (derivative of sigmoid) function and a `backward` propagation function that performs the four steps above. \n",

"\n",

"Then, we can define our output through initiating foward propagation and intiate the backward function by calling it in a `train` function: "

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {},

"outputs": [],

"source": [

"# BACKPROPAGATION with Mean Square Error Minimization\n",

"def backward(X,W1,W2,y,z,z2,z3,o):\n",

" # backward propgate through the network\n",

" o_error = np.square(y - o) # error in output\n",

" print(\"Error in output (o_error):\")\n",

" print(o_error.view())\n",

" print()\n",

"\n",

" o_delta = o_error*sigmoidPrime(o)\n",

" print(\"Applied gradient of sigmoid to error (o_delta):\")\n",

" print(o_delta.view())\n",

" print()\n",

"\n",

" z2_error = o_delta.dot(W2.T) \n",

" print(\"How much our hidden layer weights contributed to output error (z2 error):\")\n",

" print(z2_error.view())\n",

" print()\n",

"\n",

" z2_delta = z2_error*sigmoidPrime(z2)\n",

" print(\"Applied gradient of sigmoid to z2 error (z2_delta):\")\n",

" print(z2_error.view())\n",

" print()\n",

"\n",

" W1_b = W1 + X.T.dot(z2_delta)\n",

" print(\"Adjusting first set (input --> hidden) weights:\")\n",

" print(\"W1=\")\n",

" print(W1_b.view())\n",

" print()\n",

"\n",

" W2_b = W2 + z2.T.dot(o_delta)\n",

" print(\"Adjusting second set (hidden --> output) weights:\")\n",

" print(\"W2=\")\n",

" print(W2_b.view())\n",

" print()\n",

" \n",

" return W1_b, W2_b"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {},

"outputs": [],

"source": [

"def sigmoidPrime(s):\n",

" #derivative of sigmoid (e.g., gradient)\n",

" return s * (1 - s)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's try running one iteration of backpropagation:"

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"FORWARD PROPOGATION #1\n",

"\n",

"z=\n",

"[[0.93333333 0.7 0.76666667]\n",

" [0.51111111 0.36666667 0.42222222]\n",

" [0.73333333 0.8 0.56666667]]\n",

"\n",

"z2=\n",

"[[0.71775106 0.66818777 0.68279939]\n",

" [0.62506691 0.59065328 0.60401489]\n",

" [0.67553632 0.68997448 0.63799367]]\n",

"\n",

"z3=\n",

"[1.23571376 1.0889668 1.18939607]\n",

"\n",

"o=\n",

"[0.77481705 0.74818711 0.76663304]\n",

"\n",

"----------------------\n",

"\n",

"BACK PROPOGATION #1\n",

"\n",

"Error in output (o_error):\n",

"[0.02107809 0.01250212 0.01521941]\n",

"\n",

"Applied gradient of sigmoid to error (o_delta):\n",

"[0.00367761 0.00235544 0.00272286]\n",

"\n",

"How much our hidden layer weights contributed to output error (z2 error):\n",

"0.005099334735433654\n",

"\n",

"Applied gradient of sigmoid to z2 error (z2_delta):\n",

"0.005099334735433654\n",

"\n",

"Adjusting first set (input --> hidden) weights:\n",

"W1=\n",

"[[0.20220476 0.6022555 0.10232058]\n",

" [0.80244211 0.30254275 0.70256718]]\n",

"\n",

"Adjusting second set (hidden --> output) weights:\n",

"W2=\n",

"[0.40595131 0.50572728 0.90567096]\n",

"\n",

"----------------------\n",

"\n",

"FORWARD PROPOGATION #2\n",

"\n",

"z=\n",

"[[0.93724529 0.70404641 0.7707809 ]\n",

" [0.51320276 0.36883114 0.42442196]\n",

" [0.73716617 0.80395066 0.5706987 ]]\n",

"\n",

"z2=\n",

"[[0.71854288 0.6690843 0.68368979]\n",

" [0.62555698 0.59117651 0.6045409 ]\n",

" [0.67637587 0.69081893 0.63892438]]\n",

"\n",

"z3=\n",

"[1.2492656 1.1004349 1.2025969]\n",

"\n",

"o=\n",

"[0.77717271 0.75034158 0.76898644]\n",

"\n"

]

}

],

"source": [

"print(\"FORWARD PROPOGATION #1\\n\")\n",

"z,z2,z3,o = forward(X,W1,W2)\n",

"print(\"----------------------\\n\")\n",

"print(\"BACK PROPOGATION #1\\n\")\n",

"W1_b, W2_b = backward(X,W1,W2,y,z,z2,z3,o)\n",

"print(\"----------------------\\n\")\n",

"print(\"FORWARD PROPOGATION #2\\n\")\n",

"z,z2,z3,o = forward(X,W1_b,W2_b)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Notice how the outputs are much better after implementing backprop twice.. \n",

"\n",

"---"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Let's train our network!\n",

"\n",

"We will define a python `class` and insert our functions from above. We will add an `init` function where we'll specify our parameters such as the input, hidden, and output layers."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"[Hours studied, Hours slept]\n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"\n",

"[Scores on test]\n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n"

]

}

],

"source": [

"# X = (hours studying, hours sleeping), y = score on test\n",

"X = np.array(([2, 9], [1, 5], [3, 6]), dtype=float)\n",

"y = np.array(([92], [86], [89]), dtype=float)\n",

"\n",

"# scale units\n",

"X_max = np.amax(X, axis=0)\n",

"X = X/X_max # maximum of X array\n",

"y = y/100 # max test score is 100\n",

"\n",

"# print\n",

"print('[Hours studied, Hours slept]')\n",

"print(X.view())\n",

"\n",

"print('\\n[Scores on test]')\n",

"print(y.view())"

]

},

{

"cell_type": "code",

"execution_count": 11,

"metadata": {},

"outputs": [],

"source": [

"class Neural_Network(object):\n",

" \n",

" def __init__(self):\n",

" #parameters\n",

" self.inputNum = 2 # two inputs\n",

" self.outputNum = 1 # 1 ouput\n",

" self.hiddenNum = 3 # 3 nodes in our hidden layer\n",

"\n",

" #weights\n",

" np.random.seed(2019) #set random seed for reproducibility\n",

" self.W1 = np.random.randn(self.inputNum, self.hiddenNum) # (3x2) weight matrix from input to hidden layer\n",

" self.W2 = np.random.randn(self.hiddenNum, self.outputNum) # (3x1) weight matrix from hidden to output layer\n",

" \n",

" def forward(self, X):\n",

" #forward propagation through our network\n",

" self.z = np.dot(X, self.W1) # dot product of X (input) and first set of 3x2 weights\n",

" self.z2 = self.sigmoid(self.z) # activation function\n",

" self.z3 = np.dot(self.z2, self.W2) # dot product of hidden layer (z2) and second set of 3x1 weights\n",

" o = self.sigmoid(self.z3) # final activation function\n",

" return o \n",

" \n",

" def sigmoid(self, s):\n",

" # activation function\n",

" return 1/(1+np.exp(-s))\n",

" \n",

" def sigmoidPrime(self, s):\n",

" #derivative of sigmoid\n",

" return s * (1 - s)\n",

"\n",

" def backward(self, X, y, o):\n",

" # backward propgate through the network\n",

" self.o_error = np.square(y - o) # error in output\n",

" self.o_delta = self.o_error*self.sigmoidPrime(o) # applying derivative of sigmoid to error\n",

"\n",

" self.z2_error = self.o_delta.dot(self.W2.T) # z2 error: how much our hidden layer weights contributed to output error\n",

" self.z2_delta = self.z2_error*self.sigmoidPrime(self.z2) # applying derivative of sigmoid to z2 error\n",

"\n",

" self.W1 += X.T.dot(self.z2_delta) # adjusting first set (input --> hidden) weights\n",

" self.W2 += self.z2.T.dot(self.o_delta) # adjusting second set (hidden --> output) weights\n",

" \n",

" def train (self, X, y):\n",

" o = self.forward(X)\n",

" self.backward(X, y, o)\n",

" \n",

" def test(self, X):\n",

" # test using new data\n",

" return self.forward(X)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"To run the network, all we have to do is to run the `train` function. \n",

"\n",

"We will want to do this multiple (e.g., hundreds) of times. So, we'll use a for loop:"

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"scrolled": false

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Interation: #0\n",

"Input: \n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"Actual Output: \n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n",

"Predicted Output: \n",

"[[0.59829797]\n",

" [0.58952764]\n",

" [0.58856997]]\n",

"Mean square error: \n",

"0.089169184931786\n",

"\n",

"\n",

"Interation: #50\n",

"Input: \n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"Actual Output: \n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n",

"Predicted Output: \n",

"[[0.81578845]\n",

" [0.78576724]\n",

" [0.81093399]]\n",

"Mean square error: \n",

"0.0075406612338980985\n",

"\n",

"\n",

"Interation: #100\n",

"Input: \n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"Actual Output: \n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n",

"Predicted Output: \n",

"[[0.84301206]\n",

" [0.81213973]\n",

" [0.83894441]]\n",

"Mean square error: \n",

"0.0036081405451177857\n",

"\n",

"\n",

"Interation: #150\n",

"Input: \n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"Actual Output: \n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n",

"Predicted Output: \n",

"[[0.85560731]\n",

" [0.82459002]\n",

" [0.85190143]]\n",

"Mean square error: \n",

"0.002283928756475065\n",

"\n",

"\n",

"Interation: #199\n",

"Input: \n",

"[[0.66666667 1. ]\n",

" [0.33333333 0.55555556]\n",

" [1. 0.66666667]]\n",

"Actual Output: \n",

"[[0.92]\n",

" [0.86]\n",

" [0.89]]\n",

"Predicted Output: \n",

"[[0.86312449]\n",

" [0.83210664]\n",

" [0.85963112]]\n",

"Mean square error: \n",

"0.0016450440135330274\n",

"\n",

"\n"

]

}

],

"source": [

"NN = Neural_Network()\n",

"mse = np.array([]) # let's track how the mean sum squared error goes down as the network learns \n",

"\n",

"n_iter = 200\n",

"for i in range(n_iter): # trains the network n_iter times\n",

" mse = np.append(mse,np.mean(np.square(y - NN.forward(X)))) #store error\n",

" # let's print the output every (n_iter/4) iteration and last:\n",

" if i % (n_iter/4) == 0 or i == (n_iter-1):\n",

" print(\"Interation: #%i\" % (i))\n",

" print(\"Input: \\n\" + str(X) )\n",

" print(\"Actual Output: \\n\" + str(y)) \n",

" print(\"Predicted Output: \\n\" + str(NN.forward(X)) )\n",

" print(\"Mean square error: \\n\" + str(mse[i])) # mean sum squared error\n",

" print(\"\\n\")\n",

" NN.train(X, y)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Observe how the mean sum squared error goes down with each iteration. That's the network learning via gradient descent! \n",

"\n",

"We can also plot the gradient descent below (notice how quickly it converges):"

]

},

{

"cell_type": "code",

"execution_count": 13,

"metadata": {},

"outputs": [

{

"data": {

"image/png": "iVBORw0KGgoAAAANSUhEUgAAAYsAAAEKCAYAAADjDHn2AAAABHNCSVQICAgIfAhkiAAAAAlwSFlzAAALEgAACxIB0t1+/AAAADl0RVh0U29mdHdhcmUAbWF0cGxvdGxpYiB2ZXJzaW9uIDIuMi4yLCBodHRwOi8vbWF0cGxvdGxpYi5vcmcvhp/UCwAAIABJREFUeJzt3Xt0XOV57/HvMzOa0V22JdkWvuB7wNyJIRcgJCXJ4ZLETUsSSE7LaTiHpAvSprSrJSerOUm6Tk/pJeRSelJOQ0PTBEhzdRoSSCEJKSSAcWyDMQZhLr5bsi3LkqzLjJ7zx96yR+MZjWxrZo81v89aWrPn3XtmHm+N56d3v3vebe6OiIjIRGJRFyAiIpVPYSEiIkUpLEREpCiFhYiIFKWwEBGRohQWIiJSlMJCRESKUliIiEhRCgsRESkqEXUBU6Wtrc0XLVoUdRkiIqeUp59+utvd24ttN23CYtGiRaxduzbqMkRETilm9upkttNhKBERKUphISIiRSksRESkKIWFiIgUpbAQEZGiFBYiIlKUwkJERIqq+rDY2XOYzz20hZe7+6MuRUSkYlV9WOzvH+aLj3Ty4p5DUZciIlKxqj4sGlLBl9j7h9MRVyIiUrkUFqk4AH1DmYgrERGpXFUfFo1jPYsh9SxERAqp+rCoq4kTM4WFiMhEqj4szIyGZII+hYWISEFVHxYQDHKrZyEiUpjCgmCQu18D3CIiBSksCAa5dRhKRKQwhQU6DCUiUozCgiAs1LMQESlMYUFwGErf4BYRKUxhgQa4RUSKUVigw1AiIsUoLICmVILh9CjD6dGoSxERqUgKC7JmnlXvQkQkL4UFR8NCh6JERPIraViY2ZVmtsXMOs3stjzrU2Z2f7j+CTNbFLbXmNk9ZvaMmW02s0+Uss5GXdNCRGRCJQsLM4sDdwJXASuB681sZc5mNwIH3H0ZcAdwe9j+PiDl7ucArwc+MhYkpaDDUCIiEytlz+JioNPdt7r7MHAfsDpnm9XAPeHyt4ArzMwABxrMLAHUAcNAb6kKbdQFkEREJlTKsJgHbMu6vz1sy7uNu6eBg0ArQXD0A7uA14C/dff9pSpUPQsRkYmVMiwsT5tPcpuLgQxwGrAY+GMzW3LMC5jdZGZrzWxtV1fXCRfakNQAt4jIREoZFtuBBVn35wM7C20THnJqAfYDHwR+7O4j7r4XeAxYlfsC7n6Xu69y91Xt7e0nXKgurSoiMrFShsVTwHIzW2xmSeA6YE3ONmuAG8Lla4FH3N0JDj39hgUagDcCz5eqUB2GEhGZWMnCIhyDuAV4ENgMfNPdN5nZZ83sPeFmXwFazawTuBUYO732TqAReJYgdP7Z3TeWqtZkIkYyHtMAt4hIAYlSPrm7PwA8kNP2qazlQYLTZHMf15evvZSCyQTVsxARyUff4A7pAkgiIoUpLEK6tKqISGEKi1CDLoAkIlKQwiIUXNNCA9wiIvkoLEJNtQkODY5EXYaISEVSWIRa6mroPaywEBHJR2ERaqmr4eDhEYLvBIqISDaFRailroaRjDMwrHELEZFcCotQS10NAAd1KEpE5BgKi9AMhYWISEEKi5B6FiIihSksQs1hWPQMKCxERHIpLEJjPQudPisiciyFRWhGvQ5DiYgUorAINaYSxGOmsBARyUNhETIzmmsTCgsRkTwUFlla6mroUViIiBxDYZFlbMoPEREZT2GRpaU+qbAQEclDYZFFM8+KiOSnsMjSUpegZ2A46jJERCqOwiJLS10NvYNpTVMuIpJDYZGlpa6GzKjTN6RrcYuIZFNYZJlRlwT0LW4RkVwKiyzNmnlWRCQvhUWWI9OUa+ZZEZFxFBZZdE0LEZH8FBZZZjUEYxb7dfqsiMg4CossY2Gxr09hISKSTWGRJZmI0VybYF/fUNSliIhUFIVFjrbGFN396lmIiGRTWORobUyqZyEikkNhkaO1IaUxCxGRHAqLHK
2NSfbpMJSIyDgKixytjSkODAyTzoxGXYqISMVQWORoa0ziDgf0LW4RkSNKGhZmdqWZbTGzTjO7Lc/6lJndH65/wswWZa0718x+aWabzOwZM6stZa1jWhtSAOzr1yC3iMiYkoWFmcWBO4GrgJXA9Wa2MmezG4ED7r4MuAO4PXxsAvhX4KPufhbwVqAsf+q3NuqLeSIiuUrZs7gY6HT3re4+DNwHrM7ZZjVwT7j8LeAKMzPgncBGd98A4O773D1TwlqPaAvDolunz4qIHFHKsJgHbMu6vz1sy7uNu6eBg0ArsAJwM3vQzNaZ2Z+WsM5xjhyGUs9CROSIRAmf2/K05V6vtNA2CeBS4CJgAHjYzJ5294fHPdjsJuAmgIULF550wRDMPBuPmcYsRESylLJnsR1YkHV/PrCz0DbhOEULsD9s/7m7d7v7APAAcGHuC7j7Xe6+yt1Xtbe3T0nRsZgxqyGpnoWISJZShsVTwHIzW2xmSeA6YE3ONmuAG8Lla4FH3N2BB4Fzzaw+DJHLgedKWOs4rQ1JuhUWIiJHlOwwlLunzewWgg/+OHC3u28ys88Ca919DfAV4Gtm1knQo7gufOwBM/scQeA48IC7/7BUteZqa0zpMJSISJZSjlng7g8QHELKbvtU1vIg8L4Cj/1XgtNny66tMcmrr/VH8dIiIhVJ3+DOY3ZzLXt6hwiOiImIiMIij7nNtQynR+nRlB8iIoDCIq+5LcHMIrsODkZciYhIZVBY5DEWFnt6FRYiIqCwyGtus3oWIiLZFBZ5tDeliBnsVs9CRARQWORVE4/R1phi98HDUZciIlIRFBYFdLTUsrtXX8wTEQGFRUFzmmvVsxARCSksCuhoqWW3BrhFRACFRUFzW+roHUzTP5SOuhQRkcgpLAqY2xJcBElnRImIKCwKmttcB8AeHYoSEVFYFKIpP0REjlJYFNARhsWOHp0RJSKisCigtibOnOYUr+0fiLoUEZHIKSwmsHBWvcJCRASFxYQWzKpnu8JCRERhMZGFs+rZ1TvIUDoTdSkiIpFSWExg4ax63GHHAQ1yi0h1mzAszOy/Zi1fkrPullIVVSkWzKoH0LiFiFS9Yj2LW7OWv5Sz7sNTXEvFWRiGxTaFhYhUuWJhYQWW892fdtobU6QSMbbpMJSIVLliYeEFlvPdn3ZiMWPBrHpe26eehYhUt0SR9WeY2UaCXsTScJnw/pKSVlYhFsys05iFiFS9YmFxZlmqqGALZ9Xz1CsHcHfMpv2RNxGRvCYMC3d/Nfu+mbUCbwFec/enS1lYpVg6u5G+oTR7Dw0xp7k26nJERCJR7NTZfzezs8PlDuBZgrOgvmZmHy9DfZFb2t4IQOfevogrERGJTrEB7sXu/my4/HvAT9z93cAbqIJTZwGWzVZYiIgUC4uRrOUrgAcA3P0QMFqqoirJ7KYUTakEL3UpLESkehUb4N5mZh8DtgMXAj8GMLM6oKbEtVUEM2Pp7Eb1LESkqhXrWdwInAX8N+AD7t4Ttr8R+OcS1lVRlrYrLESkuhU7G2ov8NE87T8FflqqoirNstmNfHvddnoHR2iurYoOlYjIOBOGhZmtmWi9u79nasupTGOD3C/t7eOChTMjrkZEpPyKjVm8CdgG3As8QRXMB5XP0vYGIDgjSmEhItWoWFjMBd4BXA98EPghcK+7byp1YZVk4ax6UokYW3YfiroUEZFITDjA7e4Zd/+xu99AMKjdCfwsPEOqaiTiMc6Y28Rzu3qjLkVEJBJFr5RnZikz+y3gX4GbgS8C35nMk5vZlWa2xcw6zey2As99f7j+CTNblLN+oZn1mdmfTOb1Smnlac08t6sX92k/2a6IyDGKTfdxD/A4wXcsPuPuF7n7X7j7jmJPbGZx4E7gKmAlcL2ZrczZ7EbggLsvA+4Abs9Zfwfwo0n9S0ps5Wkt9AyMsPPgYNSliIiUXbGexe8AK4A/BB43s97w55CZFTsmczHQ6e5b3X0YuA9YnbPNauCecP
lbwBUWTu1qZr8JbAUqYnxkZUczAM/t1KEoEak+xcYsYu7eFP40Z/00uXtzkeeeR3Am1ZjtYVvebdw9DRwEWs2sAfgz4DMTvYCZ3WRma81sbVdXV5FyTs6ZHU2YwaadB0v6OiIilajomMVJyHeabe4B/0LbfAa4w90n/Nq0u9/l7qvcfVV7e/sJljk59ckEi9sa1LMQkapU7NTZk7EdWJB1fz6ws8A2280sAbQA+wlmtb3WzP4amAGMmtmgu/99Cest6qzTWlj36oEoSxARiUQpexZPAcvNbLGZJYHrgNxvhK8BbgiXrwUe8cBl7r7I3RcBnwf+MuqgADj7tGZ29BxmX99Q1KWIiJRVycIiHIO4BXgQ2Ax80903mdlnzWxsmpCvEIxRdAK3AsecXltJxr69/evXeopsKSIyvZTyMBTu/gDhNTCy2j6VtTwIvK/Ic3y6JMWdgHPnt5CIGeteO8DbV86JuhwRkbIp5WGoaae2Js7K05pZ95rGLUSkuigsjtOFC2eyYdtB0pmquFCgiAigsDhuFyycweGRDFv2aFJBEakeCovjdGE4yL1Og9wiUkUUFsdp/sw65jSnePLl/VGXIiJSNgqL42RmvHlpG798qVsz0IpI1VBYnIA3LW2lu2+YF/dOOBuJiMi0obA4AW9e2grA453dEVciIlIeCosTMH9mPQtn1fP4S/uiLkVEpCwUFifozUtb+dXWfWRGNW4hItOfwuIEXbKsjd7BNOu36dvcIjL9KSxO0FtWtBOPGQ9v3ht1KSIiJaewOEEtdTVctGgmjzyvsBCR6U9hcRKuOGMOz+8+xPYDA1GXIiJSUgqLk/AbZ84GUO9CRKY9hcVJWNLWwOK2Bh7ctDvqUkRESkphcRLMjGvO6eCXL+2jW5daFZFpTGFxkq45t4NRhx89q96FiExfCouTdMbcJpa2N/DvG3ZGXYqISMkoLE6SmXHNuafx5Cv72X1wMOpyRERKQmExBd57wTzc4Tu/3h51KSIiJaGwmAKL2xq4eNEs/m3tdl3jQkSmJYXFFHn/RQt4ubufta9qrigRmX4UFlPk6nPm0phKcN+T26IuRURkyikspkh9MsF7L5jHDzbuZJ++cyEi04zCYgrd8ObTGU6Pcu+Tr0VdiojIlFJYTKFls5u4bHkbX/vVq4xkRqMuR0RkyigsptiHL1nMnt4hvr9eX9ITkelDYTHF3vq6ds6Y28Q//KxTl1wVkWlDYTHFzIyb37aMrV39/FjzRYnINKGwKIGrz+lgSVsDX3z4RfUuRGRaUFiUQDxm/NE7VrBlzyG+v35H1OWIiJw0hUWJXHNOB2fPa+bvHnqBoXQm6nJERE6KwqJEYjHjz648gx09h7n7P1+JuhwRkZOisCihy5a38/Yz5/ClR17U9OUickpTWJTYn7/rTNKjzv9+YHPUpYiInLCShoWZXWlmW8ys08xuy7M+ZWb3h+ufMLNFYfs7zOxpM3smvP2NUtZZSqe3NvD7ly/lBxt28sjze6IuR0TkhJQsLMwsDtwJXAWsBK43s5U5m90IHHD3ZcAdwO1hezfwbnc/B7gB+Fqp6iyHm9+2jBVzGvnkd5+ld3Ak6nJERI5bKXsWFwOd7r7V3YeB+4DVOdusBu4Jl78FXGFm5u6/dvex+TI2AbVmliphrSWVTMT462vPY++hIT753Wd1gSQROeWUMizmAdkXd9getuXdxt3TwEGgNWeb3wZ+7e7HzPttZjeZ2VozW9vV1TVlhZfC+QtmcOs7VvCDDTu5/yld80JETi2lDAvL05b7J/WE25jZWQSHpj6S7wXc/S53X+Xuq9rb20+40HL5/cuXctnyNv7Xmk1s2X0o6nJERCatlGGxHViQdX8+kDsV65FtzCwBtAD7w/vzge8Cv+vuL5WwzrKJxYzPvf98mmpruPkb6xgYTkddkojIpJQyLJ4ClpvZYjNLAtcBa3K2WUMwgA1wLfCIu7uZzQB+CHzC3R8rYY1l196U4gvXnc9LXX
384X3rNXeUiJwSShYW4RjELcCDwGbgm+6+ycw+a2bvCTf7CtBqZp3ArcDY6bW3AMuAPzez9eHP7FLVWm6XLGvj0+8+i588t4fP/GCTBrxFpOLZdPmgWrVqla9duzbqMo7LXz6wmbse3conrjqDj1y+NOpyRKQKmdnT7r6q2HaJchQj+d125Rns7DnM//nR88xqSPK+VQuKP0hEJAIKiwjFYsbfvu88egZG+NNvbyQ96lx/8cKoyxIROYbmhopYbU2cf7phFZevaOcT33mGf/nlK1GXJCJyDIVFBaitifOPv/N63rFyDp/6/ib+/pEXNegtIhVFYVEhUok4//ChC3nvBfP424de4I+/uUEXTRKRiqExiwpSE4/xufefx5K2Bv7uJy/w6v4B/vF3Xk9b4yk7LZaITBPqWVQYM+NjVyznzg9eyLM7DnL1F37B4y91R12WiFQ5hUWFuubcDr538yU01Sb40D89wece2kI6Mxp1WSJSpRQWFezMjmZ+8LFLufbC+XzxkU5++8u/1ASEIhIJhUWFq08m+Jv3nceXrr+AbfsHeNeXfsEdP3lBg98iUlYKi1PEu887jf+49XKuOaeDLzz8Ild9/hc8vHmPTrEVkbJQWJxCZjUk+fx1F/DV37sIDG68Zy2/e/eTOjQlIiWnsDgFvfV1s3nw42/hz9+1kg3berjyC4/yB/f+mq1dfVGXJiLTlGadPcUd6B/mrl9s5auPvcJQOsPq8+fxPy5bwsrTmqMuTUROAZOddVZhMU10HRriH3/+Et948jUGhjNcsqyV/37pEi5f0U4slu/qtSIiCouqdXBghHufeo2vPvYKu3sHWT67kQ++YSHvvWAeM+qTUZcnIhVGYVHlhtOjPPDMLu5+7GU2bj9IMh7jnWfN4QMXLeCSpW3qbYgIoLCQLM/t7OWba7fxvfU76BkY4bSWWq4+p4Nrzu3g/AUzMFNwiFQrhYUcY3Akw0+e28P31+/g0Re6Gc6MMm9GHVefM5d3njWXCxbMIBHXCXIi1URhIRPqHRzhP57bww837uLRF7sYyTjNtQnesqKdt75uNpevaKe9SbPdikx3CguZtN7BER57sZufbtnLT7d00XVoCIBz57dw6bI23rCkldefPpPGlGa0F5luFBZyQtydTTt7+fkLXfz0+b2s39ZDetSJx4yzT2vmDUtauXjRLFYtmqmzq0SmAYWFTImB4TTrXu3hiZf38cTW/azf1sNwOFX64rYGzpvfwnkLZnDeghms7GimtiYeccUicjwmGxY6riATqk8muHR5G5cubwOCQfL123pY99oBNm47yBMv7+d763cCkIgZK+Y0cWZHM2d2NHHG3GbO6GjSlf5EpgGFhRyX2po4b1zSyhuXtB5p29M7yIZtPazf1sOmnb38Z2cX3163/cj6tsZUGB5NLJvdyJL2Rpa0NTCrIanTdkVOEToMJSWxv3+Y53f1snn3IZ7f1cvzuw+xZc8hhtNHr/bXXJsIgqO9gSVtDSxpb2RxWwOLWhuoS+pwlkg56DCURGpWQ5I3L2vjzcvajrRlRp0dBw7zUncfW7v62drVx8vd/TzeuY/vrNsx7vFtjUnmzaxn/sw6FoS382fWsWBWPfNm1GlsRKTMFBZSNvGYsbC1noWt9bztdePX9Q+lebm7n63d/WzbP8C2/QNsP3CYTTsO8tCm3YxkxveA2xpTzG1JMbe5ljnNtcFtS3Db0RIsN6USOswlMkUUFlIRGlIJzp7XwtnzWo5Zlxl19h4aZPuBw0dCZGfPYXb3Bm1Pv3qAAwMjxzyuPhlnbnMtbU0p2htTtDYmacu6bWtM0tqQoq0pRUMyrmARmYDCQipePGZ0tNTR0VLHRYtm5d1mcCTDnt5Bdh8cZHfvYLg8xJ7eQbr6hti8u5d9fcMcPHxsqACkErEjATKrIcmM+iQz6muYUZdkZkMNLXU1zAzbZtYnaamvUc9FqorCQqaF2po4p7c2cHprw4TbDadH2d8/THffEN
19Q+zrC5b39Q/TfWiI7v5huvqGeHFvHz0DI/QNpQs+VzxmzKirCUKlPklzbYKm2hqasm5z2xpTibC9hsbaBHHN/iunCIWFVJVkIsbcllrmttROavuRzCgHD4/QMzBMz8AIBwaOLvccDm8HRjgwEITM1u5+Dg2mOTQ4csw4Sz4NyXhWmCRoSCVoSCaoT8XH3ybjNKTC26z2hlSc+uTRx9RoIkgpEYWFyARq4mOHp47/i4WDI5kjwRHcHl3uzdN2aCi43dM7SP9QhoHhNP3DmXGnGxeTjMeOBEldMk5dTZzamhi1NXFqa8bfr6uJk8rTduz2QVv29jVx0yG4KqOwECmRsQ/ck529N50ZZWAkw8BQhv7h9NHb4fTRUMkKl8PDGfqH0vQPpxkcGWVwJEPfUJruvmEGRzIMjmQ4HN4Ojkw+iLLFLOilpRJxkokYyXiMVE14m4gVXDeuPRFsO7b90e3iR9Yls9bVxGPUxC28DZYT8bF1RjymACslhYVIhUvEYzTHYzTX1kz5c7s7Q+nRrAAZzQmT3LbRI+3D6VGGwp9gOWgbzowyNDLK4ZEMBw+PHG3P3jYzelw9pslKxmMkcgKlJmwruC4WI5kYv5yIhdskjJpwOXisEY/FSMSMRNxIxI7ej8fGr48f2SYWLOe5Hzwm6348eL2x+5V0RUuFhUgVM7MjPaAZZX5td2c4Mz5Exi1nMgyNHA2WdMYZyYyGP056NGgfyTjpsfZRZyQ9fjk9GrxOOnzc2HMMjozSN5hmOGzLXZ/OBI8byYwyGtFEF2aMC494GDbZYRSPGVecMZtPXrOypLWUNCzM7ErgC0Ac+Cd3/6uc9SngX4DXA/uAD7j7K+G6TwA3AhngD9z9wVLWKiLlZWakEnFSiThNURdTRGY0CJHMqJMeDcJpbDl3Xb77Y48pdn/cY8JAzHs/Ez4mvD+3pa7k+6BkYWFmceBO4B3AduApM1vj7s9lbXYjcMDdl5nZdcDtwAfMbCVwHXAWcBrwH2a2wt0zpapXRKSQeMyIx6p7iplSnmd3MdDp7lvdfRi4D1ids81q4J5w+VvAFRaMUK0G7nP3IXd/GegMn09ERCJQyrCYB2zLur89bMu7jbungYNA6yQfKyIiZVLKsMg3jJ87TFRom8k8FjO7yczWmtnarq6uEyhRREQmo5RhsR1YkHV/PrCz0DZmlgBagP2TfCzufpe7r3L3Ve3t7VNYuoiIZCtlWDwFLDezxWaWJBiwXpOzzRrghnD5WuARD67GtAa4zsxSZrYYWA48WcJaRURkAiU7G8rd02Z2C/Agwamzd7v7JjP7LLDW3dcAXwG+ZmadBD2K68LHbjKzbwLPAWngZp0JJSISHV1WVUSkik32sqqaolJERIqaNj0LM+sCXj2Jp2gDuqeonKmkuo6P6jp+lVqb6jo+J1rX6e5e9AyhaRMWJ8vM1k6mK1Zuquv4qK7jV6m1qa7jU+q6dBhKRESKUliIiEhRCouj7oq6gAJU1/FRXcevUmtTXcenpHVpzEJERIpSz0JERIqq+rAwsyvNbIuZdZrZbRHWscDMfmpmm81sk5n9Ydj+aTPbYWbrw5+rI6rvFTN7Jqxhbdg2y8x+YmYvhrczy1zT67L2y3oz6zWzj0exz8zsbjPba2bPZrXl3T8W+GL4nttoZheWua6/MbPnw9f+rpnNCNsXmdnhrP325VLVNUFtBX93ZvaJcJ9tMbP/Uua67s+q6RUzWx+2l22fTfAZUZ73mbtX7Q/BNCQvAUuAJLABWBlRLR3AheFyE/ACsBL4NPAnFbCvXgHactr+GrgtXL4NuD3i3+Vu4PQo9hnwFuBC4Nli+we4GvgRwezKbwSeKHNd7wQS4fLtWXUtyt4uon2W93cX/l/YAKSAxeH/23i56spZ/3fAp8q9zyb4jCjL+6zaexaTuUBTWbj7LndfFy4fAjZT+dfwyL541T3Ab0ZYyxXAS+5+Ml/MPGHu/ijB/GbZCu2f1cC/eOBXwAwz6y
hXXe7+kAfXjwH4FcGszmVXYJ8VUrYLok1Ul5kZ8H7g3lK89kQm+Iwoy/us2sOiIi+yZGaLgAuAJ8KmW8Ju5N3lPtSTxYGHzOxpM7spbJvj7rsgeCMDsyOqDYJJKLP/A1fCPiu0fyrpffdhgr8+xyw2s1+b2c/N7LKIasr3u6uUfXYZsMfdX8xqK/s+y/mMKMv7rNrDYlIXWSonM2sEvg183N17gf8LLAXOB3YRdIGjcIm7XwhcBdxsZm+JqI5jWDAF/nuAfwubKmWfFVIR7zsz+yTBrM5fD5t2AQvd/QLgVuAbZtZc5rIK/e4qYp8B1zP+j5Ky77M8nxEFN83TdsL7rNrDYlIXWSoXM6sheBN83d2/A+Due9w94+6jwP8jomuRu/vO8HYv8N2wjj1j3drwdm8UtREE2Dp33xPWWBH7jML7J/L3nZndALwL+JCHB7jDQzz7wuWnCcYFVpSzrgl+d5WwzxLAbwH3j7WVe5/l+4ygTO+zag+LyVygqSzCY6FfATa7++ey2rOPMb4XeDb3sWWorcHMmsaWCQZIn2X8xatuAL5f7tpC4/7aq4R9Fiq0f9YAvxuerfJG4ODYYYRyMLMrgT8D3uPuA1nt7WYWD5eXEFx0bGu56gpft9DvrhIuiPZ24Hl33z7WUM59VugzgnK9z8oxil/JPwRnDLxA8BfBJyOs41KCLuJGYH34czXwNeCZsH0N0BFBbUsIzkTZAGwa209AK/Aw8GJ4OyuC2uqBfUBLVlvZ9xlBWO0CRgj+orux0P4hODxwZ/ieewZYVea6OgmOZY+9z74cbvvb4e93A7AOeHcE+6zg7w74ZLjPtgBXlbOusP2rwEdzti3bPpvgM6Is7zN9g1tERIqq9sNQIiIyCQoLEREpSmEhIiJFKSxERKQohYWIiBSlsBDJw8weD28XmdkHp/i5/2e+1xKpZDp1VmQCZvZWgllQ33Ucj4m7e2aC9X3u3jgV9YmUi3oWInmYWV+4+FfAZeG1Cv7IzOIWXA/iqXCyu4+E2781vNbANwi+AIWZfS+ceHHT2OSLZvZXQF34fF/Pfq3wm7Z/Y2bPWnDtkA9kPffPzOxbFlyH4uvht3lFyiYRdQEiFe42snoW4Yf+QXe/yMxSwGOrCAvwAAABXUlEQVRm9lC47cXA2R5MoQ3wYXffb2Z1wFNm9m13v83MbnH38/O81m8RTKB3HtAWPubRcN0FwFkEc/s8BlwC/OfU/3NF8lPPQuT4vJNgvp31BNNDtxLMBwTwZFZQAPyBmW0guGbEgqztCrkUuNeDifT2AD8HLsp67u0eTLC3nuCiOyJlo56FyPEx4GPu/uC4xmBsoz/n/tuBN7n7gJn9DKidxHMXMpS1nEH/d6XM1LMQmdghgktYjnkQ+P1wqmjMbEU4E2+uFuBAGBRnEFzWcszI2ONzPAp8IBwXaSe4vGe5Z1YVyUt/nYhMbCOQDg8nfRX4AsEhoHXhIHMX+S8n+2Pgo2a2kWCW1F9lrbsL2Ghm69z9Q1nt3wXeRDCDqQN/6u67w7ARiZROnRURkaJ0GEpERIpSWIiISFEKCxERKUphISIiRSksRESkKIWFiIgUpbAQEZGiFBYiIlLU/wfEHQmT6v+SugAAAABJRU5ErkJggg==\n",

"text/plain": [

""

]

},

"metadata": {

"needs_background": "light"

},

"output_type": "display_data"

}

],

"source": [

"import matplotlib.pyplot as plt\n",

"%matplotlib inline\n",

"\n",

"fig, ax = plt.subplots()\n",

"ax.plot(range(n_iter), mse)\n",

"\n",

"ax.set(xlabel='iteration', \n",

" ylabel='MSE')\n",

"\n",

"plt.show()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Testing our network with new data (validation):\n",

"How well does our network perform on unseen data? To find out, let's pretend we split our hours studying/sleeping data into training and testing samples. The example above trained the network on only a few samples (N=3). Let's now use a separate \"testing sample\" to validate our neural network model."

]

},

{

"cell_type": "code",

"execution_count": 14,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"predicted scores=\n",

"[0.86, 0.83, 0.84, 0.87, 0.85, 0.86]\n",

"actual scores=\n",

"[0.9, 0.88, 0.91, 0.95, 0.9, 0.92]\n"

]

}

],

"source": [

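"# Held-out test inputs and their actual scores\n",

"X_test = np.array(([2, 8], [1, 4], [2, 4], [3, 8], [1, 8], [2, 9]), dtype=float)\n",

"X_test = X_test/X_max  # scale with X_max, defined earlier in the notebook\n",

"y_actual = np.array(([.9], [.88], [.91], [.95], [.90], [.92]), dtype=float)\n",

"\n",

"# Predict scores for the test inputs\n",

"y_pred = NN.test(X_test)\n",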

"\n",

"print(\"predicted scores=\")\n",

"print([round(y_pred[i,0],2) for i in range(len(y_pred))])\n",

"\n",

"print(\"actual scores=\")\n",

"print([y_actual[j,0] for j in range(len(y_actual))])"

]

},

{

"cell_type": "code",

"execution_count": 15,

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"accuracy = 79.2%\n"

]

}

],

"source": [

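"from scipy.stats import pearsonr\n",

"\n",

"# Pearson correlation between predicted and actual test scores, reported here as 'accuracy'.\n",

"# pearsonr expects 1-D inputs, so flatten the (n, 1) column vectors first.\n",

"r, p_value = pearsonr(y_pred.ravel(), y_actual.ravel())\n",

"print('accuracy = %03.1f%%' % (r*100))"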

]

},
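
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The correlation reported above as accuracy only measures how linearly related the predicted and actual scores are; it does not say how far off the predictions are. The cell below is a minimal sketch, not part of the original analysis, that reports the mean squared error, mean absolute error, and mean signed error for the test sample. The helper `regression_errors` and the `*_demo` arrays are hypothetical names introduced for illustration, and the arrays simply hold the rounded scores printed above. In the live notebook, `y_pred.ravel()` and `y_actual.ravel()` could be passed instead."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import numpy as np\n",

"\n",

"# Assumption: values copied from the rounded scores printed above\n",

"pred_demo = np.array([0.86, 0.83, 0.84, 0.87, 0.85, 0.86])\n",

"actual_demo = np.array([0.90, 0.88, 0.91, 0.95, 0.90, 0.92])\n",

"\n",

"def regression_errors(pred, actual):\n",

"    # Return (MSE, MAE, mean signed error) for two 1-D arrays of scores\n",

"    diff = np.asarray(pred, dtype=float) - np.asarray(actual, dtype=float)\n",

"    return np.mean(diff**2), np.mean(np.abs(diff)), np.mean(diff)\n",

"\n",

"test_mse, test_mae, test_bias = regression_errors(pred_demo, actual_demo)\n",

"print('test MSE  = %.4f' % test_mse)\n",

"print('test MAE  = %.4f' % test_mae)\n",

"# A negative mean signed error means the network under-predicts on average\n",

"print('mean signed error = %+.4f' % test_bias)"

]

},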

{

"cell_type": "code",

"execution_count": 16,

"metadata": {},

"outputs": [

{

"data": {

"image/png": "iVBORw0KGgoAAAANSUhEUgAAAZEAAAEKCAYAAADTgGjXAAAABHNCSVQICAgIfAhkiAAAAAlwSFlzAAALEgAACxIB0t1+/AAAADl0RVh0U29mdHdhcmUAbWF0cGxvdGxpYiB2ZXJzaW9uIDIuMi4yLCBodHRwOi8vbWF0cGxvdGxpYi5vcmcvhp/UCwAAGVtJREFUeJzt3Xu0nXV95/H3hwAlIMglqWMImOAgiJVKPaKOFyheoHaUmxcualFX0SreRjKS6lpjsRYrdM1ydWgd7AJFWxlUzMDoEJwUZJbiyIkhhItRRJQktAQxIJJKEr7zx34O2RySc3aek51zds77tdZeeZ7f8zx7f3/ZOfmc5/Z7UlVIktTGLpNdgCRpcBkikqTWDBFJUmuGiCSpNUNEktSaISJJas0QkSS1ZohIklozRCRJre062QVsL7Nmzap58+ZNdhmSNFCWLl36QFXNbrv9ThMi8+bNY3h4eLLLkKSBkuTnE9new1mSpNYMEUlSa4aIJKk1Q0SS1JohIklqzRCRJLVmiEiSWjNEJEmtGSKSpNYMEUlSa4aIJKk1Q0SS1FpfQyTJCUlWJrkryXlbWP6sJEuS3JrkhiRzu5YdnOS6JHcmuSPJvH7WKknadn0LkSQzgIuBPwKOAE5PcsSo1S4CLq+qI4HzgQu6ll0OXFhVzwWOBu7vV62SpHb6uSdyNHBXVd1dVY8BVwAnjlrnCGBJM339yPImbHatqm8DVNUjVfVoH2uVJLXQzxA5ELi3a35V09ZtOXBqM30ysHeSA4DnAOuSXJVkWZILmz0bSdIU0s8QyRbaatT8ucAxSZYBxwCrgY10Hpb1imb5i4BDgLOe8gHJ2UmGkwyvXbt2O5YuSepFP0NkFXBQ1/xcYE33ClW1pqpOqaqjgI81bQ812y5rDoVtBBYBfzD6A6rqkqoaqqqh2bNbP91RktRSP0PkZuDQJPOT7A6cBlzdvUKSWUlGalgIXNq17X5JRpLhOOCOPtYqSWqhbyHS7EGcAywG7gSurKrbk5yf5A3NascCK5P8GHgG8Klm2010DmUtSbKCzqGxz/erVklSO6kafZpiMA0NDdXw8PBklyFJAyXJ0qoaaru9d6xLklozRCRJrRkikqTWDBFJUmuGiCSpNUNEktSaISJJas0QkSS1ZohIklozRCRJrRkikqTWDBFJUmu7TnYBkjQVLFq2mgsXr2TNuvXM2XcmC44/jJOOGv0wVo1miEia9hYtW83Cq1awfsMmAFavW8/Cq1YAGCTj8HCWpGnvwsUrnwiQEes3bOLCxSsnqaLBYYhImvbWrFu/Te3azBCRNO3N2XfmNrVrM0NE0rS34PjDmLnbjCe1zdxtBguOP2ySKhocnliXNO2NnDz36qxtZ4hIEp0gMTS2nYezJEmtGSKSpNYMEUlSa4aIJKk1Q0SS1JohIklqzRCRJLVmiEiSWjNEJEmtGSKSpNYMEUlSa4aIJKm1voZIkhOSrExyV5LztrD8WUmWJLk1yQ1J5o5avk+S1Un+Wz/rlCS107cQSTIDuBj4I+AI4PQkR4xa7SLg8qo6EjgfuGDU8k8C3+lXjZKkiennnsjRwF1VdXdVPQZcAZw4ap0jgCXN9PXdy5O8EHgGcF0fa5QkTUA/Q+RA4N6u+VVNW7flwKnN9MnA3kkOSLIL8DfAgrE+IMnZSYaTDK9du3Y7lS1J6lU/QyRbaKtR8+cCxyRZBhwDrAY2Au8FvlVV9zKGqrqkqoaqamj27Nnbo2ZJ0jbo55MNVwEHdc3PBdZ0r1BVa4BTAJI8DTi1qh5K8lLgFUneCzwN2D3JI1X1lJPzkqTJ088QuRk4NMl8OnsYpwFndK+QZBbwYFU9DiwELgWoqjO71jkLGDJAJGnq6dvhrKraCJwDLAbuBK6sqtuTnJ/kDc1qxwIrk/yYzkn0T/WrHknS9peq0acpBtPQ0FANDw9PdhmSNFCSLK2qobbbe8e6JKk1Q0
SS1JohIklqzRCRJLVmiEiSWjNEJEmtGSKSpNZ6CpEkM5Mc1u9iJEmDZdwQSfJ64Bbg2mb+BUmu7ndhkqSpr5c9kU/QeTbIOoCqugWY17+SJEmDopcQ2VhVD/W9EknSwOllFN/bkpwBzEhyKPAB4Hv9LUuSNAh62RN5P/A84LfAPwEPAR/qZ1GSpMEw5p5IkhnAX1TVAuBjO6YkSdKgGHNPpKo2AS/cQbVIkgZML+dEljWX9H4V+M1IY1Vd1beqJEkDoZcQ2R/4JXBcV1sBhogkTXPjhkhVvWNHFCJJGjy93LE+N8k3ktyf5F+TfD3J3B1RnCRpauvlEt/LgKuBOcCBwDVNmyRpmuslRGZX1WVVtbF5fQGY3ee6JEkDoJcQeSDJW5PMaF5vpXOiXZI0zfUSIu8E3gz8C3Af8MamTZI0zfVyddYvgDfsgFokSQOml6uzvphk3675/ZJc2t+yJEmDoJfDWUdW1bqRmar6FXBU/0qSJA2KXkJklyT7jcwk2Z/e7nSXJO3kegmDvwG+l+RrzfybgE/1ryRJ0qDo5cT65UmG6YydFeCUqrqj75VJkqa8cUMkybOBn1bVHUmOBV6dZE33eRJJ0vTUyzmRrwObkvx74B+A+XSecChJmuZ6CZHHq2ojcArw2ar6MPDMXt48yQlJVia5K8l5W1j+rCRLktya5IaRgR2TvCDJTUlub5a9ZVs6JUnaMXo5sb4hyenA24HXN227jbdR82jdi4HXAKuAm5NcPep8ykXA5VX1xSTHARcAbwMeBd5eVT9JMgdYmmSxh9Ck6WfRstVcuHgla9atZ86+M1lw/GGcdNSBk12WGr3sibwDeCnwqar6WZL5wJd72O5o4K6quruqHgOuAE4ctc4RwJJm+vqR5VX146r6STO9BrgfB32Upp1Fy1az8KoVrF63ngJWr1vPwqtWsGjZ6skuTY1xQ6Sq7qiqD1TVV5r5n1XVp3t47wOBe7vmVzVt3ZYDpzbTJwN7Jzmge4UkRwO7Az/t4TMl7UQuXLyS9Rs2Palt/YZNXLh45SRVpNF62RNpK1toq1Hz5wLHJFkGHAOsBjY+8QbJM4EvAe+oqsef8gHJ2UmGkwyvXbt2+1UuaUpYs279NrVrx+tniKwCDuqanwus6V6hqtZU1SlVdRTwsabtIYAk+wDfBD5eVd/f0gdU1SVVNVRVQ7Nne7RL2tnM2XfmNrVrx+tniNwMHJpkfpLdgdPoPCHxCUlmJRmpYSFwadO+O/ANOifdv9rHGiVNYQuOP4yZu814UtvM3Waw4PjDJqkijbbVq7OSXMNTDz89oarGHB6+qjYmOQdYDMwALq2q25OcDwxX1dXAscAFSQq4EXhfs/mbgVcCByQ5q2k7q6pu6alXknYKI1dheXXW1JWqLedEkmOayVOAf8fmK7JOB+6pqj/vf3m9GxoaquHh4ckuQ5IGSpKlVTXUdvut7olU1XeaD/hkVb2ya9E1SW5s+4GSpJ1HL+dEZic5ZGSmuU/Es9iSpJ7uWP8wcEOSu5v5ecC7+1aRJGlg9DIU/LVJDgUOb5p+VFW/7W9ZkqRB0Msz1vcEFgDnVNVy4OAk/7HvlUmSprxezolcBjxGZ/ws6NxE+Jd9q0iSNDB6CZFnV9VngA0AVbWeLQ9pIkmaZnoJkceSzKS58bB50qHnRCRJPV2d9QngWuCgJP8IvIzO8PCSpGmul6uzrkuyFHgJncNYH6yqB/pemSRpyuvl6qwlVfXLqvpmVf2vqnogyZLxtpMk7fzGGoBxD2BPYFaS/dh8Mn0fYM4OqE2SNMWNdTjr3cCH6ATGUjaHyMN0np0uTSqfvS1NvrEGYPws8Nkk76+qv92BNUnjGnn29sijU0eevQ0YJNIO1Mslvo8n2XdkJsl+Sd7bx5qkcfnsbWlq6CVE/rSq1o3MVNWvgD/tX0nS+Hz2tjQ19BIiuyR54g71JDOA3ftXkjQ+n70tTQ29hMhi4Mokr0pyHPAVOjcfSpPGZ29LU0
Mvd6x/lM6VWn9G5wqt64B/6GdR0nh89rY0NWz1GeuDxmesS9K269sz1pNcWVVvTrKCZvDFblV1ZNsPlSTtHMY6nPXB5k8fQCVJ2qKxbja8r/nz5zuuHEnSIBnrcNav2cJhrBFVtU9fKpIkDYyx9kT2BkhyPvAvwJfoXJ11JrD3DqlOkjSl9XKfyPFV9XdV9euqeriq/h44td+FSZKmvl5CZFOSM5PMSLJLkjOBTeNuJUna6fUSImcAbwb+tXm9qWmTJE1zvTwe9x7gxP6XIkkaNL08Hvc5SZYkua2ZPzLJx/tfmiRpquvlcNbngYXABoCquhU4rZ9FSZIGQy8hsmdV/WBU28Z+FCNJGiy9hMgDSZ5Nc+NhkjcC9/Xy5klOSLIyyV1JztvC8mc1h8puTXJDkrldy/4kyU+a15/02B9pylu0bDUv+/Q/M/+8b/KyT/8zi5atnuyStrvp0Ed19DIU/PuAS4DDk6wGfkbnhsMxNQ+vuhh4DbAKuDnJ1VV1R9dqFwGXV9UXm2eVXAC8Lcn+wH8BhuiE19Jm219tQ9+kKWc6PBt+OvRRm425J5JkF2Coql4NzAYOr6qX9zie1tHAXVV1d1U9BlzBU6/yOgJY0kxf37X8eODbVfVgExzfBk7oqUfSFDYdng0/HfqozcYMkap6HDinmf5NVf16G977QODervlVTVu35Wy++/1kYO8kB/S4LUnOTjKcZHjt2rXbUJo0OabDs+GnQx+1WS/nRL6d5NwkByXZf+TVw3bZQtvoAR3PBY5Jsgw4BlhN56R9L9tSVZdU1VBVDc2ePbuHkqTJNR2eDT8d+qjNegmRd9I5L3IjsLR59fIIwVXAQV3zc4E13StU1ZqqOqWqjgI+1rQ91Mu20iCaDs+Gnw591Ga93LE+v+V73wwcmmQ+nT2M0xg1XEqSWcCDzWGzhcClzaLFwF8l2a+Zf22zXBpo0+HZ8NOhj9ps3BBJsgfwXuDldA4p/V/gc1X1b2NtV1Ubk5xDJxBmAJdW1e3N0PLDVXU1cCxwQZKis6fzvmbbB5N8kk4QAZxfVQ+26aA01Zx01IE7/X+o06GP6kjVVp871VkhuRL4NfDlpul0YL+qelOfa9smQ0NDNTzcy1E2SdKIJEuraqjt9r3cJ3JYVf1+1/z1SZa3/UBJ0s6jlxPry5K8ZGQmyYuB7/avJEnSoOhlT+TFwNuT/KKZPxi4M8kKoKrqyL5VJ0ma0noJEe8UlyRtUS+X+PYyxIkkaRrq5ZyIJElbZIhIklozRCRJrRkikqTWDBFJUmuGiCSpNUNEktSaISJJas0QkSS1ZohIklozRCRJrRkikqTWDBFJUmuGiCSpNUNEktSaISJJas0QkSS1ZohIklozRCRJrRkikqTWDBFJUmuGiCSpNUNEktSaISJJas0QkSS1ZohIklrra4gkOSHJyiR3JTlvC8sPTnJ9kmVJbk3yuqZ9tyRfTLIiyZ1JFvazTklSO7v2642TzAAuBl4DrAJuTnJ1Vd3RtdrHgSur6u+THAF8C5gHvAn4nap6fpI9gTuSfKWq7ulXvYNk0bLVXLh4JWvWrWfOvjNZcPxhnHTUgZNdlqRpqJ97IkcDd1XV3VX1GHAFcOKodQrYp5l+OrCmq32vJLsCM4HHgIf7WOvAWLRsNQuvWsHqdespYPW69Sy8agWLlq2e7NIkTUP9DJEDgXu75lc1bd0+Abw1ySo6eyHvb9q/BvwGuA/4BXBRVT3Yx1oHxoWLV7J+w6Ynta3fsIkLF6+cpIokTWf9DJFsoa1GzZ8OfKGq5gKvA76UZBc6ezGbgDnAfOAjSQ55ygckZycZTjK8du3a7Vv9FLVm3fptapekfupniKwCDuqan8vmw1Uj3gVcCVBVNwF7ALOAM4Brq2pDVd0PfBcYGv0BVXVJVQ1V1dDs2bP70IWpZ86+M7epXZL6qZ8hcjNwaJL5SXYHTgOuHrXOL4BXASR5Lp0QWdu0H5eOvYCXAD/qY60DY8Hxhz
FztxlPapu52wwWHH/YJFUkaTrr29VZVbUxyTnAYmAGcGlV3Z7kfGC4qq4GPgJ8PsmH6RzqOquqKsnFwGXAbXQOi11WVbf2q9ZBMnIVlldnSZoKUjX6NMVgGhoaquHh4ckuQ5IGSpKlVfWU0wW98o51SVJrhogkqTVDRJLUmiEiSWrNEJEktWaISJJaM0QkSa0ZIpKk1gwRSVJrhogkqTVDRJLUmiEiSWrNEJEktWaISJJaM0QkSa0ZIpKk1gwRSVJrhogkqTVDRJLUmiEiSWrNEJEktWaISJJaM0QkSa0ZIpKk1gwRSVJrhogkqTVDRJLUmiEiSWrNEJEktWaISJJaM0QkSa0ZIpKk1gwRSVJrqarJrmG7SLIW+PkkljALeGASP397sR9Tz87SF/sx9cwC9qqq2W3fYKcJkcmWZLiqhia7jomyH1PPztIX+zH1bI++eDhLktSaISJJas0Q2X4umewCthP7MfXsLH2xH1PPhPviORFJUmvuiUiSWjNExpHkhCQrk9yV5LwtLD84yfVJliW5NcnrmvbXJFmaZEXz53E7vvqn1Nq2L0cnuaV5LU9y8o6v/kl1turHqOWPJDl3x1X9VBP4PuYlWd/1nXxux1f/pDpbfx9JjkxyU5Lbm5+VPXZs9U+pte13cmbX93FLkseTvGDH9+CJOtv2Y7ckX2y+izuTLBz3w6rK11ZewAzgp8AhwO7AcuCIUetcAvxZM30EcE8zfRQwp5n+PWD1APdlT2DXZvqZwP0j84PUj67lXwe+Cpw7oN/HPOC2yfz3tJ36sStwK/D7zfwBwIxB7MuodZ4P3D2I/QDOAK5opvcE7gHmjfV57omM7Wjgrqq6u6oeA64AThy1TgH7NNNPB9YAVNWyqlrTtN8O7JHkd3ZAzVszkb48WlUbm/Y9mvUmS+t+ACQ5CbibzncymSbUjylkIv14LXBrVS0HqKpfVtWmHVDz1myv7+R04Ct9q3J8E+lHAXsl2RWYCTwGPDzWhxkiYzsQuLdrflXT1u0TwFuTrAK+Bbx/C+9zKrCsqn7bjyJ7NKG+JHlxktuBFcB7ukJlR2vdjyR7AR8F/qL/ZY5rov+25jeHIr6T5BV9rXRsE+nHc4BKsjjJD5P8534XO47t9fP+FiY3RCbSj68BvwHuA34BXFRVD471YYbI2LKFttG/hZ8OfKGq5gKvA76U5Im/1yTPA/4aeHffquzNhPpSVf+vqp4HvAhYOInHrifSj78A/mtVPdLnGnsxkX7cBxxcVUcB/wn4pyT7MDkm0o9dgZcDZzZ/npzkVf0sdhzb4+f9xcCjVXVb/8oc10T6cTSwCZgDzAc+kuSQsT7MEBnbKuCgrvm5PHX39V3AlQBVdROdwz2zAJLMBb4BvL2qftr3asc2ob6MqKo76fym8nt9q3RsE+nHi4HPJLkH+BDw50nO6XfBW9G6H1X126r6ZdO+lM7x7+f0veItm8j3sQr4TlU9UFWP0vmN+A/6XvHWbY+fkdOY3L0QmFg/zgCuraoNVXU/8F1g7GFRJuvkzyC86PymdDedRB45QfW8Uev8b+CsZvq5zZcVYN9m/VMnux/boS/z2Xxi/VlN+6xB68eodT7B5J5Yn8j3MZvmBDSdk6ergf0HsB/7AT+kuXAD+D/AHw/id9LM70LnP/BDJqsP2+E7+ShwWTO9F3AHcOSYnzeZnR2EF51dvR/T+W3vY03b+cAbmukj6KT1cuAW4LVN+8fp/MZ+S9frdwe0L2+jcyL6luaH/qRB7Meo9/gEkxgiE/w+Tm2+j+XN9/H6QexHs+ytTV9uAz4zmf3YDn05Fvj+ZPdhgv+2nkbnysXb6QTIgvE+yzvWJUmteU5EktSaISJJas0QkSS1ZohIklozRCRJrRki0lYkOTbJf5jge0yFu+OlvjFEpK07FphQiOwIzWB50qQwRDStJFmUzvNdbk9ydlf7Cc0ggMuTLEkyD3gP8OHm+RCvSPKFJG/s2uaR5s+nNdv8sHkOw+
gRU0fXsFeSbzafdVuStzTtL0ryvab9B0n2TrJHksua912W5A+bdc9K8tUk1wDXNW0LktzcPB9iKgwyqWnA32A03byzqh5MMhO4OcnX6fwy9XnglVX1syT7N+t8Dnikqi4CSPKurbznvwEnV9XDSWYB309ydW39Tt4TgDVV9cfN+z49ye7A/wDeUlU3NwMqrgc+CFBVz09yOHBdkpFxsl5KZ0iKB5O8FjiUzgB6Aa5O8sqqunECf1fSuNwT0XTzgSTLge/TGaTuUOAlwI1V9TOAGmfo6y0I8FdJbqUz/tOBwDPGWH8F8Ookf53kFVX1EHAYcF9V3dzU8HB1htt/OfClpu1HwM/ZPNjit7tqfW3zWkZnKJTDm75JfeWeiKaNJMcCrwZeWlWPJrmBzuilobcHbW2k+cUrSegMbgedocxnAy+sqg3NKMFbHSq/qn6c5IV0xje6IMl1wKKt1LClYb1H/GbUehdU1X/voR/SduOeiKaTpwO/agLkcDp7IAA3AcckmQ+QZP+m/dfA3l3b3wO8sJk+Edit633vbwLkD+mMdLxVSebQeebEl4GL6Ax//iNgTpIXNevs3Zwwv5FOSNEcxjoYWLmFt10MvDPJ05p1D0zyu+P8fUgT5p6IppNrgfc0h51W0jmkRVWtbU6yX9U8mOd+4DXANcDXmhPl76dz3uR/JvkBsITNewL/CFyTZJjOiKg/GqeO5wMXJnkc2EDnWdePNSfY/7Y5X7Oezl7T3wGfS7KCzp7QWVX1286O0GZVdV2S5wI3NcseoTNC7v1t/qKkXjmKrySpNQ9nSZJaM0QkSa0ZIpKk1gwRSVJrhogkqTVDRJLUmiEiSWrNEJEktfb/ATDcFTaki7ZNAAAAAElFTkSuQmCC\n",

"text/plain": [

""

]

},

"metadata": {

"needs_background": "light"

},

"output_type": "display_data"

}

],

"source": [

"fig, ax = plt.subplots()\n",

"ax.scatter(y_pred, y_actual)\n",

"# x holds the predictions and y the actual scores, so label the axes to match\n",

"ax.set(xlabel='predicted score',\n",

"       ylabel='actual score')\n",

"plt.show()"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": []

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.6.6"

}

},

"nbformat": 4,

"nbformat_minor": 2

}