Building Reliable Websites

Load and Performance Edition

Stephen Kuenzli

@author skuenzli

breaking systems for fun and profit since 2000

The Process

- determine expected site load

- validate site handles expected load

- stay operational when load exceeds expectations

- profit!

determine expected site load

Key Metrics

- Throughput: requests per second

- Performance: response time

What percentage of your customers do you care about?

50%

95%

99%

?

Define a Service Level Agreement

- Throughput: 42 requests per second

- Performance: 99% of response times <= 100ms
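The latency clause of this example SLA can be checked mechanically. This is a minimal sketch. It assumes one response time in milliseconds per line on stdin. `meets_latency_sla` is a hypothetical helper name, and it does not check the throughput clause.

```shell
# Hypothetical helper: succeeds (exit status 0) when at least PCT percent
# of the response times on stdin (one value in ms per line) are <= LIMIT_MS.
# The example SLA above corresponds to: meets_latency_sla 99 100
meets_latency_sla() {
  local pct=$1 limit_ms=$2
  awk -v pct="$pct" -v limit="$limit_ms" '
    { total++; if ($1 + 0 <= limit) ok++ }
    END { exit !(total > 0 && ok * 100 >= pct * total) }'
}
```

For example, `meets_latency_sla 99 100 < response_times.txt && echo "SLA met"` would test a hypothetical file of measured times. Integer arithmetic (`ok * 100 >= pct * total`) avoids rounding surprises at the boundary.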

Don't Forget!

- network latency and bandwidth

- client processing power

HOWTO: measure historical throughput

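```shell
# total number of GETs to /myservice for a given day
# (grep -c prints one count per file when given several files,
# so concatenate the logs first to get a single total)
cat logs/app*/access.log.2012-11-16 | grep -c 'GET /myservice'

# estimate peak hour for service from a sample of app servers (app??5)
grep -h 'GET /myservice' logs/app??5/access.log.2012-11-16 | \
  perl -nle 'print m|/201\d:(\d\d):|' | sort -n | uniq -c

# total number of GETs to /myservice at the peak hour (17:00-17:59 here);
# the timestamp pattern matches the same [16/Nov/2012:17:...] format
cat logs/app*/access.log.2012-11-16 | grep -c '16/Nov/2012:17:.*GET /myservice'
```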
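Dividing a count by the length of its window converts it into the SLA's unit, requests per second. An hourly average hides bursts, so the sketch below also finds the busiest single second. Both helper names are hypothetical. `peak_second_rps` assumes the Apache-style `[16/Nov/2012:17:05:23 -0800]` timestamps that the peak-hour perl pattern above expects.

```shell
# Hypothetical helper: average requests per second for COUNT requests
# observed over WINDOW seconds (default 3600, i.e. one hour).
avg_rps() {
  awk -v n="$1" -v w="${2:-3600}" 'BEGIN { printf "%.2f\n", n / w }'
}

# Hypothetical helper: number of requests in the busiest single second,
# reading access-log lines on stdin. Assumes timestamps such as
# [16/Nov/2012:17:05:23 -0800]; other log formats need a different pattern.
peak_second_rps() {
  grep -o '[0-9][0-9]/[A-Z][a-z][a-z]/[0-9]\{4\}:[0-9:]\{8\}' |
    sort | uniq -c | sort -rn | head -n 1 | awk '{ print $1 }'
}
```

For example, 151,200 GETs in the peak hour average to `avg_rps 151200` = 42.00 requests per second. `cat logs/app*/access.log.2012-11-16 | grep 'GET /myservice' | peak_second_rps` would report the busiest second of the day.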

HOWTO: measure response time

```shell
# processing times recorded by the server in the access log,
# taken from the 7th double-quote-delimited field of each line
grep -h "GET /myservice" logs/app*/access.log.2012-11-16 | \
  cut -d\" -f7 | sort -n > service.access_times.2012-11-16
```
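Once the times are sorted, the 50th, 95th, and 99th percentiles can be read off by rank. This is a minimal sketch using the nearest-rank definition. Other percentile definitions interpolate and can give slightly different values. `percentile` is a hypothetical helper.

```shell
# Hypothetical helper: nearest-rank percentile P of the numbers on stdin.
# Input must already be sorted numerically, as the sort -n above ensures.
percentile() {
  awk -v p="$1" '
    { v[NR] = $1 }
    END {
      if (NR == 0) exit 1
      r = p * NR / 100
      rank = (r == int(r)) ? r : int(r) + 1   # ceiling of p% of N
      if (rank < 1) rank = 1
      print v[rank]
    }'
}
```

For example: `for p in 50 95 99; do echo "p$p: $(percentile "$p" < service.access_times.2012-11-16)"; done`.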

what about network latency and bandwidth?

does the request fit within the client's resource budget at the 50th/95th/99th percentile?
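A rough way to check whether a request fits the client's budget is to add network costs to the server time. The sketch below uses a deliberately crude model: server time + one round trip + payload bits / bandwidth. It ignores DNS, connection setup, TCP slow start, and client-side rendering. `est_client_ms` and its parameters are hypothetical.

```shell
# Hypothetical helper: crude end-to-end estimate in ms.
# Args: server time (ms), round-trip time (ms), response size (bytes),
# bandwidth (kbit/s). Since 1 kbit/s = 1 bit/ms, bits / kbps gives ms.
est_client_ms() {
  awk -v s="$1" -v rtt="$2" -v bytes="$3" -v kbps="$4" \
    'BEGIN { printf "%.0f\n", s + rtt + bytes * 8 / kbps }'
}
```

For example, 100 ms of server time, an 80 ms round trip, and a 50,000-byte response over a 2,000 kbit/s link give `est_client_ms 100 80 50000 2000` = 380 ms.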

all models are wrong; some models are useful

[diagram: model +/- 20% | adjust for ...]

validate site handles expected load

validation process

- select a tool

- build a simulation

- run simulation multiple times / periodically

- analyze trends

Gatling is an Open Source Stress Tool with:

- A DSL to describe scenarios

- High performance

- HTTP support

- Meaningful reports

- Executable from the command line or Maven

- A scenario recorder

gatling-tool.org

build simulation

run simulation multiple times / periodically

- gather statistically significant results

- establish a baseline

- verify whether the site meets its SLAs

- detect changes over time
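To establish a baseline from repeated runs, you can summarize one metric per run, such as each run's p99, by its mean and spread. This is a minimal sketch. `run_stats` is a hypothetical helper, and it reports the sample standard deviation (dividing by n - 1).

```shell
# Hypothetical helper: one value per simulation run on stdin (e.g. p99 in ms).
# Prints the number of runs, the mean, and the sample standard deviation.
run_stats() {
  awk '
    { v[++n] = $1; sum += $1 }
    END {
      if (n < 2) exit 1
      mean = sum / n
      for (i = 1; i <= n; i++) ss += (v[i] - mean) ^ 2
      printf "n=%d mean=%.2f sd=%.2f\n", n, mean, sqrt(ss / (n - 1))
    }'
}
```

The two-pass computation (mean first, then squared deviations) avoids the cancellation error of the single-pass sum-of-squares formula.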

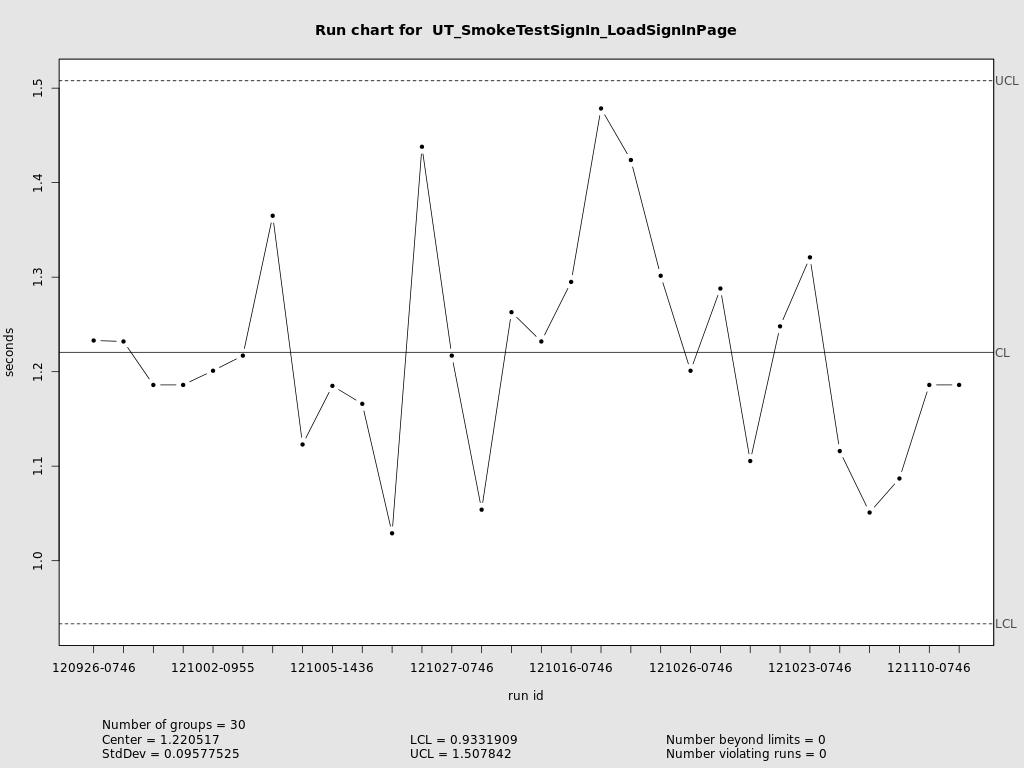

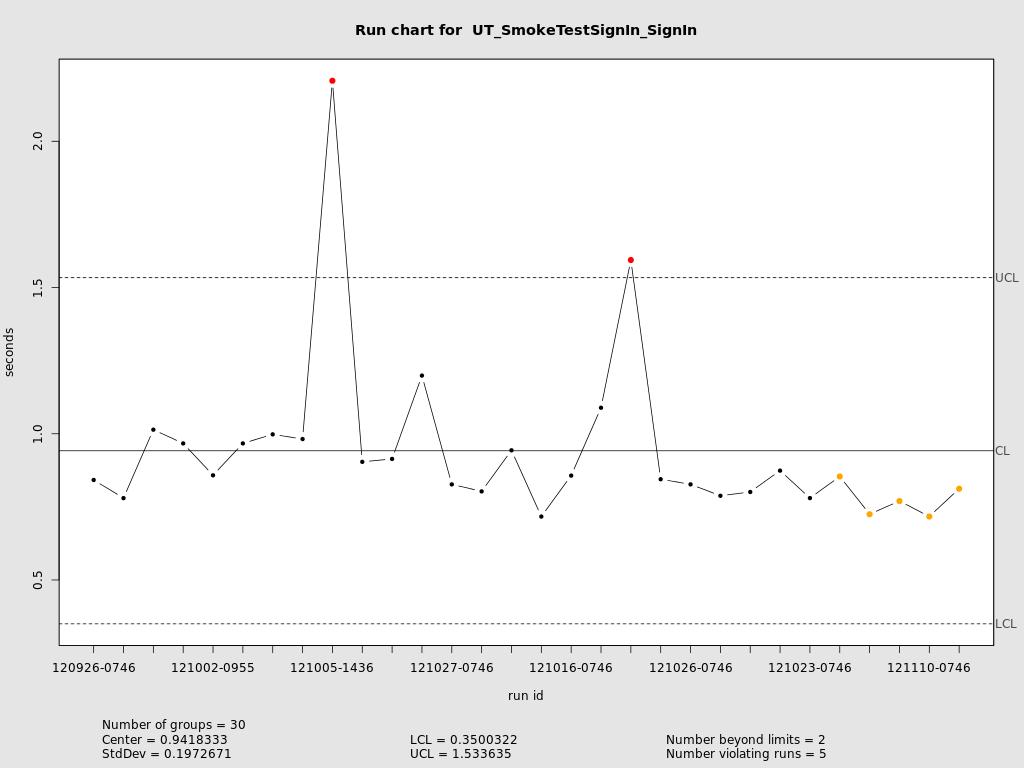

analyze trends

detect changes in trend with control charts

is process changing?
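One common way to answer this is an individuals (XmR) control chart. You compute limits from baseline runs, then flag later runs that fall outside them. This is a minimal sketch. It assumes one value per run per line, for example each run's p99 in ms. The helper names are hypothetical. Sigma is estimated as the average moving range divided by the d2 constant 1.128, the usual choice for individuals charts.

```shell
# Hypothetical helper: XmR control limits from baseline values on stdin.
# Prints "LCL CENTER UCL" where the limits are mean -/+ 3 sigma.
control_limits() {
  awk '
    {
      n++; sum += $1
      if (n > 1) { mr = $1 - prev; if (mr < 0) mr = -mr; mrsum += mr }
      prev = $1
    }
    END {
      if (n < 2) exit 1
      mean = sum / n
      sigma = (mrsum / (n - 1)) / 1.128   # d2 for moving ranges of size 2
      printf "%.2f %.2f %.2f\n", mean - 3 * sigma, mean, mean + 3 * sigma
    }'
}

# Hypothetical helper: print "line: value" for each value on stdin
# that lies outside [LCL, UCL].
out_of_control() {
  local lcl=$1 ucl=$2
  awk -v lo="$lcl" -v hi="$ucl" '$1 < lo || $1 > hi { print NR ": " $1 }'
}

# Usage (file names are hypothetical):
#   set -- $(control_limits < baseline_p99.txt)
#   out_of_control "$1" "$3" < recent_p99.txt
```

With a baseline of 10, 12, 10, 12, 10, `control_limits` prints `5.48 10.80 16.12`, so a later run at 20 would be flagged.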


stay operational when load exceeds expectations

the needs of the many outweigh the needs of the few or the one

a dead site is no good to anyone

know the site's limits and stay within them

implement a series of circuit breakers that can be tripped to reduce load in a managed way

- manual breakers, tripped by operations staff

- automatic breakers, tripped by software
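The sketch below shows both kinds of breaker under a hypothetical file-based convention. A breaker is tripped while a flag file named after it exists in `$BREAKER_DIR`, and application code (not shown) skips the optional work when its flag is present. Operations staff trip a manual breaker by hand. The automatic variant trips when more than 1% of recent response times exceed a limit, which mirrors the example SLA. All names here are hypothetical.

```shell
# Hypothetical convention: a breaker is tripped while $BREAKER_DIR/NAME exists.
BREAKER_DIR=${BREAKER_DIR:-/tmp/breakers}

# Manual breakers: run by operations staff.
trip_breaker()  { mkdir -p "$BREAKER_DIR" && touch "$BREAKER_DIR/$1"; }
reset_breaker() { rm -f "$BREAKER_DIR/$1"; }
is_tripped()    { [ -e "$BREAKER_DIR/$1" ]; }

# Automatic breaker: trip NAME when more than 1% of the response times
# on stdin (ms, one per line) exceed LIMIT_MS.
auto_trip_if_slow() {
  local name=$1 limit_ms=$2
  if awk -v limit="$limit_ms" '
       { n++; if ($1 + 0 > limit) slow++ }
       END { exit !(n > 0 && slow * 100 > n) }'; then
    trip_breaker "$name"
  fi
}
```

A watchdog could run `auto_trip_if_slow upload_images 100` periodically over recent response times. Resetting is left to a person here, so a breaker does not flap back on while the site is still overloaded.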

Example services ranked by criticality (least critical first)

- send marketing email off-line / off-hours

- update customer's dashboard (breaker #1)

- upload images (breaker #2)

- render images (breaker #3)

- sign-in

- checkout

- save customer's work
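Once the services are ranked, shedding load means tripping breakers in order, least critical first. This is a minimal sketch. The breaker names and `breakers_for_level` are hypothetical, and the critical services are left off the list so they are never shed.

```shell
# Hypothetical breaker names from the ranking above, least critical first.
# Sign-in, checkout, and saving work are deliberately not listed.
BREAKERS="update_dashboard upload_images render_images"

# Print the breakers to trip at shed level N: level 1 trips breaker #1,
# level 2 trips #1 and #2, and so on. Levels beyond the list trip all.
breakers_for_level() {
  local level=$1 i=0 b
  for b in $BREAKERS; do
    [ "$i" -lt "$level" ] || break
    echo "$b"
    i=$((i + 1))
  done
}
```

For example, `breakers_for_level 2` prints `update_dashboard` and `upload_images`, leaving image rendering and everything more critical running.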

there's usually a trade-off available

Resources

- This presentation: https://github.com/skuenzli/building-reliable-websites

- Concurrency Limiting Filter: https://github.com/skuenzli/simplyreliable

- Web Operations: http://shop.oreilly.com/product/0636920000136.do

- Circuit Breaker Pattern: http://doc.akka.io/docs/akka/2.1.0-RC1/common/circuitbreaker.html

- Gatling: http://gatling-tool.org

- Universal Scalability Law (USL)