# Event-based Vision Resources

## Innovative Neuromorphic Vision Sensors (INVISIONS) workshop, 2026. Feb 5th-6th.

## Neuromorphic Computing and Sensing Meets Extended Reality — IEEE JETCAS Special Issue "Circuits and Systems for Extended Reality" Call for Papers. Paper submission until March 2nd 2026.

## EVS: Event Vision School 2026. May 17th-23rd, Arenzano (Italy).

## WACV 2026 EVGEN: Event-based Vision in the Era of Generative AI. March 2026, Tucson (USA). Paper submission until Dec 7th 2025.

## SPIE Photonics West. Vision Tech Forum. Neuromorphic Sensing in Motion: From Event-Based Vision to Adaptive Intelligence, Jan. 21, 2026

## NeuroPAC

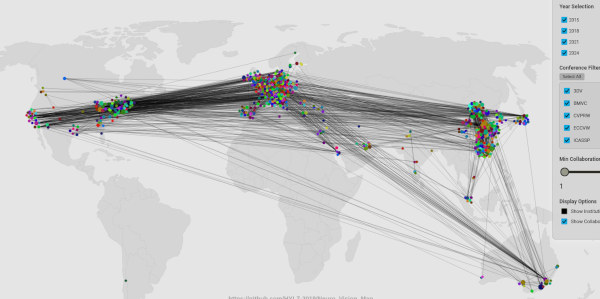

## Map of event-based institutions (from papers)

[Map of event-based institutions](https://hylz-2019.github.io/Neuro_Vision_Map/map.html)

- [Contribute to the above map](https://github.com/HYLZ-2019/Neuro_Vision_Map)

## Table of Contents:

- [Survey paper](#survey_paper)

- [Workshops](#workshops)

- [Devices and Manufacturers](#devices)

- [Companies working on Event-based Vision](#companies_sftwr)

- [Neuromorphic Systems](#neuromorphic-systems)

- [Review papers](#reviewpapers)

- [Bio-inspiration](#reviewpapers-bio)

- [Algorithms, Applications](#reviewpapers-algs)

- [Applications / Algorithms](#algorithms)

- [Feature Detection and Tracking](#feature-detection)

- [Corners](#corner-detection)

- [Particles in fluids](#particle-detection)

- [Eye Tracking](#eye_tracking)

- [Optical Flow Estimation](#optical-flow-estimation)

- [Scene Flow Estimation](#scene-flow-estimation)

- [Reconstruction of Visual Information](#visualization)

- [Intensity-Image Reconstruction](#image-reconstruction)

- [Video Synthesis](#video-synthesis)

- [Image super-resolution](#super-resolution)

- [Joint/guided filtering](#joint-filtering)

- [Tone mapping](#tone-mapping)

- [Visual Stabilization](#visual-stabilization)

- [Polarization Reconstruction](#polarization-reconstruction)

- [Depth Estimation (3D Reconstruction)](#depth-estimation)

- [Monocular](#depth-mono)

- [Monocular Depth Estimation using Structured Light](#depth-mono-active)

- [Monocular Object Reconstruction](#object-mono)

- [Stereo](#depth-stereo)

- [Stereo Depth Estimation using Structured Light](#depth-stereo-active)

- [Stereoscopic panoramic imaging](#depth-stereo-pano)

- [Events and LiDAR](#event-lidar-fusion)

- [SLAM (Simultaneous Localization And Mapping)](#slam)

- [Localization, Ego-motion estimation](#slam-localization)

- [Visual Odometry](#visual-odometry)

- [Visual-Inertial Odometry](#visual-inertial)

- [Segmentation](#segmentation)

- [Object Segmentation](#object-segmentation)

- [Motion Segmentation](#motion-segmentation)

- [Pattern recognition](#pattern-recognition)

- [Object Recognition](#object-recognition)

- [Gesture Recognition](#gesture-recognition)

- [Representation / Feature Extraction](#learning-representation-features)

- [Regression Tasks](#learning-regression)

- [Learning Methods / Frameworks](#learning-methods-frameworks)

- [Signal Processing](#signal_processing)

- [Event Denoising](#denoising)

- [Compression](#compression)

- [Event Downsampling](#downsampling)

- [Control](#control)

- [Obstacle Avoidance](#obstacle_avoidance)

- [Space Applications](#space)

- [Tactile Sensing Applications](#tactile_sensing)

- [Object Pose Estimation](#object_pose_estimation)

- [Human Pose Estimation](#human_pose_estimation)

- [Hand Pose Estimation](#hand_pose_estimation)

- [Indoor Lighting Estimation](#indoor_lighting_estimation)

- [Data Encryption](#data_encription)

- [Nuclear Verification](#nuclear_verification)

- [Optical Communication](#optical_communication)

- [Animal Behavior Monitoring](#animal_monitoring)

- [Optical Applications](#optical_applications)

- [Auto-focus](#auto_focus)

- [Auto-exposure](#auto_exposure)

- [Speckle Analysis](#speckle_analysis)

- [Interferometry or Holography](#interferometry_or_holography)

- [Wavefront sensing](#wavefront_sensing)

- [Optical super-resolution](#super_resolution_imaging)

- [Schlieren imaging](#schlieren_imaging)

- [Event-Based Image Velocimetry (EBIV)](#event-based-image-velocimetry)

- [Driver Monitoring System](#driver_monitoring_system)

- [Multi-tasking networks: Face, Head Pose & Eye Gaze estimation](#DMS)

- [Drowsiness or Yawn](#Drwosiness_or_yawn)

- [Distraction](#distraction_detecton)

- [Face Alignment and Landmark Detection](#face-alignment-landmarking)

- [Visual Voice Activity Detection](#voice-activity-detection)

- [Simulators and Emulators](#simulators-emulators)

- [Synthetic Data Generators](#synthetic-data-generators)

- [Datasets](#datasets)

- [Software](#software)

- [Drivers](#drivers)

- [Synchronization](#synchronization)

- [Lens Calibration](#calibration)

- [Algorithms](#software-algorithms)

- [Utilities](#software-utilities)

- [Neuromorphic Processors and Platforms](#processors-platforms)

- [Courses](#teaching)

- [Theses and Dissertations](#theses)

- [Dissertations](#theses-phd)

- [Master's Theses](#theses-master)

- [People / Organizations](#people)

- [EETimes articles](#press-eetimes)

- [Contributing](#contributing)

___

# Survey paper

- Gallego, G., Delbruck, T., Orchard, G., Bartolozzi, C., Taba, B., Censi, A., Leutenegger, S., Davison, A., Conradt, J., Daniilidis, K., Scaramuzza, D.,

**_[Event-based Vision: A Survey](http://rpg.ifi.uzh.ch/docs/EventVisionSurvey.pdf)_**,

IEEE Trans. Pattern Anal. Machine Intell. (TPAMI), 44(1):154-180, Jan. 2022.

# Workshops

- [EVS 2026: Event Vision School 2026](https://edpr.iit.it/events/2026-evs)

- [WACV 2026 EVGEN: 2nd Workshop on Event-based Vision in the Era of Generative AI - Transforming Perception and Visual Innovation](https://eventbasedvision.github.io/EVGEN2026/)

- [IROS 2025 Workshop on Event-Based Vision. And Stereo SLAM Challenge](https://sites.google.com/view/neurobots2025)

- [IROS 2025 Workshop on Neuromorphic Perception for Real World Robotics (NeuRobots 2025)](https://sites.google.com/view/iros-2024-workshop)

- [ICCV 2025 2nd Workshop on Neuromorphic Vision (NeVi)](https://sites.google.com/view/nevi-2025/)

- [DSP 2025 Special Session on Event-based Vision: “Processing the Neuromorphic Signal: Event-based Vision and Applications”](https://2025.ic-dsp.org/special-session-4/)

- [2025 Telluride Neuromorphic Cognition Engineering Workshop](https://sites.google.com/view/telluride-2025/home)

- [IEEE T-RO Special Collection on Event-based Vision for Robotics, 2025](https://www.ieee-ras.org/publications/t-ro/special-issues/event-based-vision-for-robotics)

- [CVPR 2025 Fifth International Workshop on Event-based Vision](https://tub-rip.github.io/eventvision2025)

- [WACV 2025 EVGEN: Event-based Vision in the Era of Generative AI - Transforming Perception and Visual Innovation](https://eventbasedvision.github.io/EVGEN2025/)

- [IROS 2024 Workshop on Embodied Neuromorphic AI for Robotic Perception and Control](https://sites.google.com/view/iros-2024-workshop)

- [ECCV 2024 Workshop on Neuromorphic Vision (NeVi)](https://sites.google.com/view/nevi2024), **[Slides](https://sites.google.com/view/nevi2024/program)**

- [ECCV 2024 1st Workshop on Neural Fields Beyond Conventional Cameras](https://neural-fields-beyond-cams.github.io/)

- [Caméra à événements appliquée à la robotique, Sorbonne University, Paris (Nov. 16th, 2023)](https://tub-rip.github.io/eventvision2023/slides/2023-11_Workshop_event_cameras_Sorbonne.pdf)

- [CVPR 2023 Fourth International Workshop on Event-based Vision](https://tub-rip.github.io/eventvision2023), **[Videos](https://www.youtube.com/playlist?list=PLeXWz-g2If96iotpzgBNNTr9VA6hG-LLK)**

- [IEEE Embedded Vision Workshop Series](https://embeddedvisionworkshop.wordpress.com), with focus on Biologically-inspired vision and embedded systems.

- [IISW 2023 Int. Image Sensor Workshop](https://imagesensors.org/2023-international-image-sensor-workshop/)

- [MFI 2022 First Neuromorphic Event Sensor Fusion Workshop](https://sites.google.com/view/eventsensorfusion2022/home) with videos incl. _Event Sensor Fusion Jeopardy_ game - Virtual. **[Videos](https://youtube.com/playlist?list=PLVtZ8f-q0U5gXhjN4inwWZi66bp5vp-lN)**

- [tinyML Neuromorphic Engineering Forum](https://www.tinyml.org/event/tinyml-neuromorphic-engineering-forum/) - Virtual, 2022. **[Videos](https://www.youtube.com/playlist?list=PLeisuBi-nfBM5HayCqF4KMBaJciV5UkLX)**

- [ICCV 2021 Tutorial. Introduction to Event Detection Cameras](https://tub-rip.github.io/eventvision2021/slides/ICCV2021Tutorial.pdf)

- [CVPR 2021 Third International Workshop on Event-based Vision](https://tub-rip.github.io/eventvision2021) - Virtual. **[Videos](https://www.youtube.com/playlist?list=PLeXWz-g2If95mjNpA-y-WIoDaoB8WtmE7)**

- [ICRA 2020 Workshop on Unconventional Sensors in Robotics](https://sites.google.com/view/unconventional-sensors) - Virtual. **[Videos](https://www.youtube.com/playlist?list=PLtW5yHT6tQuD4sLzkldzZEyQ4hz77K64-)**

- [Neuro-Inspired Computational Elements (NICE) Workshop Series](https://niceworkshop.org/nice-2019/). **[Videos](https://www.youtube.com/channel/UCKTLpjY9e8cMK12d2-Z-usA)**

- [Capo Caccia Workshops toward Cognitive Neuromorphic Engineering](http://capocaccia.iniforum.ch/).

- [The Telluride Neuromorphic Cognition Engineering Workshops](https://tellurideneuromorhic.org). **[Videos](https://sites.google.com/view/telluride2020/about-workshop/videos)**, Telluride 2020 (Online): **[Videos](https://www.youtube.com/playlist?list=PLG-iqBTOyCO5NAbqbsHPPnL9h35z0ooSE)**, **[Slides](https://drive.google.com/drive/folders/1lmUSjZoDb7yc_HO9xw0M5J4fIGRIfu_u)**

- [CVPR 2019 Second International Workshop on Event-based Vision and Smart Cameras](http://rpg.ifi.uzh.ch/CVPR19_event_vision_workshop.html). **[Videos](https://www.youtube.com/playlist?list=PLeXWz-g2If97iGiuBHmnW8IFIxwvSeCHx)**

- [IROS 2018 Unconventional Sensing and Processing for Robotic Visual Perception](https://www.jmartel.net/irosws-home).

- [ICRA 2017 First International Workshop on Event-based Vision](http://rpg.ifi.uzh.ch/ICRA17_event_vision_workshop.html). **[Videos](https://www.youtube.com/playlist?list=PLeXWz-g2If94k8mw6GcKU5C9PUgM1sK0U)**

- [IROS 2015 Event-Based Vision for High-Speed Robotics (slides)](http://www.rit.edu/kgcoe/iros15workshop/papers/IROS2015-WASRoP-Invited-04-slides.pdf), Workshop on Alternative Sensing for Robot Perception.

- [ICRA 2015 Workshop on Innovative Sensing for Robotics](http://innovative-sensing.mit.edu/), with a focus on Neuromorphic Sensors.

# Devices & Companies Manufacturing them

- **DVS (Dynamic Vision Sensor)**: Lichtsteiner, P., Posch, C., and Delbruck, T., *[A 128x128 120dB 15μs latency asynchronous temporal contrast vision sensor](http://doi.org/10.1109/JSSC.2007.914337)*, IEEE J. Solid-State Circuits, 43(2):566-576, 2008. [PDF](https://www.ini.uzh.ch/~tobi/wiki/lib/exe/fetch.php?media=lichtsteiner_dvs_jssc08.pdf)

- [Product page at iniVation](https://inivation.com/dvs/). [**Buy a DVS**](https://inivation.com/buy/)

- [Product specifications](https://inivation.com/support/product-specifications/)

- [User guide](https://inivation.github.io/inivation-docs/Hardware%20user%20guides/User_guide_-_DVS128.html)

- [Introductory videos about the DVS technology](https://inivation.com/dvs/videos/)

- [iniVation AG](https://inivation.com/) invents, produces and sells neuromorphic technologies, with a special focus on event-based vision for *business*. [Slides](http://rpg.ifi.uzh.ch/docs/ICRA17workshop/Jakobsen.pdf) by [S. E. Jakobsen](https://inivation.com/company/), board member of iniVation.

- [Event Cameras - Tutorial - Tobi Delbruck, version 4](https://youtu.be/Th4TM4SsFGY)

- **Samsung's DVS**

- [Slides](http://rpg.ifi.uzh.ch/docs/CVPR19workshop/CVPRW19_Eric_Ryu_Samsung.pdf) and [Video](https://youtu.be/7fAPckjQSGE) by [Hyunsurk Eric Ryu](https://www.linkedin.com/in/hyunsurk-eric-ryu-82745712), Samsung Electronics (2019).

- Suh et al., *[A 1280×960 Dynamic Vision Sensor with a 4.95-μm Pixel Pitch and Motion Artifact Minimization](https://doi.org/10.1109/ISCAS45731.2020.9180436)*, IEEE Int. Symp. Circuits and Systems (ISCAS), 2020.

- Son, B., et al., *[A 640×480 dynamic vision sensor with a 9µm pixel and 300Meps address-event representation](https://doi.org/10.1109/ISSCC.2017.7870263)*, IEEE Int. Solid-State Circuits Conf. (ISSCC), 2017, pp. 66-67.

- [SmartThings Vision](https://www.samsung.com/se/smartthings/smartthings-vision-u999/) commercial product for home monitoring. [in Australia](https://www.samsung.com/au/smart-home/smartthings-vision-u999/)

- [Paper at IEDM 2019](#Park19iedm), about low-latency applications using Samsung's VGA DVS.

- **HVS** (Hybrid Vision Sensors) like **ATIS**, **DAVIS**, **CDAVIS**, and other HVS that output brightness change events and intensity frames, either mono or color

- **ATIS** [(Asynchronous Time-based Image Sensor), Posch et al. JSSC 2011](#Posch11jssc),

*A QVGA 143 dB Dynamic Range Frame-Free PWM Image Sensor With Lossless Pixel-Level Video Compression and Time-Domain CDS*.

- **DAVIS (Dynamic and Active Pixel Vision Sensor)**: Brandli, C., Berner, R., Yang, M., Liu, S.-C., Delbruck, T., *[A 240x180 130 dB 3 µs Latency Global Shutter Spatiotemporal Vision Sensor](https://doi.org/10.1109/JSSC.2014.2342715)*, IEEE J. Solid-State Circuits, 49(10):2333-2341, 2014. [PDF](https://drive.google.com/file/d/0BzvXOhBHjRhea3RrelA1V0RKVWM/view)

- [Product page at iniVation](https://inivation.com/dvs/). [**Buy a DAVIS**](https://inivation.com/buy/)

- [Product specifications](https://inivation.com/support/product-specifications/)

- [User guide](https://inivation.github.io/inivation-docs/Hardware%20user%20guides/User_guide_-_DAVIS240.html)

- **DAVIS346**: Taverni, G; Paul Moeys, D; Li, C; Cavaco, C; Motsnyi, V; San Segundo Bello, D; Delbruck, T., *[Front and Back Illuminated Dynamic and Active Pixel Vision Sensors Comparison](http://dx.doi.org/10.1109/TCSII.2018.2824899)*, IEEE Trans. Circuits Syst. Express Briefs, 2018

- **CDAVIS HVS**: Li, C., Brandli, C., Berner, R., Liu, H., Yang, M., Liu, S.-C., Delbruck, T., *[An RGBW color VGA rolling and global shutter dynamic and active-pixel vision sensor](https://www.imagesensors.org/Past%20Workshops/2015%20Workshop/2015%20Papers/Sessions/Session_13/13-05_Li_Delbruck.pdf)*, Int. Image Sensors Workshop, 2015.

- Prototype only

- **SDAVIS192**: Moeys, D. P., Corradi, F., Li, C., Bamford, S. A., Longinotti, L., Voigt, F. F., Berry, S., Taverni, G., Helmchen, F., Delbruck, T., *[A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications](https://doi.org/10.1109/TBCAS.2017.2759783)*, IEEE Trans. Biomed. Circuits Syst. 12(1):123-136 2018.

- Prototype only

- **Omnivision HVS**: Guo et al., [A 3-Wafer-Stacked Hybrid 15MPixel CIS + 1 MPixel EVS with 4.6GEvent/s Readout, In-Pixel TDC and On-Chip ISP and ESP Function](http://dx.doi.org/10.1109/isscc42615.2023.10067476), ISSCC (2023).

- Prototype, commercially n.a.

- **Sony HVS**: Kodama et al., [1.22μm 35.6Mpixel RGB Hybrid Event-Based Vision Sensor with 4.88μm-Pitch Event Pixels and up to 10K Event Frame Rate by Adaptive Control on Event Sparsity](http://dx.doi.org/10.1109/ISSCC42615.2023.10067520), ISSCC (2023)

- Prototype only, commercially n.a.

- [**Insightness's Silicon Eye**](https://youtu.be/Y0mIb_MehK8) QVGA event sensor.

- [The Silicon Eye Technology](http://www.insightness.com/?p=361)

- [Slides](http://rpg.ifi.uzh.ch/docs/CVPR19workshop/CVPRW19_Insightness.pdf) and [Video](https://youtu.be/9IJwF9xYEoU) by [Stefan Isler](http://www.insightness.com/#team) (2019).

- [Slides](http://rpg.ifi.uzh.ch/docs/ICRA17workshop/Insightness.pdf) and [Video](https://youtu.be/6YyOW6DDGKw) by [Christian Brandli](http://www.insightness.com/#team), CEO and co-founder of Insightness (2017).

- [**PROPHESEE’s Metavision Sensor**](https://www.prophesee.ai/event-based-sensor-packaged/) and [**Software**](https://www.prophesee.ai/metavision-intelligence/)

- **ATIS** (Asynchronous Time-based Image Sensor): Posch, C., Matolin, D., Wohlgenannt, R. (2011). *[A QVGA 143 dB Dynamic Range Frame-Free PWM Image Sensor With Lossless Pixel-Level Video Compression and Time-Domain CDS](http://doi.org/10.1109/JSSC.2010.2085952)*, IEEE J. Solid-State Circuits, 46(1):259-275, 2011. [YouTube](https://youtu.be/YQ8rT9Harb4), [YouTube](https://youtu.be/3Wiw8LA8hLs)

- Prophesee Gen4 is described in: Finateu et al., *[A 1280×720 Back-Illuminated Stacked Temporal Contrast Event-Based Vision Sensor with 4.86μm Pixels, 1.066GEPS Readout, Programmable Event-Rate Controller and Compressive Data-Formatting Pipeline](https://doi.org/10.1109/ISSCC19947.2020.9063149)*, IEEE Int. Solid-State Circuits Conf. (ISSCC), 2020, pp. 112-114.

- [**Buy a Prophesee packaged sensor VGA**](https://www.prophesee.ai/event-based-sensor-packaged)

- [Prophesee Cameras Specifications](https://www.prophesee.ai/event-based-evaluation-kits/)

- What is event-based vision and sample applications, [YouTube](https://www.youtube.com/watch?v=MjX3z-6n3iA)

- [Download free or buy Metavision software](https://www.prophesee.ai/metavision-intelligence/)

- [Documentation and tutorials](https://docs.prophesee.ai/)

- [Knowledge Base](https://support.prophesee.ai/portal/en/kb/prophesee-1) and [Community Forum](https://support.prophesee.ai/portal/en/community/forum)

- [**SONY's explanation of Event-based Vision Sensor (EVS) Technology**](https://www.sony-semicon.com/en/technology/industry/evs.html)

- [**CelePixel**](http://www.celepixel.com/), Shanghai. CeleX-V: the first 1 Mega-pixel event-camera sensor.

- **Sensitive DVS (sDVS)**

- All are prototypes, commercially n.a.

- Leñero-Bardallo, J. A., Serrano-Gotarredona, T., Linares-Barranco, B., *[A 3.6us Asynchronous Frame-Free Event-Driven Dynamic-Vision-Sensor](https://doi.org/10.1109/JSSC.2011.2118490)*, IEEE J. of Solid-State Circuits, 46(6):1443-1455, 2011.

- Serrano-Gotarredona, T. and Linares-Barranco, B., *[A 128x128 1.5% Contrast Sensitivity 0.9% FPN 3us Latency 4mW Asynchronous Frame-Free Dynamic Vision Sensor Using Transimpedance Amplifiers](https://doi.org/10.1109/JSSC.2012.2230553)*, IEEE J. Solid-State Circuits, 48(3):827-838, 2013.

- **SDAVIS192**: Moeys, D. P., Corradi, F., Li, C., Bamford, S. A., Longinotti, L., Voigt, F. F., Berry, S., Taverni, G., Helmchen, F., Delbruck, T., *[A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications](https://doi.org/10.1109/TBCAS.2017.2759783)*, IEEE Trans. Biomed. Circuits Syst. 12(1):123-136 2018.

- **SciDVS**: Graca, R., Zhou, S., McReynolds, B., Delbruck, T., *[SciDVS: A Scientific Event Camera with 1.7% Temporal Contrast Sensitivity at 0.7 lux](https://doi.org/10.1109/ESSERC62670.2024.10719521)*, ESSERC, (2024).

- **DLS (Dynamic Line Sensor)**: Posch, C., Hofstaetter, M., Matolin, D., Vanstraelen, G., Schoen, P., Donath, N., and Litzenberger, M., *[A dual-line optical transient sensor with on-chip precision time-stamp generation](https://doi.org/10.1109/ISSCC.2007.373513)*, IEEE Int. Solid-State Circuits Conf. - Digest of Technical Papers, Lisbon Falls, MN, US, 2007.

- [Fact sheet at AIT](https://www.ait.ac.at/fileadmin/mc/digital_safety_security/downloads/Factsheet_-_Linescan-Chip_DLS_en.pdf).

- **LWIR DVS**: Posch, C., Matolin, D., Wohlgenannt, R., Maier, T., Litzenberger, M., *[A Microbolometer Asynchronous Dynamic Vision Sensor for LWIR](https://doi.org/10.1109/JSEN.2009.2020658)*, IEEE Sensors Journal, 9(6):654-664, 2009.

- Prototype, commercially n.a.

- **Smart DVS (GAEP)**: Posch, C., Hofstaetter, M., Schoen, P., *[A SPARC-compatible general purpose Address-Event processor with 20-bit 10ns-resolution asynchronous sensor data interface in 0.18um CMOS](https://doi.org/10.1109/ISCAS.2010.5537575)*, IEEE Int. Symp. Circuits and Systems (ISCAS), 2010.

- Prototype, commercially n.a.

- **PDAVIS (Polarization Event Camera)**:

- Prototype, commercially n.a.

- [Bio-inspired Polarization Event Camera](http://arxiv.org/abs/2112.01933), arXiv [cs.CV] (2021) [PDAVIS video](https://drive.google.com/file/d/157mT8960m_QCm15i8HlB5SVyf45X_NUo/view?usp=sharing).

- [PDAVIS: Bio-inspired Polarization Event Camera](https://openaccess.thecvf.com/content/CVPR2023W/EventVision/html/Haessig_PDAVIS_Bio-Inspired_Polarization_Event_Camera_CVPRW_2023_paper.html). CVPR-W Proceedings (2023)

- **Center Surround Event Camera (CSDVS)**: Delbruck, T., Li, C., Graca, R. & Mcreynolds, B.,

*[Utility and Feasibility of a Center Surround Event Camera](http://arxiv.org/abs/2202.13076)*

arXiv [cs.CV] (2022) [CSDVS videos](https://sites.google.com/view/csdvs/home)

- Proposed architecture.

# Companies working on Event-based Vision

- [iniVation AG](https://inivation.com/) invents, produces and sells neuromorphic vision sensors (DAVIS, DVExplorer, and others), with a focus on event-based vision for business; supplies the advanced [DV event camera software](https://docs.inivation.com/software/introduction.html).

- [iniLabs AG](https://inilabs.com/) invents neuromorphic technologies for *research*.

- [Samsung](http://www.samsung.com) develops Gen2 and Gen3 dynamic vision sensors and event-based vision solutions.

- [IBM Research](http://www.research.ibm.com/articles/brain-chip.shtml) ([Synapse project](http://www.research.ibm.com/cognitive-computing/brainpower/)) and Samsung partnered to combine the [TrueNorth chip (brain) with a DVS (eye)](https://www.cnet.com/news/samsung-turns-ibms-brain-like-chip-into-a-digital-eye/).

- [Prophesee](http://www.prophesee.ai) (formerly [Chronocam](http://www.chronocam.com/)) is the inventor and supplier of four generations of event-based sensors, including commercial-grade versions, as well as the industry’s largest software suite. The company focuses on Industrial, Mobile-IoT and Automotive applications.

- [Insightness AG](http://www.insightness.com/) built visual systems to give mobile devices spatial awareness. [The Silicon Eye](http://www.insightness.com/?p=361) Technology. Acquired by Sony in 2019 and now part of Sony's Advanced Imager Sensors division.

- [SLAMcore](https://www.slamcore.com/) develops Localisation and mapping solutions for AR/VR, robotics & autonomous vehicles.

- [CelePixel](https://www.celepixel.com) (formerly Hillhouse Technology) offers integrated sensory platforms that incorporate various components and technologies, including a processing chipset and an image sensor (a dynamic vision sensor called CeleX).

- [AIT Austrian Institute of Technology](https://www.ait.ac.at/en/research-fields/new-sensor-technologies/optical-sensor-systems-for-industrial-processes/) sells neuromorphic sensor products.

- [Inspection during production of carton packs](https://www.youtube.com/watch?v=8PZmb2z2bXw&index=39&list=PL659671AC92E70F19)

- [UCOS Universal Counting Sensor](https://www.ait.ac.at/fileadmin/mc/digital_safety_security/downloads/Factsheet_-_People-Counting-Sensor_en.pdf)

- [IVS Industrial Vision Sensor](https://www.ait.ac.at/fileadmin/mc/digital_safety_security/downloads/Factsheet_-_Industrial-Vision-Sensor_en.pdf)

# Neuromorphic Systems

- Serrano-Gotarredona, T., Andreou, A.G., Linares-Barranco, B.,

*[AER Image Filtering Architecture for Vision Processing Systems](https://doi.org/10.1109/81.788808)*,

IEEE Trans. Circuits Syst. I, Fundam. Theory Appl., 46(9):1064-1071, 1999.

- Serrano-Gotarredona, R., Oster, M., Lichtsteiner, P., Linares-Barranco, A., Paz-Vicente, R., Gomez-Rodriguez, F., Riis, H.K., Delbruck, T., Liu, S.-C., Zahnd, S., Whatley, A.M., Douglas, R., Hafliger, P., Jimenez-Moreno, G., Civit, A., Serrano-Gotarredona, T., Acosta-Jimenez, A., Linares-Barranco, B.,

*[AER building blocks for multi-layer multi-chip neuromorphic vision systems](http://papers.nips.cc/paper/2889-aer-building-blocks-for-multi-layer-multi-chip-neuromorphic-vision-systems.pdf)*,

Advances in neural information processing systems, 1217-1224, 2006.

- Liu, S.-C. and Delbruck, T.,

*[Neuromorphic sensory systems](https://doi.org/10.1016/j.conb.2010.03.007)*,

Current Opinion in Neurobiology, 20(3):288-295, 2010.

- Zamarreño-Ramos, C., Linares-Barranco, A., Serrano-Gotarredona, T., Linares-Barranco, B.,

*[Multi-Casting Mesh AER: A Scalable Assembly Approach for Reconfigurable Neuromorphic Structured AER Systems. Application to ConvNets](https://doi.org/10.1109/TBCAS.2012.2195725)*,

IEEE Trans. Biomed. Circuits Syst., 7(1):82-102, 2013.

- Liu, S.-C., Delbruck, T., Indiveri, G., Whatley, A., Douglas, R.,

*[Event-Based Neuromorphic Systems](http://eu.wiley.com/WileyCDA/WileyTitle/productCd-1118927621.html)*,

Wiley. ISBN: 978-1-118-92762-5, 2014.

- Chicca, E., Stefanini, F., Bartolozzi, C., Indiveri, G.,

*[Neuromorphic Electronic Circuits for Building Autonomous Cognitive Systems](http://dx.doi.org/10.1109/JPROC.2014.2313954)*,

Proc. IEEE, 102(9):1367-1388, 2014.

- Vanarse, A., Osseiran, A., Rassau, A.,

*[A Review of Current Neuromorphic Approaches for Vision, Auditory, and Olfactory Sensors](http://dx.doi.org/10.3389/fnins.2016.00115)*,

Front. Neurosci. (2016), 10:115.

- [Liu et al., Signal Process. Mag. 2019](#Liu19msp),

*Event-Driven Sensing for Efficient Perception: Vision and audition algorithms*.

- [Event Cameras Tutorial - Tobi Delbruck, version 4.1](https://youtu.be/D6rv6q9XyWU), Sep. 18, 2020.

- Kirkland, P., Di Caterina, G., Soraghan, J., Matich, G.,

[Neuromorphic technologies for defence and security](https://doi.org/10.1117/12.2575978),

SPIE vol 11540, Emerging Imaging and Sensing Technologies for Security and Defence V; and Advanced Manufacturing Technologies for Micro- and Nanosystems in Security and Defence III; 2020.

# Review / Overview papers

## Sensor designs, Bio-inspiration

- Delbruck, T.,

*[Activity-driven, event-based vision sensors](https://doi.org/10.1109/ISCAS.2010.5537149)*,

IEEE Int. Symp. Circuits and Systems (ISCAS), 2010. [PDF](https://e-lab.github.io/data/papers/ISCAS2010actsens.pdf).

- Posch, C.,

*[Bio-inspired vision](https://doi.org/10.1088/1748-0221/7/01/C01054)*,

J. of Instrumentation, 7 C01054, 2012. Bio-inspired explanation of the DVS and the ATIS.

- Posch, C., Serrano-Gotarredona, T., Linares-Barranco, B., Delbruck, T.,

*[Retinomorphic Event-Based Vision Sensors: Bioinspired Cameras With Spiking Output](https://doi.org/10.1109/JPROC.2014.2346153)*,

Proc. IEEE (2014), 102(10):1470-1484.

- Posch, C.,

*[Bioinspired vision sensing](https://doi.org/10.1002/9783527680863.ch2)*,

Biologically Inspired Computer Vision, Wiley-Blackwell, pp. 11-28, 2015. [book index](http://bicv.github.io/toc/index.html)

- Posch, C., Benosman, R., Etienne-Cummings, R.,

*[How Neuromorphic Image Sensors Steal Tricks From the Human Eye](https://spectrum.ieee.org/biomedical/devices/how-neuromorphic-image-sensors-steal-tricks-from-the-human-eye)*, also published as *[Giving Machines Humanlike Eyes](https://doi.org/10.1109/MSPEC.2015.7335800)*,

IEEE Spectrum, 52(12):44-49, 2015.

- Cho, D., Lee, T.-J.,

*[A Review of Bioinspired Vision Sensors and Their Applications](https://doi.org/10.18494/SAM.2015.1133)*,

Sensors and Materials, 27(6):447-463, 2015. [PDF](https://myukk.org/SM2017/sm_pdf/SM1083.pdf)

- Sandamirskaya, Y., Kaboli, M., Conradt, J., Celikel, T.,

*[Neuromorphic computing hardware and neural architectures for robotics](https://doi.org/10.1126/scirobotics.abl8419)*,

Science Robotics, 7(67):eabl8419, 2022.

## Algorithms, Applications

- Delbruck, T.,

*[Fun with asynchronous vision sensors and processing](https://www.ini.uzh.ch/~tobi/wiki/lib/exe/fetch.php?media=delbruck_funwithasynsensors_2012.pdf)*.

Computer Vision - ECCV 2012. Workshops and Demonstrations. Springer Berlin/Heidelberg, 2012. A position paper and summary of recent accomplishments of the INI Sensors' group.

- Delbruck, T.,

*[Neuromorphic Vision Sensing and Processing (Invited paper)](https://doi.org/10.1109/ESSDERC.2016.7599576)*,

46th Eur. Solid-State Device Research Conference (ESSDERC), Lausanne, 2016, pp. 7-14.

- Lakshmi, A., Chakraborty, A., Thakur, C.S.,

*[Neuromorphic vision: From sensors to event-based algorithms](https://doi.org/10.1002/widm.1310)*,

Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 9(4), 2019.

- [Steffen, L. et al., Front. Neurorobot. 2019](#Steffen19fnbot),

*Neuromorphic Stereo Vision: A Survey of Bio-Inspired Sensors and Algorithms*.

- [Gallego et al., TPAMI 2020](#Gallego20tpami),

*[Event-based Vision: A Survey](http://rpg.ifi.uzh.ch/docs/EventVisionSurvey.pdf)*.

- Chen, G., Cao, H., Conradt, J., Tang, H., Rohrbein, F., Knoll, A.,

[Event-Based Neuromorphic Vision for Autonomous Driving: A Paradigm Shift for Bio-Inspired Visual Sensing and Perception](https://doi.org/10.1109/MSP.2020.2985815),

IEEE Signal Processing Magazine, 37(4):34-49, 2020.

- Chen, G., Wang, F., Li, W., Hong, L., Conradt, J., Chen, J., Zhang, Z., Lu, Y., Knoll, A.,

*[NeuroIV: Neuromorphic Vision Meets Intelligent Vehicle Towards Safe Driving With a New Database and Baseline Evaluations](https://doi.org/10.1109/TITS.2020.3022921)*,

IEEE Trans. Intelligent Transportation Systems (TITS), 2020.

- Tayarani-Najaran, M.-H., Schmuker, M.,

*[Event-Based Sensing and Signal Processing in the Visual, Auditory, and Olfactory Domain: A Review](https://doi.org/10.3389/fncir.2021.610446)*,

Front. Neural Circuits 15:610446, 2021.

- Sun, R., Shi, D., Zhang, Y., Li, R., Li, R.,

*[Data-Driven Technology in Event-Based Vision](https://doi.org/10.1155/2021/6689337)*,

Complexity, vol. 2021, Article ID 6689337.

- Bartolozzi, C., Indiveri, G., Donati, E.,

*[Embodied neuromorphic intelligence](https://doi.org/10.1038/s41467-022-28487-2)*,

Nat. Commun. 13:1024, 2022.

- Zou, XL., Huang, T.J., Wu, S.,

*[Towards a New Paradigm for Brain-inspired Computer Vision](https://doi.org/10.1007/s11633-022-1370-z)*,

Mach. Intell. Res., 19:412-424, 2022.

- Gehrig, D., Scaramuzza, D.,

*[Are High-Resolution Cameras Really Needed?](https://arxiv.org/abs/2203.14672)*,

arXiv, 2022. [YouTube](https://youtu.be/HV9_FhS-f88), [Code](https://uzh-rpg.github.io/eres/).

- Ercan, B., Eker, O., Erdem, A., Erdem, E.,

*[EVREAL: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction](https://openaccess.thecvf.com/content/CVPR2023W/EventVision/papers/Ercan_EVREAL_Towards_a_Comprehensive_Benchmark_and_Analysis_Suite_for_Event-Based_CVPRW_2023_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2023. [PDF](https://openaccess.thecvf.com/content/CVPR2023W/EventVision/papers/Ercan_EVREAL_Towards_a_Comprehensive_Benchmark_and_Analysis_Suite_for_Event-Based_CVPRW_2023_paper.pdf), [Project Page](https://ercanburak.github.io/evreal.html), [Suppl.](https://openaccess.thecvf.com/content/CVPR2023W/EventVision/supplemental/Ercan_EVREAL_Towards_a_CVPRW_2023_supplemental.zip), [Code](https://github.com/ercanburak/EVREAL).

- Tapia, R., Rodríguez-Gómez, J.P., Sanchez-Diaz, J.A., Gañán, F.J., Rodríguez, I.G., Luna-Santamaria, J., Martínez-De Dios, J.R., Ollero, A.,

*[A Comparison Between Framed-Based and Event-Based Cameras for Flapping-Wing Robot Perception](https://doi.org/10.1109/IROS55552.2023.10342500)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2023, pp. 3025-3032. [PDF](https://arxiv.org/pdf/2309.05450), [YouTube](https://www.youtube.com/watch?v=a0X2NGPtY8w).

- Ghosh, S., Gallego, G.,

*[Event-based Stereo Depth Estimation: A Survey](https://arxiv.org/abs/2409.17680)*,

arXiv, 2024.

- Cazzato, D., Bono, F.,

*[An Application-Driven Survey on Event-Based Neuromorphic Computer Vision](https://www.mdpi.com/2078-2489/15/8/472)*,

Information 15.8 (2024): 472.

- AliAkbarpour, H., Moori, A., Khorramdel, J., Blasch, E., Tahri, O.,

*[Emerging Trends and Applications of Neuromorphic Dynamic Vision Sensors: A Survey](https://doi.org/10.1109/SR.2024.3513952)*,

IEEE Sensors Reviews (2024), vol 1, pp. 14-63. [PDF](https://ieeexplore.ieee.org/iel8/10347229/10787061/10795229.pdf)

- Iddrisu, K., Shariff, W., Corcoran, P., O’Connor, N., Lemley, J., Little, S.,

*[Event Camera Based Eye Motion Analysis: A Survey](https://doi.org/10.1109/ACCESS.2024.3462109)*,

IEEE Access, 12:136783-136804 (2024).

# Algorithms

## Feature Detection and Tracking

- Litzenberger, M., Posch, C., Bauer, D., Belbachir, A. N., Schon, P., Kohn, B., Garn, H.,

*[Embedded Vision System for Real-Time Object Tracking using an Asynchronous Transient Vision Sensor](https://doi.org/10.1109/DSPWS.2006.265448)*,

IEEE 12th Digital Signal Proc. Workshop and 4th IEEE Signal Proc. Education Workshop, Teton National Park, WY, 2006, pp. 173-178. [PDF](http://www.belbachir.info/PDF/dsp2006.pdf)

- Litzenberger, M., Kohn, B., Belbachir, A.N., Donath, N., Gritsch, G., Garn, H., Posch, C., Schraml, S.,

*[Estimation of Vehicle Speed Based on Asynchronous Data from a Silicon Retina Optical Sensor](https://doi.org/10.1109/ITSC.2006.1706816)*,

IEEE Intelligent Transportation Systems Conf. (ITSC), 2006, pp. 653-658. [PDF](http://belbachir.info/PDF/itsc2006.pdf)

- Bauer, D., Belbachir, A. N., Donath, N., Gritsch, G., Kohn, B., Litzenberger, M., Posch, C., Schön, P., Schraml, S.,

*[Embedded Vehicle Speed Estimation System Using an Asynchronous Temporal Contrast Vision Sensor](https://link.springer.com/article/10.1155/2007/82174)*,

EURASIP J. Embedded Systems, 2007:082174. [PDF](http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.385.424&rep=rep1&type=pdf)

- Litzenberger, M., Belbachir, N., Schon, P., Posch, C.,

*[Embedded Smart Camera for High Speed Vision](https://doi.org/10.1109/ICDSC.2007.4357509)*,

ACM/IEEE Int. Conf. on Distributed Smart Cameras, 2007. [PDF](http://belbachir.info/PDF/icdsc2007.pdf)

- Ni, Z., Bolopion, A., Agnus, J., Benosman, R., Regnier, S.,

*[Asynchronous event-based visual shape tracking for stable haptic feedback in microrobotics](https://doi.org/10.1109/TRO.2012.2198930)*,

IEEE Trans. Robot. (TRO), 28(5):1081-1089, 2012.

- [Ni, Ph.D. Thesis, 2013](#Ni13PhD),

*Asynchronous Event Based Vision: Algorithms and Applications to Microrobotics*.

- Ni, Z., Ieng, S. H., Posch, C., Regnier, S., Benosman, R.,

*[Visual Tracking Using Neuromorphic Asynchronous Event-Based Cameras](https://doi.org/10.1162/NECO_a_00720)*,

Neural Computation (2015), 27(4):925-953. [YouTube](https://youtu.be/eQ7reEN9PrA)

- Piatkowska, E., Belbachir, A. N., Schraml, S., Gelautz, M.,

*[Spatiotemporal multiple persons tracking using Dynamic Vision Sensor](https://doi.org/10.1109/CVPRW.2012.6238892)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2012, pp. 35-40. [PDF](https://publik.tuwien.ac.at/files/PubDat_209369.pdf)

- Lagorce, X., Ieng, S.-H., Clady, X., Pfeiffer, M., Benosman, R.,

*[Spatiotemporal features for asynchronous event-based data](http://dx.doi.org/10.3389/fnins.2015.00046)*,

Front. Neurosci. (2015), 9:46.

- Lagorce, X., Ieng, S. H., Benosman, R.,

*[Event-based features for robotic vision](http://dx.doi.org/10.1109/IROS.2013.6696960)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2013, pp. 4214-4219.

- Saner, D., Wang, O., Heinzle, S., Pritch, Y., Smolic, A., Sorkine-Hornung, A., Gross, M.,

*[High-Speed Object Tracking Using an Asynchronous Temporal Contrast Sensor](http://dx.doi.org/10.2312/vmv.20141280)*,

Int. Symp. Vision, Modeling and Visualization (VMV), 2014. [PDF](http://ahornung.net/files/pub/2014-vmv-siliconretina-saner.pdf)

- Lagorce, X., Meyer, C., Ieng, S. H., Filliat, D., Benosman, R.,

*[Asynchronous Event-Based Multikernel Algorithm for High-Speed Visual Features Tracking](https://doi.org/10.1109/TNNLS.2014.2352401)*,

IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), 26(8):1710-1720, 2015. [YouTube](https://youtu.be/ze8Wgou9yA4)

- Lagorce, X., Meyer, C., Ieng, S. H., Filliat, D., Benosman, R.,

*[Live demonstration: Neuromorphic event-based multi-kernel algorithm for high speed visual features tracking](https://doi.org/10.1109/BioCAS.2014.6981681)*,

IEEE Biomedical Circuits and Systems Conference (BioCAS), 2014, p. 178.

- Reverter Valeiras, D., Lagorce, X., Clady, X., Bartolozzi, C., Ieng, S., Benosman, R.,

*[An Asynchronous Neuromorphic Event-Driven Visual Part-Based Shape Tracking](https://doi.org/10.1109/TNNLS.2015.2401834)*,

IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), 26(12):3045-3059, 2015. [YouTube](https://youtu.be/XeQYNYESJtQ)

- Linares-Barranco, A., Gómez-Rodríguez, F., Villanueva, V., Longinotti, L., Delbrück, T.,

*[A USB3.0 FPGA event-based filtering and tracking framework for dynamic vision sensors](https://doi.org/10.1109/ISCAS.2015.7169172)*,

IEEE Int. Symp. Circuits and Systems (ISCAS), 2015.

- Leow, H. S., Nikolic, K.,

*[Machine vision using combined frame-based and event-based vision sensor](https://doi.org/10.1109/ISCAS.2015.7168731)*,

IEEE Int. Symp. Circuits and Systems (ISCAS), 2015.

- Liu, H., Moeys, D. P., Das, G., Neil, D., Liu, S.-C., Delbruck, T.,

*[Combined frame- and event-based detection and tracking](https://doi.org/10.1109/ISCAS.2016.7539103)*,

IEEE Int. Symp. Circuits and Systems (ISCAS), 2016.

- Tedaldi, D., Gallego, G., Mueggler, E., Scaramuzza, D.,

*[Feature detection and tracking with the dynamic and active-pixel vision sensor (DAVIS)](https://doi.org/10.1109/EBCCSP.2016.7605086)*,

IEEE Int. Conf. Event-Based Control Comm. and Signal Proc. (EBCCSP), 2016. [PDF](http://rpg.ifi.uzh.ch/docs/EBCCSP16_Tedaldi.pdf), [YouTube](https://www.youtube.com/watch?v=nglfEkiK308)

- [Kueng et al., IROS 2016](#Kueng16iros),

*Low-Latency Visual Odometry using Event-based Feature Tracks*.

- Braendli, C., Strubel, J., Keller, S., Scaramuzza, D., Delbruck, T.,

*[ELiSeD - An Event-Based Line Segment Detector](https://doi.org/10.1109/EBCCSP.2016.7605244)*,

Int. Conf. on Event-Based Control Comm. and Signal Proc. (EBCCSP), 2016. [PDF](http://rpg.ifi.uzh.ch/docs/EBCCSP16_Braendli.pdf)

- Glover, A. and Bartolozzi, C.,

*[Event-driven ball detection and gaze fixation in clutter](https://doi.org/10.1109/IROS.2016.7759345)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2016, pp. 2203-2208. [YouTube](https://youtu.be/n6qTkw5U7YI), [Code](https://github.com/robotology/event-driven)

- Glover, A. and Bartolozzi, C.,

*[Robust Visual Tracking with a Freely-moving Event Camera](https://doi.org/10.1109/IROS.2017.8206226)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2017. [YouTube](https://youtu.be/xS-7xYRYSLc), [Code](https://github.com/robotology/event-driven)

- Glover, A., Stokes, A.B., Furber, S., Bartolozzi, C.,

*[ATIS + SpiNNaker: a Fully Event-based Visual Tracking Demonstration](https://arxiv.org/pdf/1912.01320)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems Workshops (IROSW), 2018. Workshop on Unconventional Sensing and Processing for Robotic Visual Perception.

- Clady, X., Maro, J.-M., Barré, S., Benosman, R. B.,

*[A Motion-Based Feature for Event-Based Pattern Recognition](https://doi.org/10.3389/fnins.2016.00594)*,

Front. Neurosci. (2017), 10:594.

- Zhu, A., Atanasov, N., Daniilidis, K.,

*[Event-based Feature Tracking with Probabilistic Data Association](https://doi.org/10.1109/ICRA.2017.7989517)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2017. [PDF](https://fling.seas.upenn.edu/~alexzhu/dynamic/wp-content/uploads/2017/07/EventBasedFeatureTrackingICRA2017.pdf), [YouTube](https://youtu.be/m93XCqAS6Fc), [Code](https://github.com/daniilidis-group/event_feature_tracking)

- Barrios-Avilés, J., Iakymchuk, T., Samaniego, J., Medus, L.D., Rosado-Muñoz, A.,

*[Movement Detection with Event-Based Cameras: Comparison with Frame-Based Cameras in Robot Object Tracking Using Powerlink Communication](https://doi.org/10.3390/electronics7110304)*,

Electronics 2018, 7, 304. [PDF pre-print](https://arxiv.org/abs/1707.07188)

- Li, J., Shi, F., Liu, W., Zou, D., Wang, Q., Park, P.K.J., Ryu, H.,

*[Adaptive Temporal Pooling for Object Detection using Dynamic Vision Sensor](https://www.dropbox.com/s/m77i7cqqy7xbg51/0099.pdf?dl=1)*,

British Machine Vision Conf. (BMVC), 2017.

- Peng, X., Zhao, B., Yan, R., Tang, H., Yi, Z.,

*[Bag of Events: An Efficient Probability-Based Feature Extraction Method for AER Image Sensors](http://dx.doi.org/10.1109/TNNLS.2016.2536741)*,

IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), 28(4):791-803, 2017.

- Ramesh, B., Yang, H., Orchard, G., Le Thi, N.A., Xiang, C.,

*[DART: Distribution Aware Retinal Transform for Event-based Cameras](https://doi.org/10.1109/TPAMI.2019.2919301)*,

IEEE Trans. Pattern Anal. Machine Intell. (TPAMI), 2019. [PDF](https://arxiv.org/pdf/1710.10800.pdf)

- Gehrig, D., Rebecq, H., Gallego, G., Scaramuzza, D.,

*[EKLT: Asynchronous, Photometric Feature Tracking using Events and Frames](http://rpg.ifi.uzh.ch/docs/IJCV19_Gehrig.pdf)*,

Int. J. Computer Vision (IJCV), 2019. [YouTube](https://youtu.be/ZyD1YPW1h4U), [Tracking code](https://github.com/uzh-rpg/rpg_eklt), [Evaluation code](https://github.com/uzh-rpg/rpg_feature_tracking_analysis)

- Gehrig, D., Rebecq, H., Gallego, G., Scaramuzza, D.,

*[Asynchronous, Photometric Feature Tracking using Events and Frames](http://rpg.ifi.uzh.ch/docs/ECCV18_Gehrig.pdf)*,

European Conf. Computer Vision (ECCV), 2018. [Poster](http://rpg.ifi.uzh.ch/docs/ECCV18_Gehrig_poster.pdf), [YouTube](https://youtu.be/A7UfeUnG6c4), [Oral presentation](https://youtu.be/7EvY8SxdLl8), [Tracking code](https://github.com/uzh-rpg/rpg_eklt), [Evaluation code](https://github.com/uzh-rpg/rpg_feature_tracking_analysis)

- Everding, L., Conradt, J.,

*[Low-Latency Line Tracking Using Event-Based Dynamic Vision Sensors](https://doi.org/10.3389/fnbot.2018.00004)*,

Front. Neurorobot. 12:4, 2018. [Videos](http://www.frontiersin.org/articles/10.3389/fnbot.2018.00004/full#supplementary-material)

- Linares-Barranco, A., Liu, H., Rios-Navarro, A., Gomez-Rodriguez, F., Moeys, D., Delbruck, T.,

*[Approaching Retinal Ganglion Cell Modeling and FPGA Implementation for Robotics](https://doi.org/10.3390/e20060475)*,

Entropy 2018, 20(6), 475.

- Mitrokhin, A., Fermüller, C., Parameshwara, C., Aloimonos, Y.,

*[Event-based Moving Object Detection and Tracking](https://doi.org/10.1109/IROS.2018.8593805)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2018. [PDF](https://arxiv.org/pdf/1803.04523.pdf), [YouTube](https://youtu.be/UCAJi0ZFaZ8), [Project page and Dataset](http://prg.cs.umd.edu/BetterFlow.html)

- Iacono, M., Weber, S., Glover, A., Bartolozzi, C.,

*[Towards Event-Driven Object Detection with Off-The-Shelf Deep Learning](https://doi.org/10.1109/IROS.2018.8594119)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2018.

- Ramesh, B., Zhang, S., Lee, Z.-W., Gao, Z., Orchard, G., Xiang, C.,

*[Long-term object tracking with a moving event camera](http://bmvc2018.org/contents/papers/0814.pdf)*,

British Machine Vision Conf. (BMVC), 2018. [Video](http://bmvc2018.org/contents/supplementary/video/0814_video.mp4)

- Ramesh, B., Zhang, S., Yang, H., Ussa, A., Ong, M., Orchard, G., Xiang, C.,

*[e-TLD: Event-based Framework for Dynamic Object Tracking](https://arxiv.org/pdf/2009.00855.pdf)*,

arXiv, 2020.

- Dardelet, L., Ieng, S.-H., Benosman, R.,

*[Event-Based Features Selection and Tracking from Intertwined Estimation of Velocity and Generative Contours](https://arxiv.org/pdf/1811.07839)*,

arXiv:1811.07839, 2018.

- Wu, J., Zhang, K., Zhang, Y., Xie, X., Shi, G.,

*[High-Speed Object Tracking with Dynamic Vision Sensor](https://doi.org/10.1007/978-981-13-6553-9_18)*,

China High Resolution Earth Observation Conference (CHREOC), 2018.

- Huang, J., Wang, S., Guo, M., Chen, S.,

*[Event-Guided Structured Output Tracking of Fast-Moving Objects Using a CeleX Sensor](https://doi.org/10.1109/TCSVT.2018.2841516)*,

IEEE Trans. Circuits Syst. Video Technol. (TCSVT), 28(9):2413-2417, 2018.

- Renner, A., Evanusa, M., Sandamirskaya, Y.,

*[Event-based attention and tracking on neuromorphic hardware](http://openaccess.thecvf.com/content_CVPRW_2019/papers/EventVision/Renner_Event-Based_Attention_and_Tracking_on_Neuromorphic_Hardware_CVPRW_2019_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2019. [Video pitch](https://youtu.be/eWBEJOr056E)

- Foster, B.J., Ye, D.H., Bouman, C.A.,

*[Multi-target tracking with an event-based vision sensor and a partial-update GMPHD filter](https://www.ingentaconnect.com/contentone/ist/ei/2019/00002019/00000013/art00002?crawler=true&mimetype=application/pdf)*,

IS&T International Symposium on Electronic Imaging 2019. Computational Imaging XVII.

- Alzugaray, I., Chli, M.,

*[Asynchronous Multi-Hypothesis Tracking of Features with Event Cameras](https://doi.org/10.3929/ethz-b-000360434)*,

IEEE Int. Conf. 3D Vision (3DV), 2019. [PDF](https://doi.org/10.3929/ethz-b-000360434), [Code](https://github.com/ialzugaray/haste), [YouTube](https://youtu.be/eguV_AIbteU)

- Linares-Barranco, A., Perez-Pena, F., Moeys, D.P., Gomez-Rodriguez, F., Jimenez-Moreno, G., Delbruck, T.,

*[Low Latency Event-based Filtering and Feature Extraction for Dynamic Vision Sensors in Real-Time FPGA Applications](https://doi.org/10.1109/ACCESS.2019.2941282)*,

IEEE Access, 7:134926-134942, 2019. [Code](https://github.com/RTC-research-group/EDIP_library)

- Li, K., Shi, D., Zhang, Y., Li, R., Qin, W., Li, R.,

*[Feature Tracking Based on Line Segments With the Dynamic and Active-Pixel Vision Sensor (DAVIS)](https://doi.org/10.1109/ACCESS.2019.2933594)*,

IEEE Access, 7:110874-110883, 2019.

- Bolten, T., Pohle-Fröhlich, R., Tönnies, K.D.,

*[Application of Hierarchical Clustering for Object Tracking with a Dynamic Vision Sensor](https://doi.org/10.1007/978-3-030-22750-0_13)*,

Int. Conf. Computational Science (ICCS) 2019. [PDF](https://www.hs-niederrhein.de/fileadmin/dateien/Institute_und_Kompetenzzentren/iPattern/selfarchived/bolten-iccs-2019.pdf)

- Chen, H., Wu, Q., Liang, Y., Gao, X., Wang, H.,

*[Asynchronous Tracking-by-Detection on Adaptive Time Surfaces for Event-based Object Tracking](https://doi.org/10.1145/3343031.3350975)*,

ACM Int. Conf. on Multimedia (MM), 2019.

- Reverter Valeiras, D., Clady, X., Ieng, S.-H., Benosman, R.,

*[Event-Based Line Fitting and Segment Detection Using a Neuromorphic Visual Sensor](https://doi.org/10.1109/TNNLS.2018.2807983)*,

IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), 30(4):1218-1230, 2019.

- Li, H., Shi, L.,

*[Robust Event-Based Object Tracking Combining Correlation Filter and CNN Representation](https://doi.org/10.3389/fnbot.2019.00082)*,

Front. Neurorobot. 13:82, 2019. [Dataset](https://figshare.com/s/70565903453eef7c3965)

- Chen, H., Suter, D., Wu, Q., Wang, H.,

*[End-to-end Learning of Object Motion Estimation from Retinal Events for Event-based Object Tracking](https://doi.org/10.1609/aaai.v34i07.6625)*,

AAAI Conf. Artificial Intelligence, 2020. [PDF](https://www.aaai.org/Papers/AAAI/2020GB/AAAI-ChenH.2586.pdf), [PDF](https://arxiv.org/pdf/2002.05911).

- Monforte, M., Arriandiaga, A., Glover, A., Bartolozzi, C.,

*[Exploiting Event Cameras for Spatio-Temporal Prediction of Fast-Changing Trajectories](https://arxiv.org/pdf/2001.01248)*,

IEEE Int. Conf. Artificial Intelligence Circuits and Systems (AICAS), 2020.

- Sengupta, J. P., Kubendran, R., Neftci, E., Andreou, A. G.,

*[High-Speed, Real-Time, Spike-Based Object Tracking and Path Prediction on Google Edge TPU](https://par.nsf.gov/servlets/purl/10212648)*,

IEEE Int. Conf. Artificial Intelligence Circuits and Systems (AICAS), 2020, pp. 134-135.

- Seok, H., Lim, J.,

*[Robust Feature Tracking in DVS Event Stream using Bezier Mapping](http://openaccess.thecvf.com/content_WACV_2020/papers/Seok_Robust_Feature_Tracking_in_DVS_Event_Stream_using_Bezier_Mapping_WACV_2020_paper.pdf)*,

IEEE Winter Conf. Applications of Computer Vision (WACV), 2020. [YouTube](https://youtu.be/mskBdueW9Hc)

- Xu, L., Xu, W., Golyanik, V., Habermann, M., Fang, L., Theobalt, C.,

*[EventCap: Monocular 3D Capture of High-Speed Human Motions using an Event Camera](https://arxiv.org/pdf/1908.11505)*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2020. [ZDNet news](https://www.zdnet.com/article/high-speed-motion-capture-using-a-single-event-camera/)

- Rodríguez-Gómez, J.P., Gómez Eguíluz, A., Martínez-de Dios, J.R., Ollero, A.,

*[Asynchronous event-based clustering and tracking for intrusion monitoring](https://ras.papercept.net/proceedings/ICRA20/1523.pdf)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2020. [PDF](https://zenodo.org/record/3816654#.XxHQ9hFS9hE).

- [Boettiger, J. P., MSc 2020](#Boettiger20MSc),

*A Comparative Evaluation of the Detection and Tracking Capability Between Novel Event-Based and Conventional Frame-Based Sensors*.

- Sarmadi, H., Muñoz-Salinas, R., Olivares-Mendez, M. A., Medina-Carnicer, R.,

*[Detection of Binary Square Fiducial Markers Using an Event Camera](https://arxiv.org/pdf/2012.06516)*,

arXiv, 2020.

- Alzugaray, I., Chli, M.,

*[HASTE: multi-Hypothesis Asynchronous Speeded-up Tracking of Events](https://www.bmvc2020-conference.com/conference/papers/paper_0744.html)*,

British Machine Vision Conf. (BMVC), 2020. [PDF](https://www.bmvc2020-conference.com/assets/papers/0744.pdf), [Suppl. Mat.](https://www.bmvc2020-conference.com/assets/supp/0744_supp.zip), [Code](https://github.com/ialzugaray/haste), [Presentation](https://www.bmvc2020-conference.com/conference/papers/paper_0744.html), [YouTube](https://youtu.be/6DZxIzrVLcI)

- Liu, Z., Fu, Y.,

*[e-ACJ: Accurate Junction Extraction For Event Cameras](https://arxiv.org/pdf/2101.11251)*,

arXiv, 2021.

- Dong, Y., Zhang, T.,

*[Standard and Event Cameras Fusion for Feature Tracking](https://dl.acm.org/doi/10.1145/3459066.3459075)*,

Int. Conf. on Machine Vision and Applications (ICMVA), 2021. [Code](https://github.com/LarryDong/FusionTracking)

- Mondal, A., Shashant, R., Giraldo, J. H., Bouwmans, T., Chowdhury, A. S.,

*[Moving Object Detection for Event-based Vision using Graph Spectral Clustering](https://openaccess.thecvf.com/content/ICCV2021W/GSP-CV/papers/Mondal_Moving_Object_Detection_for_Event-Based_Vision_Using_Graph_Spectral_Clustering_ICCVW_2021_paper.pdf)*,

IEEE Int. Conf. Computer Vision Workshop (ICCVW), 2021. [YouTube](https://youtu.be/ST6Z-3SlNS4), [Code](https://github.com/anindya2001/GSCEventMOD).

- Wang, X., Li, J., Zhu, L., Zhang, Z., Chen, Z., Li, X., Wang, Y., Tian, Y., Wu, F.,

*[VisEvent: Reliable Object Tracking via Collaboration of Frame and Event Flows](https://arxiv.org/abs/2108.05015)*,

arXiv, 2021. [Code](https://github.com/wangxiao5791509/VisEvent_SOT_Benchmark)

- [Alzugaray, I., Ph.D. Thesis, 2022](#Alzugaray22PhD),

*Event-driven Feature Detection and Tracking for Visual SLAM*.

- Zhang, J., Zhao, K., Dong, B., Fu, Y., Wang, Y., Yang, X., Yin, B.,

*[Multi-domain collaborative feature representation for robust visual object tracking](https://doi.org/10.1007/s00371-021-02237-9)*,

The Visual Computer, 2021. [PDF](https://link.springer.com/article/10.1007/s00371-021-02237-9), [Project](https://zhangjiqing.com/publication/multi-domain-collaborative-feature-representation-for-robust-visual-object-tracking-the-visual-computer-2021-proc-cgi-2021-/).

- Zhang, J., Yang, X., Fu, Y., Wei, X., Yin, B., Dong, B.,

*[Object Tracking by Jointly Exploiting Frame and Event Domain](https://openaccess.thecvf.com/content/ICCV2021/papers/Zhang_Object_Tracking_by_Jointly_Exploiting_Frame_and_Event_Domain_ICCV_2021_paper.pdf)*,

IEEE Int. Conf. Computer Vision (ICCV), 2021. [Project](https://zhangjiqing.com/publication/iccv21_fe108_tracking/), [PDF](https://arxiv.org/abs/2109.09052), [Code](https://github.com/Jee-King/ICCV2021_Event_Frame_Tracking), [Dataset](https://zhangjiqing.com/dataset/).

- Dietsche, A., Cioffi, G., Hidalgo-Carrio, J., Scaramuzza, D.,

*[Powerline Tracking with Event Cameras](http://dx.doi.org/10.1109/IROS51168.2021.9636824)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2021. [PDF](https://rpg.ifi.uzh.ch/docs/IROS21_Dietsche.pdf), [Dataset](https://download.ifi.uzh.ch/rpg/powerline_tracking_dataset/), [YouTube](https://www.youtube.com/watch?v=KnBJqed5qDI), [Code](https://github.com/uzh-rpg/line_tracking_with_event_cameras).

- Li, H., Stueckler, J.,

*[Tracking 6-DoF Object Motion from Events and Frames](https://arxiv.org/pdf/2103.15568.pdf)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2021.

- Zhang, J., Dong, B., Zhang, H., Ding, J., Heide, F., Yin, B., Yang, X.,

*[Spiking Transformers for Event-based Single Object Tracking](https://openaccess.thecvf.com/content/CVPR2022/papers/Zhang_Spiking_Transformers_for_Event-Based_Single_Object_Tracking_CVPR_2022_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2022. [Project](https://zhangjiqing.com/publication/stnet/), [PDF](https://openaccess.thecvf.com/content/CVPR2022/papers/Zhang_Spiking_Transformers_for_Event-Based_Single_Object_Tracking_CVPR_2022_paper.pdf), [Code](https://github.com/Jee-King/CVPR2022_STNet).

- [Gao et al., FPGA, 2022](#Gao22FPGA),

*REMOT: A Hardware-Software Architecture for Attention-Guided Multi-Object Tracking with Dynamic Vision Sensors on FPGAs*.

- El Shair, Z., Rawashdeh, S.A.,

*[High-Temporal-Resolution Object Detection and Tracking using Images and Events](https://doi.org/10.3390/jimaging8080210)*,

Journal of Imaging, 2022. [PDF](https://www.mdpi.com/2313-433X/8/8/210/pdf), [Dataset](http://sar-lab.net/event-based-vehicle-detection-and-tracking-dataset/).

- Hu, S., Kim, Y., Lim, H., Lee, A., Myung, H.,

*[eCDT: Event Clustering for Simultaneous Feature Detection and Tracking](https://doi.org/10.1109/IROS47612.2022.9981451)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2022. [YouTube](https://www.youtube.com/watch?v=eS-3PP-8sek&ab_channel=KAISTUrbanRoboticsLab)

- Zhu, Z., Hou, J., Lyu, X.,

*[Learning Graph-embedded Key-event Back-tracing for Object Tracking in Event Clouds](https://openreview.net/pdf?id=hTxYJAKY85)*,

Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS), 2022. [PDF](https://openreview.net/pdf?id=hTxYJAKY85), [Code](https://github.com/ZHU-Zhiyu/Event-tracking).

- El Shair, Z., Rawashdeh, S.A.,

*[High-temporal-resolution event-based vehicle detection and tracking](https://doi.org/10.1117/1.OE.62.3.031209)*,

Optical Engineering, 2022. [Dataset](http://sar-lab.net/event-based-vehicle-detection-and-tracking-dataset/).

- Guillen-Garcia, J., Palacios-Alonso, D., Cabello, E., Conde, C.,

*[Unsupervised adaptive multi-object tracking-by-clustering algorithm with a bio-inspired system](https://doi.org/10.1109/ACCESS.2022.3154895)*,

IEEE Access, 2022.

- Messikommer, N., Fang, C., Gehrig, M., Scaramuzza, D.,

*[Data-driven Feature Tracking for Event Cameras](https://arxiv.org/abs/2211.12826)*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2023. [PDF](https://rpg.ifi.uzh.ch/docs/CVPR23_Messikommer.pdf), [YouTube](https://youtu.be/dtkXvNXcWRY), [Code](https://github.com/uzh-rpg/deep_ev_tracker).

- Pedersen, J., Singhal, R., Conradt, J.,

*[Translation and Scale Invariance for Event-Based Object Tracking](https://dl.acm.org/doi/10.1145/3584954.3584996)*,

Proc. Annual Neuro-Inspired Computational Elements Conf. (NICE), 2023, pp. 86-91.

- Zhu, Z., Hou, J., Wu, D.O.,

*[Cross-modal Orthogonal High-rank Augmentation for RGB-Event Transformer-trackers](https://arxiv.org/abs/2307.04129)*,

IEEE Int. Conf. Computer Vision (ICCV), 2023. [Code](https://github.com/ZHU-Zhiyu/High-Rank_RGB-Event_Tracker).

- Nagaraj, M., Liyanagedera, C.M., Roy, K.,

*[DOTIE - Detecting Objects through Temporal Isolation of Events using a Spiking Architecture](https://ieeexplore.ieee.org/abstract/document/10161164)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2023. [Arxiv](https://arxiv.org/abs/2210.00975), [CVPR 2023 workshop](https://tub-rip.github.io/eventvision2023/papers/2023CVPRW_Live_Demonstration_Real-time_Event-based_Speed_Detection_using_Spiking_Neural_Networks.pdf), [Code](https://github.com/manishnagaraj/DOTIE).

- [Gao et al., ICCV 2023](#Gao23iccv), *A 5-Point Minimal Solver for Event Camera Relative Motion Estimation*.

- [Gao et al., CVPR 2024](#Gao24cvpr), *An N-Point Linear Solver for Line and Motion Estimation with Event Cameras*.

- Li, S., Zhou, Z., Xue, Z., Li, Y., Du, S., Gao, Y.,

*3D Feature Tracking via Event Camera*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2024. [Code](https://github.com/lisiqi19971013/E-3DTrack), [Dataset](https://github.com/lisiqi19971013/event-based-datasets).

- Kang, Y., Caron, G., Ishikawa, R., Escande, A., Chappellet, K., Sagawa, R., Oishi, T.,

*[Direct 3D model-based object tracking with event camera by motion interpolation](https://www.cvl.iis.u-tokyo.ac.jp/~kyf/ICRA2024/BIAM.pdf)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2024. [Dataset](https://www.cvl.iis.u-tokyo.ac.jp/~kyf/ICRA2024/).

- Wang, Z., Molloy, T., van Goor, P., Mahony, R.,

*[Asynchronous Blob Tracker for Event Cameras](https://doi.org/10.1109/TRO.2024.3454410)*,

IEEE Trans. Robot. (TRO), 2024. [PDF](https://arxiv.org/pdf/2307.10593.pdf), [Video](https://www.youtube.com/watch?v=L_wJjhcToOU), [Project page](https://github.com/ziweiWWANG/AEB-Tracker).

- Ikura, M., Le Gentil, C., Müller, M. G., Schuler, F., Yamashita, A., Stürzl, W.,

*[RATE: Real-time Asynchronous Feature Tracking with Event Cameras](https://doi.org/10.1109/IROS58592.2024.10802050)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2024. [PDF](https://www.robot.t.u-tokyo.ac.jp/~yamashita/paper/B/B320Final.pdf), [Code](https://github.com/mikihiroikura/RATE).

- Ikura, M., Glover, A., Mizuno, M., Bartolozzi, C.,

*[Lattice-allocated Real-time Line Segment Feature Detection and Tracking Using Only an Event-based Camera](https://openaccess.thecvf.com/content/ICCV2025W/NeVi/html/Ikura_Lattice-allocated_Real-time_Line_Segment_Feature_Detection_and_Tracking_Using_Only_ICCVW_2025_paper.html)*,

IEEE/CVF Int. Conf. Computer Vision (ICCV) Workshop on Neuromorphic Vision (NeVi), 2025. [PDF](https://arxiv.org/pdf/2510.06829), [Code](https://github.com/event-driven-robotics/RT-EvLDT), [Dataset](https://zenodo.org/records/17299174)

- Burkhardt, Y., Schaefer, S., Leutenegger, S.,

*[SuperEvent: Cross-Modal Learning of Event-based Keypoint Detection for SLAM](https://openaccess.thecvf.com/content/ICCV2025/html/Burkhardt_SuperEvent_Cross-Modal_Learning_of_Event-based_Keypoint_Detection_for_SLAM_ICCV_2025_paper.html)*,

IEEE/CVF Int. Conf. Computer Vision (ICCV), 2025. [PDF](https://arxiv.org/pdf/2504.00139), [YouTube](https://youtu.be/YWBr8oChfDE?si=DnR1gnQ-MSbFl7Ru), [Code](https://github.com/ethz-mrl/SuperEvent), [Project page](https://ethz-mrl.github.io/SuperEvent/)

### Corner Detection and Tracking

- Clady, X., Ieng, S.-H., Benosman, R.,

*[Asynchronous event-based corner detection and matching](https://doi.org/10.1016/j.neunet.2015.02.013)*,

Neural Networks (2015), 66:91-106.

- Vasco, V., Glover, A., Bartolozzi, C.,

*[Fast event-based Harris corner detection exploiting the advantages of event-driven cameras](https://doi.org/10.1109/IROS.2016.7759610)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2016, pp. 4144-4149. [YouTube](https://youtu.be/YkI7AfBDBKE), [Code](https://github.com/robotology/event-driven)

- Mueggler, E., Bartolozzi, C., Scaramuzza, D.,

*[Fast Event-based Corner Detection](http://rpg.ifi.uzh.ch/docs/BMVC17_Mueggler.pdf)*,

British Machine Vision Conf. (BMVC), 2017. [YouTube](https://youtu.be/tgvM4ELesgI), [Code](https://github.com/uzh-rpg/rpg_corner_events)

- Liu, H., Kao, W.-T., Delbruck, T.,

*[Live Demonstration: A Real-time Event-based Fast Corner Detection Demo based on FPGA](http://openaccess.thecvf.com/content_CVPRW_2019/papers/EventVision/Liu_Live_Demonstration_A_Real-Time_Event-Based_Fast_Corner_Detection_Demo_Based_CVPRW_2019_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2019.

- Standalone Rust implementation, [Code](https://github.com/ac-freeman/dvs-fast-corners)

- Alzugaray, I., Chli, M.,

*[Asynchronous Corner Detection and Tracking for Event Cameras in Real Time](http://dx.doi.org/10.1109/LRA.2018.2849882)*,

IEEE Robotics and Automation Letters (RA-L), 3(4):3177-3184, Oct. 2018. [PDF](https://doi.org/10.3929/ethz-b-000277131), [YouTube](https://youtu.be/bKUAZ7IQcf0), [Code](https://github.com/ialzugaray/arc_star_ros).

- Alzugaray, I., Chli, M.,

*[ACE: An Efficient Asynchronous Corner Tracker for Event Cameras](https://doi.org/10.1109/3DV.2018.00080)*,

IEEE Int. Conf. 3D Vision (3DV), 2018. [PDF](https://doi.org/10.3929/ethz-b-000291763), [YouTube](https://youtu.be/I31yQqmCsfs)

- Scheerlinck, C., Barnes, N., Mahony, R.,

*[Asynchronous Spatial Image Convolutions for Event Cameras](https://doi.org/10.1109/LRA.2019.2893427)*,

IEEE Robotics and Automation Letters (RA-L), 4(2):816-822, Apr. 2019. [PDF](https://cedric-scheerlinck.github.io/files/2018_event_convolutions.pdf), [Website](https://cedric-scheerlinck.github.io/2018_event_convolutions)

- Manderscheid, J., Sironi, A., Bourdis, N., Migliore, D., Lepetit, V.,

*[Speed Invariant Time Surface for Learning to Detect Corner Points with Event-Based Cameras](http://openaccess.thecvf.com/content_CVPR_2019/html/Manderscheid_Speed_Invariant_Time_Surface_for_Learning_to_Detect_Corner_Points_CVPR_2019_paper.html)*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2019. [PDF](https://arxiv.org/pdf/1903.11332)

- Li, R., Shi, D., Zhang, Y., Li, K., Li, R.,

*[FA-Harris: A Fast and Asynchronous Corner Detector for Event Cameras](https://doi.org/10.1109/IROS40897.2019.8968491)*,

IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2019. [PDF](https://arxiv.org/pdf/1906.10925)

- Mohamed, S. A. S., Yasin, J. N., Haghbayan, M.-H., Miele, A., Heikkonen, J., Tenhunen, H., Plosila, J.,

*[Dynamic Resource-aware Corner Detection for Bio-inspired Vision Sensors](https://arxiv.org/pdf/2010.15507)*,

Int. Conf. Pattern Recognition (ICPR), 2020.

- Mohamed, S. A. S., Yasin, J. N., Haghbayan, M.-H., Miele, A., Heikkonen, J., Tenhunen, H., Plosila, J.,

*[Asynchronous Corner Tracking Algorithm based on Lifetime of Events for DAVIS Cameras](https://arxiv.org/pdf/2010.15510)*,

Int. Symposium on Visual Computing (ISVC), 2020.

- Yılmaz, Ö., Simon-Chane, C., Histace, A.,

*[Evaluation of Event-Based Corner Detectors](https://doi.org/10.3390/jimaging7020025)*,

J. Imaging, 2021.

- Chiberre, P., Perot, E., Sironi, A., Lepetit, V.,

*[Detecting Stable Keypoints From Events Through Image Gradient Prediction](https://openaccess.thecvf.com/content/CVPR2021W/EventVision/papers/Chiberre_Detecting_Stable_Keypoints_From_Events_Through_Image_Gradient_Prediction_CVPRW_2021_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2021. [YouTube](https://youtu.be/Rrkwxp8J18c).

- Chui, J., Klenk, S., Cremers, D.,

*[Event-Based Feature Tracking in Continuous Time with Sliding Window Optimization](https://arxiv.org/pdf/2107.04536)*,

arXiv, 2021.

- Glover, A., Dinale, A., De Souza Rosa, L., Bamford, S., Bartolozzi, C.,

*[luvharris: A practical corner detector for event-cameras](https://doi.org/10.1109/TPAMI.2021.3135635)*,

IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI), 2021. [Code](https://github.com/robotology/event-driven)

- Sengupta, J. P., Villemur, M., Andreou, A. G.,

*[Efficient, event-driven feature extraction and unsupervised object tracking for embedded applications](https://doi.org/10.1109/CISS50987.2021.9400234)*,

55th Annual Conf. on Information Sciences and Systems (CISS), 2021.

- [Alzugaray, I., Ph.D. Thesis, 2022](#Alzugaray22PhD),

*Event-driven Feature Detection and Tracking for Visual SLAM*.

- Gava, L., Monforte, M., Iacono, M., Bartolozzi, C., Glover, A.,

*[Puck: Parallel surface and convolution-kernel tracking for event-based cameras](https://arxiv.org/pdf/2205.07657)*,

arXiv, 2022. [Code](https://github.com/lunagava/study-air-hockey/tree/master)

- Freeman, A., Mayer-Patel, K., Singh, M.,

*[Accelerated Event-Based Feature Detection and Compression for Surveillance Video Systems](https://doi.org/10.1145/3625468.3647618)*,

ACM Multimedia Systems (MMSys), 2024. [PDF](https://arxiv.org/pdf/2312.08213.pdf), [Code](https://github.com/ac-freeman/adder-codec-rs).

- Sun, P. S. V., Glover, A., Bartolozzi, C., Basu, A.,

*[Memory Efficient Corner Detection for Event-Driven Dynamic Vision Sensors](https://ieeexplore.ieee.org/abstract/document/10445937)*,

Int. Conf. on Acoustics, Speech and Signal Proc. (ICASSP), 2024.

### Particle Detection and Tracking

- Drazen, D., Lichtsteiner, P., Haefliger, P., Delbruck, T., Jensen, A.,

*[Toward real-time particle tracking using an event-based dynamic vision sensor](https://doi.org/10.1007/s00348-011-1207-y)*,

Experiments in Fluids (2011), 51(1):1465-1469. [PDF](http://www.zora.uzh.ch/60624/1/Drazen_EIF_2011.pdf)

- Ni, Z., Pacoret, C., Benosman, R., Ieng, S., Regnier, S.,

*[Asynchronous event-based high speed vision for microparticle tracking](http://doi.org/10.1111/j.1365-2818.2011.03565.x)*,

J. Microscopy (2011), 245(3):236-244.

- Borer, D., Roesgen, T.,

*[Large-scale Particle Tracking with Dynamic Vision Sensors](https://www.research-collection.ethz.ch/handle/20.500.11850/86729)*,

ISFV16 - 16th Int. Symp. Flow Visualization, Okinawa 2014. [Project page](http://www.ifd.mavt.ethz.ch/research/group-roesgen/dynamic-vision-sensors.html), [Poster](http://www.ifd.mavt.ethz.ch/content/dam/ethz/special-interest/mavt/fluid-dynamics/ifd-dam/research/documents/posters/experimental-methods/daniel_borer_dynamic_vision_sensor.pdf)

- Wang, Y., Idoughi, R., Heidrich, W.,

*[Stereo Event-based Particle Tracking Velocimetry for 3D Fluid Flow Reconstruction](https://www.ecva.net/papers/eccv_2020/papers_ECCV/papers/123740035.pdf)*,

European Conf. Computer Vision (ECCV), 2020. [Suppl. Mat.](https://www.ecva.net/papers/eccv_2020/papers_ECCV/papers/123740035-supp.zip)

### Eye Tracking

- Ryan, C., Sullivan, B. O., Elrasad, A., Lemley, J., Kielty, P., Posch, C., Perot, E.,

*[Real-Time Face & Eye Tracking and Blink Detection using Event Cameras](https://arxiv.org/pdf/2010.08278)*,

arXiv, 2020.

- Angelopoulos, A.N., Martel, J.N.P., Kohli, A.P.S., Conradt, J., Wetzstein, G.,

*[Event Based, Near-Eye Gaze Tracking Beyond 10,000Hz](https://arxiv.org/pdf/2004.03577)*,

IEEE Trans. Vis. Comput. Graphics (Proc. VR), 2021. [YouTube](https://youtu.be/-7EneYIfinM), [Dataset](https://github.com/aangelopoulos/event_based_gaze_tracking), [Project page](http://www.computationalimaging.org/publications/event-based-eye-tracking/)

- Chen, Q., Wang, Z., Liu, S.-C., Gao, C.,

*[3ET: Efficient Event-based Eye Tracking using a Change-Based ConvLSTM Network](https://arxiv.org/abs/2308.11771)*,

IEEE BioCAS Conf., 2023. [YouTube](https://www.youtube.com/watch?v=aRB5mDNfrHM), [Code](https://github.com/qinche106/cb-convlstm-eyetracking)

- Wang, Z., Gao, C., Wu, Z., Conde, M., Timofte, R., Liu, S.-C., Chen, Q., et al.

*[Event-based Eye Tracking. AIS 2024 Challenge Survey](https://arxiv.org/abs/2404.11770)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2024. [Challenge page](https://eetchallenge.github.io/EET.github.io/), [Code](https://github.com/EETChallenge/challenge_demo_code), [Kaggle page](https://www.kaggle.com/competitions/event-based-eye-tracking-ais2024)

- Bonazzi, P., Bian, S., Lippolis, G., Sheik, S., Magno, M.

*[Retina: Low-Power Eye Tracking with Event Camera and Spiking Hardware](https://arxiv.org/pdf/2312.00425)*,

IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), 2024. [Dataset](https://pietrobonazzi.com/projects/retina), [Code](https://github.com/pbonazzi/retina), [PDF](https://arxiv.org/pdf/2312.00425).

- Vullers, Y., Gava, L., Glover, A., Bartolozzi, C.,

*[Towards Low-power, High-frequency Gaze Direction Tracking with an Event-camera](https://github.com/event-driven-robotics/workbook_yvonne-vullers)*,

European Conf. Comp. Vision (ECCV) Workshop on Eyes of the Future: Integrating Computer Vision in Smart Eyewear (ICVSE), 2024. [Code](https://github.com/event-driven-robotics/workbook_yvonne-vullers)

- Sen, A., Bandara, N.S., Gokarn, I., Kandappu, T., Misra, A.

*[EyeTrAES: Fine-grained, Low-Latency Eye Tracking via Adaptive Event Slicing](https://dl.acm.org/doi/abs/10.1145/3699745)*,

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 2024. [Code](https://github.com/arghasen10/EyeTrAES), [PDF](https://dl.acm.org/doi/pdf/10.1145/3699745).

- Bandara, N., Kandappu, T., Sen, A., Gokarn, I., Misra, A.

*[EyeGraph: Modularity-aware Spatio Temporal Graph Clustering for Continuous Event-based Eye Tracking](https://openreview.net/forum?id=YxuuzyplFZ)*,

Advances in Neural Information Processing Systems (NeurIPS) Datasets and Benchmarks Track, 2024. [Project Page](https://eye-tracking-for-physiological-sensing.github.io/eyegraph/), [PDF](https://openreview.net/pdf?id=YxuuzyplFZ), [Supplementary Materials](https://openreview.net/attachment?id=YxuuzyplFZ&name=supplementary_material).

- Iddrisu, K., Shariff, W., O’Connor, N. E., Lemley, J., Little, S.,

*[Evaluating Image-Based Face and Eye Tracking with Event Cameras](https://doi.org/10.1007/978-3-031-92460-6_14)*,

European Conference on Computer Vision (ECCV), 2024, pp. 224–240. [PDF](https://arxiv.org/pdf/2408.10395)

- Iddrisu, K., Shariff, W., Little, S.,

*[A Framework for Pupil Tracking with Event Cameras](https://doi.org/10.1049/icp.2024.3288)*,

IET Conference Proceedings CP887, vol. 2024, no. 10, pp. 87–94.

## Optical Flow Estimation

- Delbruck, T.,

*[Frame-free dynamic digital vision](https://www.research-collection.ethz.ch/handle/20.500.11850/81769)*,

Int. Symp. on Secure-Life Electronics, Advanced Electronics for Quality Life and Society, pp. 21-26, 2008. [PDF](http://www.ini.uzh.ch/admin/extras/doc_get.php?id=42508)

- [Cook et al., IJCNN 2011](#Cook11ijcnn),

*Interacting maps for fast visual interpretation*. (Joint estimation of optical flow, image intensity and angular velocity with a rotating event camera).

- Benosman, R., Ieng, S.-H., Clercq, C., Bartolozzi, C., Srinivasan, M.,

*[Asynchronous Frameless Event-Based Optical Flow](https://doi.org/10.1016/j.neunet.2011.11.001)*,

Neural Networks (2012), 27:32-37.

- Orchard, G., Benosman, R., Etienne-Cummings, R., Thakor, N.,

*[A Spiking Neural Network Architecture for Visual Motion Estimation](https://doi.org/10.1109/BioCAS.2013.6679698)*,

IEEE Biomedical Circuits and Systems Conf. (BioCAS), 2013. [PDF](https://www.researchgate.net/publication/261075772_A_spiking_neural_network_architecture_for_visual_motion_estimation), [Code](https://github.com/gorchard/Spiking_Motion)

- Benosman, R., Clercq, C., Lagorce, X., Ieng, S.-H., Bartolozzi, C.,

*[Event-Based Visual Flow](https://doi.org/10.1109/TNNLS.2013.2273537)*,

IEEE Trans. Neural Netw. Learn. Syst. (TNNLS), 25(2):407-417, 2014. [Code (jAER): LocalPlanesFlow](https://github.com/SensorsINI/jaer/blob/master/src/ch/unizh/ini/jaer/projects/rbodo/opticalflow/LocalPlanesFlow.java)

- [Clady et al., Front. Neurosci. 2014](#Clady14fnins),

*Asynchronous visual event-based time-to-contact*.

- Mueggler, E., Forster, C., Baumli, N., Gallego, G., Scaramuzza, D.,

*[Lifetime Estimation of Events from Dynamic Vision Sensors](http://dx.doi.org/10.1109/ICRA.2015.7139876)*,

IEEE Int. Conf. Robotics and Automation (ICRA), 2015, pp. 4874-4881. [PDF](http://rpg.ifi.uzh.ch/docs/ICRA15_Mueggler.pdf), [PPT](http://rpg.ifi.uzh.ch/docs/ICRA15_Mueggler.pptm), [Code](https://www.github.com/uzh-rpg/rpg_event_lifetime)

- Lee, A. J., Kim, A.,

*[Event-based Real-time Optical Flow Estimation](https://doi.org/10.23919/ICCAS.2017.8204333)*,

IEEE Int. Conf. on Control, Automation and Systems (ICCAS), 2017.

- Aung, M.T., Teo, R., Orchard, G.,

*[Event-based Plane-fitting Optical Flow for Dynamic Vision Sensors in FPGA](https://doi.org/10.1109/ISCAS.2018.8351588)*,

IEEE Int. Symp. Circuits and Systems (ISCAS), 2018. [Code](https://github.com/gorchard/FPGA_event_based_optical_flow)

- Barranco, F., Fermüller, C., Aloimonos, Y.,

*[Contour motion estimation for asynchronous event-driven cameras](https://doi.org/10.1109/JPROC.2014.2347207)*,

Proc. IEEE (2014), 102(10):1537-1556. [PDF](http://www.cfar.umd.edu/~fer/postscript/contourmotion-dvs-final.pdf)

- Lee, J.H., Lee, K., Ryu, H., Park, P.K.J., Shin, C.W., Woo, J., Kim, J.-S.,

*[Real-time motion estimation based on event-based vision sensor](https://doi.org/10.1109/ICIP.2014.7025040)*,

IEEE Int. Conf. Image Processing (ICIP), 2014.

- Richter, C., Röhrbein, F., Conradt, J.,

*[Bio inspired optic flow detection using neuromorphic hardware](https://doi.org/10.12751/NNCN.BC2014.0032)*,

Bernstein Conf. 2014. [PDF](https://mediatum.ub.tum.de/doc/1281617/789727.pdf)

- Barranco, F., Fermüller, C., Aloimonos, Y.,

*[Bio-inspired Motion Estimation with Event-Driven Sensors](https://doi.org/10.1007/978-3-319-19258-1_27)*,

Int. Work-Conf. Artificial Neural Networks (IWANN) 2015, Advances in Computational Intell., pp. 309-321. [PDF](https://www.ugr.es/~fbarranco/docs/IWANN_Barranco_et_al_2015.pdf)

- Conradt, J.,

*[On-Board Real-Time Optic-Flow for Miniature Event-Based Vision Sensors](https://doi.org/10.1109/ROBIO.2015.7419043)*,

IEEE Int. Conf. Robotics and Biomimetics (ROBIO), 2015.

- Brosch, T., Tschechne, S., Neumann, H.,

*[On event-based optical flow detection](https://doi.org/10.3389/fnins.2015.00137)*,

Front. Neurosci. (2015), 9:137.

- Tschechne, S., Brosch, T., Sailer, R., von Egloffstein, N., Abdul-Kreem, L.I., Neumann, H.,

*[On event-based motion detection and integration](http://dx.doi.org/10.4108/icst.bict.2014.257904)*,

Int. Conf. Bio-inspired Information and Comm. Technol. (BICT), 2014. [PDF](https://dl.acm.org/citation.cfm?id=2744588)

- Tschechne, S., Sailer, R., Neumann, H.,

*[Bio-Inspired Optic Flow from Event-Based Neuromorphic Sensor Input](https://doi.org/10.1007/978-3-319-11656-3_16)*,

IAPR Workshop on Artificial Neural Networks in Pattern Recognition (ANNPR) 2014, pp. 171-182.

- Brosch, T., Neumann, H.,

*[Event-based optical flow on neuromorphic hardware](https://doi.org/10.4108/eai.3-12-2015.2262447)*,

Int. Conf. Bio-inspired Information and Comm. Technol. (BICT), 2015. [PDF](https://dl.acm.org/citation.cfm?id=2954727)

- Brosch, T., Tschechne, S., Neumann, H.,

*[Visual Processing in Cortical Architecture from Neuroscience to Neuromorphic Computing](https://doi.org/10.1007/978-3-319-50862-7_7)*,

Int. Workshop on Brain-Inspired Computing (BrainComp), 2015. LNCS, vol 10087.

- Kosiorek, A., Adrian, D., Rausch, J., Conradt, J.,

*[An Efficient Event-Based Optical Flow Implementation in C/C++ and CUDA](http://tum.neurocomputing.systems/fileadmin/w00bqs/www/publications/pp/2015SS-PP-RealTimeDVSOpticFlow.pdf)*,

Tech. Rep. TU Munich, 2015.

- [Milde et al., EBCCSP 2015](#Milde15ebccsp),

*Bioinspired event-driven collision avoidance algorithm based on optic flow*.

- Giulioni, M., Lagorce, X., Galluppi, F., Benosman, R.,

*[Event-Based Computation of Motion Flow on a Neuromorphic Analog Neural Platform](https://doi.org/10.3389/fnins.2016.00035)*,

Front. Neurosci. (2016), 10:35.

- Haessig, G., Galluppi, F., Lagorce, X., Benosman, R.,

*[Neuromorphic networks on the SpiNNaker platform](https://doi.org/10.1109/AICAS.2019.8771512)*,

IEEE Int. Conf. Artificial Intelligence Circuits and Systems (AICAS), 2019.

- Rueckauer, B., Delbruck, T.,

*[Evaluation of Event-Based Algorithms for Optical Flow with Ground-Truth from Inertial Measurement Sensor](https://doi.org/10.3389/fnins.2016.00176)*,

Front. Neurosci. (2016), 10:176. [YouTube](https://youtu.be/Ji1MzE4QbM4), [Code (jAER)](https://github.com/SensorsINI/jaer/tree/master/src/ch/unizh/ini/jaer/projects/rbodo/opticalflow)

- Bardow, P. A., Davison, A. J., Leutenegger, S.,

*[Simultaneous Optical Flow and Intensity Estimation from an Event Camera](http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Bardow_Simultaneous_Optical_Flow_CVPR_2016_paper.pdf)*,

IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2016. [YouTube](https://youtu.be/1zqJpiheaaI), [YouTube 2](https://youtu.be/CASsIFuPxmc), [Dataset: 4 sequences](http://wp.doc.ic.ac.uk/pb2114/datasets/)

- Stoffregen, T., Kleeman, L.,