{

"cells": [

{

"cell_type": "markdown",

"metadata": {

"id": "lKcz5E_HTbX4"

},

"source": [

"# DINOv3 visual search\n",

"## 1. Install Required Libraries\n",

"We start by installing [FiftyOne](https://docs.voxel51.com/) and the Hugging Face `transformers` library.\n",

"This will allow us to load the [DINOv3 model from Hugging Face](https://huggingface.co/facebook/dinov3-vits16-pretrain-lvd1689m) and use FiftyOne's dataset visualization and analysis features.\n",

"\n",

"**Note:** We install `transformers` directly from the development branch to ensure compatibility with the latest DINOv3 features."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "BMWUs1Z1gwmy"

},

"source": [

"Since the DINOv3 functionality is **not yet available** in the stable `transformers` release, we install it from the development branch.\n",

"See the [FiftyOne + Hugging Face integration guide](https://docs.voxel51.com/integrations/huggingface.html) for more details on using experimental model versions."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "QbLfOkFDNRuQ",

"outputId": "2ff67376-0acf-44e7-841a-8705e24e5c88"

},

"outputs": [],

"source": [

"!pip install --upgrade pip\n",

"!pip install git+https://github.com/huggingface/transformers\n",

"!pip install -q huggingface_hub\n",

"!pip install fiftyone"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "1g1TbwEKQLPA"

},

"source": [

"## 2. Log in to Hugging Face\n",

"We authenticate with Hugging Face to retrieve the latest model weights.\n",

"You must have access to the model you want to load. See [Hugging Face authentication docs](https://huggingface.co/docs/huggingface_hub/quick-start#login) for details."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 17,

"referenced_widgets": [

"aa928e3e578948d28334d531f5d61107",

"7b9a5cc495404135af541cce7f9aaaa5",

"92abcfa7a87b40a88d8ea5b4f2b2ecfa",

"ab5a41b642ba49bda5a49de17b16e6d7",

"486b63af02774eebb0ea21b02d2ad18f",

"63baf6fc8ca84698a550486b259cc1dc",

"a945462f01bc4c8d8ad8f274c1693157",

"a820b326a14a4daa928b04a5844845a8",

"7080e31f4b2b434ea8df79ab69023747",

"84ba947505324c73bed4c4081f78a367",

"478933d0bf4041d9961a0095f190f2ba",

"7e5d18e991bd4a229c8d787ce5043d58",

"5cc5cce4402a4e15b39e7c70d9b42553",

"05e98bb91a594f95bc80b2d1bfdc564e",

"2fd06a427bf842de81aa54013687c504",

"74d13be66ffc467e87109ff33571bb12",

"11f2e03a656e4e79893896de8592f5a2",

"b6873b5e558f4e20a152ba29c5b66aa8",

"9a1c06a99eb74a8f8161d0892c2814aa",

"f6cc4edcc36648c99b1870562bfb5c5c"

]

},

"id": "7Ny0rXQbPXvc",

"outputId": "7985ba54-23f0-40b3-e6f5-4540897e9850"

},

"outputs": [],

"source": [

"from huggingface_hub import notebook_login\n",

"notebook_login()"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "XO2HQWkhPtv0"

},

"source": [

"### Load a quick start dataset\n",

"To explore more dataset option visit the docs [Dataset Zoo](https://docs.voxel51.com/dataset_zoo/index.html) or load your [own dataset](https://docs.voxel51.com/api/fiftyone.core.odm.utils.html#fiftyone.core.odm.utils.load_dataset)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "KniGJG8mNQLp",

"outputId": "a4245a78-9c3a-482d-f236-80bbe7d44bb8"

},

"outputs": [],

"source": [

"import fiftyone as fo\n",

"import fiftyone.zoo as foz\n",

"\n",

"# You can load your own dataset\n",

"dataset = foz.load_zoo_dataset(\n",

" \"https://github.com/voxel51/coco-2017\",\n",

" split=\"validation\",\n",

")"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "h9VxrvyKhYji"

},

"source": [

"You can load any of the model available in Hugging Face\n",

"https://huggingface.co/collections/facebook/dinov3-68924841bd6b561778e31009"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "2mll1x98WbVp"

},

"source": [

"Thanks to the integration of Hugging Face in Fiftyone we are able to perform multiple tasks with the model, explore more here [Integration HuggingFace](https://docs.voxel51.com/integrations/huggingface.html)"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "PE_PuhvmIlUp"

},

"source": [

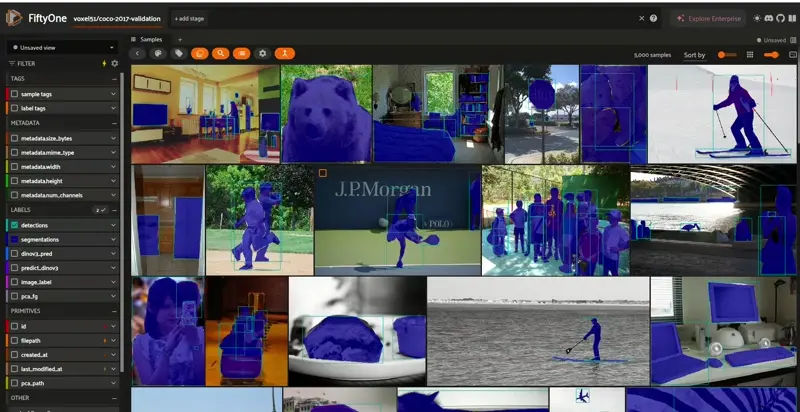

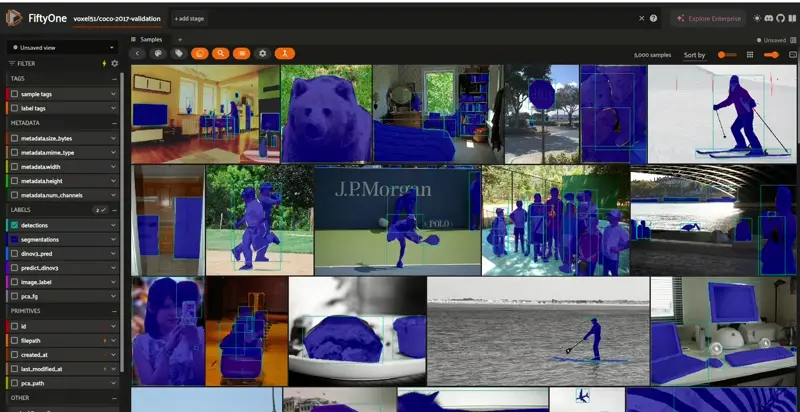

"## Working with DINOv3 Embeddings in FiftyOne\n",

"\n",

"In this example, we focus on using **DINOv3 embeddings** for visual search and similarity-based exploration in FiftyOne.\n",

"\n",

"### Workflow\n",

"1. **Compute embeddings** \n",

" We run each image through the DINOv3 model and extract either:\n",

" - The **class token embedding** (for global representation), or\n",

" - The **patch token embeddings** (for more granular, region-level similarity). \n",

" \n",

" Learn more: [Computing embeddings in FiftyOne](https://docs.voxel51.com/api/fiftyone.core.models.html#fiftyone.core.models.compute_embeddings).\n",

"\n",

"2. **Visualize embeddings** \n",

" We project the embeddings into 2D space using dimensionality reduction (e.g., t-SNE or UMAP) so we can see clusters of visually similar images. \n",

" - FiftyOne makes this interactive through its Embeddings Visualization in the App.\n",

"\n",

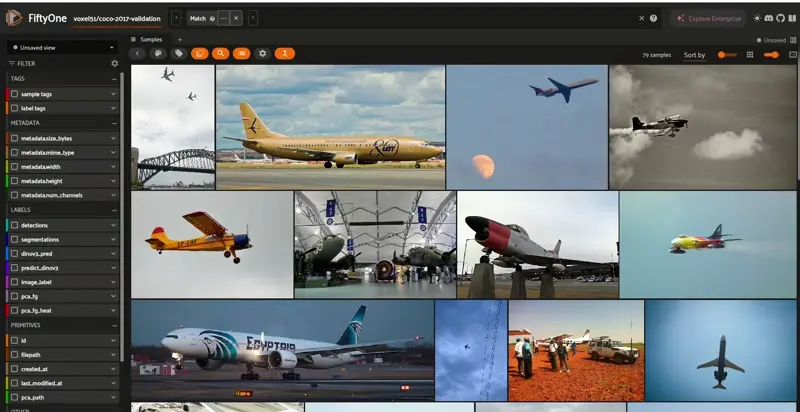

"3. **Compute similarity search** \n",

" Using FiftyOne’s similarity search tools, we select a **query image** (in this example, the first image in the dataset, but you can choose any). \n",

" - The system finds and ranks the most visually similar images based on embedding distance. \n",

" - Docs: [Similarity search in FiftyOne](https://docs.voxel51.com/api/fiftyone.brain.similarity.html).\n",

"\n",

"4. **Sort by similarity** \n",

" We display the results sorted from most to least similar, making it easy to:\n",

" - Detect near-duplicates.\n",

" - Explore visual clusters.\n",

" - Identify outliers in the dataset."

]

},

{

"cell_type": "code",

"execution_count": 51,

"metadata": {

"id": "ViEK08c5FkvS"

},

"outputs": [],

"source": [

"import transformers\n",

"import fiftyone.utils.transformers as fouhft\n",

"transformers_model = transformers.AutoModel.from_pretrained(\"facebook/dinov3-vitl16-pretrain-lvd1689m\")\n",

"model_config = fouhft.FiftyOneTransformerConfig(\n",

" {\n",

" \"model\": transformers_model,\n",

" \"name_or_path\":\"facebook/dinov3-vitl16-pretrain-lvd1689m\",\n",

" }\n",

")\n",

"model = fouhft.FiftyOneTransformer(model_config)"

]

},

{

"cell_type": "code",

"execution_count": 52,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "tLDpX5RO_dsc",

"outputId": "5f0d9818-50fb-4b35-e117-38fd3d30fc12"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

" 100% |███████████████| 5000/5000 [5.1m elapsed, 0s remaining, 16.3 samples/s] \n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:eta.core.utils: 100% |███████████████| 5000/5000 [5.1m elapsed, 0s remaining, 16.3 samples/s] \n"

]

}

],

"source": [

"dataset.compute_embeddings(model, embeddings_field=\"embeddings_dinov3\")"

]

},

{

"cell_type": "code",

"execution_count": 54,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "g_x44RcaPlPN",

"outputId": "f8da246b-4d06-4ed6-ced0-18076baaa077"

},

"outputs": [

{

"data": {

"text/plain": [

"Name: voxel51/coco-2017-validation\n",

"Media type: image\n",

"Num samples: 5000\n",

"Persistent: False\n",

"Tags: []\n",

"Sample fields:\n",

" id: fiftyone.core.fields.ObjectIdField\n",

" filepath: fiftyone.core.fields.StringField\n",

" tags: fiftyone.core.fields.ListField(fiftyone.core.fields.StringField)\n",

" metadata: fiftyone.core.fields.EmbeddedDocumentField(fiftyone.core.metadata.ImageMetadata)\n",

" created_at: fiftyone.core.fields.DateTimeField\n",

" last_modified_at: fiftyone.core.fields.DateTimeField\n",

" detections: fiftyone.core.fields.EmbeddedDocumentField(fiftyone.core.labels.Detections)\n",

" segmentations: fiftyone.core.fields.EmbeddedDocumentField(fiftyone.core.labels.Detections)\n",

" embeddings_dinov3: fiftyone.core.fields.VectorField"

]

},

"execution_count": 54,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"dataset"

]

},

{

"cell_type": "code",

"execution_count": 55,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 500,

"referenced_widgets": [

"9324815c83504b93b77ad40be930567a",

"a4ab637e90214c7a88f253e2ef0a2cd1",

"21d8159b79214826afb3b3e50cfb902b",

"7048fb69f8bd45b3b5ddd1326ac79e18",

"9453b087f3704c8b967bbe06b7e670d6",

"7cfc053aed0d48bc9508cc1c801a590c",

"03a35f36c1da47a4a6f63264d6017413",

"9767e82a2fe94cfb852e2d3e8bcedf1b",

"a5d80d3e579d46e597241e8594d2f563",

"e318a8ae7ba1477f83625e2fbd42f39f",

"95b3c7726b4f47248f2404369c794d40"

]

},

"id": "i2J1K9riH31Z",

"outputId": "89aae627-4356-4321-f5cb-7b468aa3b2c0"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Generating visualization...\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:fiftyone.brain.visualization:Generating visualization...\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"UMAP( verbose=True)\n",

"Fri Aug 15 20:04:17 2025 Construct fuzzy simplicial set\n",

"Fri Aug 15 20:04:17 2025 Finding Nearest Neighbors\n",

"Fri Aug 15 20:04:17 2025 Building RP forest with 9 trees\n",

"Fri Aug 15 20:04:23 2025 NN descent for 12 iterations\n",

"\t 1 / 12\n",

"\t 2 / 12\n",

"\t 3 / 12\n",

"\t 4 / 12\n",

"\t 5 / 12\n",

"\tStopping threshold met -- exiting after 5 iterations\n",

"Fri Aug 15 20:04:39 2025 Finished Nearest Neighbor Search\n",

"Fri Aug 15 20:04:39 2025 Construct embedding\n"

]

},

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "9324815c83504b93b77ad40be930567a",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

"Epochs completed: 0%| 0/500 [00:00]"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"\tcompleted 0 / 500 epochs\n",

"\tcompleted 50 / 500 epochs\n",

"\tcompleted 100 / 500 epochs\n",

"\tcompleted 150 / 500 epochs\n",

"\tcompleted 200 / 500 epochs\n",

"\tcompleted 250 / 500 epochs\n",

"\tcompleted 300 / 500 epochs\n",

"\tcompleted 350 / 500 epochs\n",

"\tcompleted 400 / 500 epochs\n",

"\tcompleted 450 / 500 epochs\n",

"Fri Aug 15 20:04:44 2025 Finished embedding\n"

]

}

],

"source": [

"import fiftyone.brain as fob\n",

"\n",

"viz = fob.compute_visualization(\n",

" dataset,\n",

" embeddings=\"embeddings_dinov3\",\n",

" brain_key=\"dino_dense_umap\"\n",

")"

]

},

{

"cell_type": "code",

"execution_count": 57,

"metadata": {

"id": "FLcEG2-3JCB1"

},

"outputs": [],

"source": [

"session = fo.launch_app(dataset, port=5151)"

]

},

{

"cell_type": "code",

"execution_count": 57,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/",

"height": 34

},

"id": "0xtVDq-fJCjJ",

"outputId": "6ff573b6-e4f8-4693-886c-b4e3f2e4aba2"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"https://5151-gpu-t4-s-85ayl83jjz0q-a.us-west4-1.prod.colab.dev?polling=true\n"

]

}

],

"source": [

"print(session.url)"

]

},

{

"cell_type": "code",

"execution_count": 58,

"metadata": {

"id": "ToDrO3n4IWmN"

},

"outputs": [],

"source": [

"idx = fob.compute_similarity(\n",

" dataset,\n",

" embeddings=\"embeddings_dinov3\",\n",

" metric=\"cosine\",\n",

" brain_key=\"dino_sim\",\n",

")"

]

},

{

"cell_type": "code",

"execution_count": 59,

"metadata": {

"id": "C0AYNmiRIzZX"

},

"outputs": [],

"source": [

"query_id = dataset.first().id\n",

"view = dataset.sort_by_similarity(query_id, k=20)"

]

},

{

"cell_type": "code",

"execution_count": 115,

"metadata": {

"id": "jryaSDOAI_bh"

},

"outputs": [],

"source": [

"session.view = view"

]

},


{

"cell_type": "markdown",

"metadata": {

"id": "IddqyN6jiHYg"

},

"source": [

"## Classification Tasks with DINOv3\n",

"\n",

"In this example, we use the **DINOv3** model to perform an image classification task by integrating its embeddings with a **Logistic Regression (linear)** classifier.\n",

"\n",

"### Workflow\n",

"1. **Extract embeddings** \n",

" We feed each image through the DINOv3 model and extract the **class token embedding**. \n",

" - The class token acts as a compact representation of the entire image. \n",

" - More on embeddings: [FiftyOne embeddings guide](https://docs.voxel51.com/tutorials/image_embeddings.html).\n",

"\n",

"2. **Train a linear classifier** \n",

" Using the extracted embeddings as input features and the ground truth labels from our dataset, we train a **Logistic Regression (linear)** classifier to predict image classes. \n",

" - Linear classifiers are effective for high-dimensional feature spaces like DINOv3 embeddings.\n",

"\n",

"3. **Run inference** \n",

" We pass unseen images through the same pipeline to generate embeddings and predict their class labels using the trained SVM.\n",

"\n",

"4. **Evaluate results in FiftyOne** \n",

" We visualize and analyze the model predictions in FiftyOne, using:\n",

" - [Classification evaluation](https://docs.voxel51.com/user_guide/evaluation.html#classification-evaluation) to compute metrics like accuracy, precision, and recall.\n",

" - [Confusion matrix](https://docs.voxel51.com/user_guide/plots.html#confusion-matrices) to see where the model is making mistakes.\n",

"\n",

"\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "lZ-CADexYhg3"

},

"source": [

"Get id, path, embeddinds and classes to create a classifier"

]

},

{

"cell_type": "code",

"execution_count": 95,

"metadata": {

"id": "g_KXbgamT1lP"

},

"outputs": [],

"source": [

"from collections import Counter\n",

"from sklearn.preprocessing import normalize\n",

"from sklearn.linear_model import LogisticRegression\n",

"import numpy as np\n",

"\n",

"ids = dataset.values(\"id\")\n",

"paths = dataset.values(\"filepath\")\n",

"embs = dataset.values(\"embeddings_dinov3\")\n",

"det_lists = dataset.values(\"detections.detections.label\")\n",

"img_labels = [Counter(L).most_common(1)[0][0] if L else None for L in det_lists]\n",

"\n",

"dataset.set_values(\n",

" \"image_label\",\n",

" [fo.Classification(label=l) if l is not None else None for l in img_labels],\n",

")"

]

},

{

"cell_type": "code",

"execution_count": 96,

"metadata": {

"id": "JzG_tkVLZ3oo"

},

"outputs": [],

"source": [

"mask = [(x is not None) and (y is not None) for x, y in zip(embs, img_labels)]\n",

"X = normalize(np.stack([x for x,m in zip(embs,mask) if m], axis=0))\n",

"y = [lab for lab,m in zip(img_labels,mask) if m]"

]

},

{

"cell_type": "code",

"execution_count": 97,

"metadata": {

"id": "cmrV4uYYZ82G"

},

"outputs": [],

"source": [

"# 3) Train a tiny linear head\n",

"clf = LogisticRegression(max_iter=2000, class_weight=\"balanced\", n_jobs=-1).fit(X, y)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "wXktysDWakfF",

"outputId": "2a5424a3-c741-4c6c-8e80-f538d2ab04b3"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

" 100% |███████████████| 5000/5000 [1.2m elapsed, 0s remaining, 93.4 samples/s] \n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:eta.core.utils: 100% |███████████████| 5000/5000 [1.2m elapsed, 0s remaining, 93.4 samples/s] \n"

]

}

],

"source": [

"# --- inference on ALL samples using embeddings only ---\n",

"for sample in dataset.iter_samples(autosave=True, progress=True):\n",

" v = sample[\"embeddings_dinov3\"]\n",

" if v is None:\n",

" continue\n",

"\n",

" X = normalize(np.asarray(v, dtype=np.float32).reshape(1, -1))\n",

" p = clf.predict_proba(X)[0]\n",

" k = int(np.argmax(p))\n",

"\n",

" sample[\"predict_dinov3\"] = fo.Classification(\n",

" label=str(clf.classes_[k]),\n",

" confidence=float(p[k]),\n",

" )"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "vJh-sjUCeLYQ"

},

"source": [

"Evaluate the results of the classification"

]

},

{

"cell_type": "code",

"execution_count": 99,

"metadata": {

"id": "MrA0MFYLbNH6"

},

"outputs": [],

"source": [

"results = dataset.evaluate_classifications(\n",

" \"predict_dinov3\", gt_field=\"image_label\", method=\"simple\", eval_key=\"dino_simple\"\n",

")"

]

},


{

"cell_type": "markdown",

"metadata": {

"id": "YqsALSuvffy_"

},

"source": [

"## PCA/CLS Foreground Segmentation with DINOv3\n",

"\n",

"This step builds a **foreground mask** per image straight from DINOv3’s internal features, no training required. It’s useful to:\n",

"- Quickly **highlight the main subject** (saliency-ish) to reduce background bias\n",

"- Improve **visual search** by focusing on foreground regions\n",

"- Speed up **data curation** (find images with weak/strong foreground, spot occlusions)\n",

"- Generate **lightweight pseudo-labels** that you can review in the FiftyOne App\n",

"\n",

"### What we compute (high level)\n",

"- **ViT models (DINOv3 ViT)**: for each image patch, compute the **cosine similarity to the CLS token**. Patches more aligned with CLS are treated as foreground.\n",

"- **ConvNeXt-style models**: compute the cosine similarity of each spatial feature to the **global average feature vector**.\n",

"\n",

"We then **normalize → optionally smooth → threshold** the similarity map:\n",

"1. Min–max scale to `[0, 1]` \n",

"2. Optional average pooling in patch space to denoise \n",

"3. Threshold to get a **binary mask** (foreground/background)\n",

"\n",

"Finally, we upsample to the original image size and write the results into the dataset:\n",

"- A **binary segmentation** field (`fo.Segmentation`)\n",

"- Optionally, a **soft heatmap** field (`fo.Heatmap`) with values in `[0, 1]`\n",

"\n",

"These overlays render natively in the **FiftyOne App**."

]

},

{

"cell_type": "code",

"execution_count": 117,

"metadata": {

"id": "JF1e5-f4ulfU"

},

"outputs": [],

"source": [

"import numpy as np\n",

"from PIL import Image, ImageOps\n",

"import torch\n",

"import torch.nn.functional as F\n",

"import fiftyone as fo\n",

"from fiftyone import Segmentation, Heatmap\n",

"from transformers import AutoImageProcessor, AutoModel\n",

"\n",

"def build_pca_fg_masks(\n",

" dataset: fo.Dataset,\n",

" model_id: str = \"facebook/dinov3-vits16-pretrain-lvd1689m\",\n",

" field: str = \"pca_fg\", # Segmentation field (binary 0/1)\n",

" heatmap_field: str | None = None, # optional: store soft map (0..1) as fo.Heatmap\n",

" thresh: float = 0.5, # FG threshold after smoothing\n",

" smooth_k: int = 3, # avg-pool kernel in patch space (0/1 to disable)\n",

" device: str | None = None,\n",

"):\n",

" \"\"\"\n",

" Compute a DINOv3 PCA/CLS-style foreground mask for every sample and write to dataset.\n",

"\n",

" - ViT: cosine(sim) to CLS over patch tokens\n",

" - ConvNeXt: cosine(sim) to global-avg feature over feature map\n",

" - Masks are overlaid natively in the FiftyOne App.\n",

" \"\"\"\n",

" device = device or (\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

" processor = AutoImageProcessor.from_pretrained(model_id)\n",

" model = AutoModel.from_pretrained(model_id).to(device).eval()\n",

"\n",

" # --- schema (once) ---\n",

" if not dataset.has_field(field):\n",

" dataset.add_sample_field(field, fo.EmbeddedDocumentField, embedded_doc_type=fo.Segmentation)\n",

"\n",

" if heatmap_field and not dataset.has_field(heatmap_field):\n",

" dataset.add_sample_field(heatmap_field, fo.EmbeddedDocumentField, embedded_doc_type=fo.Heatmap)\n",

"\n",

" # mask targets (older APIs use property, not a setter)\n",

" mt = dict(dataset.mask_targets or {})\n",

" mt[field] = {0: \"background\", 1: \"foreground\"}\n",

" dataset.mask_targets = mt\n",

" dataset.save()\n",

"\n",

" @torch.inference_mode()\n",

" def _fg_mask(path: str) -> tuple[np.ndarray, np.ndarray]:\n",

" \"\"\"Returns (mask_uint8_HxW, soft_fg01_HxW_float32).\"\"\"\n",

" img = ImageOps.exif_transpose(Image.open(path).convert(\"RGB\"))\n",

" W0, H0 = img.size\n",

"\n",

" bf = processor(images=img, return_tensors=\"pt\").to(device)\n",

" last = model(**bf).last_hidden_state # ViT: [B,1+R+P,D] | ConvNeXt: [B,C,H,W]\n",

"\n",

" # ---- ViT path ----\n",

" if last.ndim == 3:\n",

" hs = last[0].float() # [1+R+P,D]\n",

" num_reg = getattr(model.config, \"num_register_tokens\", 0)\n",

" patch = getattr(model.config, \"patch_size\", 16)\n",

" patches = hs[1 + num_reg :, :] # [P,D]\n",

" _, _, Hc, Wc = bf[\"pixel_values\"].shape\n",

" gh, gw = Hc // patch, Wc // patch\n",

"\n",

" cls = hs[0:1, :]\n",

" sims = (F.normalize(patches, dim=1) @ F.normalize(cls, dim=1).T).squeeze(1) # [P]\n",

" fg = sims.detach().cpu().view(gh, gw) # CPU [gh,gw]\n",

"\n",

" # ---- ConvNeXt path ----\n",

" else:\n",

" fm = last[0].float() # [C,H,W]\n",

" C, gh, gw = fm.shape\n",

" grid = F.normalize(fm.permute(1, 2, 0).reshape(-1, C), dim=1) # [H*W,C]\n",

" gvec = F.normalize(fm.mean(dim=(1, 2), keepdim=True).squeeze().unsqueeze(0), dim=1) # [1,C]\n",

" fg = (grid @ gvec.T).detach().cpu().reshape(gh, gw) # CPU [gh,gw]\n",

"\n",

" # min-max → [0,1]\n",

" fg01 = (fg - fg.min()) / (fg.max() - fg.min() + 1e-8)\n",

"\n",

" # optional smoothing in patch space\n",

" if smooth_k and smooth_k > 1:\n",

" fg01 = F.avg_pool2d(fg01.unsqueeze(0).unsqueeze(0), smooth_k, 1, smooth_k // 2).squeeze()\n",

"\n",

" # threshold → binary mask on patch grid\n",

" mask_small = (fg01 > thresh).to(torch.uint8).numpy() # [gh,gw] {0,1}\n",

"\n",

" # upsample both to original size\n",

" mask_full = Image.fromarray(mask_small * 255).resize((W0, H0), Image.NEAREST)\n",

" soft_full = Image.fromarray((fg01.numpy() * 255).astype(np.uint8)).resize((W0, H0), Image.BILINEAR)\n",

"\n",

" mask = (np.array(mask_full) > 127).astype(np.uint8) # HxW {0,1}\n",

" soft = np.array(soft_full).astype(np.float32) / 255.0 # HxW [0,1]\n",

" return mask, soft\n",

"\n",

" # --- process all samples ---\n",

" skipped = 0\n",

" for s in dataset.iter_samples(autosave=True, progress=True):\n",

" try:\n",

" m, soft = _fg_mask(s.filepath)\n",

" s[field] = Segmentation(mask=m)\n",

" if heatmap_field:\n",

" # Heatmap expects a 2D float array in [0,1]; the App colors it\n",

" s[heatmap_field] = Heatmap(map=soft)\n",

" except Exception:\n",

" s[field] = None\n",

" if heatmap_field:\n",

" s[heatmap_field] = None\n",

" skipped += 1\n",

"\n",

" print(f\"✓ wrote masks to '{field}'\" + (f\" and heatmaps to '{heatmap_field}'\" if heatmap_field else \"\") + f\". skipped: {skipped}\")"

]

},

{

"cell_type": "code",

"execution_count": 118,

"metadata": {

"colab": {

"base_uri": "https://localhost:8080/"

},

"id": "Lfsrqae5upbv",

"outputId": "65857488-24b0-4b24-f5af-6ea1b7d04bf5"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

" 100% |███████████████| 5000/5000 [6.1m elapsed, 0s remaining, 18.1 samples/s] \n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"INFO:eta.core.utils: 100% |███████████████| 5000/5000 [6.1m elapsed, 0s remaining, 18.1 samples/s] \n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"✓ wrote masks to 'pca_fg' and heatmaps to 'pca_fg_heat'. skipped: 0\n"

]

}

],

"source": [

"build_pca_fg_masks(dataset, field=\"pca_fg\", heatmap_field=\"pca_fg_heat\", thresh=0.5, smooth_k=3)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

""

]

}

],

"metadata": {

"accelerator": "GPU",

"colab": {

"gpuType": "T4",

"provenance": []

},

"kernelspec": {

"display_name": "env",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.11.13"

},

"widgets": {

"application/vnd.jupyter.widget-state+json": {

"03a35f36c1da47a4a6f63264d6017413": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"05e98bb91a594f95bc80b2d1bfdc564e": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"11f2e03a656e4e79893896de8592f5a2": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"21d8159b79214826afb3b3e50cfb902b": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "FloatProgressModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "FloatProgressModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "ProgressView",

"bar_style": "success",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_9767e82a2fe94cfb852e2d3e8bcedf1b",

"max": 500,

"min": 0,

"orientation": "horizontal",

"style": "IPY_MODEL_a5d80d3e579d46e597241e8594d2f563",

"value": 500

}

},

"2fd06a427bf842de81aa54013687c504": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "ButtonStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "ButtonStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"button_color": null,

"font_weight": ""

}

},

"478933d0bf4041d9961a0095f190f2ba": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"486b63af02774eebb0ea21b02d2ad18f": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "ButtonModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "ButtonModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "ButtonView",

"button_style": "",

"description": "Login",

"disabled": false,

"icon": "",

"layout": "IPY_MODEL_05e98bb91a594f95bc80b2d1bfdc564e",

"style": "IPY_MODEL_2fd06a427bf842de81aa54013687c504",

"tooltip": ""

}

},

"5cc5cce4402a4e15b39e7c70d9b42553": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"63baf6fc8ca84698a550486b259cc1dc": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "HTMLModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "HTMLModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "HTMLView",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_74d13be66ffc467e87109ff33571bb12",

"placeholder": "",

"style": "IPY_MODEL_11f2e03a656e4e79893896de8592f5a2",

"value": "\nPro Tip: If you don't already have one, you can create a dedicated\n'notebooks' token with 'write' access, that you can then easily reuse for all\nnotebooks. "

}

},

"7048fb69f8bd45b3b5ddd1326ac79e18": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "HTMLModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "HTMLModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "HTMLView",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_e318a8ae7ba1477f83625e2fbd42f39f",

"placeholder": "",

"style": "IPY_MODEL_95b3c7726b4f47248f2404369c794d40",

"value": " 500/500 [00:05]"

}

},

"7080e31f4b2b434ea8df79ab69023747": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"74d13be66ffc467e87109ff33571bb12": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"7b9a5cc495404135af541cce7f9aaaa5": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "HTMLModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "HTMLModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "HTMLView",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_a820b326a14a4daa928b04a5844845a8",

"placeholder": "",

"style": "IPY_MODEL_7080e31f4b2b434ea8df79ab69023747",

"value": "Copy a token from your Hugging Face\ntokens page and paste it below. Immediately click login after copying\nyour token or it might be stored in plain text in this notebook file."

}

},

"7cfc053aed0d48bc9508cc1c801a590c": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"7e5d18e991bd4a229c8d787ce5043d58": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"84ba947505324c73bed4c4081f78a367": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"92abcfa7a87b40a88d8ea5b4f2b2ecfa": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "PasswordModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "PasswordModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "PasswordView",

"continuous_update": true,

"description": "Token:",

"description_tooltip": null,

"disabled": false,

"layout": "IPY_MODEL_84ba947505324c73bed4c4081f78a367",

"placeholder": "",

"style": "IPY_MODEL_478933d0bf4041d9961a0095f190f2ba",

"value": ""

}

},

"9324815c83504b93b77ad40be930567a": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "HBoxModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "HBoxModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "HBoxView",

"box_style": "",

"children": [

"IPY_MODEL_a4ab637e90214c7a88f253e2ef0a2cd1",

"IPY_MODEL_21d8159b79214826afb3b3e50cfb902b",

"IPY_MODEL_7048fb69f8bd45b3b5ddd1326ac79e18"

],

"layout": "IPY_MODEL_9453b087f3704c8b967bbe06b7e670d6"

}

},

"9453b087f3704c8b967bbe06b7e670d6": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"95b3c7726b4f47248f2404369c794d40": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

},

"9767e82a2fe94cfb852e2d3e8bcedf1b": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"9a1c06a99eb74a8f8161d0892c2814aa": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"a4ab637e90214c7a88f253e2ef0a2cd1": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "HTMLModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "HTMLModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "HTMLView",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_7cfc053aed0d48bc9508cc1c801a590c",

"placeholder": "",

"style": "IPY_MODEL_03a35f36c1da47a4a6f63264d6017413",

"value": "Epochs completed: 100%| "

}

},

"a5d80d3e579d46e597241e8594d2f563": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "ProgressStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "ProgressStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"bar_color": null,

"description_width": ""

}

},

"a820b326a14a4daa928b04a5844845a8": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"a945462f01bc4c8d8ad8f274c1693157": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": "center",

"align_self": null,

"border": null,

"bottom": null,

"display": "flex",

"flex": null,

"flex_flow": "column",

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": "50%"

}

},

"aa928e3e578948d28334d531f5d61107": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "VBoxModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "VBoxModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "VBoxView",

"box_style": "",

"children": [],

"layout": "IPY_MODEL_a945462f01bc4c8d8ad8f274c1693157"

}

},

"ab5a41b642ba49bda5a49de17b16e6d7": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "CheckboxModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "CheckboxModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "CheckboxView",

"description": "Add token as git credential?",

"description_tooltip": null,

"disabled": false,

"indent": true,

"layout": "IPY_MODEL_7e5d18e991bd4a229c8d787ce5043d58",

"style": "IPY_MODEL_5cc5cce4402a4e15b39e7c70d9b42553",

"value": true

}

},

"b6873b5e558f4e20a152ba29c5b66aa8": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "LabelModel",

"state": {

"_dom_classes": [],

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "LabelModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/controls",

"_view_module_version": "1.5.0",

"_view_name": "LabelView",

"description": "",

"description_tooltip": null,

"layout": "IPY_MODEL_9a1c06a99eb74a8f8161d0892c2814aa",

"placeholder": "",

"style": "IPY_MODEL_f6cc4edcc36648c99b1870562bfb5c5c",

"value": "Connecting..."

}

},

"e318a8ae7ba1477f83625e2fbd42f39f": {

"model_module": "@jupyter-widgets/base",

"model_module_version": "1.2.0",

"model_name": "LayoutModel",

"state": {

"_model_module": "@jupyter-widgets/base",

"_model_module_version": "1.2.0",

"_model_name": "LayoutModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "LayoutView",

"align_content": null,

"align_items": null,

"align_self": null,

"border": null,

"bottom": null,

"display": null,

"flex": null,

"flex_flow": null,

"grid_area": null,

"grid_auto_columns": null,

"grid_auto_flow": null,

"grid_auto_rows": null,

"grid_column": null,

"grid_gap": null,

"grid_row": null,

"grid_template_areas": null,

"grid_template_columns": null,

"grid_template_rows": null,

"height": null,

"justify_content": null,

"justify_items": null,

"left": null,

"margin": null,

"max_height": null,

"max_width": null,

"min_height": null,

"min_width": null,

"object_fit": null,

"object_position": null,

"order": null,

"overflow": null,

"overflow_x": null,

"overflow_y": null,

"padding": null,

"right": null,

"top": null,

"visibility": null,

"width": null

}

},

"f6cc4edcc36648c99b1870562bfb5c5c": {

"model_module": "@jupyter-widgets/controls",

"model_module_version": "1.5.0",

"model_name": "DescriptionStyleModel",

"state": {

"_model_module": "@jupyter-widgets/controls",

"_model_module_version": "1.5.0",

"_model_name": "DescriptionStyleModel",

"_view_count": null,

"_view_module": "@jupyter-widgets/base",

"_view_module_version": "1.2.0",

"_view_name": "StyleView",

"description_width": ""

}

}

}

}

},

"nbformat": 4,

"nbformat_minor": 0

}