---

execute:

echo: true

message: false

warning: false

fig-format: "svg"

format:

revealjs:

highlight-style: a11y-dark

reference-location: margin

theme: lecture_styles.scss

controls: true

controls-tutorial: true

slide-number: true

code-link: true

chalkboard: true

incremental: false

smaller: true

preview-links: true

code-line-numbers: true

history: false

progress: true

link-external-icon: true

code-annotations: hover

pointer:

color: "#b18eb1"

revealjs-plugins:

- pointer

---

## {#title-slide data-menu-title="Importing, Exporting, and Cleaning Data" background="#1e4655" background-image="../../images/csss-logo.png" background-position="center top 5%" background-size="50%"}

```{r}

#| echo: false

#| cache: false

require(downlit)

require(xml2)

require(tidyverse)

knitr::opts_chunk$set(comment = ">")

```

[Importing, Exporting, & Cleaning Data]{.custom-title}

[CS&SS 508 • Lecture 5]{.custom-subtitle}

[{{< var lectures.five >}}]{.custom-subtitle2}

[Victoria Sass]{.custom-subtitle3}

# Roadmap{.section-title background-color="#99a486"}

---

:::: {.columns}

::: {.column width="50%"}

### Last time, we learned:

* Types of Data

* Logical Operators

* Using `dplyr` to:

* Subset data

* Modify data

* Summarize data

* Merge data

:::

::: {.column width="50%"}

::: {.fragment}

### Today, we will cover:

* Importing and Exporting Data

* Tidying and Reshaping Data

* Types of Data

* Working with Factors

* Wrangling Date/Date-Time Data

:::

:::

::::

. . .

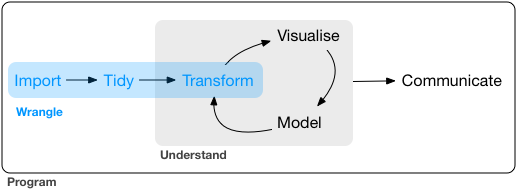

{fig-align="center" width=7in}

# Importing and Exporting Data{.section-title background-color="#99a486"}

## Data Packages

R has a *big* user base. If you are working with a popular data source, it will often have a devoted R package on *CRAN* or *Github*.

. . .

Examples:

* [`WDI`](https://vincentarelbundock.github.io/WDI/): World Development Indicators (World Bank)

* [`tidycensus`](https://walker-data.com/tidycensus/): Census and American Community Survey

* [`quantmod`](https://walker-data.com/tidycensus/): financial data from Yahoo, FRED, Google

* [`gssr`](https://kjhealy.github.io/gssr/): The General Social Survey Cumulative Data (1972-2021)

* [`psidR`](https://github.com/floswald/psidR): Panel Study of Income Dynamics (basic & public datasets)

. . .

If you have an actual data file, you'll have to import it yourself...

## Delimited Text Files

Besides a package, it's easiest when data is stored in a text file. The most commonly encountered delimited file is a **.csv**.

. . .

A comma-separated values (.csv) file looks like the following:

```

"Subject","Depression","Sex","Week","HamD","Imipramine"

101,"Non-endogenous","Second",0,26,NA

101,"Non-endogenous","Second",1,22,NA

101,"Non-endogenous","Second",2,18,4.04305

101,"Non-endogenous","Second",3,7,3.93183

101,"Non-endogenous","Second",4,4,4.33073

101,"Non-endogenous","Second",5,3,4.36945

103,"Non-endogenous","First",0,33,NA

103,"Non-endogenous","First",1,24,NA

103,"Non-endogenous","First",2,15,2.77259

```

# {data-menu-title="`readr``" background-image="images/readr.png" background-size="contain" background-position="center" .section-title background-color="#1e4655"}

## `readr`

R has some built-in functions for importing data, such as `read.table()` and `read.csv()`.

. . .

The `readr` package provides similar functions, like `read_csv()`, that have slightly better features:

::: {.incremental}

* Faster!

* Better defaults (e.g. doesn't automatically convert characters to factors)

* A *bit* smarter about dates and times

* Loading progress bars for large files

:::

. . .

`readr` is one of the core `tidyverse` packages so loading `tidyverse` will load it too:

```{r}

library(tidyverse)

```

. . .

Alternatively, you can just load `readr` like so:

```{r}

#| eval: false

library(readr)

```

## `readr` Importing Example

Let's import some data about song ranks on the Billboard Hot 100 in 2000:

```{r}

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv")

```

. . .

How do we know it loaded?

. . .

Let's look at it!

```{r}

#| output-location: fragment

glimpse(billboard_2000_raw)

```

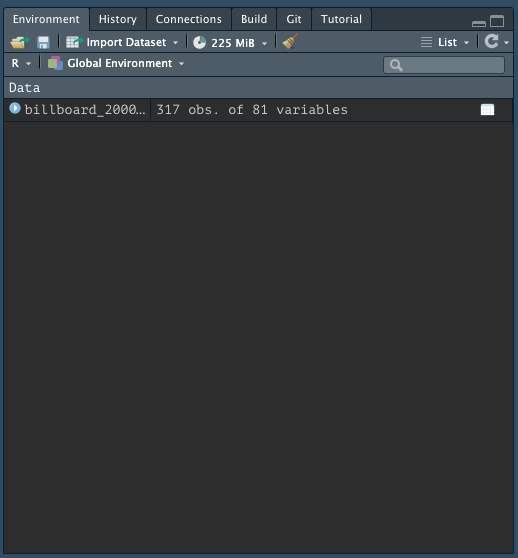

## Alternate Solution

When you import data from an external file you'll also see it in the Global Environment tab in the upper-right pane of RStudio:

:::: {.columns}

::: {.column width="50%"}

::: {.fragment}

You can also import the data manually!

In the upper right-hand pane of RStudio (make sure you're in the Environment tab), select:

`Import Dataset > From Text (readr)` and browse to the file on your computer^[Ideally you've saved it in your project folder! 😌].

:::

::: {.fragment}

**Once you've imported the data, you can `copy/paste` the import code from the console into your file!!**

This makes the process *reproducible!*

:::

:::

::: {.column width="50%"}

:::

::::

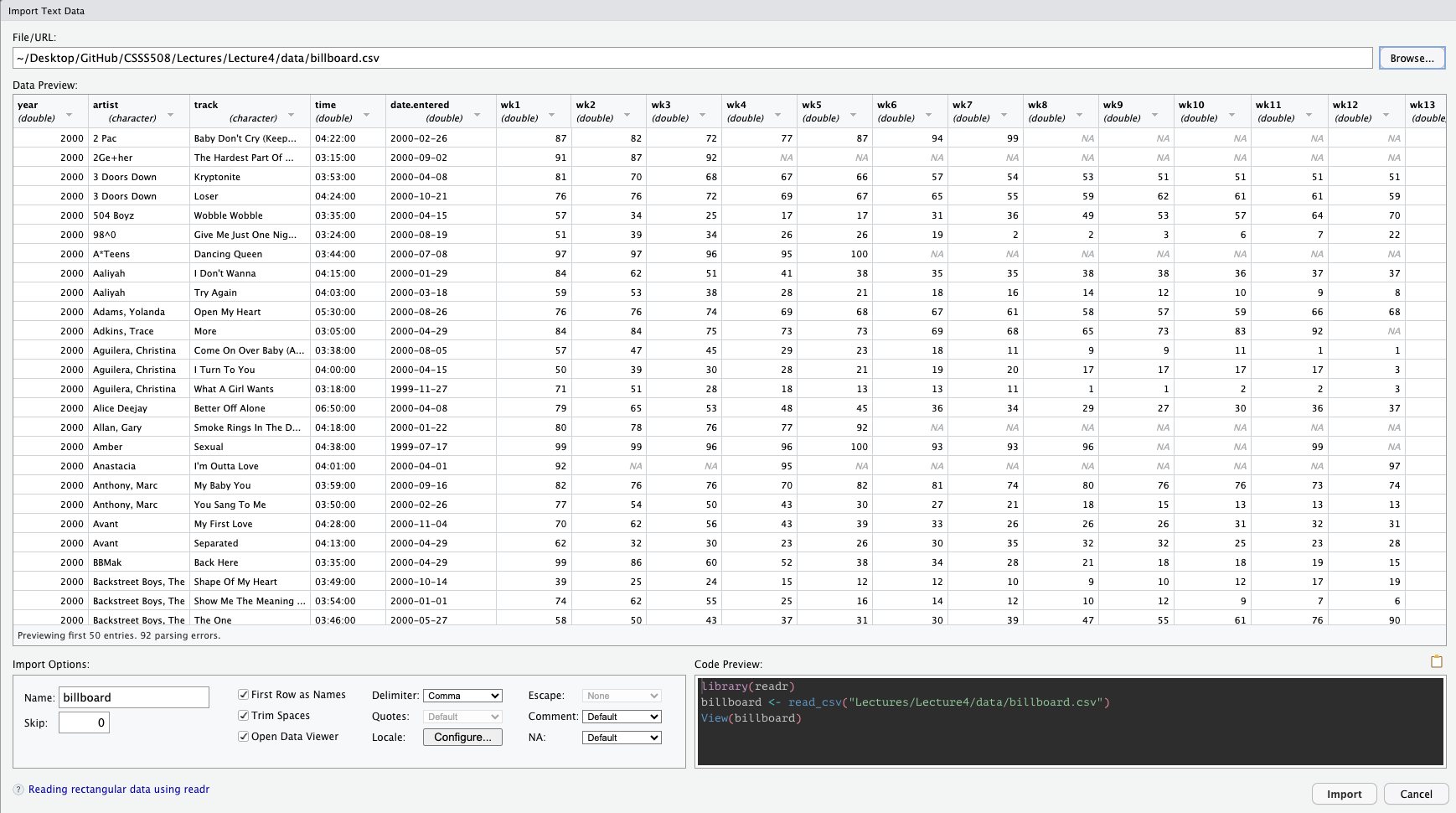

## Manual Data Import

{fig-align="center"}

## Specifying `NA`s

`NA`s are technically logical (boolean) variables that indicate a missing value.

. . .

Sometimes a particular dataset or file read from a different software will code `NA`s differently than `R`. If that's the case, you can add additional specifications to `read_csv` for what to read in as `NA`.

```{r}

#| eval: false

#| code-line-numbers: "|2"

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

na = c("N/A", "999"))

```

## Skipping lines

Depending on how the data were input, there may be several lines that precede the beginning of the data table you're interested in importing. You can skip these lines of metadata with the `skip` argument:

```{r}

#| eval: false

#| code-line-numbers: "|2"

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

skip = 1)

```

## Variable names

`read_csv` will automatically take the first row as column names. If you want to rename them you can save yourself some time recoding later on if you specify your preferred variable names upfront with the `col_names` argument.

. . .

It takes a character vector to be used as column names (in their order of appearance).

```{r}

billboard_renamed <- read_csv(

file = "data/billboard_top100.csv",

col_names = c("year", "artist", "track", "time", "date_entered",

paste("wk", 1:76, sep = "_")) # <1>

)

billboard_renamed |> names() |> head(10) # <2>

```

1. `paste` "pastes" together the first argument to the second argument (separated by whatever is specified in the `sep` argument) as character strings. Since the first argument here is a singular value, it is repeated for the entire length of the vector in the second argument. The first several values of `paste("wk", 1:76, sep = "_")` are: `r head(paste("wk", 1:76, sep = "_"))`

2. `names` here returns the column names of our data frame.

. . .

If you don't have any variable names you can specify that instead.

```{r}

#| eval: false

#| code-line-numbers: "|2"

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

col_names = FALSE)

```

## Snake Case

If you simply want to change your variables to snake case (all lower case; words separated by `_`), you can use the function `clean_names()` from the `janitor` package which replaces other punctuation separators with `_`.

```{r}

#| output-location: fragment

billboard_renamed <- billboard_2000_raw |> # <1>

janitor::clean_names(numerals = "right") # <2>

billboard_renamed |> names() |> head(10)

```

1. Create new object for renamed data

2. Run `install.packages("janitor")` in the console first. You can then call a function from `janitor` without loading the entire package by specifying its package name followed by `::` before the function name.;

The `numerals` argument specifies if you additionally want to put a separator before a number.

## Other Data File Types with `readr`

The other functions in `readr` employ a similar approach to `read_csv` so the trick is just knowing which to use for what data type.

. . .

* `read_csv2` is separated by semicolons (instead of commas)

* `read_tsv` is separated by tabs

* `read_delim` guesses the delimiter

* `read_fwf` reads in fixed-width-files

* `read_table` is a variation of `fwf` where columns are separated by white space

* `read_log` reads in Apache-style log files

## Other Packages to Read in Data

There are a range of other ways, besides delimited files, that data are stored.

The following packages are part of the extended `tidyverse` and therefore operate with similar syntax and logic as `readr`.

## Other Packages to Read in Data

There are a range of other ways, besides delimited files, that data are stored.

The following packages are part of the extended `tidyverse` and therefore operate with similar syntax and logic as `readr`.

:::: {.columns}

::: {.column width="50%"}

{.absolute top=185 left=135}

:::

::: {.column width="50%"}

* For Excel files (`.xls` or `.xlsx`), use package [`readxl`](https://readxl.tidyverse.org/)^[Functions have additional arguments to read in specific sheets or a range of cells.]

:::

::::

::: aside

Note: For Excel files and Googlesheets You **won't** keep text formatting, color, comments, or merged cells. See the [`openxlsx`](https://ycphs.github.io/openxlsx/) package for those capabilities. Also, [`tidyxl`](https://github.com/nacnudus/tidyxl) can help import non-tabular data from Excel.

:::

## Other Packages to Read in Data

There are a range of other ways, besides delimited files, that data are stored.

The following packages are part of the extended `tidyverse` and therefore operate with similar syntax and logic as `readr`.

:::: {.columns}

::: {.column width="50%"}

{.absolute top=185 left=135}

:::

::: {.column width="50%"}

* For Excel files (`.xls` or `.xlsx`), use package [`readxl`](https://readxl.tidyverse.org/)^[Functions have additional arguments to read in specific sheets or a range of cells.]

* For Google Docs Spreadsheets, use package [`googlesheets4`](https://googlesheets4.tidyverse.org/)^[Very similar to `readxl` with some slight variations you can read about [here](https://r4ds.hadley.nz/spreadsheets.html#google-sheets).]

:::

::::

::: aside

Note: For Excel files and Googlesheets You **won't** keep text formatting, color, comments, or merged cells. See the [`openxlsx`](https://ycphs.github.io/openxlsx/) package for those capabilities. Also, [`tidyxl`](https://github.com/nacnudus/tidyxl) can help import non-tabular data from Excel.

:::

## Other Packages to Read in Data

There are a range of other ways, besides delimited files, that data are stored.

The following packages are part of the extended `tidyverse` and therefore operate with similar syntax and logic as `readr`.

:::: {.columns}

::: {.column width="50%"}

{.absolute top=185 left=135}

:::

::: {.column width="50%"}

* For Excel files (`.xls` or `.xlsx`), use package [`readxl`](https://readxl.tidyverse.org/)^[Functions have additional arguments to read in specific sheets or a range of cells.]

* For Google Docs Spreadsheets, use package [`googlesheets4`](https://googlesheets4.tidyverse.org/)^[Very similar to `readxl` with some slight variations you can read about [here](https://r4ds.hadley.nz/spreadsheets.html#google-sheets).]

* For Stata, SPSS, and SAS files, use package [`haven`](https://haven.tidyverse.org/)^[SAS, SPSS, and Stata have so-called "labelled" vectors for which `haven` provides a [class](https://haven.tidyverse.org/reference/index.html#labelled-vectors) to represent in `R`. Alternatively, you can get rid of them with [these functions](https://haven.tidyverse.org/reference/index.html#remove-attributes).]

:::

::::

::: aside

Note: For Excel files and Googlesheets You **won't** keep text formatting, color, comments, or merged cells. See the [`openxlsx`](https://ycphs.github.io/openxlsx/) package for those capabilities. Also, [`tidyxl`](https://github.com/nacnudus/tidyxl) can help import non-tabular data from Excel.

:::

## How does `readr` parse different data types?

For each column in a data frame, `readr` functions pull the first 1000 rows and checks:

```{mermaid}

%%| echo: false

%%| fig-width: 11

%%| fig-height: 5.5

%%| fig-align: center

flowchart LR

id1((Variable))==>A(["1: Does it contain only F, T, FALSE,

TRUE, or NA (ignoring case)?"])==>id2{{Logical}}

id1((Variable))==>B(["2: Does it contain only numbers

(e.g., 1, -4.5, 5e6, Inf?)"])==>id3{{Number}}

id1((Variable))==>C(["3: Does it match the

ISO8601 standard?"])==>id4{{Date/Date-time}}

id1((Variable))==>D(["4: None of the above"])==>id5{{String}}

style id1 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id2 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id3 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id4 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id5 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style A fill:#FFFFFF,color:#000000,stroke:#000000

style B fill:#FFFFFF,color:#000000,stroke:#000000

style C fill:#FFFFFF,color:#000000,stroke:#000000

style D fill:#FFFFFF,color:#000000,stroke:#000000

```

## How does `readr` parse different data types?

For each column in a data frame, `readr` functions pull the first 1000 rows and checks:

```{mermaid}

%%| echo: false

%%| fig-width: 11

%%| fig-height: 5.5

%%| fig-align: center

flowchart LR

id1((Variable))==>A(["1: Does it contain only F, T, FALSE,

TRUE, or NA (ignoring case)?"])==>id2{{Logical}}

id1((Variable))==>B(["2: Does it contain only numbers

(e.g., 1, -4.5, 5e6, Inf?)"])==>id3{{Number}}

id1((Variable))==>C(["3: Does it match the

ISO8601 standard?"])==>id4{{Date/Date-time}}

id1((Variable))==>D(["4: None of the above"])==>id5{{String}}

style id1 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id2 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id3 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id4 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id5 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style A fill:#ffa07a,color:#000000,stroke:#000000

style B fill:#FFFFFF,color:#000000,stroke:#000000

style C fill:#FFFFFF,color:#000000,stroke:#000000

style D fill:#FFFFFF,color:#000000,stroke:#000000

```

## How does `readr` parse different data types?

For each column in a data frame, `readr` functions pull the first 1000 rows and checks:

```{mermaid}

%%| echo: false

%%| fig-width: 11

%%| fig-height: 5.5

%%| fig-align: center

flowchart LR

id1((Variable))==>A(["1: Does it contain only F, T, FALSE,

TRUE, or NA (ignoring case)?"])==>id2{{Logical}}

id1((Variable))==>B(["2: Does it contain only numbers

(e.g., 1, -4.5, 5e6, Inf?)"])==>id3{{Number}}

id1((Variable))==>C(["3: Does it match the

ISO8601 standard?"])==>id4{{Date/Date-time}}

id1((Variable))==>D(["4: None of the above"])==>id5{{String}}

style id1 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id2 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id3 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id4 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id5 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style A fill:#FFFFFF,color:#000000,stroke:#000000

style B fill:#ffa07a,color:#000000,stroke:#000000

style C fill:#FFFFFF,color:#000000,stroke:#000000

style D fill:#FFFFFF,color:#000000,stroke:#000000

```

## How does `readr` parse different data types?

For each column in a data frame, `readr` functions pull the first 1000 rows and checks:

```{mermaid}

%%| echo: false

%%| fig-width: 11

%%| fig-height: 5.5

%%| fig-align: center

flowchart LR

id1((Variable))==>A(["1: Does it contain only F, T, FALSE,

TRUE, or NA (ignoring case)?"])==>id2{{Logical}}

id1((Variable))==>B(["2: Does it contain only numbers

(e.g., 1, -4.5, 5e6, Inf?)"])==>id3{{Number}}

id1((Variable))==>C(["3: Does it match the

ISO8601 standard?"])==>id4{{Date/Date-time}}

id1((Variable))==>D(["4: None of the above"])==>id5{{String}}

style id1 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id2 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id3 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id4 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id5 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style A fill:#FFFFFF,color:#000000,stroke:#000000

style B fill:#FFFFFF,color:#000000,stroke:#000000

style C fill:#ffa07a,color:#000000,stroke:#000000

style D fill:#FFFFFF,color:#000000,stroke:#000000

```

## How does `readr` parse different data types?

For each column in a data frame, `readr` functions pull the first 1000 rows and checks:

```{mermaid}

%%| echo: false

%%| fig-width: 11

%%| fig-height: 5.5

%%| fig-align: center

flowchart LR

id1((Variable))==>A(["1: Does it contain only F, T, FALSE,

TRUE, or NA (ignoring case)?"])==>id2{{Logical}}

id1((Variable))==>B(["2: Does it contain only numbers

(e.g., 1, -4.5, 5e6, Inf?)"])==>id3{{Number}}

id1((Variable))==>C(["3: Does it match the

ISO8601 standard?"])==>id4{{Date/Date-time}}

id1((Variable))==>D(["4: None of the above"])==>id5{{String}}

style id1 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style id2 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id3 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id4 fill:#c7cdac,color:#1e4655,stroke:#1e4655

style id5 fill:#1e4655,color:#c7cdac,stroke:#c7cdac

style A fill:#FFFFFF,color:#000000,stroke:#000000

style B fill:#FFFFFF,color:#000000,stroke:#000000

style C fill:#FFFFFF,color:#000000,stroke:#000000

style D fill:#ffa07a,color:#000000,stroke:#000000

```

## Most Common Issue with Reading in Data

The most common problem that occurs when reading in data is having mixed data. Most often, given the heuristic provided in the last slide, `readr` will parse a variable as a character string to preserve whatever it contains.

. . .

Let's actually look at how the billboard data was read in:

```{r}

#| output-location: fragment

glimpse(billboard_2000_raw)

```

## What Went Wrong?

[Since `readr` uses the values in the first 1000 rows to guess the type of the column (logical, numeric, date/date-time, character), if the first 1000 rows don't have any data, they will be coded as logical variables. ]{style="font-size: 85%;"}

. . .

[There are not many songs in the data that charted for 60+ weeks—and none in the first 1000 that charted for 66+ weeks!]{style="font-size: 85%;"}

. . .

::: {.callout-note icon=false}

## {{< fa circle-info >}} \ `NA` is logical?

```{r}

#| output-location: fragment

class(c(T, F, NA, FALSE, TRUE)) # <1>

```

1. `class` returns the data type of an object.

```{r}

#| output-location: fragment

class(c(1, NA, 17.5, 5.3, NA))

```

```{r}

#| output-location: fragment

class(as.Date(c(NA, "2023-10-31", "1986-06-21", "1997-01-15"), tz = "America/Los_Angeles")) # <2>

```

2. `as.Date` turns a character string of dates into an official date class in Base `R`. If we had an accompanying time stamp we would need to use `as.POSIXct` which turns a character string of dates and times into an official date-time class in Base `R`.

```{r}

#| output-location: fragment

class(c("apple", NA, "mango", "blackberry", "plum"))

```

```{r}

#| output-location: fragment

class(c(NA, NA, NA, NA, NA))

```

:::

::: aside

[Technically, `NA`s can be any data type depending upon what they are grouped with. However, by themselves they are a logical indicator of missing data, so their class is logical.]{.fragment style="font-size: 85%;"}

:::

## Column types

[Since the `wk*` variables should all be read in as integers, we can specify this explicitly with the `col_types` argument. ]{style="font-size: 85%;"}

. . .

```{r}

#| output-location: fragment

# Create character string of shortcode column types

bb_types <- paste(c("cctD", rep("i", 76)), collapse="") # <1>

bb_types

```

1. You can short-code column types with `c` = character, `t` = time, `D` = date, `i` = integer.

The `collapse` argument collapses the first two arguments into one complete character string.

. . .

```{r}

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

col_types = bb_types) # <2>

billboard_2000_raw |> select(wk66:wk76) # <3>

```

2. When we re-read in the data, this string now specifies the data type for each column of our data frame. Visit [this reference page](https://readr.tidyverse.org/reference/cols.html) to see all available column types and their short codes.

3. Checking the previously incorrectly parsed variables

```{r}

#| echo: false

billboard_renamed <- read_csv(file = "data/billboard_top100.csv",

col_types = bb_types) |>

janitor::clean_names(numerals = "right")

```

## Column types

To specify a default column type you can use `.default` like so:

```{r}

#| eval: false

#| code-line-numbers: "|2"

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

col_types = cols(.default = col_character()))

```

. . .

Another useful helper is `cols_only()` for when you only want to read in a subset of all available variables.

```{r}

#| eval: false

#| code-line-numbers: "|2"

billboard_2000_raw <- read_csv(file = "data/billboard_top100.csv",

col_types = cols_only(x = col_character))

```

. . .

In summary, the `col_types` argument gives you greater control over how your data are read in and can save you recoding time down the road and/or point out where your data are behaving differently than you expect.

## Reading in Multiple Files

If your data are split across multiple files you can read them in all at once by specifying the `id` argument.

```{r}

#| output-location: fragment

# Create list of files manually

sales_files <- c("data/01_sales.csv", "data/02_sales.csv", "data/03_sales.csv")

read_csv(sales_files, id = "file")

```

## Reading in Multiple Files

If you have too many files to reasonably type out all their names you can also use the base `r` function `list.files` to list the files for you.

```{r}

#| output-location: fragment

# Create list of files with pattern-matching

sales_files <- list.files("data",

pattern = "sales\\.csv$", # <1>

full.names = TRUE)

sales_files

```

1. We will discuss pattern-matching more in a couple of weeks; if all your data was in one folder without anything else in it, you wouldn't need to specify this argument. Sometimes, however, you may be searching through larger directories that you did not organize and that's when pattern-matching is really powerful.

## Data Entry

Sometimes you'll need to create a data set in your code. You can do this two ways:

::: {.panel-tabset}

### `tibble()`

Tibbles lay out the data by columns (i.e. a dataframe transposed).

```{r}

#| output-location: fragment

# Creating data with tibble

tibble(

x = c(1, 2, 5),

y = c("h", "m", "g"),

z = c(0.08, 0.83, 0.60)

)

```

### `tribble()`

Tibbles (`tr`ansposed ti`bble`) lay out the data by rows (i.e. the way a dataframe looks) which is much more intuitive.

```{r}

#| output-location: fragment

# Creating data with tribble

tribble(

~x, ~y, ~z,

1, "h", 0.08,

2, "m", 0.83,

5, "g", 0.60

)

```

:::

## Writing Delimited Files

Getting data out of R into a delimited file is very similar to getting it into R:

```{r}

#| eval: false

write_csv(billboard_2000_raw, path = "data/billboard_data.csv")

```

This saved the data we pulled off the web in a file called `billboard_data.csv` in the `data` folder of my working directory.

. . .

However, saving data in this way will not preserve `R` data types since delimited files code everything as a character string.

. . .

To save `R` objects and all associated metadata you have two options:

::: {.panel-tabset}

### `.Rds` format:

* Used for single objects, doesn't save original the object name

* Save: `write_rds(old_object_name, "path.Rds")`

* Load: `new_object_name <- read_rds("path.Rds")`

### `.Rdata` or `.Rda` format:

* Used for saving multiple files where the original object names are preserved

* Save: `save(object1, object2, ... , file = "path.Rdata")`

* Load: `load("path.Rdata")` without assignment operator

:::

## Writing Other File-Types

::: {.panel-tabset}

### `writexl`

:::: {.columns}

::: {.column width="60%"}

* `write_xlsx()` writes to an xlsx file

:::

::: {.column width="40%"}

{width=50%}

:::

::::

### `googlesheets4`

:::: {.columns}

::: {.column width="60%"}

* `sheet_write()` or `write_sheet()` (over)writes new data into a Sheet

* `gs4_create()` creates a new Sheet

* `sheet_append()` appends rows to a sheet

* `range_write()` (over)writes new data into a range

* `range_flood()` floods a range of cells

* ``range_clear()` clears a range of cells

:::

::: {.column width="40%"}

{width=50%}

:::

::::

### `haven`

:::: {.columns}

::: {.column width="60%"}

* `write_dta()` writes Stata DTA files

* `write_sav()` writes SPSS files

* `write_xpt()` writes SAS transport files

:::

::: {.column width="40%"}

{width=50%}

:::

::::

:::

# Lab{.section-title background-color="#99a486"}

## Importing & Tidying Data

::: {.incremental}

- Go to the [Lecture 5 Homepage](https://vsass.github.io/CSSS508/Lectures/Lecture5/CSSS508_Lecture5_index.html) and click on the link `Religion & Income` under the Data section.

- Click *File* > *Save* to download this data to the same folder where your source document^[`R` Script or `.qmd`] will be saved for this lab.

- Read in your data using the appropriate function from `readr`.

:::

# Tidying and Reshaping Data {.section-title background-color="#99a486"}

# {data-menu-title="`tidyr`" background-image="images/tidyr.png" background-size="contain" background-position="center" .section-title background-color="#1e4655"}

## Initial Spot Checks

First things to check after loading new data:

::: {.incremental}

* Did all the rows/columns from the original file make it in?

+ Check using `dim()` or `str()`

* Are the column names in good shape?

+ Use `names()` to check; re-read in using `col_names()` or fix with `rename()`

* Are there "decorative" blank rows or columns to remove?

+ `filter()` or `select()` out those rows/columns

* How are missing values represented: `NA`, `" "` (blank), `.` (period), `999`?

+ Read in the data again specifying `NA`s with the `na` argument

* Are there character data (e.g. ZIP codes with leading zeroes) being incorrectly represented as numeric or vice versa?

+ Read in the data again specifying desired `col_types`

:::

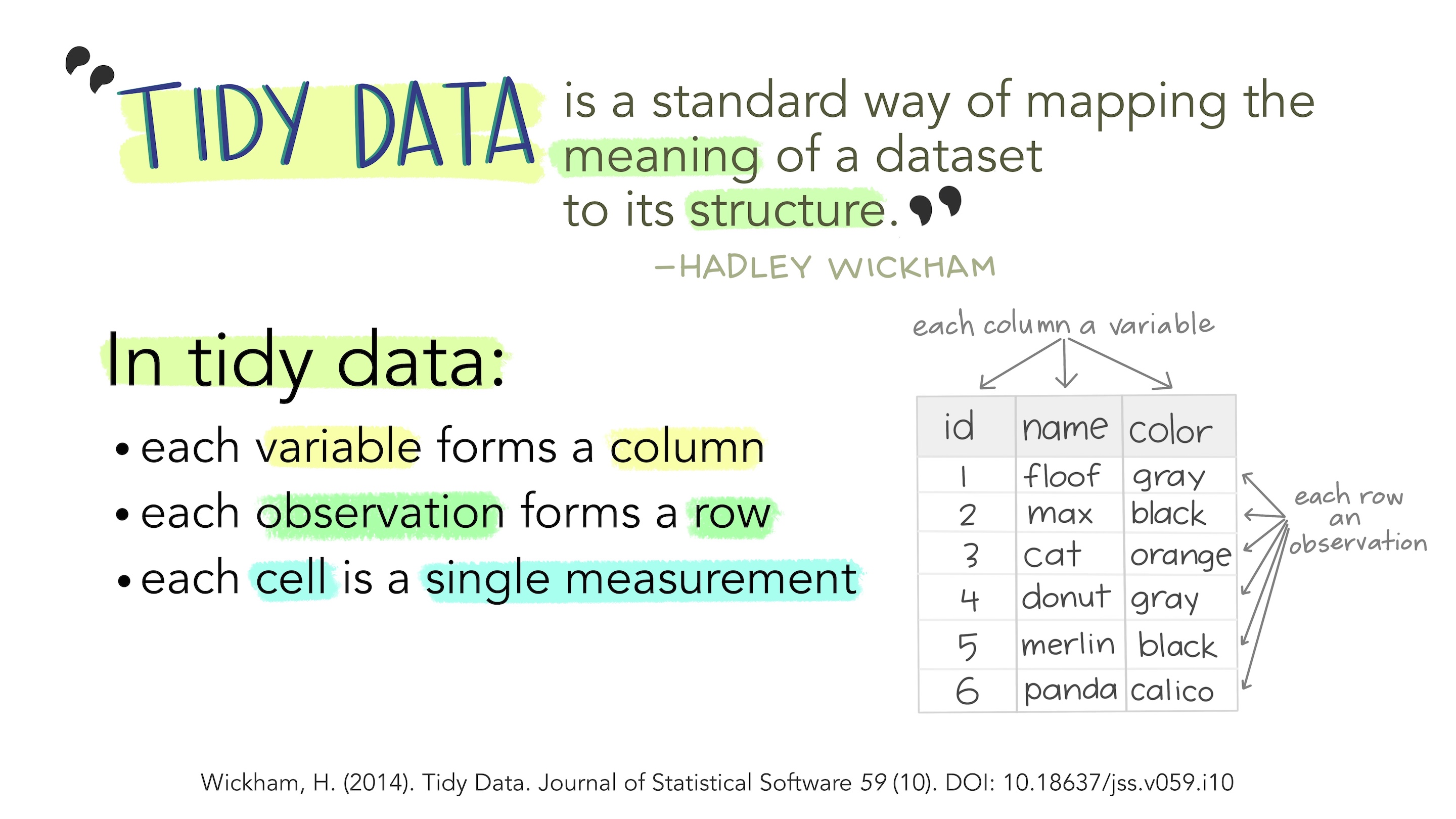

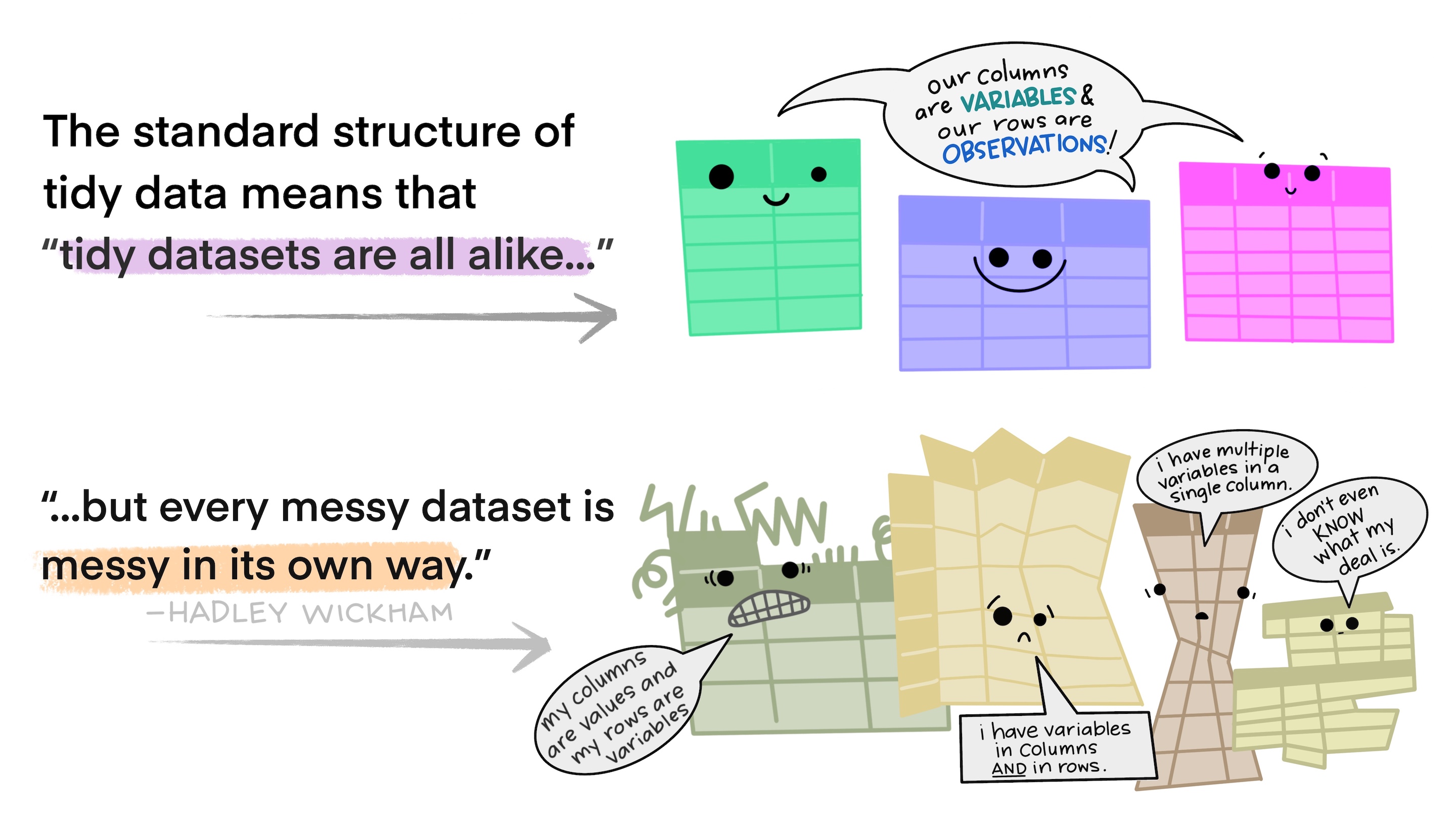

## What is Tidy Data?

. . .

{.absolute top=50 right=0 left=0}^[Illustrations from the [Openscapes](https://openscapes.org/) blog [Tidy Data for reproducibility, efficiency, and collaboration](https://www.openscapes.org/blog/2020/10/12/tidy-data/) by Julia Lowndes and Allison Horst]

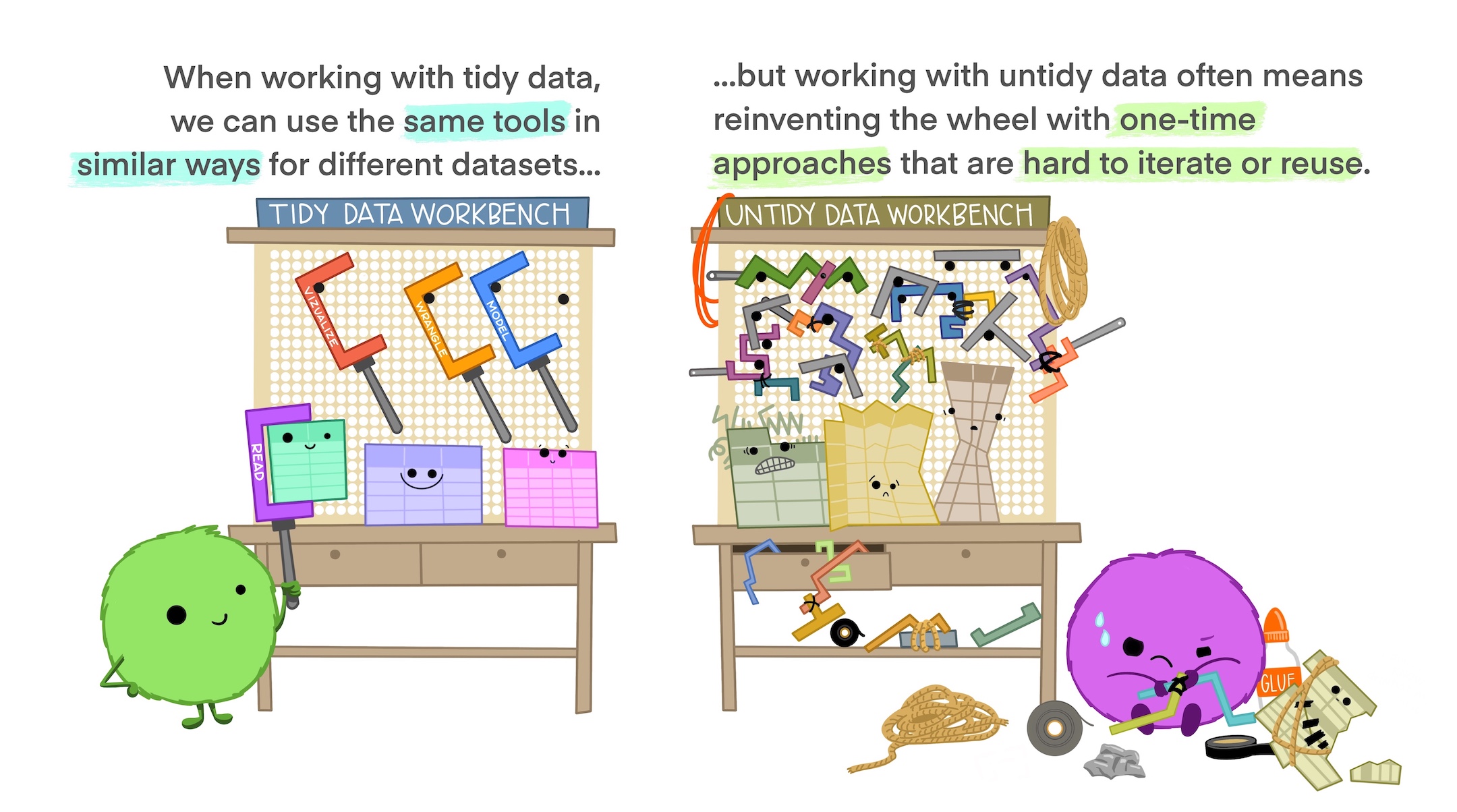

## Why do we Want Tidy Data?

::: {.incremental}

* **Easier to understand** many rows than many columns^[Placing variables in columns also leverages `R`'s vectorized nature, i.e. most built-in `R` functions work with values of vectors.]

* Required for **plotting** in `ggplot2`^[In fact, all tidyverse functions are designed to work with tidy data.]

* Required for many types of **statistical procedures** (e.g. hierarchical or mixed effects models)

* Fewer issues with **missing values and "imbalanced"** repeated measures data

* Having a consistent method for storing data means it's easier to learn the tools to work with it since there's an underlying uniformity.

:::

. . .

Most real-world data is not tidy because data are often organized for goals other than analysis (i.e. data entry) and most people aren't familiar with the principles of tidy data.

## Why do we Want Tidy Data?

. . .

{.absolute top=50 right=0 left=0}^[Illustrations from the [Openscapes](https://openscapes.org/) blog [Tidy Data for reproducibility, efficiency, and collaboration](https://www.openscapes.org/blog/2020/10/12/tidy-data/) by Julia Lowndes and Allison Horst]

## Slightly "Messy" Data

::::{.columns}

:::{.column width="60%"}

| **Program** | **First Year** | **Second Year** |

|-----------------|-----------:|---------:|

| Evans School | 10 | 6 |

| Arts & Sciences | 5 | 6 |

| Public Health | 2 | 3 |

| Other | 5 | 1 |

:::

:::{.column width="40%"}

* What is an **observation**?

+ A group of students from a program of a given year

* What are the **variables**?

+ Program, Year

* What are the **values**?

+ Program: Evans School, Arts & Sciences, Public Health, Other

+ Year: First, Second -- **in column headings. Bad!**

+ Count: **spread over two columns!**

:::

::::

## Tidy Version

::::{.columns}

:::{.column width="50%"}

| **Program** | **Year** | **Count** |

|-----------------|-----------:|---------:|

| Evans School | First | 10 |

| Evans School | Second | 6 |

| Arts & Sciences | First | 5 |

| Arts & Sciences | Second | 6 |

| Public Health | First | 2 |

| Public Health | Second | 3 |

| Other | First | 5 |

| Other | Second | 1 |

:::

:::{.column width="50%"}

* Each variable is a column.

* Each observation is a row.

* Each cell has a single value.

:::

::::

## Billboard is Just Ugly-Messy

```{r}

#| echo: false

billboard_2000_raw |>

head(10)

```

::: aside

Week columns continue up to `wk76`!

:::

## Billboard

::: {.incremental}

* What are the **observations** in the data?

+ Song on the Billboard chart each week

* What are the **variables** in the data?

+ Year, artist, track, song length, date entered Hot 100, week since first entered Hot 100 (**spread over many columns**), rank during week (**spread over many columns**)

* What are the **values** in the data?

+ e.g. 2000; 3 Doors Down; Kryptonite; 3 minutes 53 seconds; April 8, 2000; Week 3 (**stuck in column headings**); rank 68 (**spread over many columns**)

:::

## Reminder: Why do we Want Tidy Data?

. . .

{.absolute top=50 right=0 left=0}^[Illustrations from the [Openscapes](https://openscapes.org/) blog [Tidy Data for reproducibility, efficiency, and collaboration](https://www.openscapes.org/blog/2020/10/12/tidy-data/) by Julia Lowndes and Allison Horst]

## `tidyr`

The `tidyr` package provides functions to tidy up data.

. . .

**Key functions:**

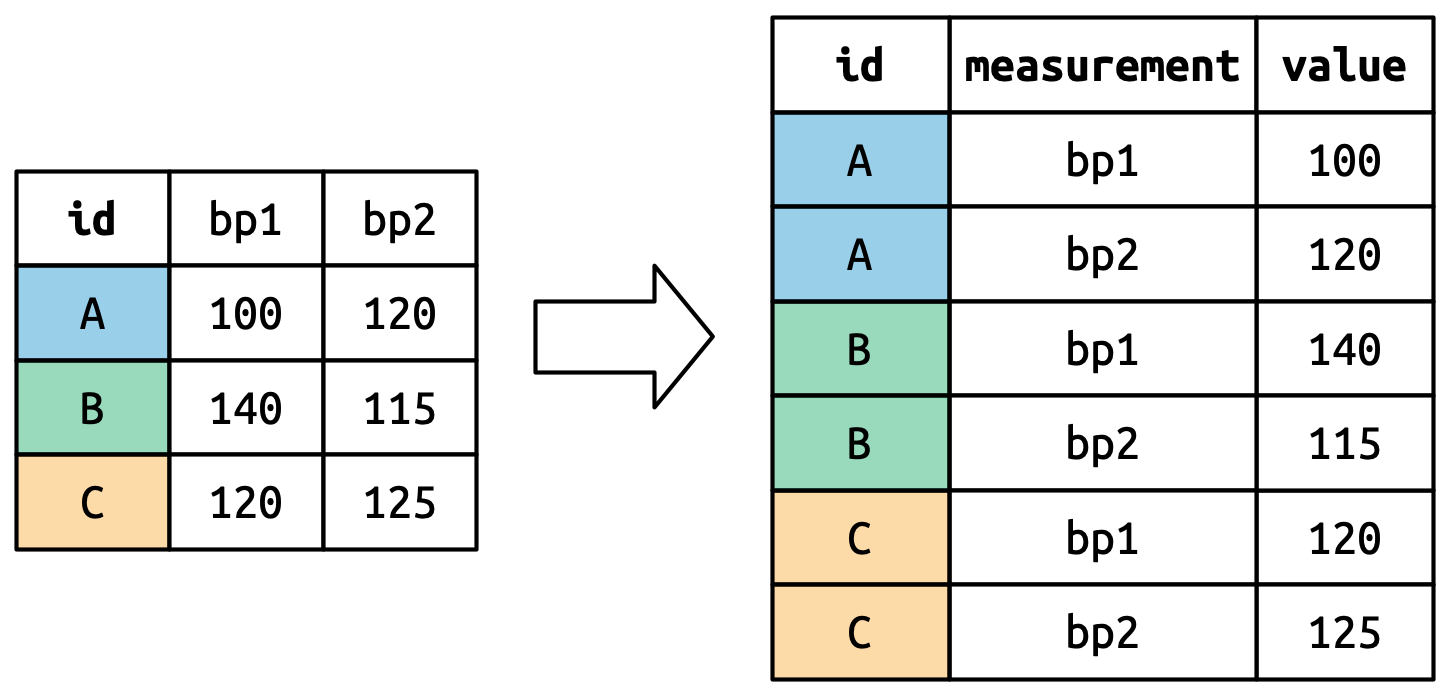

* **`pivot_longer()`**: takes a set of columns and pivots them down ("longer") to make two new columns (which you can name yourself):

* A `name` column that stores the original column names

* A `value` with the values in those original columns

. . .

* **`pivot_wider()`**: inverts `pivot_longer()` by taking two columns and pivoting them up and across ("wider") into multiple columns

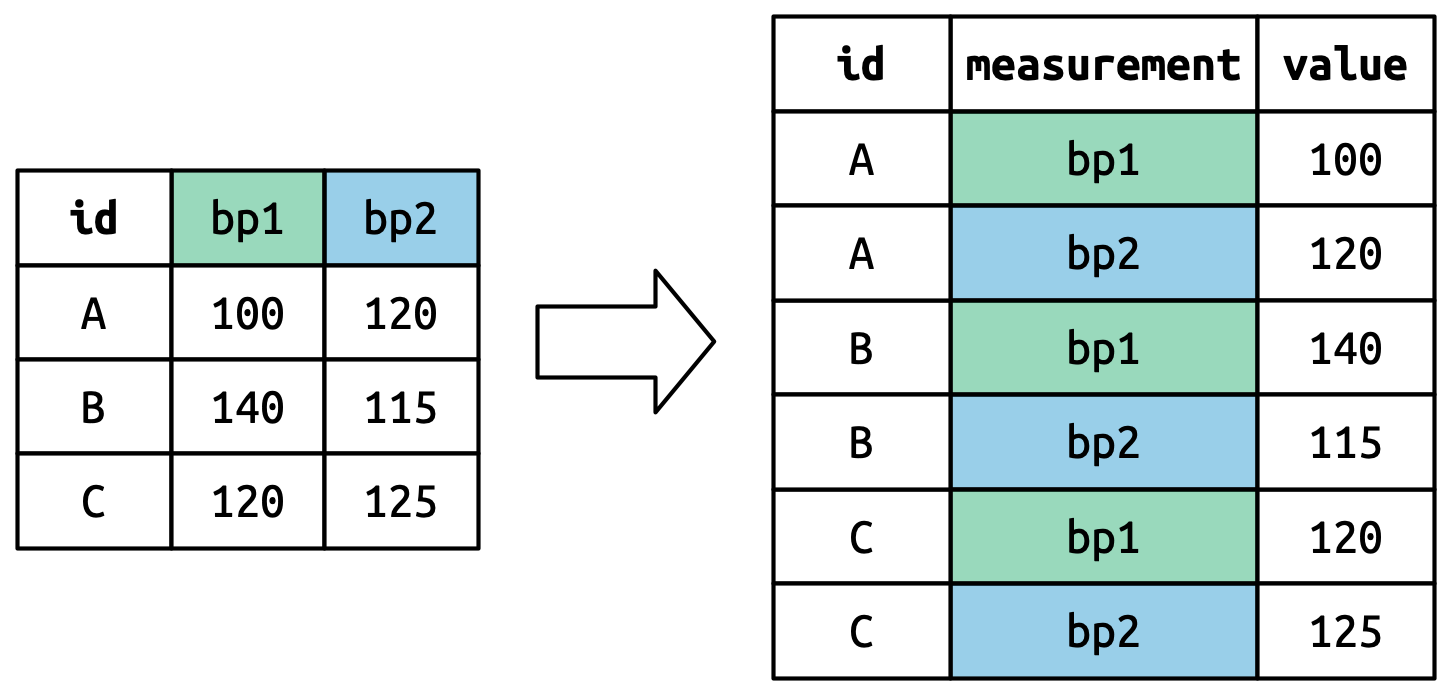

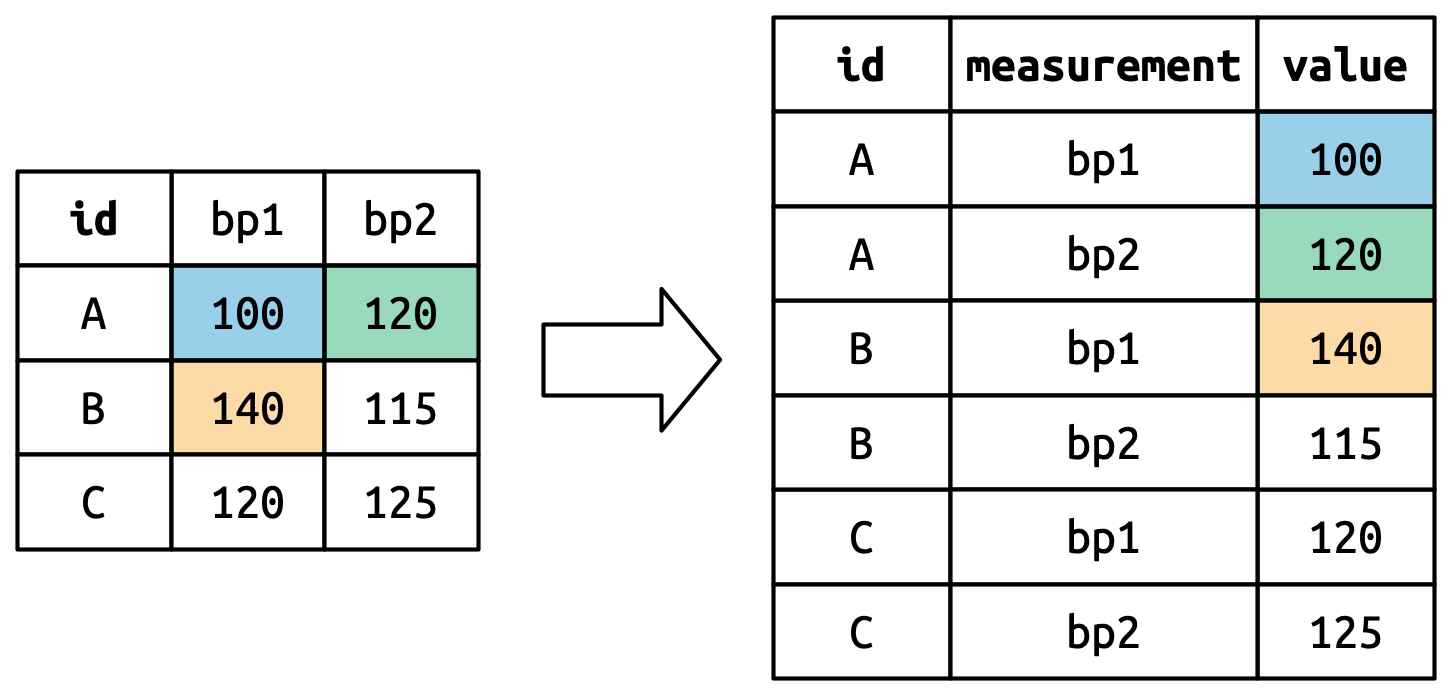

## `pivot_longer()`

This function usually takes three arguments:

::: {.incremental}

1. `cols`: The columns that need to be pivoted (are not variables)

2. `names_to`: Names the new variable that is stored in multiple columns

3. `values_to`: Names the variable stored in the cell values

:::

## `pivot_longer()`

This function usually takes three arguments:

1. `cols`: The columns that need to be pivoted (are not variables)

2. **`names_to`: Names the new variable that is stored in multiple columns**

3. `values_to`: Names the variable stored in the cell values

{fig-align="center"}

## `pivot_longer()`

This function usually takes three arguments:

1. `cols`: The columns that need to be pivoted (are not variables)

2. `names_to`: Names the new variable that is stored in multiple columns

3. **`values_to`: Names the variable stored in the cell values**

{fig-align="center"}

## `pivot_longer()`

This function usually takes three arguments:

1. `cols`: The columns that need to be pivoted (are not variables)

2. `names_to`: Names the new variable that is stored in multiple columns

3. `values_to`: Names the variable stored in the cell values

{fig-align="center"}

## `pivot_longer()` Example

```{r}

#| output-location: fragment

billboard_2000 <- billboard_renamed |> # <1>

pivot_longer(cols = starts_with("wk_"), # <2>

names_to ="week", # <3>

values_to = "rank") # <4>

billboard_2000 |> head(10)

```

1. `billboard_renamed` here has snake_case variable names and correctly specified column types

2. `starts_with()` is one of the helper functions from [`tidyselect`](https://tidyselect.r-lib.org/index.html) that helps select certain common patterns. We could have also used `cols = wk_1:wk_76`.

3. Creates a new variable `week` that will take values of the current column names we're pivoting

4. Creates a new variable `value` that will take the values from the respective `wk_` column and row

. . .

Now we have a single week column!

## Lots of Missing Values?!

```{r}

#| output-location: fragment

glimpse(billboard_2000)

```

::: {.fragment}

It looks like 2 Pac's song "Baby Don't Cry" was only on the Billboard Hot 100 for 7 weeks and then dropped off the charts.

:::

. . .

```{r}

#| output-location: fragment

summary(billboard_2000$rank)

```

::: {.fragment}

We don't want to keep the `r sum(is.na(billboard_2000$rank))` rows with missing ranks.

:::

## Pivoting Better: `values_drop_na`

Adding the argument `values_drop_na = TRUE` to `pivot_longer()` will remove rows with missing ranks. Since these `NA`s don’t really represent unknown observations (i.e. they were forced to exist by the structure of the dataset) this is an appropriate approach here.

```{r}

#| output-location: fragment

#| code-line-numbers: "|5"

billboard_2000 <- billboard_renamed |>

pivot_longer(cols = wk_1:wk_76,

names_to = "week",

values_to = "rank",

values_drop_na = TRUE)

summary(billboard_2000$rank)

```

. . .

No more `NA` values!

```{r}

#| output-location: fragment

dim(billboard_2000)

```

. . .

And way fewer rows!

## `parse_number()`

The week column is of the type `character`, but it should be `numeric.`

```{r}

#| output-location: fragment

head(billboard_2000$week)

```

. . .

`parse_number()` grabs just the numeric information from a character string:

```{r}

#| output-location: fragment

billboard_2000 <- billboard_2000 |>

mutate(week = parse_number(week)) # <1>

summary(billboard_2000$week)

```

1. You can use `mutate()` to overwrite existing columns.

. . .

More sophisticated tools for character strings will be covered later in this course!

## Use `pivot_longer` arguments

Alternatively (and more efficiently), there are a number of optional arguments for `pivot_longer` that are meant to help deal with naming issues.

. . .

```{r}

#| output-location: fragment

billboard_2000 <- billboard_renamed |>

pivot_longer(starts_with("wk_"),

names_to = "week",

values_to = "rank",

values_drop_na = TRUE,

names_prefix = "wk_", # <1>

names_transform = list(week = as.integer)) # <2>

head(billboard_2000, 5)

```

1. `names_prefix` is used to remove "wk_" from the values of `week`

2. `names_transform` converts `week` into an integer number. We'll cover `list`s in more detail next week but this is the structure required for this argument and it flexibly allows for multiple naming transformations to be applied at once.

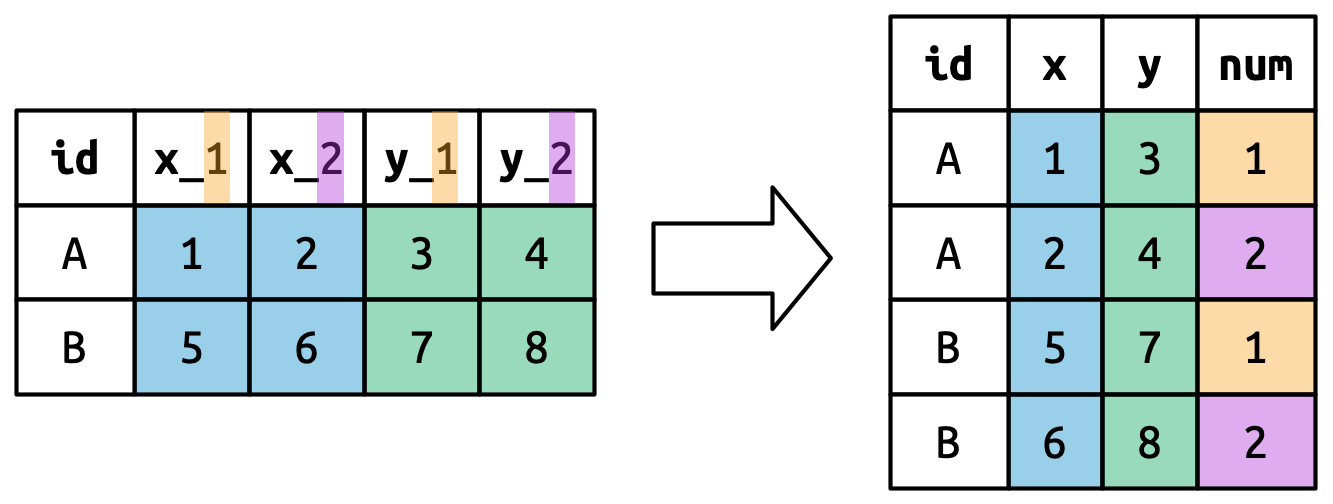

## Multiple Variables in Column Names

[A more challenging situation occurs when you have multiple pieces of information crammed into the column names, and you would like to store these in separate new variables.]{style="font-size: 85%;"}

. . .

[This dataset contains tuberculosis diagnoses collected by the World Health Organization.]{style="font-size: 85%;"}

```{r}

#| output-location: fragment

who2

```

. . .

[The first two columns are self explanatory but what's going on with the rest?]{style="font-size: 85%;"}

## Multiple Variables in Column Names

Data documentation and some minor investigation would lead you to figure out that the three elements in each of these column names are actually data!

. . .

```{r}

who2 |> select(-c(country, year)) |> names() # <1>

```

1. Remove the first two columns to see the more mysteriously-named variables.

::: {.aside}

::: {.r-stack}

::: {.fragment .fade-in-then-out}

* The first piece, `sp`/`sn`/`rel`/`ep`, describes the method used for the diagnosis

* The second piece, `m`/`f` is the gender (coded as a binary variable in this dataset)

* The third piece, `014`/`1524`/`2534`/`3544`/`4554`/`5564`/`65` is the age range (014 represents 0-14, for example)

:::

::: {.fragment}

To organize the six pieces of information in this dataset into six separate columns, we'll use `pivot_longer()` with a vector of column names for `names_to` and instructions for splitting the original variable names into pieces for `names_sep` as well as a column name for `values_to`!

:::

:::

:::

## Multiple Variables in Column Names

```{r}

#| output-location: fragment

who2 |>

pivot_longer(

cols = !(country:year), # <1>

names_to = c("diagnosis", "gender", "age"), # <2>

names_sep = "_", # <3>

values_to = "count"

)

```

1. You can also exclude variables by negating a vector of them.

2. `names_to` allows you to specify how to split up the current column names into new column names.

3. You can use `names_pattern` instead of `names_sep` to extract variables from more complicated naming scenarios once you've learned regular expressions. You'll get a basic introduction to them in a few weeks.

## Variable & Values in Column Names

This dataset contains data about five families, with the names and dates of birth of up to two children.

```{r}

#| output-location: fragment

household

```

. . .

The new challenge in this dataset is that the column names contain the names of two variables (`dob`, `name`) and the *values* of another (`child`, with values `1` or `2`).

## Variable & Values in Column Names

```{r}

#| output-location: column-fragment

household |>

pivot_longer(

cols = !family,

names_to = c(".value", "child"), # <1>

names_sep = "_",

values_drop_na = TRUE # <2>

)

```

1. `.value` isn’t the name of a variable but a unique value that tells `pivot_longer` to use the first component of the pivoted column name as a variable name in the output.

2. Using `values_drop_na = TRUE` again since not every family has 2 children.

. . .

{width=75% fig-align="center"}

## `pivot_wider`

`pivot_wider()` is the opposite of `pivot_longer()`, which you use if you have data for the same observation taking up multiple rows.

. . .

Here's an example of data that we probably want to pivot wider (unless we want to plot each statistic in its own facet):

```{r}

#| echo: false

long_stats <- tibble(Group = c(rep("A", 3), rep("B", 3)),

Statistic = rep(c("Mean", "Median", "SD"), 2),

Value = c(1.28, 1.0, 0.72, 2.81, 2, 1.33))

long_stats

```

. . .

A common cue to use `pivot_wider()` is having measurements of different quantities in the same column.

## `pivot_wider` Example

```{r}

#| output-location: fragment

wide_stats <- long_stats |>

pivot_wider(id_cols = Group, # <1>

names_from = Statistic, # <2>

values_from = Value) # <3>

wide_stats

```

1. `id_cols` is the column that uniquely identifies each row in the new dataset. Default is everything not in `names_from` and `values_from`.

2. `names_from` provides the names that will be used for the new columns

3. `values_from` provides the values that will be used to populate the cells of the new columns.

. . .

[`pivot_wider()`](https://tidyr.tidyverse.org/reference/pivot_wider.html) also has a number of optional `names_*` and `values_*` arguments for more complicated transformations.

. . .

::: {.callout-warning icon=false}

## {{< fa triangle-exclamation >}} Nested Data

If there are multiple rows in the input that correspond to one cell in the output you'll get a list-column. \

This means that you:

\

1) need to fix something in your code/data because it shouldn't be nested in this way or \

2) need to use `unnest_wider()` or `unnest_longer()` in order to access this column of data. \

More on this [here](https://r4ds.hadley.nz/rectangling.html#unnesting).

:::

# Lab{.section-title background-color="#99a486"}

## Importing & Tidying Data

- [Go to the [Lecture 5 Homepage](https://vsass.github.io/CSSS508/Lectures/Lecture5/CSSS508_Lecture5_index.html) and click on the link `Religion & Income` under the Data section.]{.semi-transparent}

- [Click *File* > *Save* to download this data to the same folder where your source document^[`R` Script or `.qmd`] will be saved for this lab.]{.semi-transparent}

- [Read in your data using the appropriate function from `readr`.]{.semi-transparent}

- Pivot your data to make it tidy^[There should be 3 columns in your final dataset].

# Break!{.section-title background-color="#1e4655"}

## Data types in `R`

Going back to our list of data types in `R`:

. . .

* Logicals

* Factors

* Date/Date-time

* Numbers

* Missing Values

* Strings

## Data types in `R`

Going back to our list of data types in `R`:

* ~~Logicals~~

* Factors

* Date/Date-time

* Numbers

* Missing Values

* Strings

## Data types in `R`

Going back to our list of data types in `R`:

* ~~Logicals~~

* Factors

* Date/Date-time

* Numbers

* Missing Values

* Strings

# Working with Factors {.section-title background-color="#99a486"}

## {data-menu-title="Forcats" background-image="images/forcats.png" background-size="contain" background-position="center" .section-title background-color="#1e4655"}

## Why Use Factors?

Factors are a special class of data specifically for categorical variables^[For categoricals; forcats is also an anagram of factors.] which have a fixed, known, and mutually exclusive set of possible values^[Factors can also be created from character strings that you want to display in non-alphabetical order].

. . .

Imagine we have a variable that records the month that an event occurred.

```{r}

month <- c("Dec", "Apr", "Jan", "Mar")

```

. . .

The two main issues with coding this simply as a character string are that:

@) It doesn't help catch *spelling errors* and that

```{r}

#| eval: false

month <- c("Dec", "Apr", "Jam", "Mar")

```

. . .

@) Characters are *sorted alphabetically*, which is not necessarily intuitive or useful for your variable.

```{r}

sort(month)

```

## Factors

Factors have an additional specification called levels. These are the categories of the categorical variable. We can create a vector of the levels first:

```{r}

month_levels <- c(

"Jan", "Feb", "Mar", "Apr", "May", "Jun",

"Jul", "Aug", "Sep", "Oct", "Nov", "Dec"

)

```

. . .

And then we can create a factor like so:

```{r}

month_factor <- factor(month, levels = month_levels)

month_factor

```

. . .

We can see that the levels specify in which order the categories should be displayed:

```{r}

sort(month_factor)

```

## Creating Factors

`factor` is Base `R`'s function for creating factors while `fct` is `forcats` function for making factors. A couple of things to note about their differences:

. . .

:::: {.columns}

::: {.column width="50%"}

#### `factor`

* Any values not specified as a level will be **silently** converted to `NA`

* Without specified levels, they'll be created from the data in **alphabetical** order^[This is risky to rely on since not every computer will sort strings the same way.]

:::

::: {.column width="50%"}

#### `fct`

* Will send a **error message** if a value exists outside the specified levels

* Without specified levels, they'll be created from the data in **order of first appearance**

:::

::::

. . .

You can create a factor by specifying `col_factor()` when reading in data with `readr`:

```{r}

#| eval: false

df <- read_csv(csv, col_types = cols(month = col_factor(month_levels)))

```

. . .

If you need to access the levels directly you can use the Base `R` function `levels()`.

```{r}

levels(month_factor)

```

## Changing the Order of Levels

One of the more common data manipulations you'll want to do with factors is to change the ordering of the levels. This could be to put them in a more intuitive order but also to make a visualization clearer and more impactful.

. . .

Let's use a subset of the General Social Survey^[Once you've loaded `forcats` you can run `?gss_cat` to learn more.] data to see what this might look like.

```{r}

gss_cat

```

## Changing the Order of Levels

There are four related functions to change the level ordering in `forcats`.

. . .

**`fct_reorder()`**

```{r}

#| eval: false

fct_reorder(.f = factor, # <1>

.x = ordering_vector, # <2>

.fun = optional_function) # <3>

```

1. `factor` is the factor to reorder (or a character string to be turned into a factor)

2. `ordering_vector` specifies how to reorder `factor`

3. `optional_function` is applied if there are multiple values of `ordering_vector` for each value of `factor` (the default is to take the median)

. . .

**`fct_relevel()`**

```{r}

#| eval: false

fct_relevel(.f = factor,

... = value, # <4>

after = placement) # <5>

```

4. `value` is either a function (i.e. `sort`) or a character level (default is to move it to the front of the vector)

5. `placement` is an optional vector index where the level should be placed

. . .

**`fct_reorder2()`**

```{r}

#| eval: false

fct_reorder2(.f = factor,

.x = vector1, # <6>

.y = vector2) # <6>

```

6. `fct_reorder2` reorders `factor` by the values of `vector2` associated with the largest values of `vector1.`

. . .

**`fct_infreq()`**

```{r}

#| eval: false

fct_infreq(.f = factor) # <7>

```

7. `fct_infreq` reorders `factor` in decreasing frequency. See other variations [here](https://forcats.tidyverse.org/reference/fct_inorder.html). Use with `fct_rev()` for increasing frequency.

## Changing the Order of Levels {{< fa scroll >}} {.scrollable}

There are four related functions to change the level ordering in `forcats`.

::: {.panel-tabset}

### fct_reorder^[Use `fct_reorder` and `fct_reorder2` for categorical variables where ordering is arbitary.]

**Without `fct_reorder()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

relig_summary <- gss_cat |>

summarize(

tvhours = mean(tvhours, na.rm = TRUE),

.by = relig

)

ggplot(relig_summary, aes(x = tvhours, y = relig)) +

geom_point() +

theme_minimal(base_size = 16)

```

**With `fct_reorder()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

relig_summary |>

mutate(

relig = fct_reorder(relig, tvhours) # <1>

) |>

ggplot(aes(x = tvhours, y = relig)) +

geom_point() +

theme_minimal(base_size = 16)

```

1. `relig` ordered by `tvhours`

### fct_relevel^[Use `fct_relevel` for categorical variables that already have an inherent ordering.]

**Without `fct_relevel()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

rincome_summary <- gss_cat |>

summarize(

age = mean(age, na.rm = TRUE),

.by = rincome

)

ggplot(rincome_summary, aes(x = age, y = rincome)) +

geom_point() +

theme_minimal(base_size = 16)

```

**With `fct_relevel()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

ggplot(rincome_summary,

aes(x = age, y = fct_relevel(rincome, "Not applicable"))) + # <1>

geom_point() +

theme_minimal(base_size = 16)

```

1. Sets `"Not applicable"` as the first level of `rincome`

### fct_reorder2

**Without `fct_reorder2()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

by_age <- gss_cat |>

filter(!is.na(age)) |>

count(age, marital) |>

mutate(

prop = n / sum(n),

.by = age

)

ggplot(by_age, aes(x = age, y = prop, color = marital)) +

geom_line(linewidth = 1) +

scale_color_brewer(palette = "Set1") +

theme_minimal(base_size = 16)

```

**With `fct_reorder()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

ggplot(by_age,

aes(x = age,

y = prop,

color = fct_reorder2(marital, age, prop))) + # <1>

geom_line(linewidth = 1) +

scale_color_brewer(palette = "Set1") +

labs(color = "marital") +

theme_minimal(base_size = 16)

```

1. `marital` is being reordered by `prop` when `age` is at its maximum.

### fct_infreq

**Without `fct_infreq()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

gss_cat |>

ggplot(aes(x = marital)) +

geom_bar() +

theme_minimal(base_size = 16)

```

**With `fct_infreq()`**

```{r}

#| fig-width: 12

#| fig-height: 6

#| fig-align: center

#| code-fold: show

gss_cat |>

mutate(marital = marital |> fct_infreq() |> fct_rev()) |> # <1>

ggplot(aes(x = marital)) +

geom_bar() +

theme_minimal(base_size = 16)

```

1. Orders `marital` levels by frequency (decreasing) and then reverses that order (increasing).

:::

## Changing the Value of Levels

You may also want to change the actual values of your factor levels. The main way to do this is `fct_recode()`.

. . .

```{r}

gss_cat |> count(partyid) # <1>

```

1. You can use `count()` to get the full list of levels for a variable and their respective counts.

## `fct_recode()`

:::: {.columns}

::: {.column width="60%"}

```{r}

#| eval: false

gss_cat |>

mutate(

partyid = fct_recode(partyid,

"Republican, strong" = "Strong republican",

"Republican, weak" = "Not str republican",

"Independent, near rep" = "Ind,near rep",

"Independent, near dem" = "Ind,near dem",

"Democrat, weak" = "Not str democrat",

"Democrat, strong" = "Strong democrat"

)

) |>

count(partyid)

```

:::

::: {.column width="40%"}

```{r}

#| echo: false

gss_cat |>

mutate(

partyid = fct_recode(partyid,

"Republican, strong" = "Strong republican",

"Republican, weak" = "Not str republican",

"Independent, near rep" = "Ind,near rep",

"Independent, near dem" = "Ind,near dem",

"Democrat, weak" = "Not str democrat",

"Democrat, strong" = "Strong democrat"

)

) |>

count(partyid)

```

:::

::::

. . .

Some features of `fct_recode()`:

::: {.incremental}

* Will leave the levels that aren’t explicitly mentioned, as is.

* Will warn you if you accidentally refer to a level that doesn’t exist.

* You can combine groups by assigning multiple old levels to the same new level.

:::

## `fct_collapse()`

A useful variant of `fct_recode()` is `fct_collapse()` which will allow you to collapse a lot of levels at once.

. . .

```{r}

gss_cat |>

mutate(

partyid = fct_collapse(partyid,

"other" = c("No answer", "Don't know", "Other party"),

"rep" = c("Strong republican", "Not str republican"),

"ind" = c("Ind,near rep", "Independent", "Ind,near dem"),

"dem" = c("Not str democrat", "Strong democrat")

)

) |>

count(partyid)

```

## `fct_lump_*`

Sometimes you'll have several levels of a variable that have a small enough `N` to warrant grouping them together into an `other` category. The family of `fct_lump_*` functions are designed to help with this.

. . .

```{r}

gss_cat |>

mutate(relig = fct_lump_n(relig, n = 10)) |> # <1>

count(relig, sort = TRUE)

```

1. Other functions include: `fct_lump_min()`, `fct_lump_prop()`, `fct_lump_lowfreq()`. Read more about them [here](https://forcats.tidyverse.org/reference/fct_lump.html).

## Ordered Factors

So far we've mostly been discussing how to code nominal variables, or categorical variables that have no inherent ordering.

. . .

If you want to specify that your factor has a strict order you can classify it as a ordered factor.

```{r}

ordered(c("a", "b", "c")) # <1>

```

1. Ordered factors imply a strict ordering and equal distance between levels: the first level is “less than” the second level by the same amount that the second level is “less than” the third level, and so on.

. . .

In practice there are only two ways in which ordered factors are different than factors:

:::{.incremental}

1) `scale_color_viridis()`/`scale_fill_viridis()` will be used automatically when mapping an ordered factor in `ggplot2` because it implies an ordered ranking

2) If you use an ordered function in a linear model, it will use “polygonal contrasts”. You can learn more about what this means [here](https://debruine.github.io/faux/articles/contrasts.html).

:::

# Lab{.section-title background-color="#99a486"}

## Importing & Tidying Data

- [Go to the [Lecture 5 Homepage](https://vsass.github.io/CSSS508/Lectures/Lecture5/CSSS508_Lecture5_index.html) and click on the link `Religion & Income` under the Data section.]{.semi-transparent}

- [Click *File* > *Save* to download this data to the same folder where your source document^[`R` Script or `.qmd`] will be saved for this lab.]{.semi-transparent}

- [Read in your data using the appropriate function from `readr`.]{.semi-transparent}

- [Pivot your data to make it tidy^[There should be 3 columns in your final dataset].]{.semi-transparent}

- Turn two of the variables into factors^[Hint: Which two variables are categorical?].

# Wrangling Date/

Date-Time Data {.section-title background-color="#99a486"}

# {data-menu-title=`lubridate` background-image="images/lubridate.png" background-size="contain" background-position="center" .section-title background-color="#1e4655"}

## Date and Date-Time

While they may look like character strings, Dates, Date-Times and Times^[The `hms` package can be used to deal with times but they're not very common.] are each separate classes of data.

:::: {.columns}

::: {.column width="33%"}

::: {.fragment}

**Data Type**

* Date

* Date

* Date-Time

* Date-Time

* Time

:::

:::

::: {.column width="34%"}

::: {.fragment}

**Package**

* `base`

* `lubridate`

* `base`

* `lubridate`

* `hms`

:::

:::

::: {.column width="33%"}

::: {.fragment}

**Reference in `R`**

* `POSIXct`

* `date`

* `POSIXlt`

* `dttm`

* `time`

:::

:::

::::

. . .

Dates and times are challenging data types because there are physical properties but also additional geopolitical definitions that don't always neatly align with physical reality.

## Creating Dates/Date-Times {{< fa scroll >}} {.scrollable}

::: {.panel-tabset}

### On Import

If your data is in `ISO8601` date or date-time format^[The international standard for writing dates.] `readr` will automatically recognize it and read it in as a date/date-time.

::: {.fragment}

If you're reading in a different date/date-time format you can use `col_types` plus `col_date()` or `col_datetime()` along with a date-time format.

:::

::: {.fragment}

::: {.custom-small}

| Type | Code | Meaning | Example |

|-------|-------|--------------------------------|-----------------|

| Year | `%Y` | 4 digit year | 2021 |

| | `%y` | 2 digit year | 21 |

| Month | `%m` | Number | 2 |

| | `%b` | Abbreviated name | Feb |

| | `%B`^[If you're using either `%b` or `%B` and working with non-English dates, you’ll also need to provide a locale(). See [these functions](https://readr.tidyverse.org/reference/date_names.html) for more information.] | Full name | February |

| Day | `%d` | Two digits | 02 |

| | `%e` | One or two digits | 2 |

| Time | `%H` | 24-hour hour | 13 |

| | `%I` | 12-hour hour | 1 |

| | `%p` | AM/PM | pm |

| | `%M` | Minutes | 35 |

| | `%S` | Seconds | 45 |

| | `%OS` | Seconds with decimal component | 45.35 |

| | `%Z` | Time zone name | America/Chicago |

| | `%z` | Offset from UTC | +0800 |

| Other | `%.` | Skip one non-digit | : |

| | `%*` | Skip any number of non-digits | |

: All date formats understood by readr^[Note that no matter how you specify the date format, it’s always displayed the same way once you get it into R: `%Y-%m-%d`]

:::

:::

::: {.fragment}

```{r}

csv <- "

date

05/22/23

"

read_csv(csv, col_types = cols(date = col_date("%m/%d/%y")))

```

:::

### String

You can also use lubridate's helper functions to specify a date format automatically.

```{r}

ymd("2017-01-31")

mdy("January 31st, 2017")

dmy("31-Jan-2017")

```

::: {.fragment}

If you need to specify a date-time you can use these helper functions:

```{r}

ymd_hms("2017-01-31 20:11:59")

mdy_hm("01/31/2017 08:01")

```

:::

::: {.fragment}

If you provide a date object with a timezone it'll automatically be converted to a date-time object.

```{r}

ymd("2017-01-31", tz = "UTC")

```

:::

### Individual Components

If you have time elements in separate variables, like in the `flights` dataset...

```{r}

#| output-location: fragment

library(nycflights13)

flights |>

select(year, month, day, hour, minute)

```

::: {.fragment}

...you can use `make_date` or `make_datetime` to create your date/time object.

```{r}

#| output-location: fragment

flights2 <- flights |>

select(year, month, day, hour, minute) |>

mutate(departure = make_datetime(year, month, day, hour, minute))

flights2

```

:::

::: {.fragment}

Note that when you use date-times in a numeric context (like in a histogram), 1 means 1 second, so a `binwidth` of 86400 means one day. For dates, 1 means 1 day.

```{r}

#| fig-align: center

#| fig-width: 12

#| fig-height: 6

ggplot(flights2, aes(x = departure)) +

geom_freqpoly(binwidth = 86400)

```

:::

```{r}

#| eval: false

#| echo: false

`Weeks at #1` = sum(rank == 1)

billboard_2000 |>

mutate(date = if_else(week == 1,

date_entered,

date_entered %m+% weeks(x = week))) |>

mutate(top10_weeks = sum(rank <= 10),

.by = track) |>

filter(top10_weeks > 0) |>

ggplot(aes(x = date, y = rank, group = track, color = top10_weeks)) +

geom_point() +

geom_line() +

scale_color_manual(palette = "Viridis")

billboard_2000 |> filter(track == "Try Again") |>

mutate(date = if_else(week == 1,

date_entered,

date_entered %m+% weeks(x = week))) |>

ggplot(aes(x = date, y = rank, group = track)) +

geom_point() +

geom_line()

```

### Existing Object

`as_datetime()` and `as_date()` are used to coerce existing date/time objects into their counterpart.

```{r}

as_datetime(today())

as_date(now())

```

::: {.fragment}

While `ISO8601` format is read in as a string of human-readable date/times, another common date/time format, `Unix` time, is represented by the number of seconds that have elapsed since 1 January 1970 at 0:00:00 UTC.

:::

::: {.fragment}

If the offset is in seconds you'll want to use `as_datetime()` and if it's in days use `as_date()` for the conversion.

```{r}

as_datetime(1)

as_datetime(5057)

as_date(1)

as_date(5057)

```

:::

:::

## Extracting Time Components

We've discussed how to make a date/time object from individual components using `make_date()` and `make_datetime()` but what if you need to extract an element from a date/time?

. . .

There are a number of accessor functions that allow you to do just that.

```{r}

#| output-location: fragment

datetime <- ymd_hms("2020-01-30 12:30:45")

year(datetime)

month(datetime) # <1>

mday(datetime) # <2>

yday(datetime) # <3>

wday(datetime) # <4>

hour(datetime)

minute(datetime)

second(datetime)

```

1. You can set `label = TRUE` to return the abbreviated name and `abbr = FALSE` to return the full name (i.e. `month(datetime, label = TRUE)` returns **`r month(datetime, label = TRUE)`** and `month(datetime, label = TRUE, abbr = FALSE)` returns **`r month(datetime, label = TRUE, abbr = FALSE)`**)

2. Day of the month.

3. Day of the year.

4. Day of the week. You can set `label = TRUE` to return the abbreviated name and `abbr = FALSE` to return the full name (i.e. `wday(datetime, label = TRUE)` returns **`r wday(datetime, label = TRUE)`** and `wday(datetime, label = TRUE, abbr = FALSE)` returns **`r wday(datetime, label = TRUE, abbr = FALSE)`**)

## Changing Date/Times

While less common, you may need to recode a date/time variable which you can also do with these accessor functions.

. . .

```{r}

datetime <- ymd_hms("2020-01-30 08:05:35")

year(datetime) <- 2030

hour(datetime) <- hour(datetime) + 1

datetime

```

. . .

An alternative^[And more efficient when needing to specify multiple aspects of a date/time variable.] way to do this is by using `update()`:

. . .

```{r}

update(datetime, year = 2030, month = 2, mday = 2, hour = 2)

```

. . .

A nice feature of this function is that if values are too big for the unit in question, they will automatically roll over:

. . .

```{r}

update(ymd("2023-02-01"), mday = 30)

```

## Rounding Dates

Alternatively, you can round your date/time objects to a nearby unit with these three functions: `floor_date()`, `round_date()`, and `ceiling_date()`.

. . .

```{r}

#| output-location: fragment

datetime <- ymd_hms("2020-01-30 08:05:35")

floor_date(datetime, unit = "week") # <1>

round_date(datetime, unit = "week", week_start = 1) # <1>

ceiling_date(datetime, unit = "hour") # <1>

```

1. These functions take 3 arguments: a date/time vector, a unit of time to round by (valid base units include `second`, `minute`, `hour`, `day`, `week`, `month`, `bimonth`, `quarter`, `season`, `halfyear` and `year`), and the day of the week that the week starts (default is 7, or Sunday).

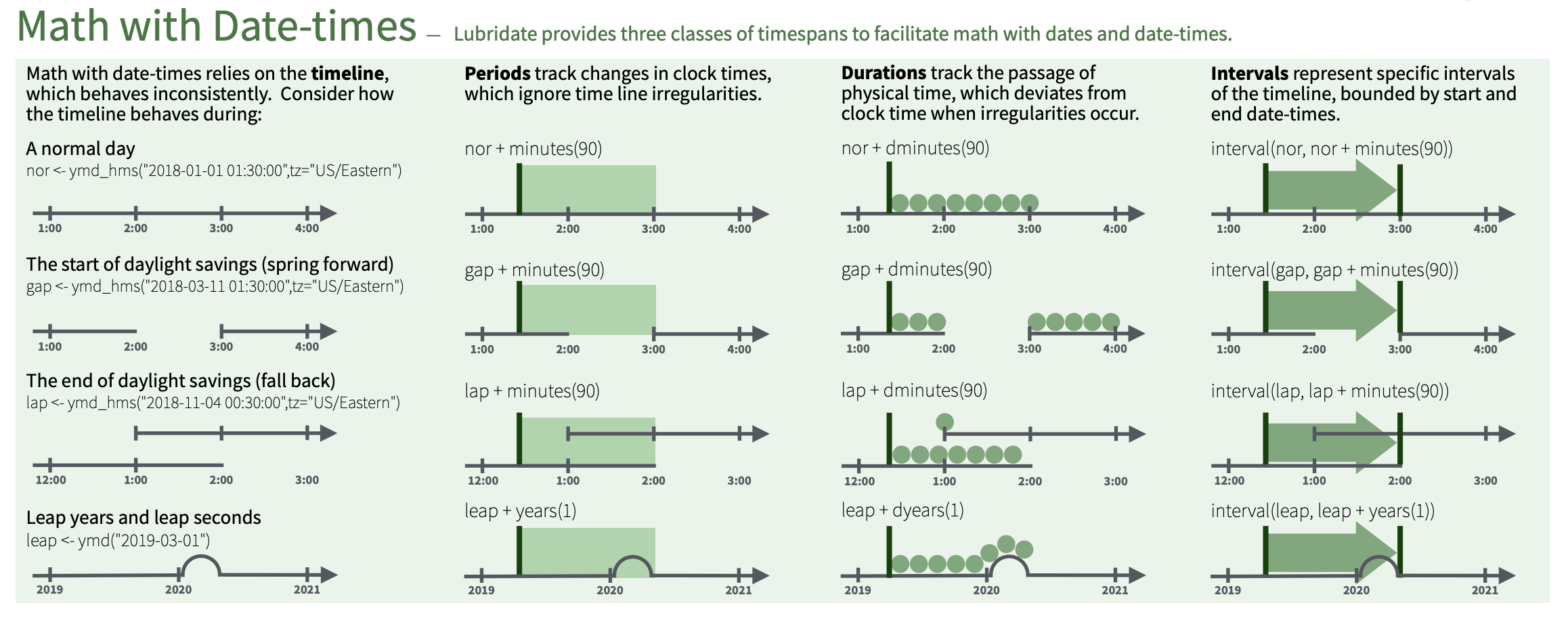

## Spans of time

There are three different timespan classes in `lubridate`, which reflect the complexity of date/time data.

. . .

{fig-align="center"}

## Durations

Durations represent precise physical time. When you subtract two dates, you'll get a `difftime` object.

. . .

```{r}

s_age <- today() - ymd("2023-05-28")

s_age # <1>

as.duration(s_age) # <2>

```

1. `difftime` objects record time spans in seconds, minutes, hours, days, or weeks. This is fairly ambiguous so `lubridate` provides a more consistent alternative: the `duration`, which always uses seconds.

2. Larger units are created by converting minutes, hours, days, weeks, and years to seconds.

. . .

There are a variety of constructors to create durations:

```{r}

dseconds(15)

dminutes(10)

dhours(c(12, 24))

ddays(0:5)

dweeks(3)

dyears(1) # <1>

```

1. Aggregating to larger time units is more problematic. A year uses the “average” number of days in a year, i.e. 365.25. There’s no way to convert a month to a duration, because there’s just too much variation.

## Math with Durations

You can add and multiply durations:

```{r}

2 * dyears(1)

dyears(1) + dweeks(12) + dhours(15)

```

. . .

\

Sometimes you'll get an unexpected results though:

```{r}

one_am <- ymd_hms("2026-03-08 01:00:00", tz = "America/New_York")

one_am

one_am + ddays(1) # <1>

```

1. Daylight Savings Time is a human construction so March 8th only has 23 hours because it’s when DST starts. If we add a full days worth of seconds we end up with a different time and a different time zone.

. . .

\

For this reason, `lubridate` also has `periods`.

## Periods

Periods represent "human" times like days and months and therefore do not have a fixed length in seconds.

. . .

```{r}

one_am

one_am + days(1)

```

. . .

Periods also have their own constructor functions:

. . .

```{r}

hours(c(12, 24))

days(7)

months(1:6)

```

## Math with Periods

We can add and multiply periods:

```{r}

10 * (months(6) + days(1))

days(50) + hours(25) + minutes(2)

```

. . .

And also add them to dates.

```{r}

# A leap year

ymd("2024-01-01") + dyears(1)

ymd("2024-01-01") + years(1)

# Daylight saving time

one_am + ddays(1)

one_am + days(1) # <1>

```

1. Periods are more likely than durations to do what you expect.

## Intervals

Intervals represent the length of a time span in human units. You can think of it as a duration with a starting point.

. . .

Imagine we wanted to know how many days are in a year?

```{r}

years(1) / days(1) # <1>

dyears(1) / ddays(365) # <2>

```

1. Since this value changed depending on the year in question, lubridate gives an estimate (or the average) for the number of days in *any* given year.

2. A similar thing happens for durations.

. . .

To be more accurate we need to give the specific range of time in questions.

. . .

```{r}

y2023 <- ymd("2023-01-01") %--% ymd("2024-01-01") # <3>

y2024 <- ymd("2024-01-01") %--% ymd("2025-01-01")

y2023

y2024

y2023 / days(1) # <4>

y2024 / days(1)

```

3. You can create an interval by writing `start %--% end`.

4. Now when we do this math we get the exact number of "human" days within the span of dates we specified.

## Time zones

[Time zones are a very complicated topic because different places have different rules which can, or already have, change(d) over time! To really clarify things `R` uses the international standard IANA time zones which use a consistent naming scheme: `{area}/{location}`.]{style="font-size: 85%;"}

. . .

```{r}

Sys.timezone() # <1>

```

1. Use `Sys.timezone()` to find out which timezone `R` thinks you're in.

```{r}

OlsonNames() # <2>

```

2. See the complete list of all time zone names with `OlsonNames()`

## Changing Time Zones

There are two ways you may want to change the time zone:

. . .

#### **Keep instant in time, change display**

```{r}

x <- ymd_hms("2024-06-01 12:00:00", tz = "America/New_York")

x

with_tz(x, tzone = "Australia/Lord_Howe") # <1>

```

1. The `+1030` offset is the difference relative to `UTC` (functionally similar to Greenwich Mean Time) which is `2024-06-01 16:00:00 UTC` in this example.

#### **Change underlying instant in time**

```{r}

y <- ymd_hms("2024-06-01 9:00:00", tz = "America/Los_Angeles")

y

force_tz(y, tzone = "Australia/Lord_Howe") # <2>

```

2. In this case the time zone was labelled incorrectly so by forcing the correct time zone we changed the underlying instant in time.

## Default Time Zones

[Time zones in `R` only control printing of date/time objects. Unless otherwise specified, lubridate always uses UTC^[UTC (Coordinated Universal Time) is the standard time zone used by the scientific community and is roughly equivalent to GMT (Greenwich Mean Time). It does not have DST, which makes a convenient representation for computation.]. ]{style="font-size: 80%;"}

. . .

[Operations that combine date-times, like `c()`, will often drop the time zone. In that case, the date-times will display in the time zone of the first element:]{style="font-size: 80%;"}

. . .

```{r}

a <- ymd_hms("2024-06-01 14:00:00", tz = "Asia/Gaza")

a # <1>

```

1. EEST = Eastern European Summer Time

```{r}

b <- ymd_hms("2024-06-01 14:00:00", tz = "Cuba")

b # <2>

```

2. CDT = Central Daylight Time

```{r}

c <- ymd_hms("2024-06-01 14:00:00", tz = "Africa/Kinshasa")

c # <3>

```

3. WAT = West Africa Time

```{r}

c(a, b, c)

```

# Lab{.section-title background-color="#99a486"}

## Importing & Tidying Data

- [Go to the [Lecture 5 Homepage](https://vsass.github.io/CSSS508/Lectures/Lecture5/CSSS508_Lecture5_index.html) and click on the link `Religion & Income` under the Data section.]{.semi-transparent}

- [Click *File* > *Save* to download this data to the same folder where your source document^[`R` Script or `.qmd`] will be saved for this lab.]{.semi-transparent}

- [Read in your data using the appropriate function from `readr`.]{.semi-transparent}

- [Pivot your data to make it tidy^[There should be 3 columns in your final dataset].]{.semi-transparent}

- [Turn two of the variables into factors^[Hint: Which two variables are categorical?].]{.semi-transparent}

- Excluding the income category `Don't know/refused`, which religion-income combination has the largest frequency?^[You can determine this by *summarizing* the data **or** creating a *visualization* of the data.]

## Answers

Click *File* > *Save* to download this data to the same folder where your source document^[`R` Script or `.qmd`] will be saved for this lab.

. . .

Read in your data using the appropriate function from `readr`.

```{r}

#| output-location: fragment

religion_income <- read_csv(file = "data/religion-income.csv",

col_types = c("f", rep("i", 10))) # <1>

glimpse(religion_income)

```

1. If you already know the data type for your variables you can explicitly read them in as such (i.e. in this case we'd want religion to be a factor since it is a categorical variable.)

## Answers {auto-animate="true"}

Pivot your data to make it tidy^[There should be 3 columns in your final dataset].

```{r}

#| output-location: fragment

religion_income_tidy <- religion_income |>

pivot_longer(cols = !religion,

names_to = "income",

values_to = "frequency")

religion_income_tidy

```

## Answers {auto-animate="true"}

Turn two of the variables into factors^[Hint: Which two variables are categorical?].

```{r}

#| output-location: fragment

religion_income_tidy <- religion_income |>

pivot_longer(cols = !religion,

names_to = "income",

values_to = "frequency") |>

mutate(income = fct(income,

levels = c("Don't know/refused", "<$10k", "$10-20k",

"$20-30k", "$30-40k", "$40-50k", "$50-75k",

"$75-100k", "$100-150k", ">150k")))

```

## Answers {auto-animate="true"}

Turn two of the variables into factors^[Hint: Which two variables are categorical?].

```{r}

#| eval: false

#| code-line-numbers: "|9"

religion_income_tidy <- religion_income |>

pivot_longer(cols = !religion,

names_to = "income",

values_to = "frequency") |>

mutate(income = fct(income,

levels = c("Don't know/refused", "<$10k", "$10-20k",

"$20-30k", "$30-40k", "$40-50k", "$50-75k",

"$75-100k", "$100-150k", ">150k")),

religion = fct(religion)) # <1>

```

1. If we hadn't initially read in religion as a factor, we'd need to recode it as one during this step.

## Answers

```{r}

#| output-location: fragment

religion_income_tidy

```

## Answers

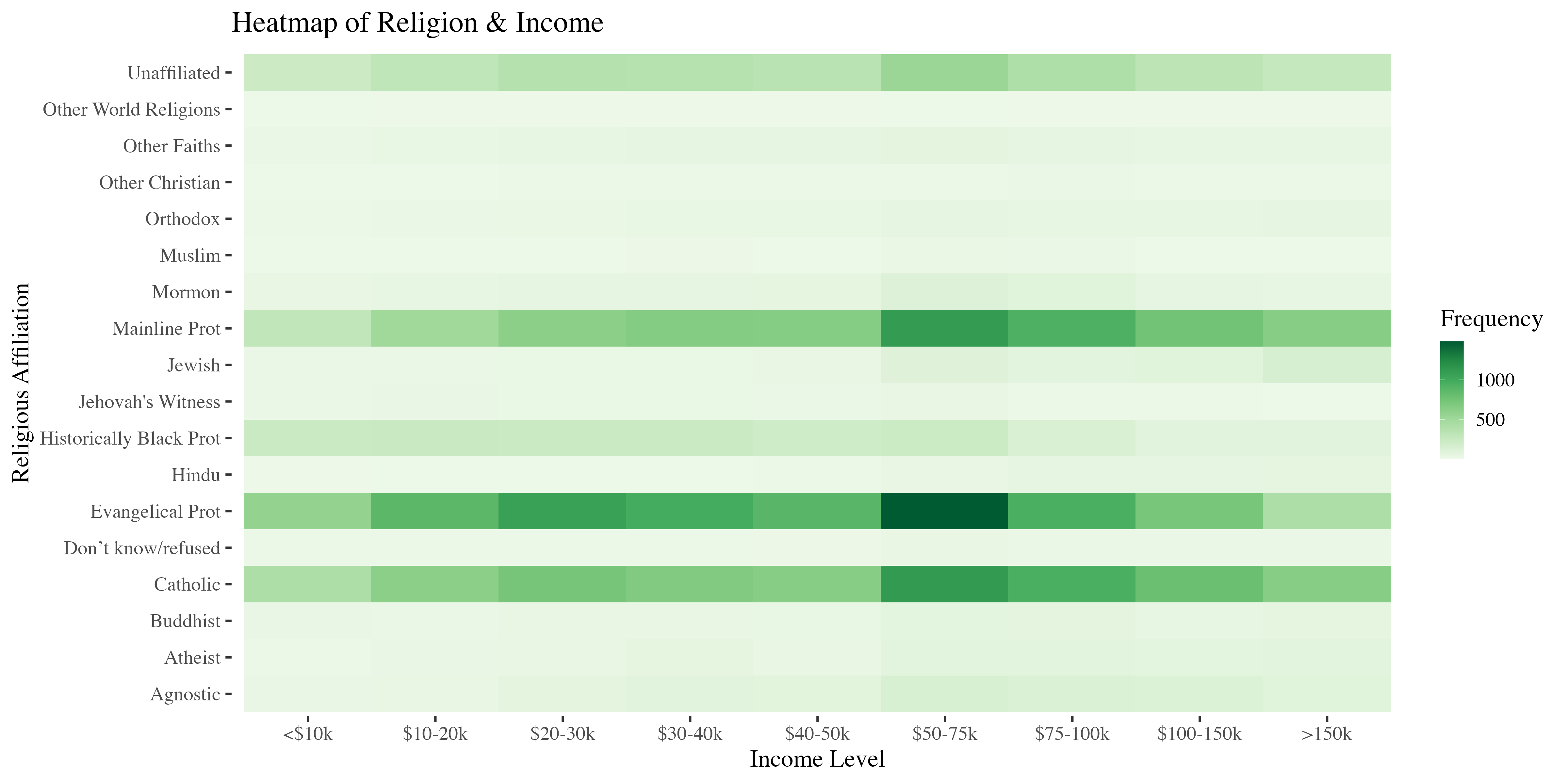

[Excluding the income category `Don't know/refused`, which religion-income combination has the largest frequency?^[You can determine this by *summarizing* the data **or** creating a *visualization* of the data.] ]{style="font-size: 85%;"}

. . .

#### Summarizing the data

```{r}

religion_income_tidy |>

filter(income != "Don't know/refused") |>

slice_max(frequency)

```

## Answers {{< fa scroll >}} {.scrollable}

[Excluding the income category `Don't know/refused`, which religion-income combination has the largest frequency?^[You can determine this by *summarizing* the data **or** creating a *visualization* of the data.] ]{style="font-size: 85%;"}

. . .

#### Data visualization

```{r}

#| eval: false

#| fig-align: center

#| fig-width: 20

#| fig-height: 15

#| code-fold: true

library(ggthemes)

heatmap <- religion_income_tidy |>

filter(income != "Don't know/refused") |>

ggplot(aes(x = income, y = religion, fill = frequency)) +

geom_raster() +

scale_fill_distiller("Frequency", palette = "Greens", direction = 1) +

labs(title = "Heatmap of Religion & Income",

x = "Income Level",

y = "Religious Affiliation") +

theme_tufte(base_size = 18)

```

```{r}

#| echo: false

#| eval: false

ggsave(plot = heatmap, filename = "images/heatmap.png", width = 16, height = 8, units = "in")

```

{fig-align="center" width=150% height=150%}

# Homework{.section-title background-color="#1e4655"}

## {data-menu-title="Homework 5" background-iframe="https://vsass.github.io/CSSS508/Homework/HW5/homework5.html" background-interactive=TRUE}