Chapter 2 (R)e-Introduction to statistics

The previous material served to get us started in R and to get a quick review of same basic graphical and descriptive statistics. Now we will begin to engage some new material and exploit the power of R to do statistical inference. Because inference is one of the hardest topics to master in statistics, we will also review some basic terminology that is required to move forward in learning more sophisticated statistical methods. To keep this “review” as short as possible, we will not consider every situation you learned in introductory statistics and instead focus exclusively on the situation where we have a quantitative response variable measured on two groups, adding a new graphic called a “pirate-plot” to help us see the differences in the observations in the groups.

2.1 Histograms, boxplots, and density curves

Part of learning statistics is learning to correctly use the terminology, some of which is used colloquially differently than it is used in formal statistical settings. The most commonly “misused” statistical term is data. In statistical parlance, we want to note the plurality of data. Specifically, datum is a single measurement, possibly on multiple random variables, and so it is appropriate to say that “a datum is…”. Once we move to discussing data, we are now referring to more than one observation, again on one, or possibly more than one, random variable, and so we need to use “data are…” when talking about our observations. We want to distinguish our use of the term “data” from its more colloquial11 usage that often involves treating it as singular. In a statistical setting “data” refers to measurements of our cases or units. When we summarize the results of a study (say providing the mean and SD), that information is not “data”. We used our data to generate that information. Sometimes we also use the term “data set” to refer to all our observations and this is a singular term to refer to the group of observations and this makes it really easy to make mistakes on the usage of “data”12.

It is also really important to note that variables have to vary – if you measure the level of education of your subjects but all are high school graduates, then you do not have a “variable”. You may not know if you have real variability in a “variable” until you explore the results you obtained.

The last, but probably most important, aspect of data is the context of the measurement. The “who, what, when, and where” of the collection of the observations is critical to the sort of conclusions we can make based on the results. The information on the study design provides information required to assess the scope of inference of the study. Generally, remember to think about the research questions the researchers were trying to answer and whether their study actually would answer those questions. There are no formulas to help us sort some of these things out, just critical thinking about the context of the measurements.

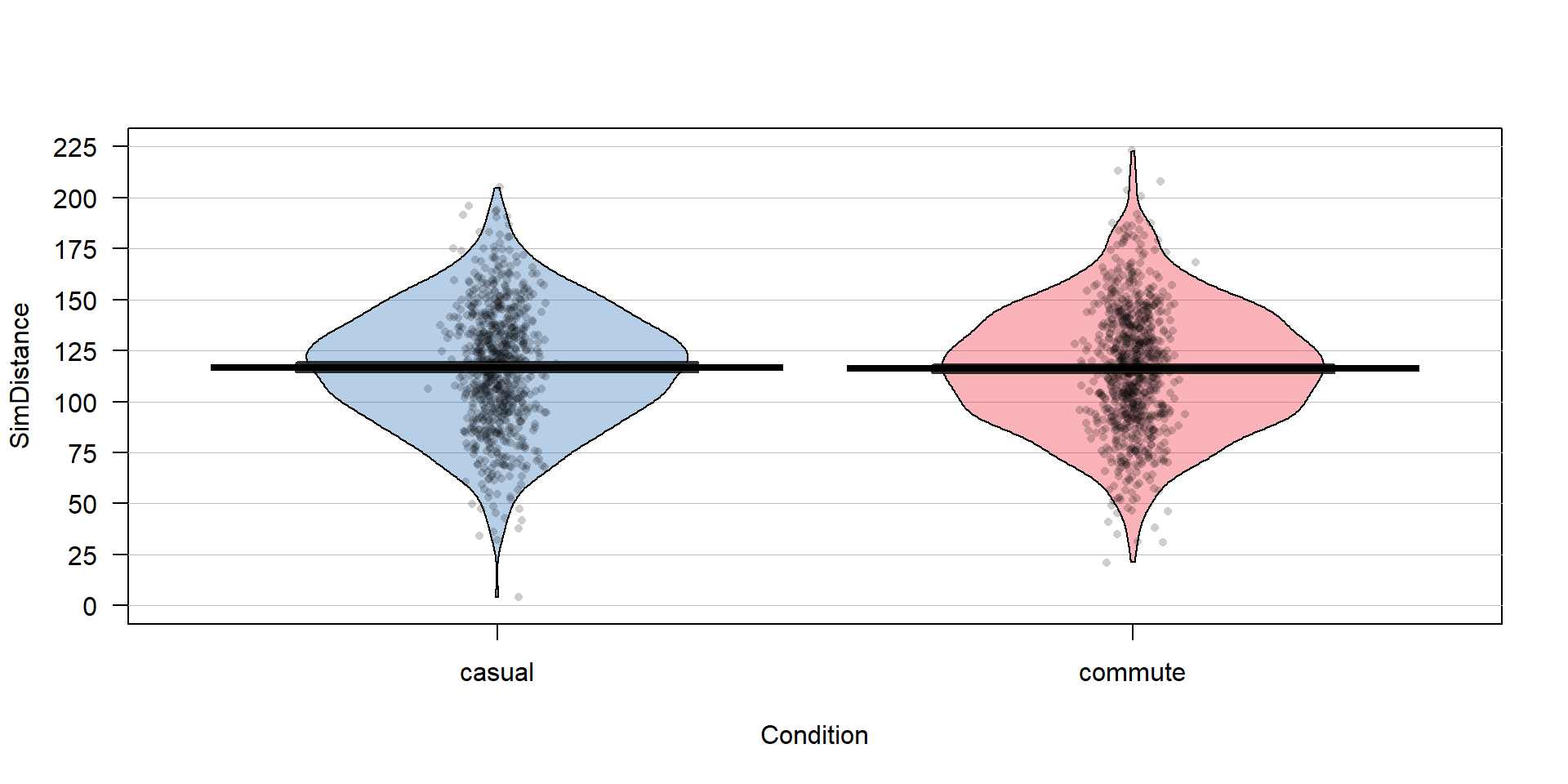

To make this concrete, consider the data collected from a study (Walker, Garrard, and Jowitt 2014) to investigate whether clothing worn by a bicyclist might impact the passing distance of cars. One of the authors wore seven different outfits (outfit for the day was chosen randomly by shuffling seven playing cards) on his regular 26 km commute near London in the United Kingdom. Using a specially instrumented bicycle, they measured how close the vehicles passed to the widest point on the handlebars. The seven outfits (“conditions”) that you can view at https://www.sciencedirect.com/science/article/pii/S0001457513004636 were:

COMMUTE: Plain cycling jersey and pants, reflective cycle clips, commuting helmet, and bike gloves.

CASUAL: Rugby shirt with pants tucked into socks, wool hat or baseball cap, plain gloves, and small backpack.

HIVIZ: Bright yellow reflective cycle commuting jacket, plain pants, reflective cycle clips, commuting helmet, and bike gloves.

RACER: Colorful, skin-tight, Tour de France cycle jersey with sponsor logos, Lycra bike shorts or tights, race helmet, and bike gloves.

NOVICE: Yellow reflective vest with “Novice Cyclist, Pass Slowly” and plain pants, reflective cycle clips, commuting helmet, and bike gloves.

POLICE: Yellow reflective vest with “POLICEwitness.com – Move Over – Camera Cyclist” and plain pants, reflective cycle clips, commuting helmet, and bike gloves.

POLITE: Yellow reflective vest with blue and white checked banding and the words “POLITE notice, Pass Slowly” looking similar to a police jacket and plain pants, reflective cycle clips, commuting helmet, and bike gloves.

They collected data (distance to the vehicle in cm for each car “overtake”) on between 8 and 11 rides in each outfit and between 737 and 868 “overtakings” across these rides. The outfit is a categorical predictor or explanatory variable) that has seven different levels here. The distance is the response variable and is a quantitative variable here13. Note that we do not have the information on which overtake came from which ride in the data provided or the conditions related to individual overtake observations other than the distance to the vehicle (they only included overtakings that had consistent conditions for the road and riding).

The data are posted on my website14 at http://www.math.montana.edu/courses/s217/documents/Walker2014_mod.csv if you want to download the file to a local directory and then import the data into R using “Import Dataset”. Or you can use the code in the following codechunk to directly read the data set into R using the URL.

suppressMessages(library(readr))

dd <- read_csv("http://www.math.montana.edu/courses/s217/documents/Walker2014_mod.csv")It is always good to review the data you have read by running the code and printing the tibble by typing the tibble name (here > dd) at the command prompt in the console, using the View function, (here View(dd)), to open a spreadsheet-like view, or using the head and tail functions have been show the first and last ten observations:

## # A tibble: 6 x 8

## Condition Distance Shirt Helmet Pants Gloves ReflectClips Backpack

## <chr> <dbl> <chr> <chr> <chr> <chr> <chr> <chr>

## 1 casual 132 Rugby hat plain plain no yes

## 2 casual 137 Rugby hat plain plain no yes

## 3 casual 174 Rugby hat plain plain no yes

## 4 casual 82 Rugby hat plain plain no yes

## 5 casual 106 Rugby hat plain plain no yes

## 6 casual 48 Rugby hat plain plain no yes## # A tibble: 6 x 8

## Condition Distance Shirt Helmet Pants Gloves ReflectClips Backpack

## <chr> <dbl> <chr> <chr> <chr> <chr> <chr> <chr>

## 1 racer 122 TourJersey race lycra bike yes no

## 2 racer 204 TourJersey race lycra bike yes no

## 3 racer 116 TourJersey race lycra bike yes no

## 4 racer 132 TourJersey race lycra bike yes no

## 5 racer 224 TourJersey race lycra bike yes no

## 6 racer 72 TourJersey race lycra bike yes noAnother option is to directly access specific rows and/or columns of the tibble, especially for larger data sets. In objects containing data, we can select certain rows and columns using the brackets, [..., ...], to specify the row (first element) and column (second element). For example, we can extract the datum in the fourth row and second column using dd[4,2]:

## # A tibble: 1 x 1

## Distance

## <dbl>

## 1 82This provides the distance (in cm) of a pass at 82 cm. To get all of either the rows or columns, a space is used instead of specifying a particular number. For example, the information in all the columns on the fourth observation can be obtained using dd[4, ]:

## # A tibble: 1 x 8

## Condition Distance Shirt Helmet Pants Gloves ReflectClips Backpack

## <chr> <dbl> <chr> <chr> <chr> <chr> <chr> <chr>

## 1 casual 82 Rugby hat plain plain no yesSo this was an observation from the casual condition that had a passing distance of 82 cm. The other columns describe some other specific aspects of the condition. To get a more complete sense of the data set, we can extract a suite of observations from each condition using their row numbers concatenated, c(), together, extracting all columns for two observations from each of the conditions based on their rows.

## # A tibble: 14 x 8

## Condition Distance Shirt Helmet Pants Gloves ReflectClips Backpack

## <chr> <dbl> <chr> <chr> <chr> <chr> <chr> <chr>

## 1 casual 132 Rugby hat plain plain no yes

## 2 casual 137 Rugby hat plain plain no yes

## 3 commute 70 PlainJers~ commut~ plain bike yes no

## 4 commute 151 PlainJers~ commut~ plain bike yes no

## 5 hiviz 94 Jacket commut~ plain bike yes no

## 6 hiviz 145 Jacket commut~ plain bike yes no

## 7 novice 12 Vest_Novi~ commut~ plain bike yes no

## 8 novice 122 Vest_Novi~ commut~ plain bike yes no

## 9 police 113 Vest_Poli~ commut~ plain bike yes no

## 10 police 174 Vest_Poli~ commut~ plain bike yes no

## 11 polite 156 Vest_Poli~ commut~ plain bike yes no

## 12 polite 14 Vest_Poli~ commut~ plain bike yes no

## 13 racer 104 TourJersey race lycra bike yes no

## 14 racer 141 TourJersey race lycra bike yes noNow we can see the Condition variable seems to have seven different levels, the Distance variable contains the overtake distance, and then a suite of columns that describe aspects of each outfit, such as the type of shirt or whether reflective cycling clips were used or not. We will only use the “Distance” and “Condition” variables to start with.

When working with data, we should always start with summarizing the sample size. We will use n for the number of subjects in the sample and denote the population size (if available) with N. Here, the sample size is n=5690. In this situation, we do not have a random sample from a population (these were all of the overtakes that met the criteria during the rides) so we cannot make inferences from our sample to a larger group (other rides or for other situations like different places, times, or riders). But we can assess whether there is a causal effect15: if sufficient evidence is found to conclude that there is some difference in the responses across the conditions, we can attribute those differences to the treatments applied, since the overtake events should be same otherwise due to the outfit being randomly assigned to the rides. The story of the data set – that it was collected on a particular route for a particular rider in the UK – becomes pretty important in thinking about the ramifications of any results. Are drivers in Montana or South Dakota different from drivers near London? Are the road and traffic conditions likely to be different? If so, then we should not assume that the detected differences, if detected, would also exist in some other location for a different rider. The lack of a random sample here from all the overtakes in the area (or more generally all that happen around the world) makes it impossible to assume that this set of overtakes might be like others. So there are definite limitations to the inferences in the following results. But it is still interesting to see if the outfits worn caused a difference in the mean overtake distances, even though the inferences are limited to the conditions in this individual’s commute. If this had been an observational study (suppose that the researcher could select their outfit), then we would have to avoid any of the “causal” language that we can consider here because the outfits were not randomly assigned to the rides. Without random assignment, the explanatory variable of outfit choice could be confounded with another characteristic of rides that might be related to the passing distances, such as wearing a particular outfit because of an expectation of heavy traffic or poor light conditions. Confounding is not the only reason to avoid causal statements with non-random assignment but the inability to separate the effect of other variables (measured or unmeasured) from the differences we are observing means that our inferences in these situations need to be carefully stated to avoid implying causal effects.

In order to get some summary statistics, we will rely on the R package called

mosaic (Pruim, Kaplan, and Horton 2019) as introduced previously. First (but only once),

you need to install the package, which can

be done either using the Packages tab in the lower right panel of RStudio or

using the install.packages function with quotes around the package name:

If you open a .Rmd file that contains code that incorporates packages and they are not installed, the bar at the top of the markdown document will prompt you to install those missing packages. This is the easiest way to get packages you might need installed. After making sure that any required packages are installed, use the library

function around the package name (no quotes now!) to load the package, something that

you need to do any time you want to use features of a package.

When you are loading a package, R might mention a need to install other packages. If the output says that it needs a package that is unavailable, then follow the same process noted above to install that package and then repeat trying to load the package you wanted. These are called package “dependencies” and are due to one package developer relying on functions that already exist in another package.

With tibbles, you have to declare categorical variables as “factors” to have R correctly handle the variables using the factor function. This can be a bit time repetitive but provides some utility for data wrangling in more complex situations to read in the data and then declare their type. For quantitative variables, this is not required and they are stored as numeric variables. The following code declares the categorical variables in the data set as factors and saves them back into the variables of the same names:

dd$Condition <- factor(dd$Condition)

dd$Shirt <- factor(dd$Shirt)

dd$Helmet <- factor(dd$Helmet)

dd$Pants <- factor(dd$Pants)

dd$Gloves <- factor(dd$Gloves)

dd$ReflectClips <- factor(dd$ReflectClips)

dd$Backpack <- factor(dd$Backpack) With many variables in a data set, it is often useful to get some

quick information about all of them; the summary function provides

useful information whether the variables are categorical or

quantitative and notes if any values were missing.

## Condition Distance Shirt Helmet Pants Gloves ReflectClips Backpack

## casual :779 Min. : 2.0 Jacket :737 commuter:4059 lycra: 852 bike :4911 no : 779 no :4911

## commute:857 1st Qu.: 99.0 PlainJersey:857 hat : 779 plain:4838 plain: 779 yes:4911 yes: 779

## hiviz :737 Median :117.0 Rugby :779 race : 852

## novice :807 Mean :117.1 TourJersey :852

## police :790 3rd Qu.:134.0 Vest_Novice:807

## polite :868 Max. :274.0 Vest_Police:790

## racer :852 Vest_Polite:868The output is organized by variable,

providing summary information based on the type of

variable, either counts by category for categorical variables or the 5-number summary plus the mean for the quantitative

variable Distance. If present, you would also get a count of missing values that are

called “NAs” in R. For the first variable, called Condition and that we might more explicitly name Outfit, we find counts of the

number of overtakes for each outfit: \(779\) out of \(5,690\) were when wearing the casual outfit, \(857\) for “commute”, and the other observations from the other five outfits, with the most observations when wearing the “polite” vest.

We can also see that overtake distances (variable

Distance) ranged from 2 cm to 274 cm with a median of 117 cm.

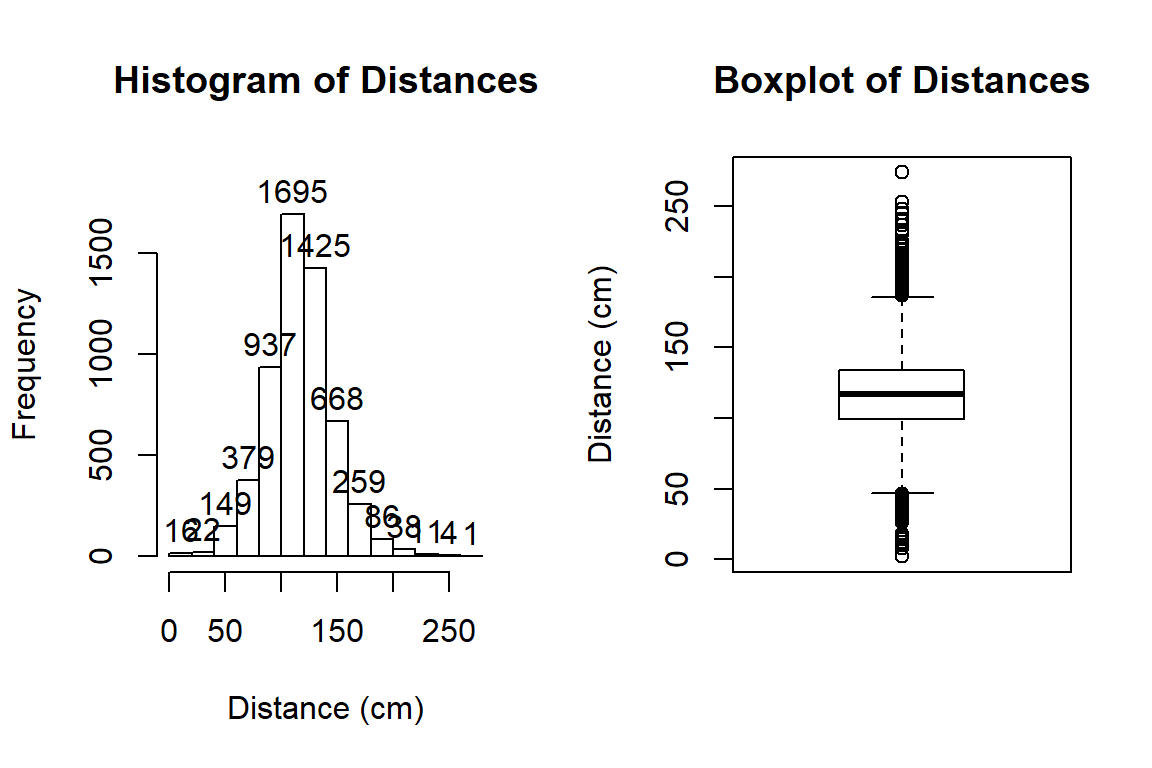

To accompany the numerical summaries, histograms and boxplots can

provide some initial information on the shape of the distribution of

the responses for the different Outfits. Figure 2.1

contains the histogram

and boxplot of Distance, ignoring any information on which outfit was being worn. The calls to the two plotting functions are

enhanced slightly to add better labels using xlab, ylab, and main.

Figure 2.1: Histogram and boxplot of passing distances in cm.

hist(dd$Distance, xlab="Distance (cm)", labels=T, main="Histogram of Distances")

boxplot(dd$Distance, ylab="Distance (cm)", main="Boxplot of Distances")The distribution appears to be relatively symmetric with many observations in both tails flagged as potential outliers. Despite being flagged as potential outliers, they seem to be part of a common distribution. In real data sets, outliers are commonly encountered and the first step is to verify that they were not errors in recording (if so, fixing or removing them is easily justified). If they cannot be easily dismissed or fixed, the next step is to study their impact on the statistical analyses performed, potentially considering reporting results with and without the influential observation(s) in the results (if there are just handful). If the analysis is unaffected by the “unusual” observations, then it matters little whether they are dropped or not. If they do affect the results, then reporting both versions of results allows the reader to judge the impacts for themselves. It is important to remember that sometimes the outliers are the most interesting part of the data set. For example, those observations that were the closest would be of great interest, whether they are outliers or not.

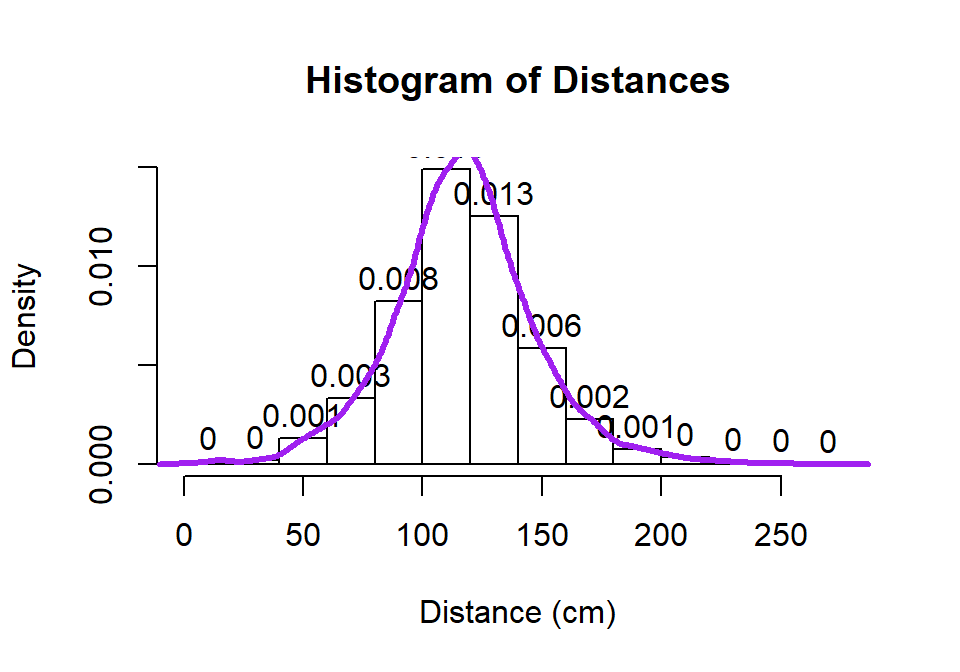

Often when statisticians think of distributions of data, we think of the smooth underlying shape that led to the data set that is being displayed in the histogram. Instead of binning up observations and making bars in the histogram, we can estimate what is called a density curve as a smooth curve that represents the observed distribution of the responses. Density curves can sometimes help us see features of the data sets more clearly.

To understand the density curve, it is useful to initially see

the histogram and density curve together. The height of the density curve is scaled

so that the total area under the curve16 is 1. To make a comparable histogram, the

y-axis needs to be scaled so that the histogram is also on the “density”

scale which makes the bar heights adjust so that the proportion of the

total data set in each bar is represented by the area in each bar

(remember that area is height times width). So the height depends on the

width of the bars and the total area across all the bars has to be 1. In the

hist function, the freq=F option does this required re-scaling to get

density-scaled histogram bars. The

density curve is added to the histogram using the R code of

lines(density()), producing the result in Figure 2.2 with

added modifications of options for lwd (line width) and col (color)

to make the plot more visually appealing. You can see how the density curve

somewhat matches the histogram bars but deals with the bumps up and down

and edges a little differently. We can pick out the relatively symmetric distribution using

either display and will rarely make both together.

hist(dd$Distance, freq=F, xlab="Distance (cm)", labels=T, main="Histogram of Distances")

lines(density(dd$Distance), lwd=3,col="purple")

Figure 2.2: Histogram and density curve of Distance responses.

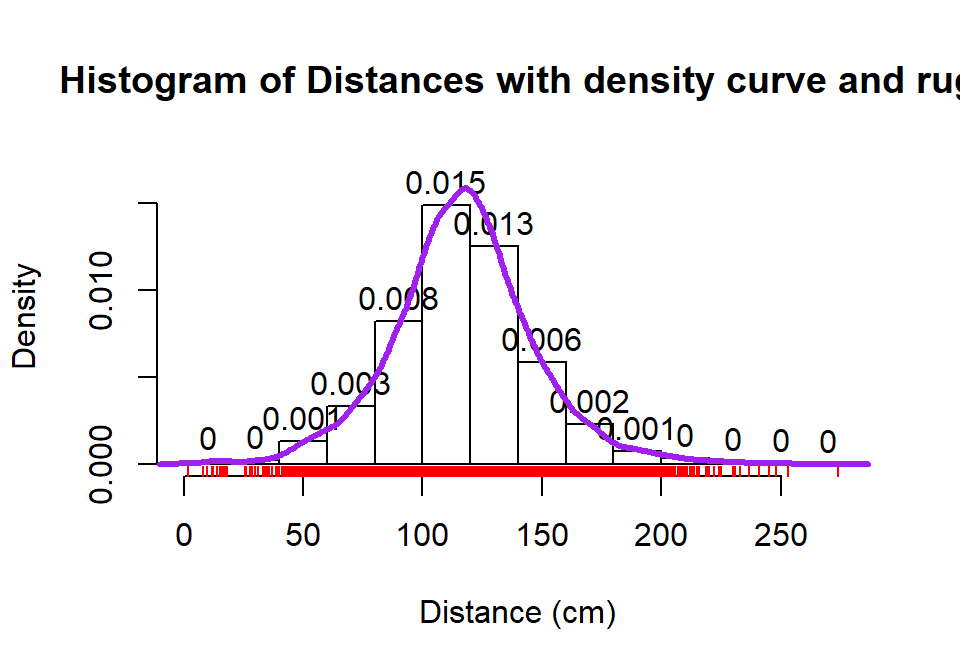

Histograms can be sensitive to the choice of the number of bars and

even the cut-offs used to define the bins for a given number of bars.

Small changes in the definition of cut-offs for the bins can have

noticeable impacts on the shapes observed but

this does not impact density curves. We are not going to tinker with the

default choices for bars in histogram, as they are reasonably selected in R, but we

can add information on the original observations being included in each bar to

better understand the choices that hist is making. In the previous

display, we can add what is called a rug to the plot, where a tick

mark is made on the x-axis for each observation. Because the responses appear to be rounded to the nearest cm, there is some discreteness in the responses and we need to use a graphical

technique called jittering to add a little noise17 to each observation so all the

observations at each distance value do not

plot as a single line. In Figure 2.3, the added tick marks

on the x-axis show the approximate locations of the original observations.

We can (barely) see how there are 2 observations at 2 cm (the noise

added generates a wider line than for an individual observation so it is possible to see that it is more than one observation there). A limitation of the

histogram arises at the center of the distribution where the bar that goes from 100 to 120 cm suggests that the mode (peak) is in this range (but it is unclear where) but the density curve suggests that the peak is closer to 120 than 100. The

density curve also shows some small bumps in the tails of the distributions tied to individual observations that are not really displayed in the histogram. Density curves are, however,

not perfect and this one shows a tiny bit of area for distances less than 0 cm which is

not possible here. When we make density curves below, we will cut off the curves at the most extreme values to avoid this issue.

hist(dd$Distance, freq=F, xlab="Distance (cm)", labels=T,

main="Histogram of Distances with density curve and rug", ylim=c(0,0.017))

lines(density(dd$Distance), lwd=3,col="purple")

set.seed(900)

rug(jitter(dd$Distance), col="red", lwd=1)

Figure 2.3: Histogram with density curve and rug plot of the jittered distance responses.

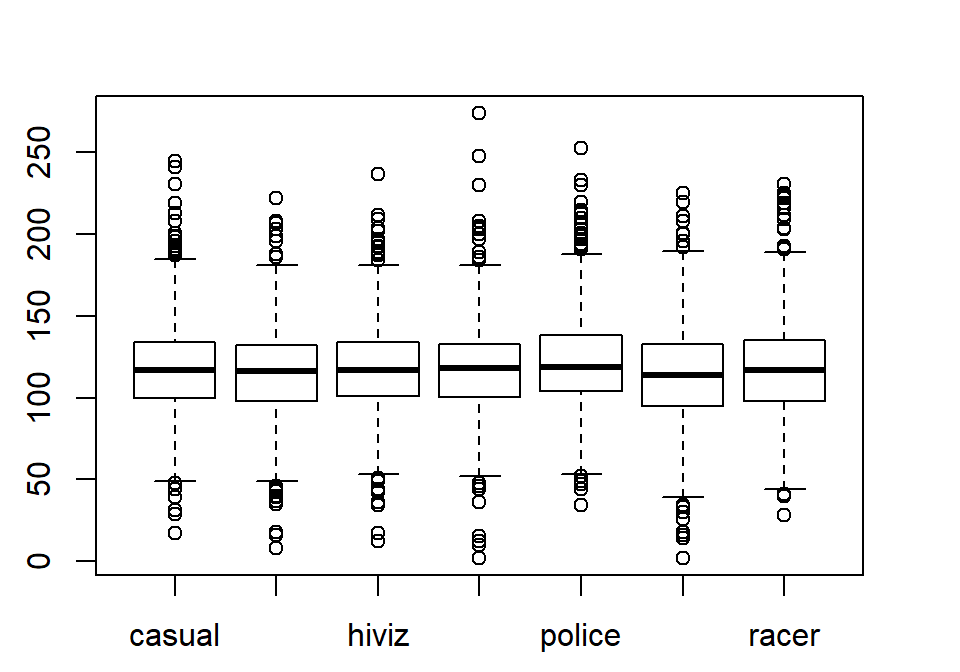

The graphical tools we’ve just discussed are going to help us move to comparing the

distribution of responses across more than one group. We will have two displays

that will help us make these comparisons. The simplest is

the side-by-side boxplot, where a boxplot is displayed for each group

of interest using the same y-axis scaling. In R, we can use its formula

notation to see if the response (Distance) differs based on the group

(Condition) by using something like Y~X or, here, Distance~Condition.

We also need to tell R where to find the variables – use the last option in the command, data=DATASETNAME , to inform R of the tibble to look in

to find the variables. In this example, data=dd. We will use

the formula and data=... options in almost every function we use

from here forward. Figure 2.4 contains the side-by-side

boxplots showing similar distributions for all the groups, with a slightly higher median in the “police” group and some outliers identified in all groups.

Figure 2.4: Side-by-side boxplot of distances based on outfits.

The “~” (which is read as the tilde symbol18, which you can find in the

upper left corner of your keyboard) notation will be used in two ways in this

material. The formula use in R employed previously declares that the

response variable here is Distance and the explanatory variable is Condition.

The other use for “~” is as shorthand for “is distributed as” and is used in

the context of \(Y \sim N(0,1)\), which translates (in statistics) to defining the

random variable Y as following a Normal distribution19

with mean 0

and standard deviation of 1. In the current situation, we could ask whether

the Distance variable seems like it may follow a normal distribution in each group, in

other words, is \(\text{Distance}\sim N(\mu,\sigma^2)\)? Since the responses are relatively symmetric, it is not clear that we have a violation of the assumption of the normality assumption for the Distance variable for any of the seven groups (more later on how we can assess this and the issues that occur when we have a violation of this assumption). Remember that

\(\mu\) and \(\sigma\) are parameters where

\(\mu\) (“mu”) is our standard symbol for the population mean

and that \(\sigma\) (“sigma”) is the symbol of the

population standard deviation.

2.2 Pirate-plots

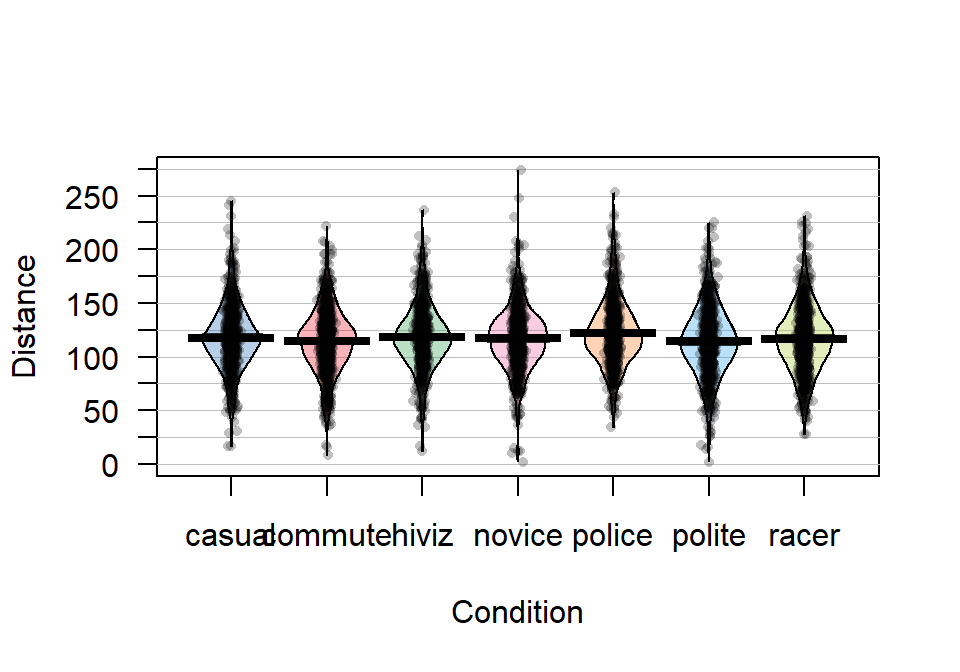

An alternative graphical display for comparing multiple groups that we will use is a display called a pirate-plot (Phillips 2017a) from the yarrr package20. Figure 2.5

shows an example of a pirate-plot that provides a side-by-side display that

contains the density curves, the original observations that generated the

density curve as jittered points (jittered both vertically and horizontally a little), the sample mean of each group (wide bar), and a box that represents the confidence interval for the true mean of that group. For each group, the density curves

are mirrored to aid in visual assessment of the shape of the distribution. This mirroring also

creates a shape that resembles the outline of a violin with skewed distributions so versions of this

display have also been called a “violin plot” or a “bean plot”. All together this plot shows us information

on the original observations, center (mean) and its confidence interval, spread, and shape of the distributions of the responses. Our inferences typically focus on the means of the groups and this plot allows

us to compare those across the groups while gaining information on the shapes

of the distributions of responses in each group.

To use the pirateplot function we need to install and then load the yarrr

package (Phillips 2017b).

The function works like the boxplot used previously

except that options

for the type of confidence interval needs to be specified with inf.method="ci" - otherwise you will get a different kind of interval than you learned in introductory statistics and we don’t want to get caught up in trying to understand the kind of interval it makes by default. There are many other options in the function that might be useful in certain situations, but that is the only one that is really needed to get started with pirate-plots.

Figure 2.5: Pirate-plot of distances by outfit group. Bold horizontal lines correspond to sample mean of each group, boxes around lines (here they are very tight to the lines for the means) are the 95% confidence intervals.

Figure 2.5 suggests that the distributions are relatively symmetric which would suggest that the means and medians are similar even though only the means are displayed in these plots. In this display, none of the observations are flagged as outliers (it is not a part of this display). It is up to the consumer of the graphic to decide if observations look to be outside of the overall pattern of the rest of the observations. By plotting the observations by groups, we can also explore the narrowest (and likely most scary) overtakes in the data set. The police and racer conditions seem to have all observations over 25 cm and the most close passes were in the novice and polite outfits, including the two 2 cm passes. By displaying the original observations, we are able to explore and identify features that aggregation and summarization in plots can sometimes obfuscate. But the pirate-plots also allow you to compare the shape of the distributions (relatively symmetric and somewhat bell-shaped), variability (they look to have relatively similar variability), and the means of the groups. Our inferences are going to focus on the means but those inferences are only valid if the distributions are either approximately normal or at least have similar shapes and spreads (more on this soon).

It appears that the mean for police is higher than the other groups but that the others are not too different. But is this difference real? We will never

know the answer to that question, but we

can assess how likely we are to have seen a result as extreme or more

extreme than our result, assuming that there is no difference in the

means of the groups. And if the observed result is

(extremely) unlikely to occur, then we can reject the hypothesis that the

groups have the same mean and conclude that there is evidence of a real

difference. After that, we will want to carefully explore how big the estimated differences in the means are - is this enough of a difference to matter to you or the subject in the study? To accompany the pirate-plot, we

need to have numerical values to compare. We can get means and standard

deviations by groups easily using the same formula notation as for the plots with the mean

and sd functions, if the mosaic package is loaded.

## casual commute hiviz novice police polite racer

## 117.6110 114.6079 118.4383 116.9405 122.1215 114.0518 116.7559## casual commute hiviz novice police polite racer

## 29.86954 29.63166 29.03384 29.03812 29.73662 31.23684 30.60059We can also use the favstats function to get those summaries and others by groups.

## Condition min Q1 median Q3 max mean sd n missing

## 1 casual 17 100.0 117 134 245 117.6110 29.86954 779 0

## 2 commute 8 98.0 116 132 222 114.6079 29.63166 857 0

## 3 hiviz 12 101.0 117 134 237 118.4383 29.03384 737 0

## 4 novice 2 100.5 118 133 274 116.9405 29.03812 807 0

## 5 police 34 104.0 119 138 253 122.1215 29.73662 790 0

## 6 polite 2 95.0 114 133 225 114.0518 31.23684 868 0

## 7 racer 28 98.0 117 135 231 116.7559 30.60059 852 0Based on these results, we can see that there is an estimated difference of over 8 cm between the smallest mean (polite at 114.05 cm) and the largest mean (police at 122.12 cm). The differences among some of the other groups are much smaller, such as between casual and commute with sample means of 117.611 and 114.608 cm, respectively. Because there are seven groups being compared in this study, we will have to wait until Chapter 3 and the One-Way ANOVA test to fully assess evidence related to some difference among the seven groups. For now, we are going to focus on comparing the mean Distance between casual and commute groups – which is a two independent sample mean situation and something you should have seen before. Remember that the “independent” sample part of this refers to observations that are independently observed for the two groups as opposed to the paired sample situation that you may have explored where one observation from the first group is related to an observation in the second group (the same person with one measurement in each group (we generically call this “repeated measures”) or the famous “twin” studies with one twin assigned to each group). This study has some potential violations of the “independent” sample situation (for example, repeated measurements made during a single ride), but those do not clearly fit into the matched pairs situation, so we will note this potential issue and proceed with exploring the method that assumes that we have independent samples, even though this is not true here. In Chapter 9, methods for more complex study designs like this one will be discussed briefly, but mostly this is beyond the scope of this material.

Here we are going to use the “simple” two independent group scenario to review some basic statistical concepts and connect two different frameworks for conducting statistical inference: randomization and parametric inference techniques. Parametric statistical methods involve making assumptions about the distribution of the responses and obtaining confidence intervals and/or p-values using a named distribution (like the \(z\) or \(t\)-distributions). Typically these results are generated using formulas and looking up areas under curves or cutoffs using a table or a computer. Randomization-based statistical methods use a computer to shuffle, sample, or simulate observations in ways that allow you to obtain distributions of possible results to find areas and cutoffs without resorting to using tables and named distributions. Randomization methods are what are called nonparametric methods that often make fewer assumptions (they are not free of assumptions!) and so can handle a larger set of problems more easily than parametric methods. When the assumptions involved in the parametric procedures are met by a data set, the randomization methods often provide very similar results to those provided by the parametric techniques. To be a more sophisticated statistical consumer, it is useful to have some knowledge of both of these techniques for performing statistical inference and the fact that they can provide similar results might deepen your understanding of both approaches.

To be able to work just with the observations from two of the conditions (casual and commute) we could remove all the other observations in a spreadsheet program and read that new data set

back into R, but it is actually pretty easy to use R to do data

management once the data set is loaded. It is also a better scientific process to do as much of your data management within R as possible so that your steps in managing the data are fully documented and reproducible. Highlighting and clicking in spreadsheet programs is a dangerous way to work and can be impossible to recreate steps that were taken from initial data set to the version that was analyzed. In R, we could identify the rows that contain the observations we want to retain and just extract those rows, but this is hard with over five thousand observations. The subset function (also an option in some functions) is the best way to be able to focus on observations that meet a particular condition, we can “subset” the data set to retain those rows. The subset function takes the data set as its first argument and then in the “subset” option, we need to define the condition we want to meet to retain those rows. Specifically, we need to define the variable we want to work with, Condition, and then request rows that meet a condition (are %in%) and the aspects that meet that condition (here by concatenating “casual” and “commute”), leading to code of:

subset(dd, Condition %in% c("casual", "commute"))We would actually want to save that new subsetted data set into a new tibble for future work, so we can use the following to save the reduced data set into ddsub:

There is also a “select” option that we could also use to just focus on certain columns in the data set and we can use that just to focus on the Condition and Distance variables using:

You will always want to check that the correct observations were dropped

either using View(ddsub) or by doing a quick summary of the

Condition variable in the new tibble.

## casual commute hiviz novice police polite racer

## 779 857 0 0 0 0 0It ends up that R remembers the other categories even though there are

0 observations in them now and that can cause us some problems. When we remove a

group of observations, we sometimes need to clean up categorical variables to

just reflect the categories that are present. The factor

function

creates categorical variables based on the levels of the variables that are

observed and is useful to run here to clean up Condition to just reflect the categories that are now present.

## casual commute

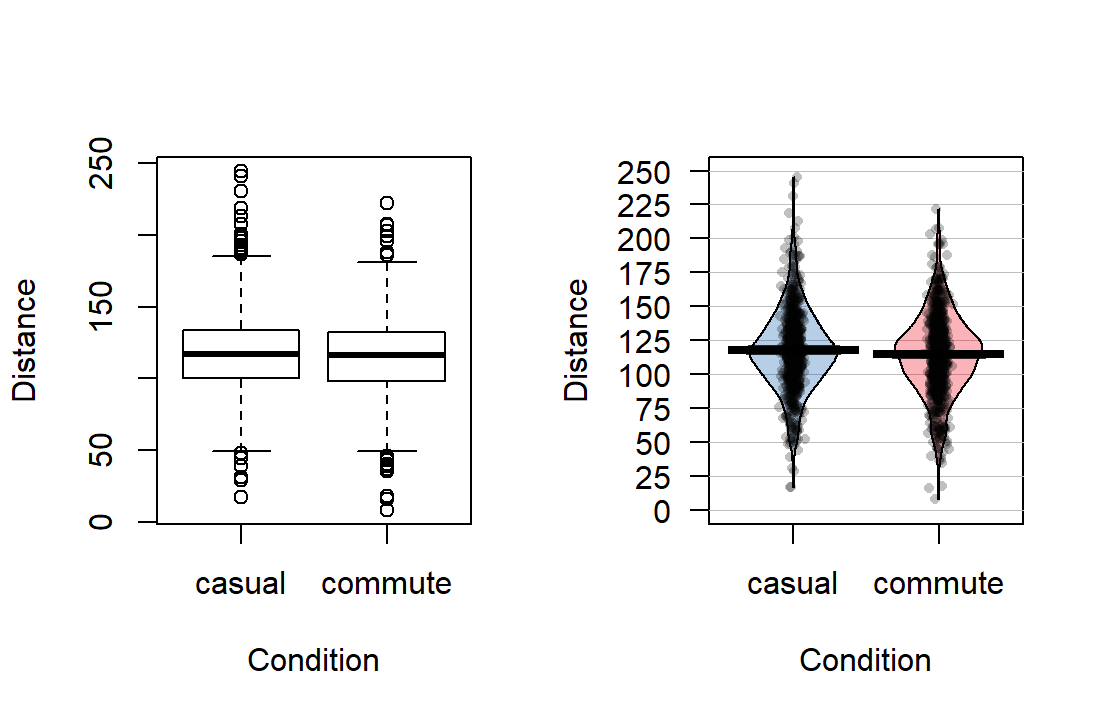

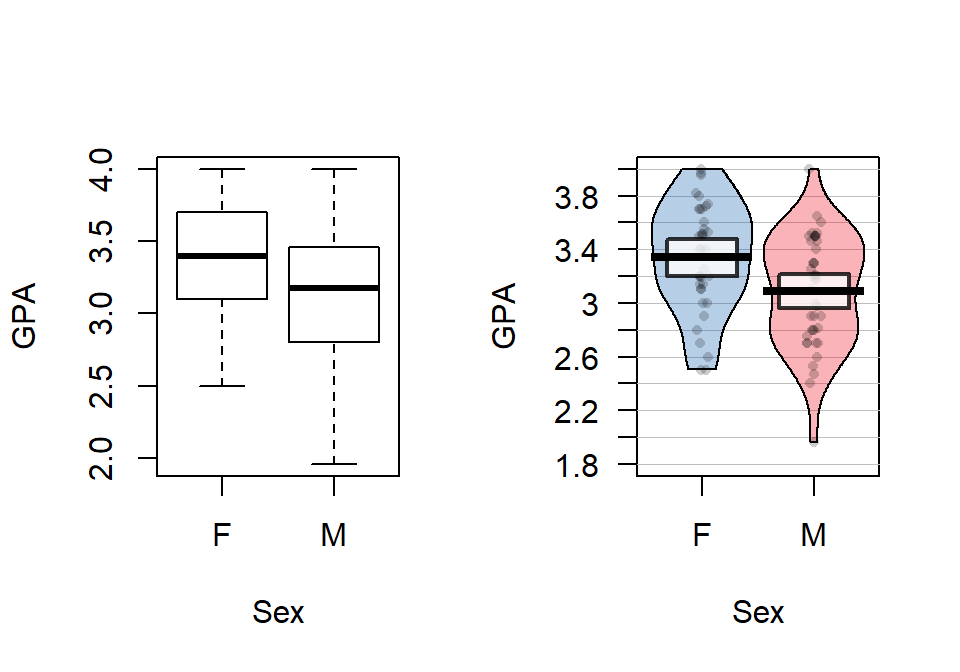

## 779 857The two categories of interest now were selected because neither looks particularly “racey” or has high visibility but could present a common choice between getting fully “geared up” for the commute or just jumping on a bike to go to work. Now if we remake the boxplots and pirate-plots, they only contain results for the two groups of interest here as seen in Figure 2.6. Note that these are available in the previous version of the plots, but now we will just focus on these two groups.

Figure 2.6: Boxplot and pirate-plot of the Distance responses on the reduced ddsub data set.

The two-sample mean techniques you learned in your previous course all

start with comparing the means the two groups. We can obtain the two

means using the mean function or directly obtain the difference

in the means using the diffmean function (both require the mosaic

package). The diffmean function provides

\(\bar{x}_\text{commute} - \bar{x}_\text{casual}\) where \(\bar{x}\)

(read as “x-bar”) is the sample mean of observations in the subscripted

group. Note that there are two directions that you could compare the

means and this function chooses to take the mean from the second group

name alphabetically and subtract the mean from the first alphabetical group

name. It is always good to check the direction of this calculation as

having a difference of \(-3.003\) cm versus \(3.003\) cm could be important.

## casual commute

## 117.6110 114.6079## diffmean

## -3.0031052.3 Models, hypotheses, and permutations for the two sample mean situation

There appears to be some evidence that the casual clothing group is getting higher average overtake distances than the commute group of observations, but we want to try to make sure that the difference is real – that there is evidence to reject the assumption that the means are the same “in the population”. First, a null hypothesis21 which defines a null model22 needs to be determined in terms of parameters (the true values in the population). The research question should help you determine the form of the hypotheses for the assumed population. In the two independent sample mean problem, the interest is in testing a null hypothesis of \(H_0: \mu_1 = \mu_2\) versus the alternative hypothesis of \(H_A: \mu_1 \ne \mu_2\), where \(\mu_1\) is the parameter for the true mean of the first group and \(\mu_2\) is the parameter for the true mean of the second group. The alternative hypothesis involves assuming a statistical model for the \(i^{th}\ (i=1,\ldots,n_j)\) response from the \(j^{th}\ (j=1,2)\) group, \(\boldsymbol{y}_{ij}\), that involves modeling it as \(y_{ij} = \mu_j + \varepsilon_{ij}\), where we assume that \(\varepsilon_{ij} \sim N(0,\sigma^2)\). For the moment, focus on the models that either assume the means are the same (null) or different (alternative), which imply:

Null Model: \(y_{ij} = \mu + \varepsilon_{ij}\) There is no difference in true means for the two groups.

Alternative Model: \(y_{ij} = \mu_j + \varepsilon_{ij}\) There is a difference in true means for the two groups.

Suppose we are considering the alternative model for the 4th observation (\(i=4\)) from the second group (\(j=2\)), then the model for this observation is \(y_{42} = \mu_2 +\varepsilon_{42}\), that defines the response as coming from the true mean for the second group plus a random error term for that observation, \(\varepsilon_{42}\). For, say, the 5th observation from the first group (\(j=1\)), the model is \(y_{51} = \mu_1 +\varepsilon_{51}\). If we were working with the null model, the mean is always the same (\(\mu\)) – the group specified does not change the mean we use for that observation, so the model for \(y_{42}\) would be \(\mu +\varepsilon_{42}\).

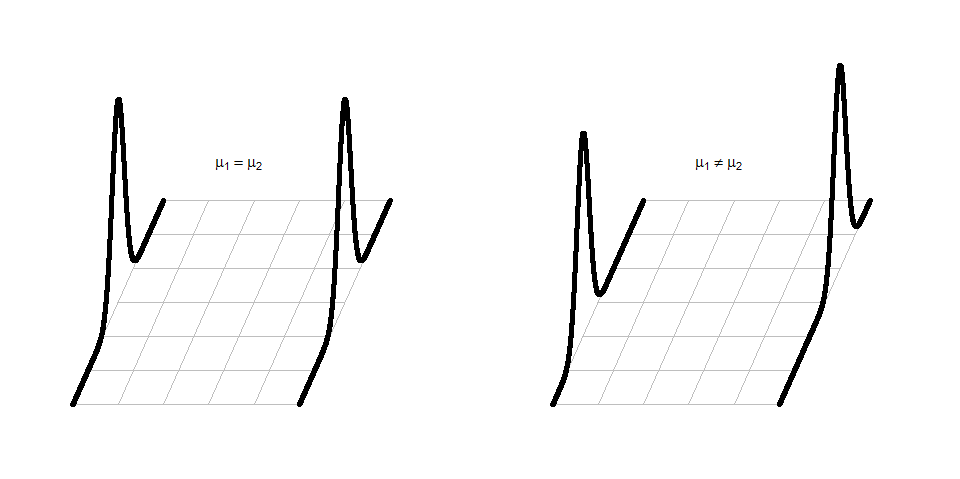

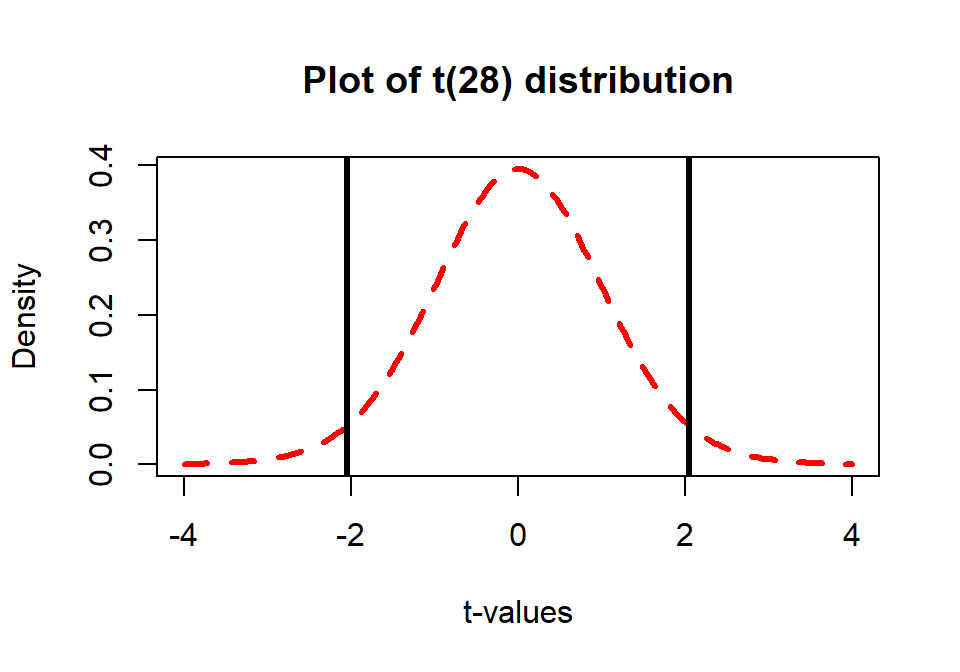

It can be helpful to think about the null and alternative models graphically. By assuming the null hypothesis is true (means are equal) and that the random errors around the mean follow a normal distribution, we assume that the truth is as displayed in the left panel of Figure 2.7 – two normal distributions with the same mean and variability. The alternative model allows the two groups to potentially have different means, such as those displayed in the right panel of Figure 2.7 where the second group has a larger mean. Note that in this scenario, we assume that the observations all came from the same distribution except that they had different means. Depending on the statistical procedure we are using, we basically are going to assume that the observations (\(y_{ij}\)) either were generated as samples from the null or alternative model. You can imagine drawing observations at random from the pictured distributions. For hypothesis testing, the null model is assumed to be true and then the unusualness of the actual result is assessed relative to that assumption. In hypothesis testing, we have to decide if we have enough evidence to reject the assumption that the null model (or hypothesis) is true. If we reject the null hypothesis, then we would conclude that the other model considered (the alternative model) is more reasonable. The researchers obviously would have hoped to encounter some sort of noticeable difference in the distances for the different outfits and have been able to find enough evidence to reject the null model where the groups “look the same”.

Figure 2.7: Illustration of the assumed situations under the null (left) and a single possibility that could occur if the alternative were true (right) and the true means were different. There are an infinite number of ways to make a plot like the right panel that satisfies the alternative hypothesis.

In statistical inference, null hypotheses (and their implied models) are set up as “straw men” with every interest in rejecting them even though we assume they are true to be able to assess the evidence \(\underline{\text{against them}}\). Consider the original study design here, the outfits were randomly assigned to the rides. If the null hypothesis were true, then we would have no difference in the population means of the groups. And this would apply if we had done a different random assignment of the outfits. So let’s try this: assume that the null hypothesis is true and randomly re-assign the treatments (outfits) to the observations that were obtained. In other words, keep the Distance results the same and shuffle the group labels randomly. The technical term for this is doing a permutation (a random shuffling of a grouping variable relative to the observed responses). If the null is true and the means in the two groups are the same, then we should be able to re-shuffle the groups to the observed Distance values and get results similar to those we actually observed. If the null is false and the means are really different in the two groups, then what we observed should differ from what we get under other random permutations. The differences between the two groups should be more noticeable in the observed data set than in (most) of the shuffled data sets. It helps to see an example of a permutation of the labels to understand what this means here.

The data set we are working with is a little on the large size, especially to explore individual observations. So for the moment we are going to work with a random sample of 30 of the \(n=1,636\) observations in ddsub, fifteen from each group, that are generated using the sample function. To do this23, we will use the sample function twice - once to sample from the subsetted commute observations (creating the s1 data set) and once to sample from the casual ones (creating s2). A new function for us, called rbind, is used to bind the rows together — much like pasting a chunk of rows below another chunk in a spreadsheet program. This operation only works if the columns all have the same names and meanings both for rbind and in a spreadsheet. Together this code creates the dsample data set that we will analyze below and compare to results from the full data set. The sample means are now 135.8 and 109.87 cm for casual and commute groups, respectively, and so the difference in the sample means has increased in magnitude to -25.93 cm (commute - casual). This difference would vary based on the different random samples from the larger data set, but for the moment pretend this was the entire data set that the researchers had collected and that we want to try to find evidence against the null hypothesis that the true means are the same in these two groups.

set.seed(9432)

s1 <- sample(subset(ddsub, Condition %in% "commute"), size=15)

s2 <- sample(subset(ddsub, Condition %in% "casual"), size=15)

dsample <- rbind(s1, s2)

mean(Distance~Condition, data=dsample)## casual commute

## 135.8000 109.8667In order to assess evidence against the null hypothesis of no difference, we want to permute the group labels versus the observations. In the mosaic package, the shuffle function allows us to easily perform

a permutation24. One permutation of the

treatment labels is provided in the PermutedCondition variable below. Note

that the Distances are held in the same place while the group labels are shuffled.

Perm1 <- with(dsample, tibble(Distance, Condition, PermutedCondition=shuffle(Condition)))

#To force the tibble to print out all rows in data set - not used often

data.frame(Perm1) ## Distance Condition PermutedCondition

## 1 168 commute commute

## 2 137 commute commute

## 3 80 commute casual

## 4 107 commute commute

## 5 104 commute casual

## 6 60 commute casual

## 7 88 commute commute

## 8 126 commute commute

## 9 115 commute casual

## 10 120 commute casual

## 11 146 commute commute

## 12 113 commute casual

## 13 89 commute commute

## 14 77 commute commute

## 15 118 commute casual

## 16 148 casual casual

## 17 114 casual casual

## 18 124 casual commute

## 19 115 casual casual

## 20 102 casual casual

## 21 77 casual casual

## 22 72 casual commute

## 23 193 casual commute

## 24 111 casual commute

## 25 161 casual casual

## 26 208 casual commute

## 27 179 casual casual

## 28 143 casual commute

## 29 144 casual commute

## 30 146 casual casualIf you count up the number of subjects in each group by counting the number

of times each label (commute, casual) occurs, it is the same in both the

Condition and PermutedCondition columns (15 each). Permutations involve randomly

re-ordering the values of a variable – here the Condition group labels – without

changing the content of the variable.

This result can also be generated using

what is called sampling without replacement: sequentially select \(n\) labels

from the original variable (Condition), removing each observed label and making sure that each of the

original Condition labels is selected once and only once. The new, randomly

selected order of selected labels provides the permuted labels. Stepping

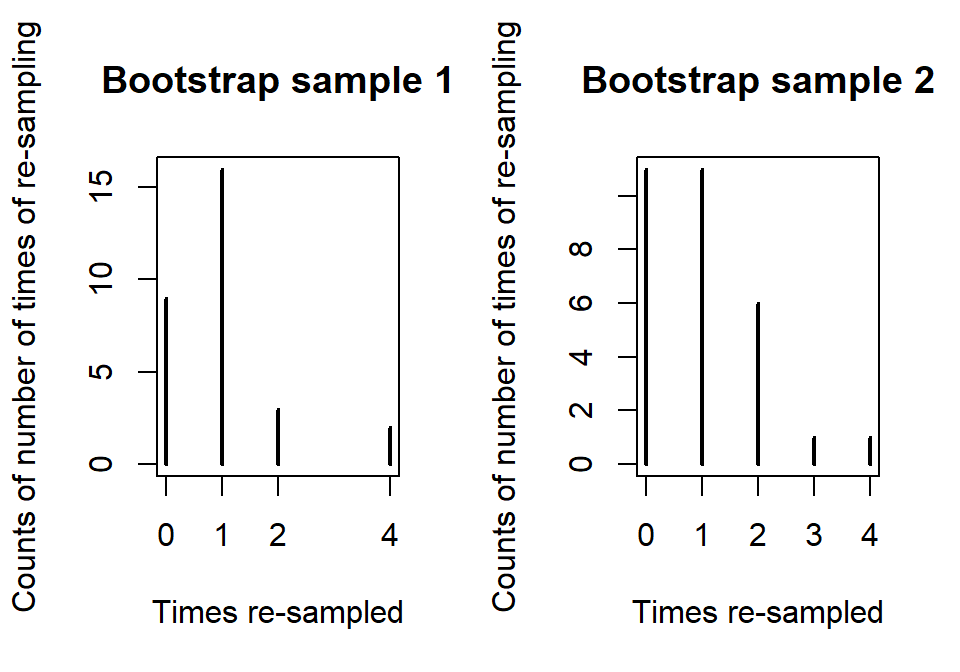

through the process helps to understand how it works: after the initial random

sample of one label, there would \(n - 1\) choices possible; on the \(n^{th}\)

selection, there would only be one label remaining to select. This makes sure

that all original labels are re-used but that the order is random. Sampling

without replacement is like picking names out of a hat, one-at-a-time, and not

putting the names back in after they are selected. It is an exhaustive process

for all the original observations. Sampling with replacement, in contrast,

involves sampling from the specified list with each observation having an equal

chance of selection for each sampled observation – in other words, observations

can be selected more than once. This is like picking \(n\) names out of a hat that

contains \(n\) names, except that every time a name is selected, it goes back into

the hat – we’ll use this technique in Section 2.9

to do what is called bootstrapping.

Both sampling mechanisms can be

used to generate inferences but each has particular situations

where they are most useful. For hypothesis testing,

we will use permutations

(sampling without replacement) as its mechanism most closely matches the null hypotheses we will be testing.

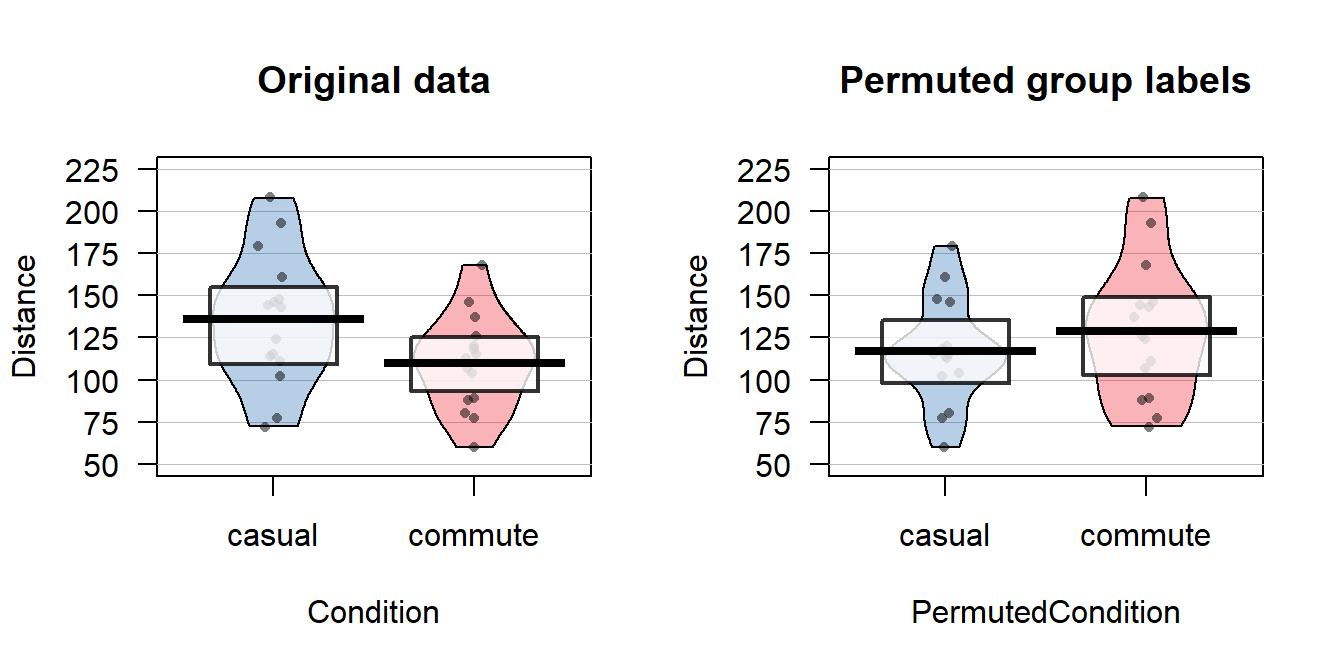

The comparison of the pirate-plots between the real \(n=30\) data set and permuted version is what is really interesting (Figure 2.8). The original difference in the sample means of the two groups was -25.93 cm (commute - casual). The sample means are the statistics that estimate the parameters for the true means of the two groups and the difference in the sample means is a way to create a single number that tracks a quantity directly related to the difference between the null and alternative models. In the permuted data set, the difference in the means is 12.07 cm in the opposite direction (the commute group had a higher mean than casual in the permuted data).

## casual commute

## 116.8000 128.8667## diffmean

## 12.06667

Figure 2.8: Pirate-plots of Distance responses versus actual treatment groups and permuted groups. Note how the responses are the same but that they are shuffled between the two groups differently in the permuted data set. With the smaller sample size, the 95% confidence intervals are more clearly visible than with the original large data set.

The diffmean function is a simple way to get the differences in the means, but we can also start to learn about using the lm function - that will be used for every chapter except for Chapter 5. The lm stands for linear model and, as we will see moving forward, encompasses a wide array of different models and scenarios. Here we will consider among its simplest usage25 to be able to estimate the difference in the mean of two groups. Notationally, it is very similar to other functions we have considered, lm(y ~ x, data=...) where y is the response variable and x is the explanatory variable. Here that is lm(Distance~Condition, data=dsample) with Condition defined as a factor variable. With linear models, we will need to interrogate them to obtain a variety of useful information and our first “interrogation” function is usually the summary function. To use it, it is best to have stored the model into an object, something like lm1, and then we can apply the summary() function to the stored model object to get a suite of output:

##

## Call:

## lm(formula = Distance ~ Condition, data = dsample)

##

## Residuals:

## Min 1Q Median 3Q Max

## -63.800 -21.850 4.133 15.150 72.200

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 135.800 8.863 15.322 3.83e-15

## Conditioncommute -25.933 12.534 -2.069 0.0479

##

## Residual standard error: 34.33 on 28 degrees of freedom

## Multiple R-squared: 0.1326, Adjusted R-squared: 0.1016

## F-statistic: 4.281 on 1 and 28 DF, p-value: 0.04789This output is explored more in Chapter 3, but for the moment, focus on the row labeled as Conditioncommute in the middle of the output. In the first (Estimate) column, there is -25.933. This is a number we saw before – it is the difference in the sample means between commute and casual (commute - casual). When lm denotes a category in the row of the output (here commute), it is trying to indicate that the information to follow relates to the difference between this category and a baseline or reference category (here casual). The first ((Intercept)) row also contains a number we have seen before: - 135.8 is the sample mean for the casual group. So the lm is generating a coefficient for the mean of one of the groups and another as the difference in the two groups26. In developing a test to assess evidence against the null hypothesis, we will focus on the difference in the sample means. So we want to be able to extract that number from this large suite of information. It ends up that we can apply the coef function to lm models and then access that second coefficient using the bracket notation. Specifically:

## Conditioncommute

## -25.93333This is the same result as using the diffmean function, so either could be used here. The estimated difference in the sample means in the permuted data set of 12.07 cm is available with:

## PermutedConditioncommute

## 12.06667Comparing the pirate-plots and the estimated difference in the sample means suggests that the observed difference was larger than what we got when we did a single permutation. Conceptually, permuting observations between group labels is consistent with the null hypothesis – this is a technique to generate results that we might have gotten if the null hypothesis were true since the responses are the same in the two groups if the null is true. We just need to repeat the permutation process many times and track how unusual our observed result is relative to this distribution of potential responses if the null were true. If the observed differences are unusual relative to the results under permutations, then there is evidence against the null hypothesis, and we can conclude, in the direction of the alternative hypothesis, that the true means differ. If the observed differences are similar to (or at least not unusual relative to) what we get under random shuffling under the null model, we would have a tough time concluding that there is any real difference between the groups based on our observed data set. This is formalized using the p-value as a measure of the strength of evidence against the null hypothesis and how we use it.

2.4 Permutation testing for the two sample mean situation

In any testing situation, you must define some function of the observations that gives us a single number that addresses our question of interest. This quantity is called a test statistic. These often take on complicated forms and have names like \(t\) or \(z\) statistics that relate to their parametric (named) distributions so we know where to look up p-values27. In randomization settings, they can have simpler forms because we use the data set to find the distribution of the statistic under the null hypothesis and don’t need to rely on a named distribution. We will label our test statistic T (for Test statistic) unless the test statistic has a commonly used name. Since we are interested in comparing the means of the two groups, we can define

\[T=\bar{x}_\text{commute} - \bar{x}_\text{casual},\]

which coincidentally is what the diffmean function and the second coefficient from the lm provided us previously.

We label our observed test statistic (the one from the original data

set) as

\[T_{obs}=\bar{x}_\text{commute} - \bar{x}_\text{casual},\]

which happened to be -25.933 cm here. We will compare this result to the results for the test statistic that we obtain from permuting the group labels. To denote permuted results, we will add an * to the labels:

\[T^*=\bar{x}_{\text{commute}^*}-\bar{x}_{\text{casual}^*}.\]

We then compare the \(T_{obs}=\bar{x}_\text{commute} - \bar{x}_\text{casual} = -25.933\) to the distribution of results that are possible for the permuted results (\(T^*\)) which corresponds to assuming the null hypothesis is true.

We need to consider lots of permutations to do a permutation test.

In contrast to

your introductory statistics course where, if you did this, it was just a click

away, we are going to learn what was going on “under the hood” of the software you were using. Specifically, we

need a for loop in R to be able to repeatedly generate the permuted data

sets and record \(T^*\) for each one. Loops are a basic programming task that make

randomization methods possible as well as potentially simplifying any repetitive

computing task. To write a “for loop”, we need to choose how many times we want

to do the loop (call that B) and decide on a counter to keep track of where

we are at in the loops (call that b, which goes from 1 up to B). The

simplest loop just involves printing out the index, print(b) at each step.

This is our first use of curly braces, { and }, that are used to group the code

we want to repeatedly run as we proceed through the loop. By typing the following

code in a codechunk and then highlighting it all and hitting the run button,

R will go through the loop B = 5 times, printing out the counter:

B <- 5

for (b in (1:B)){

print(b)

}Note that when you highlight and run the code, it will look about the same with “+” printed after the first line to indicate that all the code is connected when it appears in the console, looking like this:

When you run these three lines of code (or compile a .Rmd file that contains this), the console will show you the following output:

Instead of printing the counter, we want to use the loop to repeatedly compute

our test statistic across B random permutations of the observations. The

shuffle function performs permutations of the group labels relative to

responses and the coef(lmP)[2] extracts the estimated difference in the two group means in the permuted

data set. For a single permutation, the combination of shuffling Condition and

finding the difference in the means, storing it in a variable called Ts is:

## shuffle(Condition)commute

## 16.6And putting this inside the print function allows us to find the test

statistic under 5 different permutations easily:

B <- 5

for (b in (1:B)){

lmP <- lm(Distance~shuffle(Condition), data=dsample)

Ts <- coef(lmP)[2]

print(Ts)

}## shuffle(Condition)commute

## -1.8

## shuffle(Condition)commute

## -9.533333

## shuffle(Condition)commute

## 3.8

## shuffle(Condition)commute

## 9.4

## shuffle(Condition)commute

## 26.73333Finally, we would like to store the values of the test statistic instead of

just printing them out on each pass through the loop. To do this, we need to

create a variable to store the results, let’s call it Tstar. We know that

we need to store B results so will create a vector28 of length B, which

contains B elements, full of missing values (NA) using the matrix function with the nrow option specifying the number of elements:

## [,1]

## [1,] NA

## [2,] NA

## [3,] NA

## [4,] NA

## [5,] NANow we can run our loop B times and store the results in Tstar.

for (b in (1:B)){

lmP <- lm(Distance~shuffle(Condition), data=dsample)

Tstar[b] <- coef(lmP)[2]

}

#Print out the results stored in Tstar with the next line of code## [,1]

## [1,] 9.533333

## [2,] 9.933333

## [3,] 23.000000

## [4,] -11.000000

## [5,] -17.400000Five permutations are still not enough to assess whether our \(T_{obs}\)

of -25.933 is unusual and we need to do many permutations to get an accurate

assessment of the possibilities under the null hypothesis.

It is common practice

to consider something like 1,000 permutations. The Tstar vector when we set

B to be large, say B=1000, contains the permutation distribution for the

selected test statistic under29 the null

hypothesis – what is called the null distribution of the statistic. The

null distribution is the distribution of possible values of a statistic

under the null hypothesis. We want to visualize this distribution and use it to

assess how unusual our \(T_{obs}\) result of -25.933 cm was relative to all the

possibilities under permutations (under the null hypothesis). So we repeat the

loop, now with \(B=1000\) and generate a histogram, density curve, and summary

statistics of the results:

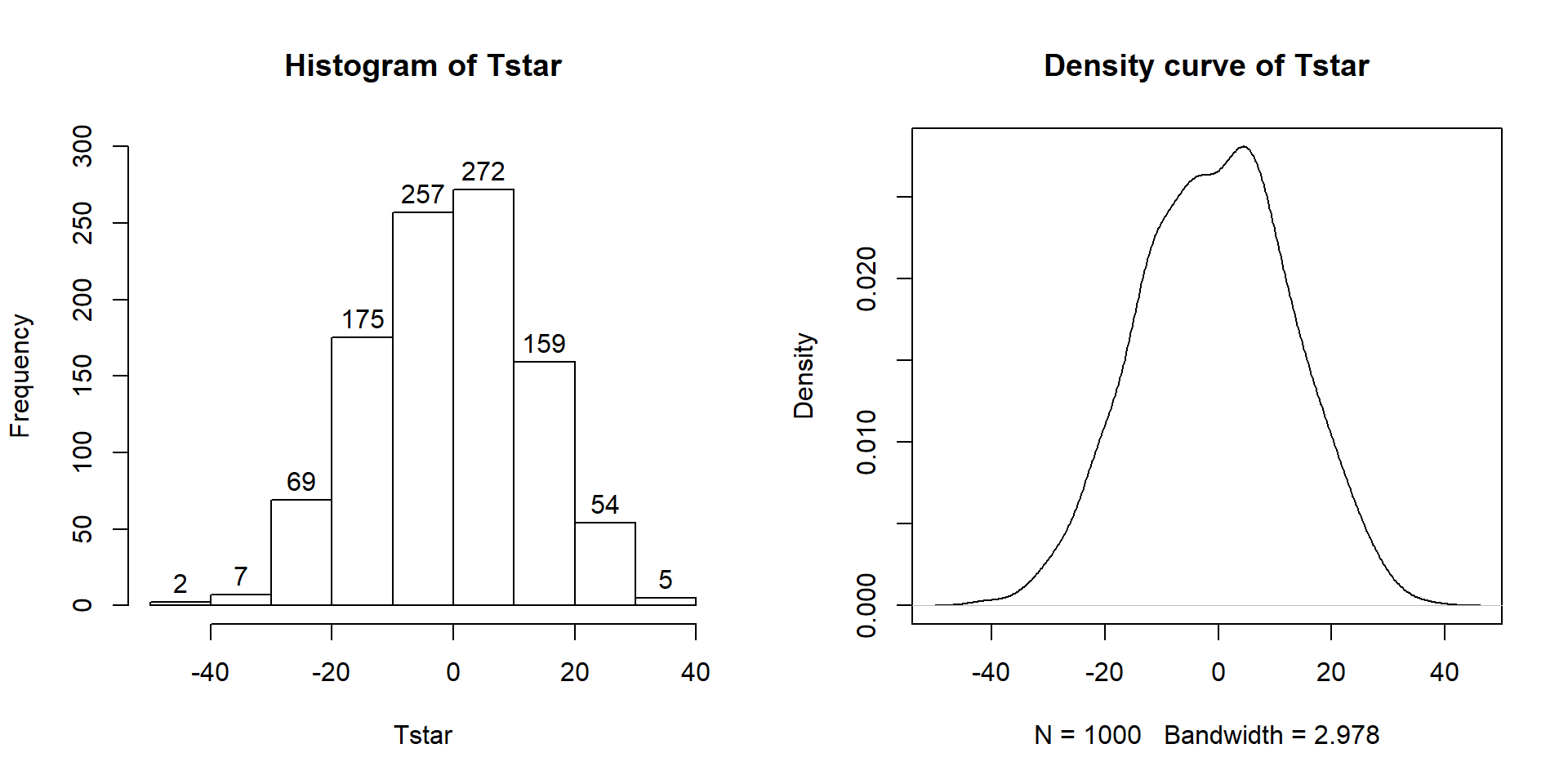

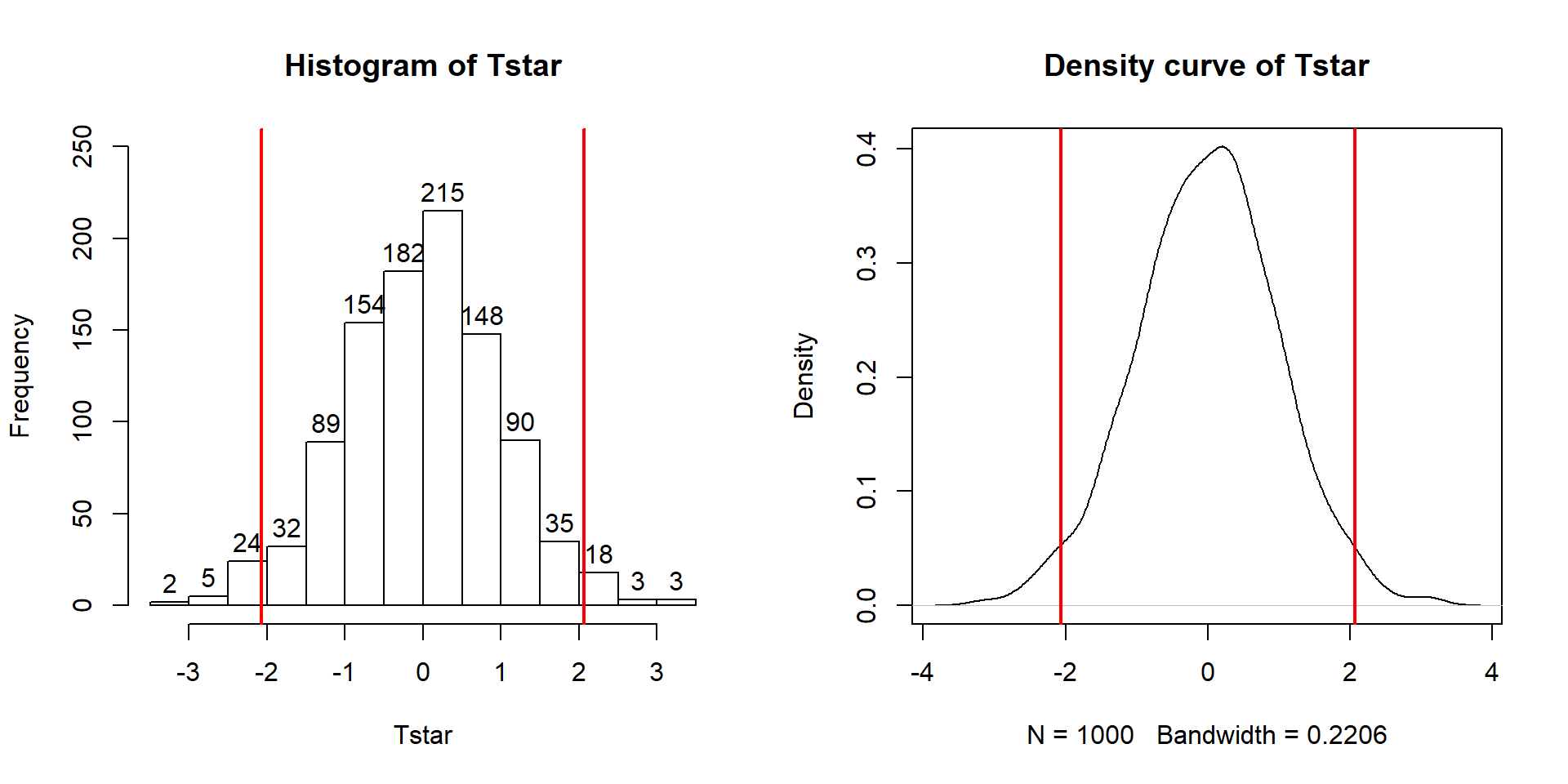

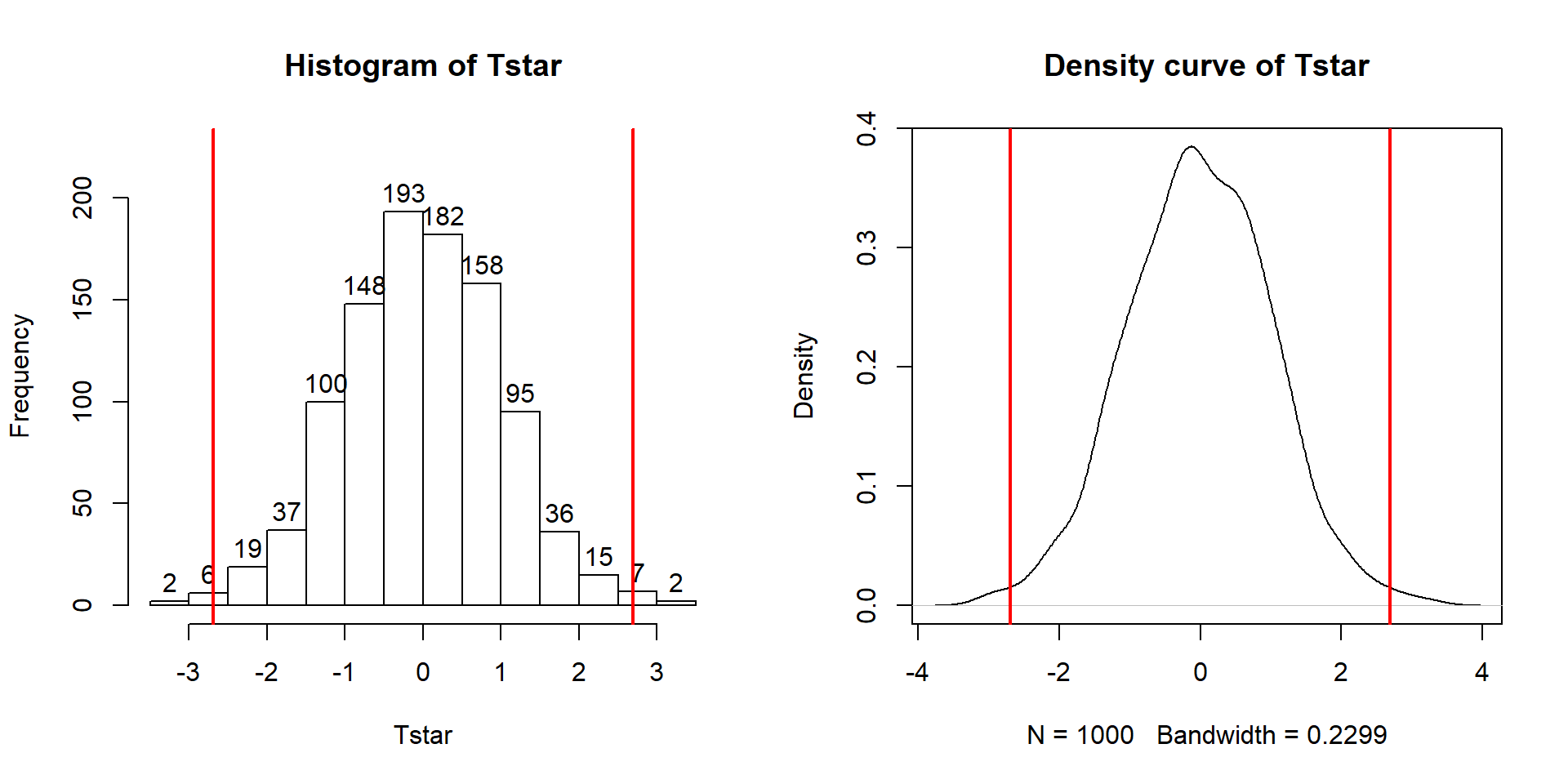

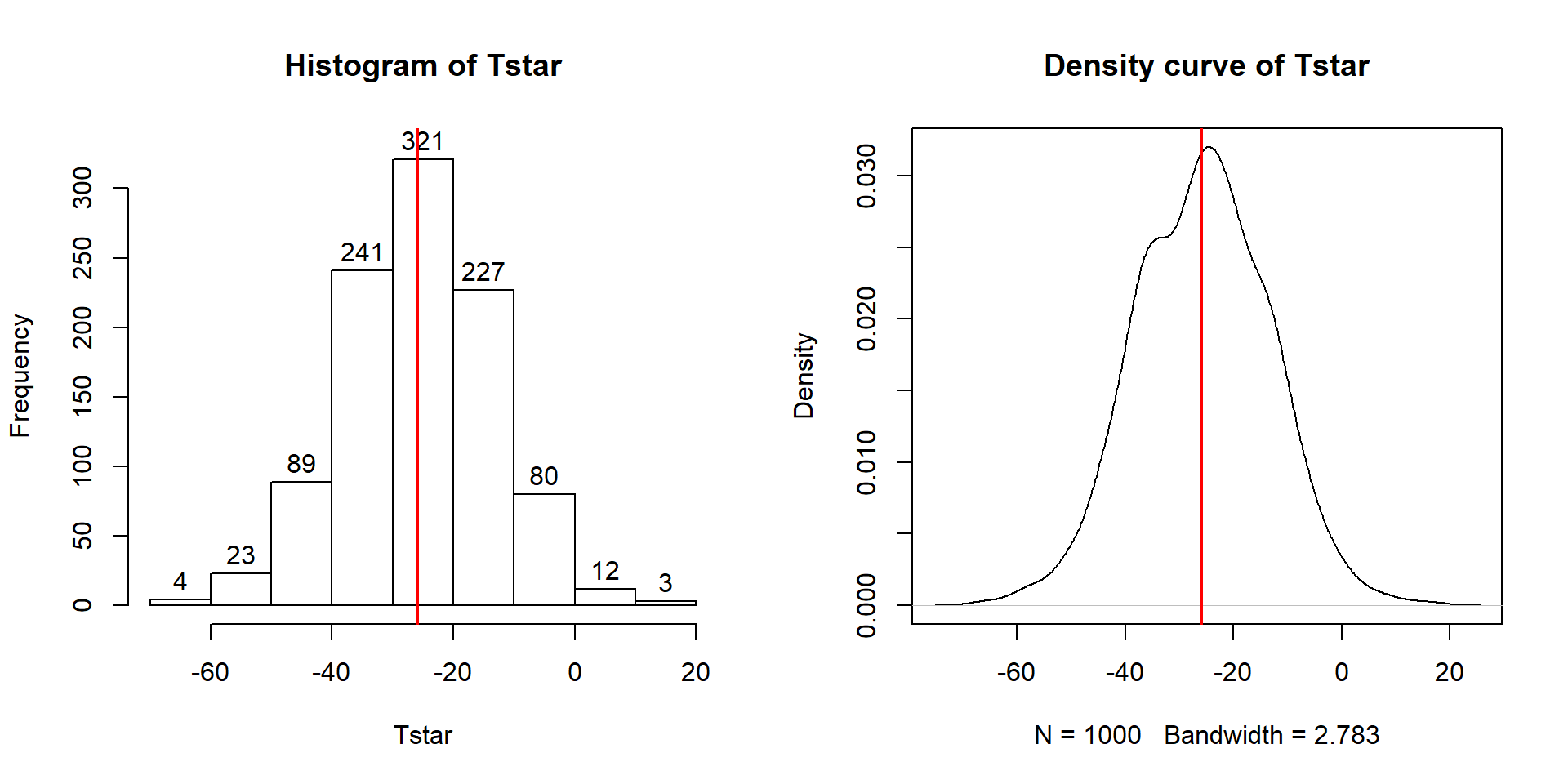

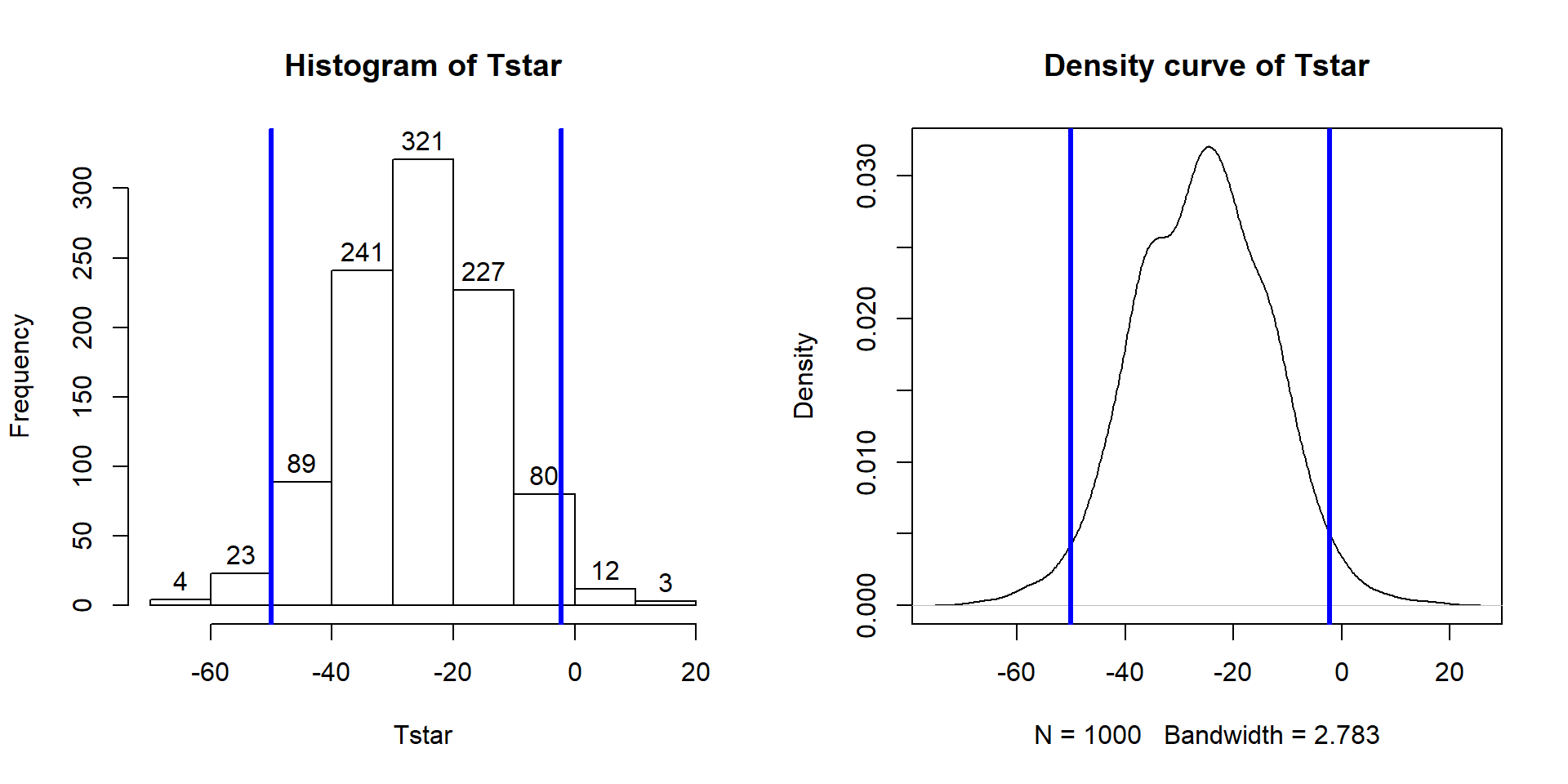

Figure 2.9: Histogram (left, with counts in bars) and density curve (right) of values of test statistic for B = 1,000 permutations.

B <- 1000

Tstar <- matrix(NA, nrow=B)

for (b in (1:B)){

lmP <- lm(Distance~shuffle(Condition), data=dsample)

Tstar[b] <- coef(lmP)[2]

}

hist(Tstar, label=T,ylim=c(0,300))

plot(density(Tstar), main="Density curve of Tstar")## min Q1 median Q3 max mean sd n

## -41.26667 -10.06667 -0.3333333 8.6 37.26667 -0.5054667 13.17156 1000

## missing

## 0Figure 2.9 contains visualizations of \(T^*\) and the favstats

summary provides the related numerical summaries. Our observed \(T_{obs}\)

of -25.933 seems somewhat unusual relative to these results with only

9 \(T^*\) values smaller than -30 based on the

histogram. We need to make more specific comparisons of the permuted results

versus our observed result to be able to clearly decide whether our observed

result is really unusual.

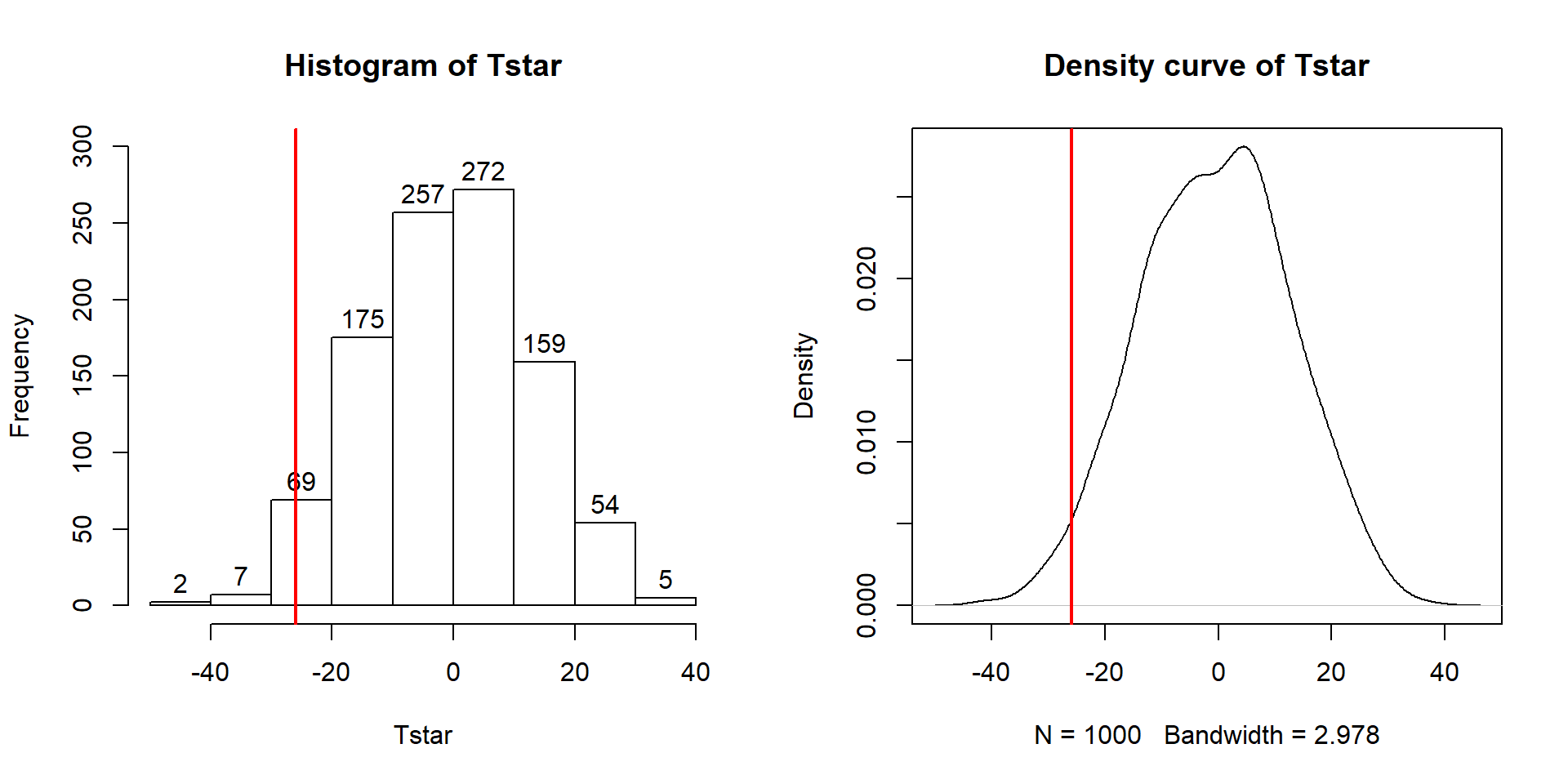

To make the comparisons more concrete, first we can enhance the previous graphs

by adding the value of the test statistic from the real data set, as shown in

Figure 2.10, using the abline function to draw a vertical

line at our \(T_{obs}\) value specified in the v (for vertical) option.

Figure 2.10: Histogram (left) and density curve (right) of values of test statistic for 1,000 permutations with bold vertical line for value of observed test statistic.

Tobs <- -25.933

hist(Tstar, labels=T)

abline(v=Tobs, lwd=2, col="red")

plot(density(Tstar), main="Density curve of Tstar")

abline(v=Tobs, lwd=2, col="red")Second, we can calculate the exact number of permuted results that were as

small or smaller

than what we observed. To calculate the proportion of the 1,000 values that were

as small or smaller than what we observed, we will use the pdata function.

To use this

function, we need to provide the distribution of values to compare to the cut-off

(Tstar), the cut-off point (Tobs), and whether we want calculate the

proportion that are below (left of) or above (right of) the cut-off

(lower.tail=T option provides the proportion of values to the left of (below) the cutoff

of interest).

## [1] 0.027The proportion of 0.027 tells us that 27 of the 1,000 permuted results (2.7%) were as small or smaller than what we observed. This type of work is how we can generate p-values using permutation distributions. P-values, as you should remember, are the probability of getting a result as extreme as or more extreme than what we observed, \(\underline{\text{given that the null is true}}\). Finding only 27 permutations of 1,000 that were as small or smaller than our observed result suggests that it is hard to find a result like what we observed if there really were no difference, although it is not impossible.

When testing hypotheses for two groups, there are two types of alternative

hypotheses, one-sided or two-sided. One-sided tests involve only considering

differences in one-direction (like \(\mu_1 > \mu_2\)) and are performed when

researchers can decide a priori30 which group should have a larger mean

if there is going to be any sort of difference. In this situation, we did not

know enough about the potential impacts of the outfits to know which group should

be larger than the other so should do a two-sided test. It is important to

remember that you can’t look at the responses to decide on the hypotheses. It is

often safer and more conservative31 to start with a

two-sided alternative (\(\mathbf{H_A: \mu_1 \ne \mu_2}\)). To do a 2-sided

test, find the area smaller than what we observed as above (or larger if the test statistic had been positive). We also need to add

the area in the other tail (here the right tail) similar to what we observed in the

right tail. Some statisticians suggest doubling the area in one tail but we will collect

information on the number that were as or more extreme than the same

value in the other

tail32. In other words, we count the proportion below -25.933 and over 25.933. So

we need to find how many of the permuted results were larger than or equal

to 25.933 cm

to add to our previous proportion. Using pdata with -Tobs as the cut-off

and lower.tail =F provides this result:

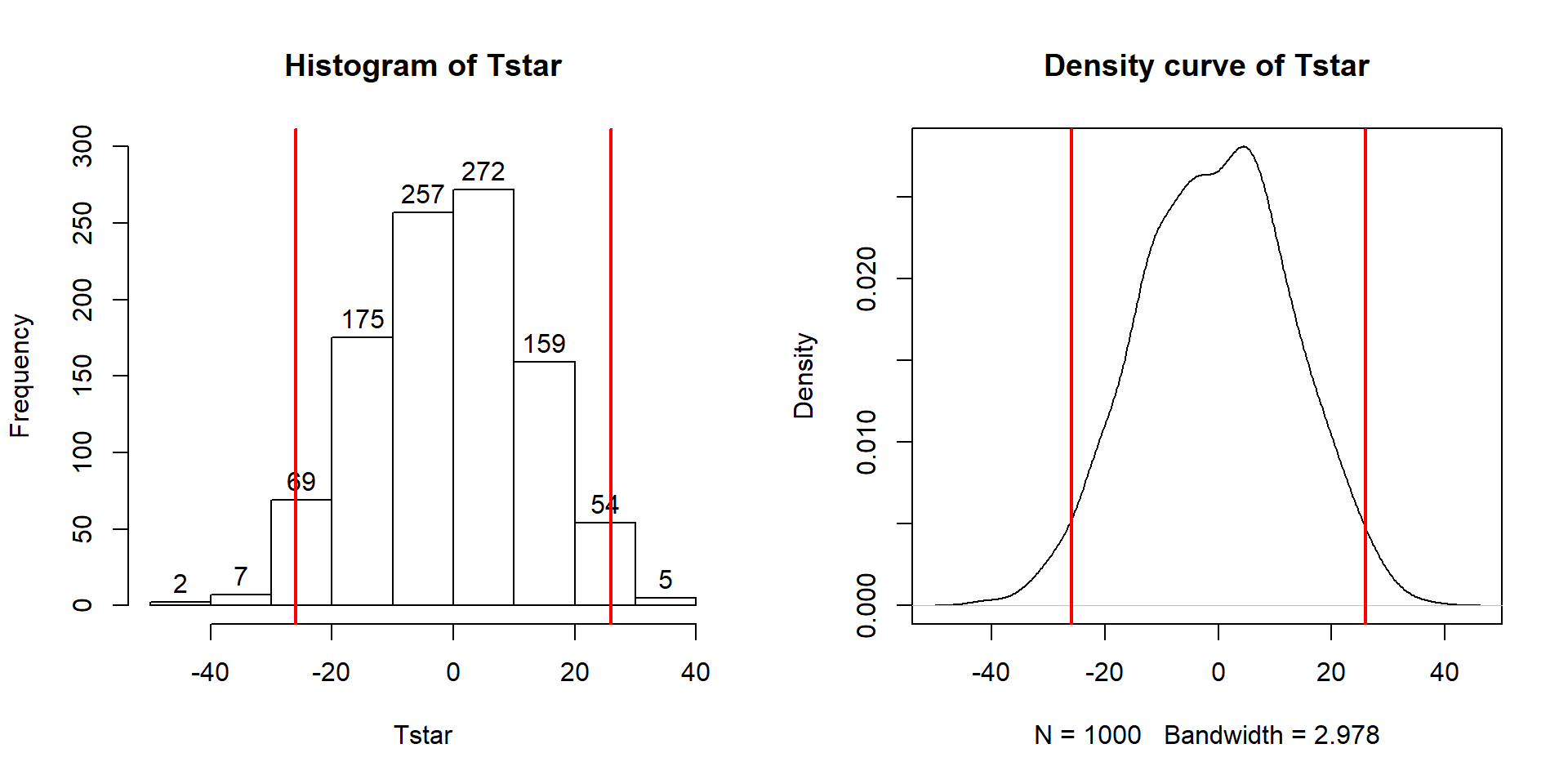

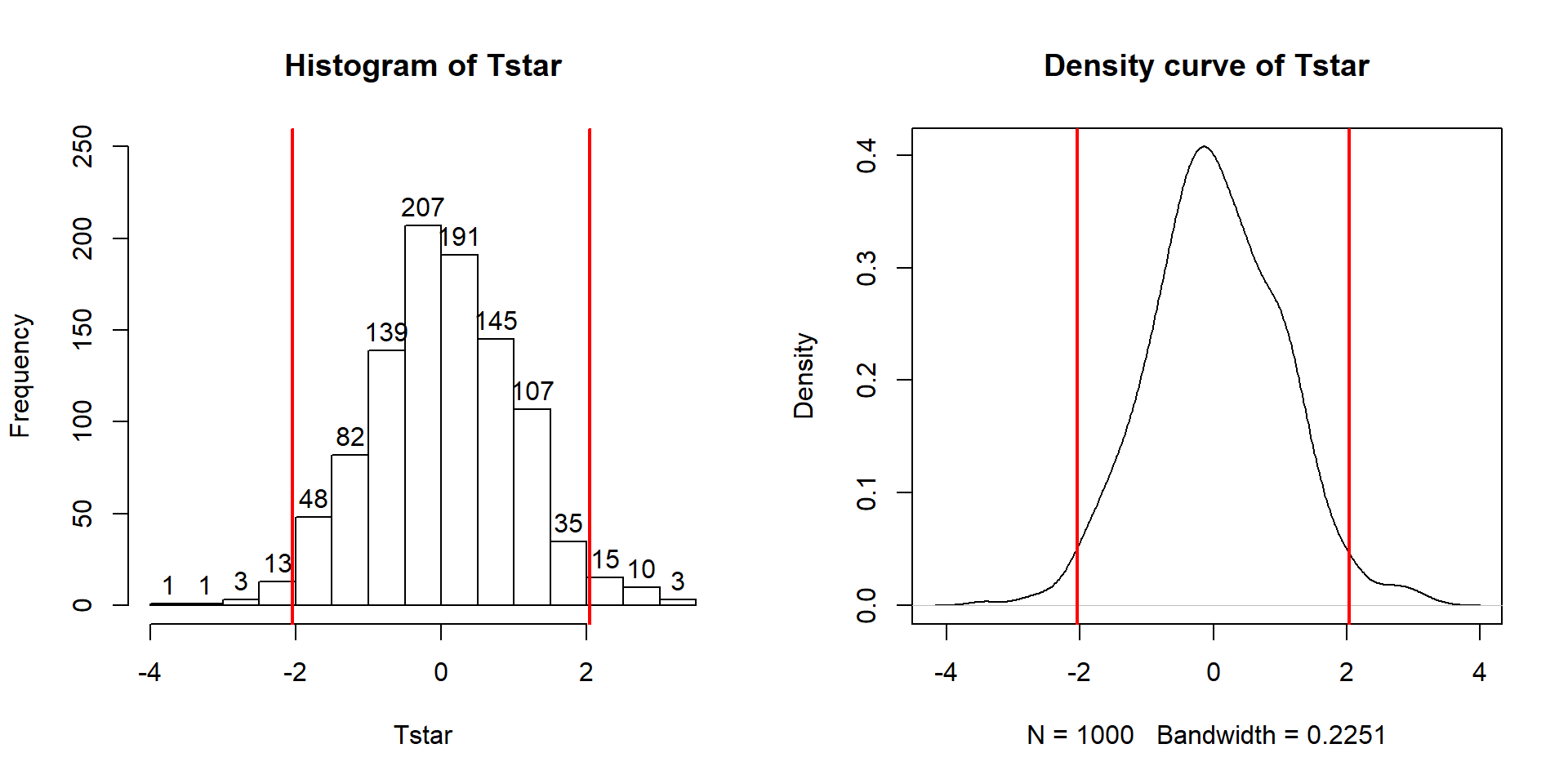

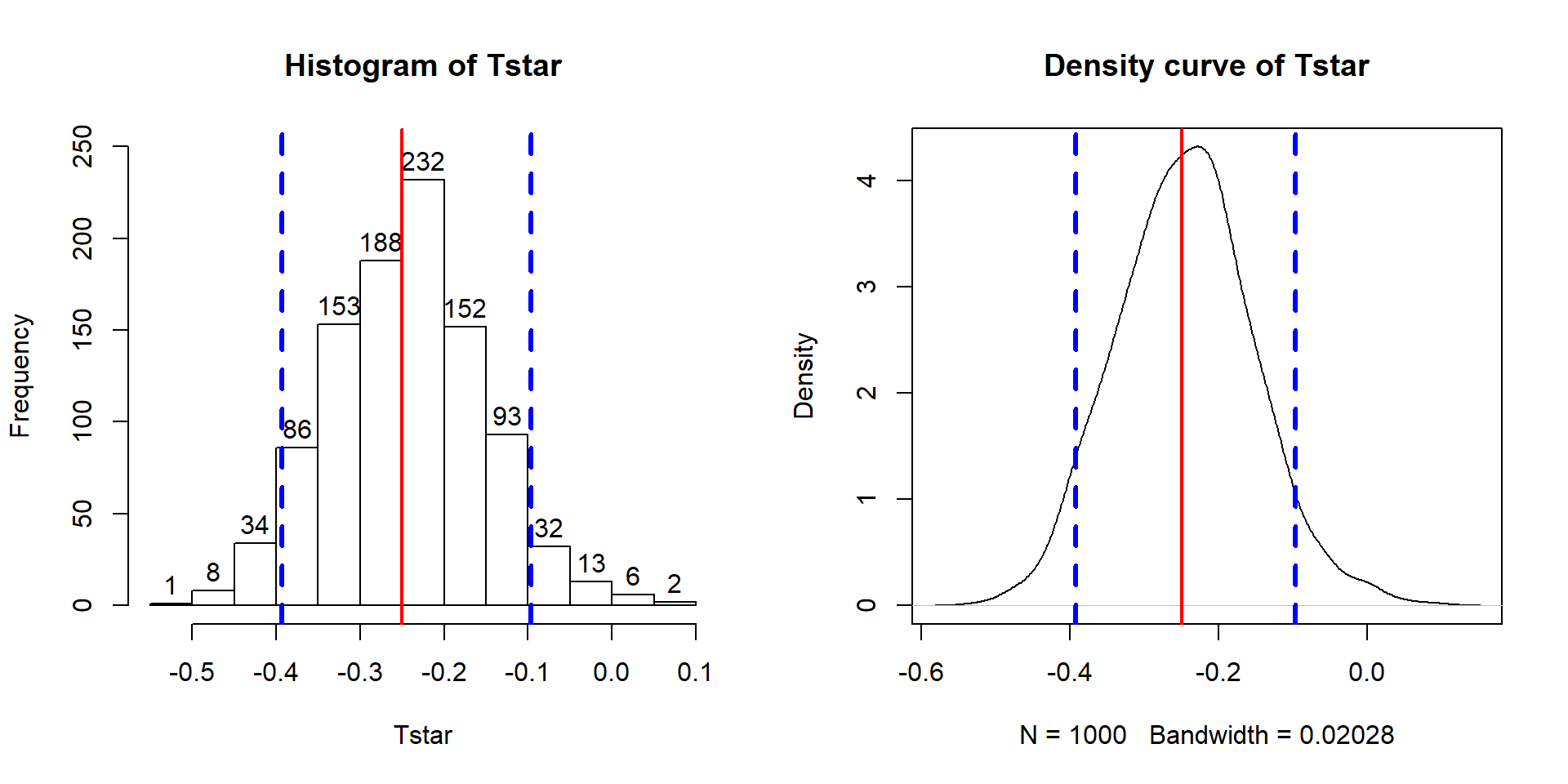

## [1] 0.017So the p-value to test our null hypothesis of no difference in the true means between the groups is 0.027 + 0.017, providing a p-value of 0.044. Figure 2.11 shows both cut-offs on the histogram and density curve.

Figure 2.11: Histogram and density curve of values of test statistic for 1,000 permutations with bold lines for value of observed test statistic (-25.933) and its opposite value (25.933) required for performing the two-sided test.

hist(Tstar, labels=T)

abline(v=c(-1,1)*Tobs, lwd=2, col="red")

plot(density(Tstar), main="Density curve of Tstar")

abline(v=c(-1,1)*Tobs, lwd=2, col="red")In general, the one-sided test p-value

is the proportion of the

permuted results that are as extreme or more extreme than observed in the

direction of the alternative

hypothesis (lower or upper tail, remembering that this also depends on the

direction of the difference taken). For the two-sided test, the p-value

is the

proportion of the permuted results that are less than or equal to the negative

version of the observed statistic and greater than or equal to the positive

version of the observed statistic. Using absolute

values (| |), we can simplify this: the two-sided p-value is the

proportion of the |permuted statistics| that are as large or larger than

|observed statistic|.

This will always work and finds areas in both tails regardless of whether the

observed statistic is positive or negative. In R, the abs function provides the

absolute value and we can again use pdata to find our p-value in one line

of code:

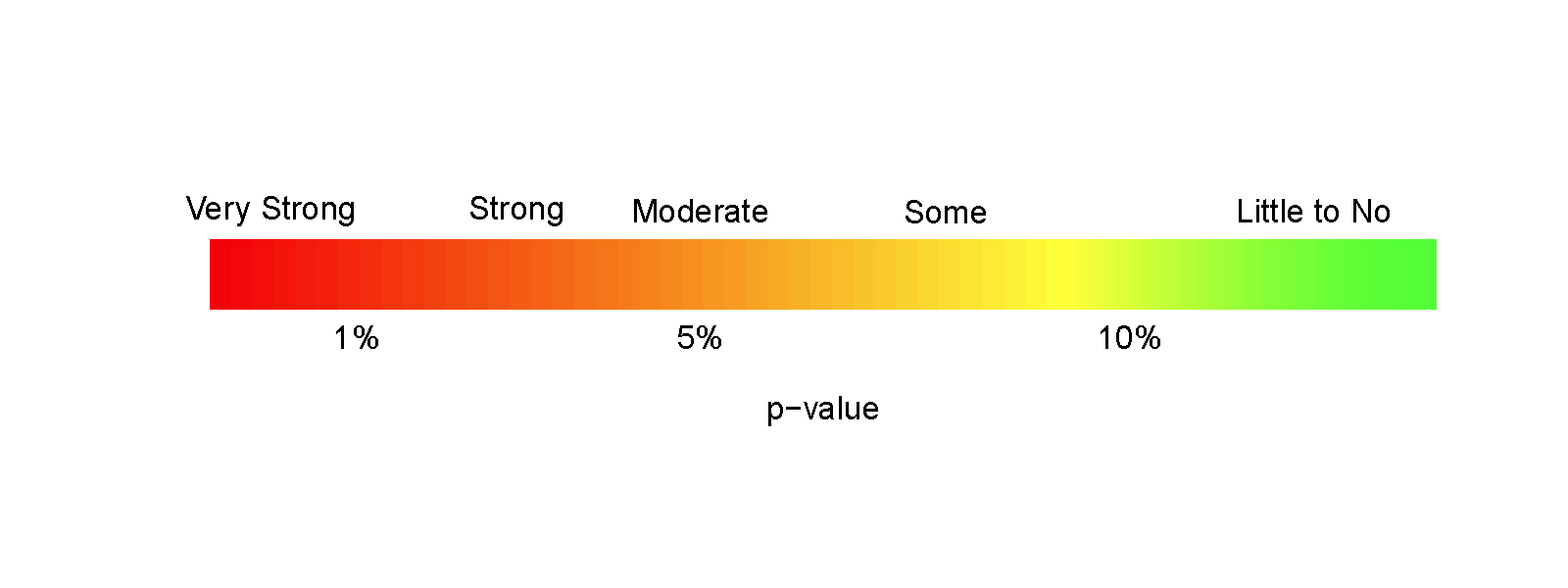

## [1] 0.044We will encourage you to think through what might constitute strong evidence against your null hypotheses and then discuss how strong you feel the evidence is against the null hypothesis in the p-value that you obtained. Basically, p-values present a measure of evidence against the null hypothesis, with smaller values presenting more evidence against the null. They range from 0 to 1 and you should interpret them on a graded scale from strong evidence (close to 0) to little evidence to no evidence (1). We will discuss the use of a fixed significance level below as it is still commonly used in many fields and is necessary to discuss to think about the theory of hypothesis testing, but, for the moment, we can conclude that there is moderate evidence against the null hypothesis presented by having a p-value of 0.044 because our observed result is somewhat rare relative to what we would expect if the null hypothesis was true. And so we might conclude (in the direction of the alternative) that there is a difference in the population means in the two groups, but that depends on what you think about how unusual that result was.

Before we move on, let’s note some interesting features of the permutation distribution of the difference in the sample means shown in Figure 2.11.

It is basically centered at 0. Since we are performing permutations assuming the null model is true, we are assuming that \(\mu_1 = \mu_2\) which implies that \(\mu_1 - \mu_2 = 0\). This also suggests that 0 should be the center of the permutation distribution and it was.

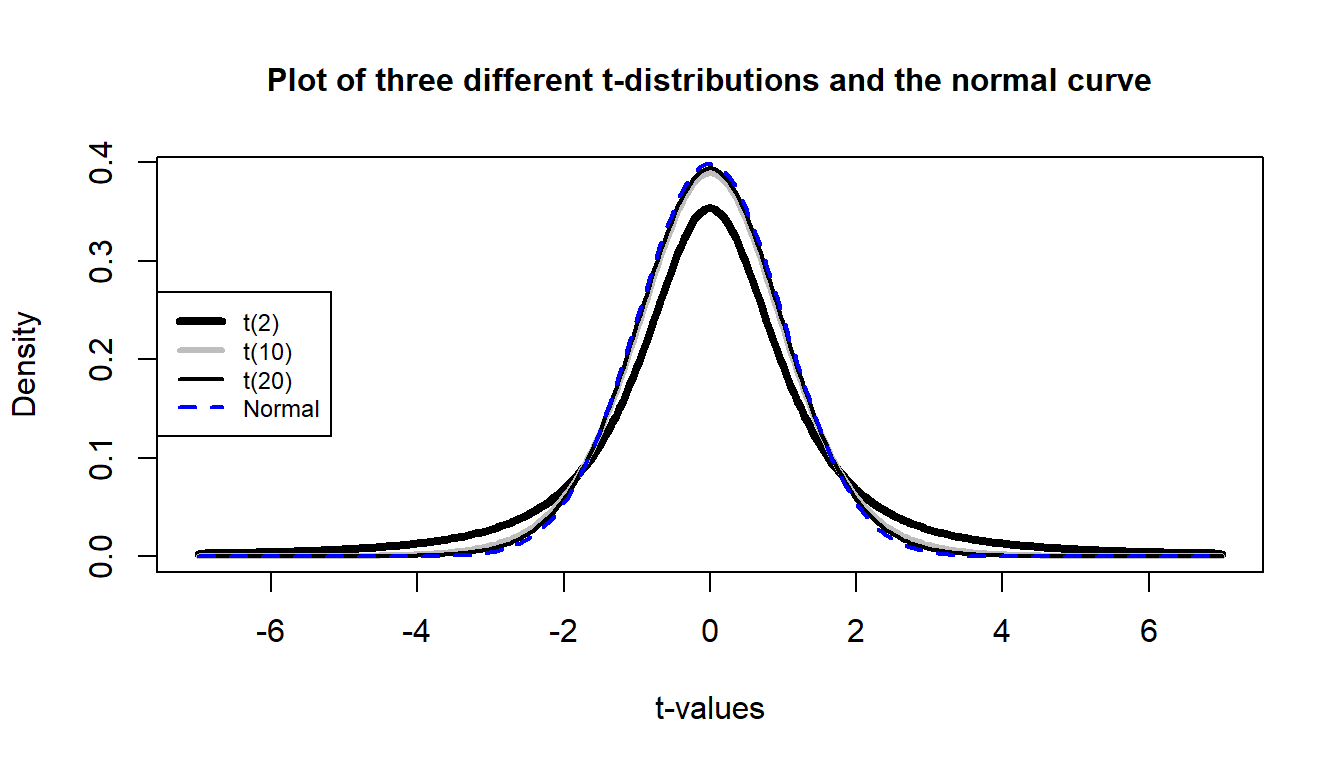

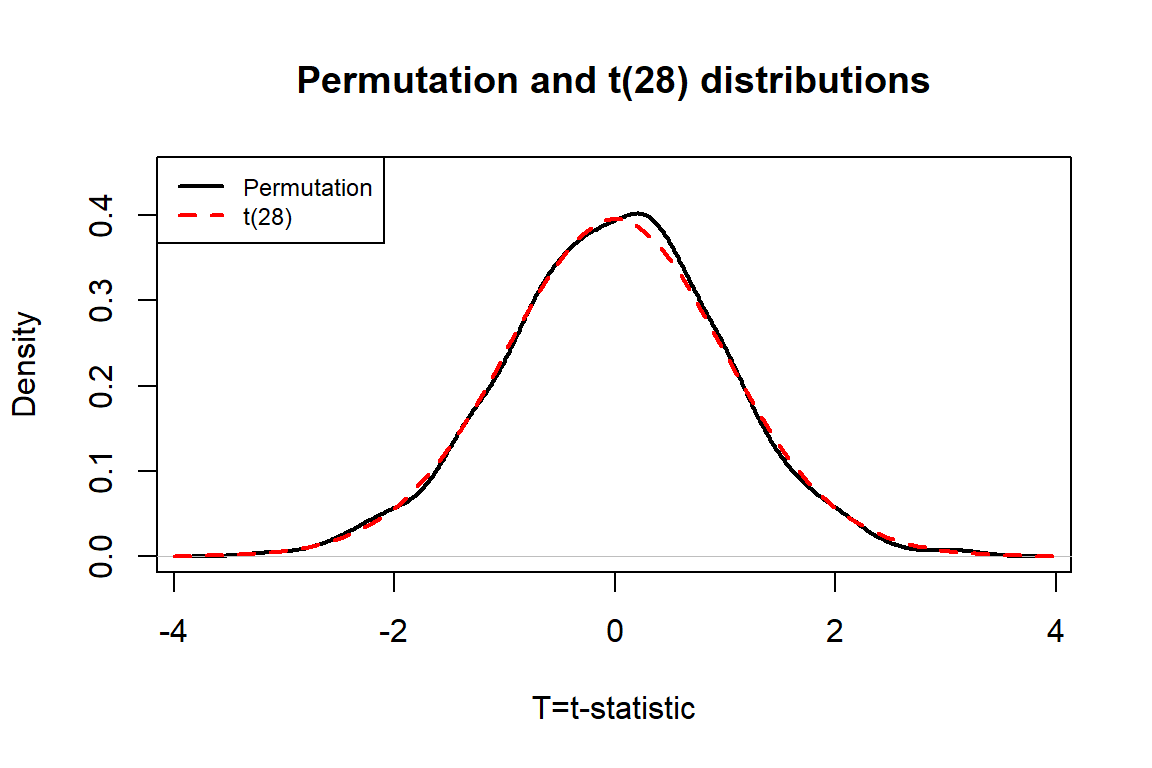

It is approximately normally distributed. This is due to the Central Limit Theorem33, where the sampling distribution (distribution of all possible results for samples of this size) of the difference in sample means (\(\bar{x}_1 - \bar{x}_2\)) becomes more normally distributed as the sample sizes increase. With 15 observations in each group, we have no guarantee to have a relatively normal looking distribution of the difference in the sample means but with the distributions of the original observations looking somewhat normally distributed, the sampling distribution of the sample means likely will look fairly normal. This result will allow us to use a parametric method to approximate this sampling distribution under the null model if some assumptions are met, as we’ll discuss below.

Our observed difference in the sample means (-25.933) is a fairly unusual result relative to the rest of these results but there are some permuted data sets that produce more extreme differences in the sample means. When the observed differences are really large, we may not see any permuted results that are as extreme as what we observed. When

pdatagives you 0, the p-value should be reported to be smaller than 0.001 (not 0!) if B is 1,000 since it happened in less than 1 in 1,000 tries but does occur once – in the actual data set.Since our null model is not specific about the direction of the difference, considering a result like ours but in the other direction (25.933 cm) needs to be included. The observed result seems to put about the same area in both tails of the distribution but it is not exactly the same. The small difference in the tails is a useful aspect of this approach compared to the parametric method discussed below as it accounts for potential asymmetry in the sampling distribution.

Earlier, we decided that the p-value provided moderate evidence against the null hypothesis. You should use your own judgment about whether the p-value obtain is sufficiently small to conclude that you think the null hypothesis is wrong. Remembering that the p-value is the probability you would observe a result like you did (or more extreme), assuming the null hypothesis is true; this tells you that the smaller the p-value is, the more evidence you have against the null. Figure 2.12 provides a diagram of some suggestions for the graded p-value interpretation that you can use. The next section provides a more formal review of the hypothesis testing infrastructure, terminology, and some of things that can happen when testing hypotheses. P-values have been (validly) criticized for the inability of studies to be reproduced, for the bias in publications to only include studies that have small p-values, and for the lack of thought that often accompanies using a fixed significance level to make decisions (and only focusing on that decision). To alleviate some of these criticisms, we recommend reporting the strength of evidence of the result based on the p-value and also reporting and discussing the size of the estimated results (with a measure of precision of the estimated difference). We will explore the implications of how p-values are used in scientific research in Section 2.8.

Figure 2.12: Graphic suggesting potential interpretations of strength of evidence based on gradient of p-values.

2.5 Hypothesis testing (general)

In hypothesis testing (sometimes more explicitly called “Null Hypothesis Significance Testing” or NHST), it is formulated to answer a specific question about a population or true parameter(s) using a statistic based on a data set. In your previous statistics course, you (hopefully) considered one-sample hypotheses about population means and proportions and the two-sample mean situation we are focused on here. Hypotheses relate to trying to answer the question about whether the population mean overtake distances between the two groups are different, with an initial assumption of no difference.

NHST is much like a criminal trial with a jury where you are in the role of a jury member. Initially, the defendant is assumed innocent. In our situation, the true means are assumed to be equal between the groups. Then evidence is presented and, as a juror, you analyze it. In statistical hypothesis testing, data are collected and analyzed. Then you have to decide if we had “enough” evidence to reject the initial assumption (“innocence” that is initially assumed). To make this decision, you want to have thought about and decided on the standard of evidence required to reject the initial assumption. In criminal cases, “beyond a reasonable doubt” is used. Wikipedia’s definition (https://en.wikipedia.org/wiki/Reasonable_doubt) suggests that this standard is that “there can still be a doubt, but only to the extent that it would not affect a reasonable person’s belief regarding whether or not the defendant is guilty”. In civil trials, a lower standard called a “preponderance of evidence” is used. Based on that defined and pre-decided (a priori) measure, you decide that the defendant is guilty or not guilty. In statistics, the standard is set by choosing a significance level, \(\alpha\), and then you compare the p-value to it. In this approach, if the p-value is less than \(\alpha\), we reject the null hypothesis. The choice of the significance level is like the variation in standards of evidence between criminal and civil trials – and in all situations everyone should know the standards required for rejecting the initial assumption before any information is “analyzed”. Once someone is found guilty, then there is the matter of sentencing which is related to the impacts (“size”) of the crime. In statistics, this is similar to the estimated size of differences and the related judgments about whether the differences are practically important or not. If the crime is proven beyond a reasonable doubt but it is a minor crime, then the sentence will be small. With the same level of evidence and a more serious crime, the sentence will be more dramatic. This latter step is more critical than the p-value as it directly relates to actions to be taken based on the research but unfortunately p-values and the related decisions get most of the attention.

There are some important aspects of the testing process to note that inform how we interpret statistical hypothesis test results. When someone is found “not guilty”, it does not mean “innocent”, it just means that there was not enough evidence to find the person guilty “beyond a reasonable doubt”. Not finding enough evidence to reject the null hypothesis does not imply that the true means are equal, just that there was not enough evidence to conclude that they were different. There are many potential reasons why we might fail to reject the null, but the most common one is that our sample size was too small (which is related to having too little evidence). Other reasons include simply the variation in taking a random sample from the population(s). This randomness in samples and the differences in the sample means also implies that p-values are random and can easily vary if the data set had been slightly different. This also relates to the suggestion of using a graded interpretation of p-values instead of the fixed \(\alpha\) usage – if the p-value is an estimated quantity, is there really any difference between p-values of 0.049 and 0.051? We probably shouldn’t think there is a big difference in results for these two p-values even though the standard NHST reject/fail to reject the null approach considers these as completely different results. So where does that leave us? Interpret the p-values using strength of evidence against the null hypothesis, remembering that smaller (but not really small) p-values can still be interesting. And if you think the p-value is small enough, then you can reject the null hypothesis and conclude that the alternative hypothesis is a better characterization of the truth – and then estimate the size of the differences.

Throughout this material, we will continue to re-iterate the distinctions between parameters and statistics and want you to be clear about the distinctions between estimates based on the sample and inferences for the population or true values of the parameters of interest. Remember that statistics are summaries of the sample information and parameters are characteristics of populations (which we rarely know). In the two-sample mean situation, the sample means are always at least a little different – that is not an interesting conclusion. What is interesting is whether we have enough evidence to feel like we have proven that the population or true means differ “beyond a reasonable doubt”.

The scope of any inferences is constrained based on whether there is a random sample (RS) and/or random assignment (RA). Table 2.1 contains the four possible combinations of these two characteristics of a given study. Random assignment of treatment levels to subjects allows for causal inferences for differences that are observed – the difference in treatment levels is said to cause differences in the mean responses. Random sampling (or at least some sort of representative sample) allows inferences to be made to the population of interest. If we do not have RA, then causal inferences cannot be made. If we do not have a representative sample, then our inferences are limited to the sampled subjects.

| Random Sampling/Random Assignment |

Random Assignment (RA) – Yes (controlled experiment) |

Random Assignment (RA) – No (observational study) |

|---|---|---|

| Random Sampling (RS) – Yes (or some method that results in a representative sample of population of interest) |

Because we have RS, we can generalize inferences to the population the RS was taken from. Because we have RA we can assume the groups were equivalent on all aspects except for the treatment and can establish causal inference. |

Can generalize inference to population the RS was taken from but cannot establish causal inference (no RA – cannot isolate treatment variable as only difference among groups, could be confounding variables). |

| Random Sampling (RS) – No (usually a convenience sample) |

Cannot generalize inference

to the population of interest because the sample was not random and could be biased – may not be “representative” of the population of interest. Can establish causal inference due to RA \(\rightarrow\) the inference from this type of study applies only to the sample. |

Cannot generalize inference

to the population of interest because the sample was not random and could be biased – may not be “representative” of the population of interest. Cannot establish causal inference due to lack of RA of the treatment. |

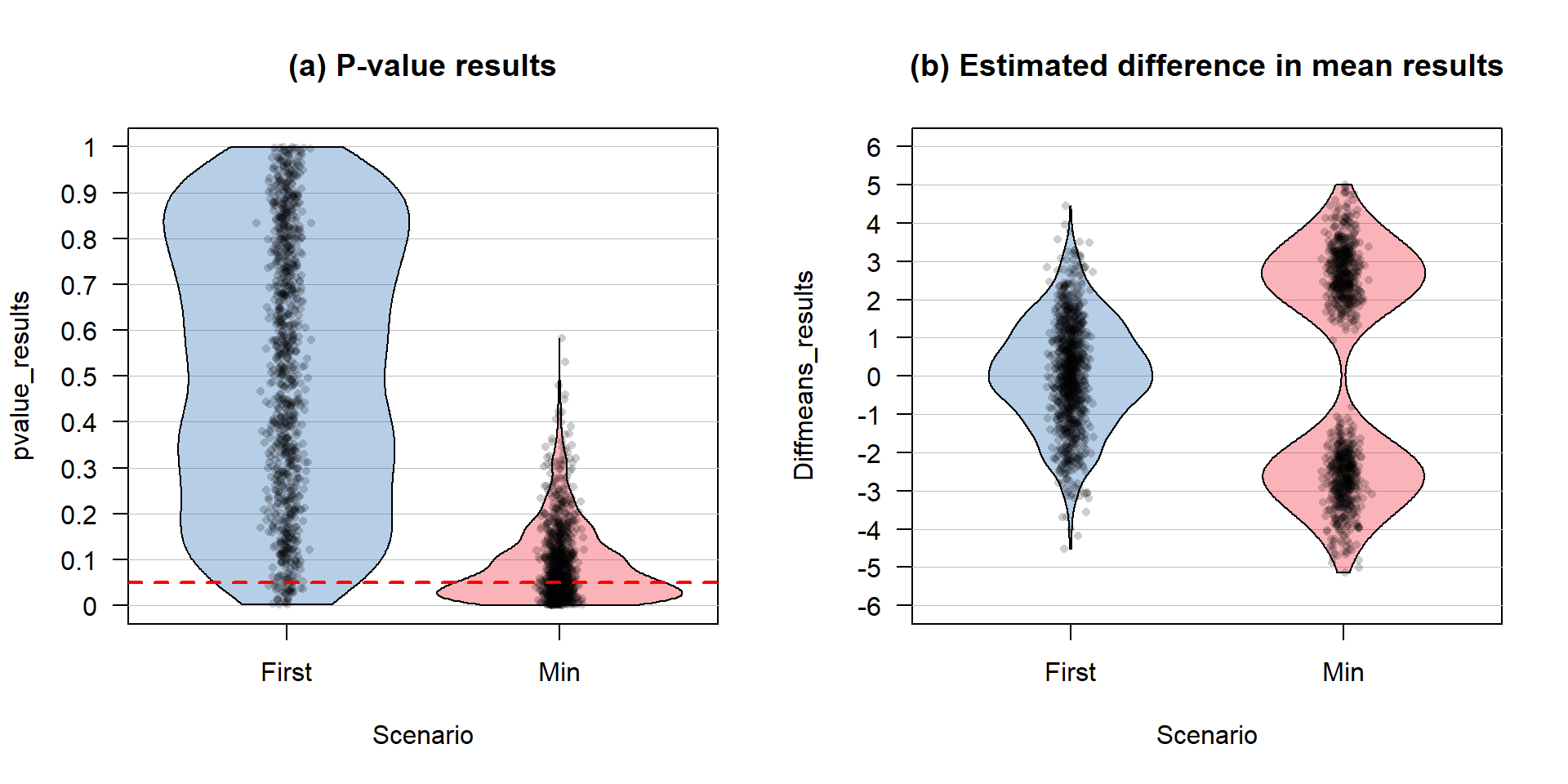

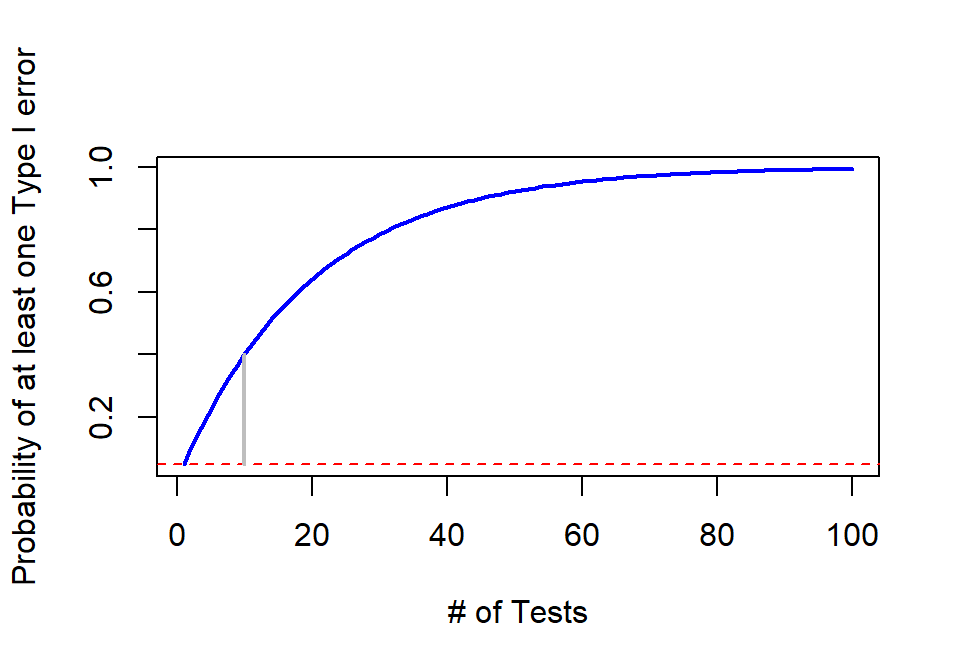

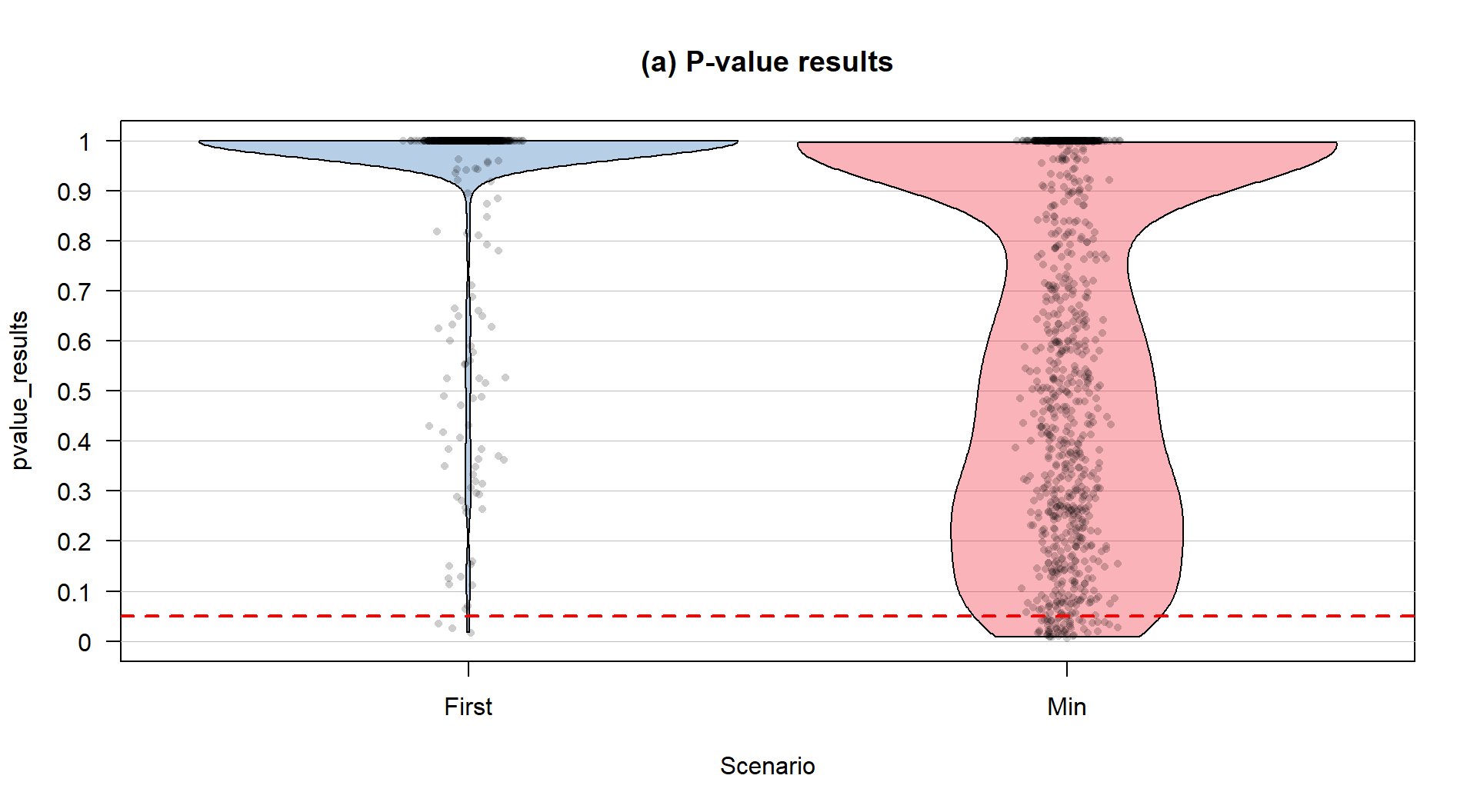

A simple example helps to clarify how the scope of inference can change based on the study design. Suppose we are interested in studying the GPA of students. If we had taken a random sample from, say, Intermediate Statistics students in a given semester at a university, our scope of inference would be the population of students in that semester taking that course. If we had taken a random sample from the entire population of students at that school, then the inferences would be to the entire population of students in that semester. These are similar types of problems but the two populations are very different and the group you are trying to make conclusions about should be noted carefully in your results – it does matter! If we did not have a representative sample, say the students could choose to provide this information or not and some chose not to, then we can only make inferences to volunteers. These volunteers might differ in systematic ways from the entire population of Intermediate Statistics students (for example, they are proud of their GPA) so we cannot safely extend our inferences beyond the group that volunteered.