Chapter 8 Multiple linear regression

8.1 Going from SLR to MLR

In many situations, especially in observational studies, it is unlikely that the system is simple enough to be characterized by a single predictor variable. In experiments, if we randomly assign levels of a predictor variable we can assume that the impacts of other variables cancel out as a direct result of the random assignment. But it is possible even in these experimental situations that we can “improve” our model for the response variable by adding additional predictor variables that explain additional variation in the responses, reducing the amount of unexplained variation. This can allow more precise inferences to be generated from the model. As mentioned previously, it might be useful to know the sex or weight of the subjects in the Beers vs BAC study to account for more of the variation in the responses – this idea motivates our final topic: multiple linear regression (MLR) models. In observational studies, we can think of a suite of characteristics of observations that might be related to a response variable. For example, consider a study of yearly salaries and variables that might explain the amount people get paid. We might be most interested in seeing how incomes change based on age, but it would be hard to ignore potential differences based on sex and education level. Trying to explain incomes would likely require more than one predictor variable and we wouldn’t be able to explain all the variability in the responses just based on gender and education level, but a model using those variables might still provide some useful information about each component and about age impacts on income after we adjust (control) for sex and education. The extension to MLR allows us to incorporate multiple predictors into a regression model. Geometrically, this is a way of relating many different dimensions (number of \(x\text{'s}\)) to what happened in a single response variable (one dimension).

We start with the same model as in SLR except now we allow \(K\) different \(x\text{'s}\):

\[y_i = \beta_0 + \beta_1x_{1i} + \beta_2x_{2i}+ \ldots + \beta_Kx_{Ki} + \varepsilon_i\]

Note that if \(K=1\), we are back to SLR. In the MLR model, there are \(K\) predictors and we still have a \(y\)-intercept. The MLR model carries the same assumptions as an SLR model with a couple of slight tweaks specific to MLR (see Section 8.2 for the details on the changes to the validity conditions).

We are able to use the

least squares criterion for estimating the regression coefficients in MLR, but

the mathematics are beyond the scope of this course.

The lm function takes

care of finding the least squares coefficients using a very sophisticated

algorithm112. The estimated

regression equation it returns is:

\[\widehat{y}_i = b_0 + b_1x_{1i} +b_2x_{2i}+\ldots+b_Kx_{Ki}\]

where each \(b_k\) estimates its corresponding parameter \(\beta_k\).

An example of snow depths at some high elevation locations in Montana on a day in

April provides a nice motivation for these methods. A random sample of

\(n=25\) Montana locations (from the population of \(N=85\) at the time) were obtained

from the Natural Resources Conversation Service’s website

(http://www.wcc.nrcs.usda.gov/snotel/Montana/montana.html) a few years ago.

Information on the snow depth (Snow.Depth) in inches, daily Minimum and

Maximum Temperatures (Min.Temp and Max.Temp) in \(^\circ F\) and

elevation of the site (Elevation) in feet. A snow science researcher (or

spring back-country skier) might be interested in understanding Snow depth

as a function of Minimum Temperature, Maximum Temperature, and Elevation.

One might assume that colder

and higher places will have more snow, but using just one of the predictor

variables might leave out some important predictive information. The following

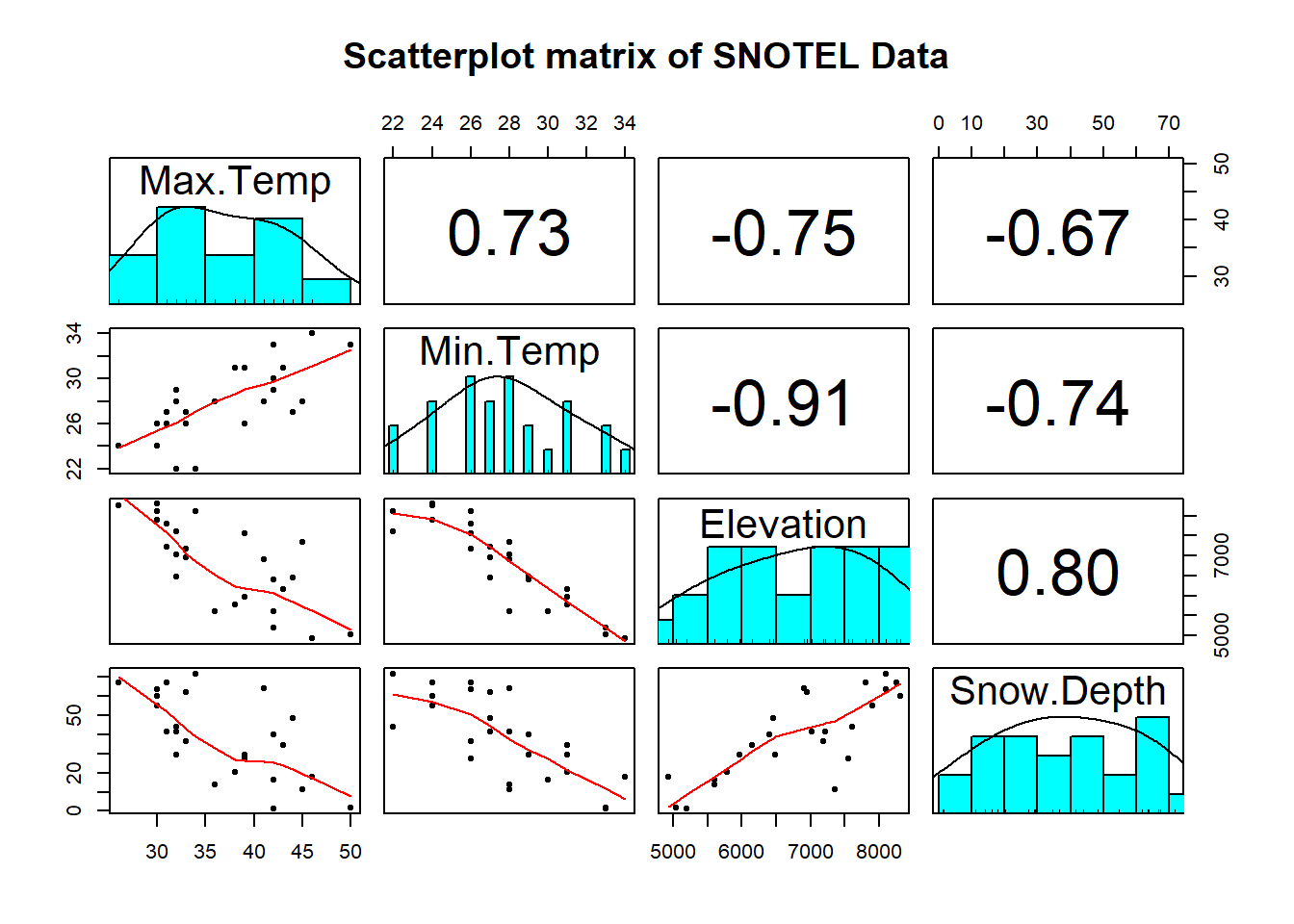

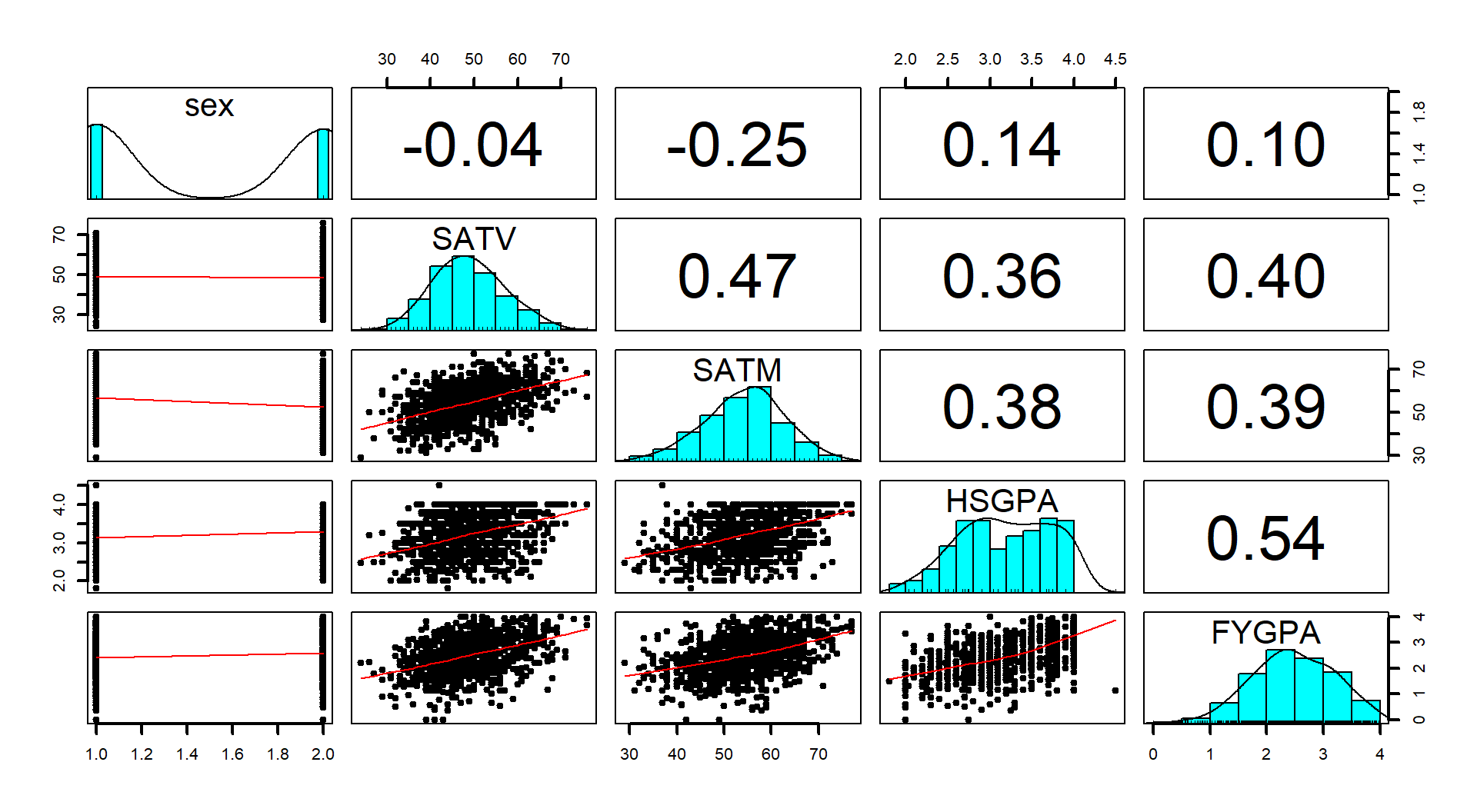

code loads the data set and makes the scatterplot matrix

(Figure 8.1) to allow

some preliminary assessment of the pairwise relationships.

snotel2 <- snotel_s[,c(1:2,4:6,3)] #Reorders columns for nicer pairs.panel display

library(psych)

pairs.panels(snotel2[,-c(1:2)], ellipse=F,

main="Scatterplot matrix of SNOTEL Data")

Figure 8.1: Scatterplot matrix of data from a sample of SNOTEL sites in April on four variables.

It appears that there are many strong linear relationships between the variables, with Elevation and Snow Depth having the largest magnitude, r = 0.80. Higher temperatures seem to be associated with less snow – not a big surprise so far! There might be an outlier at an elevation of 7400 feet and a snow depth below 10 inches that we should explore further.

A new issue arises in attempting to build MLR models called multicollinearity. Again, it is a not surprise that temperature and elevation are correlated but that creates a problem if we try to put them both into a model to explain snow depth. Is it the elevation, temperature, or the combination of both that matters for getting and retaining more snow? Correlation between predictor variables is called multicollinearity and makes estimation and interpretation of MLR models more complicated than in SLR. Section 8.5 deals with this issue directly and discusses methods for detecting its presence. For now, remember that in MLR this issue sometimes makes it difficult to disentangle the impacts of different predictor variables on the response when the predictors share information – when they are correlated.

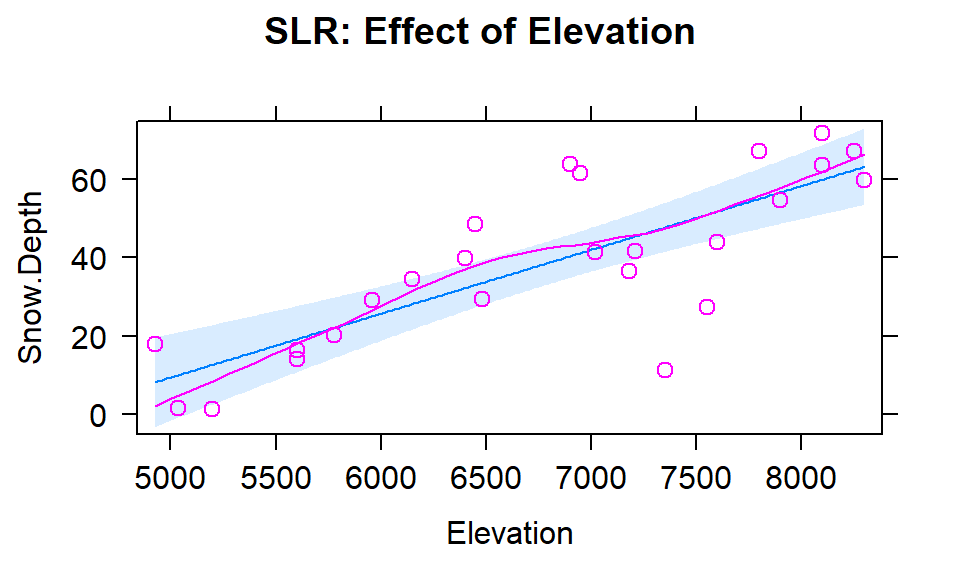

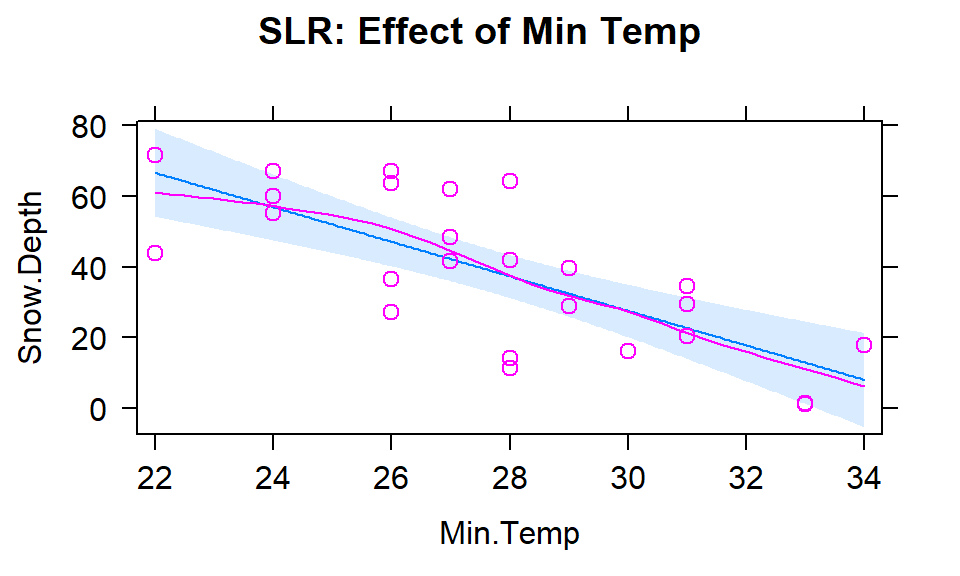

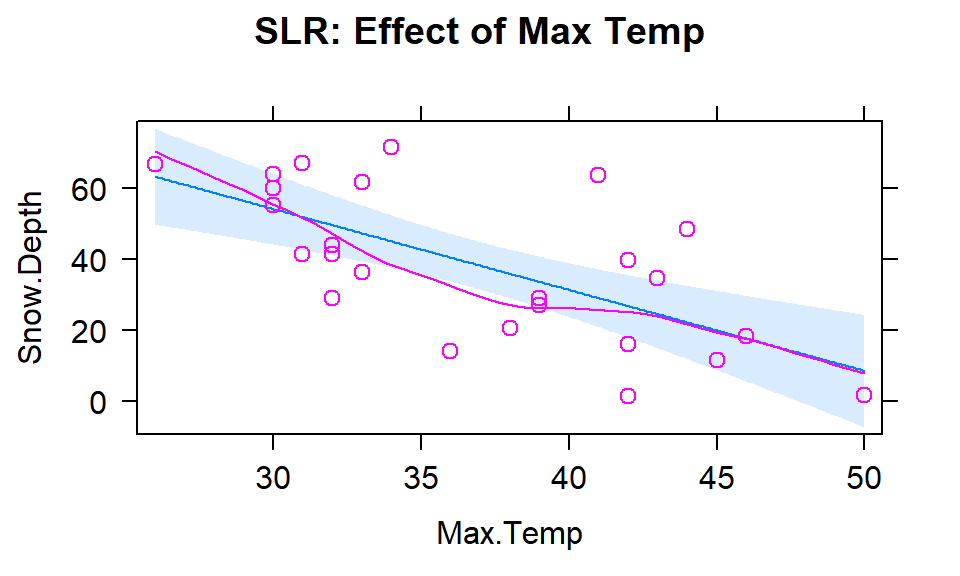

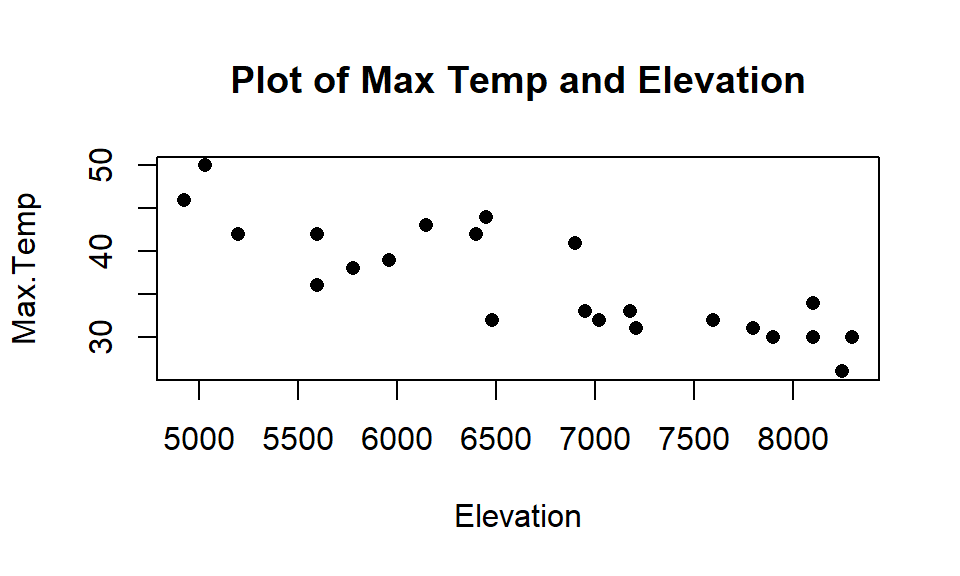

To get familiar with this example, we can start with fitting some potential SLR models and plotting the estimated models. Figure 8.2 contains the result for the SLR using Elevation and results for two temperature based models are in Figures 8.3 and 8.4. Snow Depth is selected as the obvious response variable both due to skier interest and potential scientific causation (snow can’t change elevation but elevation could be the driver of snow deposition and retention).

Figure 8.2: Plot of the estimated SLR model for Snow Depth with Elevation as the predictor along with observations and smoothing line generated by the residuals=T option being specified.

Based on the model summaries provided below, the three estimated SLR models are:

\[\begin{array}{rl} \widehat{\text{SnowDepth}}_i &= -72.006 + 0.0163\cdot\text{Elevation}_i, \\ \widehat{\text{SnowDepth}}_i &= 174.096 - 4.884\cdot\text{MinTemp}_i,\text{ and} \\ \widehat{\text{SnowDepth}}_i &= 122.672 - 2.284\cdot\text{MaxTemp}_i. \end{array}\]

The term-plots of the estimated models reinforce our expected results, showing a positive change in Snow Depth for higher Elevations and negative impacts for increasing temperatures on Snow Depth. These plots are made across the observed range113 of the predictor variable and help us to get a sense of the total impacts of predictors. For example, for elevation in Figure 8.2, the smallest observed value was 4925 feet and the largest was 8300 feet. The regression line goes from estimating a mean snow depth of 8 inches to 63 inches. That gives you some practical idea of the size of the estimated Snow Depth change for the changes in Elevation observed in the data. Putting this together, we can say that there was around a 55 inch change in predicted snow depths for a close to 3400 foot increase in elevation. This helps make the slope coefficient of 0.0163 in the model more easily understood.

Remember that in SLR, the range of \(x\) matters just as much as the units of \(x\) in determining the practical importance and size of the slope coefficient. A value of 0.0163 looks small but is actually at the heart of a pretty interesting model for predicting snow depth. A one foot change of elevation is “tiny” here relative to changes in the response so the slope coefficient can be small and still amount to big changes in the predicted response across the range of values of \(x\). If the Elevation had been recorded in thousands of feet, then the slope would have been estimated to be \(0.0163*1000=16.3\) inches change in mean Snow Depth for a 1000 foot increase in elevation.

The plots of the two estimated temperature models in Figures 8.3 and 8.4 suggest a similar change in the responses over the range of observed temperatures. Those predictors range from 22\(^\circ F\) to 34\(^\circ F\) (minimum temperature) and from 26\(^\circ F\) to 50\(^\circ F\) (maximum temperature). This tells us a 1\(^\circ F\) increase in either temperature is a greater proportion of the observed range of each predictor than a 1 unit (foot) increase in elevation, so the two temperature variables will generate larger apparent magnitudes of slope coefficients. But having large slope coefficients is no guarantee of a good model – in fact, the elevation model has the highest R2 value of these three models even though its slope coefficient looks tiny compared to the other models.

Figure 8.3: Plot of the estimated SLR model using Min Temp as predictor.

Figure 8.4: Plot of the estimated SLR model using Max Temp as predictor.

m1 <- lm(Snow.Depth~Elevation, data=snotel2)

m2 <- lm(Snow.Depth~Min.Temp, data=snotel2)

m3 <- lm(Snow.Depth~Max.Temp, data=snotel2)

library(effects)

plot(allEffects(m1, residuals=T), main="SLR: Effect of Elevation")

plot(allEffects(m2, residuals=T), main="SLR: Effect of Min Temp")

plot(allEffects(m3, residuals=T), main="SLR: Effect of Max Temp")##

## Call:

## lm(formula = Snow.Depth ~ Elevation, data = snotel2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -36.416 -5.135 -1.767 7.645 23.508

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -72.005873 17.712927 -4.065 0.000478

## Elevation 0.016275 0.002579 6.311 1.93e-06

##

## Residual standard error: 13.27 on 23 degrees of freedom

## Multiple R-squared: 0.634, Adjusted R-squared: 0.618

## F-statistic: 39.83 on 1 and 23 DF, p-value: 1.933e-06##

## Call:

## lm(formula = Snow.Depth ~ Min.Temp, data = snotel2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -26.156 -11.238 2.810 9.846 26.444

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 174.0963 25.5628 6.811 6.04e-07

## Min.Temp -4.8836 0.9148 -5.339 2.02e-05

##

## Residual standard error: 14.65 on 23 degrees of freedom

## Multiple R-squared: 0.5534, Adjusted R-squared: 0.534

## F-statistic: 28.5 on 1 and 23 DF, p-value: 2.022e-05##

## Call:

## lm(formula = Snow.Depth ~ Max.Temp, data = snotel2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -26.447 -10.367 -4.394 10.042 34.774

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 122.6723 19.6380 6.247 2.25e-06

## Max.Temp -2.2840 0.5257 -4.345 0.000238

##

## Residual standard error: 16.25 on 23 degrees of freedom

## Multiple R-squared: 0.4508, Adjusted R-squared: 0.4269

## F-statistic: 18.88 on 1 and 23 DF, p-value: 0.0002385Since all three variables look like they are potentially useful in predicting

snow depth, we want to consider if an MLR model might explain more of the

variability in Snow Depth. To fit an MLR model, we use the same general format

as in previous topics but with adding “+” between any additional

predictors114 we want to add to the model,

y~x1+x2+...+xk:

##

## Call:

## lm(formula = Snow.Depth ~ Elevation + Min.Temp + Max.Temp, data = snotel2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -29.508 -7.679 -3.139 9.627 26.394

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -10.506529 99.616286 -0.105 0.9170

## Elevation 0.012332 0.006536 1.887 0.0731

## Min.Temp -0.504970 2.042614 -0.247 0.8071

## Max.Temp -0.561892 0.673219 -0.835 0.4133

##

## Residual standard error: 13.6 on 21 degrees of freedom

## Multiple R-squared: 0.6485, Adjusted R-squared: 0.5983

## F-statistic: 12.91 on 3 and 21 DF, p-value: 5.328e-05

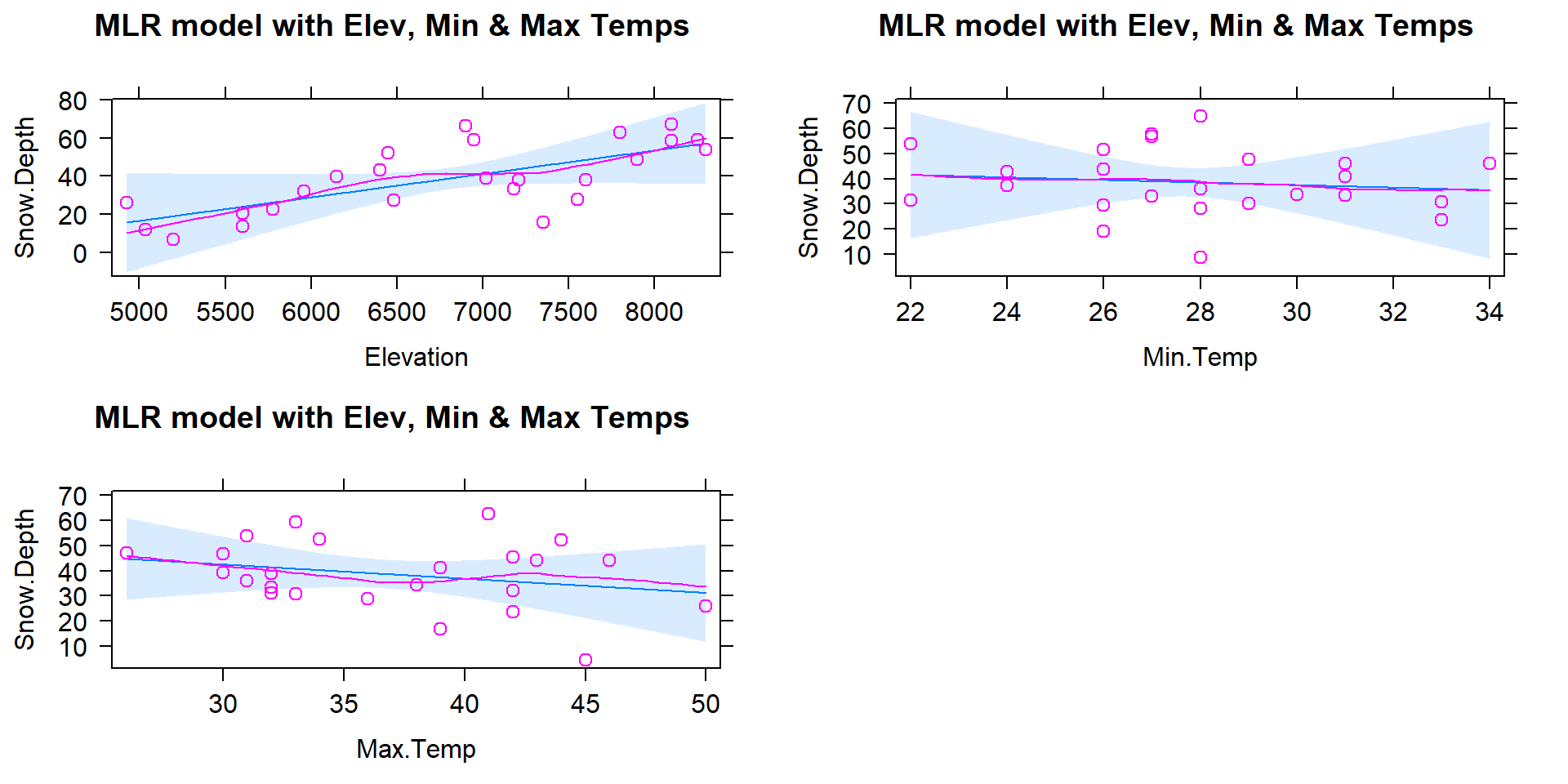

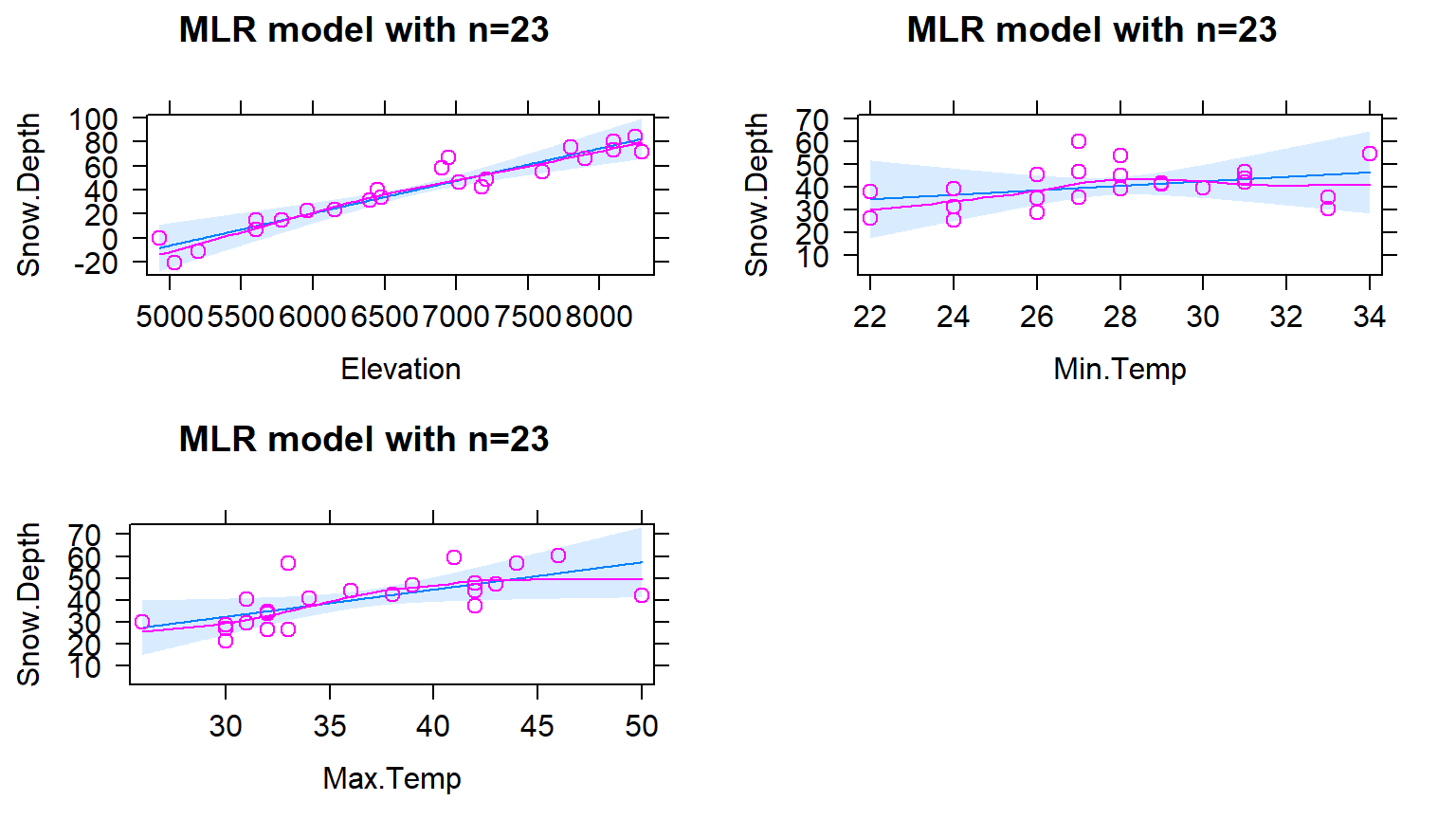

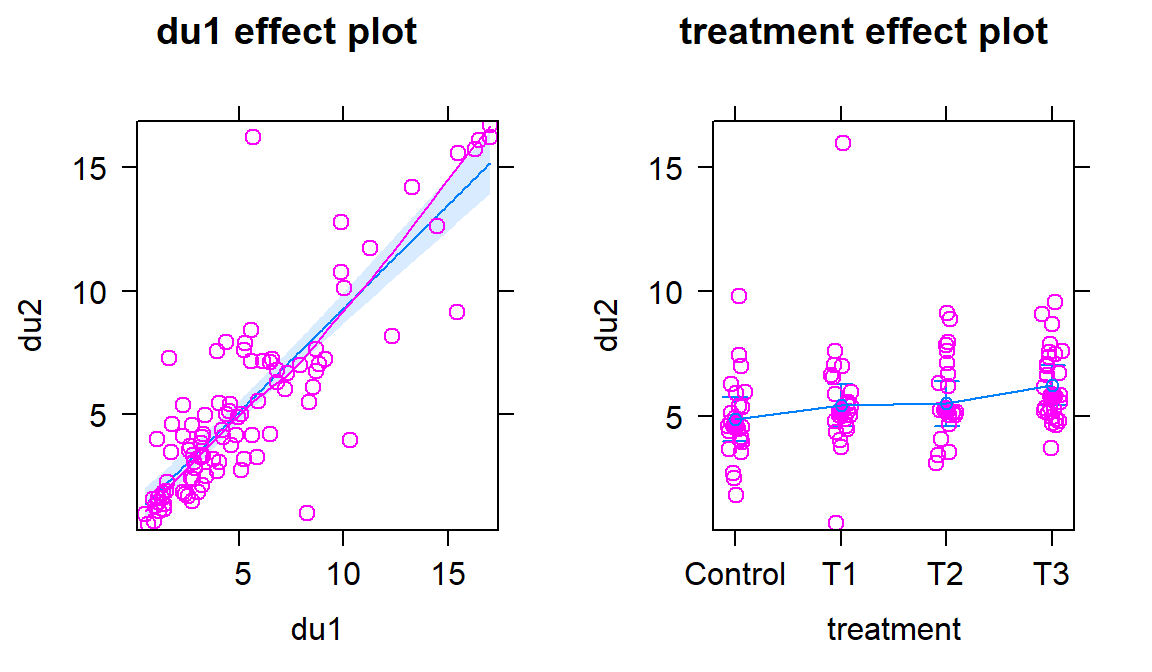

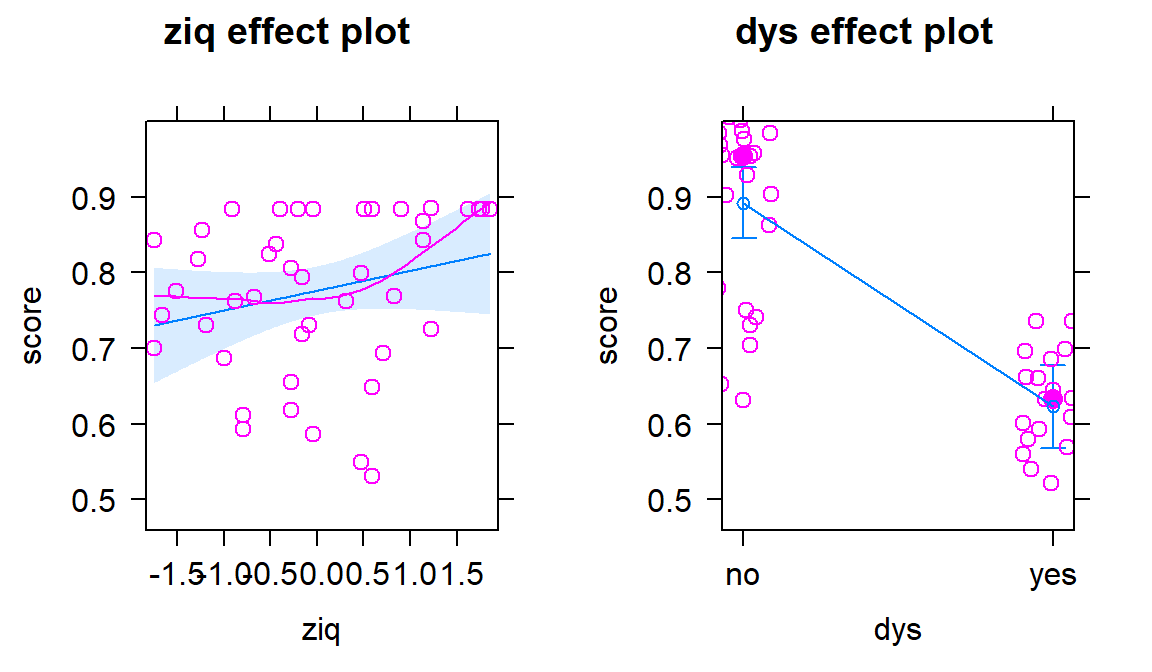

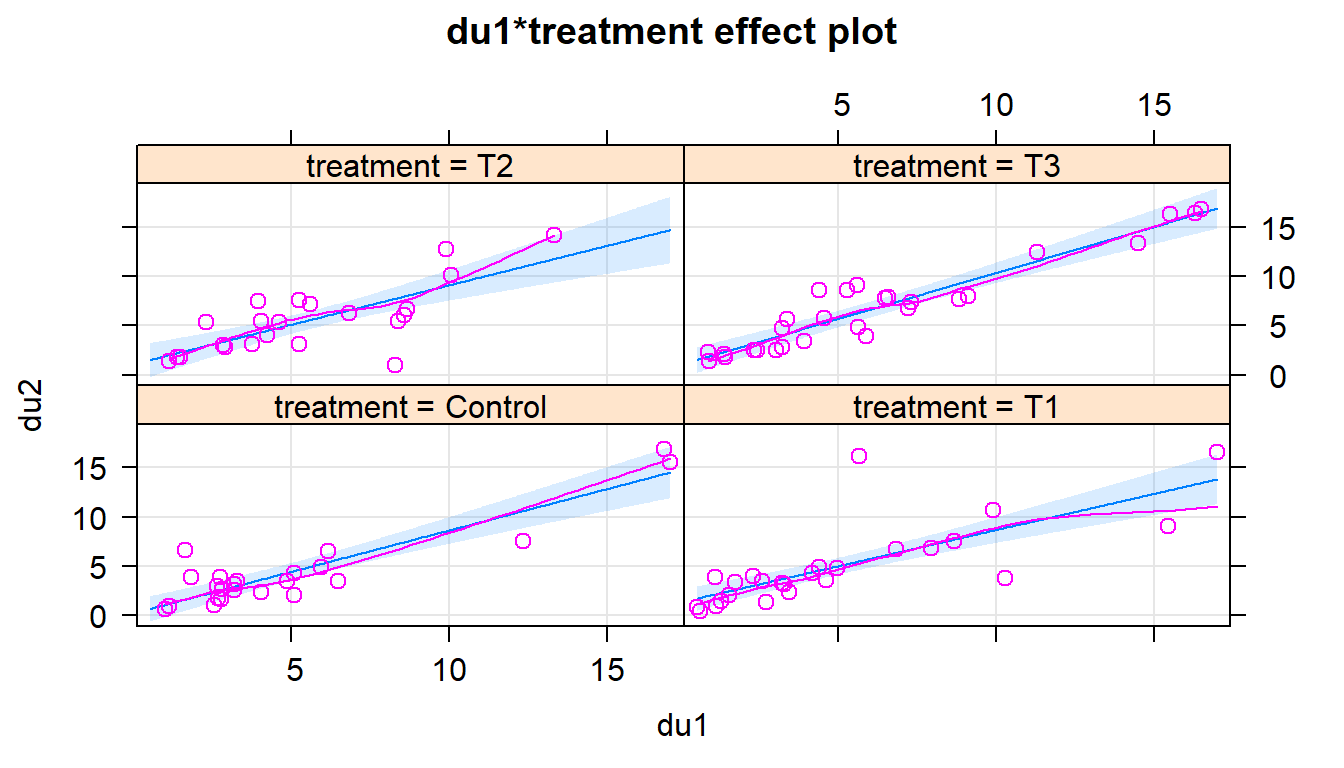

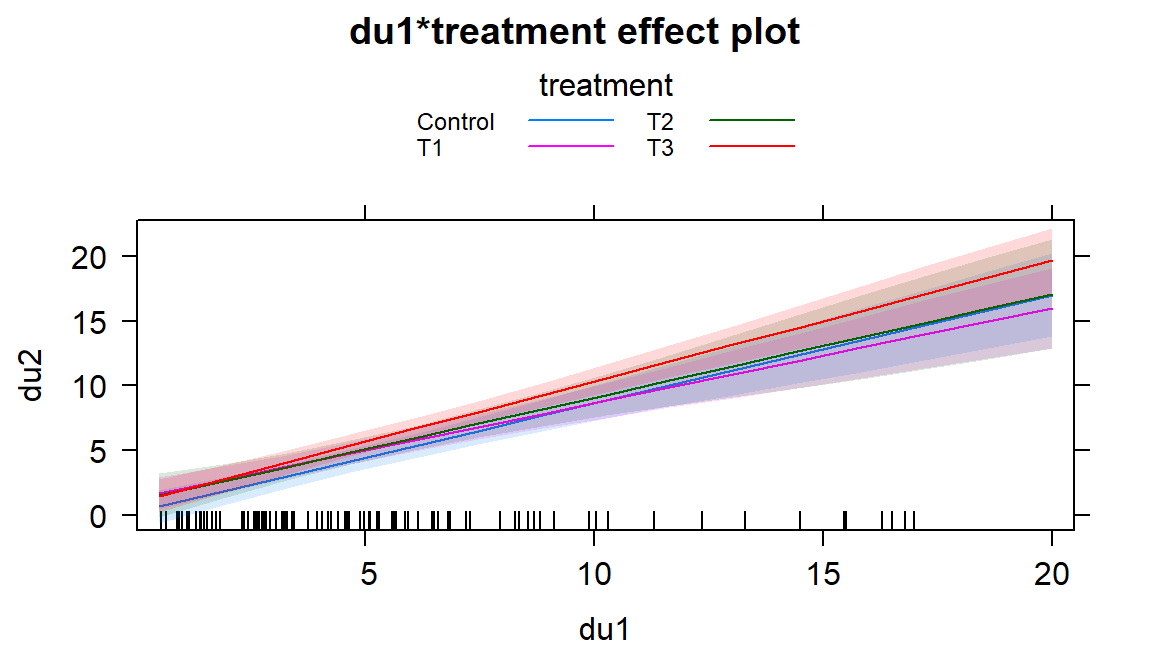

Figure 8.5: Term-plots for the MLR for Snow Depth based on Elevation, Min Temp and Max Temp. Compare this plot that comes from one MLR model to Figures 8.2, 8.3, and 8.4 for comparable SLR models. Note the points in these panels are the partial residuals that are generated after controlling for the other two of the three variables.

Based on the output, the estimated MLR model is

\[\widehat{\text{SnowDepth}}_i = -10.51 + 0.0123\cdot\text{Elevation}_i -0.505\cdot\text{MinTemp}_i - 0.562\cdot\text{MaxTemp}_i\ .\]

The direction of the estimated slope coefficients were similar but they all changed in magnitude as compared to the respective SLRs, as seen in the estimated term-plots from the MLR model in Figure 8.5.

There are two ways to think about the changes from individual SLR slope coefficients to the similar MLR results here.

Each term in the MLR is the result for estimating each slope after controlling for the other two variables (and we will always use this interpretation any time we interpret MLR effects). For the Elevation slope, we would say that the slope coefficient is “corrected for” or “adjusted for” the variability that is explained by the temperature variables in the model.

Because of multicollinearity in the predictors, the variables might share information that is useful for explaining the variability in the response variable, so the slope coefficients of each predictor get perturbed because the model cannot separate their effects on the response. This issue disappears when the predictors are uncorrelated or even just minimally correlated.

There are some ramifications of multicollinearity in MLR:

Adding variables to a model might lead to almost no improvement in the overall variability explained by the model.

Adding variables to a model can cause slope coefficients to change signs as well as magnitudes.

Adding variables to a model can lead to inflated standard errors for some or all of the coefficients (this is less obvious but is related to the shared information in predictors making it less clear what slope coefficient to use for each variable, so more uncertainty in their estimation).

In extreme cases of multicollinearity, it may even be impossible to obtain some or any coefficient estimates.

These seem like pretty serious issues and they are but there are many, many situations where we proceed with MLR even in the presence of potentially correlated predictors. It is likely that you have heard or read about inferences from models that are dealing with this issue – for example, medical studies often report the increased risk of death from some behavior or trait after controlling for gender, age, health status, etc. In many research articles, it is becoming common practice to report the slope for a variable that is of most interest with it in the model alone (SLR) and in models after adjusting for the other variables that are expected to matter. The “adjusted for other variables” results are built with MLR or related multiple-predictor models like MLR.

8.2 Validity conditions in MLR

But before we get too excited about any results, we should always assess our validity conditions. For MLR, they are similar to those for SLR:

Quantitative variables condition:

Independence of observations:

This assumption is about the responses – we must assume that they were collected in a fashion so that they can be assumed to be independent. This implies that we also have independent random errors.

This is not an assumption about the predictor variables!

Linearity of relationship (NEW VERSION FOR MLR!):

Linearity is assumed between the response variable and each explanatory variable (\(y\) and each \(x\)).

We can check this three ways:

Make plots of the response versus each explanatory variable:

- Only visual evidence of a curving relationship is a problem here. Transformations of individual explanatory variables or the response are possible. It is possible to not find a problem in this plot that becomes more obvious when we account for variability that is explained by other variables in the partial residuals.

Examine the Residuals vs Fitted plot:

- When using MLR, curves in the residuals vs. fitted values suggest a missed curving relationship with at least one predictor variable, but it will not be specific as to which one is non-linear. Revisit the scatterplots to identify the source of the issue.

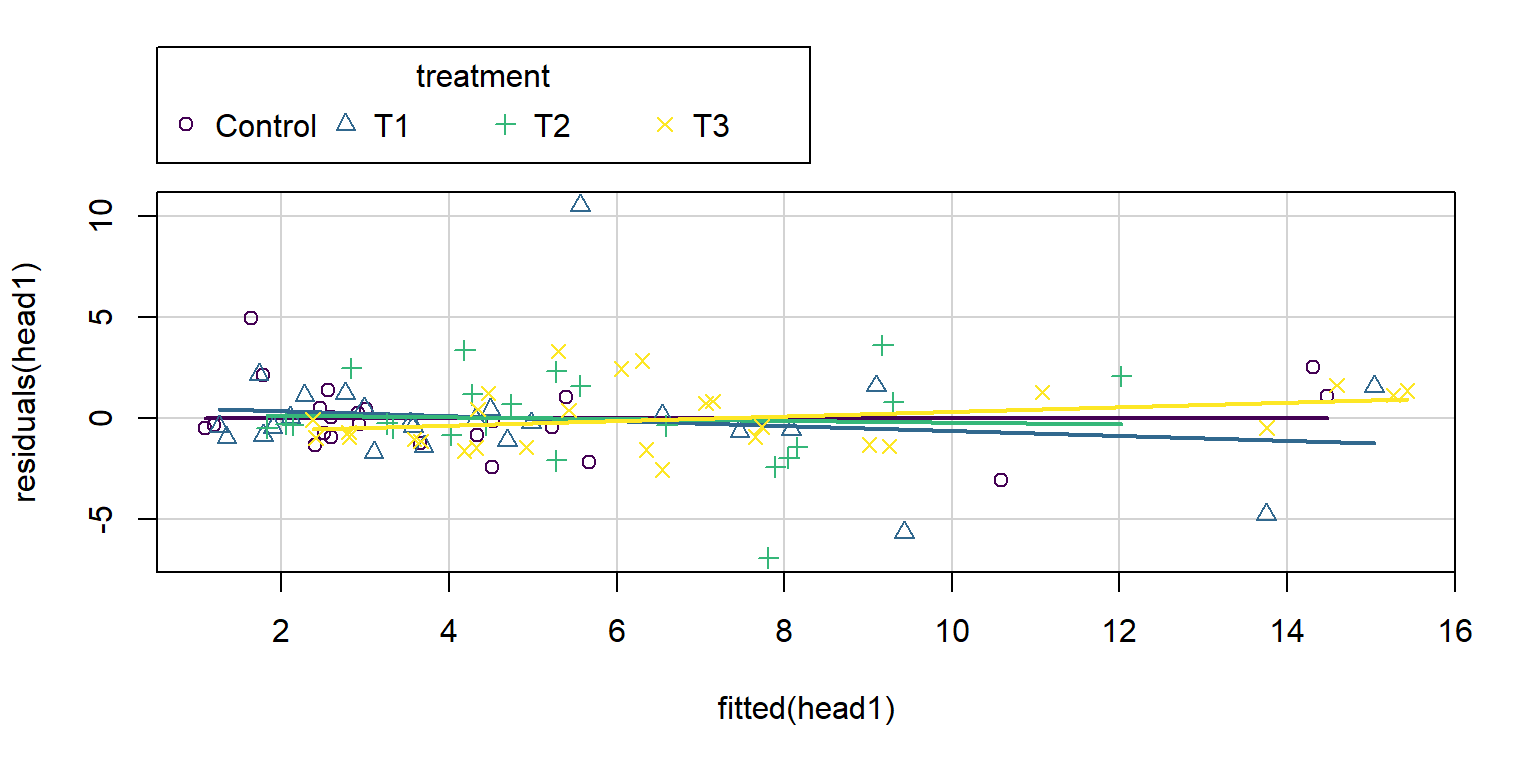

Examine partial residuals and smoothing line in term-plots.

- Turning on the

residuals=Toption in the effects plot allows direct assessment of residuals vs each predictor after accounting for others. Look for clear patterns in the partial residuals115 that the smoothing line is also following for potential issues with the linearity assumption.

- Turning on the

Multicollinearity effects checked for:

Issues here do not mean we cannot proceed with a given model, but it can impact our ability to trust and interpret the estimated terms. Extreme issues might require removing some highly correlated variables prior to really focusing on a model.

Check a scatterplot or correlation matrix to assess the potential for shared information in different predictor variables.

Use the diagnostic measure called a variance inflation factor (VIF) discussed in Section 8.5 (we need to develop some ideas first to understand this measure).

Equal (constant) variance:

- Same as before since it pertains to the residuals.

Normality of residuals:

- Same as before since it pertains to the residuals.

No influential points:

Leverage is now determined by how unusual a point is for multiple explanatory variables.

The leverage values in the Residuals vs Leverage plot are scaled to add up to the degrees of freedom (df) used for the model which is the number of explanatory variables (\(K\)) plus 1, so \(K+1\).

The scale of leverages depends on the complexity of the model through the df and the sample size.

The interpretation is still that the larger the leverage value, the more leverage the point has.

The mean leverage is always (model used df)/n = (K+1)/n – so focus on the values with above average leverage.

- For example, with \(K=3\) and \(n=20\), the average leverage is \(4/20=1/5\).

High leverage points whose response does not follow the pattern defined by the other observations (now based on patterns for multiple \(x\text{'s}\) with the response) will be influential.

Use the Residual’s vs Leverage plot to identify problematic points. Explore further with Cook’s D continuing to provide a measure of the influence of each observation.

- The rules and interpretations for Cook’s D are the same as in SLR (over 0.5 is possibly influential and over 1 is definitely influential).

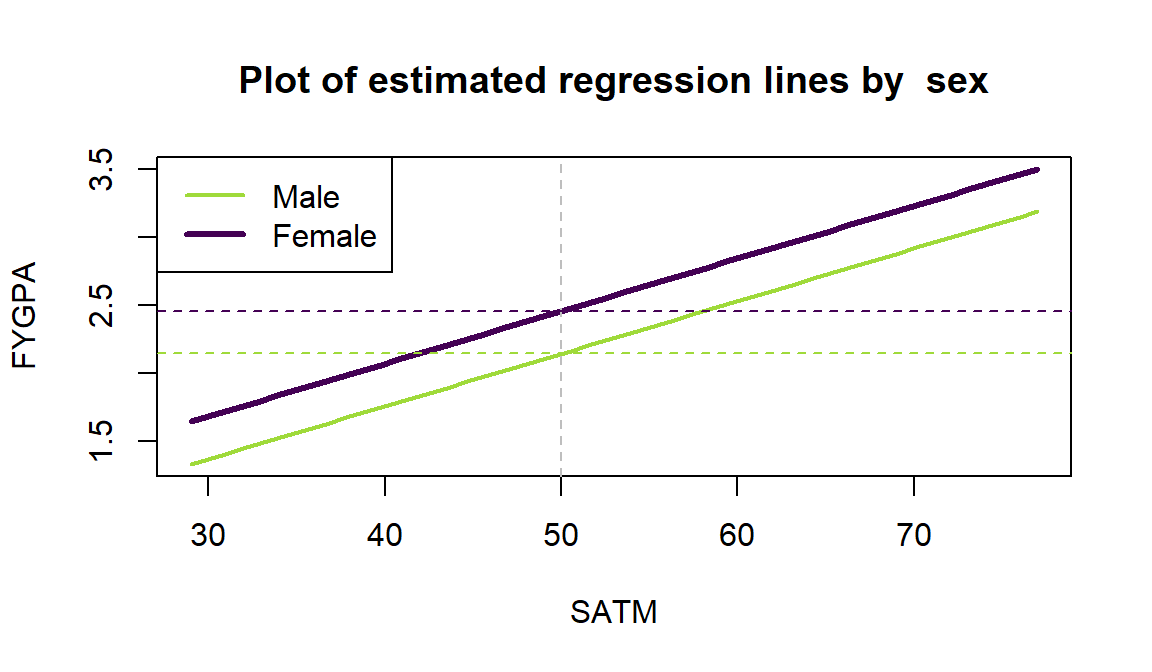

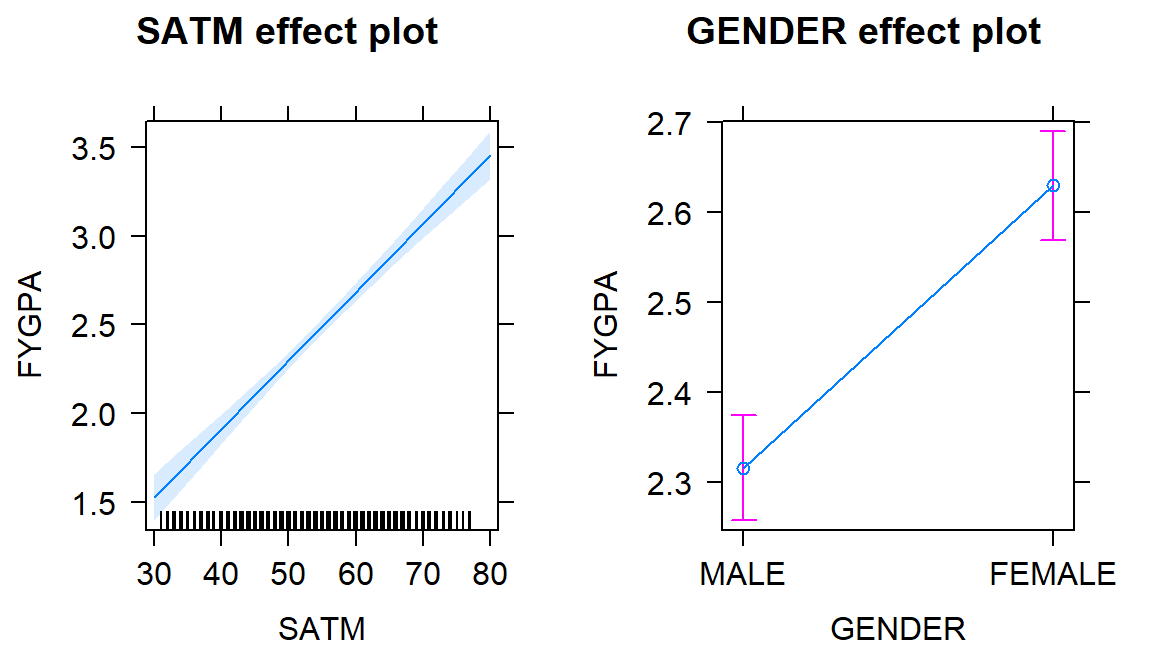

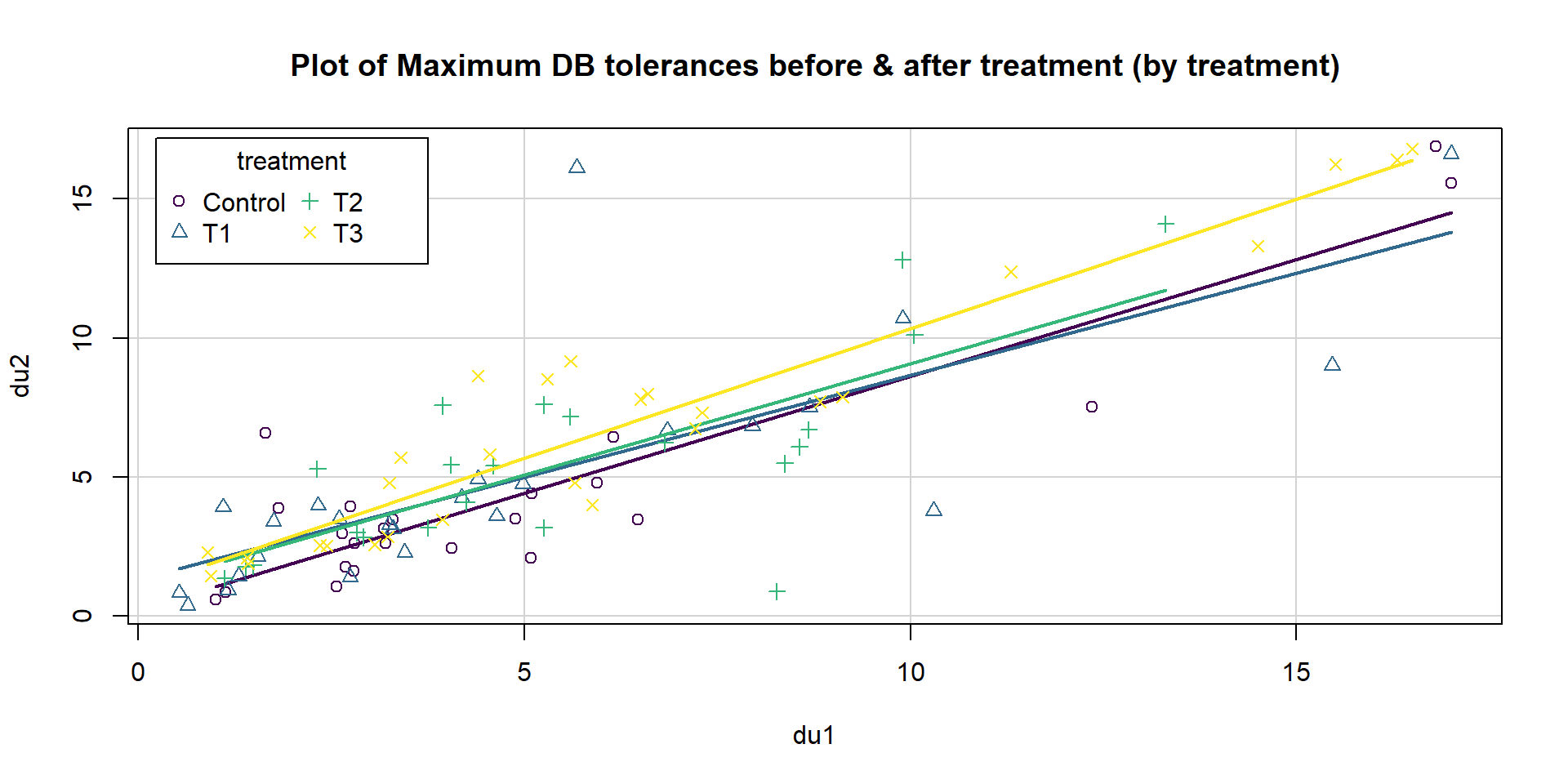

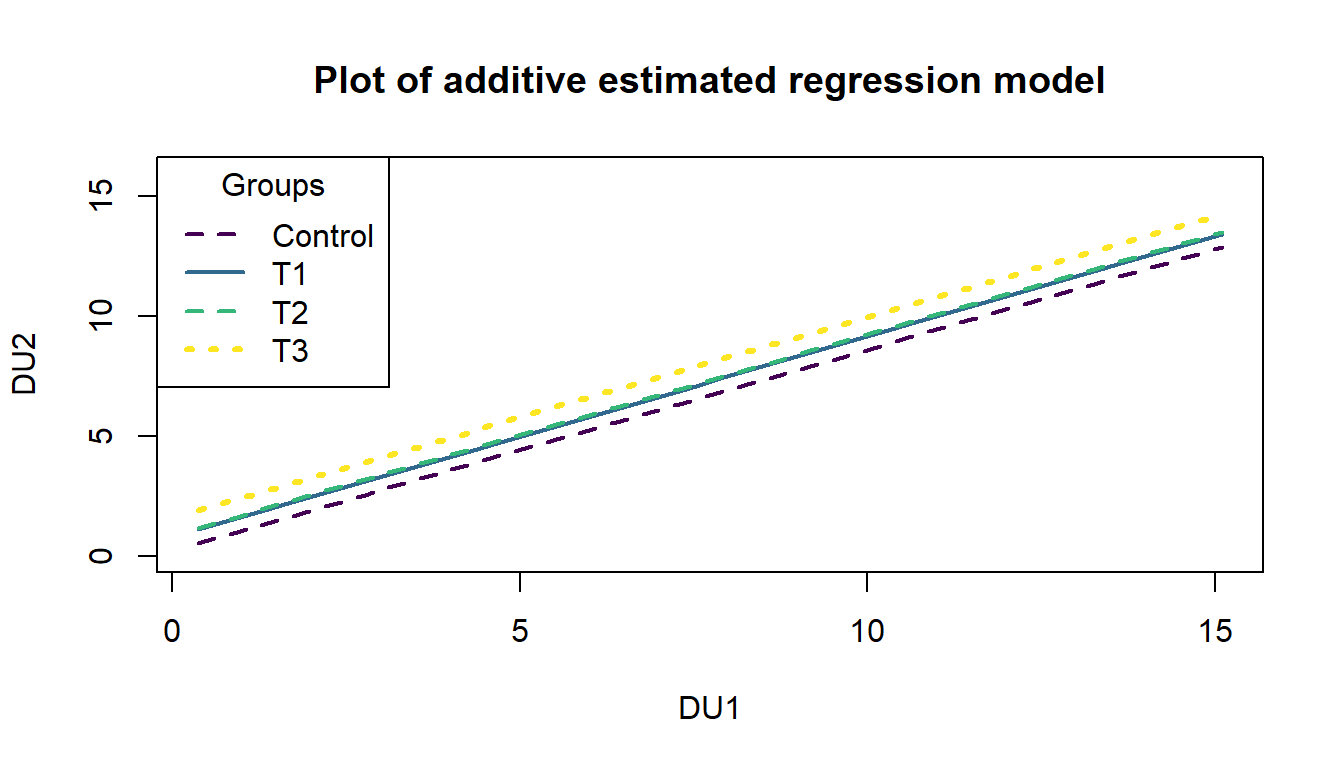

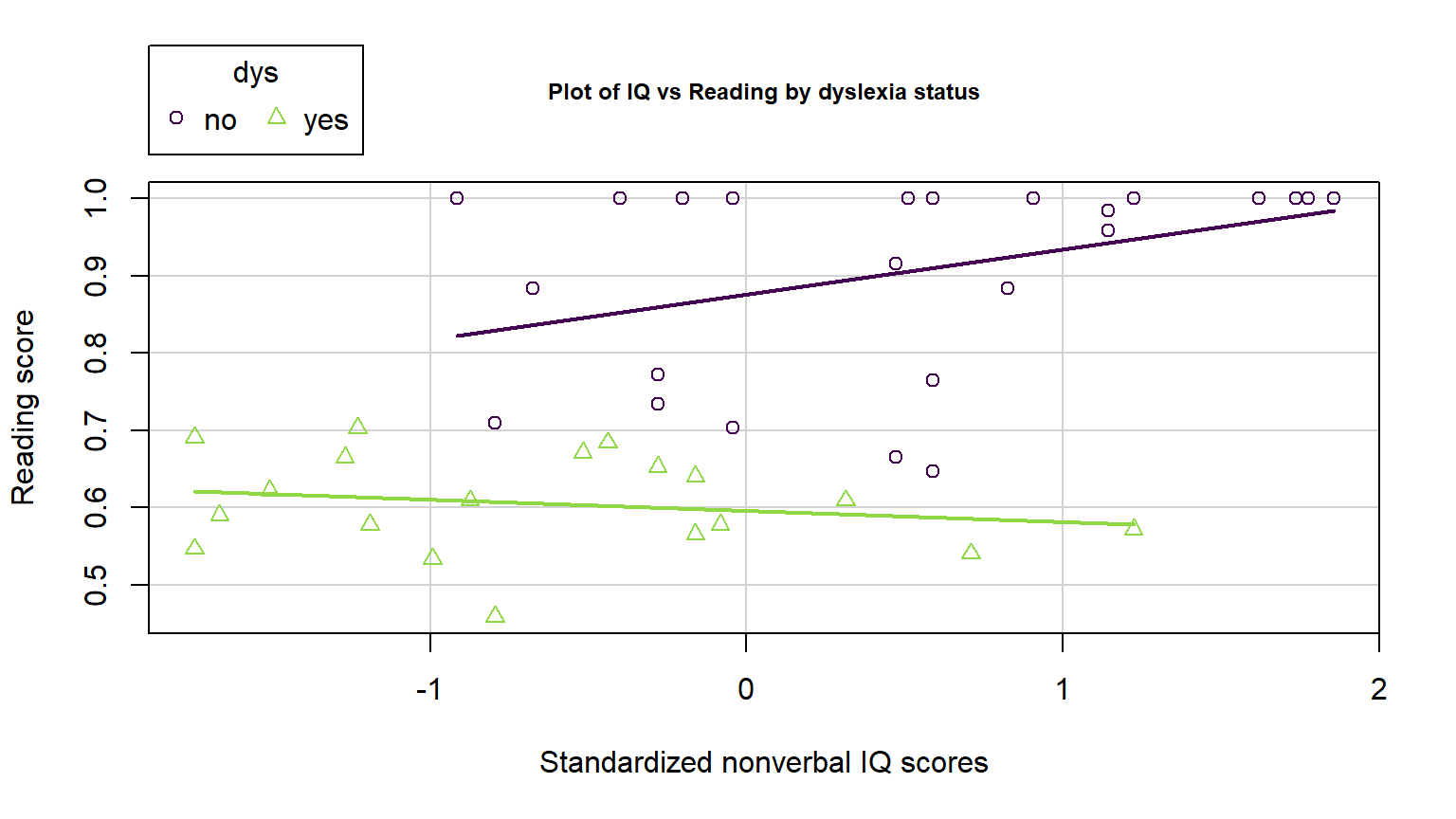

While not a condition for use of the methods, a note about random assignment and random sampling is useful here in considering the scope of inference of any results. To make inferences about a population, we need to have a representative sample. If we have randomly assigned levels of treatment variables(s), then we can make causal inferences to subjects like those that we could have observed. And if we both have a representative sample and randomization, we can make causal inferences for the population. It is possible to randomly assign levels of variable(s) to subjects and still collect additional information from other explanatory (sometimes called control) variables. The causal interpretations would only be associated with the explanatory variables that were randomly assigned even though the model might contain other variables. Their interpretation still involves noting all the variables included in the model, as demonstrated below. It is even possible to include interactions between randomly assigned variables and other variables – like drug dosage and sex of the subjects. In these cases, causal inference could apply to the treatment levels but noting that the impacts differ based on the non-randomly assigned variable.

For the Snow Depth data, the conditions can be assessed as:

Quantitative variables condition:

- These are all clearly quantitative variables.

Independence of observations:

- The observations are based on a random sample of sites from the population and the sites are spread around the mountains in Montana. Many people would find it to be reasonable to assume that the sites are independent of one another but others would be worried that sites closer together in space might be more similar than they are to far-away observations (this is called spatial correlation). I have been in a heated discussion with statistics colleagues about whether spatial dependency should be considered or if it is valid to ignore it in this sort of situation. It is certainly possible to be concerned about independence of observations here but it takes more advanced statistical methods to actually assess whether there is spatial dependency in these data. Even if you were going to pursue models that incorporate spatial correlations, the first task would be to fit this sort of model and then explore the results. When data are collected across space, you should note that there might be some sort of spatial dependency that could violate the independence assumption.

To assess the remaining assumptions, we can use our diagnostic plots.

The same code

as before will provide diagnostic plots. There is some extra code

(par(...)) added to allow us to add labels to the plots (sub.caption="...") to know which model

is being displayed since we have so many to discuss here. We can also employ a new approach, which is to simulate new observations from the model and make plots to compare simulated data sets to what was observed. The simulate function from Chapter 2 can be used to generate new observations from the model based on the estimated coefficients and where we know that the assumptions are true. If the simulated data and the observed data are very different, then the model is likely dangerous to use for inferences because of this mis-match. This method can be used to assess the linearity, constant variance, normality of residuals, and influential points aspects of the model. It is not something used in every situation, but is especially helpful if you are struggling to decide if what you are seeing in the diagnostics is just random variability or is really a clear issue. The regular steps in assessing each assumption are discussed first.

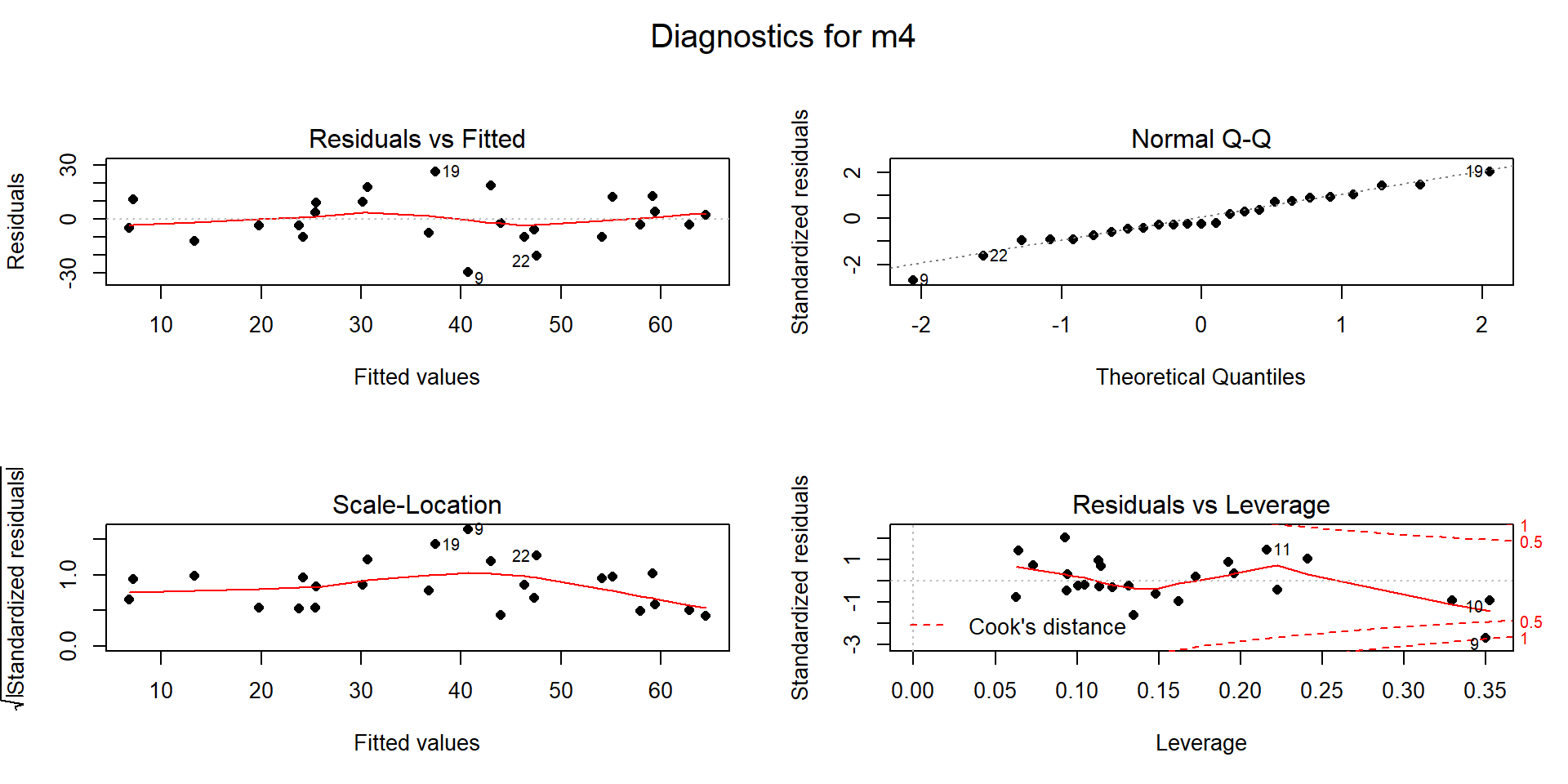

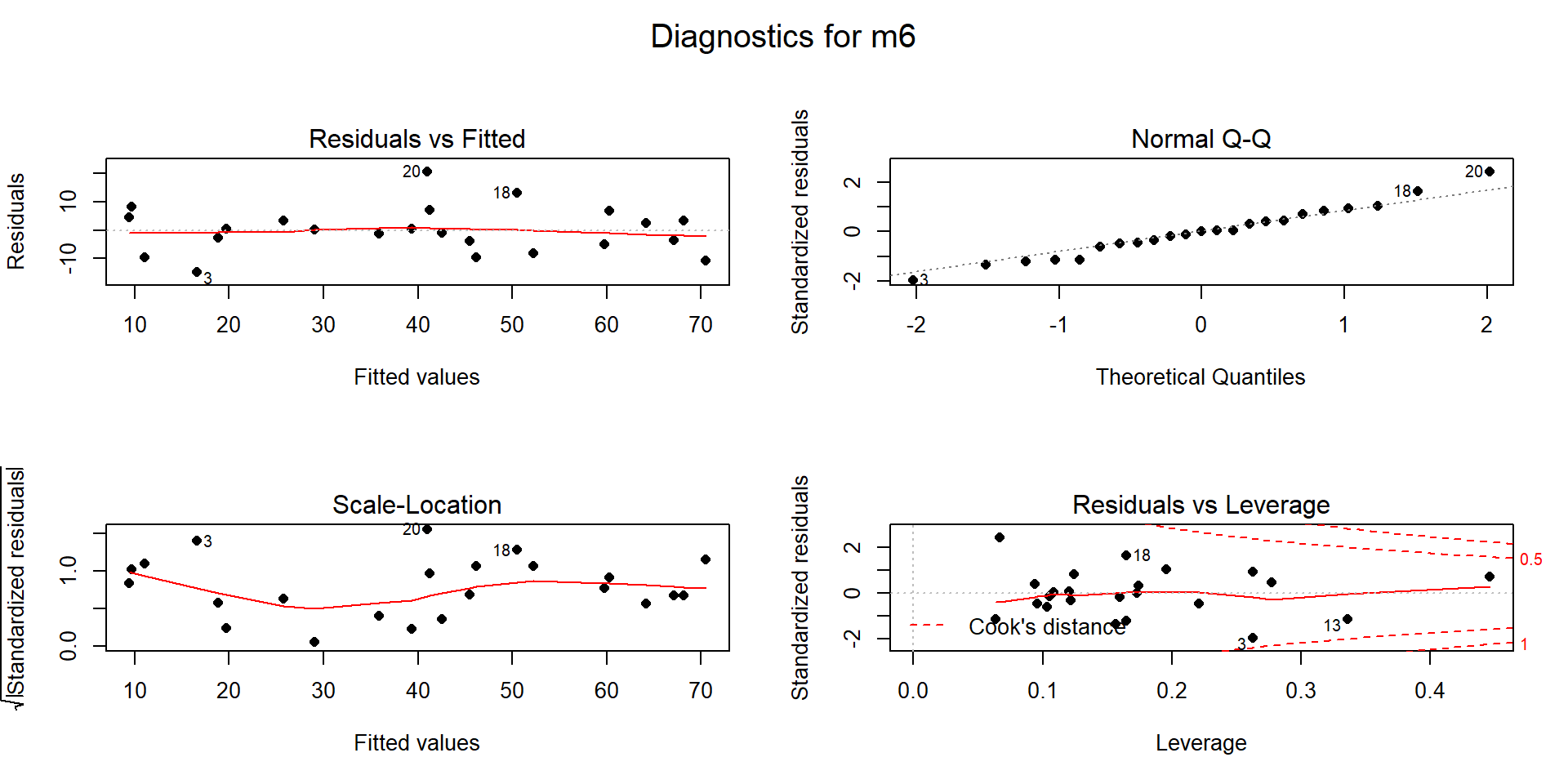

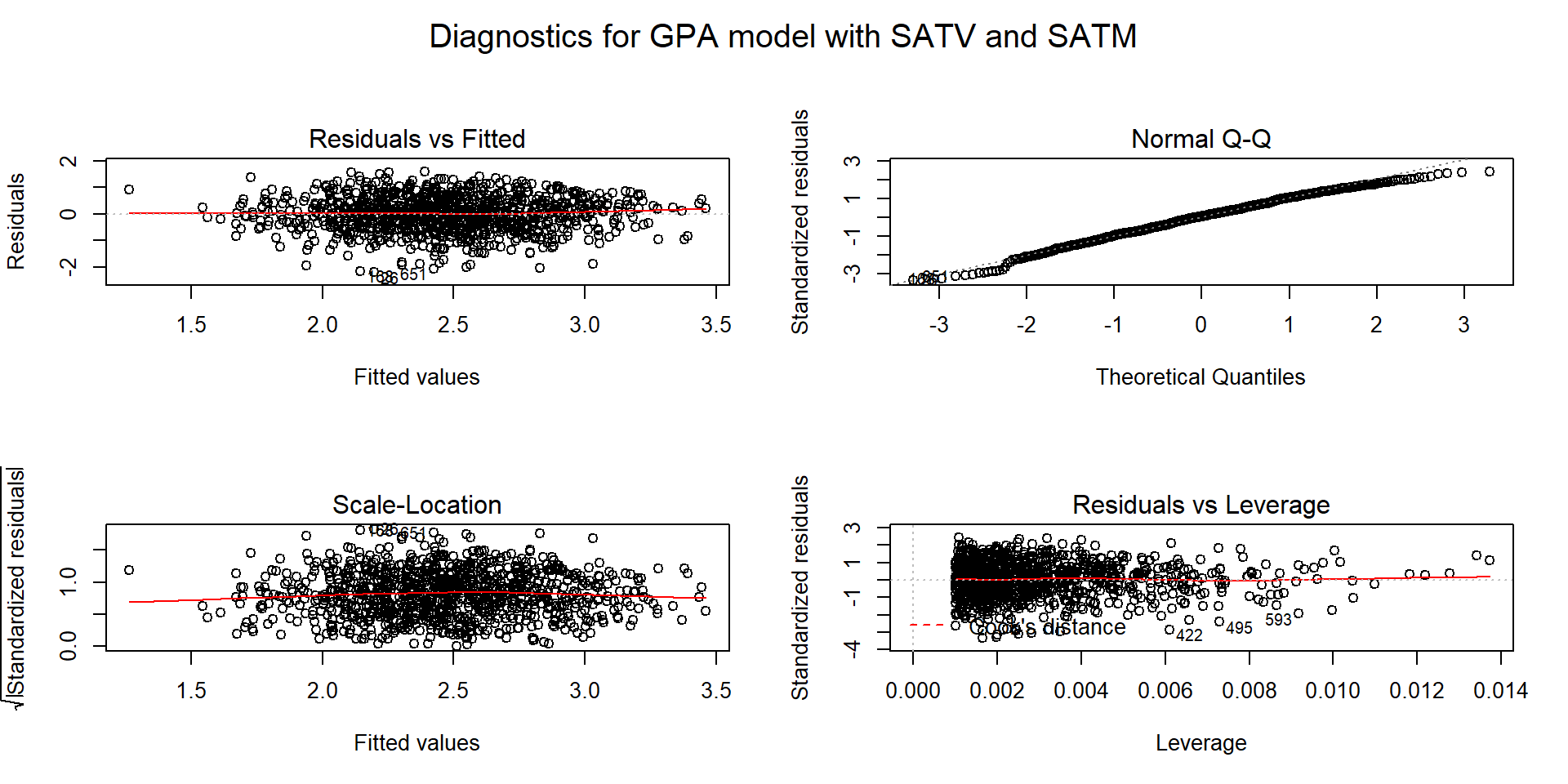

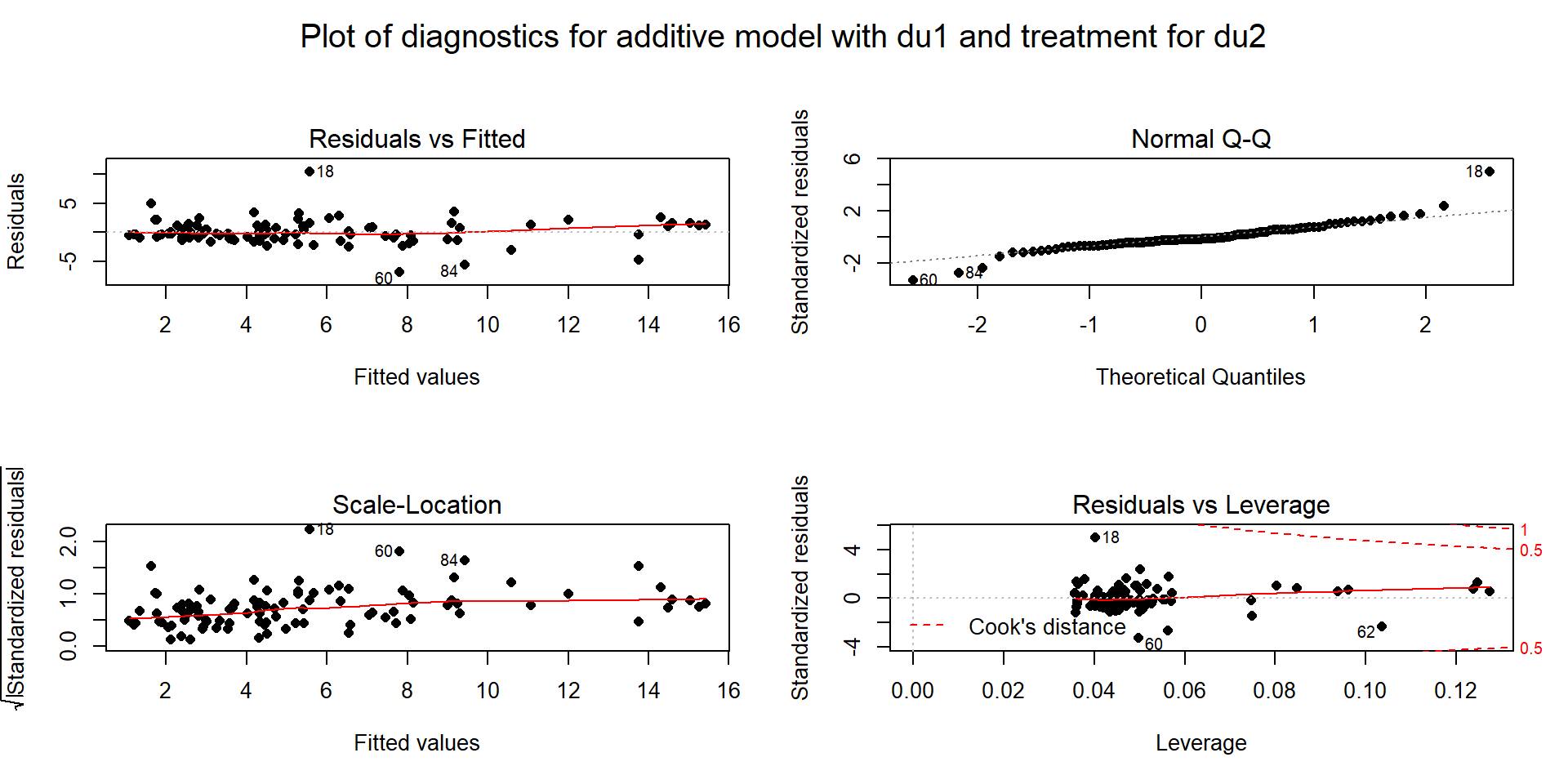

Figure 8.6: Diagnostic plots for model m4: \(\text{Snow.Depth}\sim \text{Elevation} + \text{Min.Temp} + \text{Max.Temp}\).

Linearity of relationship (NEW VERSION FOR MLR!):

Make plots of the response versus each explanatory variable:

- In Figure 8.1, the plots of each variable versus snow depth do not clearly show any nonlinearity except for a little dip around 7000 feet in the plot vs Elevation.

Examine the Residuals vs Fitted plot in Figure 8.6:

- Generally, there is no clear curvature in the Residuals vs Fitted panel and that would be an acceptable answer. However, there is some pattern in the smoothing line that could suggest a more complicated relationship between at least one predictor and the response. This also resembles the pattern in the Elevation vs. Snow depth panel in Figure 8.1 so that might be the source of this “problem”. This suggests that there is the potential to do a little bit better but that it is not unreasonable to proceed on with the MLR, ignoring this little wiggle in the diagnostic plot.

Examine partial residuals as seen in Figure 8.5:

- In the term-plot for elevation from this model, there is a slight pattern in the partial residuals between 6,500 and 7,500 feet. This was also apparent in the original plot and suggests a slight nonlinearity in the pattern of responses versus this explanatory variable.

Multicollinearity effects checked for:

The predictors certainly share information in this application (correlations between -0.67 and -0.91) and multicollinearity looks to be a major concern in being able to understand/separate the impacts of temperatures and elevations on snow depths.

See Section 8.5 for more on this issue in these data.

Equal (constant) variance:

- While there is a little bit more variability in the middle of the fitted values, this is more an artifact of having a smaller data set with a couple of moderate outliers that fell in the same range of fitted values and maybe a little bit of missed curvature. So there is not too much of an issue with this condition.

Normality of residuals:

- The residuals match the normal distribution fairly closely the QQ-plot, showing only a little deviation for observation 9 from a normal distribution and that deviation is extremely minor. There is certainly no indication of a violation of the normality assumption here.

No influential points:

With \(K=3\) predictors and \(n=25\) observations, the average leverage is \(4/25=0.16\). This gives us a scale to interpret the leverage values on the \(x\)-axis of the lower right panel of our diagnostic plots.

There are three higher leverage points (leverages over 0.3) with only one being influential (point 9) with Cook’s D close to 1.

- Note that point 10 had the same leverage but was not influential with Cook’s D less than 0.5.

We can explore both of these points to see how two observations can have the same leverage and different amounts of influence.

The two flagged points, observations 9 and 10 in the data set, are for the

sites “Northeast Entrance” (to Yellowstone) and “Combination”. We can use the

MLR equation to do some prediction for each observation and calculate residuals

to see how far the model’s predictions are from the actual observed values for

these sites. For the Northeast Entrance, the Max.Temp was 45, the Min.Temp

was 28, and the Elevation was 7350 as you can see in

this printout of just the two rows of the data set available by referencing

rows 9 and 10 in the bracket from snotel2:

## # A tibble: 2 x 6

## ID Station Max.Temp Min.Temp Elevation Snow.Depth

## <dbl> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 18 Northeast Entrance 45 28 7350 11.2

## 2 53 Combination 36 28 5600 14The estimated Snow Depth for the Northeast Entrance site (observation 9) is found using the estimated model with

\[\begin{array}{rl} \widehat{\text{SnowDepth}}_9 &= -10.51 + 0.0123\cdot\text{Elevation}_9 - 0.505\cdot\text{MinTemp}_9 - 0.562\cdot\text{MaxTemp}_9 \\ & = -10.51 + 0.0123*\boldsymbol{7350} -0.505*\boldsymbol{28} - 0.562*\boldsymbol{45} \\ & = 40.465 \text{ inches,} \end{array}\]

but the observed snow depth was actually \(y_9=11.2\) inches. The observed residual is then

\[e_9=y_9-\widehat{y}_9 = 11.2-40.465 = -29.265 \text{ inches.}\]

So the model “misses” the snow depth by over 29 inches with the model suggesting over 40 inches of snow but only 11 inches actually being present116.

## [1] 40.465## [1] -29.265This point is being rated as influential (Cook’s D \(\approx\) 1) with a leverage of nearly 0.35 and a standardized residual (\(y\)-axis of Residuals vs. Leverage plot) of nearly -3. This suggests that even with this observation impacting/distorting the slope coefficients (that is what influence means), the model is still doing really poorly at fitting this observation. We’ll drop it and re-fit the model in a second to see how the slopes change. First, let’s compare that result to what happened for data point 10 (“Combination”) which was just as high leverage but not identified as influential.

The estimated snow depth for the Combination site is

\[\begin{array}{rl} \widehat{\text{SnowDepth}}_{10} &= -10.51 + 0.0123\cdot\text{Elevation}_{10} - 0.505\cdot\text{MinTemp}_{10} - 0.562\cdot\text{MaxTemp}_{10} \\ & = -10.51 + 0.0123*\boldsymbol{5600} -0.505*\boldsymbol{28} - 0.562*\boldsymbol{36} \\ & = 23.998 \text{ inches.} \end{array}\]

The observed snow depth here was \(y_{10} = 14.0\) inches so the observed residual is then

\[e_{10}=y_{10}-\widehat{y}_{10} = 14.0-23.998 = -9.998 \text{ inches.}\]

This results in a standardized residual of around -1. This is still a “miss” but not as glaring as the previous result and also is not having a major impact on the model’s estimated slope coefficients based on the small Cook’s D value.

## [1] 23.998## [1] -9.998Note that any predictions using this model presume that it is

trustworthy, but

the large Cook’s D on one observation suggests we should consider the model

after removing that observation. We can re-run the model without the

9th observation using the data set snotel2[-9,].

##

## Call:

## lm(formula = Snow.Depth ~ Elevation + Min.Temp + Max.Temp, data = snotel2[-9,

## ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -29.2918 -4.9757 -0.9146 5.4292 20.4260

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.424e+02 9.210e+01 -1.546 0.13773

## Elevation 2.141e-02 6.101e-03 3.509 0.00221

## Min.Temp 6.722e-01 1.733e+00 0.388 0.70217

## Max.Temp 5.078e-01 6.486e-01 0.783 0.44283

##

## Residual standard error: 11.29 on 20 degrees of freedom

## Multiple R-squared: 0.7522, Adjusted R-squared: 0.715

## F-statistic: 20.24 on 3 and 20 DF, p-value: 2.843e-06

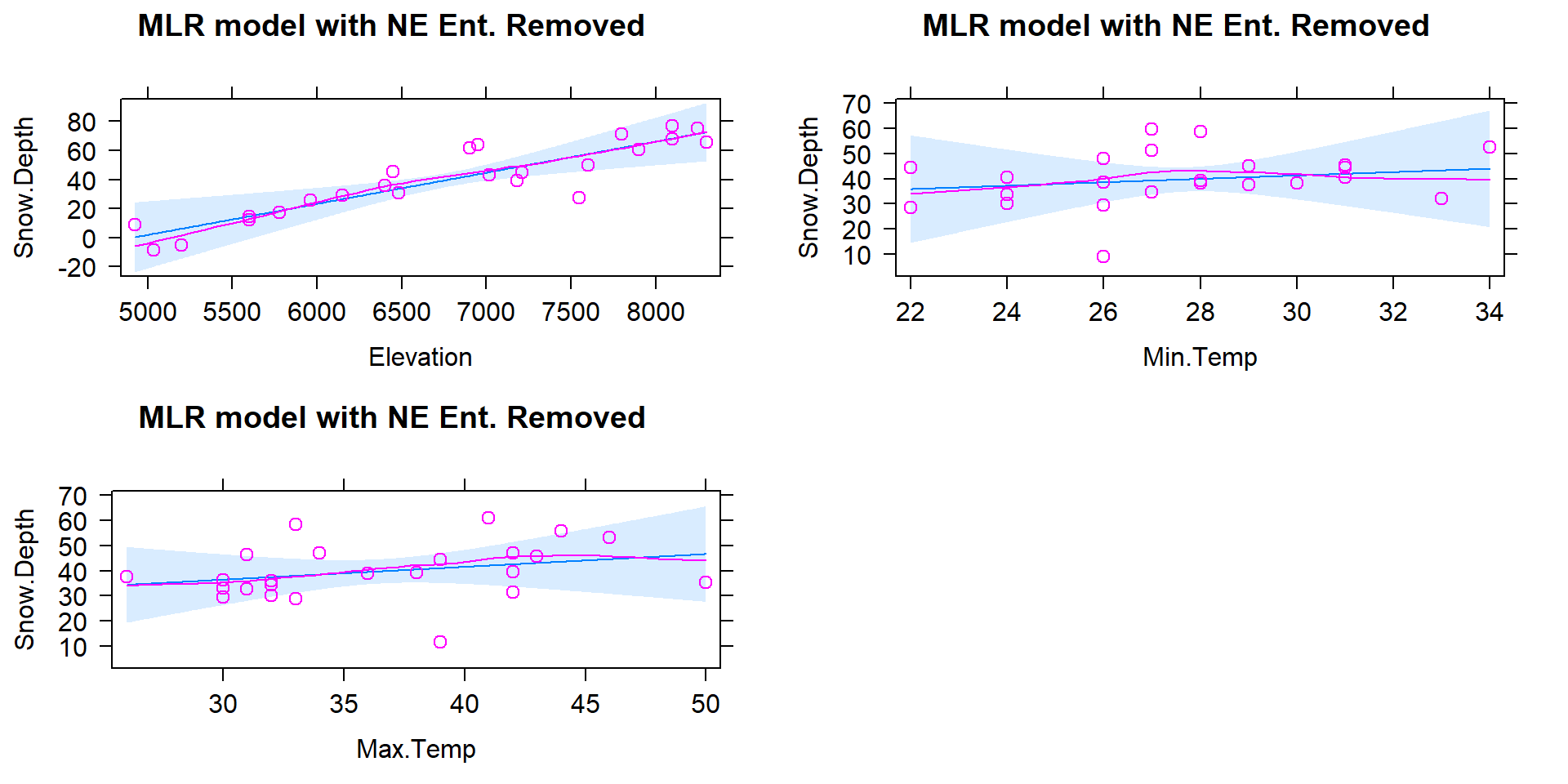

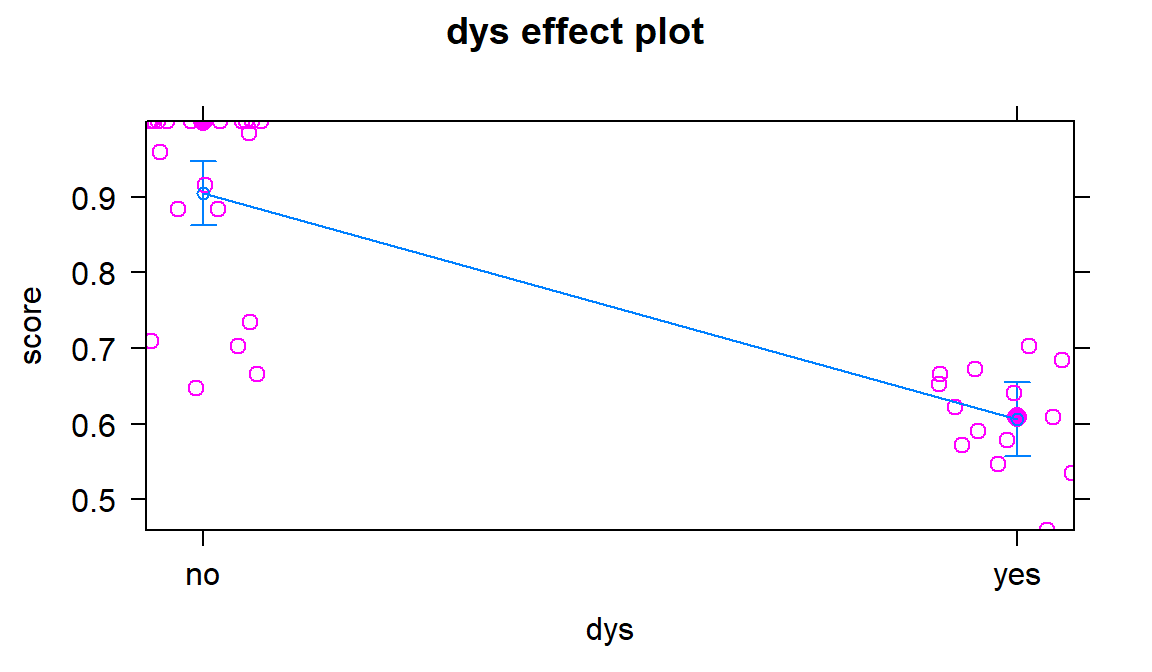

Figure 8.7: Term-plots for the MLR for Snow Depth based on Elevation, Min Temp, and Max Temp with Northeast entrance observation removed from data set (n=24).

The estimated MLR model with \(n=24\) after removing the influential “NE Entrance” observation is

\[\widehat{\text{SnowDepth}}_i = -142.4 + 0.0214\cdot\text{Elevation}_i +0.672\cdot\text{MinTemp}_i +0.508\cdot\text{MaxTemp}_i\ .\]

Something unusual has happened here: there is a positive slope for both temperature terms in Figure 8.7 that both contradicts reasonable expectations (warmer temperatures are related to higher snow levels?) and our original SLR results. So what happened? First, removing the influential point has drastically changed the slope coefficients (remember that was the definition of an influential point). Second, when there are predictors that share information, the results can be somewhat unexpected for some or all the predictors when they are all in the model together. Note that the Elevation term looks like what we might expect and seems to have a big impact on the predicted Snow Depths. So when the temperature variables are included in the model they might be functioning to explain some differences in sites that the Elevation term could not explain. This is where our “adjusting for” terminology comes into play. The unusual-looking slopes for the temperature effects can be explained by interpreting them as the estimated change in the response for changes in temperature after we control for the impacts of elevation. Suppose that Elevation explains most of the variation in Snow Depth except for a few sites where the elevation cannot explain all the variability and the site characteristics happen to show higher temperatures and more snow (or lower temperatures and less snow). This could be because warmer areas might have been hit by a recent snow storm while colder areas might have been missed (this is just one day and subject to spatial and temporal fluctuations in precipitation patterns). Or maybe there is another factor related to having marginally warmer temperatures that are accompanied by more snow (maybe the lower snow sites for each elevation were so steep that they couldn’t hold much snow but were also relatively colder?). Thinking about it this way, the temperature model components could provide useful corrections to what Elevation is providing in an overall model and explain more variability than any of the variables could alone. It is also possible that the temperature variables are not needed in a model with Elevation in it, are just “explaining noise”, and should be removed from the model. Each of the next sections take on various aspects of these issues and eventually lead to a general set of modeling and model selection recommendations to help you work in situations as complicated as this. Exploring the results for this model assumes we trust it and, once again, we need to check diagnostics before getting too focused on any particular results from it.

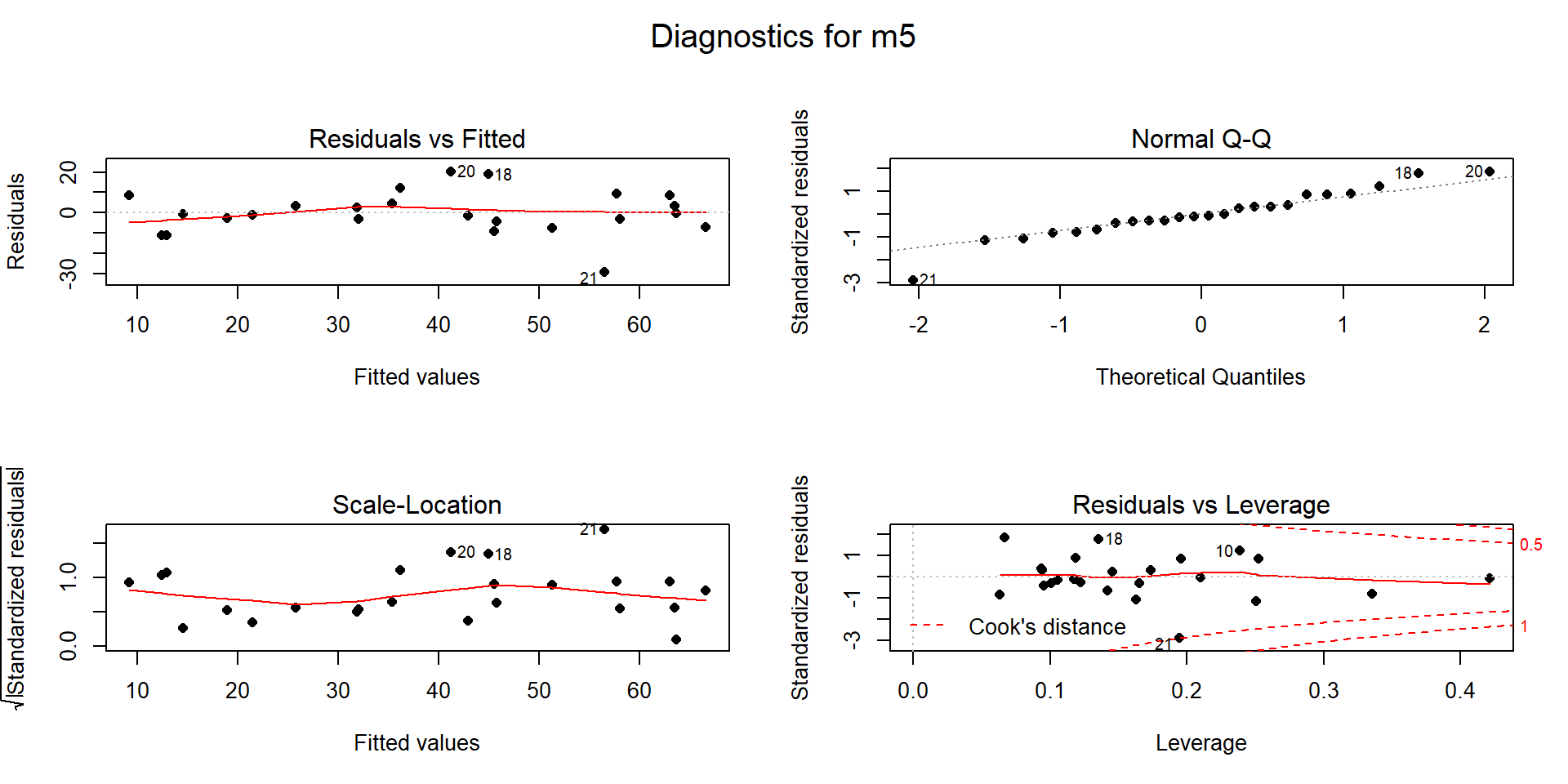

The Residuals vs. Leverage diagnostic plot in Figure 8.8 for the model fit to the data set without NE Entrance (now \(n=24\)) reveals a new point that is somewhat influential (point 22 in the data set has Cook’s D \(\approx\) 0.5). It is for a location called "Bloody \(\require{color}\colorbox{black}{Redact.}\)117 which has a leverage of nearly 0.2 and a standardized residual of nearly -3. This point did not show up as influential in the original version of the data set with the same model but it is now. It also shows up as a potential outlier. As we did before, we can explore it a bit by comparing the model predicted snow depth to the observed snow depth. The predicted snow depth for this site (see output below for variable values) is

\[\widehat{\text{SnowDepth}}_{22} = -142.4 + 0.0214*\boldsymbol{7550} +0.672*\boldsymbol{26} +0.508*\boldsymbol{39} = 56.45 \text{ inches.}\]

The observed snow depth was 27.2 inches, so the estimated residual is -39.25 inches. Again, this point is potentially influential and an outlier. Additionally, our model contains results that are not what we would have expected a priori, so it is not unreasonable to consider removing this observation to be able to work towards a model that is fully trustworthy.

Figure 8.8: Diagnostic plots for MLR for Snow Depth based on Elevation, Min Temp and Max Temp with Northeast entrance observation removed from data set.

##

## Call:

## lm(formula = Snow.Depth ~ Elevation + Min.Temp + Max.Temp, data = snotel2[-c(9,

## 22), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -14.878 -4.486 0.024 3.996 20.728

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.133e+02 7.458e+01 -2.859 0.0100

## Elevation 2.686e-02 4.997e-03 5.374 3.47e-05

## Min.Temp 9.843e-01 1.359e+00 0.724 0.4776

## Max.Temp 1.243e+00 5.452e-01 2.280 0.0343

##

## Residual standard error: 8.832 on 19 degrees of freedom

## Multiple R-squared: 0.8535, Adjusted R-squared: 0.8304

## F-statistic: 36.9 on 3 and 19 DF, p-value: 4.003e-08

Figure 8.9: Diagnostic plots for MLR for Snow Depth based on Elevation, Min Temp and Max Temp with two observations removed (\(n=23\)).

This worry-some observation is located in the 22nd row of the original data set:

## # A tibble: 1 x 6

## ID Station Max.Temp Min.Temp Elevation Snow.Depth

## <dbl> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 36 Bloody [Redact.] 39 26 7550 27.2With the removal of both the “Northeast Entrance” and “Bloody

\(\require{color}\colorbox{black}{Redact.}\)” sites, there are \(n=23\) observations

remaining. This model (m6) seems to contain residual diagnostics (Figure

8.9) that are finally generally reasonable.

m6 <- lm(Snow.Depth~Elevation+Min.Temp+Max.Temp, data=snotel2[-c(9,22),])

summary(m6)

par(mfrow=c(2,2), oma=c(0,0,2,0))

plot(m6, sub.caption="Diagnostics for m6", pch=16)It is hard to suggest that there any curvature issues and the slight variation in the Scale-Location plot is mostly due to few observations with fitted values around 30 happening to be well approximated by the model. The normality assumption is generally reasonable and no points seem to be overly influential on this model (finally!).

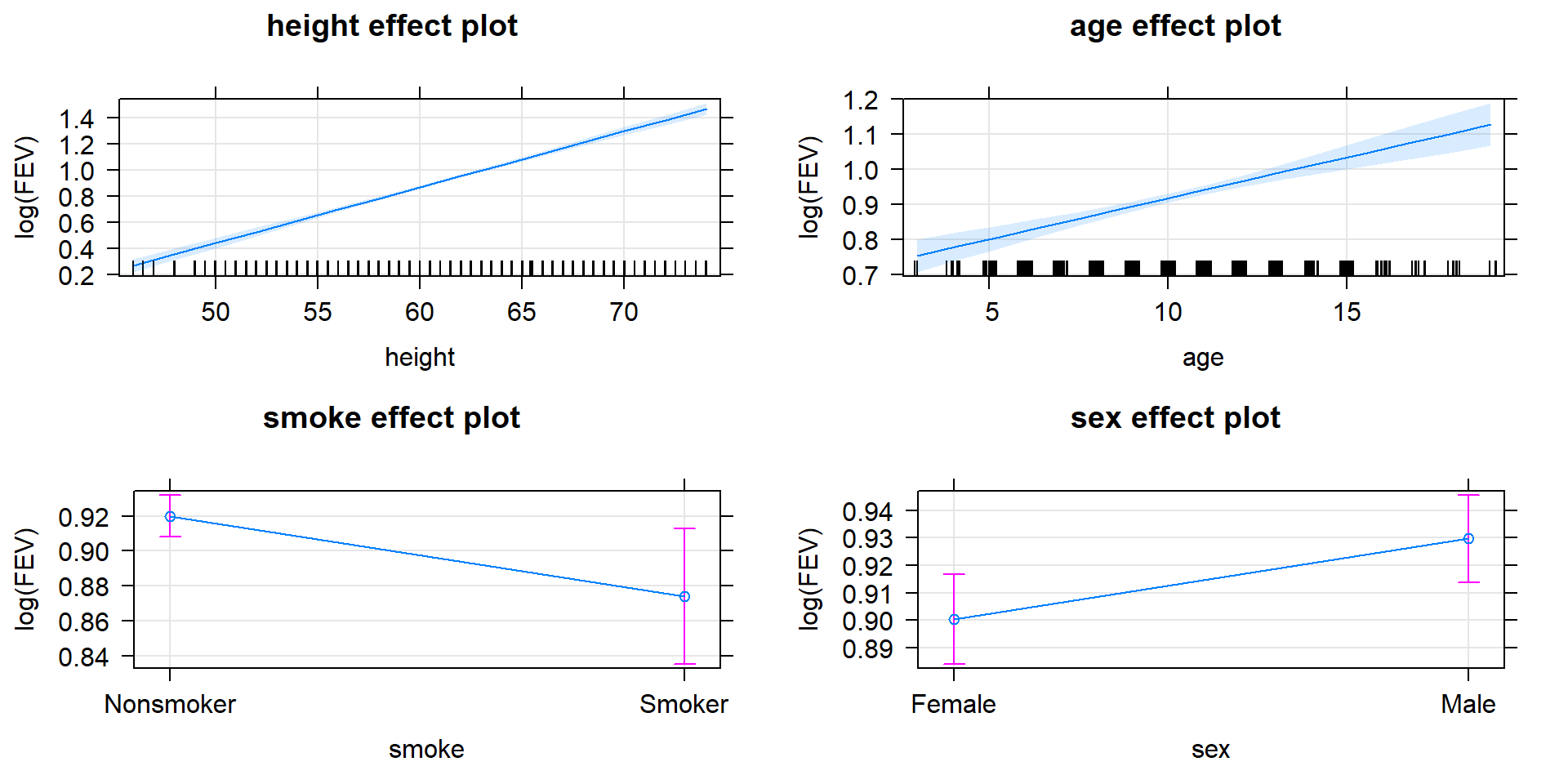

The term-plots (Figure 8.10) show that the temperature slopes are both positive although in this model Max.Temp seems to be more “important” than Min.Temp. We have ruled out individual influential points as the source of un-expected directions in slope coefficients and the more likely issue is multicollinearity – in a model that includes Elevation, the temperature effects may be positive, again acting with the Elevation term to generate the best possible predictions of the observed responses. Throughout this discussion, we have mainly focused on the slope coefficients and diagnostics. We have other tools in MLR to more quantitatively assess and compare different regression models that are considered in the next sections.

Figure 8.10: Term-plots for the MLR for Snow Depth based on Elevation, Min Temp and Max Temp with two observations removed.

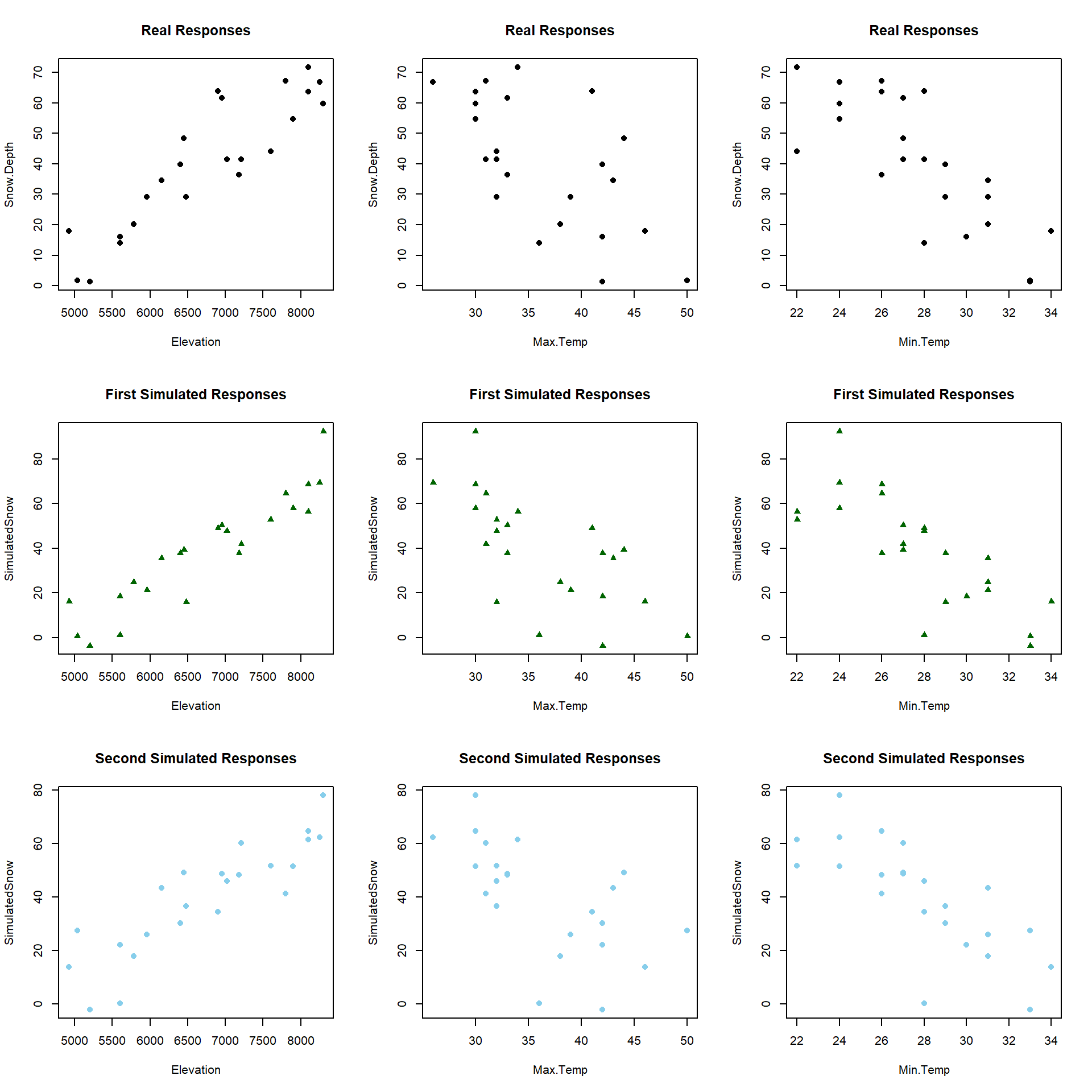

As a final assessment of this model, we can consider simulating a set of \(n=23\) responses from this model and then comparing that data set to the one we just analyzed. This does not change the predictor variables, but creates a new response that is called SimulatedSnow in the following codechunk. Figure 8.11 uses the plot function to just focus on the relationship of the original (Snow.Depth) and new (fake) responses (SimulatedSnow) versus each of the predictors. In exploring two realizations of simulated responses from the model, the results look fairly similar to the original data set. This model appeared to have reasonable assumptions and the match between simulated responses and the original ones reinforces those previous assessments. When the match is not so close, it can reinforce or create concern about the way that the assumptions have been assessed using other tools.

set.seed(307)

snotel_final <- snotel2[-c(9,22),]

snotel_final$SimulatedSnow <- simulate(m6)[[1]] #Creates first set of simulated responses

par(mfrow=c(3,3))

plot(Snow.Depth~Elevation + Max.Temp + Min.Temp, data=snotel_final,

pch=16, main="Real Responses")

plot(SimulatedSnow~Elevation + Max.Temp + Min.Temp, data=snotel_final,

pch=17, main="First Simulated Responses", col="darkgreen")

# Creates a second set of simulared responses in the same variable name

snotel_final$SimulatedSnow <- simulate(m6)[[1]]

plot(SimulatedSnow~Elevation + Max.Temp + Min.Temp, data=snotel_final,

pch=16, main="Second Simulated Responses", col="skyblue")

Figure 8.11: Plot of the original responses versus the three predictors (\(n\)=23 data set) in the top row and two sets of simulated responses versus the predictors in the bottom two rows.

8.3 Interpretation of MLR terms

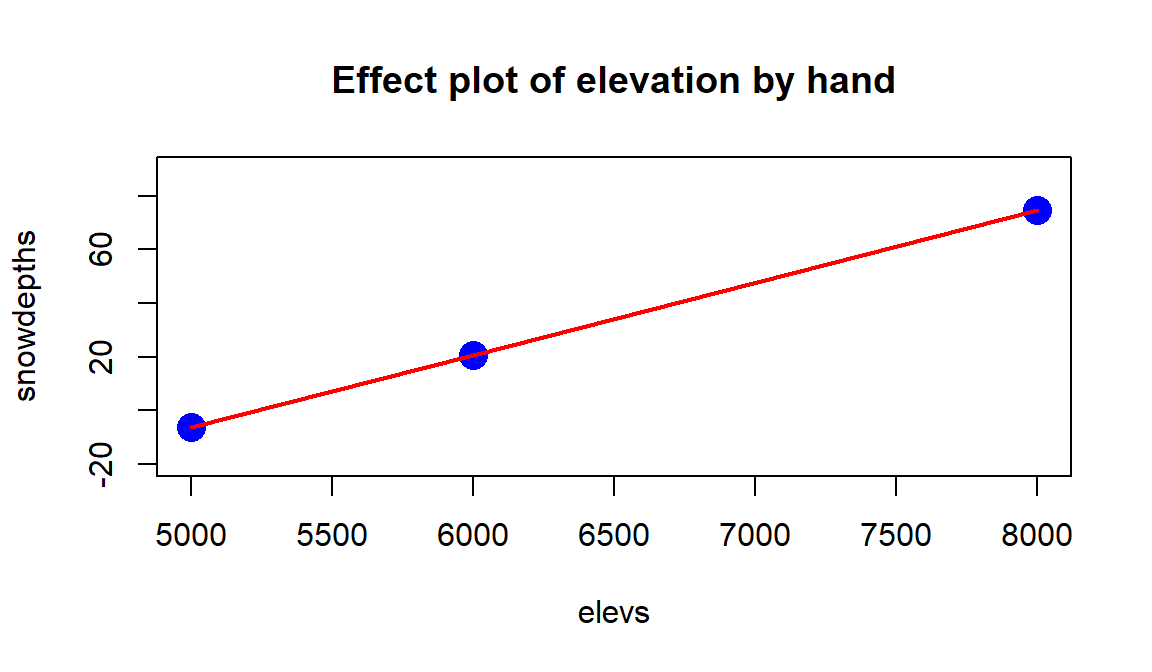

Since these results (finally) do not contain any highly influential points, we can formally discuss interpretations of the slope coefficients and how the term-plots (Figure 8.10) aid our interpretations. Term-plots in MLR are constructed by holding all the other quantitative variables118 at their mean and generating predictions and 95% CIs for the mean response across the levels of observed values for each predictor variable. This idea also helps us to work towards interpretations of each term in an MLR model. For example, for Elevation, the term-plot starts at an elevation around 5000 feet and ends at an elevation around 8000 feet. To generate that line and CIs for the mean snow depth at different elevations, the MLR model of

\[\widehat{\text{SnowDepth}}_i = -213.3 + 0.0269\cdot\text{Elevation}_i +0.984\cdot\text{MinTemp}_i +1.243\cdot\text{MaxTemp}_i\]

is used, but we need to have “something” to put in for the two temperature variables to predict Snow Depth for different Elevations. The typical convention is to hold the “other” variables at their means to generate these plots. This tactic also provides a way of interpreting each slope coefficient. Specifically, we can interpret the Elevation slope as: For a 1 foot increase in Elevation, we estimate the mean Snow Depth to increase by 0.0269 inches, holding the minimum and maximum temperatures constant. More generally, the slope interpretation in an MLR is:

For a 1 [units of \(\boldsymbol{x_k}\)] increase in \(\boldsymbol{x_k}\), we estimate the mean of \(\boldsymbol{y}\) to change by \(\boldsymbol{b_k}\) [units of y], after controlling for [list of other explanatory variables in model].

To make this more concrete, we can recreate some points in the Elevation term-plot. To do this, we first need the mean of the “other” predictors, Min.Temp and Max.Temp.

## [1] 27.82609## [1] 36.3913We can put these values into the MLR equation and simplify it by combining like terms, to an equation that is in terms of just Elevation given that we are holding Min.Temp and Max.Temp at their means:

\[\begin{array}{rl} \widehat{\text{SnowDepth}}_i &= -213.3 + 0.0269\cdot\text{Elevation}_i +0.984*\boldsymbol{27.826} +1.243*\boldsymbol{36.391} \\ &= -213.3 + 0.0269\cdot\text{Elevation}_i + 27.38 + 45.23 \\ &= \boldsymbol{-140.69 + 0.0269\cdot\textbf{Elevation}_i}. \end{array}\]

So at the means on the two temperature variables, the model looks like an SLR with an estimated \(y\)-intercept of -140.69 (mean Snow Depth for Elevation of 0 if temperatures are at their means) and an estimated slope of 0.0269. Then we can plot the predicted changes in \(y\) across all the values of the predictor variable (Elevation) while holding the other variables constant. To generate the needed values to define a line, we can plug various Elevation values into the simplified equation:

For an elevation of 5000 at the average temperatures, we predict a mean snow depth of \(-140.69 + 0.0269*5000 = -6.19\) inches.

For an elevation of 6000 at the average temperatures, we predict a mean snow depth of \(-140.69 + 0.0269*6000 = 20.71\) inches.

For an elevation of 8000 at the average temperatures, we predict a mean snow depth of \(-140.69 + 0.0269*8000 = 74.51\) inches.

We can plot this information (Figure 8.12) using the plot

function to show the points we calculated and the lines function to add

a line that connects the dots. In the plot function, we used the

ylim=... option to make the scaling on the \(y\)-axis match the previous

term-plot’s scaling.

Figure 8.12: Term-plot for Elevation “by-hand”, holding temperature variables constant at their means.

#Making own effect plot:

elevs <- c(5000,6000,8000)

snowdepths <- c(-6.19,20.71,74.51)

plot(snowdepths~elevs, ylim=c(-20,90), cex=2, col="blue", pch=16,

main="Effect plot of elevation by hand")

lines(snowdepths~elevs, col="red", lwd=2)Note that we only needed 2 points to define the line but need a denser grid of elevations if we want to add the 95% CIs for the true mean snow depth across the different elevations since they vary as a function of the distance from the mean of the explanatory variables.

To get the associated 95% CIs, we could return to using the predict

function for the MLR, again holding the temperatures at their

mean values. The predict function is sensitive and needs the same variable

names as used in the original model fitting to work. First we create a “new”

data set using the seq function to generate the desired grid of

elevations and the rep function119 to repeat the means of the

temperatures for each of elevation values we need to make the plot. The code

creates a specific version of the predictor variables to force the predict

function to provide fitted values and CIs across different elevations with

temperatures held constant that is stored in newdata1.

elevs <- seq(from=5000, to=8000, length.out=30)

newdata1 <- tibble(Elevation=elevs, Min.Temp=rep(27.826,30),

Max.Temp=rep(36.3913,30))

newdata1## # A tibble: 30 x 3

## Elevation Min.Temp Max.Temp

## <dbl> <dbl> <dbl>

## 1 5000 27.8 36.4

## 2 5103. 27.8 36.4

## 3 5207. 27.8 36.4

## 4 5310. 27.8 36.4

## 5 5414. 27.8 36.4

## 6 5517. 27.8 36.4

## 7 5621. 27.8 36.4

## 8 5724. 27.8 36.4

## 9 5828. 27.8 36.4

## 10 5931. 27.8 36.4

## # ... with 20 more rowsThe predicted snow depths along with 95% confidence intervals for the mean, holding temperatures at their means, are:

## fit lwr upr

## 1 -6.3680312 -24.913607 12.17754

## 2 -3.5898846 -21.078518 13.89875

## 3 -0.8117379 -17.246692 15.62322

## 4 1.9664088 -13.418801 17.35162

## 5 4.7445555 -9.595708 19.08482

## 6 7.5227022 -5.778543 20.82395

## 7 10.3008489 -1.968814 22.57051

## 8 13.0789956 1.831433 24.32656

## 9 15.8571423 5.619359 26.09493

## 10 18.6352890 9.390924 27.87965

## 11 21.4134357 13.140233 29.68664

## 12 24.1915824 16.858439 31.52473

## 13 26.9697291 20.531902 33.40756

## 14 29.7478758 24.139153 35.35660

## 15 32.5260225 27.646326 37.40572

## 16 35.3041692 31.002236 39.60610

## 17 38.0823159 34.139812 42.02482

## 18 40.8604626 36.997617 44.72331

## 19 43.6386092 39.559231 47.71799

## 20 46.4167559 41.866745 50.96677

## 21 49.1949026 43.988619 54.40119

## 22 51.9730493 45.985587 57.96051

## 23 54.7511960 47.900244 61.60215

## 24 57.5293427 49.759987 65.29870

## 25 60.3074894 51.582137 69.03284

## 26 63.0856361 53.377796 72.79348

## 27 65.8637828 55.154251 76.57331

## 28 68.6419295 56.916422 80.36744

## 29 71.4200762 58.667725 84.17243

## 30 74.1982229 60.410585 87.98586So we could do this with any model for each predictor variable to create

term-plots, or we can just use the allEffects function to do this for us.

This exercise is useful to complete once to understand what is being displayed

in term-plots but using the allEffects function makes getting these plots

much easier.

There are two other model components of possible interest in this model. The slope of 0.984 for Min.Temp suggests that for a 1\(^\circ F\) increase in Minimum Temperature, we estimate a 0.984 inch change in the mean Snow Depth, after controlling for Elevation and Max.Temp at the sites. Similarly, the slope of 1.243 for the Max.Temp suggests that for a 1\(^\circ F\) increase in Maximum Temperature, we estimate a 1.243 inch change in the mean Snow Depth, holding Elevation and Min.Temp constant. Note that there are a variety of ways to note that each term in an MLR is only a particular value given the other variables in the model. We can use words such as “holding the other variables constant” or “after adjusting for the other variables” or “in a model with…” or “for observations with similar values of the other variables but a difference of 1 unit in the predictor..”. The main point is to find words that reflect that this single slope coefficient might be different if we had a different overall model and the only way to interpret it is conditional on the other model components.

Term-plots have a few general uses to enhance our regular slope interpretations. They can help us assess how much change in the mean of \(y\) the model predicts over the range of each observed \(x\). This can help you to get a sense of the “practical” importance of each term. Additionally, the term-plots show 95% confidence intervals for the mean response across the range of each variable, holding the other variables at their means. These intervals can be useful for assessing the precision in the estimated mean at different values of each predictor. However, note that you should not use these plots for deciding whether the term should be retained in the model – we have other tools for making that assessment. And one last note about term-plots – they do not mean that the relationships are really linear between the predictor and response variable being displayed. The model forces the relationship to be linear even if that is not the real functional form. Term-plots are not diagnostics for the model unless you add the partial residuals, the lines are just summaries of the model you assumed was correct! Any time we do linear regression, the inferences are contingent upon the model we chose. We know our model is not perfect, but we hope that it helps us learn something about our research question(s) and, to trust its results, we hope it matches the data fairly well.

8.4 Comparing multiple regression models

With more than one variable, we now have many potential models that we could consider. We could include only one of the predictors, all of them, or combinations of sets of the variables. For example, maybe the model that includes Elevation does not “need” both Min.Temp and Max.Temp? Or maybe the model isn’t improved over an SLR with just Elevation as a predictor. Or maybe none of the predictors are “useful”? In this section, we discuss some general model comparison issues and a metric that can be used to pick among a suite of different models (often called a set of candidate models to reflect that they are all potentially interesting and we need to compare them and possibly pick one).

It is certainly possible the researchers may have an a priori reason to only consider a single model. For example, in a designed experiment where combinations of, say, three different predictors are randomly assigned, the initial model with all three predictors may be sufficient to address all the research questions of interest. One advantage in these situations is that the variable combinations can be created to prevent multicollinearity among the predictors and avoid that complication in interpretations. However, this is more the exception than the rule. Usually, there are competing predictors or questions about whether some predictors matter more than others. This type of research always introduces the potential for multicollinearity to complicate the interpretation of each predictor in the presence of others. Because of this, multiple models are often considered, where “unimportant” variables are dropped from the model. The assessment of “importance” using p-values will be discussed in Section 8.6, but for now we will consider other reasons to pick one model over another.

There are some general reasons to choose a particular model:

Diagnostics are better with one model compared to others.

One model predicts/explains the responses better than the others (R2).

a priori reasons to “use” a particular model, for example in a designed experiment or it includes variable(s) whose estimated slopes directly address the research question(s), even if the variables are not “important” in the model.

Model selection “criteria” suggest one model is better than the others120.

It is OK to consider multiple reasons to select a model but it is dangerous to “shop” for a model across many possible models – a practice which is sometimes called data-dredging and leads to a high chance of spurious results from a single model that is usually reported based on this type of exploration. Just like in other discussions of multiple testing issues previously, if you explore many versions of a model, maybe only keeping the best ones, this is very different from picking one model (and tests) a priori and just exploring that result.

As in SLR, we can use the R2 (the coefficient of determination) to measure the percentage of the variation in the response variable that the model explains. In MLR, it is important to remember that R2 is now an overall measure for the model and not specific to a single variable. It is comparable to other models including those fit with only a single predictor (SLR). So to meet criterion (2), we could simply find the model with the largest R2 value, finding the model that explains the most variation in the responses. Unfortunately for this idea, when you add more “stuff” to a regression model (even “unimportant” predictors), the R2 will always go up. This can be seen by considering

\[R^2 = \frac{\text{SS}_{\text{regression}}}{\text{SS}_{\text{total}}}\ \text{ where }\ \text{SS}_{\text{regression}} = \text{SS}_{\text{total}} - \text{SS}_{\text{error}}\ \text{ and }\ \text{SS}_{\text{error}} = \Sigma(y-\widehat{y})^2\ .\]

Because adding extra variables to a linear model will only make the fitted values better, not worse, the \(\text{SS}_{\text{error}}\) will always go down if more predictors are added to the model. If \(\text{SS}_{\text{error}}\) goes down and \(\text{SS}_{\text{total}}\) is fixed, then adding extra variables will always increase \(\text{SS}_{\text{regression}}\) and, thus, increase R2. This means that R2 is only useful for selecting models when you are picking between two models of the same size (same number of predictors). So we mainly use it as a summary of model quality once we pick a model, not a method of picking among a set of candidate models. Remember that R2 continues to have the property of being between 0 and 1 (or 0% and 100%) and that value refers to the proportion (percentage) of variation in the response explained by the model, whether we are using it for SLR or MLR.

However, there is an adjustment to the R2 measure that makes it useful for selecting among models. The measure is called the adjusted R2. The \(\boldsymbol{R}^2_{\text{adjusted}}\) measure adds a penalty for adding more variables to the model, providing the potential for this measure to decrease if the extra variables do not really benefit the model. The measure is calculated as

\[R^2_{\text{adjusted}} = 1 - \frac{\text{SS}_{\text{error}}/df_{\text{error}}}{\text{SS}_{\text{total}}/(N-1)} = 1 - \frac{\text{MS}_{\text{error}}}{\text{MS}_{\text{total}}},\]

which incorporates the degrees of freedom for the model via the error degrees of freedom which go down as the model complexity increases. This adjustment means that just adding extra useless variables (variables that do not explain very much extra variation) do not increase this measure. That makes this measure useful for model selection since it can help us to stop adding unimportant variables and find a “good” model among a set of candidates. Like the regular R2, larger values are better. The downside to \(\boldsymbol{R}^2_{\text{adjusted}}\) is that it is no longer a percentage of variation in the response that is explained by the model; it can be less than 0 and so has no interpretable scale. It is just “larger is better”. It provides one method for building a model (different from using p-values to drop unimportant variables as discussed below), by fitting a set of candidate models containing different variables and then picking the model with the largest \(\boldsymbol{R}^2_{\text{adjusted}}\). You will want to interpret this new measure on a percentage scale, but do not do that. It is a just a measure to help you pick a model and that is all it is!

One other caveat in model comparison is worth mentioning: make sure you are comparing models for the same responses. That may sound trivial and usually it is. But when there are missing values in the data set, especially on some explanatory variables and not others, it is important to be careful that the \(y\text{'s}\) do not change between models you are comparing. This relates to our Snow Depth modeling because responses were being removed due to their influential nature. We can’t compare R2 or \(\boldsymbol{R}^2_{\text{adjusted}}\) for \(n=25\) to a model when \(n=23\) – it isn’t a fair comparison on either measure since they based on the total variability which is changing as the responses used change.

In the MLR (or SLR) model summaries, both the R2 and \(\boldsymbol{R}^2_{\text{adjusted}}\) are available. Make sure you are able to pick out the correct one. For the reduced data set (\(n=23\)) Snow Depth models, the pertinent part of the model summary for the model with all three predictors is in the last three lines:

##

## Call:

## lm(formula = Snow.Depth ~ Elevation + Min.Temp + Max.Temp, data = snotel2[-c(9,

## 22), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -14.878 -4.486 0.024 3.996 20.728

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -2.133e+02 7.458e+01 -2.859 0.0100

## Elevation 2.686e-02 4.997e-03 5.374 3.47e-05

## Min.Temp 9.843e-01 1.359e+00 0.724 0.4776

## Max.Temp 1.243e+00 5.452e-01 2.280 0.0343

##

## Residual standard error: 8.832 on 19 degrees of freedom

## Multiple R-squared: 0.8535, Adjusted R-squared: 0.8304

## F-statistic: 36.9 on 3 and 19 DF, p-value: 4.003e-08There is a value for \(\large{\textbf{Multiple R-Squared}} \text{ of } 0.8535\), this is the R2 value and suggests that the model with Elevation, Min and Max temperatures explains 85.4% of the variation in Snow Depth. The \(\boldsymbol{R}^2_{\text{adjusted}}\) is 0.8304 and is available further to the right labeled as \(\color{red}{{\textbf{Adjusted R-Squared}}}\). We repeated this for a suite of different models for this same \(n=23\) data set and found the following results in Table 8.1. The top \(\boldsymbol{R}^2_{\text{adjusted}}\) model is the model with Elevation and Max.Temp, which beats out the model with all three variables on \(\boldsymbol{R}^2_{\text{adjusted}}\). Note that the top R2 model is the model with three predictors, but the most complicated model will always have that characteristic.

| Model | \(\boldsymbol{K}\) | \(\boldsymbol{R^2}\) | \(\boldsymbol{R^2_{\text{adjusted}}}\) | \(\boldsymbol{R^2_{\text{adjusted}}}\) Rank |

|---|---|---|---|---|

| SD \(\sim\) Elevation | 1 | 0.8087 | 0.7996 | 3 |

| SD \(\sim\) Min.Temp | 1 | 0.6283 | 0.6106 | 5 |

| SD \(\sim\) Max.Temp | 1 | 0.4131 | 0.3852 | 7 |

| SD \(\sim\) Elevation + Min.Temp | 2 | 0.8134 | 0.7948 | 4 |

| SD \(\sim\) Elevation + Max.Temp | 2 | 0.8495 | 0.8344 | 1 |

| SD \(\sim\) Min.Temp + Max.Temp | 2 | 0.6308 | 0.5939 | 6 |

| SD \(\sim\) Elevation + Min.Temp + Max.Temp | 3 | 0.8535 | 0.8304 | 2 |

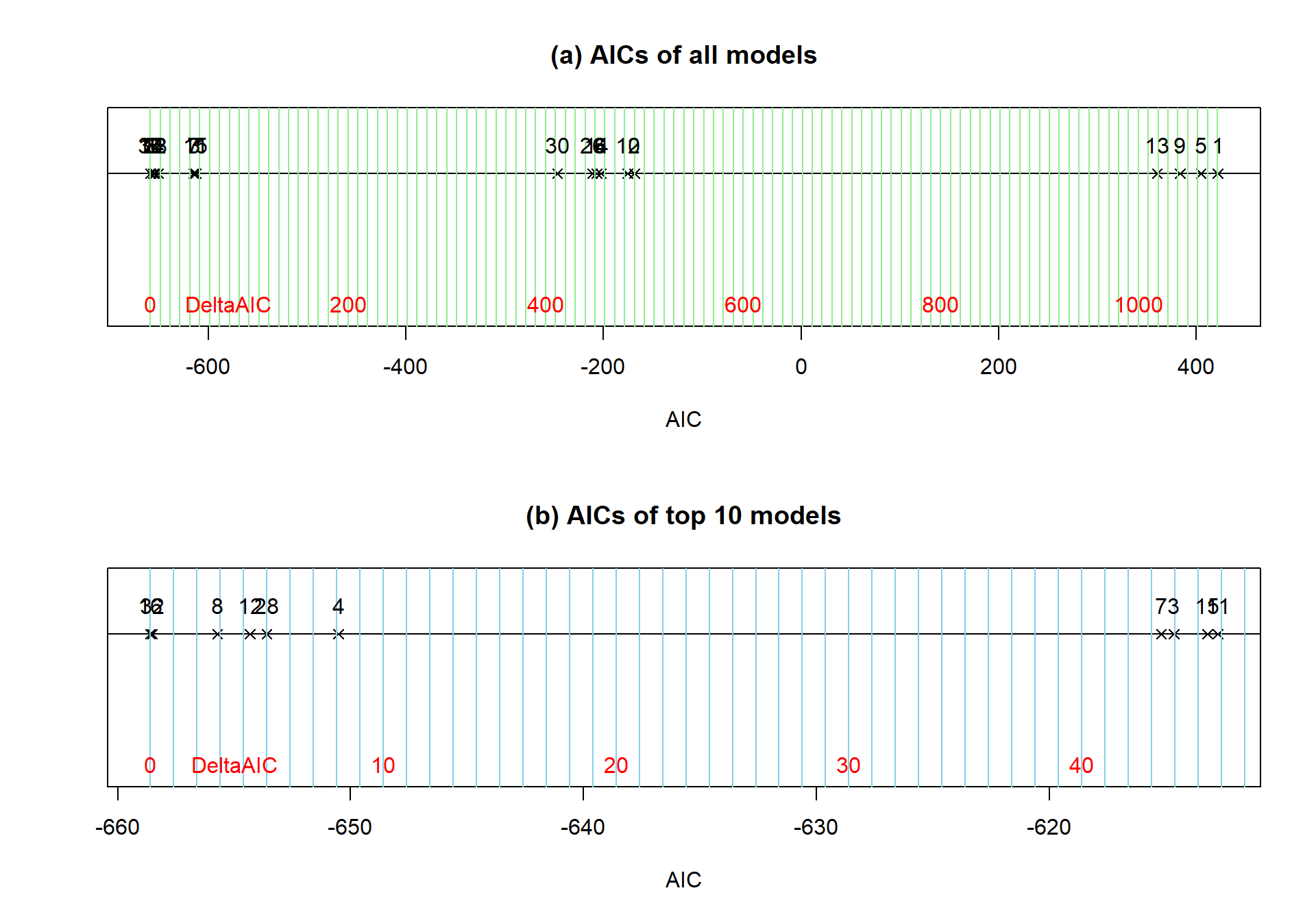

The top adjusted R2 model contained Elevation and Max.Temp and has an R2 of 0.8495, so we can say that the model with Elevation and Maximum Temperature explains 84.95% percent of the variation in Snow Depth and also that this model was selected based on the \(\boldsymbol{R}^2_{\text{adjusted}}\). One of the important features of \(\boldsymbol{R}^2_{\text{adjusted}}\) is available in this example – adding variables often does not always increase its value even though R2 does increase with any addition. In Section 8.13 we consider a competitor for this model selection criterion that may “work” a bit better and be extendable into more complicated modeling situations; that measure is called the AIC.

8.5 General recommendations for MLR interpretations and VIFs

There are some important issues to remember121 when interpreting regression models that can result in common mistakes.

Don’t claim to “hold everything constant” for a single individual:

Mathematically this is a correct interpretation of the MLR model but it is rarely the case that we could have this occur in real applications. Is it possible to increase the Elevation while holding the Max.Temp constant? We discussed making term-plots doing exactly this – holding the other variables constant at their means. If we interpret each slope coefficient in an MLR conditionally then we can craft interpretations such as: For locations that have a Max.Temp of, say, \(45^\circ F\) and Min.Temp of, say, \(30^\circ F\), a 1 foot increase in Elevation tends to be associated with a 0.0268 inch increase in Snow Depth on average. This does not try to imply that we can actually make that sort of change but that given those other variables, the change for that variable is a certain magnitude.

Don’t interpret the regression results causally (or casually?)…

Unless you are analyzing the results of a designed experiment (where the levels of the explanatory variable(s) were randomly assigned) you cannot state that a change in that \(x\) causes a change in \(y\), especially for a given individual. The multicollinearity in predictors makes it especially difficult to put too much emphasis on a single slope coefficient because it may be corrupted/modified by the other variables being in the model. In observational studies, there are also all the potential lurking variables that we did not measure or even confounding variables that we did measure but can’t disentangle from the variable used in a particular model. While we do have a complicated mathematical model relating various \(x\text{'s}\) to the response, do not lose that fundamental focus on causal vs non-causal inferences based on the design of the study.

Be cautious about doing prediction in MLR – you might be doing extrapolation!

It is harder to know if you are doing extrapolation in MLR since you could be in a region of the \(x\text{'s}\) that no observations were obtained. Suppose we want to predict the Snow Depth for an Elevation of 6000 and Max.Temp of 30. Is this extrapolation based on Figure 8.13? In other words, can you find any observations “nearby” in the plot of the two variables together? What about an Elevation of 6000 and a Max.Temp of 40? The first prediction is in a different proximity to observations than the second one… In situations with more than two explanatory variables it becomes even more challenging to know whether you are doing extrapolation and the problem grows as the number of dimensions to search increases… In fact, in complicated MLR models we typically do not know whether there are observations “nearby” if we are doing predictions for unobserved combinations of our predictors. Note that Figure 8.13 also reinforces our potential collinearity problem between Elevation and Max.Temp with higher elevations being strongly associated with lower temperatures.

Figure 8.13: Scatterplot of observed Elevations and Maximum Temperatures for SNOTEL data.

Don’t think that the sign of a coefficient is special…

Adding other variables into the MLR models can cause a switch in the coefficients or change their magnitude or make them go from “important” to “unimportant” without changing the slope too much. This is related to the conditionality of the relationships being estimated in MLR and the potential for sharing of information in the predictors when it is present.

Multicollinearity in MLR models:

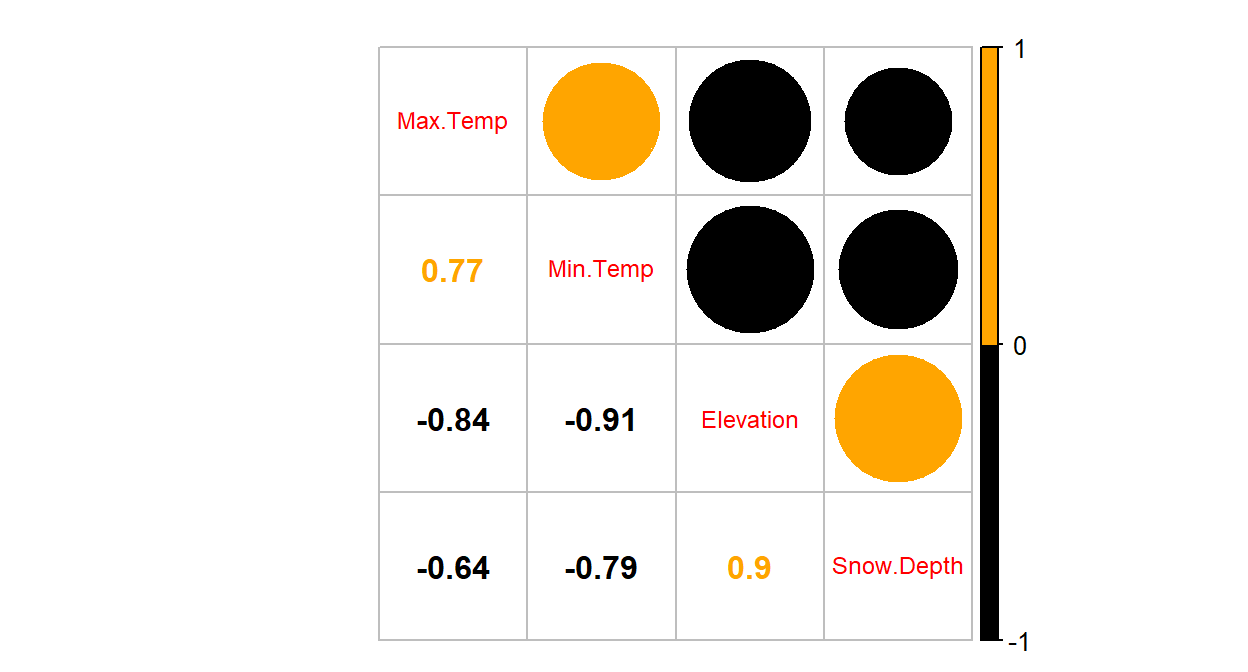

When explanatory variables are not independent (related) to one another, then including/excluding one variable will have an impact on the other variable. Consider the correlations among the predictors in the SNOTEL data set or visually displayed in Figure 8.14:

library(corrplot) par(mfrow=c(1,1), oma=c(0,0,1,0)) corrplot.mixed(cor(snotel2[-c(9,22),3:6]), upper.col=c(1, "orange"), lower.col=c(1, "orange")) round(cor(snotel2[-c(9,22),3:6]),2)

Figure 8.14: Plot of correlation matrix in the snow depth data set with influential points removed

## Max.Temp Min.Temp Elevation Snow.Depth ## Max.Temp 1.00 0.77 -0.84 -0.64 ## Min.Temp 0.77 1.00 -0.91 -0.79 ## Elevation -0.84 -0.91 1.00 0.90 ## Snow.Depth -0.64 -0.79 0.90 1.00The predictors all share at least moderately strong linear relationships. For example, the \(\boldsymbol{r}=-0.91\) between Min.Temp and Elevation suggests that they contain very similar information and that extends to other pairs of variables as well. When variables share information, their addition to models may not improve the performance of the model and actually can make the estimated coefficients unstable, creating uncertainty in the correct coefficients because of the shared information. It seems that Elevation is related to Snow Depth but maybe it is because it has lower Minimum Temperatures? So you might wonder how we can find the “correct” slopes when they are sharing information in the response variable. The short answer is that we can’t. But we do use Least Squares to find coefficient estimates as we did before – except that we have to remember that these estimates are conditional on other variables in the model for our interpretation since they impact one another within the model. It ends up that the uncertainty of pinning those variables down in the presence of shared information leads to larger SEs for all the slopes. And that we can actually measure how much each of the SEs are inflated because of multicollinearity with other variables in the model using what are called Variance Inflation Factors (or VIFs).

VIFs provide a way to assess the multicollinearity in the MLR model that

is caused by including specific variables. The amount of information that is

shared between a single explanatory variable and the others can be found by

regressing that variable on the others and calculating R2

for that model. The code for this regression is something like:

lm(X1~X2+X3+...+XK), which regresses X1on X2 through XK.

The

\(1-\boldsymbol{R}^2\) from this regression is the amount of independent

information in X1 that is not explained by (or related to) the other variables in the model.

The VIF for each variable is defined using this quantity as

\(\textbf{VIF}_{\boldsymbol{k}}\boldsymbol{=1/(1-R^2_k)}\) for variable \(k\).

If there is no shared information \((\boldsymbol{R}^2=0)\), then the VIF will be

1. But if the information is completely shared with other variables

\((\boldsymbol{R}^2=1)\), then the VIF goes to infinity (1/0). Basically, large

VIFs are bad, with the rule of thumb that values over 5 or 10 are considered

“large” values indicating high or extreme multicollinearity in the model for that particular

variable. We use this scale to determine if multicollinearity is a definite problem for a

variable of interest. But any value of the VIF over 1 indicates some amount of multicollinearity is present. Additionally, the \(\boldsymbol{\sqrt{\textbf{VIF}_k}}\) is

also very interesting as it is the number of times larger than the SE for the

slope for variable \(k\) is due to collinearity with other variables in the model.

This is the most useful scale to understand VIFs and allows you to make your own assessment of whether you think the multicollinearity is “important” based on how inflated the SEs are in a particular situation. An example will show how to easily get these results

and where the results come from.

In general, the easy way to obtain VIFs is using the vif function from the

car package (Fox, Weisberg, and Price (2018), Fox (2003)).

It has the advantage of also providing a reasonable

result when we include categorical variables in models

(Sections 8.9 and 8.11) over some other sources of this information in R. We apply the vif

function directly to a model of interest and it generates values for each explanatory variable.

## Elevation Min.Temp Max.Temp

## 8.164201 5.995301 3.350914Not surprisingly, there is an indication of extreme problems with multicollinearity in two of the three variables in the model with the largest issues identified for Elevation and Min.Temp. Both of their VIFs exceed 5 indicating large multicollinearity problems. On the square-root scale, the VIFs show more interpretation utility.

## Elevation Min.Temp Max.Temp

## 2.857307 2.448530 1.830550The result for Elevation of 2.86 suggests that the SE for Elevation is 2.86 times larger than it should be because of multicollinearity with other variables in the model. Similarly, the Min.Temp SE is 2.45 times larger and the Max.Temp SE is 1.83 times larger. Even the result for Max.Temp suggests an issue with multicollinearity even though it is below the cut-off for noting extreme issues with shared information. All of this generally suggests issues with multicollinearity in the model and that we need to be cautious in interpreting any slope coefficients from this model because they are all being impacted by shared information in the predictor variables.

In order to see how the VIF is calculated for Elevation, we need to regress Elevation on Min.Temp and Max.Temp. Note that this model is only fit to find the percentage of variation in elevation explained by the temperature variables. It ends up being 0.8775 – so a high percentage of Elevation can be explained by the linear model using min and max temperatures.

##

## Call:

## lm(formula = Elevation ~ Min.Temp + Max.Temp, data = snotel2[-c(9,

## 22), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -1120.05 -142.99 14.45 186.73 624.61

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 14593.21 699.77 20.854 4.85e-15

## Min.Temp -208.82 38.94 -5.363 3.00e-05

## Max.Temp -56.28 20.90 -2.693 0.014

##

## Residual standard error: 395.2 on 20 degrees of freedom

## Multiple R-squared: 0.8775, Adjusted R-squared: 0.8653

## F-statistic: 71.64 on 2 and 20 DF, p-value: 7.601e-10Using this result, we can calculate

\[\text{VIF}_{\text{elevation}} = \dfrac{1}{1-R^2_{\text{elevation}}} = \dfrac{1}{1-0.8775} = \dfrac{1}{0.1225} = 8.16\]

## [1] 0.1225## [1] 8.163265Note that when we observe small VIFs (close to 1), that provides us with confidence that multicollinearity is not causing problems under the surface of a particular MLR model. Also note that we can’t use the VIFs to do anything about multicollinearity in the models – it is just a diagnostic to understand the magnitude of the problem.

8.6 MLR inference: Parameter inferences using the t-distribution

I have been deliberately vague about what an important variable is up to this point, and chose to focus on some bigger modeling issues. Now we turn our attention to one of the most common tasks in any basic statistical model – assessing whether a particular observed result is more unusual than we would expect by chance if it really wasn’t related to the response. The previous discussions of estimation in MLR models informs our interpretations of of the tests. The \(t\)-tests for slope coefficients are based on our standard recipe – take the estimate, divide it by its standard error and then, assuming the statistic follows a \(t\)-distribution under the null hypothesis, find a p-value. This tests whether each true slope coefficient, \(\beta_k\), is 0 or not, in a model that contains the other variables. Again, sometimes we say “after adjusting for” the other \(x\text{'s}\) or “conditional on” the other \(x\text{'s}\) in the model or “after allowing for”… as in the slope coefficient interpretations above. The main point is that you should not interpret anything related to slope coefficients in MLR without referencing the other variables that are in the model! The tests for the slope coefficients assess \(\boldsymbol{H_0:\beta_k=0}\), which in words is a test that there is no linear relationship between explanatory variable \(k\) and the response variable, \(y\), in the population, given the other variables in model. The typical alternative hypothesis is \(\boldsymbol{H_0:\beta_k\ne 0}\). In words, the alternative hypothesis is that there is some linear relationship between explanatory variable \(k\) and the response variable, \(y\), in the population, given the other variables in the model. It is also possible to test for positive or negative slopes in the alternative, but this is rarely the first concern, especially when MLR slopes can occasionally come out in unexpected directions.

The test statistic for these hypotheses is

\(\boldsymbol{t=\dfrac{b_k}{\textbf{SE}_k}}\) and, if our assumptions hold,

follows a \(t\)-distribution with \(n-K-1\) df where \(K\) is the number of

predictor variables in the model.

We perform the test for each slope

coefficient, but the test is conditional on the other variables in the model – the order the variables are fit in does

not change \(t\)-test results. For the Snow Depth example with Elevation

and Maximum Temperature as predictors, the pertinent output is in the four

columns of the Coefficient table that is the first part of the model

summary we’ve been working with. You can find the estimated slope

(Estimate column), the SE of the slopes (Std. Error column), the

\(t\)-statistics (t value column), and the p-values (Pr(>|t|) column).

The degrees of freedom for the \(t\)-distributions show up below the coefficients

and the \(df=20\) here. This is because \(n=23\) and \(K=2\), so \(df=23-2-1=20\).

##

## Call:

## lm(formula = Snow.Depth ~ Elevation + Max.Temp, data = snotel2[-c(9,

## 22), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -14.652 -4.645 0.518 3.744 20.550

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.675e+02 3.924e+01 -4.269 0.000375

## Elevation 2.407e-02 3.162e-03 7.613 2.48e-07

## Max.Temp 1.253e+00 5.385e-01 2.327 0.030556

##

## Residual standard error: 8.726 on 20 degrees of freedom

## Multiple R-squared: 0.8495, Adjusted R-squared: 0.8344

## F-statistic: 56.43 on 2 and 20 DF, p-value: 5.979e-09The hypotheses for the Maximum Temperature term (Max.Temp) are:

\(\boldsymbol{H_0: \beta_{\textbf{Max.Temp}}=0}\) given that Elevation is in the model vs

\(\boldsymbol{H_A: \beta_{\textbf{Max.Temp}}\ne 0}\) given that Elevation is in the model.

The test statistic is \(t=2.327\) with \(df = 20\) (so under the null hypothesis the test statistic follows a \(t_{20}\)-distribution).

The output provides a p-value of \(0.0306\) for this test. We can also find this

using pt:

## [1] 0.03058319The chance of observing a slope for Max.Temp as extreme or more extreme than assuming there really is no linear relationship between Max.Temp and Snow Depth (in a model with Elevation), is about 3% so this presents moderate evidence against the null hypothesis, in favor of retaining this term in the model.

Conclusion: There is moderate evidence against the null hypothesis of no linear relationship between Max.Temp and Snow Depth (\(t_{20}=2.33\), p-value=0.03), once we account for Elevation, so we can conclude that there is a linear relationship between them given Elevation in the population of SNOTEL sites in Montana on this day. Because we cannot randomly assign the temperatures to sites, we cannot conclude that temperature causes changes in the snow depth – in fact it might even be possible for a location to have different temperatures because of different snow depths. The inferences do pertain to the population of SNOTEL sites on this day because of the random sample from the population of sites.

Similarly, we can test for Elevation after controlling for the Maximum Temperature:

\[\boldsymbol{H_0: \beta_{\textbf{Elevation}}=0 \textbf{ vs } H_A: \beta_{\textbf{Elevation}}\ne 0},\]

given that Max.Temp is in the model:

\(t=7.613\) (\(df=20\)) with a p-value of \(0.00000025\) or just \(<0.00001\).

So there is strong evidence to suggest that there is a linear relationship between Elevation and Snow Depth, once we adjust for Max.Temp in the population of SNOTEL sites in Montana on this day.

There is one last test that is of dubious interest in almost every situation – to test that the \(y\)-intercept \((\boldsymbol{\beta_0})\) in an MLR is 0. This tests if the true mean response is 0 when all the predictor variables are set to 0. I see researchers reporting this p-value frequently and it is possibly the most useless piece of information in the regression model summary. Sometimes less educated statistics users even think this result is proof of something interesting or are disappointed when the p-value is not small. Unless you want to do some prediction and are interested in whether the mean response when all the predictors are set to 0 is different from 0, this test should not be reported or, if reported, is certainly not very interesting122. But we should at least go through the motions on this test once so you don’t make the same mistakes:

\(\boldsymbol{H_0: \beta_0=0 \textbf{ vs } H_A: \beta_0\ne 0}\) in a model with Elevation and Maximum Temperature.

\(t=-4.269\), with an assumption that the test statistic follows a \(t_{20}\)-distribution under the null hypothesis, and the p-value \(= 0.000375\).

There is strong evidence against the null hypothesis that the true mean Snow Depth is 0 when the Maximum Temperature is 0 and the Elevation is 0 in the population of SNOTEL sites, so we could conclude that the true mean Snow Depth is different from 0 at these values of the predictors. To reinforce the general uselessness of this test, think about the combination of \(x\text{'s}\) – is that even physically possible in Montana (or the continental US) in April?

Remember when testing slope coefficients in MLR, that if we find weak evidence against the null hypothesis, it does not mean that there is no relationship or even no linear relationship between the variables, but that there is insufficient evidence against the null hypothesis of no linear relationship once we account for the other variables in the model. If you do not find a small p-value for a variable, you should either be cautious when interpreting the coefficient, or not interpret it. Some model building strategies would lead to dropping the term from the model but sometimes we will have models to interpret that contain terms with larger p-values. Sometimes they are still of interest but the weight on the interpretation isn’t as heavy as if the term had a small p-value – you should remember that you can’t prove that coefficient is different from 0 in that model. It also may mean that you don’t know too much about its specific value. Confidence intervals will help us pin down where we think the true slope coefficient might be located, given the other variables in the model, and so are usually pretty interesting to report, regardless of how you approached model building and possible refinement.

Confidence intervals provide the dual uses of inferences for the location of the true slope and whether the true slope seems to be different from 0. The confidence intervals here have our regular format of estimate \(\mp\) margin of error. Like the previous tests, we work with \(t\)-distributions with \(n-K-1\) degrees of freedom. Specifically the 95% confidence interval for slope coefficient \(k\) is

\[\boldsymbol{b_k \mp t^*_{n-K-1}\textbf{SE}_{b_k}}\ .\]

The interpretation is the same as in SLR with the additional tag of “after controlling for the other variables in the model” for the reasons discussed before. The general slope CI interpretation for predictor \(\boldsymbol{x_k}\) in an MLR is:

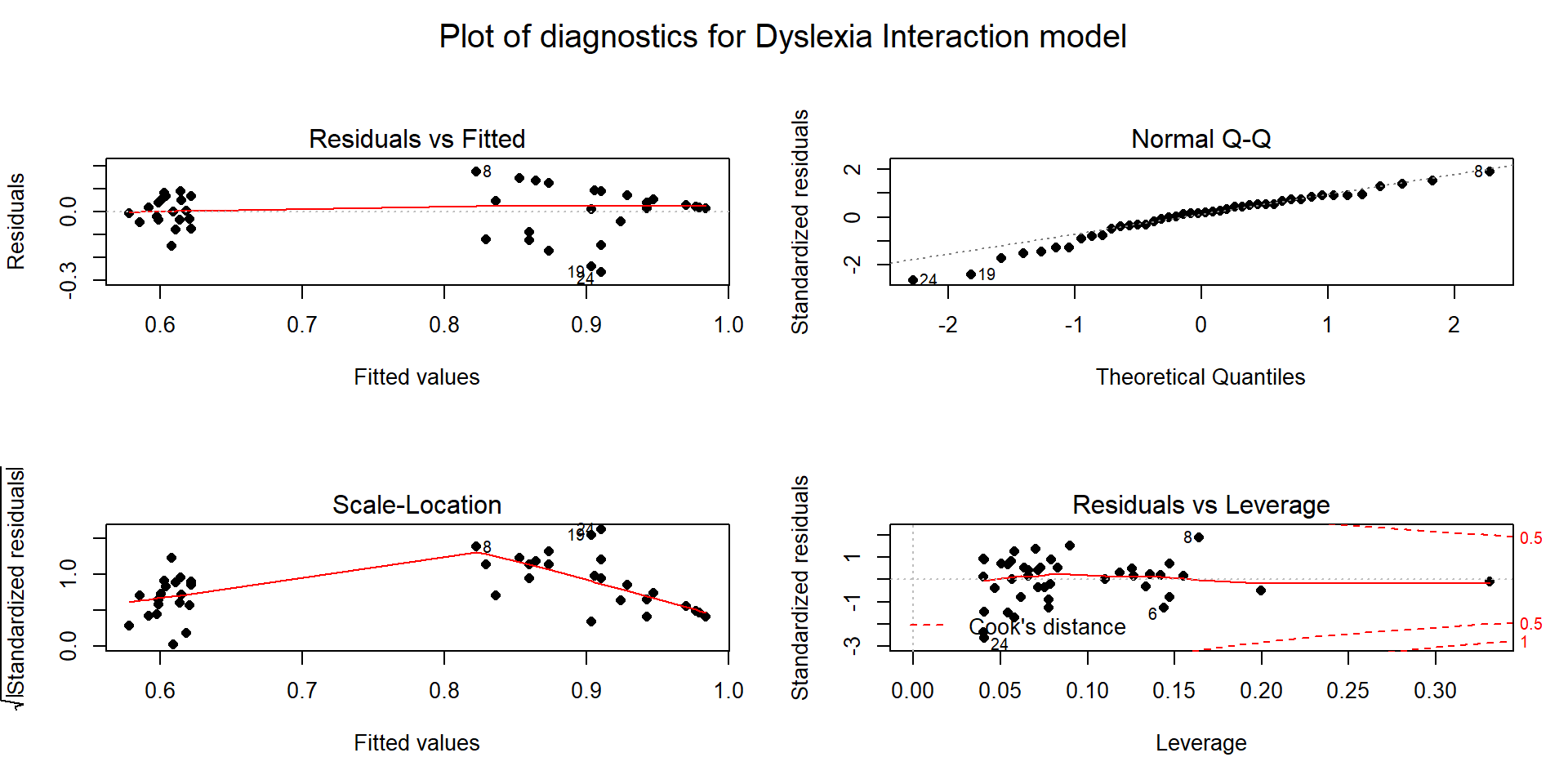

For a 1 [unit of \(\boldsymbol{x_k}\)] increase in \(\boldsymbol{x_k}\), we are 95% confident that the true mean of \(\boldsymbol{y}\) changes by between LL and UL [units of \(\boldsymbol{Y}\)] in the population, after adjusting for the other \(x\text{'s}\) [list them!].