Note

Go to the end to download the full example code.

Mass-univariate twoway repeated measures ANOVA on single trial power#

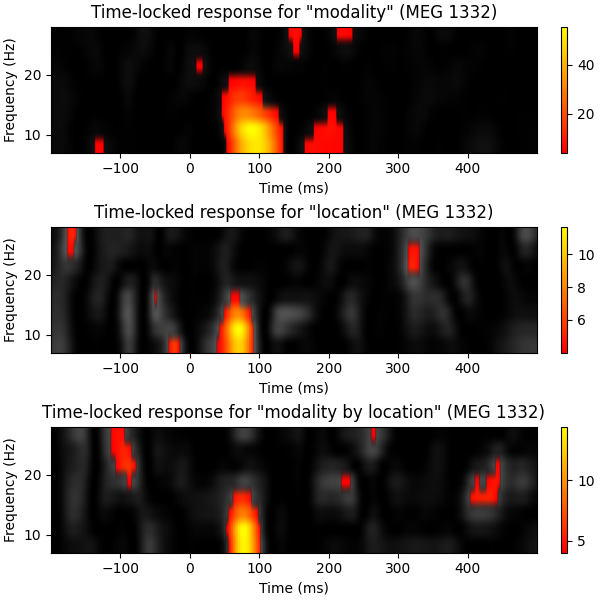

This script shows how to conduct a mass-univariate repeated measures ANOVA. As the model to be fitted assumes two fully crossed factors, we will study the interplay between perceptual modality (auditory VS visual) and the location of stimulus presentation (left VS right). Here we use single trials as replications (subjects) while iterating over time slices plus frequency bands for to fit our mass-univariate model. For the sake of simplicity we will confine this analysis to one single channel of which we know that it exposes a strong induced response. We will then visualize each effect by creating a corresponding mass-univariate effect image. We conclude with accounting for multiple comparisons by performing a permutation clustering test using the ANOVA as clustering function. The results final will be compared to multiple comparisons using False Discovery Rate correction.

# Authors: Denis Engemann <denis.engemann@gmail.com>

# Eric Larson <larson.eric.d@gmail.com>

# Alexandre Gramfort <alexandre.gramfort@inria.fr>

# Alex Rockhill <aprockhill@mailbox.org>

#

# License: BSD-3-Clause

# Copyright the MNE-Python contributors.

import matplotlib.pyplot as plt

import numpy as np

import mne

from mne.datasets import sample

from mne.stats import f_mway_rm, f_threshold_mway_rm, fdr_correction

print(__doc__)

Set parameters#

data_path = sample.data_path()

meg_path = data_path / "MEG" / "sample"

raw_fname = meg_path / "sample_audvis_raw.fif"

event_fname = meg_path / "sample_audvis_raw-eve.fif"

tmin, tmax = -0.2, 0.5

# Setup for reading the raw data

raw = mne.io.read_raw_fif(raw_fname)

events = mne.read_events(event_fname)

include = []

raw.info["bads"] += ["MEG 2443"] # bads

# picks MEG gradiometers

picks = mne.pick_types(

raw.info,

meg="grad",

eeg=False,

eog=True,

stim=False,

include=include,

exclude="bads",

)

ch_name = "MEG 1332"

# Load conditions

reject = dict(grad=4000e-13, eog=150e-6)

event_id = dict(aud_l=1, aud_r=2, vis_l=3, vis_r=4)

epochs = mne.Epochs(

raw,

events,

event_id,

tmin,

tmax,

picks=picks,

baseline=(None, 0),

preload=True,

reject=reject,

)

epochs.pick([ch_name]) # restrict example to one channel

Opening raw data file /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis_raw.fif...

Read a total of 3 projection items:

PCA-v1 (1 x 102) idle

PCA-v2 (1 x 102) idle

PCA-v3 (1 x 102) idle

Range : 25800 ... 192599 = 42.956 ... 320.670 secs

Ready.

Not setting metadata

289 matching events found

Setting baseline interval to [-0.19979521315838786, 0.0] s

Applying baseline correction (mode: mean)

0 projection items activated

Loading data for 289 events and 421 original time points ...

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on EOG : ['EOG 061']

53 bad epochs dropped

We have to make sure all conditions have the same counts, as the ANOVA expects a fully balanced data matrix and does not forgive imbalances that generously (risk of type-I error).

epochs.equalize_event_counts(event_id)

# Factor to down-sample the temporal dimension of the TFR computed by

# tfr_morlet.

decim = 2

freqs = np.arange(7, 30, 3) # define frequencies of interest

n_cycles = freqs / freqs[0]

zero_mean = False # don't correct morlet wavelet to be of mean zero

# To have a true wavelet zero_mean should be True but here for illustration

# purposes it helps to spot the evoked response.

Dropped 12 epochs: 50, 92, 128, 147, 152, 154, 155, 186, 196, 198, 206, 207

Create TFR representations for all conditions#

epochs_power = list()

for condition in [epochs[k] for k in event_id]:

this_tfr = condition.compute_tfr(

"morlet",

freqs,

n_cycles=n_cycles,

decim=decim,

average=False,

zero_mean=zero_mean,

return_itc=False,

)

this_tfr.apply_baseline(mode="ratio", baseline=(None, 0))

this_power = this_tfr.data[:, 0, :, :] # we only have one channel.

epochs_power.append(this_power)

Applying baseline correction (mode: ratio)

Applying baseline correction (mode: ratio)

Applying baseline correction (mode: ratio)

Applying baseline correction (mode: ratio)

Setup repeated measures ANOVA#

We will tell the ANOVA how to interpret the data matrix in terms of factors. This is done via the factor levels argument which is a list of the number factor levels for each factor.

n_conditions = len(epochs.event_id)

n_replications = epochs.events.shape[0] // n_conditions

factor_levels = [2, 2] # number of levels in each factor

effects = "A*B" # this is the default signature for computing all effects

# Other possible options are 'A' or 'B' for the corresponding main effects

# or 'A:B' for the interaction effect only (this notation is borrowed from the

# R formula language)

n_freqs = len(freqs)

times = 1e3 * epochs.times[::decim]

n_times = len(times)

Now we’ll assemble the data matrix and swap axes so the trial replications are the first dimension and the conditions are the second dimension.

data = np.swapaxes(np.asarray(epochs_power), 1, 0)

# so we have replications × conditions × observations

# where the time-frequency observations are freqs × times:

print(data.shape)

(56, 4, 8, 211)

While the iteration scheme used above for assembling the data matrix makes sure the first two dimensions are organized as expected (with A = modality and B = location):

trial |

A1B1 |

A1B2 |

A2B1 |

B2B2 |

|---|---|---|---|---|

1 |

1.34 |

2.53 |

0.97 |

1.74 |

… |

… |

… |

… |

… |

56 |

2.45 |

7.90 |

3.09 |

4.76 |

Now we’re ready to run our repeated measures ANOVA.

Note. As we treat trials as subjects, the test only accounts for time locked responses despite the ‘induced’ approach. For analysis for induced power at the group level averaged TRFs are required.

fvals, pvals = f_mway_rm(data, factor_levels, effects=effects)

effect_labels = ["modality", "location", "modality by location"]

fig, axes = plt.subplots(3, 1, figsize=(6, 6), layout="constrained")

# let's visualize our effects by computing f-images

for effect, sig, effect_label, ax in zip(fvals, pvals, effect_labels, axes):

# show naive F-values in gray

ax.imshow(

effect,

cmap="gray",

aspect="auto",

origin="lower",

extent=[times[0], times[-1], freqs[0], freqs[-1]],

)

# create mask for significant time-frequency locations

effect[sig >= 0.05] = np.nan

c = ax.imshow(

effect,

cmap="autumn",

aspect="auto",

origin="lower",

extent=[times[0], times[-1], freqs[0], freqs[-1]],

)

fig.colorbar(c, ax=ax)

ax.set_xlabel("Time (ms)")

ax.set_ylabel("Frequency (Hz)")

ax.set_title(f'Time-locked response for "{effect_label}" ({ch_name})')

Account for multiple comparisons using FDR versus permutation clustering test#

First we need to slightly modify the ANOVA function to be suitable for the clustering procedure. Also want to set some defaults. Let’s first override effects to confine the analysis to the interaction

effects = "A:B"

A stat_fun must deal with a variable number of input arguments. Inside the clustering function each condition will be passed as flattened array, necessitated by the clustering procedure. The ANOVA however expects an input array of dimensions: subjects × conditions × observations (optional). The following function catches the list input and swaps the first and the second dimension and finally calls the ANOVA function.

def stat_fun(*args):

return f_mway_rm(

np.swapaxes(args, 1, 0),

factor_levels=factor_levels,

effects=effects,

return_pvals=False,

)[0]

# The ANOVA returns a tuple f-values and p-values, we will pick the former.

pthresh = 0.001 # set threshold rather high to save some time

f_thresh = f_threshold_mway_rm(n_replications, factor_levels, effects, pthresh)

tail = 1 # f-test, so tail > 0

n_permutations = 256 # Save some time (the test won't be too sensitive ...)

F_obs, clusters, cluster_p_values, h0 = mne.stats.permutation_cluster_test(

epochs_power,

stat_fun=stat_fun,

threshold=f_thresh,

tail=tail,

n_jobs=None,

n_permutations=n_permutations,

buffer_size=None,

out_type="mask",

seed=0,

)

stat_fun(H1): min=6.658469887778116e-07 max=14.419588379647625

Running initial clustering …

Found 1 cluster

0%| | Permuting : 0/255 [00:00<?, ?it/s]

0%| | Permuting : 1/255 [00:00<00:08, 28.94it/s]

1%| | Permuting : 2/255 [00:00<00:08, 29.33it/s]

2%|▏ | Permuting : 4/255 [00:00<00:06, 39.80it/s]

2%|▏ | Permuting : 6/255 [00:00<00:05, 45.04it/s]

3%|▎ | Permuting : 7/255 [00:00<00:05, 41.57it/s]

4%|▎ | Permuting : 9/255 [00:00<00:05, 44.92it/s]

4%|▍ | Permuting : 10/255 [00:00<00:05, 42.27it/s]

5%|▍ | Permuting : 12/255 [00:00<00:05, 44.81it/s]

5%|▌ | Permuting : 13/255 [00:00<00:05, 42.78it/s]

6%|▌ | Permuting : 15/255 [00:00<00:05, 44.83it/s]

6%|▋ | Permuting : 16/255 [00:00<00:05, 42.89it/s]

7%|▋ | Permuting : 18/255 [00:00<00:05, 44.68it/s]

8%|▊ | Permuting : 20/255 [00:00<00:05, 46.18it/s]

9%|▊ | Permuting : 22/255 [00:00<00:04, 47.46it/s]

9%|▉ | Permuting : 23/255 [00:00<00:05, 45.82it/s]

9%|▉ | Permuting : 24/255 [00:00<00:05, 44.39it/s]

10%|▉ | Permuting : 25/255 [00:00<00:05, 43.13it/s]

11%|█ | Permuting : 27/255 [00:00<00:05, 44.44it/s]

11%|█▏ | Permuting : 29/255 [00:00<00:04, 45.63it/s]

12%|█▏ | Permuting : 31/255 [00:00<00:04, 46.63it/s]

13%|█▎ | Permuting : 32/255 [00:00<00:04, 45.35it/s]

13%|█▎ | Permuting : 33/255 [00:00<00:05, 44.20it/s]

14%|█▎ | Permuting : 35/255 [00:00<00:04, 45.30it/s]

15%|█▍ | Permuting : 37/255 [00:00<00:04, 46.26it/s]

15%|█▍ | Permuting : 38/255 [00:00<00:04, 45.12it/s]

16%|█▌ | Permuting : 40/255 [00:00<00:04, 46.02it/s]

16%|█▋ | Permuting : 42/255 [00:00<00:04, 46.91it/s]

17%|█▋ | Permuting : 43/255 [00:00<00:04, 45.79it/s]

18%|█▊ | Permuting : 45/255 [00:00<00:04, 46.67it/s]

18%|█▊ | Permuting : 47/255 [00:01<00:04, 47.44it/s]

19%|█▉ | Permuting : 48/255 [00:01<00:04, 46.33it/s]

20%|█▉ | Permuting : 50/255 [00:01<00:04, 47.14it/s]

20%|██ | Permuting : 52/255 [00:01<00:04, 47.89it/s]

21%|██ | Permuting : 53/255 [00:01<00:04, 46.76it/s]

22%|██▏ | Permuting : 55/255 [00:01<00:04, 47.52it/s]

22%|██▏ | Permuting : 56/255 [00:01<00:04, 46.41it/s]

22%|██▏ | Permuting : 57/255 [00:01<00:04, 45.43it/s]

23%|██▎ | Permuting : 59/255 [00:01<00:04, 46.24it/s]

24%|██▍ | Permuting : 61/255 [00:01<00:04, 47.00it/s]

25%|██▍ | Permuting : 63/255 [00:01<00:04, 47.68it/s]

25%|██▌ | Permuting : 64/255 [00:01<00:04, 46.66it/s]

26%|██▌ | Permuting : 66/255 [00:01<00:03, 47.33it/s]

26%|██▋ | Permuting : 67/255 [00:01<00:04, 46.34it/s]

27%|██▋ | Permuting : 69/255 [00:01<00:03, 47.07it/s]

28%|██▊ | Permuting : 71/255 [00:01<00:03, 47.75it/s]

28%|██▊ | Permuting : 72/255 [00:01<00:03, 46.73it/s]

29%|██▉ | Permuting : 74/255 [00:01<00:03, 47.43it/s]

30%|██▉ | Permuting : 76/255 [00:01<00:03, 48.03it/s]

30%|███ | Permuting : 77/255 [00:01<00:03, 47.04it/s]

31%|███ | Permuting : 79/255 [00:01<00:03, 47.71it/s]

31%|███▏ | Permuting : 80/255 [00:01<00:03, 46.73it/s]

32%|███▏ | Permuting : 82/255 [00:01<00:03, 47.39it/s]

33%|███▎ | Permuting : 84/255 [00:01<00:03, 48.03it/s]

34%|███▎ | Permuting : 86/255 [00:01<00:03, 48.58it/s]

34%|███▍ | Permuting : 87/255 [00:01<00:03, 47.58it/s]

35%|███▍ | Permuting : 88/255 [00:01<00:03, 46.64it/s]

35%|███▌ | Permuting : 90/255 [00:01<00:03, 47.24it/s]

36%|███▌ | Permuting : 91/255 [00:01<00:03, 46.32it/s]

36%|███▋ | Permuting : 93/255 [00:01<00:03, 47.00it/s]

37%|███▋ | Permuting : 95/255 [00:02<00:03, 47.65it/s]

38%|███▊ | Permuting : 96/255 [00:02<00:03, 46.72it/s]

38%|███▊ | Permuting : 97/255 [00:02<00:03, 45.79it/s]

39%|███▉ | Permuting : 99/255 [00:02<00:03, 46.49it/s]

40%|███▉ | Permuting : 101/255 [00:02<00:03, 47.16it/s]

40%|████ | Permuting : 102/255 [00:02<00:03, 46.26it/s]

41%|████ | Permuting : 104/255 [00:02<00:03, 46.91it/s]

42%|████▏ | Permuting : 106/255 [00:02<00:03, 47.55it/s]

42%|████▏ | Permuting : 107/255 [00:02<00:03, 46.59it/s]

43%|████▎ | Permuting : 109/255 [00:02<00:03, 47.24it/s]

43%|████▎ | Permuting : 110/255 [00:02<00:03, 46.35it/s]

44%|████▍ | Permuting : 112/255 [00:02<00:03, 47.02it/s]

44%|████▍ | Permuting : 113/255 [00:02<00:03, 46.11it/s]

45%|████▌ | Permuting : 115/255 [00:02<00:02, 46.79it/s]

46%|████▌ | Permuting : 117/255 [00:02<00:02, 47.38it/s]

47%|████▋ | Permuting : 119/255 [00:02<00:02, 48.00it/s]

47%|████▋ | Permuting : 120/255 [00:02<00:02, 47.07it/s]

48%|████▊ | Permuting : 122/255 [00:02<00:02, 47.66it/s]

48%|████▊ | Permuting : 123/255 [00:02<00:02, 46.69it/s]

49%|████▉ | Permuting : 125/255 [00:02<00:02, 47.33it/s]

50%|████▉ | Permuting : 127/255 [00:02<00:02, 47.94it/s]

50%|█████ | Permuting : 128/255 [00:02<00:02, 47.02it/s]

51%|█████ | Permuting : 130/255 [00:02<00:02, 47.65it/s]

51%|█████▏ | Permuting : 131/255 [00:02<00:02, 46.69it/s]

52%|█████▏ | Permuting : 133/255 [00:02<00:02, 47.34it/s]

53%|█████▎ | Permuting : 134/255 [00:02<00:02, 46.45it/s]

53%|█████▎ | Permuting : 136/255 [00:02<00:02, 47.10it/s]

54%|█████▎ | Permuting : 137/255 [00:02<00:02, 46.20it/s]

55%|█████▍ | Permuting : 139/255 [00:02<00:02, 46.86it/s]

55%|█████▌ | Permuting : 141/255 [00:03<00:02, 47.45it/s]

56%|█████▌ | Permuting : 142/255 [00:03<00:02, 46.56it/s]

56%|█████▋ | Permuting : 144/255 [00:03<00:02, 47.20it/s]

57%|█████▋ | Permuting : 145/255 [00:03<00:02, 46.25it/s]

58%|█████▊ | Permuting : 147/255 [00:03<00:02, 46.91it/s]

58%|█████▊ | Permuting : 149/255 [00:03<00:02, 47.54it/s]

59%|█████▉ | Permuting : 150/255 [00:03<00:02, 46.65it/s]

59%|█████▉ | Permuting : 151/255 [00:03<00:02, 45.80it/s]

60%|██████ | Permuting : 153/255 [00:03<00:02, 46.43it/s]

60%|██████ | Permuting : 154/255 [00:03<00:02, 45.59it/s]

61%|██████ | Permuting : 156/255 [00:03<00:02, 46.28it/s]

62%|██████▏ | Permuting : 158/255 [00:03<00:02, 46.87it/s]

62%|██████▏ | Permuting : 159/255 [00:03<00:02, 46.02it/s]

63%|██████▎ | Permuting : 161/255 [00:03<00:02, 46.69it/s]

64%|██████▎ | Permuting : 162/255 [00:03<00:02, 45.84it/s]

64%|██████▍ | Permuting : 164/255 [00:03<00:01, 46.49it/s]

65%|██████▍ | Permuting : 165/255 [00:03<00:01, 45.63it/s]

65%|██████▌ | Permuting : 167/255 [00:03<00:01, 46.32it/s]

66%|██████▌ | Permuting : 168/255 [00:03<00:01, 45.49it/s]

67%|██████▋ | Permuting : 170/255 [00:03<00:01, 46.18it/s]

67%|██████▋ | Permuting : 171/255 [00:03<00:01, 45.34it/s]

68%|██████▊ | Permuting : 173/255 [00:03<00:01, 46.04it/s]

69%|██████▊ | Permuting : 175/255 [00:03<00:01, 46.66it/s]

69%|██████▉ | Permuting : 176/255 [00:03<00:01, 45.82it/s]

69%|██████▉ | Permuting : 177/255 [00:03<00:01, 45.01it/s]

70%|███████ | Permuting : 179/255 [00:03<00:01, 45.66it/s]

71%|███████ | Permuting : 180/255 [00:03<00:01, 44.87it/s]

71%|███████▏ | Permuting : 182/255 [00:03<00:01, 45.59it/s]

72%|███████▏ | Permuting : 184/255 [00:03<00:01, 46.28it/s]

73%|███████▎ | Permuting : 185/255 [00:03<00:01, 45.36it/s]

73%|███████▎ | Permuting : 186/255 [00:04<00:01, 44.59it/s]

74%|███████▎ | Permuting : 188/255 [00:04<00:01, 45.32it/s]

74%|███████▍ | Permuting : 189/255 [00:04<00:01, 44.55it/s]

75%|███████▍ | Permuting : 191/255 [00:04<00:01, 45.29it/s]

76%|███████▌ | Permuting : 193/255 [00:04<00:01, 45.99it/s]

76%|███████▌ | Permuting : 194/255 [00:04<00:01, 45.18it/s]

77%|███████▋ | Permuting : 196/255 [00:04<00:01, 45.89it/s]

78%|███████▊ | Permuting : 198/255 [00:04<00:01, 46.52it/s]

78%|███████▊ | Permuting : 199/255 [00:04<00:01, 45.68it/s]

79%|███████▉ | Permuting : 201/255 [00:04<00:01, 46.36it/s]

79%|███████▉ | Permuting : 202/255 [00:04<00:01, 45.53it/s]

80%|███████▉ | Permuting : 203/255 [00:04<00:01, 44.72it/s]

80%|████████ | Permuting : 205/255 [00:04<00:01, 45.45it/s]

81%|████████ | Permuting : 207/255 [00:04<00:01, 46.10it/s]

82%|████████▏ | Permuting : 208/255 [00:04<00:01, 45.29it/s]

82%|████████▏ | Permuting : 210/255 [00:04<00:00, 45.99it/s]

83%|████████▎ | Permuting : 211/255 [00:04<00:00, 45.18it/s]

84%|████████▎ | Permuting : 213/255 [00:04<00:00, 45.89it/s]

84%|████████▍ | Permuting : 214/255 [00:04<00:00, 45.04it/s]

85%|████████▍ | Permuting : 216/255 [00:04<00:00, 45.75it/s]

85%|████████▌ | Permuting : 217/255 [00:04<00:00, 44.96it/s]

86%|████████▌ | Permuting : 219/255 [00:04<00:00, 45.67it/s]

87%|████████▋ | Permuting : 221/255 [00:04<00:00, 46.33it/s]

87%|████████▋ | Permuting : 222/255 [00:04<00:00, 45.51it/s]

88%|████████▊ | Permuting : 224/255 [00:04<00:00, 46.15it/s]

88%|████████▊ | Permuting : 225/255 [00:04<00:00, 45.33it/s]

89%|████████▉ | Permuting : 227/255 [00:04<00:00, 46.03it/s]

90%|████████▉ | Permuting : 229/255 [00:04<00:00, 46.63it/s]

90%|█████████ | Permuting : 230/255 [00:04<00:00, 45.79it/s]

91%|█████████ | Permuting : 231/255 [00:05<00:00, 44.99it/s]

91%|█████████▏| Permuting : 233/255 [00:05<00:00, 45.71it/s]

92%|█████████▏| Permuting : 234/255 [00:05<00:00, 44.91it/s]

93%|█████████▎| Permuting : 236/255 [00:05<00:00, 45.63it/s]

93%|█████████▎| Permuting : 238/255 [00:05<00:00, 46.32it/s]

94%|█████████▎| Permuting : 239/255 [00:05<00:00, 45.49it/s]

94%|█████████▍| Permuting : 240/255 [00:05<00:00, 44.70it/s]

95%|█████████▍| Permuting : 242/255 [00:05<00:00, 45.43it/s]

96%|█████████▌| Permuting : 244/255 [00:05<00:00, 46.13it/s]

96%|█████████▌| Permuting : 245/255 [00:05<00:00, 45.31it/s]

97%|█████████▋| Permuting : 247/255 [00:05<00:00, 46.01it/s]

98%|█████████▊| Permuting : 249/255 [00:05<00:00, 46.68it/s]

98%|█████████▊| Permuting : 250/255 [00:05<00:00, 45.83it/s]

99%|█████████▉| Permuting : 252/255 [00:05<00:00, 46.51it/s]

100%|█████████▉| Permuting : 254/255 [00:05<00:00, 47.15it/s]

100%|██████████| Permuting : 255/255 [00:05<00:00, 47.48it/s]

100%|██████████| Permuting : 255/255 [00:05<00:00, 46.38it/s]

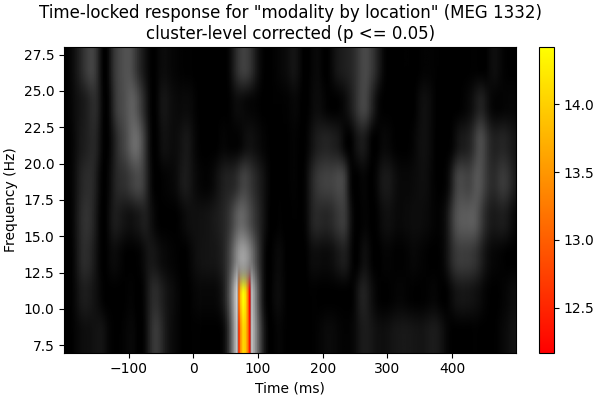

Create new stats image with only significant clusters:

good_clusters = np.where(cluster_p_values < 0.05)[0]

F_obs_plot = np.full_like(F_obs, np.nan)

for ii in good_clusters:

F_obs_plot[clusters[ii]] = F_obs[clusters[ii]]

fig, ax = plt.subplots(figsize=(6, 4), layout="constrained")

for f_image, cmap in zip([F_obs, F_obs_plot], ["gray", "autumn"]):

c = ax.imshow(

f_image,

cmap=cmap,

aspect="auto",

origin="lower",

extent=[times[0], times[-1], freqs[0], freqs[-1]],

)

fig.colorbar(c, ax=ax)

ax.set_xlabel("Time (ms)")

ax.set_ylabel("Frequency (Hz)")

ax.set_title(

f'Time-locked response for "modality by location" ({ch_name})\n'

"cluster-level corrected (p <= 0.05)"

)

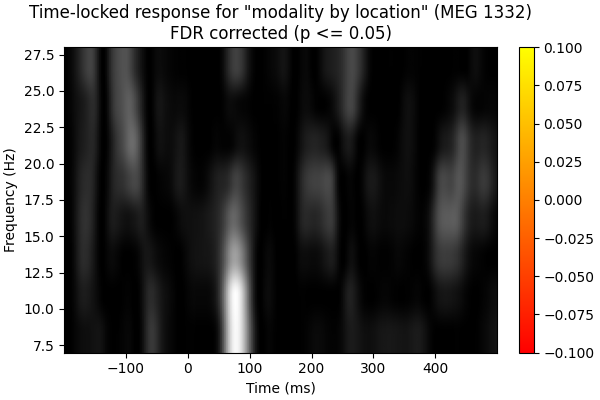

Now using FDR:

mask, _ = fdr_correction(pvals[2])

F_obs_plot2 = F_obs.copy()

F_obs_plot2[~mask.reshape(F_obs_plot.shape)] = np.nan

fig, ax = plt.subplots(figsize=(6, 4), layout="constrained")

for f_image, cmap in zip([F_obs, F_obs_plot2], ["gray", "autumn"]):

c = ax.imshow(

f_image,

cmap=cmap,

aspect="auto",

origin="lower",

extent=[times[0], times[-1], freqs[0], freqs[-1]],

)

fig.colorbar(c, ax=ax)

ax.set_xlabel("Time (ms)")

ax.set_ylabel("Frequency (Hz)")

ax.set_title(

f'Time-locked response for "modality by location" ({ch_name})\n'

"FDR corrected (p <= 0.05)"

)

Both cluster-level and FDR correction help get rid of potential false-positives that we saw in the naive f-images. The cluster permutation correction is biased toward time-frequencies with contiguous areas of high or low power, which is likely appropriate given the highly correlated nature of this data. This is the most likely explanation for why one cluster was preserved by the cluster permutation correction, but no time-frequencies were significant using the FDR correction.

Total running time of the script: (0 minutes 9.945 seconds)