For a while I’ve been interested in exploring sound as a new medium, and Pure Data is a sound program that has particularly caught my attention. It is something like a visual programming language, with an interface similar to Quartz Composer. Objects, which represent chunks of code, are placed on the canvas. These objects have inputs and outputs through which they send and receive data in the form of numbers, strings, and audio signals. Some graphical interface elements can also be added to control applications.

Starting to work with Pure Data is a little intimidating. Objects added to the canvas are blank, and you have to type into them what object they should be. Until you understand what the basic objects are and how they interact, getting anything to work isn’t easy. For a good while I’ve been opening up Pure Data every few months, only to put it away again after beating my head against it.

For my recent Flash game, Pulsus, I decided to create the sound using Pure Data to force myself to learn the program. I managed to cobble together a basic understanding and build a few synthesizers and sound generators.

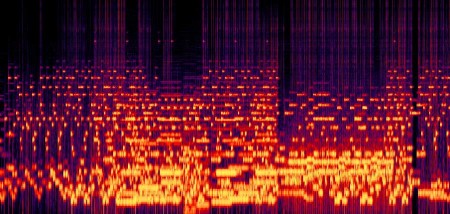

I used this first patch to create most of the sound effects in the game. It has a number of oscillators, each with an adjustable pitch and envelope. The pitches can create harmonics, harmonies, or dissonant chords. The envelope, which sets the volume over the course of the sound, can create pulsing tones, short beats, and other kinds of tone. I also added an amplitude modulator and a global envelope for some more control.
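The idea behind this kind of patch can be sketched outside Pure Data. The Python below is only an illustration, not a translation of the actual patch: the sample rate, the linear attack/decay envelope shape, and the names `envelope` and `render` are all assumptions, and the global envelope is left out for brevity.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed value)

def envelope(t, duration, attack=0.01):
    """Linear attack up to 1.0, then linear decay to 0.0 at `duration` seconds."""
    if t < 0 or t >= duration:
        return 0.0
    if t < attack:
        return t / attack
    return 1.0 - (t - attack) / (duration - attack)

def render(partials, length, am_freq=0.0, am_depth=0.0):
    """Sum several sine oscillators, each with its own pitch and envelope.

    partials: list of (frequency_hz, level, envelope_duration_s) tuples
    length:   total length of the sound in seconds
    """
    samples = []
    for i in range(int(length * SAMPLE_RATE)):
        t = i / SAMPLE_RATE
        mix = sum(level * envelope(t, dur) * math.sin(2 * math.pi * freq * t)
                  for freq, level, dur in partials)
        # Amplitude modulator: scales the mix between (1 - am_depth) and 1.
        am = 1.0 - am_depth * 0.5 * (1.0 + math.sin(2 * math.pi * am_freq * t))
        samples.append(mix * am / len(partials))
    return samples

# A perfect fifth (220 Hz and 330 Hz) with a slow tremolo.
sound = render([(220.0, 1.0, 0.5), (330.0, 0.5, 0.25)], 0.5,
               am_freq=6.0, am_depth=0.5)
```

Changing the frequency ratios moves the result between harmonics, harmonies, and dissonance, while shortening the envelope durations turns sustained tones into short beats.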

In my next experiment I created a simple mono synthesizer. Key presses on my computer keyboard are mapped to MIDI notes. When a key is pressed while another note is still playing, the frequency slides to the new note. Each key press triggers the envelope generator, which reads its data from an array (top right). The synth also has frequency and amplitude modulators, and it reads the waveform from a table so that it can include harmonics.
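For readers who think better in code, here is a rough sketch of three of those pieces: the key-to-MIDI mapping, the frequency slide, and the waveform table. The key layout, the linear glide, and every function name are assumptions made for illustration. Only the MIDI-to-frequency formula (A4 = MIDI note 69 = 440 Hz, twelve equal semitones per octave) is standard.

```python
import math

# Hypothetical key layout: the home row plays a C major scale from middle C.
KEY_TO_MIDI = {"a": 60, "s": 62, "d": 64, "f": 65,
               "g": 67, "h": 69, "j": 71, "k": 72}

def midi_to_hz(note):
    """Standard equal-temperament conversion from MIDI note number to Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)

def glide(start_hz, target_hz, steps):
    """Linear frequency ramp that ends exactly on the target frequency."""
    return [start_hz + (target_hz - start_hz) * (i + 1) / steps
            for i in range(steps)]

def make_wavetable(harmonic_levels, size=512):
    """One cycle of a waveform built from a fundamental plus harmonics."""
    table = []
    for i in range(size):
        phase = 2 * math.pi * i / size
        table.append(sum(level * math.sin((h + 1) * phase)
                         for h, level in enumerate(harmonic_levels)))
    return table

def read_table(table, phase):
    """Read the table at a phase in [0, 1) with linear interpolation."""
    pos = (phase % 1.0) * len(table)
    i = int(pos)
    frac = pos - i
    a = table[i % len(table)]
    b = table[(i + 1) % len(table)]
    return a + (b - a) * frac

# Slide from the note on "a" to the note on "h" over 100 control steps.
ramp = glide(midi_to_hz(KEY_TO_MIDI["a"]), midi_to_hz(KEY_TO_MIDI["h"]), 100)
table = make_wavetable([1.0, 0.5, 0.25])  # fundamental plus two harmonics
```

Storing one cycle in a table means the harmonic content is computed once, and the oscillator only has to step through the table at a rate set by the current frequency.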

Next I built a polyphonic synthesizer, which has a separate oscillator for every note in the scale.
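The one-oscillator-per-note approach is simple enough to sketch in a few lines. This is a minimal illustration under assumed names (`PolySynth`, `note_on`, `note_off`) and an assumed sample rate, with a plain on/off gate standing in for a real envelope. It is not how the patch itself is wired.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed value)

def midi_to_hz(note):
    """Standard equal-temperament conversion from MIDI note number to Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)

class PolySynth:
    """One always-running sine oscillator per note, each behind its own gate."""

    def __init__(self, notes):
        self.gates = {note: 0.0 for note in notes}
        self.phases = {note: 0.0 for note in notes}

    def note_on(self, note):
        if note in self.gates:
            self.gates[note] = 1.0

    def note_off(self, note):
        if note in self.gates:
            self.gates[note] = 0.0

    def next_sample(self):
        out = 0.0
        for note, gate in self.gates.items():
            out += gate * math.sin(2 * math.pi * self.phases[note])
            # Advance every oscillator, whether or not its gate is open.
            step = midi_to_hz(note) / SAMPLE_RATE
            self.phases[note] = (self.phases[note] + step) % 1.0
        return out / len(self.gates)

synth = PolySynth(range(60, 72))  # one octave, twelve oscillators
synth.note_on(60)
synth.note_on(64)
chord = [synth.next_sample() for _ in range(1000)]
```

The cost is that every oscillator runs all the time, which is easy to build but wasteful compared with allocating a small pool of voices on demand.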

Here are the Pure Data files for these patches in case they are useful to anyone, but again, they are not super efficient, organized, or annotated.

These are my first moderately successful explorations with Pure Data. Some things, I realize, are not done as efficiently as possible, but I’m working them out in the next iteration. My next frustration is finding a way to control the instruments I build. I need a MIDI sequencer in which I can construct songs and which can then send the MIDI data to Pure Data. I tried using a GarageBand plugin to output MIDI from GarageBand, but it came out pretty garbled in PD. I could be doing something wrong, though. Any thoughts on how I should go about this?

Although Processing cannot process audio on its own, there are a few libraries that can be used to add such features. I’ve been experimenting with the
