- Cover

- Acknowledgments

- 1 Preface

- 2 (R)e-Introduction to statistics

- 2.1 Histograms, boxplots, and density curves

- 2.2 Pirate-plots

- 2.3 Models, hypotheses, and permutations for the two sample mean situation

- 2.4 Permutation testing for the two sample mean situation

- 2.5 Hypothesis testing (general)

- 2.6 Connecting randomization (nonparametric) and parametric tests

- 2.7 Second example of permutation tests

- 2.8 Reproducibility Crisis: Moving beyond p < 0.05, publication bias, and multiple testing issues

- 2.9 Confidence intervals and bootstrapping

- 2.10 Bootstrap confidence intervals for difference in GPAs

- 2.11 Chapter summary

- 2.12 Summary of important R code

- 2.13 Practice problems

- 3 One-Way ANOVA

- 3.1 Situation

- 3.2 Linear model for One-Way ANOVA (cell-means and reference-coding)

- 3.3 One-Way ANOVA Sums of Squares, Mean Squares, and F-test

- 3.4 ANOVA model diagnostics including QQ-plots

- 3.5 Guinea pig tooth growth One-Way ANOVA example

- 3.6 Multiple (pair-wise) comparisons using Tukey’s HSD and the compact letter display

- 3.7 Pair-wise comparisons for the Overtake data

- 3.8 Chapter summary

- 3.9 Summary of important R code

- 3.10 Practice problems

- 4 Two-Way ANOVA

- 4.1 Situation

- 4.2 Designing a two-way experiment and visualizing results

- 4.3 Two-Way ANOVA models and hypothesis tests

- 4.4 Guinea pig tooth growth analysis with Two-Way ANOVA

- 4.5 Observational study example: The Psychology of Debt

- 4.6 Pushing Two-Way ANOVA to the limit: Un-replicated designs and Estimability

- 4.7 Chapter summary

- 4.8 Summary of important R code

- 4.9 Practice problems

- 5 Chi-square tests

- 5.1 Situation, contingency tables, and tableplots

- 5.2 Homogeneity test hypotheses

- 5.3 Independence test hypotheses

- 5.4 Models for R by C tables

- 5.5 Permutation tests for the \(X^2\) statistic

- 5.6 Chi-square distribution for the \(X^2\) statistic

- 5.7 Examining residuals for the source of differences

- 5.8 General protocol for \(X^2\) tests

- 5.9 Political party and voting results: Complete analysis

- 5.10 Is cheating and lying related in students?

- 5.11 Analyzing a stratified random sample of California schools

- 5.12 Chapter summary

- 5.13 Summary of important R commands

- 5.14 Practice problems

- 6 Correlation and Simple Linear Regression

- 6.1 Relationships between two quantitative variables

- 6.2 Estimating the correlation coefficient

- 6.3 Relationships between variables by groups

- 6.4 Inference for the correlation coefficient

- 6.5 Are tree diameters related to tree heights?

- 6.6 Describing relationships with a regression model

- 6.7 Least Squares Estimation

- 6.8 Measuring the strength of regressions: R2

- 6.9 Outliers: leverage and influence

- 6.10 Residual diagnostics – setting the stage for inference

- 6.11 Old Faithful discharge and waiting times

- 6.12 Chapter summary

- 6.13 Summary of important R code

- 6.14 Practice problems

- 7 Simple linear regression inference

- 7.1 Model

- 7.2 Confidence interval and hypothesis tests for the slope and intercept

- 7.3 Bozeman temperature trend

- 7.4 Randomization-based inferences for the slope coefficient

- 7.5 Transformations part I: Linearizing relationships

- 7.6 Transformations part II: Impacts on SLR interpretations: log(y), log(x), & both log(y) & log(x)

- 7.7 Confidence interval for the mean and prediction intervals for a new observation

- 7.8 Chapter summary

- 7.9 Summary of important R code

- 7.10 Practice problems

- 8 Multiple linear regression

- 8.1 Going from SLR to MLR

- 8.2 Validity conditions in MLR

- 8.3 Interpretation of MLR terms

- 8.4 Comparing multiple regression models

- 8.5 General recommendations for MLR interpretations and VIFs

- 8.6 MLR inference: Parameter inferences using the t-distribution

- 8.7 Overall F-test in multiple linear regression

- 8.8 Case study: First year college GPA and SATs

- 8.9 Different intercepts for different groups: MLR with indicator variables

- 8.10 Additive MLR with more than two groups: Headache example

- 8.11 Different slopes and different intercepts

- 8.12 F-tests for MLR models with quantitative and categorical variables and interactions

- 8.13 AICs for model selection

- 8.14 Case study: Forced expiratory volume model selection using AICs

- 8.15 Chapter summary

- 8.16 Summary of important R code

- 8.17 Practice problems

- 9 Case studies

- 9.1 Overview of material covered

- 9.2 The impact of simulated chronic nitrogen deposition on the biomass and N2-fixation activity of two boreal feather moss–cyanobacteria associations

- 9.3 Ants learn to rely on more informative attributes during decision-making

- 9.4 Multi-variate models are essential for understanding vertebrate diversification in deep time

- 9.5 What do didgeridoos really do about sleepiness?

- 9.6 General summary

- References

2.3 Models, hypotheses, and permutations for the two sample mean situation

There appears to be some evidence that the casual clothing group is getting higher average overtake distances than the commute group of observations, but we want to try to make sure that the difference is real – that there is evidence to reject the assumption that the means are the same “in the population”. First, a null hypothesis22 which defines a null model23 needs to be determined in terms of parameters (the true values in the population). The research question should help you determine the form of the hypotheses for the assumed population. In the two independent sample mean problem, the interest is in testing a null hypothesis of \(H_0: \mu_1 = \mu_2\) versus the alternative hypothesis of \(H_A: \mu_1 \ne \mu_2\), where \(\mu_1\) is the parameter for the true mean of the first group and \(\mu_2\) is the parameter for the true mean of the second group. The alternative hypothesis involves assuming a statistical model for the \(i^{th}\ (i=1,\ldots,n_j)\) response from the \(j^{th}\ (j=1,2)\) group, \(\boldsymbol{y}_{ij}\), that involves modeling it as \(y_{ij} = \mu_j + \varepsilon_{ij}\), where we assume that \(\varepsilon_{ij} \sim N(0,\sigma^2)\). For the moment, focus on the models that either assume the means are the same (null) or different (alternative), which imply:

Null Model: \(y_{ij} = \mu + \varepsilon_{ij}\) There is no difference in true means for the two groups.

Alternative Model: \(y_{ij} = \mu_j + \varepsilon_{ij}\) There is a difference in true means for the two groups.

Suppose we are considering the alternative model for the 4th observation (\(i=4\)) from the second group (\(j=2\)), then the model for this observation is \(y_{42} = \mu_2 +\varepsilon_{42}\), that defines the response as coming from the true mean for the second group plus a random error term for that observation, \(\varepsilon_{42}\). For, say, the 5th observation from the first group (\(j=1\)), the model is \(y_{51} = \mu_1 +\varepsilon_{51}\). If we were working with the null model, the mean is always the same (\(\mu\)) – the group specified does not change the mean we use for that observation, so the model for \(y_{42}\) would be \(\mu +\varepsilon_{42}\).

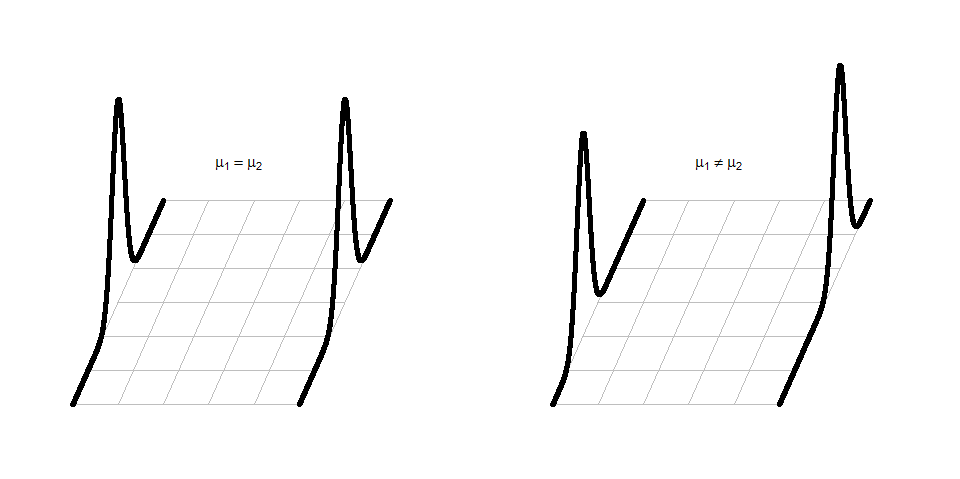

It can be helpful to think about the null and alternative models graphically. By assuming the null hypothesis is true (means are equal) and that the random errors around the mean follow a normal distribution, we assume that the truth is as displayed in the left panel of Figure 2.7 – two normal distributions with the same mean and variability. The alternative model allows the two groups to potentially have different means, such as those displayed in the right panel of Figure 2.7 where the second group has a larger mean. Note that in this scenario, we assume that the observations all came from the same distribution except that they had different means. Depending on the statistical procedure we are using, we basically are going to assume that the observations (\(y_{ij}\)) either were generated as samples from the null or alternative model. You can imagine drawing observations at random from the pictured distributions. For hypothesis testing, the null model is assumed to be true and then the unusualness of the actual result is assessed relative to that assumption. In hypothesis testing, we have to decide if we have enough evidence to reject the assumption that the null model (or hypothesis) is true. If we reject the null hypothesis, then we would conclude that the other model considered (the alternative model) is more reasonable. The researchers obviously would have hoped to encounter some sort of noticeable difference in the distances for the different outfits and have been able to find enough evidence to reject the null model where the groups “look the same”.

Figure 2.7: Illustration of the assumed situations under the null (left) and a single possibility that could occur if the alternative were true (right) and the true means were different. There are an infinite number of ways to make a plot like the right panel that satisfies the alternative hypothesis.

In statistical inference, null hypotheses (and their implied models) are set up as “straw men” with every interest in rejecting them even though we assume they are true to be able to assess the evidence \(\underline{\text{against them}}\). Consider the original study design here, the outfits were randomly assigned to the rides. If the null hypothesis were true, then we would have no difference in the population means of the groups. And this would apply if we had done a different random assignment of the outfits. So let’s try this: assume that the null hypothesis is true and randomly re-assign the treatments (outfits) to the observations that were obtained. In other words, keep the Distance results the same and shuffle the group labels randomly. The technical term for this is doing a permutation (a random shuffling of a grouping variable relative to the observed responses). If the null is true and the means in the two groups are the same, then we should be able to re-shuffle the groups to the observed Distance values and get results similar to those we actually observed. If the null is false and the means are really different in the two groups, then what we observed should differ from what we get under other random permutations. The differences between the two groups should be more noticeable in the observed data set than in (most) of the shuffled data sets. It helps to see an example of a permutation of the labels to understand what this means here.

The data set we are working with is a little on the large size, especially to explore individual observations. So for the moment we are going to work with a random sample of 30 of the \(n=1,636\) observations in ddsub, fifteen from each group, that are generated using the sample function. To do this24, we will use the sample function twice - once to sample from the subsetted commute observations (creating the s1 data set) and once to sample from the casual ones (creating s2). A new function for us, called rbind , is used to bind the rows together — much like pasting a chunk of rows below another chunk in a spreadsheet program. This operation only works if the columns all have the same names and meanings both for rbind and in a spreadsheet. Together this code creates the dsample data set that we will analyze below and compare to results from the full data set. The sample means are now 135.8 and 109.87 cm for casual and commute groups, respectively, and so the difference in the sample means has increased in magnitude to -25.93 cm (commute - casual). This difference would vary based on the different random samples from the larger data set, but for the moment pretend this was the entire data set that the researchers had collected and that we want to try to find evidence against the null hypothesis that the true means are the same in these two groups.

set.seed(9432)

s1 <- sample(subset(ddsub, Condition %in% "commute"), size=15)

s2 <- sample(subset(ddsub, Condition %in% "casual"), size=15)

dsample <- rbind(s1, s2)

mean(Distance~Condition, data=dsample)## casual commute

## 135.8000 109.8667In order to assess evidence against the null hypothesis of no difference, we want to permute the group labels versus the observations. In the mosaic package, the shuffle function allows us to easily perform

a permutation25. One permutation of the

treatment labels is provided in the PermutedCondition variable below. Note

that the Distances are held in the same place while the group labels are shuffled.

Perm1 <- with(dsample, tibble(Distance, Condition, PermutedCondition=shuffle(Condition)))

#To force the tibble to print out all rows in data set - not used often

data.frame(Perm1) ## Distance Condition PermutedCondition

## 1 168 commute commute

## 2 137 commute commute

## 3 80 commute casual

## 4 107 commute commute

## 5 104 commute casual

## 6 60 commute casual

## 7 88 commute commute

## 8 126 commute commute

## 9 115 commute casual

## 10 120 commute casual

## 11 146 commute commute

## 12 113 commute casual

## 13 89 commute commute

## 14 77 commute commute

## 15 118 commute casual

## 16 148 casual casual

## 17 114 casual casual

## 18 124 casual commute

## 19 115 casual casual

## 20 102 casual casual

## 21 77 casual casual

## 22 72 casual commute

## 23 193 casual commute

## 24 111 casual commute

## 25 161 casual casual

## 26 208 casual commute

## 27 179 casual casual

## 28 143 casual commute

## 29 144 casual commute

## 30 146 casual casualIf you count up the number of subjects in each group by counting the number

of times each label (commute, casual) occurs, it is the same in both the

Condition and PermutedCondition columns (15 each). Permutations involve randomly

re-ordering the values of a variable – here the Condition group labels – without

changing the content of the variable.

This result can also be generated using

what is called sampling without replacement: sequentially select \(n\) labels

from the original variable (Condition), removing each observed label and making sure that each of the

original Condition labels is selected once and only once. The new, randomly

selected order of selected labels provides the permuted labels. Stepping

through the process helps to understand how it works: after the initial random

sample of one label, there would \(n - 1\) choices possible; on the \(n^{th}\)

selection, there would only be one label remaining to select. This makes sure

that all original labels are re-used but that the order is random. Sampling

without replacement is like picking names out of a hat, one-at-a-time, and not

putting the names back in after they are selected. It is an exhaustive process

for all the original observations. Sampling with replacement, in contrast,

involves sampling from the specified list with each observation having an equal

chance of selection for each sampled observation – in other words, observations

can be selected more than once. This is like picking \(n\) names out of a hat that

contains \(n\) names, except that every time a name is selected, it goes back into

the hat – we’ll use this technique in Section 2.8

to do what is called bootstrapping.

Both sampling mechanisms can be

used to generate inferences but each has particular situations

where they are most useful. For hypothesis testing,

we will use permutations

(sampling without replacement) as its mechanism most closely matches the null hypotheses we will be testing.

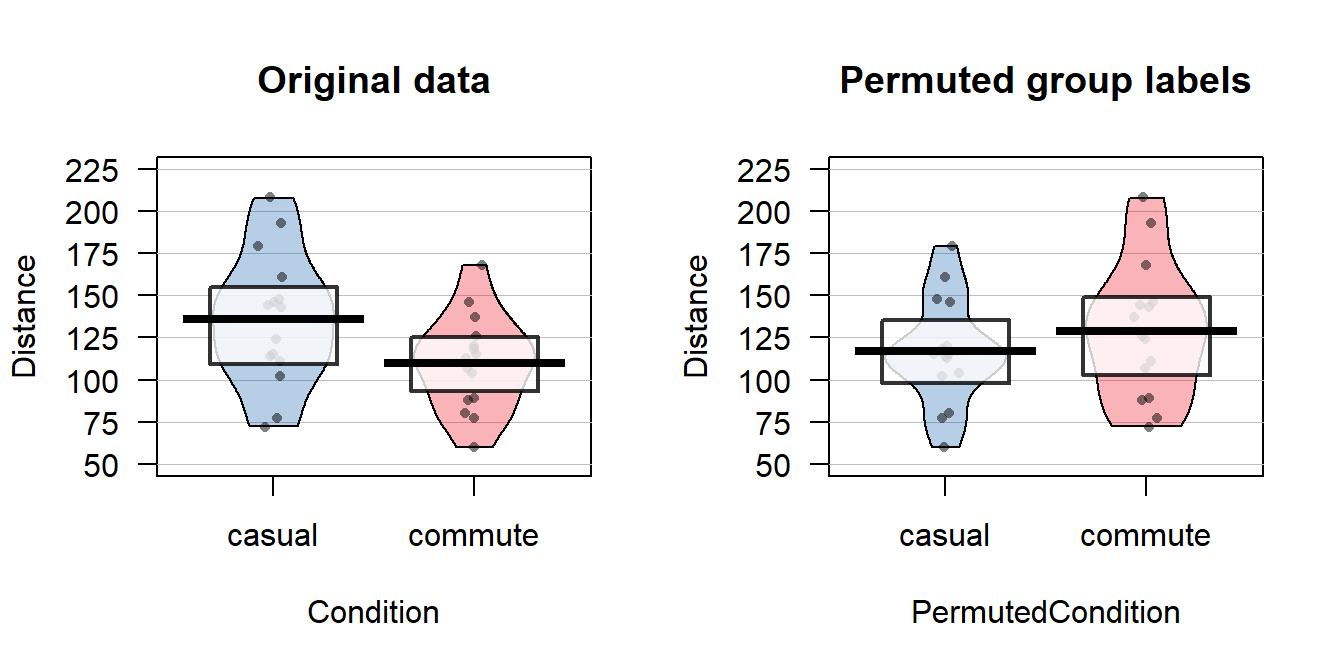

The comparison of the pirate-plots between the real \(n=30\) data set and permuted version is what is really interesting (Figure 2.8). The original difference in the sample means of the two groups was -25.93 cm (commute - casual). The sample means are the statistics that estimate the parameters for the true means of the two groups and the difference in the sample means is a way to create a single number that tracks a quantity directly related to the difference between the null and alternative models. In the permuted data set, the difference in the means is 12.07 cm in the opposite direction (the commute group had a higher mean than casual in the permuted data).

## casual commute

## 116.8000 128.8667## diffmean

## 12.06667

Figure 2.8: Pirate-plots of Distance responses versus actual treatment groups and permuted groups. Note how the responses are the same but that they are shuffled between the two groups differently in the permuted data set. With the smaller sample size, the 95% confidence intervals are more clearly visible than with the original large data set.

The diffmean function is a simple way to get the differences in the means, but we can also start to learn about using the lm function - that will be used for every chapter except for Chapter ??. The lm stands for linear model and, as we will see moving forward, encompasses a wide array of different models and scenarios. Here we will consider among its simplest usage26 to be able to estimate the difference in the mean of two groups. Notationally, it is very similar to other functions we have considered, lm(y ~ x, data=...) where y is the response variable and x is the explanatory variable. Here that is lm(Distance~Condition, data=dsample) with Condition defined as a factor variable. With linear models, we will need to interrogate them to obtain a variety of useful information and our first “interrogation” function is usually the summary function. To use it, it is best to have stored the model into an object, something like lm1, and then we can apply the summary() function to the stored model object to get a suite of output:

##

## Call:

## lm(formula = Distance ~ Condition, data = dsample)

##

## Residuals:

## Min 1Q Median 3Q Max

## -63.800 -21.850 4.133 15.150 72.200

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 135.800 8.863 15.322 3.83e-15

## Conditioncommute -25.933 12.534 -2.069 0.0479

##

## Residual standard error: 34.33 on 28 degrees of freedom

## Multiple R-squared: 0.1326, Adjusted R-squared: 0.1016

## F-statistic: 4.281 on 1 and 28 DF, p-value: 0.04789This output is explored more in Chapter ??, but for the moment, focus on the row labeled as Conditioncommute in the middle of the output. In the first (Estimate) column, there is -25.933. This is a number we saw before – it is the difference in the sample means between commute and casual (commute - casual). When lm denotes a category in the row of the output (here commute), it is trying to indicate that the information to follow relates to the difference between this category and a baseline or reference category (here casual). The first ((Intercept)) row also contains a number we have seen before: - 135.8 is the sample mean for the casual group. So the lm is generating a coefficient for the mean of one of the groups and another as the difference in the two groups27. In developing a test to assess evidence against the null hypothesis, we will focus on the difference in the sample means. So we want to be able to extract that number from this large suite of information. It ends up that we can apply the coef function to lm models and then access that second coefficient using the bracket notation. Specifically:

## Conditioncommute

## -25.93333This is the same result as using the diffmean function, so either could be used here. The estimated difference in the sample means in the permuted data set of 12.07 cm is available with:

## PermutedConditioncommute

## 12.06667Comparing the pirate-plots and the estimated difference in the sample means suggests that the observed difference was larger than what we got when we did a single permutation . Conceptually, permuting observations between group labels is consistent with the null hypothesis – this is a technique to generate results that we might have gotten if the null hypothesis were true since the responses are the same in the two groups if the null is true. We just need to repeat the permutation process many times and track how unusual our observed result is relative to this distribution of potential responses if the null were true. If the observed differences are unusual relative to the results under permutations, then there is evidence against the null hypothesis, and we can conclude, in the direction of the alternative hypothesis, that the true means differ. If the observed differences are similar to (or at least not unusual relative to) what we get under random shuffling under the null model, we would have a tough time concluding that there is any real difference between the groups based on our observed data set. This is formalized using the p-value as a measure of the strength of evidence against the null hypothesis and how we use it.

The hypothesis of no difference that is typically generated in the hopes of being rejected in favor of the alternative hypothesis, which contains the sort of difference that is of interest in the application.↩

The null model is the statistical model that is implied by the chosen null hypothesis. Here, a null hypothesis of no difference translates to having a model with the same mean for both groups.↩

While note required, we often set our random number seed using the

set.seedfunction so that when we re-run code with randomization in it we get the same results. ↩We’ll see the

shufflefunction in a more common usage below; while the code to generatePerm1is provided, it isn’t something to worry about right now.↩This is a bit like getting a new convertible sports car and driving it to the grocery store - there might be better ways to get groceries, but we want to drive our new car as soon as we get it.↩

This will be formalized and explained more in the next chapter when we encounter more than two groups in these same models. For now, it is recommended to start with the sample means from

favstatsfor the two groups and then use that to sort out which direction the differencing was done in thelmoutput.↩