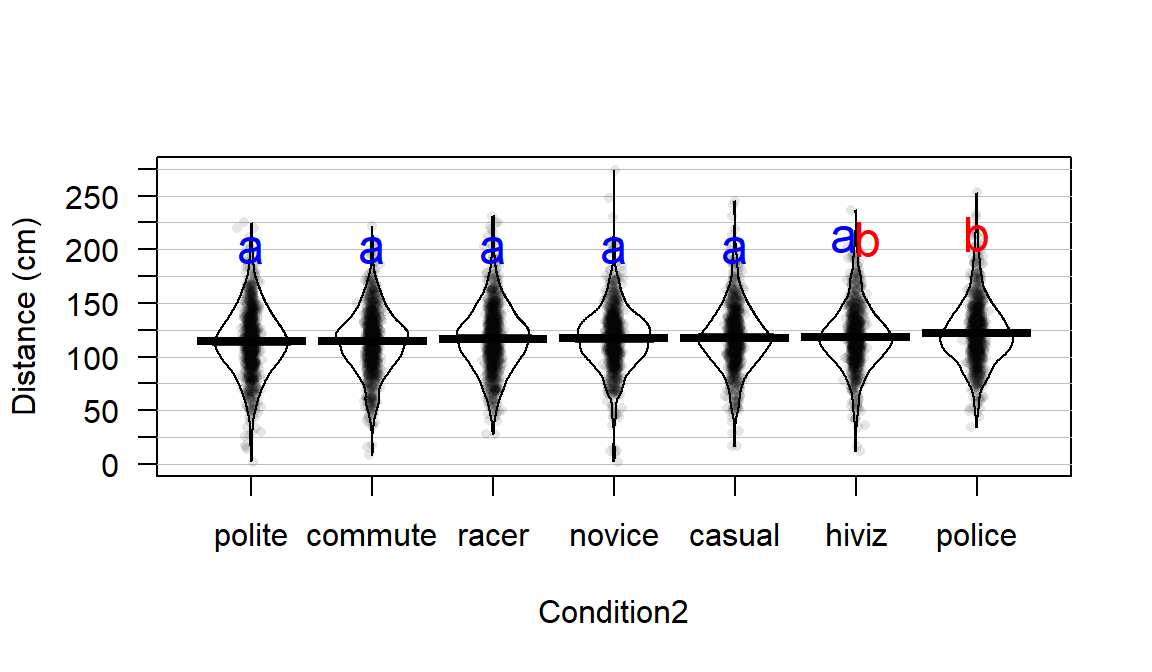

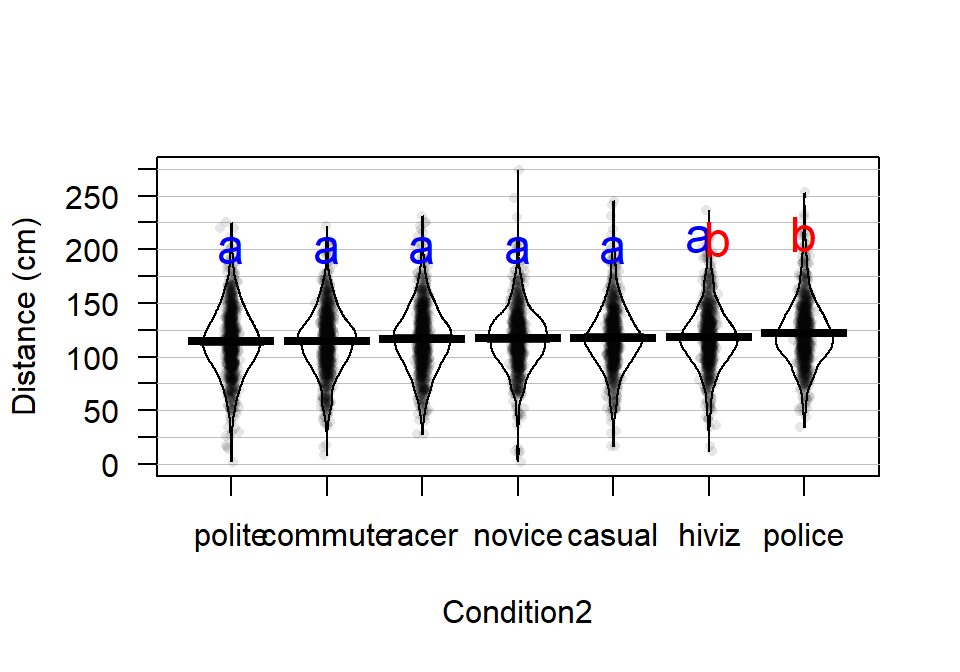

3.7 Pair-wise comparisons for the Overtake data

In our previous work with the overtake data, the overall ANOVA test led to a conclusion that there is some difference in the true means across the seven groups, with a p-value < 0.001 giving very strong evidence against the null hypothesis. The original authors followed up their overall \(F\)-test by comparing every pair of outfits using one of the other multiple testing adjustments available in the p.adjust function. They detected differences between the police outfit and all others except for hiviz, and no other pairs had p-values less than 0.05 using their approach. We will employ Tukey’s HSD approach to address the same exploration and get basically the same results as they obtained.

The code is similar (see the footnote at the end of this section) to the previous example, focusing on the Condition variable for the 21 pairs to compare. To make these results easier to read, and generally to make all the results with seven groups easier to understand, we can sort the levels of the explanatory variable based on the values in the response, using something like the means or medians of the responses for the groups. This does not change the analyses (the \(F\)-statistic and all pair-wise comparisons are the same), it just sorts them to be easier to discuss. Note that it might change the baseline group, so it would impact the reference-coded model even though the fitted values are the same. Specifically, we can use the reorder function based on the mean using something like reorder(FACTORVARIABLE, RESPONSEVARIABLE, FUN=mean). Unfortunately the reorder function doesn’t have a data=... option, so we will let the function know where to find the two variables with a wrapper around it of with(DATASETNAME, reorder(...)); this approach saves us from having to use dd$... to reference each variable. I like to put this “reordered” factor into a new variable so I can always go back to the other version if I want it. The code creates Condition2 here, and checking the levels for it and the original Condition variable shows the change in the order of the levels of the two factor variables:
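The call that creates Condition2 is not shown in this section, so here is a minimal sketch of the reordering pattern just described. It uses a small made-up data frame called toy (an assumption for illustration, not the real dd), with three of the outfit names and invented distances:

```r
# Toy stand-in for the overtake data (made-up values, NOT the real dd)
toy <- data.frame(
  Condition = factor(rep(c("casual", "commute", "police"), each = 3)),
  Distance  = c(117, 118, 119, 113, 114, 115, 121, 122, 123)
)

# Default factor levels are alphabetical
levels(toy$Condition)

# Reorder the levels by the group means of the response; with() tells
# reorder() where to find both variables
toy$Condition2 <- with(toy, reorder(Condition, Distance, FUN = mean))
levels(toy$Condition2)

# Group means under each ordering (same values, different order)
means1 <- with(toy, tapply(Distance, Condition, mean))
means2 <- with(toy, tapply(Distance, Condition2, mean))
means1
means2
```

Applied to dd with Condition and Distance, the same pattern would presumably give the two sets of levels shown next. The tapply calls are just one base R way to check the group means; the original code may have used a different function.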

## [1] "casual" "commute" "hiviz" "novice" "police" "polite" "racer"

## [1] "polite" "commute" "racer" "novice" "casual" "hiviz" "police"

And to verify that this worked, we can compare the means based on Condition and Condition2, and now it is even more clear which groups have the smallest and largest mean passing distances:

## casual commute hiviz novice police polite racer

## 117.6110 114.6079 118.4383 116.9405 122.1215 114.0518 116.7559

## polite commute racer novice casual hiviz police

## 114.0518 114.6079 116.7559 116.9405 117.6110 118.4383 122.1215

In Figure 2.47, the 95% family-wise confidence intervals are displayed. There are only five pairs that have confidence intervals that do not contain 0, and all of them involve comparisons of the police group with others. So there is a detectable difference between police and polite, commute, racer, novice, and casual. For the police versus casual comparison, it is hard to see in the plot whether 0 is in the interval or not, but the confidence interval goes from 0.06 to 8.97 cm (look at the results from confint), which suggests sufficient evidence to detect a difference in these groups (barely!) at the 5% family-wise significance level.
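The decision rule used here (a pair is detectably different when its family-wise interval excludes 0) can be sketched in a few lines. This example swaps in base R’s TukeyHSD on an aov fit rather than the glht approach used in this section, and it runs on a small made-up data set (toy is hypothetical, not the real dd), so the numbers are for illustration only:

```r
# Made-up distances for three groups (NOT the real overtake data)
toy <- data.frame(
  Condition = factor(rep(c("polite", "commute", "police"), each = 3),
                     levels = c("polite", "commute", "police")),
  Distance  = c(112, 114, 116, 113, 115, 117, 120, 122, 124)
)

fit <- aov(Distance ~ Condition, data = toy)

# Matrix with columns diff, lwr, upr, and "p adj"; one row per pair
ci <- TukeyHSD(fit, conf.level = 0.95)$Condition
ci

# Pairs whose 95% family-wise interval excludes 0
detected <- rownames(ci)[ci[, "lwr"] > 0 | ci[, "upr"] < 0]
detected
```

In this toy example only the two pairs involving police are flagged, which mirrors the pattern in the real results where every detected difference involves the police group.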

Figure 2.47: Tukey’s HSD confidence interval results at the 95% family-wise confidence level for the overtake distances linear model using the new Condition2 explanatory variable.

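# Fit the One-Way ANOVA model using the reordered factor

lm2 <- lm(Distance~Condition2, data=dd)

library(multcomp) # provides glht, mcp, and cld

TmOV <- glht(lm2, linfct = mcp(Condition2 = "Tukey")) # all 21 Tukey pair-wise comparisons

confint(TmOV) # 95% family-wise confidence intervals

##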

## Simultaneous Confidence Intervals

##

## Multiple Comparisons of Means: Tukey Contrasts

##

##

## Fit: lm(formula = Distance ~ Condition2, data = dd)

##

## Quantile = 2.9486

## 95% family-wise confidence level

##

##

## Linear Hypotheses:

## Estimate lwr upr

## commute - polite == 0 0.55609 -3.69182 4.80400

## racer - polite == 0 2.70403 -1.55015 6.95820

## novice - polite == 0 2.88868 -1.42494 7.20230

## casual - polite == 0 3.55920 -0.79441 7.91281

## hiviz - polite == 0 4.38642 -0.03208 8.80492

## police - polite == 0 8.06968 3.73207 12.40728

## racer - commute == 0 2.14793 -2.11975 6.41562

## novice - commute == 0 2.33259 -1.99435 6.65952

## casual - commute == 0 3.00311 -1.36370 7.36991

## hiviz - commute == 0 3.83033 -0.60118 8.26183

## police - commute == 0 7.51358 3.16273 11.86443

## novice - racer == 0 0.18465 -4.14844 4.51774

## casual - racer == 0 0.85517 -3.51773 5.22807

## hiviz - racer == 0 1.68239 -2.75512 6.11991

## police - racer == 0 5.36565 1.00868 9.72262

## casual - novice == 0 0.67052 -3.76023 5.10127

## hiviz - novice == 0 1.49774 -2.99679 5.99227

## police - novice == 0 5.18100 0.76597 9.59603

## hiviz - casual == 0 0.82722 -3.70570 5.36015

## police - casual == 0 4.51048 0.05637 8.96458

## police - hiviz == 0 3.68326 -0.83430 8.20081

cld(TmOV)

## polite commute racer novice casual hiviz police

## "a" "a" "a" "a" "a" "ab" "b"

The CLD also reinforces the previous discussion of which levels were detected as different and elucidates the other aspects of the results. Specifically, police is in a group with hiviz only (group “b”, not detectably different). But hiviz is also in a group with all the other levels, so it is also in group “a”. Figure 2.48 adds the CLD to the pirate-plot with the sorted means to help visually present these results with the original data, reiterating the benefits of sorting factor levels to make these plots easier to read.

To wrap up this example (finally), we found that there was clear evidence against the null hypothesis of no difference in the true means, so we concluded that there was some difference. The follow-up explorations show that we can really only suggest that the police outfit has detectably different mean distances, and that is only for five of the six other levels. So if you are a bike commuter (in the UK near London?), you are left to consider the size of this difference. The biggest estimated mean difference was 8.07 cm (3.2 inches) between police and polite. Do you think it is worth this potential extra average distance, especially given the wide variability in the distances, to make and then wear this vest? It is interesting that this result is found, but it is also a fairly minimal size of a difference. It required an extremely large data set to detect these differences because the differences in the means are not very large relative to the variability in the responses. It seems like there might be many other reasons why overtake distances vary that were not included in our suite of predictors (they explored traffic volume in the paper as one other factor but we don’t have that in our data set), or maybe it is just unexplainably variable. But it makes me wonder whether it matters what I wear when I bike and whether it has an impact that matters for average overtake distances, even in the face of these “statistically significant” results.

But maybe there is an impact on the “close calls”, as you can see some differences in the lower tails of the distributions across the groups. The authors looked at the rates of “closer” overtakes by classifying distances of less than 100 cm (39.4 inches) as closer, and also found some interesting results. Chapter 5 discusses a method called a Chi-square test of Homogeneity that would be appropriate here and allow for an analysis of the rates of closer passes, and this study is revisited in the Practice Problems there. It ends up showing that rates of “closer passes” are smallest in the police group.

#old.par <- par(mai=c(0.5,1,1,1))

library(yarrr) # provides pirateplot

pirateplot(Distance~Condition2, data=dd, ylab="Distance (cm)", inf.method="ci", theme=2, inf.f.o=0)

text(x=1:5,y=200,"a",col="blue",cex=1.5) #CLD added

text(x=5.9,y=210,"a",col="blue",cex=1.5)

text(x=6.1,y=210,"b",col="red",cex=1.5)

text(x=7,y=215,"b",col="red",cex=1.5)

Figure 2.48: Pirate-plot of overtake distances by group, sorted by sample means with Tukey’s HSD CLD displayed.
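To make the reading of the letters explicit, the following sketch applies the CLD rule (two groups are detectably different only if they share no letter) to the letters reported above. The letters are copied by hand from the CLD output; the helper shares_letter is a hypothetical name introduced only for this illustration:

```r
# Letters copied from the CLD output for the seven groups
cld_letters <- c(polite = "a", commute = "a", racer = "a", novice = "a",
                 casual = "a", hiviz = "ab", police = "b")

# TRUE if two groups have at least one letter in common
# (hypothetical helper, not part of any package)
shares_letter <- function(g1, g2) {
  l1 <- strsplit(cld_letters[[g1]], "")[[1]]
  l2 <- strsplit(cld_letters[[g2]], "")[[1]]
  length(intersect(l1, l2)) > 0
}

# All choose(7, 2) = 21 pairs, one pair per column
pairs <- combn(names(cld_letters), 2)

# Keep the pairs with no shared letter: the detected differences
shared <- unname(mapply(shares_letter, pairs[1, ], pairs[2, ]))
detected <- pairs[, !shared, drop = FALSE]
detected
```

This recovers the same five pairs found from the confidence intervals: police versus each of polite, commute, racer, novice, and casual, with hiviz not separated from any group.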

69. There is a warning message produced by the default Tukey’s code here related to the algorithms used to generate approximate p-values and then the CLD, but the results seem reasonable and just a few p-values seem to vary in the second or third decimal places.